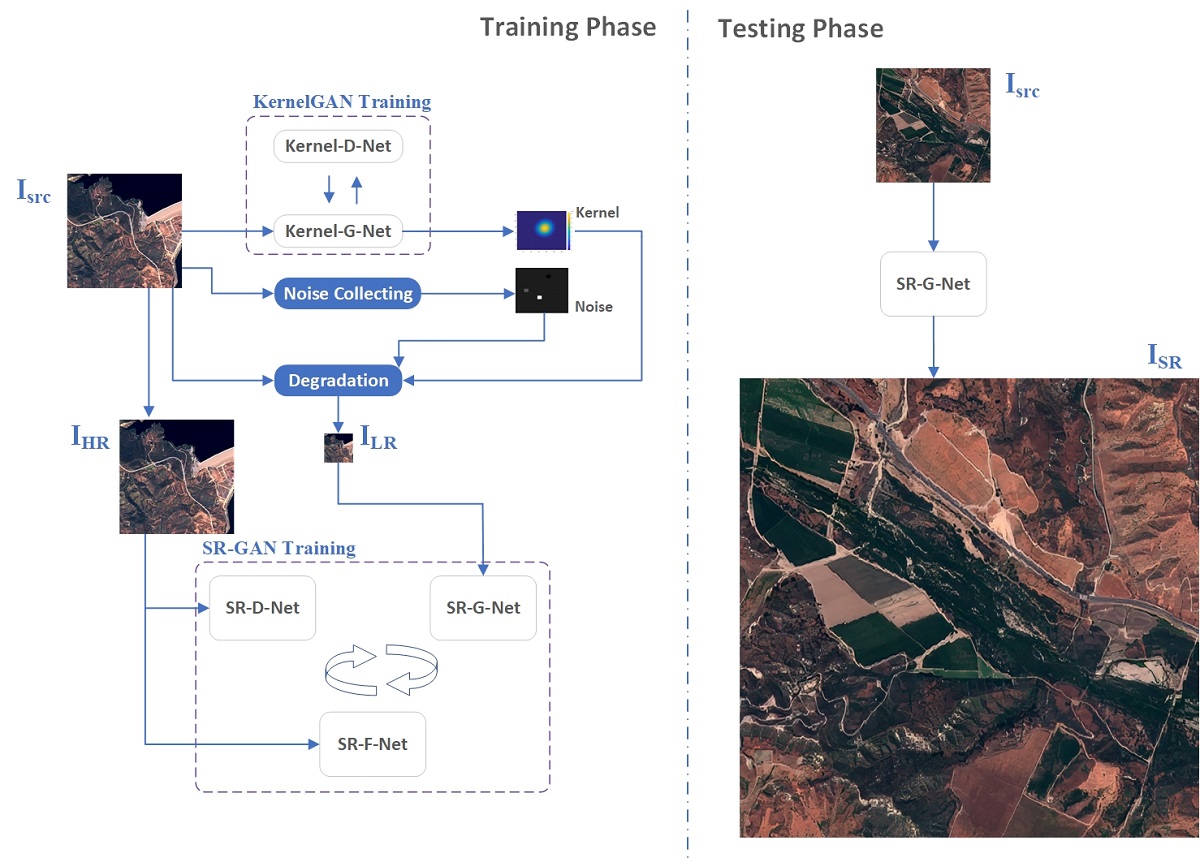

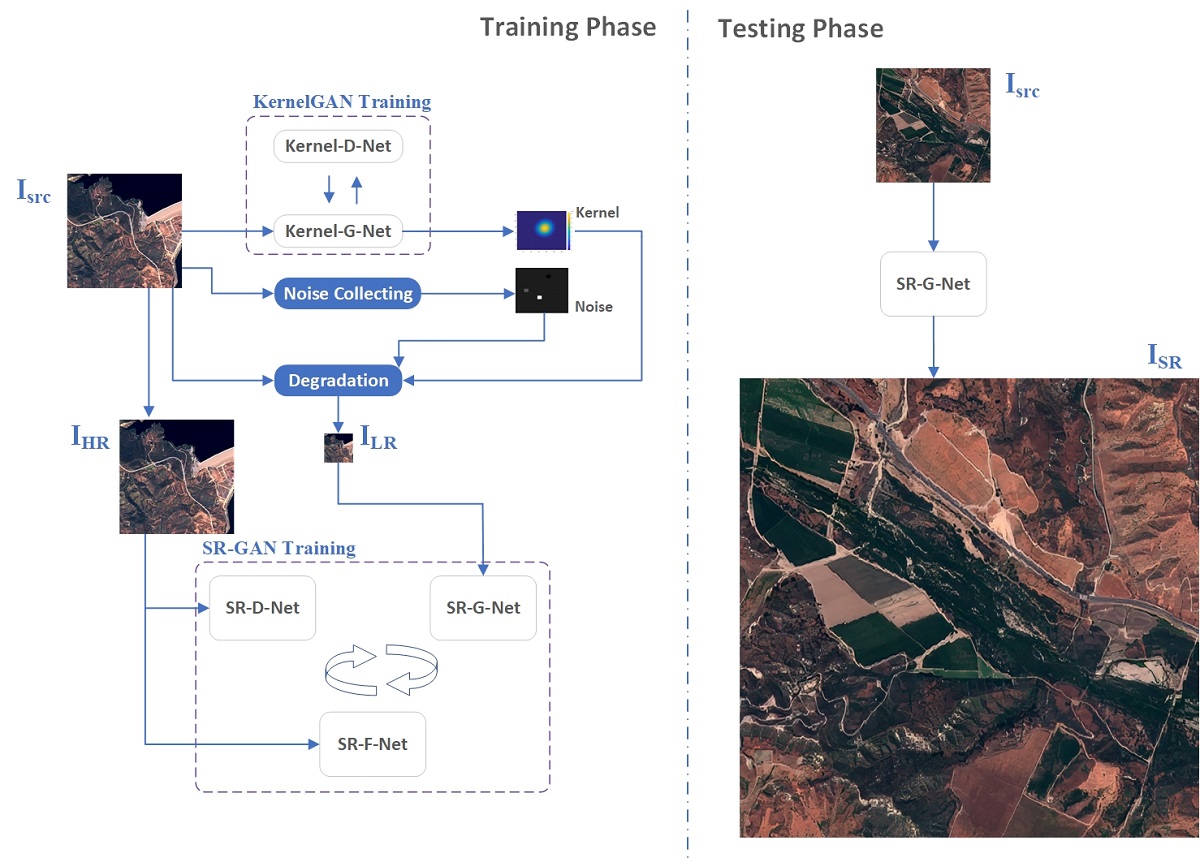

Sentinel-2 provides multispectral optical remote sensing images in the RGBN bands at a spatial resolution of 10 m, but this level of spatial detail is insufficient for many applications. WorldView provides high-resolution (HR) multispectral images at resolutions finer than 2 m, but it is a commercial, paid resource with relatively high usage costs. In this paper, Sentinel-2 images at 10 m resolution are enhanced to 2.5 m through deep-learning-based super-resolution (SR), without any reference images. Our model, named DKN-SR-GAN, uses degradation kernel estimation and noise injection to construct a dataset of near-natural low-high-resolution (LHR) image pairs from low-resolution (LR) images alone, with no HR prior information. DKN-SR-GAN is a generative adversarial network (GAN) that combines an ESRGAN-type generator, a PatchGAN-type discriminator, and a VGG-19-type feature extractor, and it is optimized with a perceptual loss to produce SR images with clearer details and better perceptual quality. Experiments show that, compared with state-of-the-art models such as EDSR8-RGB, RCAN and RS-ESRGAN, the proposed model has clear advantages both in quantitative comparison on no-reference image quality assessment (NR-IQA) metrics such as NIQE, BRISQUE and PIQE and in the visual quality of the generated images.
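
The pair-construction idea can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that a training pair is formed by blurring an available 10 m image with a degradation kernel, downsampling it by a factor of 4 (the ratio between 10 m and 2.5 m), and adding a noise patch. The function names `gaussian_kernel` and `degrade` are hypothetical, and the Gaussian kernel is only a stand-in for a kernel that would be estimated from the data.

```python
import numpy as np


def gaussian_kernel(size=13, sigma=1.5):
    """Isotropic Gaussian blur kernel, normalized to sum to 1.

    Placeholder for an estimated degradation kernel (assumption).
    """
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()


def degrade(img, kernel, noise_patch, scale=4, rng=None):
    """Build a pseudo-LR image from `img` (H, W, C), values in [0, 1].

    Steps: blur with `kernel`, subsample by `scale`, add a random crop
    of `noise_patch`, clip to [0, 1]. `noise_patch` must be at least as
    large as the downsampled image and have the same channel count.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w, _ = img.shape
    kh, kw = kernel.shape
    padded = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2), (0, 0)),
                    mode="reflect")

    # Sliding-window correlation; identical to convolution for a
    # symmetric kernel such as the Gaussian above.
    blurred = np.zeros_like(img, dtype=np.float64)
    for i in range(kh):
        for j in range(kw):
            blurred += kernel[i, j] * padded[i:i + h, j:j + w, :]

    # Direct subsampling by the scale factor.
    down = blurred[::scale, ::scale, :]

    # Noise injection: add a randomly positioned crop of the noise patch.
    lh, lw, _ = down.shape
    nh, nw, _ = noise_patch.shape
    top = int(rng.integers(0, nh - lh + 1))
    left = int(rng.integers(0, nw - lw + 1))
    noisy = down + noise_patch[top:top + lh, left:left + lw, :]
    return np.clip(noisy, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image_10m = rng.random((64, 64, 4))            # synthetic RGBN tile
    noise = rng.normal(0.0, 0.01, (32, 32, 4))     # synthetic noise patch
    pseudo_lr = degrade(image_10m, gaussian_kernel(), noise, scale=4, rng=rng)
    print(pseudo_lr.shape)
```

In this sketch the original 10 m tile plays the role of the "high-resolution" target and the degraded output is its low-resolution counterpart; a generator trained on such pairs would then be applied to the real 10 m images. The random tile and Gaussian noise here are synthetic stand-ins for real Sentinel-2 data and for noise extracted from real images.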