1. Introduction

Many creative projects have small budgets, aggressive timelines, and a cacophony of voices that demand sets of unachievable deliverables. It appears the use of AI and Deep Neural Networks (DNN) (Moran et al. 2020) has the potential to improve productivity within the professional practices of designers. This paper looks at the use of AI for productivity and explores whether the routine tasks of UX design could be improved or have the potential for automation. As a UX professional who studies new technologies, I’ve always sought ways to be more efficient in business operations for myself and within my teams. My central argument is that AI can potentially take over mundane tasks and become intelligent enough to contribute to creativity, critical thinking, and original thought within the field of UX.

2. Exploration of creative processes

Developing an AI model involves three steps: training, validation, and testing (Amazon Web Services, Inc 2021). If we are to improve productivity by automating the UX design process, we must first explore the creative process to ensure that what we automate is efficient, repeatable, scalable, consistent, and valid.
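The three steps can be illustrated with a minimal Python sketch that partitions a dataset into training, validation and test sets. The 70/15/15 proportions, the fixed seed and the function name `split_dataset` are illustrative assumptions, not values taken from the AWS documentation cited above.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    examples: Sequence[T],
    train_frac: float = 0.70,
    val_frac: float = 0.15,
    seed: int = 42,
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle examples and split them into training, validation and test sets.

    The proportions are illustrative; the test set receives whatever remains
    after the training and validation slices.
    """
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty test set")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # deterministic shuffle for reproducibility
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]                 # used to fit model parameters
    val = shuffled[n_train:n_train + n_val]    # used to tune and compare models
    test = shuffled[n_train + n_val:]          # held back for a final, unbiased check
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

The validation set guides choices made during development, while the test set is held back so that the final evaluation is not biased by those choices.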

Design is a pragmatic yet experimental discipline that requires cognitive interaction at critical points to structure design problems, produce novel artefacts, manage refinement, and gain consensus among design teams and stakeholders. This can be observed in the work of the British psychologist Graham Wallas, who studied the working practices of inventors and other creative people over several years and described his findings in his book The Art of Thought (Wallas 2014). Wallas theorised that all creatives follow a four-stage creative process: Preparation¹, Incubation², Illumination³ and Verification⁴.

The UK Design Council has also done much to analyse creative processes, formalising a framework for innovation, the Double Diamond, in 2005 (Design Council 2015). The Double Diamond represents a process of exploring an issue more widely or deeply (divergent thinking) and then taking focused action (convergent thinking). Its four stages, Discover, Define, Develop and Deliver, adapt the divergence-convergence model proposed in Béla H. Bánáthy’s book Designing Social Systems in a Changing World (Banathy 1997).

However, all too often our collective understanding of the design process rests on the assumption that ‘if you do this, then this will happen’. It may, yet it may not. We should be cautious about this if we are to suggest automating elements of the UX design process, because the design process is agile⁵ in nature.

Projects often require frequent changes because the outcome is rarely known in advance. In what has been defined as ‘the solution-first strategy’ (Cooley 2000; Carroll 2002; Vicente 2013), interim design solutions are generated as a means of gathering feedback. These feedback cycles are important for product optimisation and truly user-centred design. The research suggests that, to be useful and productive, an AI would need to adapt to new information at various stages of the process.

Further research identifies an Illumination or ‘eureka’ moment as a defining aspect of the design creation phase. Numerous design scholars (Lawson 2005; Cross 2011; Dorst 2011) have sought to understand the cognitive processes designers use, leading to the belief that most ideas come from the subconscious. In recent decades, a range of authorities has explored scientific approaches to understanding how designers think (Flaherty 2005; Carson 2010). These authors found that a combination of distraction and dopamine was required to improve creativity and ideation. Triggers were found in events that made participants feel good and relaxed (releasing dopamine), allowing the subconscious mind to solve problems that the conscious mind had failed to consider.

If it is true that ideas come from dreams and the subconscious, then for our algorithm to be useful it would need to ideate autonomously. But can we create algorithms that dream, let their thoughts wander and begin to innovate? Research suggests this question can be approached in many ways, but any answer must begin with an assessment of intelligence.

The Turing Test (Turing 1950), for example, is claimed by many to be a way of testing a machine’s ability to exhibit intelligent behaviour. Turing proposed that a human judge evaluate natural language conversations between a person and a machine designed to generate human-like responses. Unfortunately, attempts to build computational systems able to pass the Turing Test have devolved into manipulation games designed to trick participants into believing they are interacting with a human (Bringsjord 2013), falling short of emulating real intelligence. Despite this, the Turing Test remains highly influential and an important concept in assessing artificial intelligence (Pinar Saygin et al. 2000), and many scholars have used it as the basis for other tests, such as the Lovelace Test (Bringsjord et al. 2003: 215–239).

Named after the 19th-century mathematician Ada Lovelace, the Lovelace Test rests on the notion that, to replicate human-like abilities, an AI must be able to create new things, thereby demonstrating intelligence and original thought. The level of creative ability thus becomes a proxy for intelligence. This poses a significant problem for this study: in theory, ideas and creativity come from dreams and the subconscious, and, as the research shows, the subconscious is too difficult to measure with any confidence.

The measurement of consciousness has seen several proposals (Arrabales et al. 2010; Brazdău and Mihai 2011), but these have led to much debate. Some scholars argue that consciousness could be considered a quantitative property (Baum 2004: 362) rather than a qualitative one, while others see consciousness as a multidimensional feature (Fazekas and Overgaard 2016). Whether consciousness can be measured or developed with any accuracy is too difficult a question to resolve within this paper, so I will address it in a future study.

4. Primary research of creative processes

Our secondary research suggests that all creatives follow a four-stage creative process: Preparation, Incubation, Illumination and Verification. However, secondary research can be outdated and may no longer be accurate. The study therefore invited design professionals to participate in a survey to validate the secondary research and to ensure that any model is trained accurately enough to automate components of the process.

4.1. Participants

Twenty digital graphic designers were recruited through personal invitations on LinkedIn to complete a ten-question survey. The sample comprised 4 participants aged 25-34, 12 aged 35-44 and 4 aged 45-54. All participants had a fairly high level of education, having completed either an undergraduate degree (13 participants) or a postgraduate degree (7 participants).
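As a quick check on the sample composition, the reported counts can be converted to proportions. This is a minimal sketch; the dictionary and function names are illustrative, and the counts are those reported above for the twenty participants.

```python
from typing import Dict

# Counts reported for the twenty survey participants.
age_groups: Dict[str, int] = {"25-34": 4, "35-44": 12, "45-54": 4}
education: Dict[str, int] = {"Undergraduate degree": 13, "Postgraduate degree": 7}


def as_percentages(counts: Dict[str, int]) -> Dict[str, float]:
    """Convert raw counts into percentages of the total sample."""
    total = sum(counts.values())
    return {label: 100 * n / total for label, n in counts.items()}


# Both breakdowns should account for every participant.
assert sum(age_groups.values()) == sum(education.values()) == 20

for label, pct in {**as_percentages(age_groups), **as_percentages(education)}.items():
    print(f"{label}: {pct:.0f}%")
```

By this calculation, 60% of participants were aged 35-44 and 65% held an undergraduate degree.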

4.2. Research questions

A range of questions was asked about the origins of briefs, core skills, design process, tools, techniques and project success. The primary research identified divergent thinking, the identification and mitigation of blind spots, and variant idea generation as key requirements for designers. The findings also suggest that Sprint 2.0⁶ and Design Thinking⁷ ideation techniques, in addition to abstractive summarisation⁸, would be the recommended processes to teach an AI.

RQ1: Participants indicated that most professional design work responded to either horizontal integration⁹ or vertical integration¹⁰ strategies. Horizontal integration applies where a client has made an acquisition or created a new product or service, usually requiring a redesign or the integration of a new process. Vertical integration, by contrast, applies when something isn’t working or a client needs to reduce costs by improving operational efficiency. Such work can take many forms but commonly starts with root cause analysis.

RQ2: Our participants identified ten soft skills graphic designers must have. They identified Analytical thinking, Collaboration, Communication, Composition, Creativity, Cultural awareness, Curiosity, Empathy, Problem-solving and Understanding as the most desirable traits and behaviours.

RQ3: Participants’ design processes varied but loosely followed the process identified by Wallas (Wallas 2014).

RQ4: A common sentiment in our sample was that working alone allowed focus but was rare in the modern workplace. Collaboration was also identified as a way to improve the work and innovate. For example, one respondent explained: “Working with other people takes you to new places, and that’s exciting. It’s also reassuring when you then realign, and it’s a much more neurotic process alone. Due to the size of the business, it’s very rare for an individual to design completely in isolation.”

RQ5: A broad range of ideation tools and techniques was used across our sample. Sprint 2.0 and Design Thinking ideation techniques were popular, with 18 participants mentioning elements of these practices, such as Sketching, Experience mapping, Crazy 8s, Mood boarding and Mind mapping.

RQ6: Our participants identified several research techniques. The most popular were Online desk research, Data analysis, and Books and library research. Competitor analysis, Process analysis, Experience mapping, Primary research, and the investigation of Consumer trend and market research reports were also mentioned.

RQ7: Project management, the creation process, research scope, the speed and breadth of idea generation, validation, and sign-off emerged as identifiable trends. For example, one respondent said: “The distilling and picking one path forward. So many fears around ‘is this the right way?’ and ‘there are so many good nuggets of ideas here, how can I leave this behind?’”

RQ8 and RQ9: Metrics, feedback loops and serendipitous discovery were cited as the main indicators designers used to judge when their work was really good and successful.

RQ10: Participants suggested several ways in which AI could optimise graphic design ideation processes: for instance, the QA and testing process in digital product creation; the management of repetitive and time-consuming tasks; and the analysis of processes that have to be repeated, such as design optimisation and the creation of assets within design systems. The exploration of ideas, iterations and abstractions was cited by a majority of participants (15), with divergent thinking a key requirement. One participant said, “It would be amazing if AI could help generate many variations of an overall design approach and do some testing to see which version(s) perform the best. A quick way to help distil possible approaches.”
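The participant's idea of testing design variants "to see which version(s) perform the best" amounts, in practice, to an A/B comparison. A minimal sketch, assuming conversion is the success metric, compares two variants with a two-proportion z-test; the function name and the visitor and conversion counts are hypothetical and are not survey data.

```python
import math
from typing import Tuple


def two_proportion_z_test(
    conv_a: int, n_a: int, conv_b: int, n_b: int
) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates.

    Uses the pooled-proportion standard error under the null hypothesis that
    both variants convert at the same rate (normal approximation).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value, 2 * (1 - Phi(|z|)), written via the complementary error function.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results for two AI-generated design variants.
z, p = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the difference is unlikely to be due to chance alone; when many generated variants are compared at once, a correction for multiple comparisons would be needed.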

5. AI landscape research

Our primary research aligns with our secondary research. It was surprising to observe that the answers to question three mirrored what others (Cooley 2000; Carroll 2002; Vicente 2013) have defined as ‘the solution-first strategy’ for gathering requirements. These observations matter because Reitman (1965) describes design as an ill-structured problem, in that: (1) the problem state is not fully specified, (2) the possible moves in the problem space are not all given, and (3) the goal state is not specified.

The research also identifies areas where AI could support a project’s preliminary stages, and it singles out divergent thinking, the identification and mitigation of blind spots, and variant idea generation as key areas in which designers would welcome automation. The research indicates that designers would use AI algorithms for research tasks such as mining the tone of voice in documents, sorting and classifying image banks, identifying sentiment in historical designs, and extracting insights from product analytics to determine where design optimisations could be made.

An exploration of the AI landscape is therefore required to identify solutions that could potentially be trained to automate the aspects identified by designers.

AI could be one of the most transformative technologies in human history and has seen use across multiple industries (Amazon Web Services 2020; Caulfield 2021). PricewaterhouseCoopers (2016) estimates that AI could contribute up to $15.7 trillion to the global economy in 2030. In addition, contributions presented at the International Conference on Human Interaction and Emerging Technologies: Future Applications (Ahram et al. 2020) have argued that AI is likely to take over routine tasks and free us to do more stimulating work. With the AI landscape growing so rapidly, the challenge was to explore the ecosystem to evaluate current uses, how each technique works and which methods could be repurposed for the benefit of designers.

One of the best-known deep learning (DL) architectures is the convolutional neural network (CNN), which is typically used in image processing applications (Valueva et al. 2020). CNNs have also been used to identify user intention (Ding et al. 2015), which suggests great potential for our model if integrated.
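The core operation of a CNN is sliding a small kernel across an image and taking a weighted sum at each position. A minimal sketch in plain Python, assuming a single-channel image, stride 1 and no padding, is shown below; like most deep learning libraries, it computes a cross-correlation (the kernel is not flipped). The function name `conv2d` and the hand-picked edge kernel are illustrative; a trained CNN learns its kernels from data and stacks many such layers.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding), stride-1 2D cross-correlation followed by ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    output: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            acc = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(max(0.0, float(acc)))  # ReLU non-linearity
        output.append(row)
    return output


# A 4x4 image with a dark left half and a bright right half.
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
edge_kernel = [[-1, 1], [-1, 1]]
print(conv2d(image, edge_kernel))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The resulting feature map is non-zero only where the edge sits, which is the kind of localised pattern detection that deeper CNN layers build on.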

Another is the recurrent neural network (RNN), designed to recognise sequences and patterns in speech, handwriting and text (Mandic and Chambers 2001). Research on RNNs (Zhou et al. 2019) suggests that they could aid conversion in complex user journeys, guide creative design (text and images) and lead to higher product effectiveness, which demonstrates great potential for our model.
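An RNN processes a sequence one step at a time, carrying a hidden state forward so that earlier inputs influence later outputs. A minimal forward pass of a simple (Elman-style) RNN in NumPy is sketched below; the function name, the dimensions and the random weights are illustrative assumptions, and no training is shown.

```python
import numpy as np


def rnn_forward(inputs: np.ndarray, W_x: np.ndarray, W_h: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Run a simple RNN over a sequence.

    inputs: array of shape (timesteps, input_dim).
    Returns the hidden state at every timestep, shape (timesteps, hidden_dim).
    """
    h = np.zeros(W_h.shape[0])  # initial hidden state
    states = []
    for x_t in inputs:
        # The new state mixes the current input with the previous state.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(0)
input_dim, hidden_dim, timesteps = 3, 4, 5
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)
# Hypothetical: five encoded steps of a user journey.
sequence = rng.normal(size=(timesteps, input_dim))
states = rnn_forward(sequence, W_x, W_h, b)
print(states.shape)  # (5, 4)
```

The final state summarises the whole sequence in order, which is why RNNs suit sequential data such as journeys through a product.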

An extension of the autoencoder (AE), the denoising autoencoder (DAE) is an asymmetrical neural network for learning features from noisy datasets (Kramer 1991). DAEs have been used in image classification (Druzhkov and Kustikova 2016) and speaker verification. This model shows great potential for mining and sorting variables from various datasets, and could significantly reduce the time spent on manual processes currently used within my organisation.
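The denoising idea can be sketched as follows: corrupt the input with noise, but train the network to reconstruct the clean input, so that the hidden layer learns features that survive the noise. This minimal NumPy sketch assumes one tanh hidden layer, a linear decoder, Gaussian noise and full-batch gradient descent; the function names, hyperparameters and toy data are illustrative assumptions, not the architecture of the cited works.

```python
import numpy as np


def train_denoising_autoencoder(clean, n_hidden=3, noise_std=0.3, lr=0.02, epochs=3000, seed=0):
    """Train a one-hidden-layer denoising autoencoder with full-batch gradient descent.

    Each epoch corrupts the input with Gaussian noise but scores the reconstruction
    against the clean data. Returns the encoder and decoder weight matrices.
    """
    rng = np.random.default_rng(seed)
    n_samples, n_features = clean.shape
    W_enc = rng.normal(scale=0.1, size=(n_features, n_hidden))
    W_dec = rng.normal(scale=0.1, size=(n_hidden, n_features))
    for _ in range(epochs):
        noisy = clean + rng.normal(scale=noise_std, size=clean.shape)  # corrupt the input
        hidden = np.tanh(noisy @ W_enc)  # encoder
        output = hidden @ W_dec          # linear decoder
        # Gradients of 0.5 * squared reconstruction error, averaged over samples.
        grad_out = (output - clean) / n_samples
        grad_W_dec = hidden.T @ grad_out
        grad_hidden = (grad_out @ W_dec.T) * (1 - hidden ** 2)  # back through tanh
        grad_W_enc = noisy.T @ grad_hidden
        W_dec -= lr * grad_W_dec
        W_enc -= lr * grad_W_enc
    return W_enc, W_dec


def reconstruction_error(x, W_enc, W_dec):
    """Mean squared error when reconstructing x from its encoding."""
    return float(np.mean((np.tanh(x @ W_enc) @ W_dec - x) ** 2))


# Toy data: 8 observed variables driven by 2 hidden factors, standardised per column.
data_rng = np.random.default_rng(1)
clean = data_rng.normal(size=(200, 2)) @ data_rng.normal(size=(2, 8))
clean = (clean - clean.mean(axis=0)) / clean.std(axis=0)

untrained = train_denoising_autoencoder(clean, epochs=0)  # same initial weights, no updates
trained = train_denoising_autoencoder(clean)
print(reconstruction_error(clean, *untrained), reconstruction_error(clean, *trained))
```

Because the eight toy variables are driven by only two underlying factors, a narrow hidden layer can recover most of their structure; this is the sense in which a DAE might help surface the variables that matter in a larger dataset.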

Deep belief networks (DBNs), typically employed for high-dimensional manifold learning (Bengio 2009), are among the most reliable deep learning methods, offering high accuracy and computational efficiency (Bengio et al. 2007). They have been applied to many engineering and scientific problems, including human emotion detection (Movahedi et al. 2018), which would be an exciting feature of our model.
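DBNs are commonly built by stacking restricted Boltzmann machines (RBMs) and training them greedily, one layer at a time. The sketch below shows only that building block: a single binary RBM trained with one-step contrastive divergence (CD-1) in NumPy. The class name, layer sizes, learning rate and toy patterns are illustrative assumptions; a full DBN would stack several such layers.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    """Binary restricted Boltzmann machine trained with one-step contrastive divergence (CD-1)."""

    def __init__(self, n_visible: int, n_hidden: int, seed: int = 0):
        self.rng = np.random.default_rng(seed)
        self.W = self.rng.normal(scale=0.01, size=(n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)  # visible biases
        self.b_h = np.zeros(n_hidden)   # hidden biases

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_h)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b_v)

    def cd1_step(self, v0, lr=0.1):
        # Positive phase: hidden units driven by the data, then sampled.
        h0 = self.hidden_probs(v0)
        h0_sample = (self.rng.random(h0.shape) < h0).astype(float)
        # Negative phase: one Gibbs step down to the visible layer and back up.
        v1 = self.visible_probs(h0_sample)
        h1 = self.hidden_probs(v1)
        n = v0.shape[0]
        # Move towards the data statistics and away from the model's own reconstructions.
        self.W += lr * (v0.T @ h0 - v1.T @ h1) / n
        self.b_v += lr * (v0 - v1).mean(axis=0)
        self.b_h += lr * (h0 - h1).mean(axis=0)

    def reconstruction_error(self, v):
        """Mean squared error of a deterministic up-down pass (a common monitoring heuristic)."""
        return float(np.mean((v - self.visible_probs(self.hidden_probs(v))) ** 2))


# Toy binary data: two repeated patterns over six visible units.
data = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]] * 10, dtype=float)
rbm = RBM(n_visible=6, n_hidden=2)
before = rbm.reconstruction_error(data)
for _ in range(1000):
    rbm.cd1_step(data)
after = rbm.reconstruction_error(data)
print(f"reconstruction error: {before:.3f} -> {after:.3f}")
```

To stack a second layer, one would train another RBM on `rbm.hidden_probs(data)`, repeating the process for each additional layer.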

Long Short-Term Memory (LSTM) is an RNN architecture that benefits from feedback connections. LSTM can be used for sequence and pattern recognition and image processing applications (Graves et al. 2009). Through its gates, an LSTM unit can decide when to let input (data) enter the cell, what to forget, and what to remember from previous steps. This flexibility makes LSTM especially relevant to our research. Other authors have explored the use of LSTM in classification models (Corpuz 2021) to learn text patterns and classify customer complaints, feedback, and commendations.
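The gating described above can be made concrete with a single LSTM cell step in NumPy. This is a minimal sketch of the standard LSTM equations; the function name, the stacking order of the gates in the weight matrices and the sizes are assumptions (libraries order the gates differently), and no training is shown.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One step of a standard LSTM cell.

    W: (4*H, D) input weights, U: (4*H, H) recurrent weights, b: (4*H,) biases,
    stacked in the order [input gate, forget gate, cell candidate, output gate].
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much of the old cell state to keep
    g = np.tanh(z[2 * H:3 * H])  # candidate values to write into the cell
    o = sigmoid(z[3 * H:4 * H])  # output gate: how much of the cell to expose
    c_t = f * c_prev + i * g     # updated long-term memory
    h_t = o * np.tanh(c_t)       # new hidden state (the cell's output)
    return h_t, c_t


rng = np.random.default_rng(0)
D, H = 3, 4  # illustrative input and hidden sizes
W = rng.normal(scale=0.5, size=(4 * H, D))
U = rng.normal(scale=0.5, size=(4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(6, D)):  # hypothetical: six encoded feedback items
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)  # (4,) (4,)
```

When the forget gate is close to 1 and the input gate close to 0, the cell state passes through almost unchanged, which is how an LSTM can retain information, such as earlier feedback, across many steps.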

Generative adversarial networks (GANs) are a class of machine learning (ML) frameworks designed by Ian Goodfellow and his colleagues (Goodfellow et al. 2014). A GAN is a DL-based generative model involving two sub-models: a generator, which produces new examples, and a discriminator, which classifies examples as either real (from the domain) or fake (produced by the generator). GANs have been applied to art generation, astronomy and video games (Schawinski et al. 2017; Wang et al. 2018; Yu et al. 2018), as well as to generating deepfake images (Singh et al. 2020). This generative capability would serve us well in generating design variants based on user feedback.
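The adversarial relationship between the two sub-models can be illustrated with a deliberately tiny, one-dimensional GAN in NumPy: a linear generator `G(z) = a*z + b` and a logistic discriminator `D(x) = sigmoid(w*x + c)`. This sketch, with hand-derived gradients, shows the two opposing losses and a single alternating update; the parameter names, learning rate and "real" distribution are illustrative assumptions. Practical GANs use deep networks, run many such updates, and can be unstable to train.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


rng = np.random.default_rng(0)
# Generator G(z) = a*z + b; discriminator D(x) = sigmoid(w*x + c).
params = {"a": 1.0, "b": 0.0, "w": 0.1, "c": 0.0}


def discriminator_loss(p, real, fake):
    """Binary cross-entropy: D should output 1 for real samples and 0 for fakes."""
    d_real = sigmoid(p["w"] * real + p["c"])
    d_fake = sigmoid(p["w"] * fake + p["c"])
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake)))


def generator_loss(p, z):
    """Non-saturating generator loss: G tries to make D label its samples as real."""
    fake = p["a"] * z + p["b"]
    return float(-np.mean(np.log(sigmoid(p["w"] * fake + p["c"]))))


def discriminator_step(p, real, fake, lr):
    d_real = sigmoid(p["w"] * real + p["c"])
    d_fake = sigmoid(p["w"] * fake + p["c"])
    # Gradients of discriminator_loss with respect to w and c.
    p["w"] -= lr * (np.mean(-(1 - d_real) * real) + np.mean(d_fake * fake))
    p["c"] -= lr * (np.mean(-(1 - d_real)) + np.mean(d_fake))


def generator_step(p, z, lr):
    fake = p["a"] * z + p["b"]
    d_fake = sigmoid(p["w"] * fake + p["c"])
    # The gradient of generator_loss flows back through D into G's parameters.
    grad_fake = -(1 - d_fake) * p["w"]
    p["a"] -= lr * float(np.mean(grad_fake * z))
    p["b"] -= lr * float(np.mean(grad_fake))


real = rng.normal(loc=4.0, scale=1.0, size=64)  # stand-in for real examples
z = rng.normal(size=64)                         # generator input noise
fake = params["a"] * z + params["b"]

d_loss_before = discriminator_loss(params, real, fake)
discriminator_step(params, real, fake, lr=0.01)
d_loss_after = discriminator_loss(params, real, fake)

g_loss_before = generator_loss(params, z)
generator_step(params, z, lr=0.01)
g_loss_after = generator_loss(params, z)
print(f"D loss {d_loss_before:.4f} -> {d_loss_after:.4f}; G loss {g_loss_before:.4f} -> {g_loss_after:.4f}")
```

Each sub-model improves against the other in turn: the discriminator step makes real and fake easier to tell apart, and the generator step then shifts its samples towards what the discriminator currently accepts as real.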

6. Visualisation

This section visualises my hypothesis and suggests an AI design that could be developed given the technical knowledge and resources.

AI Design

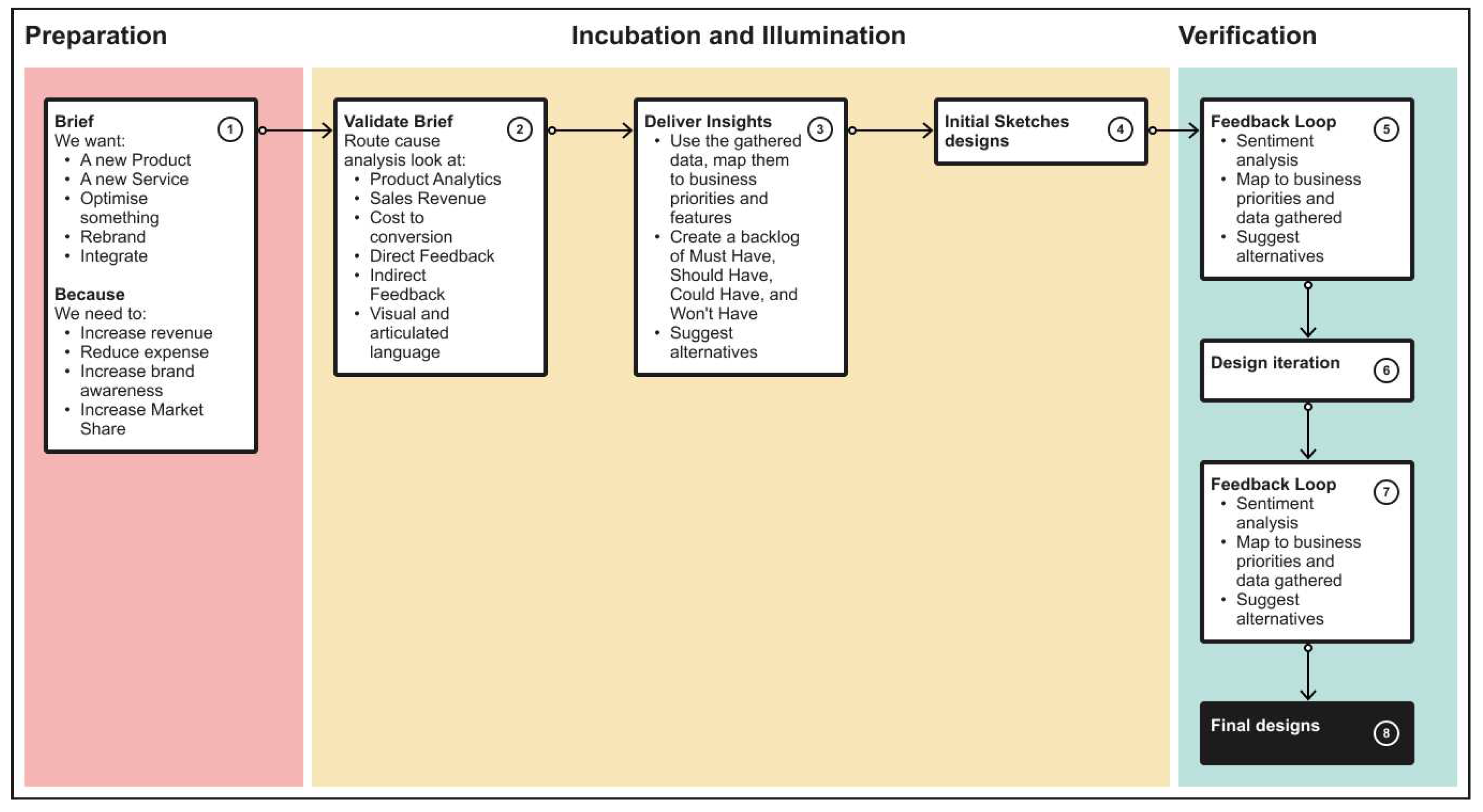

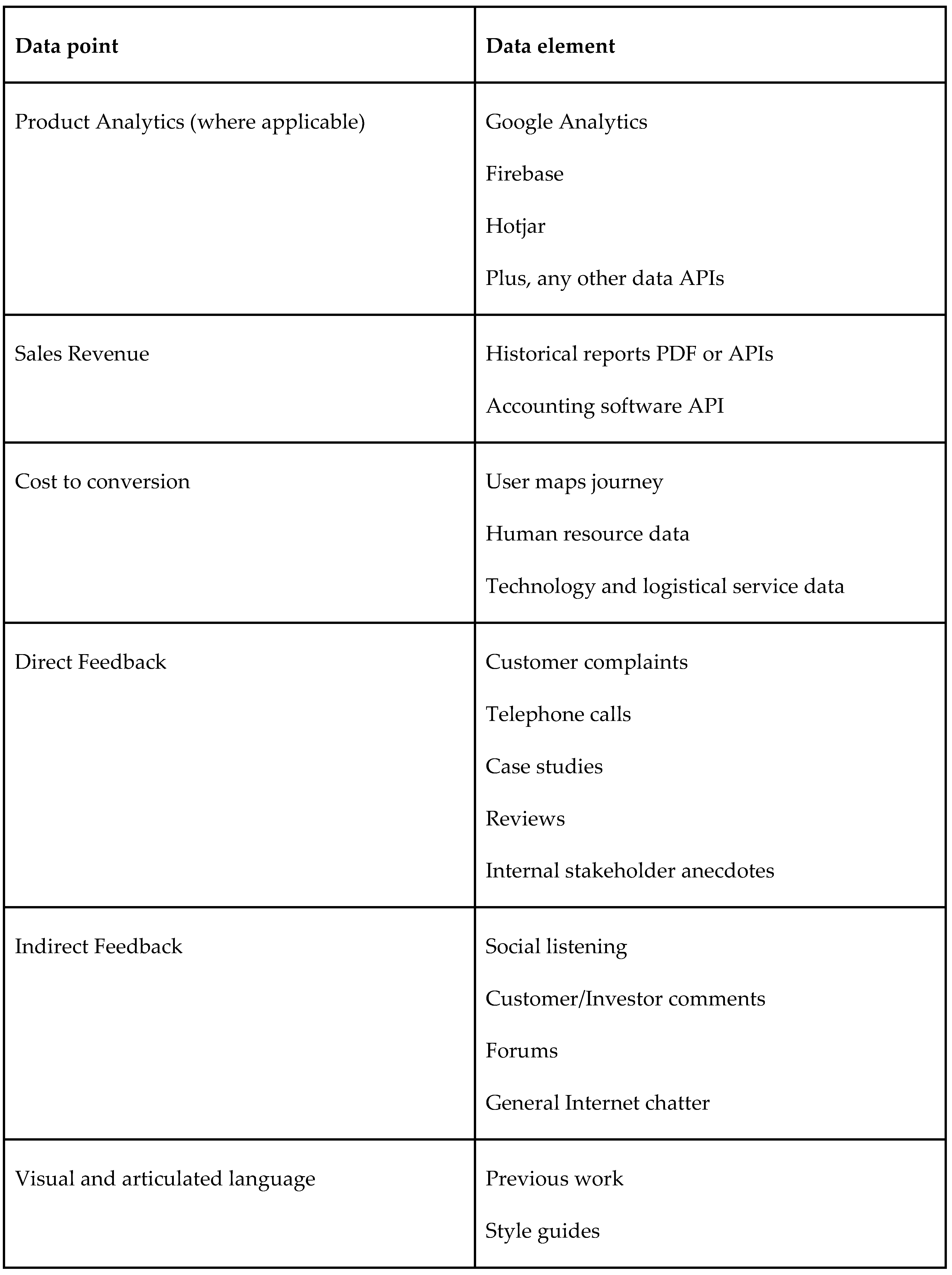

For product development, I will refer to the collective AI, ML and DL processes as AI Orchestration (AIO), because the required AIO will differ depending on the brief and type of work.

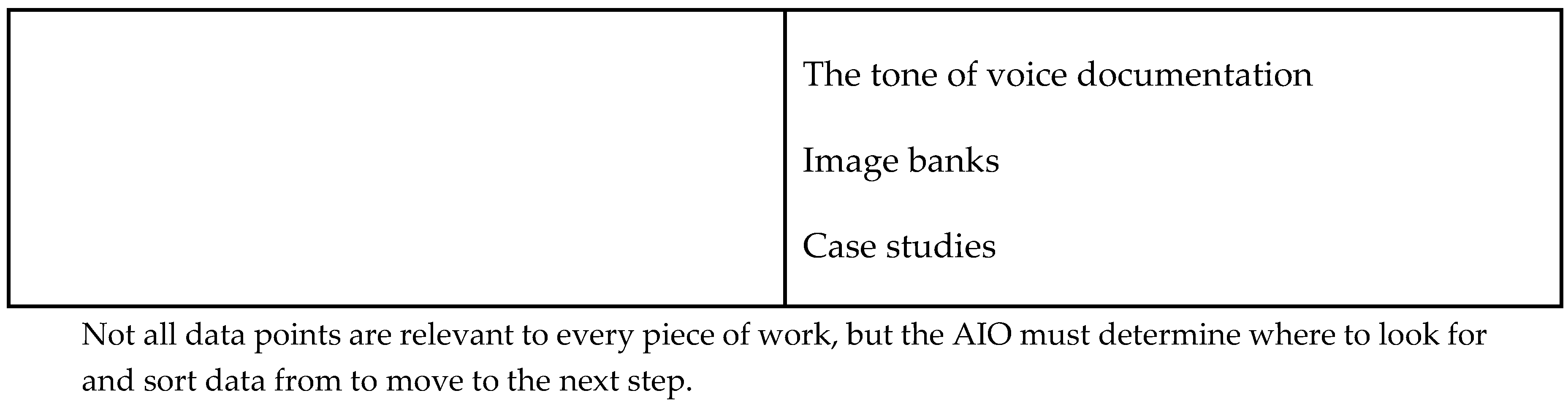

An initial visualisation of the design process used in my studio (Figure 1) allows us to map the necessary AIO steps and create an AI design that could be developed given the technical knowledge and resources (Figure 2).

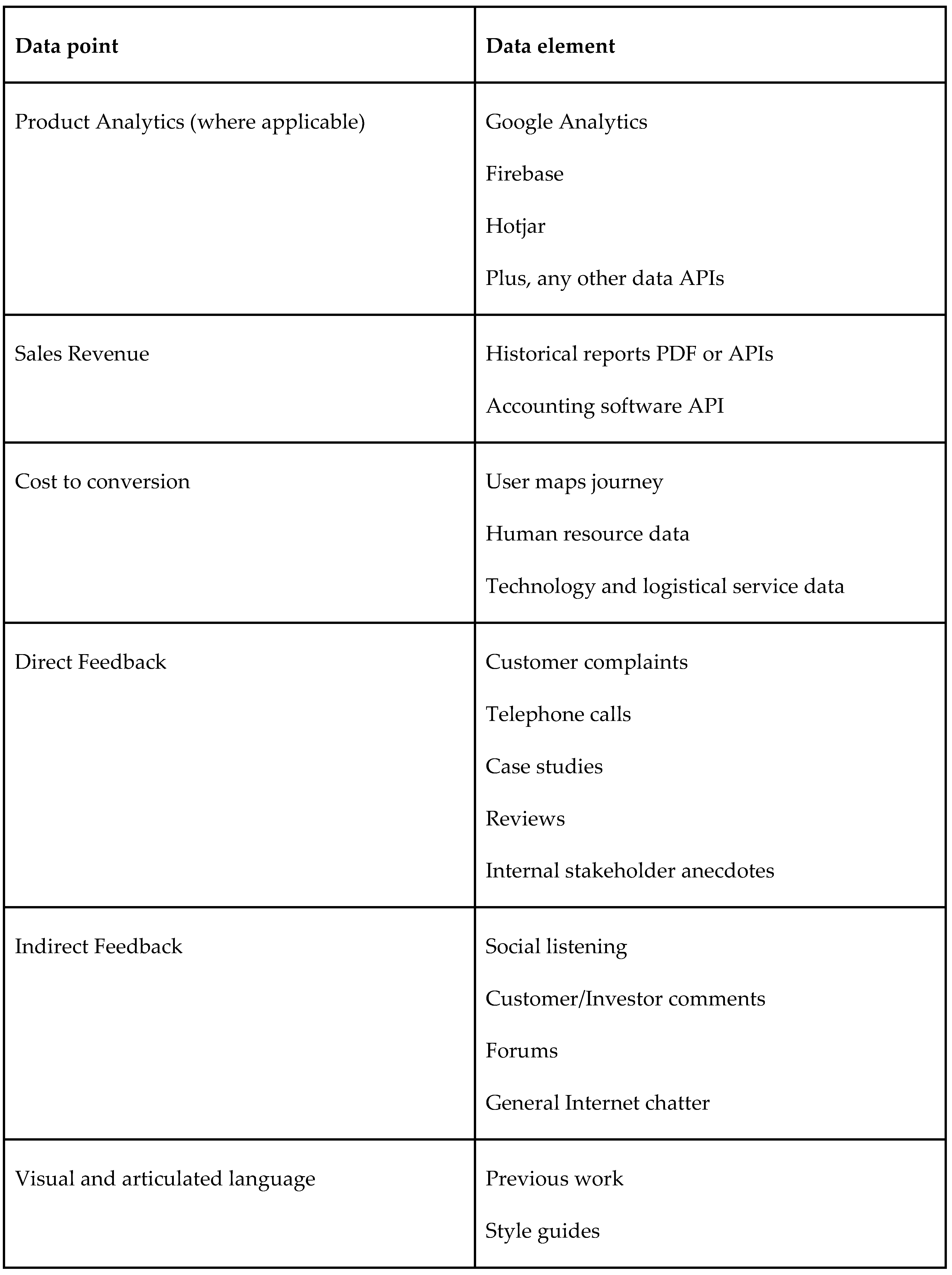

In Step 1 (Figure 2), the AIO would need to identify the type of work, typically one of the following: the creation of a new product or service; the optimisation of an existing product or service; the rebrand of a new or existing product or service; or the integration of a new or existing product or service. Project goals are also typically identified at this stage, usually to increase revenue, reduce expenses, increase brand awareness or increase market share. From personal experience, a Request for Proposal (RFP)¹¹ is usually the best way to gather this type of information. In addition to the RFP, further data points are required. Below I have detailed the specific data sources required:

In Step 2 (Figure 2), the AIO must validate the brief and what the client wants. For example, a telephone company’s project owner might want to reduce complaints by providing self-service channels online; this is one solution. However, in a set of user interviews, customers told us they had not been informed of extra charges within their call plans at sign-up and subsequently complained when the first bill arrived.

Providing a self-service channel online may reduce the cost of ‘handling’ complaints, but it does not ‘reduce’ the total number of complaints. Better training for sales representatives and clear articulation of call plan charges at sign-up should reduce the number of complaints and fulfil the project owner’s goal: a better solution, and one we can reach only by collecting evidence. The AIO would need to detect this pattern and identify the root cause. Initially, the AIO would put all the data through natural language processing¹² (NLP) to summarise the company’s products and services and extract keywords describing what sets them apart from competitors. It would also outline what needs to be done, the goals the client expects to accomplish from the project, deadlines and the list of expected deliverables, any metrics or KPIs on which the project will be evaluated, and possible roadblocks.
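One simple way to approximate the keyword-extraction part of this step is TF-IDF, which scores a term highly when it is frequent in one company's text but rare in competitors' texts. The sketch below uses TF-IDF as a simple stand-in for the NLP processing described above (it ranks keywords and does not summarise); the tokeniser, function names and example texts are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Lower-case words of three or more letters (a deliberately naive tokeniser)."""
    return re.findall(r"[a-z]{3,}", text.lower())


def distinctive_keywords(docs: Dict[str, str], target: str, top_n: int = 3) -> List[str]:
    """Rank the target document's terms by TF-IDF against all documents."""
    tokenized = {name: tokenize(text) for name, text in docs.items()}
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for tokens in tokenized.values() for term in set(tokens))
    counts = Counter(tokenized[target])
    total = sum(counts.values())
    scores = {
        term: (count / total) * math.log(n_docs / doc_freq[term])
        for term, count in counts.items()
    }
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_n]


# Hypothetical product descriptions for a client and two competitors.
docs = {
    "client": "Flexible call plans with transparent pricing and transparent billing for every customer.",
    "competitor_a": "Call plans with fast broadband for every customer.",
    "competitor_b": "Cheap call plans and broadband bundles for every customer.",
}
print(distinctive_keywords(docs, "client"))  # ['transparent', 'billing', 'flexible']
```

Terms shared by every company ("call", "plans", "customer") score zero, leaving the terms that set the client apart.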

In Step 3 (Figure 2), the AI delivers insights based on the data gathered in Step 2. The AIO would identify causal chains, such as ‘A’ led to ‘B’, which led to ‘C’, and form hypotheses from which to devise a project strategy that justifies the work produced.
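Once individual cause-and-effect links have been extracted, tracing chains such as ‘A’ led to ‘B’, which led to ‘C’ is a graph traversal. The sketch below assumes the links are already available as (cause, effect) pairs; extracting them from interview data is the hard part and is not shown. The function name and the example links, taken from the telephone company example above, are illustrative.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def causal_chains(edges: List[Tuple[str, str]], outcome: str) -> List[List[str]]:
    """Return every cause-to-effect chain that ends at `outcome`.

    `edges` are (cause, effect) pairs. Chains start at a root cause, i.e. a
    node for which no cause has been recorded.
    """
    causes_of: Dict[str, List[str]] = defaultdict(list)
    for cause, effect in edges:
        causes_of[effect].append(cause)

    def walk_back(node: str, path: List[str]) -> List[List[str]]:
        if not causes_of[node]:  # no recorded cause: treat as a root cause
            return [[node] + path]
        visited = [node] + path
        chains: List[List[str]] = []
        for cause in causes_of[node]:
            if cause not in visited:  # guard against cycles
                chains.extend(walk_back(cause, visited))
        return chains

    return walk_back(outcome, [])


edges = [
    ("call plan charges not explained at sign-up", "unexpected charges on first bill"),
    ("unexpected charges on first bill", "customer complaint"),
]
for chain in causal_chains(edges, "customer complaint"):
    print(" -> ".join(chain))
```

Walking back from the outcome surfaces the sign-up issue as the root cause, rather than the complaint-handling channel the original brief focused on.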

Once the insights have been validated, the AIO will generate designs using a GAN model. These can be text-based or image-based. However, this part of the process has limitations: an algorithm could introduce inaccurate data into the design process if we rely too heavily on feedback found in historical data sets alone.

A combination of user interviews, usability testing, and quantitative analysis of people’s actions will help avoid the bias that comes with relying too heavily on any single method. An optional supervision step is therefore in place to allow new information to be introduced when the client feels the design is going in the wrong direction.

Feedback would be processed and actioned using an LSTM model so that the GAN model can produce original designs that build on the work of previous iterations. The LSTM would recall feedback as and when needed, for instance when the client changes their mind or discovers new information from testing. The AIO will generate designs and repeat the process until the final designs have been signed off.
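The generate, review and repeat cycle described above can be sketched as a control loop. In this minimal Python sketch, `generate` stands in for the generative model and `review` for the client or test participants; both are hypothetical callables, and the `history` list is a plain stand-in for the feedback memory the text assigns to the LSTM, not an LSTM itself.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Feedback:
    comment: str
    approved: bool


@dataclass
class DesignLoop:
    """Generate, review and remember feedback, repeated until sign-off."""
    generate: Callable[[List[Feedback]], str]
    review: Callable[[str], Feedback]
    max_rounds: int = 10
    history: List[Feedback] = field(default_factory=list)  # all feedback so far

    def run(self) -> Optional[str]:
        for _ in range(self.max_rounds):
            design = self.generate(self.history)  # condition on every earlier comment
            feedback = self.review(design)
            self.history.append(feedback)
            if feedback.approved:
                return design  # signed off
        return None  # no sign-off within the round limit


# Toy stand-ins for the model and the client.
def toy_generate(history: List[Feedback]) -> str:
    return f"design v{len(history) + 1}"


def toy_review(design: str) -> Feedback:
    approved = design == "design v3"
    return Feedback("approved" if approved else "needs clearer pricing", approved)


loop = DesignLoop(toy_generate, toy_review)
print(loop.run(), len(loop.history))  # design v3 3
```

The round limit is a design choice: it keeps the loop from running indefinitely when the client never signs off, returning control to the human team instead.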

7. Conclusions

Results from individual studies across different experiments appear to correlate with the routine tasks performed in UX design. For instance, recent investigations indicate that convolutional neural networks could identify user intention; recurrent neural networks could aid conversion in complex user journeys and guide creative design decisions; denoising autoencoders could mine and sort variables from various datasets; deep belief networks could detect human emotion; Long Short-Term Memory could automate design feedback processes and suggest design alternatives; and, finally, generative adversarial networks could generate design variants based on user feedback.

These discoveries allow us to hypothesise that AI could aid designers in all four stages of the creative process. Using a combination of technologies, AI could mine variables ranging from tone of voice, image banks and historical records to product use during the Preparation stage; deliver insights from the gathered data and map them to business priorities and features during the Incubation and Illumination stages; and automate the feedback process during the Verification stage.

An AI design in response to my research findings will be developed in the form of an artefact. However, the creation of such a model is beyond the skill-set of this author and would require a larger team.

8. Further study

This work is part of my ongoing research into improving the efficiency of business operations for myself and my teams. Inevitably, I see further alignment between my practice and advanced AI systems. The authors of ‘Understanding Consumer Journey Using Attention Based Recurrent Neural Networks’ (Zhou et al. 2019) have made great strides in creating models that aid conversion in complex user journeys. They provide testing criteria that could be used to develop the findings in this paper, given the technical knowledge and resources.

Additionally, the question of how to measure consciousness, and whether it can be developed with confidence, seems too intriguing to ignore. It is fundamentally difficult to measure consciousness itself, as it is believed to be a constellation of features, mental abilities and thought patterns. Although some people may experience their own consciousness as a unified whole, we assume that consciousness is a multidimensional set of attributes present to differing degrees in a given mind; for example, you might value love more highly than I do. Whether consciousness can be measured or developed with any accuracy would therefore be interesting to study.

¹ Preparation - Within Preparation, Wallas observes that creatives (usually) begin by gathering information, materials and sources of inspiration, and by acquiring knowledge about the project or problem at hand.

² Incubation - The Incubation period is where the designer marinates the thoughts, ideas and data gathered in order to draw connections. During this germination period, creatives (usually) take their focus away from the problem and allow their conscious mind to wander.

³ Illumination - Wallas calls the next phase Illumination, in which insights arise from the deeper layers of the mind and break through to conscious awareness, often dramatically: the sudden ‘hold on, what if we do this?’ that arrives while showering or walking, seemingly out of nowhere.

⁴ Verification - Wallas describes Verification as the moment we apply critical thinking and seek aesthetic judgement. Designers do this in various ways, through internal thinking or external feedback, but ultimately to determine whether peers or clients deem the ideas worthy of dedication.

⁵ Agile workflow is an iterative method of delivering a project. In Agile, multiple individual teams work on particular tasks for fixed periods termed ‘sprints’. An Agile workflow can be defined as the set of stages involved in developing an application, from ideation to sprint completion.

⁶ Sprint 2.0 is a variation of the Google Ventures Design Sprint that is more refined and condensed and aims to solve design challenges over four days.

⁷ Design Thinking is an iterative process in which we seek to understand the user, challenge assumptions, and redefine problems to identify alternative strategies and solutions that might not be instantly apparent with our initial level of understanding.

⁸ Abstractive text summarisation is the task of generating a headline or a short summary of a few sentences that captures the salient ideas of an article or passage of text.

⁹ Horizontal integration can refer to an acquisition or the creation of a new product or service. This usually means a redesign or the integration of a new process.

¹⁰ Vertical integration can refer to something not working or a need to reduce costs by improving operational efficiencies. Such work can take many forms but commonly starts with root cause analysis.

¹¹ RFPs typically contain: (1) company background; (2) details of the teams involved; (3) product information; (4) project goals and scope; (5) project timelines; (6) expected deliverables; (7) evaluation criteria, such as metrics to evaluate project success; (8) possible roadblocks, such as a specific material, software or medium; and (9) budget.

¹² Natural language processing (NLP) refers to the AI process that gives computers the ability to understand text and spoken words in much the same way humans can.

References

- AHRAM, Tareq, Redha TAIAR, Karine LANGLOIS and Arnaud CHOPLIN. 2020. Human Interaction, Emerging Technologies and Future Applications III: Proceedings of the 3rd International Conference on Human Interaction and Emerging Technologies: Future Applications (IHIET 2020), 27–29 August 2020, Paris, France. Cham: Springer Nature. Available at: https://play.google.com/store/books/details?id=Nbb1DwAAQBAJ.

- AMAZON WEB SERVICES. 2020. ‘BlueDot Case Study’. Amazon Web Services, Inc. [online]. Available at: https://aws.amazon.com/solutions/case-studies/bluedot.

- AMAZON WEB SERVICES, INC. 2021. Amazon Machine Learning. Amazon Web Services, Inc. Available at: https://docs.aws.amazon.com/machine-learning/latest/dg/machinelearning-dg.pdf#training-ml-models.

- ARRABALES, Raul, A. LEDEZMA and A. SANCHIS. 2010. ‘ConsScale: A Pragmatic Scale for Measuring the Level of Consciousness in Artificial Agents’. Journal of Consciousness Studies 17, 131–164. Available at: https://www.ingentaconnect.com/content/imp/jcs/2010/00000017/f0020003/art00008.

- BANATHY, Bela H. 1997. ‘Designing Social Systems in a Changing World: A Journey to Create Our Future’. The Systemist 19, 187–216.

- BAUM, Eric B. 2004. What Is Thought? MIT Press. Available at: https://play.google.com/store/books/details?id=TPh8uUFiM0QC.

- BENGIO, Yoshua. 2009. ‘Learning Deep Architectures for AI’. Foundations and Trends® in Machine Learning 2(1), 1–127.

- BENGIO, Yoshua, Pascal LAMBLIN, Dan POPOVICI and Hugo LAROCHELLE. 2007. ‘Greedy Layer-Wise Training of Deep Networks’. In B. SCHÖLKOPF, J. PLATT and T. HOFFMAN (eds.). Advances in Neural Information Processing Systems. Available at: https://proceedings.neurips.cc/paper/2006/file/5da713a690c067105aeb2fae32403405-Paper.pdf.

- BRAZDĂU, Ovidiu and Cristian MIHAI. 2011. ‘The Consciousness Quotient: A New Predictor of the Students’ Academic Performance’. Procedia - Social and Behavioral Sciences 11, 245–250. Available at: https://linkinghub.elsevier.com/retrieve/pii/S1877042811000723.

- BRINGSJORD, Selmer. 2013. What Robots Can and Can’t Be. Springer Science & Business Media. Available at: https://play.google.com/store/books/details?id=yT3wCAAAQBAJ.

- BRINGSJORD, Selmer, Paul BELLO and David FERRUCCI. 2003. ‘Creativity, the Turing Test, and the (Better) Lovelace Test’. In James H. MOOR (ed.). The Turing Test: The Elusive Standard of Artificial Intelligence. Dordrecht: Springer Netherlands, 215–39.

- CARNEGIE LEARNING. 2020. ‘MATHiaU’. Carnegie Learning [online]. Available at: https://www.carnegielearning.com/solutions/math/mathiau [accessed 6 Oct 2021].

- CARROLL, J. M. 2002. ‘Scenarios and Design Cognition’. In Proceedings IEEE Joint International Conference on Requirements Engineering, 3–5.

- CARSON, Shelley. 2010. Your Creative Brain: Seven Steps to Maximize Imagination, Productivity, and Innovation in Your Life. John Wiley & Sons. Available at: https://play.google.com/store/books/details?id=WQKuAEn9MeEC.

- CAULFIELD, Brian. 2021. ‘NVIDIA, BMW Blend Reality, Virtual Worlds to Demonstrate Factory of the Future’. The Official NVIDIA Blog [online]. Available at: https://blogs.nvidia.com/blog/2021/04/13/nvidia-bmw-factory-future/ [accessed 9 Jan 2022].

- COOLEY, M. 2000. ‘Human-Centered Design’. Information Design [online], 59–81. Available at: https://books.google.com/books?hl=en&lr=&id=vnax4nN4Ws4C&oi=fnd&pg=PA59&dq=Human+centered+design+Cooley&ots=PbG936pwBg&sig=bzOU_ICXMNT96HEjtvL9nffbdAg.

- CORPUZ, Ralph Sherwin A. 2021. ‘Categorizing Natural Language-Based Customer Satisfaction: An Implementation Method Using Support Vector Machine and Long Short-Term Memory Neural Network’. International Journal of Integrated Engineering 13, 77–91. Available at: https://publisher.uthm.edu.my/ojs/index.php/ijie/article/view/7334 [accessed 9 Jan 2022].

- CROSS, Nigel. 2011. Design Thinking: Understanding How Designers Think and Work. Berg. Available at: https://play.google.com/store/books/details?id=F4SUVT1XCCwC.

- DESIGN COUNCIL. 2015. ‘What Is the Framework for Innovation? Design Council’s Evolved Double Diamond’. Design Council [online]. Available at: https://www.designcouncil.org.uk/news-opinion/what-framework-innovation-design-councils-evolved-double-diamond [accessed 6 Oct 2021].

- DING, Xiao, Ting LIU, Junwen DUAN and Jian-Yun NIE. 2015. ‘Mining User Consumption Intention from Social Media Using Domain Adaptive Convolutional Neural Network’. Proceedings of the AAAI Conference on Artificial Intelligence 29. Available at: https://ojs.aaai.org/index.php/AAAI/article/view/9529 [accessed 9 Jan 2022].

- DORST, Kees. 2011. ‘The Core of “Design Thinking” and Its Application’. Design Studies 32, 521–532. Available at: https://www.researchgate.net/publication/232382843_The_core_of_'design_thinking'_and_its_application [accessed 31 Oct 2021].

- DRUZHKOV, P. N. and V. D. KUSTIKOVA. 2016. ‘A Survey of Deep Learning Methods and Software Tools for Image Classification and Object Detection’. Pattern Recognition and Image Analysis 26, 9–15. Available at: https://doi.org/10.1134/S1054661816010065.

- FAZEKAS, Peter and Morten OVERGAARD. 2016. ‘Multidimensional Models of Degrees and Levels of Consciousness’. Trends in Cognitive Sciences.

- FLAHERTY, Alice W. 2005. ‘Frontotemporal and Dopaminergic Control of Idea Generation and Creative Drive’. The Journal of Comparative Neurology 493, 147–53.

- GOODFELLOW, Ian J. et al. 2014. ‘Generative Adversarial Networks’. arXiv [stat.ML]. Available at: http://arxiv.org/abs/1406.2661.

- GRAVES, Alex et al. 2009. ‘A Novel Connectionist System for Unconstrained Handwriting Recognition’. IEEE Transactions on Pattern Analysis and Machine Intelligence 31, 855–68.

- KRAMER, Mark A. 1991. ‘Nonlinear Principal Component Analysis Using Autoassociative Neural Networks’. AIChE Journal 37, 233–243. Available at: https://onlinelibrary.wiley.com/doi/10.1002/aic.690370209.

- LAWSON, Bryan. 2005. How Designers Think: Demystifying the Design Process. 4th Edition. Taylor & Francis. Available at: https://play.google.com/store/books/details?id=uYWTzgEACAAJ [accessed 31 Oct 2021].

- MANDIC, Danilo P. and Jonathon A. CHAMBERS. 2001. Recurrent Neural Networks for Prediction: Learning Algorithms, Architectures and Stability. Chichester: Wiley. Available at: https://play.google.com/store/books/details?id=QV1QAAAAMAAJ [accessed 31 Oct 2021].

- MORAN, Sean et al. 2020. ‘DeepLPF: Deep Local Parametric Filters for Image Enhancement’. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 12823–32.

- MOVAHEDI, Faezeh, James L. COYLE and Ervin SEJDIC. 2018. ‘Deep Belief Networks for Electroencephalography: A Review of Recent Contributions and Future Outlooks’. IEEE Journal of Biomedical and Health Informatics 22, 642–652.

- PINAR SAYGIN, Ayse, Ilyas CICEKLI and Varol AKMAN. 2000. ‘Turing Test: 50 Years Later’. Minds and Machines 10, 463–518.

- PRICEWATERHOUSECOOPERS. 2016. ‘PwC’s Global Artificial Intelligence Study: Sizing the Prize’. PwC [online]. Available at: https://www.pwc.com/gx/en/issues/data-and-analytics/publications/artificial-intelligence-study.html [accessed 6 Oct 2021].

- REITMAN, W. R. 1965. ‘Cognition and Thought: An Information Processing Approach’ [online]. Available at: https://psycnet.apa.org/fulltext/1965-35027-000.pdf.

- SCHAWINSKI, Kevin et al. 2017. ‘Generative Adversarial Networks Recover Features in Astrophysical Images of Galaxies beyond the Deconvolution Limit’. Monthly Notices of the Royal Astronomical Society: Letters 467, L110–4. Available at: https://academic.oup.com/mnrasl/article.

- SINGH, Simranjeet, Rajneesh SHARMA and Alan F. SMEATON. 2020. ‘Using GANs to Synthesise Minimum Training Data for Deepfake Generation’. arXiv [cs.CV]. Available at: http://arxiv.org/abs/2011.05421.

- TURING, A. M. 1950. ‘I.—COMPUTING MACHINERY AND INTELLIGENCE’. Mind; a quarterly review of psychology and philosophy LIX(236), [online], 433–60. Available at: https://academic.oup.com/mind/article-pdf/LIX/236/433/30123314/lix-236-433.pdf [accessed 4 Jan 2022].

- VALUEVA, M. V. et al. 2020. ‘Application of the Residue Number System to Reduce Hardware Costs of the Convolutional Neural Network Implementation’. Mathematics and Computers in Simulation 177, 232–43. Available at: https://www.sciencedirect.com/science/article/pii/S0378475420301580.

- VICENTE, Kim J. 2013. The Human Factor: Revolutionizing the Way People Live with Technology. Routledge. Available at: https://www.taylorfrancis.com/books/mono/10.4324/9780203944479/human-factor-kim-vicente.

- WALLAS, Graham. 2014. The Art of Thought. Solis Press. Available at: https://play.google.com/store/books/details?id=JR6boAEACAAJ.

- WANG, Xintao et al. 2018. ‘ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks’. arXiv [cs.CV]. Available at: http://arxiv.org/abs/1809.00219.

- YU, Jiahui et al. 2018. ‘Generative Image Inpainting with Contextual Attention’. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA. Available at: https://openaccess.thecvf.com/content_cvpr_2018/papers/Yu_Generative_Image_Inpainting_CVPR_2018_paper.pdf [accessed 5 Jan 2022].

- ZHOU, Yichao et al. 2019. ‘Understanding Consumer Journey Using Attention Based Recurrent Neural Networks’. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 3102–11. Available at: https://doi.org/10.1145/3292500.3330753 [accessed 9 Jan 2022].


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).