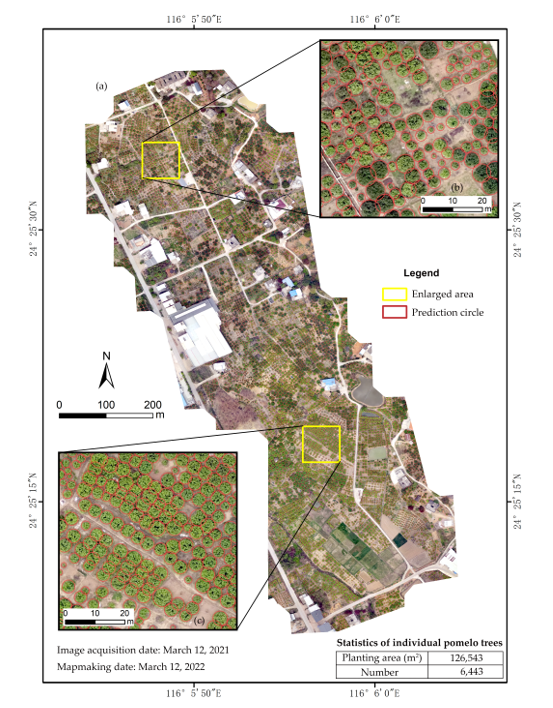

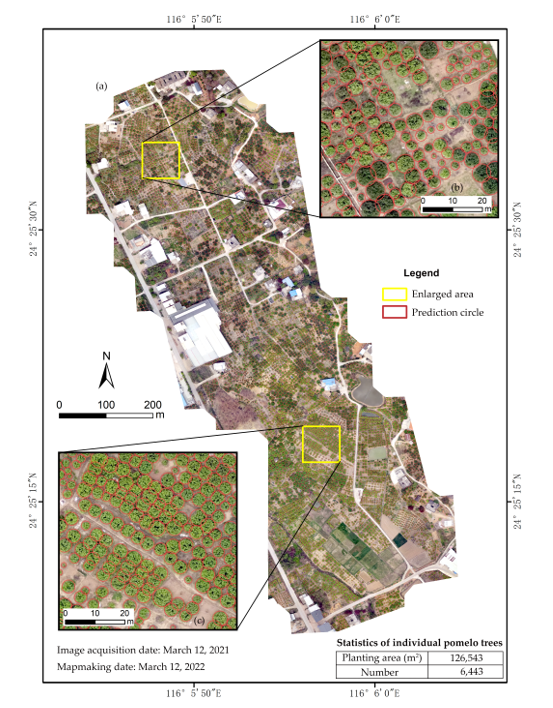

The location and number of individual fruit trees are critical data for planting-area investigation, fruit yield prediction, and smart orchard management and planning. These data are conventionally obtained through manual investigation and statistics, a time-consuming and laborious process. Deep learning object detection models, widely used in computer vision, offer an opportunity to detect individual fruit trees accurately, which is essential for obtaining these data rapidly and reducing human error. This study proposes an approach for detecting individual fruit trees and mapping their spatial distribution by integrating deep learning with unmanned aerial vehicle (UAV) remote sensing. UAV remote sensing was used to collect high-resolution true-color images of fruit trees in experimental pomelo orchards in Meizhou city, South China. An image dataset of individual pomelo tree (IPT) samples for deep learning was constructed from the UAV images through visual interpretation and field investigation. Four scales of the YOLOv5 object detection model (YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x) were trained, validated, and tested on this dataset. The results show that the validation average precision at an intersection-over-union (IoU) threshold of 0.5 (AP@0.5) of the four models reaches 87.8%, 88.5%, 89.1%, and 90.7%, respectively; that is, validation AP increases with model scale. This suggests that YOLOv5x is the preferred high-accuracy model within the YOLOv5 family and is suitable for detecting IPTs. The number of IPTs in the study area was predicted and counted using YOLOv5x, and their spatial distribution map was produced using the non-maximum suppression method and ArcGIS software. This study is intended to provide primary data and technical support for smart orchard management in Meizhou city and other fruit-producing areas.
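To illustrate how non-maximum suppression (NMS) supports tree counting, the sketch below implements standard greedy NMS on axis-aligned bounding boxes and then counts the surviving boxes and takes their centers as tree locations. It is a minimal, hypothetical sketch, not the authors' implementation: it assumes boxes are given as `(x1, y1, x2, y2)` tuples with one confidence score each (for example, detections pooled from overlapping image tiles), and the helper names `iou`, `nms`, and `tree_centers` and the 0.5 IoU threshold are illustrative choices.

```python
from typing import List, Sequence, Tuple

# Hypothetical box format: (x1, y1, x2, y2), with x1 < x2 and y1 < y2.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_thresh: float = 0.5) -> List[int]:
    """Greedy NMS: visit boxes by descending score and keep a box only
    if it does not overlap any already-kept box by more than iou_thresh."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_thresh for k in keep):
            keep.append(i)
    return keep


def tree_centers(boxes: Sequence[Box], keep: List[int]) -> List[Tuple[float, float]]:
    """Approximate each remaining tree's location by its box center."""
    return [((boxes[i][0] + boxes[i][2]) / 2, (boxes[i][1] + boxes[i][3]) / 2)
            for i in keep]


if __name__ == "__main__":
    # Two heavily overlapping detections of one crown plus one separate crown.
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    kept = nms(boxes, scores)
    print("tree count:", len(kept))                    # tree count: 2
    print("centers:", tree_centers(boxes, kept))      # centers: [(5.0, 5.0), (25.0, 25.0)]
```

In the usage example, the first two boxes share an IoU of 81/119 (about 0.68), so the lower-scoring duplicate is suppressed and two trees remain. In a real workflow, the centers would still need to be converted from pixel to map coordinates (for example, using the orthomosaic's georeferencing) before mapping them in a GIS such as ArcGIS.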