Dual Variational Formulations for a Large Class of Non-Convex Models in the Calculus of Variations


A peer-reviewed article of this preprint also exists.

This version is not peer-reviewed

Submitted: 23 January 2023

Posted: 25 January 2023


Abstract

This article develops dual variational formulations for a large class of models in variational optimization. The results are established through basic tools of functional analysis, convex analysis and duality theory. The main duality principle is developed as an application to a Ginzburg-Landau type system in superconductivity in the absence of a magnetic field. In the first sections, we develop new general dual convex variational formulations, more specifically, dual formulations with a large region of convexity around the critical points, which are suitable for non-convex optimization in a large class of models in physics and engineering. Finally, in the last section we present some numerical results concerning the generalized method of lines applied to a Ginzburg-Landau type equation.

Subject: Computer Science and Mathematics - Applied Mathematics

1. Introduction

In this section we establish a dual formulation for a large class of models in non-convex optimization.

The main duality principle is applied to the Ginzburg-Landau system in superconductivity in the absence of a magnetic field.

Such results are based on the works of J.J. Telega and W.R. Bielski [2,3,13,14] and on a D.C. optimization approach developed in Toland [15].

Regarding the other references, details on the Sobolev spaces involved are found in [1]. Related results on convex analysis and duality theory are addressed in [5,6,7,9,12]. Finally, similar models in the physics of superconductivity may be found in [4,11].

Remark 1.

It is worth highlighting that we may generically denote

simply by

where denotes a concerning identity operator.

Similar notations may be used throughout this text whenever their meaning is sufficiently clear.

Also, $\nabla^2$ denotes the Laplace operator and, for real constants $K_2$ and $K_1$, the notation $K_2 \gg K_1$ means that $K_2$ is much larger than $K_1$.

Finally, we adopt the standard Einstein convention of summing up repeated indices, unless otherwise indicated.

In order to clarify the notation, here we introduce the definition of topological dual space.

Definition 1

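(Topological dual spaces). Let U be a Banach space. We define its topological dual space as the set of all continuous linear functionals defined on U. We suppose that such a dual space of U may be represented by another Banach space $U^*$, through a bilinear form $\langle \cdot, \cdot \rangle_U : U \times U^* \to \mathbb{R}$ (here we are referring to standard representations of dual spaces of Sobolev and Lebesgue spaces). Thus, given $f : U \to \mathbb{R}$ linear and continuous, we assume the existence of a unique $u^* \in U^*$ such that

$$f(u) = \langle u, u^* \rangle_U, \quad \forall u \in U.$$

The norm of f, denoted by $\|f\|_{U^*}$, is defined as

$$\|f\|_{U^*} = \sup_{u \in U} \left\{ |\langle u, u^* \rangle_U| \; : \; \|u\|_U \leq 1 \right\} \equiv \|u^*\|_{U^*}.$$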
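For a finite-dimensional illustration of the dual norm, that is, the supremum of $|\langle u, u^* \rangle_U|$ over the unit ball of U, the following minimal sketch assumes $U = \mathbb{R}^n$ with the $\ell^p$ norm, so that $U^*$ is $\mathbb{R}^n$ with the $\ell^q$ norm, $1/p + 1/q = 1$, and the bilinear form is the Euclidean dot product. The helper names `lp_norm`, `pairing` and `norming_vector` are illustrative assumptions and do not appear in the article.

```python
def lp_norm(x, p):
    """l^p norm of a vector given as a list of floats (1 < p < infinity)."""
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)


def pairing(u, u_star):
    """Bilinear form <u, u*>_U; here simply the Euclidean dot product."""
    return sum(a * b for a, b in zip(u, u_star))


def norming_vector(u_star, p):
    """Unit vector u (in l^p) at which <u, u*> attains the dual norm of u*.

    Assumes u_star is not the zero vector.
    """
    q = p / (p - 1.0)
    scale = lp_norm(u_star, q) ** (q - 1.0)
    return [(1.0 if s >= 0 else -1.0) * abs(s) ** (q - 1.0) / scale
            for s in u_star]


p = 3.0
q = p / (p - 1.0)              # conjugate exponent, here 1.5
u_star = [1.0, -2.0, 0.5]      # an arbitrary element of the dual space
u = norming_vector(u_star, p)  # lies on the unit sphere of l^p
dual_norm = pairing(u, u_star)

print(lp_norm(u, p), dual_norm, lp_norm(u_star, q))
```

In this setting the supremum in the definition is attained, and `dual_norm` agrees with the $\ell^q$ norm of `u_star` up to rounding; for any other `u` in the unit ball, Hölder's inequality gives a pairing that is no larger.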

At this point we start to describe the primal and dual variational formulations.

Let be an open, bounded, connected set with a regular (Lipschitzian) boundary denoted by

Firstly we emphasize that, for the Banach space , we have

For the primal formulation we consider the functional where

Here we assume , , . Moreover we denote

Define also by

by

and by

where

It is worth highlighting that in such a case

Furthermore, define the following specific polar functionals specified, namely, by

by

if where

At this point, we give more details about this calculation.

Observe that

Defining we have so that

where are solution of equations (optimality conditions for such a quadratic optimization problem)

and

and therefore

and

Replacing such results into (7) we obtain

if

Finally, is defined by

Define also

by

and by

2. The main duality principle, a convex dual formulation and the corresponding proximal primal functional

Our main result is summarized by the following theorem.

Theorem 1.

Considering the definitions and statements in the last section, suppose also is such that

Under such hypotheses, we have

and

Proof.

Since

from the variation in we obtain

so that

From the variation in we obtain

From the variation in we also obtain

and therefore,

From the variation in u we get

and thus

Finally, from the variation in , we obtain

so that

that is,

From such results and we get

so that

Also from this and from the Legendre transform properties we have

and thus we obtain

Summarizing, we have got

On the other hand

Finally by a simple computation we may obtain the Hessian

in , so that we may infer that is concave in in .

The proof is complete. □
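The Legendre transform properties invoked in the proof can be illustrated on a one-dimensional example. The sketch below assumes the hypothetical convex function $F(v) = v^4/4$, which is not one of the functionals of this article, for which $v^* = F'(v) = v^3$ and $F^*(v^*) = \frac{3}{4}|v^*|^{4/3}$. It checks numerically that $F^*(v^*) = v\,v^* - F(v)$ and that $(F^*)'(v^*) = v$ at $v^* = F'(v)$.

```python
def F(v):
    """Hypothetical smooth convex function used only for illustration."""
    return v ** 4 / 4.0


def dF(v):
    """Derivative of F; defines the dual variable v* = F'(v)."""
    return v ** 3


def F_star(v_star):
    """Closed-form Legendre transform of F."""
    return 0.75 * abs(v_star) ** (4.0 / 3.0)


def dF_star(v_star):
    """Derivative of F*, equal to sign(v*) |v*|^(1/3)."""
    sign = 1.0 if v_star >= 0 else -1.0
    return sign * abs(v_star) ** (1.0 / 3.0)


def legendre_gap(v):
    """F*(v*) - (v v* - F(v)) evaluated at v* = F'(v); zero in exact arithmetic."""
    v_star = dF(v)
    return F_star(v_star) - (v * v_star - F(v))


for v in (-2.0, -0.5, 0.3, 1.7):
    print(v, legendre_gap(v), dF_star(dF(v)))
```

Both printed quantities confirm, up to rounding, that the derivative of the polar functional inverts the derivative of the primal one, which is the property used when passing from the primal to the dual critical-point equations.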

3. A primal dual variational formulation

In this section we develop a more general primal dual variational formulation suitable for a large class of models in non-convex optimization.

Consider again and let and be three times Fréchet differentiable functionals. Let be defined by

Assume is such that

and

Denoting , define by

Denoting and , define also

for an appropriate to be specified.

Observe that in the Hessian of is given by

Observe also that

and

Define now

so that

From this we may infer that and

Moreover, for sufficiently large, is convex in a neighborhood of .

Therefore, in the last lines, we have proven the following theorem.

Theorem 2.

Under the statements and definitions of the last lines, there exist and such that

and is such that

Moreover, is convex in

4. One more duality principle and a related primal dual variational formulation

In this section we establish a new duality principle and a related primal dual formulation.

The results are based on the approach of Toland, [15].
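As a reminder of the classical statement behind this approach, Toland's duality for a difference of convex functions reads $\inf_u \{G(u) - F(u)\} = \inf_{u^*} \{F^*(u^*) - G^*(u^*)\}$. The following minimal sketch checks this identity by grid search in one dimension, assuming the hypothetical choices $G(u) = u^4/4$ and $F(u) = u^2/2$ (a double-well difference that is not taken from this article), whose polar functions are $G^*(v) = \frac{3}{4}|v|^{4/3}$ and $F^*(v) = v^2/2$.

```python
def G(u):
    """Convex part of the hypothetical non-convex function G - F."""
    return u ** 4 / 4.0


def F(u):
    """Convex function subtracted from G."""
    return u ** 2 / 2.0


def G_star(v):
    """Polar (Fenchel conjugate) of G."""
    return 0.75 * abs(v) ** (4.0 / 3.0)


def F_star(v):
    """Polar (Fenchel conjugate) of F."""
    return v ** 2 / 2.0


# Uniform grid on [-3, 3]; it contains the critical points u = +-1, v = +-1.
grid = [i / 1000.0 for i in range(-3000, 3001)]

primal_inf = min(G(u) - F(u) for u in grid)            # inf of the primal
dual_inf = min(F_star(v) - G_star(v) for v in grid)    # inf of Toland's dual

print(primal_inf, dual_inf)
```

Both infima are attained at $\pm 1$ in this example, so the two grid values coincide up to rounding. The grid search is only a check of the identity; it is not the method used in the article.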

4.1. Introduction

Let be an open, bounded, connected set with a regular (Lipschitzian) boundary denoted by

Let be a functional such that

where .

Suppose are both three times Fréchet differentiable convex functionals such that

and

Assume also there exists such that

Moreover, suppose that if is such that

then

At this point we define by

where

Observe that so that

On the other hand, clearly we have

so that we have got

Let .

Since J is strongly continuous, there exist and such that,

From this, considering that is convex on V, we may infer that is continuous at u,

Hence is strongly lower semi-continuous on V, and since is convex we may infer that is weakly lower semi-continuous on V.

Let be a sequence such that

Hence

Suppose there exists a subsequence of such that

From the hypothesis we have

which contradicts

Therefore there exists such that

Since V is reflexive, from this and the Kakutani Theorem, there exists a subsequence of and such that

Consequently, from this and considering that is weakly lower semi-continuous, we have got

so that

Define by

and

Suppose now there exists such that

From the standard necessary conditions, we have

so that

Define now

From these last two equations we obtain

From such results and the Legendre transform properties, we have

so that

and

so that

4.2. The main duality principle and a related primal dual variational formulation

Considering these last statements and results, we may prove the following theorem.

Theorem 3.

Let be an open, bounded, connected set with a regular (Lipschitzian) boundary denoted by

Let be a functional such that

where .

Suppose are both three times Fréchet differentiable functionals such that there exists such that

and

Assume also there exists and such that

Assume is such that

Define

Assume is such that if then

Suppose also

Define by

and

Define also by

and

Observe that since is such that

we have

Let be a small constant.

Define

Under such hypotheses, defining by

we have

Proof.

Observe that from the hypotheses and the results and statements of the last subsection

where

Moreover we have

Also from hypotheses and the last subsection results,

so that clearly we have

From these last results, we may infer that

The proof is complete.

□

Remark 2.

At this point we highlight that has a large region of convexity around the optimal point , for sufficiently large and corresponding sufficiently small.

Indeed, observe that for ,

where is such that

Taking the variation in in this last equation, we obtain

so that

From this we get

On the other hand, from the implicit function theorem

so that

and

Similarly, we may obtain

and

Denoting

and

we have

and

From this we get

about the optimal point

5. A convex dual variational formulation

In this section, again for an open, bounded, connected set with a regular (Lipschitzian) boundary , and , we denote , and by

and

We define also

and and by

and

if where

for some small real parameter and where denotes a concerning identity operator.

Finally, we also define

Assuming

by directly computing we may obtain that, for such specified real constants, is convex in and concave in on

Considering such statements and definitions, we may prove the following theorem.

Theorem 4.

Let be such that

and be such that

Under such hypotheses, we have

so that

Proof.

Observe that so that, since is convex in and concave in on , we obtain

Now we are going to show that

From

we have

and thus

From

we obtain

and thus

Finally, denoting

from

we have

so that

Observe now that

so that

The solution for this last system of equations (30) and (31) is obtained through the relations

and

so that

and

and hence, from the concerning convexity in u on V,

Moreover, from the Legendre transform properties

so that

Joining the pieces, we have got

The proof is complete.

□

Remark 3.

We could have also defined

for some small real parameter . In this case, is positive definite, whereas in the previous case, is negative definite.

6. Another convex dual variational formulation

In this section, again for an open, bounded, connected set with a regular (Lipschitzian) boundary , and , we denote , and by

and

We define also

and and by

and

At this point we define

where

and

Finally, we also define

and by

Moreover, assume

By directly computing we may obtain that for such specified real constants, is concave in on

Indeed, recalling that

and

we obtain

in and

in .

Considering such statements and definitions, we may prove the following theorem.

Theorem 5.

Let be such that

and be such that

Under such hypotheses, we have

so that

Proof.

Observe that so that, since is concave in on , and is quadratic in , we get

Consequently, from this and the Min-Max Theorem, we obtain

Now we are going to show that

From

we have

and thus

Finally, denoting

from

we have

so that

Observe now that

so that

The solution for this last equation is obtained through the relation

so that from this and (39), we get

Thus,

and

and hence, from the concerning convexity in u on V,

Moreover, from the Legendre transform properties

so that

Joining the pieces, we have got

The proof is complete.

□
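The min-max exchange used in the proof above can be illustrated in finite dimensions. The sketch below assumes a hypothetical quadratic function `L(u, v)`, convex in `u` and concave in `v`, which is not one of the functionals of this article, and compares $\inf_u \sup_v L$ with $\sup_v \inf_u L$ on a grid that contains the saddle point $(u, v) = (-0.5, 1.5)$.

```python
def L(u, v):
    """Hypothetical convex-concave function with saddle point (-0.5, 1.5)."""
    return 0.5 * u * u + u * v - 0.5 * v * v - u + 2.0 * v


# Uniform grid on [-3, 3] with step 1/20; it contains -0.5 and 1.5 exactly.
grid = [i / 20.0 for i in range(-60, 61)]

# inf over u of (sup over v), and sup over v of (inf over u), on the grid.
inf_sup = min(max(L(u, v) for v in grid) for u in grid)
sup_inf = max(min(L(u, v) for u in grid) for v in grid)

print(inf_sup, sup_inf, L(-0.5, 1.5))
```

For this function the two values coincide with the value of `L` at the saddle point, which is the finite-dimensional counterpart of exchanging the infimum and the supremum in the dual formulation.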

7. A third duality principle and related convex dual variational formulation

In this section, we assume a finite dimensional version for the model in question, in a finite differences or finite elements context, although the concerning spaces and operators have not been relabeled.

Again, for an open, bounded, connected set with a regular (Lipschitzian) boundary , , and , we denote , and by

and

We define also

and and by

and

At this point we define

where

and

By direct computation we may obtain

so that is convex.

Finally, we also define

and by

We assume and

Recalling that

and

we obtain

in and

Also,

where

and

Observe that at a critical point and so that, for the dual formulation, we set the restrictions and

Thus, we define

and

Observe also that

on , so that is convex on

Considering such statements and definitions, we may prove the following theorem.

Theorem 6.

Let be such that

and be such that

Under such hypotheses, we have

so that

Proof.

Observe that so that, since is convex on the convex set , we have that

Now we are going to show that

From

we have

and thus

Finally, denoting

from

we have

so that

Observe now that

so that

The solution for this last equation is obtained through the relation

so that from this and (39), we get

Thus,

and

and hence, from the corresponding concavity in u on V,

Moreover, from the Legendre transform properties

so that

Finally, observe that

where we recall that .

Joining the pieces, we have got

The proof is complete.

□

8. Closely related primal-dual variational formulations

Consider again the functional where

where , , , and

Observe that

Having obtained , we propose the following exactly penalized primal-dual formulation , where

so that

In particular, if we set

and

we may also define

where

for appropriate

Here we highlight that is concave in on (indeed it is concave on ) and the parameter multiplying a positive definite quadratic functional in u improves the convexity conditions of .

9. One more duality principle suitable for the primal formulation global optimization

In this section we establish one more duality principle and related convex dual formulation suitable for a global optimization of the primal variational formulation.

Let be an open, bounded, connected set with a regular (Lipschitzian) boundary denoted by

For the primal formulation, we define and consider a functional where

Here we assume , and define

and

for an appropriate constant to be specified.

Define also the functionals , and by

and

for appropriate positive constants to be specified.

Moreover, define and and by

and

for appropriate and and

Furthermore, we define

for an appropriate constant to be specified.

Define also

and by

Moreover, assuming .

By directly computing denoting

we may obtain, considering that

on

Moreover,

where

and

At a critical point we have and

With such results, we may define the restrictions

Here, we define

On the other hand, clearly we have

From such results, we may obtain that is convex in and concave in on

9.1. The main duality principle and a related convex dual formulation

Considering the statements and definitions presented in the previous section, we may prove the following theorem.

Theorem 7.

Let be such that

and be such that

Assume also

Under such hypotheses, we have

and

Proof.

Observe that so that, since is convex in and

we obtain

Consequently, from this and the Saddle Point Theorem, we obtain

Now we are going to show that

From

and

we have

and

Observe now that

Denoting

there exists such that

and

so that

Summarizing, we have got

From such results and the Legendre transform properties we get

and

On the other hand, from the variation of in , we have

From such results, since

we get

Finally, from the variation of in we obtain

so that

Thus,

Consequently, from such last results, we have

Summarizing,

Furthermore, also from such last results and the Legendre transform properties, we have

so that

Finally, observe that

Therefore,

so that

Summarizing, we have got

Joining the pieces, we have got

The proof is complete.

□

10. Another duality principle for a related model in phase transition

In this section we present another duality principle for a related model in phase transition.

Let and consider a functional where

and where

and

A global optimum point is not attained for J, so the problem of finding a global minimum for J has no solution.

Nevertheless, one question remains: how do the minimizing sequences behave close to the infimum of J?

We intend to use duality theory to approximately solve such a global optimization problem.
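The non-attainment phenomenon can be made concrete on a textbook example. The sketch below does not use the functional of this section; it assumes the classical double-well functional $J(u) = \frac{1}{2}\int_0^1 ((u')^2 - 1)^2\,dx + \frac{1}{2}\int_0^1 u^2\,dx$ with $u(0) = u(1) = 0$, for which the infimum 0 is not attained. Sawtooth functions with slopes $\pm 1$ and $n$ teeth form a minimizing sequence, with $J(u_n) = 1/(24 n^2)$. The function names and the quadrature parameters are illustrative assumptions.

```python
def sawtooth(x, n):
    """Zigzag function with slopes +1/-1, n teeth on [0, 1], zero at both ends."""
    period = 1.0 / n
    t = x % period
    return min(t, period - t)


def energy(n, cells_per_piece=400):
    """Approximate J(u_n) for the assumed double-well functional.

    The grid is aligned with the kinks of the sawtooth, so that the
    finite-difference slope equals +1 or -1 on every cell up to rounding.
    """
    cells = 2 * n * cells_per_piece
    h = 1.0 / cells
    total = 0.0
    for i in range(cells):
        ua = sawtooth(i * h, n)
        ub = sawtooth((i + 1) * h, n)
        slope = (ub - ua) / h
        mid = 0.5 * (ua + ub)   # midpoint value of the piecewise linear u_n
        total += h * (0.5 * (slope ** 2 - 1.0) ** 2 + 0.5 * mid ** 2)
    return total


energies = [energy(n) for n in (1, 2, 4, 8)]
for n, value in zip((1, 2, 4, 8), energies):
    print(n, value, 1.0 / (24.0 * n ** 2))
```

The energies decrease to zero while the oscillations become finer, so the sequence converges weakly to the zero function, which itself has energy 1/2. This is the type of behavior of minimizing sequences that a relaxed or dual formulation is meant to capture on average.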

Denoting , at this point we define, and by

and

Observe

where refers to a quasi-convex regularization of

We define also

and

by

and

Observe that if is large enough, both and G are convex.

Denoting we also define the polar functional by

Observe that

With such results in mind, we define a relaxed primal dual variational formulation for the primal problem, represented by , where

Having defined such a functional, we may obtain numerical results by solving a sequence of convex auxiliary sub-problems, through the following algorithm.

1. Set and and

2. Choose such that and

3. Set

4. Calculate the solution of the system of equations: and that is and so that and

5. Calculate by solving the system of equations: and that is and

6. If , then stop; else set and go to item 4.

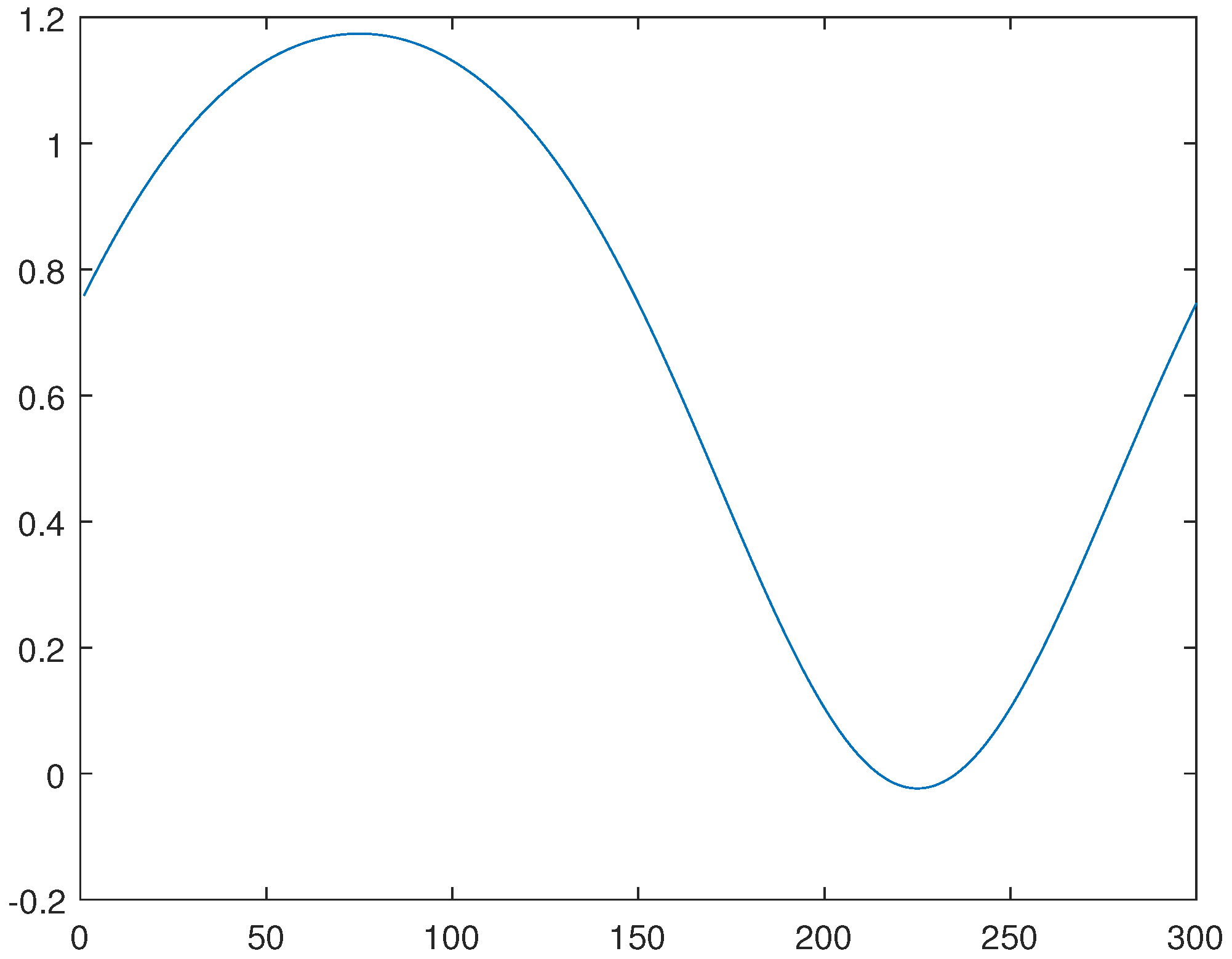

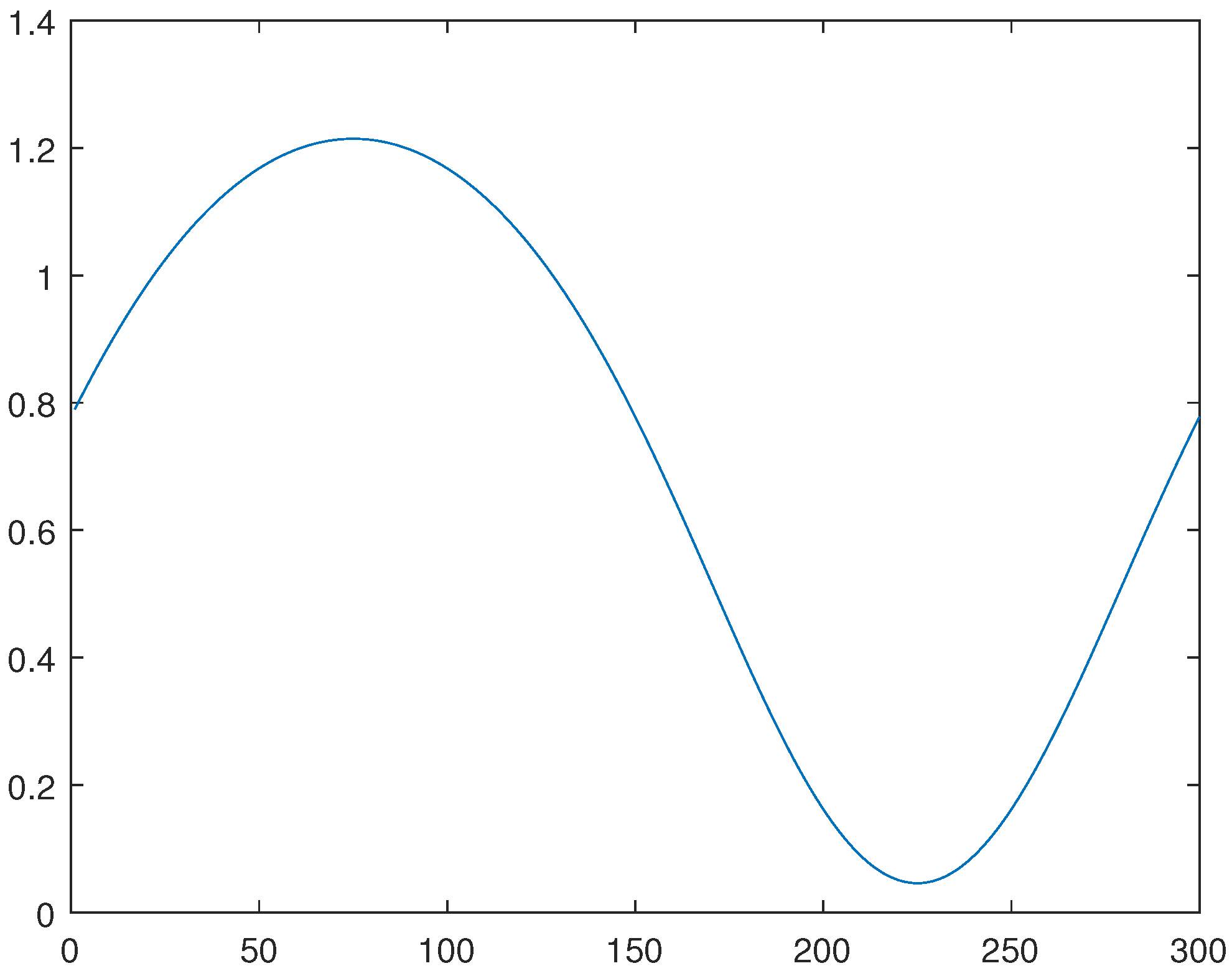

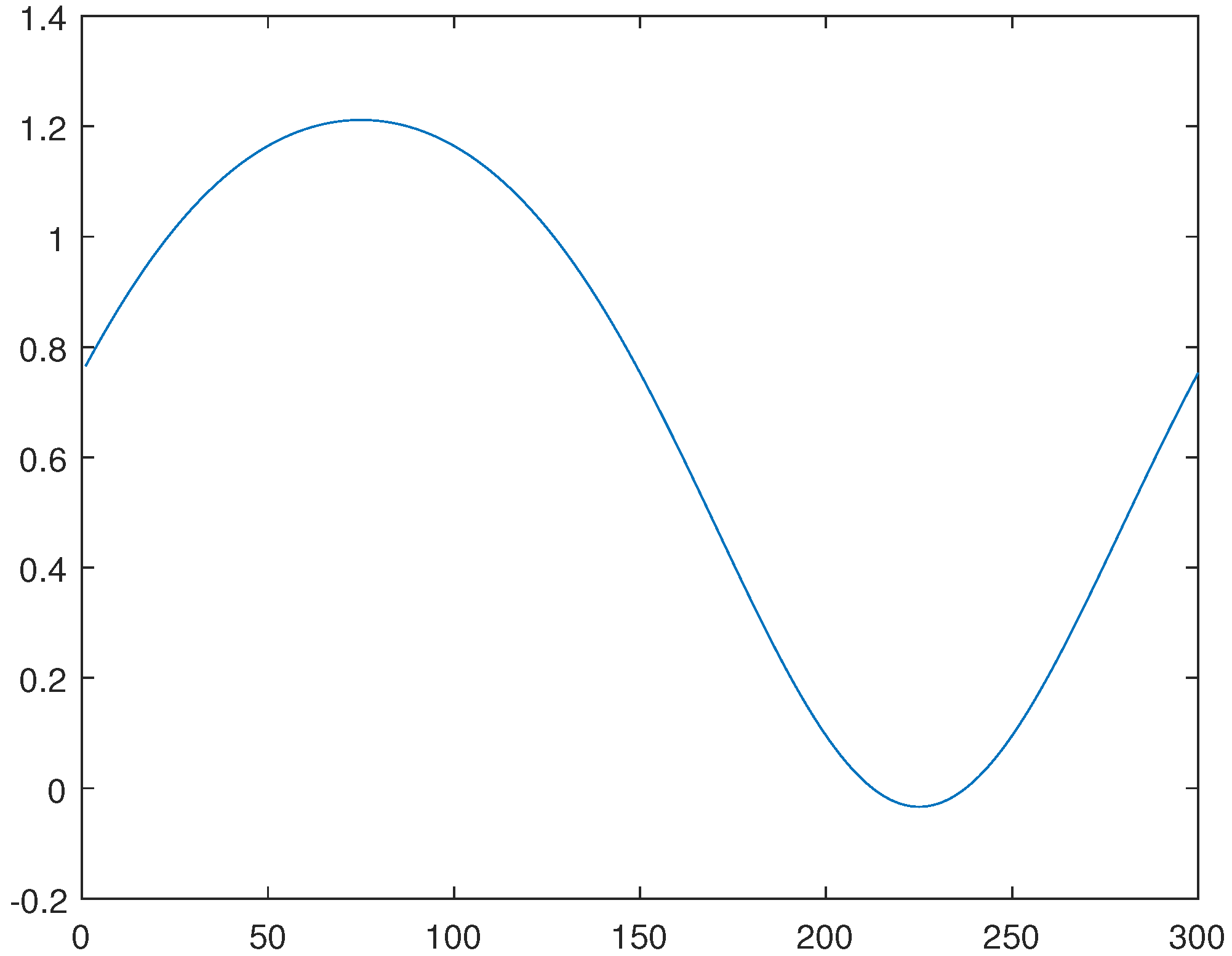

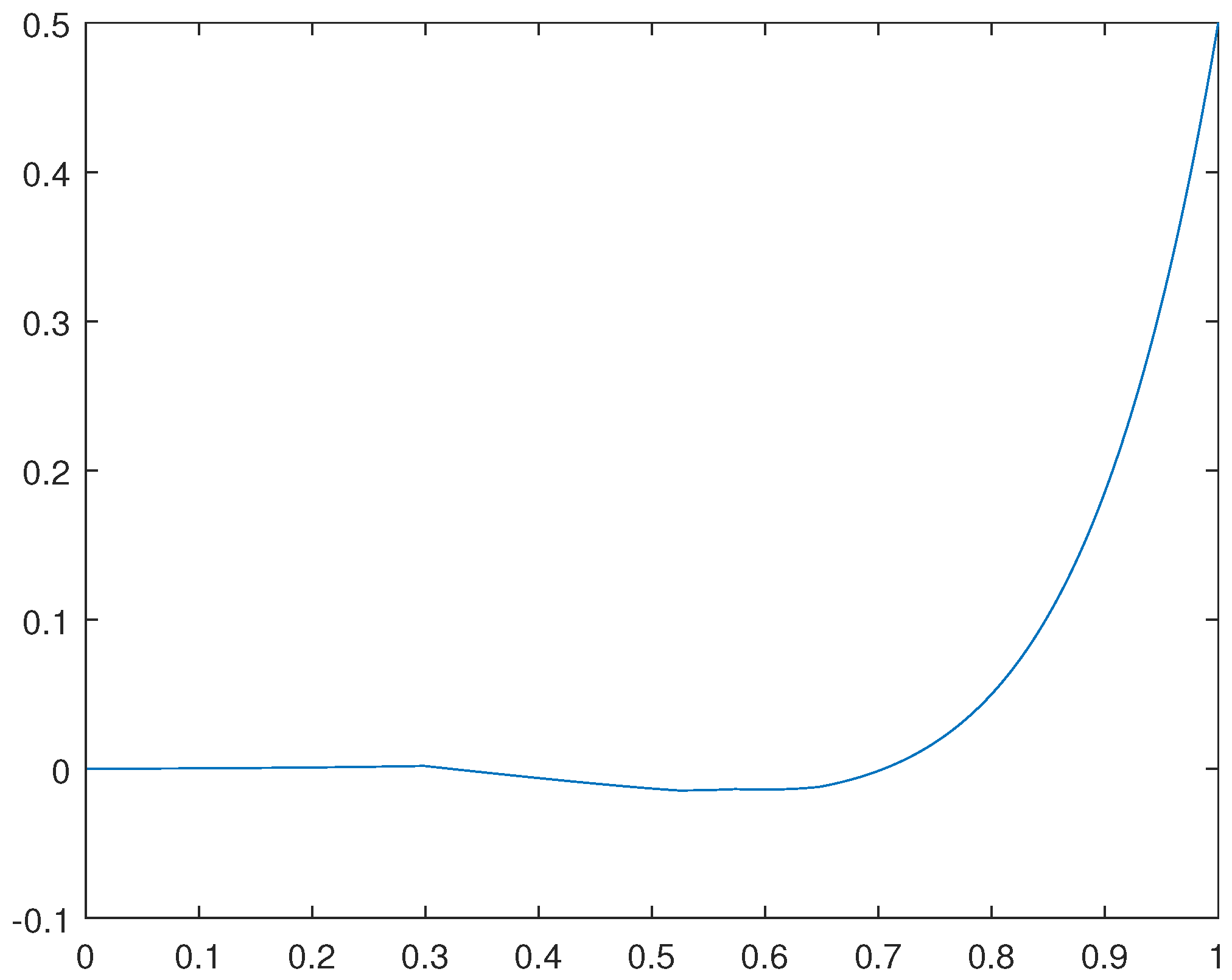

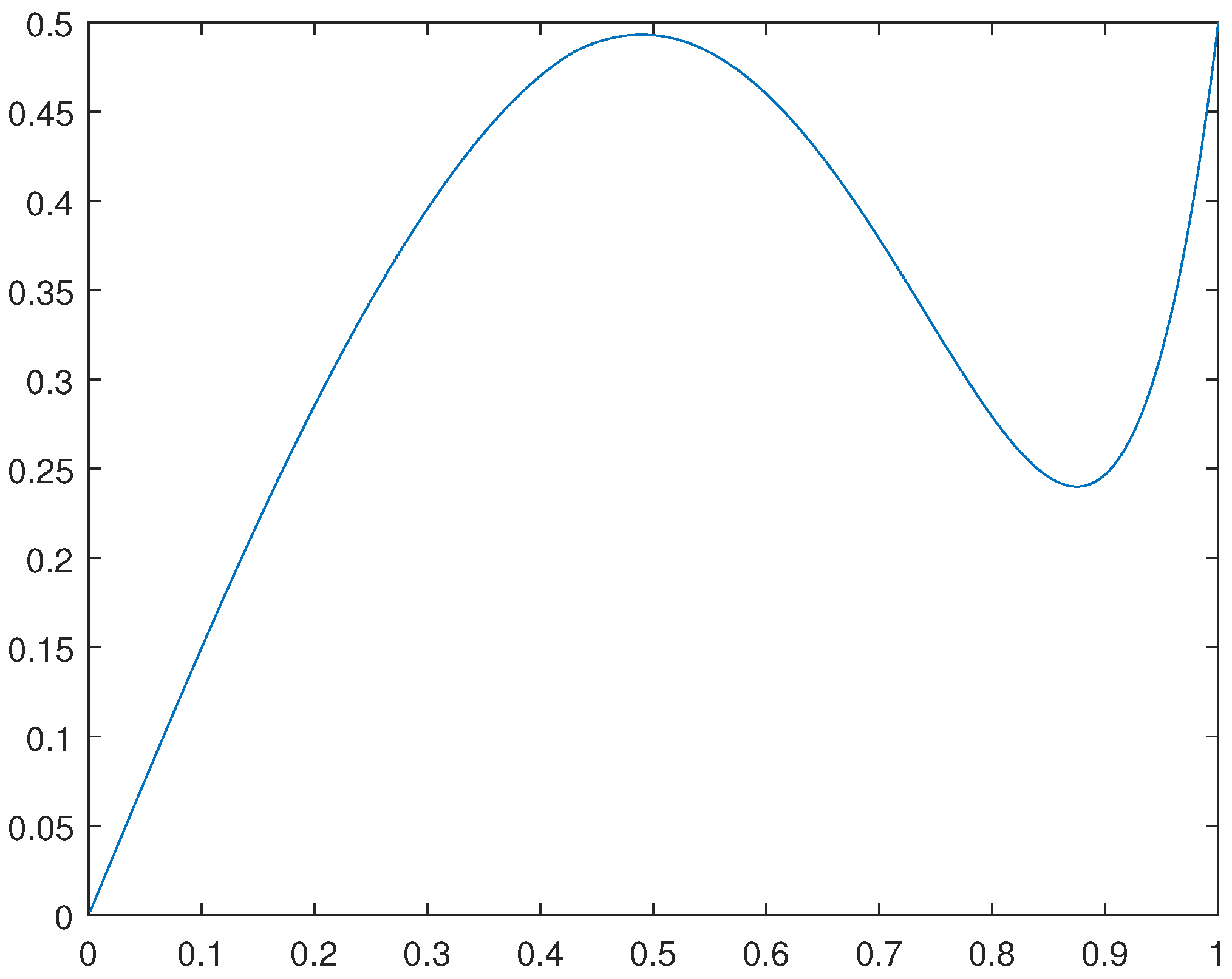

For the case in which , we have obtained numerical results for and . For the solution obtained, see Figure 1. For the case in which , we have obtained numerical results for and . For the solution obtained, see Figure 2.

Remark 4.

Observe that the solutions obtained are approximate critical points. They are not, in a classical sense, the global solutions of the related optimization problems. Indeed, such solutions reflect the average behavior of weak cluster points of the corresponding minimizing sequences.

11. A related numerical computation through the generalized method of lines

We start by recalling that the generalized method of lines was originally introduced in the book entitled "Topics on Functional Analysis, Calculus of Variations and Duality" [7], published in 2011.

Indeed, the present results are extensions and applications of previous ones which have been published since 2011, in books and articles such as [5,7,8,9]. About the Sobolev spaces involved we would mention [1]. Concerning the applications, related models in physics are addressed in [4,11].

We also emphasize that, in such a method, the domain of the partial differential equation in question is discretized in lines (or more generally, in curves) and the concerning solution is written on these lines as functions of boundary conditions and the domain boundary shape.

In fact, in its previous format, this method consists of an application of a kind of partial finite-difference procedure combined with the Banach fixed point theorem to obtain the relation between two adjacent lines (or curves).

In the present article, we propose an improvement in the way we truncate the series solution obtained through an application of the Banach fixed point theorem to find the relation between two adjacent lines. The results obtained are very good even when a typical parameter is very small.

In the next lines and sections we develop this numerical procedure in detail.
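To illustrate the role of the Banach fixed point theorem in relating one line to its neighbors, the following minimal sketch treats a fully discrete, scalar analogue. It assumes a Ginzburg-Landau type node equation $-\varepsilon (u_{n-1} - 2u_n + u_{n+1})/d^2 + \alpha u_n^3 - \beta u_n = f$ with given neighbor values, rewritten as $u_n = T(u_n)$. The specific form of the equation, the mapping `node_map` and all parameter values are assumptions made for illustration; in the generalized method of lines the unknowns are functions on each line, not scalars.

```python
def node_map(u, u_prev, u_next, f, eps, alpha, beta, d):
    """Fixed-point map T obtained by isolating the linear term 2*eps*u/d**2."""
    c = eps / d ** 2
    return (c * (u_prev + u_next) + f + beta * u - alpha * u ** 3) / (2.0 * c)


def node_residual(u, u_prev, u_next, f, eps, alpha, beta, d):
    """Residual of the assumed node equation at u."""
    c = eps / d ** 2
    return -c * (u_prev - 2.0 * u + u_next) + alpha * u ** 3 - beta * u - f


def solve_node(u_prev, u_next, f, eps=1.0, alpha=1.0, beta=1.0, d=0.1,
               tol=1e-14, max_iter=500):
    """Iterate u <- T(u), starting from the average of the two neighbors.

    T is a contraction near the solution when
    |beta - 3*alpha*u**2| * d**2 / (2*eps) < 1, which holds for these defaults.
    """
    u = 0.5 * (u_prev + u_next)
    for _ in range(max_iter):
        u_new = node_map(u, u_prev, u_next, f, eps, alpha, beta, d)
        if abs(u_new - u) <= tol:
            return u_new
        u = u_new
    raise RuntimeError("fixed-point iteration did not converge")


u_mid = solve_node(u_prev=0.0, u_next=1.0, f=1.0)
print(u_mid, node_residual(u_mid, 0.0, 1.0, 1.0, 1.0, 1.0, 1.0, 0.1))
```

The contraction factor is proportional to $d^2/\varepsilon$, so for a fixed spacing the plain iteration slows down or fails as $\varepsilon$ becomes small. This is consistent with the emphasis placed here on how the series obtained from the fixed-point argument is truncated.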

11.1. An improvement for the generalized method of lines

Let where

Consider the problem of solving the partial differential equation

Here

, and

In a partial finite differences scheme, such a system stands for

with the boundary conditions

and

Here N is the number of lines and

In particular, for we have

so that

We solve this last equation through the Banach fixed point theorem, obtaining as a function of

Indeed, we may set

and

Thus, we may obtain

Similarly, for , we have

We solve this last equation through the Banach fixed point theorem, obtaining as a function of and

Indeed, we may set

and

Thus, we may obtain

Now reasoning inductively, having

we may get

We solve this last equation through the Banach fixed point theorem, obtaining as a function of and

Indeed, we may set

and

Thus, we may obtain

We have obtained ,

In particular, so that we may obtain

Similarly,

and so on, up to obtaining

The problem is then approximately solved.

11.2. Software in Mathematica for solving such an equation

We recall that the equation to be solved is a Ginzburg-Landau type one, where

Here

, and In a partial finite differences scheme, such a system stands for

with the boundary conditions

and

Here N is the number of lines and

At this point we present the corresponding software for an approximate solution.

This software is for $N = 10$ (10 lines) and .

[Mathematica listing: the code lines were not preserved in this copy.]

The numerical expressions for the solutions of the concerning lines are given by

11.3. Some plots concerning the numerical results

In this section we present the lines related to the results obtained in the last section.

Indeed, we present these lines, in a first step, for the previous results obtained through the generalized method of lines and, in a second step, through a numerical method which is a combination of Newton's method and the generalized method of lines. In a third step, we also present the graphs obtained by taking the expressions of the lines as those given by the generalized method of lines, up to the numerical coefficients of each function term, which are obtained by the numerical optimization of the functional J specified below. We consider the case in which and .
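For readers who wish to experiment with the Newton ingredient of the second step, the following minimal sketch applies a plain Newton iteration to a fully discretized one-dimensional analogue. It assumes the equation $-\varepsilon u'' + \alpha u^3 - \beta u = f$ on $(0,1)$ with $u(0) = u(1) = 0$, discretized by central finite differences, and solves each tridiagonal Newton system with the Thomas algorithm. This is not the combined Newton and generalized method of lines procedure of the article; the function names and the parameter values ($\varepsilon = \alpha = \beta = 1$, $f \equiv 1$, 19 interior nodes) are assumptions.

```python
def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system; lower[0] and upper[-1] are ignored."""
    n = len(diag)
    c = [0.0] * n
    g = [0.0] * n
    c[0] = upper[0] / diag[0]
    g[0] = rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / m
        g[i] = (rhs[i] - lower[i] * g[i - 1]) / m
    x = [0.0] * n
    x[-1] = g[-1]
    for i in range(n - 2, -1, -1):
        x[i] = g[i] - c[i] * x[i + 1]
    return x


def residual(u, f, eps, alpha, beta, d):
    """Finite-difference residual with homogeneous Dirichlet boundary values."""
    n = len(u)
    r = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        r.append(-eps * (left - 2.0 * u[i] + right) / d ** 2
                 + alpha * u[i] ** 3 - beta * u[i] - f[i])
    return r


def newton_solve(f, eps=1.0, alpha=1.0, beta=1.0, tol=1e-10, max_iter=50):
    """Newton iteration from the zero initial guess on len(f) interior nodes."""
    n = len(f)
    d = 1.0 / (n + 1)
    off = -eps / d ** 2
    u = [0.0] * n
    for _ in range(max_iter):
        r = residual(u, f, eps, alpha, beta, d)
        if max(abs(x) for x in r) < tol:
            return u
        # Jacobian: tridiagonal with constant off-diagonals.
        diag = [2.0 * eps / d ** 2 + 3.0 * alpha * ui ** 2 - beta for ui in u]
        delta = thomas([off] * n, diag, [off] * n, [-x for x in r])
        u = [ui + di for ui, di in zip(u, delta)]
    raise RuntimeError("Newton iteration did not converge")


f = [1.0] * 19
u = newton_solve(f)
print(max(u))
```

With these mild parameters the iteration converges in a few steps from the zero initial guess. For small $\varepsilon$ convergence from an arbitrary guess is not guaranteed, which is one motivation for seeding such a solver with the expressions delivered by the generalized method of lines.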

For the procedure mentioned above as the third step, recalling that lines, considering that , we may approximately assume the following general line expressions:

Defining

and

we obtain by numerically minimizing J.

Hence, we have obtained the following lines for these cases. For such graphs, we have considered 300 nodes in x, with as units in

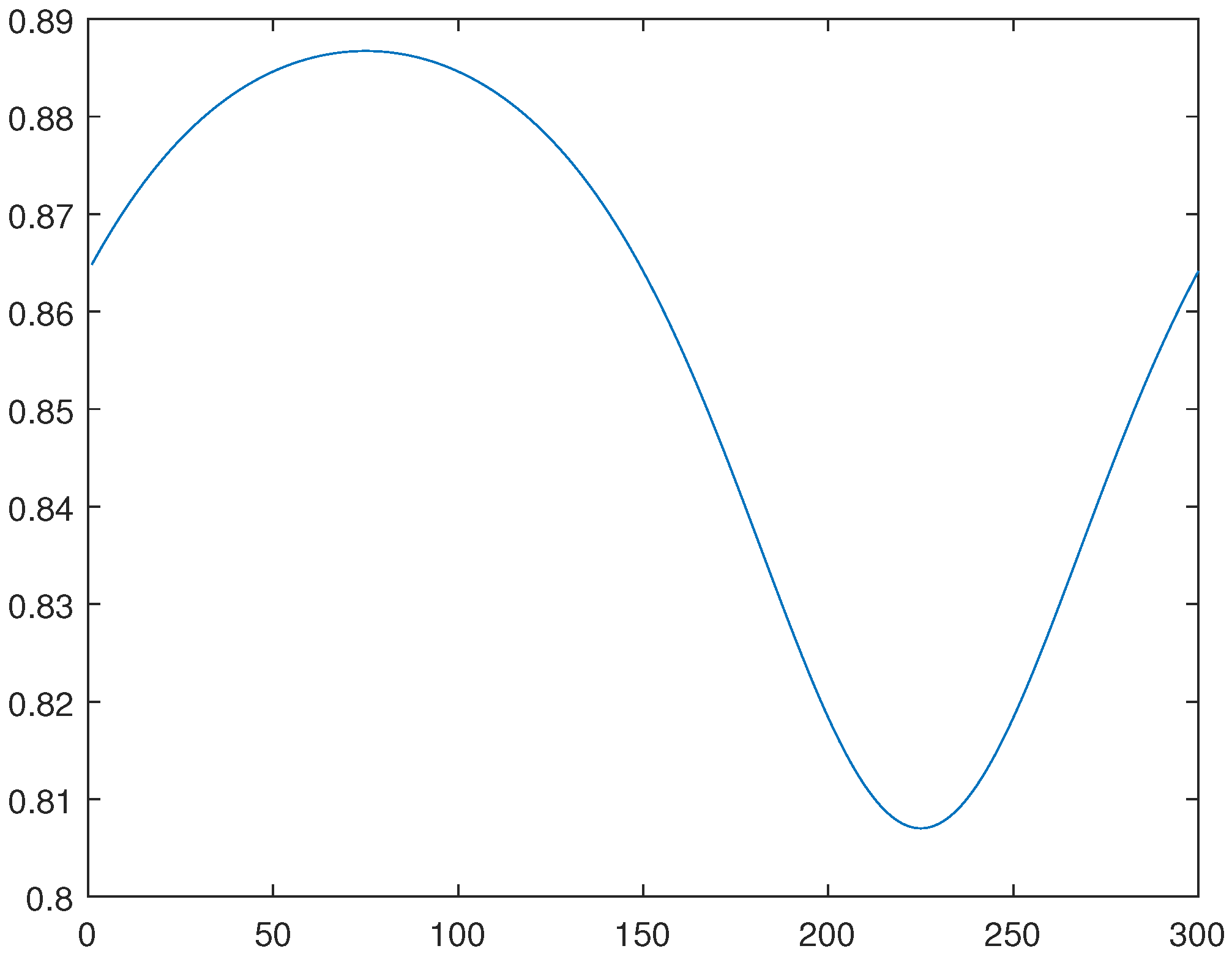

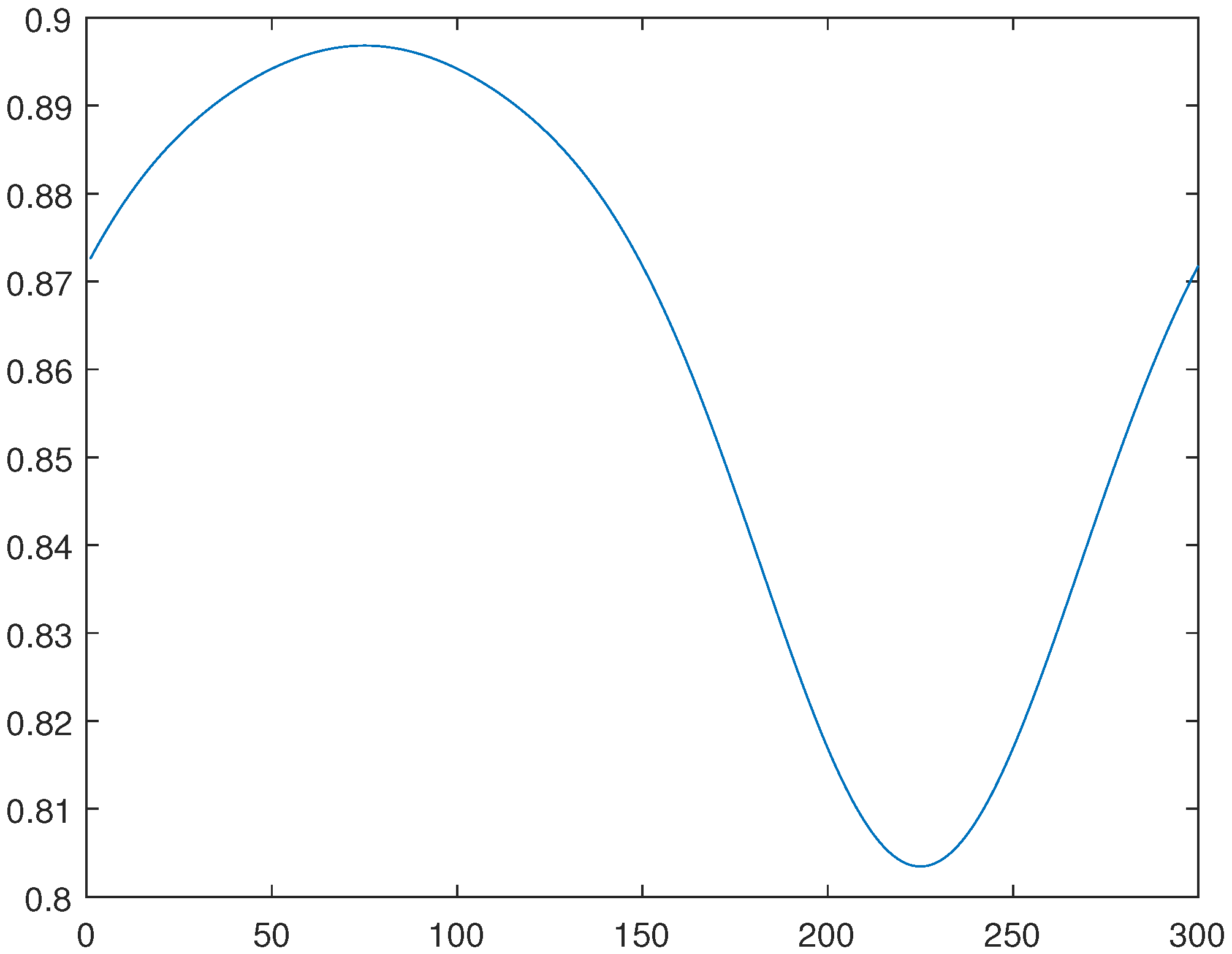

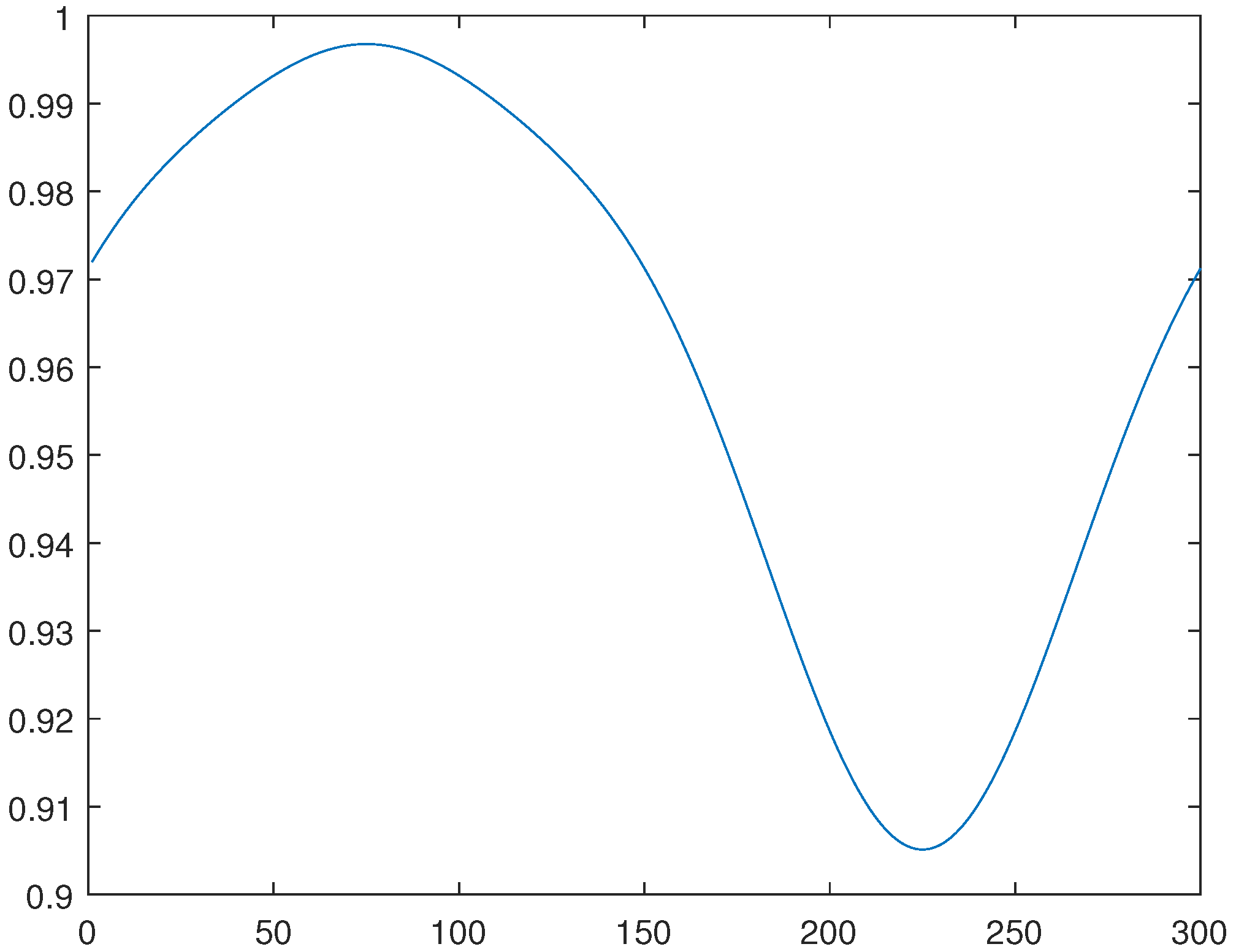

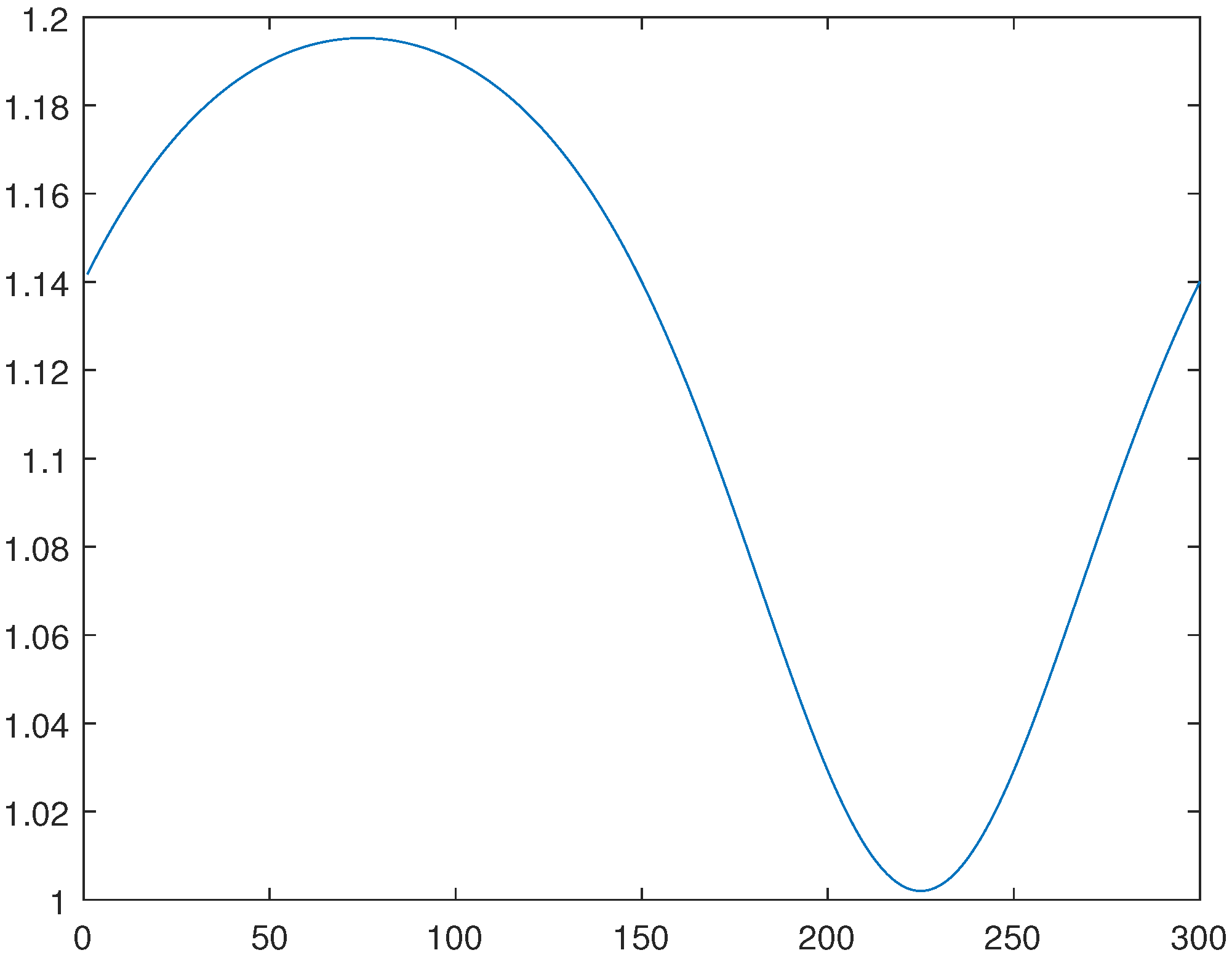

For Lines 2, 4, 6 and 8 obtained through the generalized method of lines, see Figures 3, 6, 9 and 12.

For Lines 2, 4, 6 and 8 obtained through a combination of Newton's method and the generalized method of lines, see Figures 4, 7, 10 and 13.

Finally, for Lines 2, 4, 6 and 8 obtained through the minimization of the functional J, see Figures 5, 8, 11 and 14.

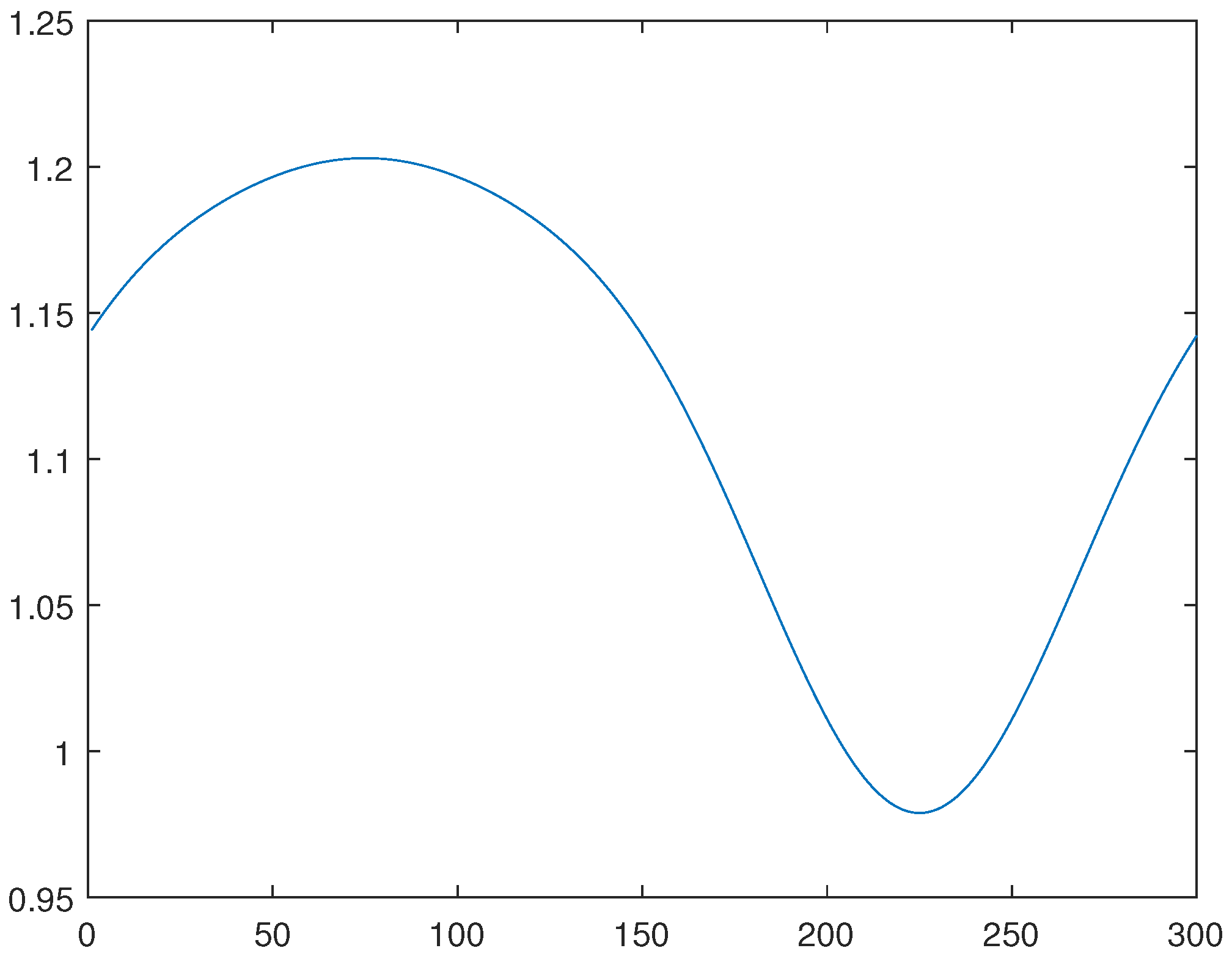

Figure 3.

Line 2, solution through the general method of lines

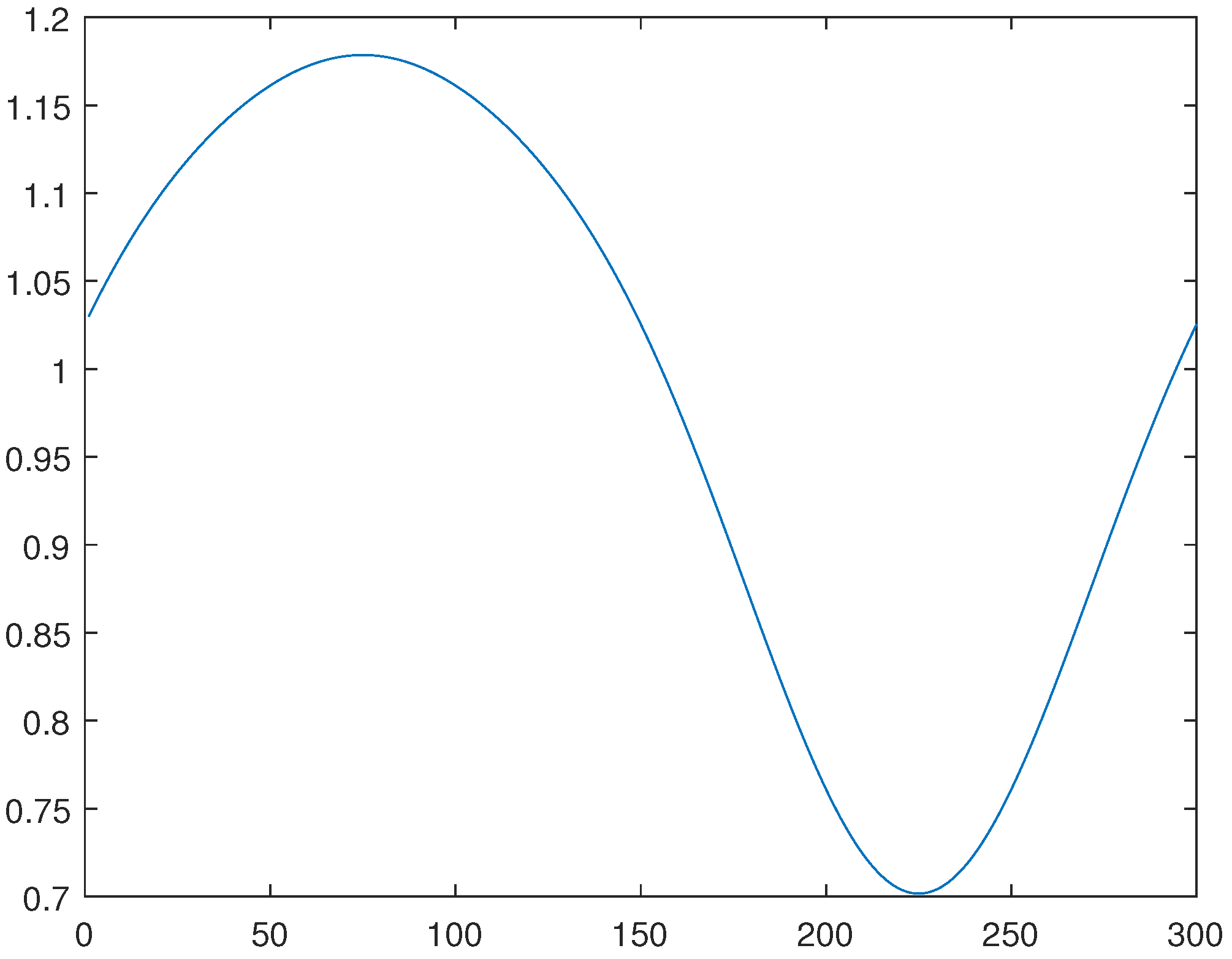

Figure 4.

Line 2, solution through the Newton's Method

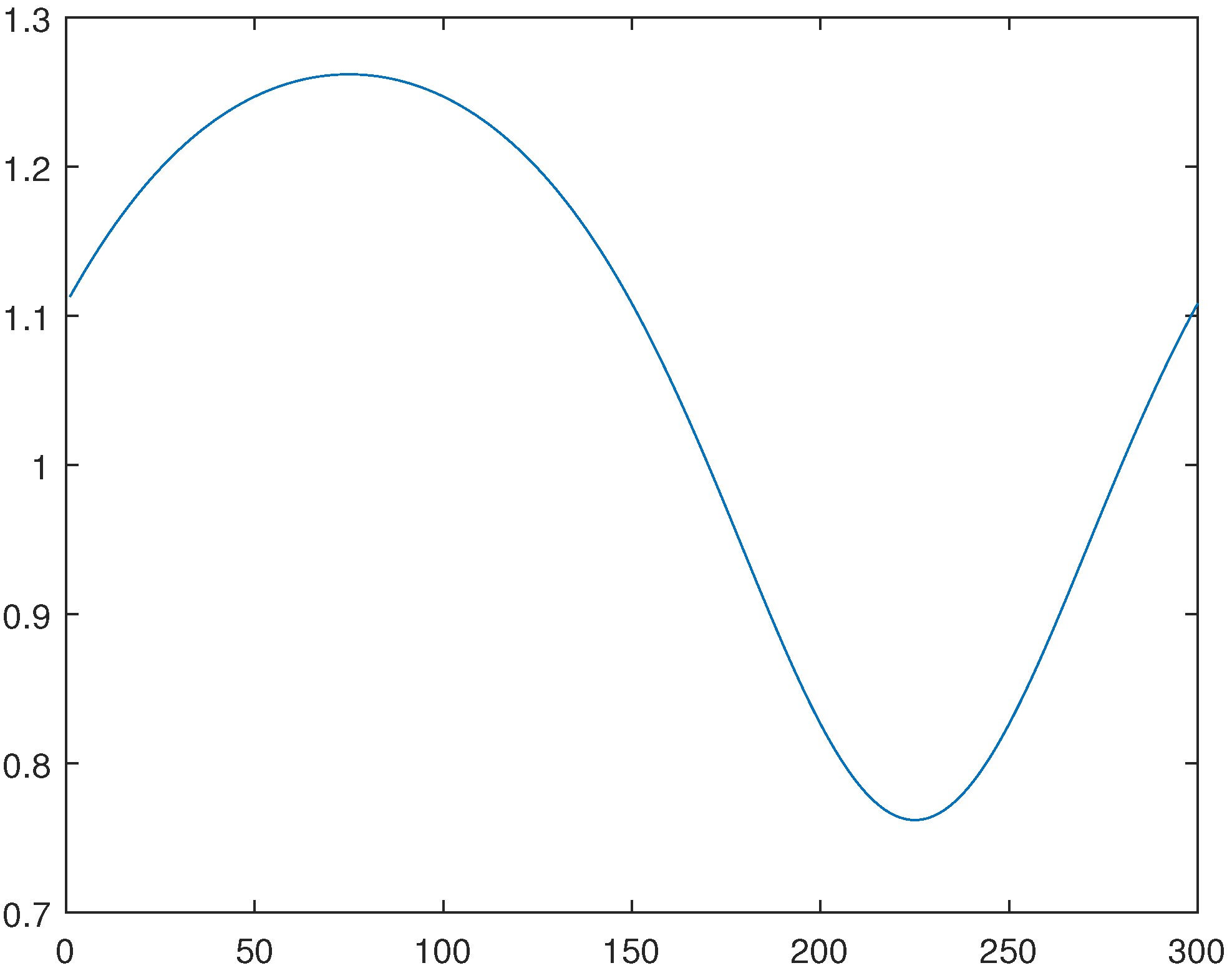

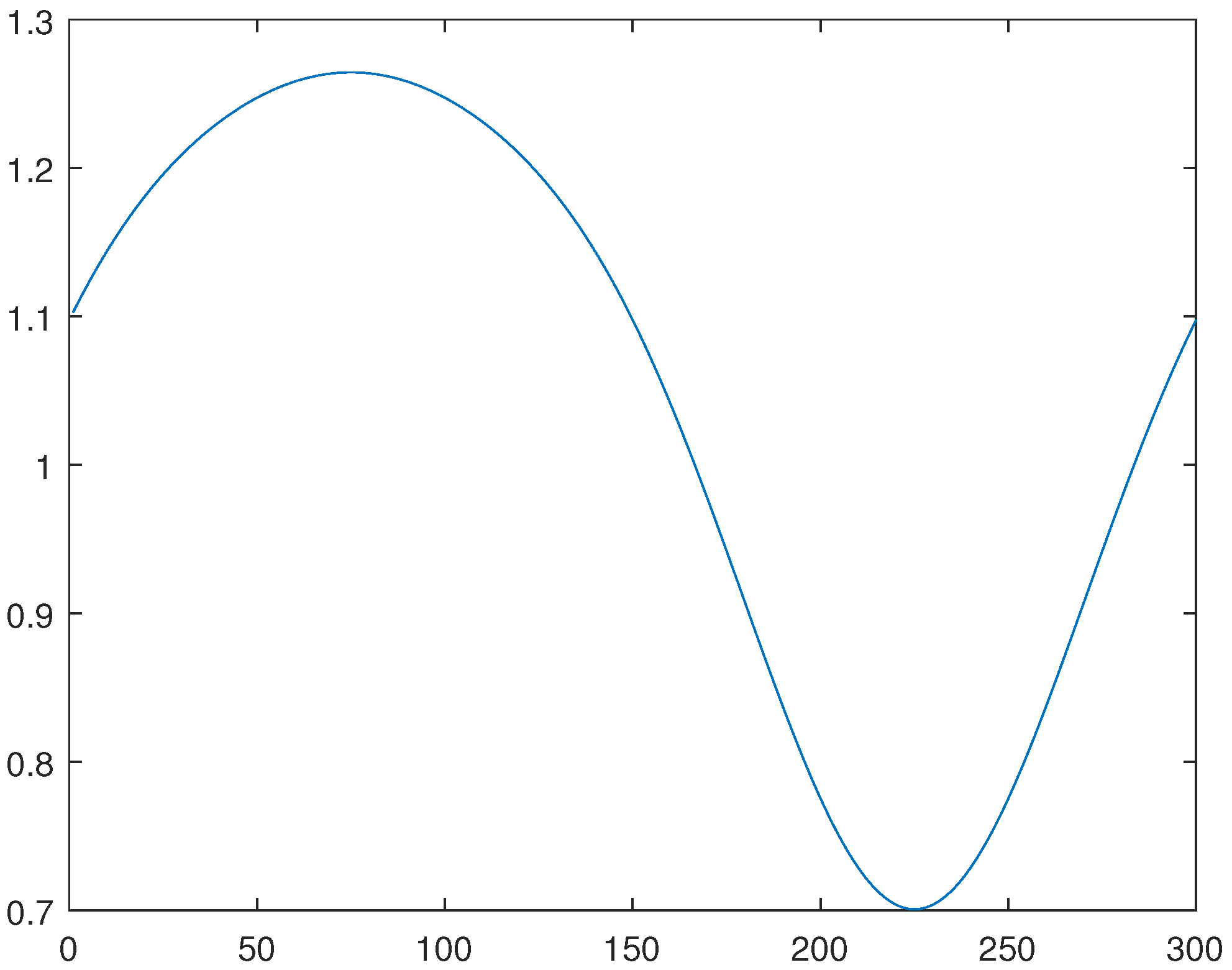

Figure 5.

Line 2, solution through the minimization of functional J

Figure 6.

Line 4, solution through the general method of lines

Figure 7.

Line 4, solution through the Newton's Method

Figure 8.

Line 4, solution through the minimization of functional J

Figure 9.

Line 6, solution through the general method of lines

Figure 10.

Line 6, solution through the Newton's Method

Figure 11.

Line 6, solution through the minimization of functional J

Figure 12.

Line 8, solution through the general method of lines

Figure 13.

Line 8, solution through the Newton's Method

Figure 14.

Line 8, solution through the minimization of functional J

12. Conclusion

In the first part of this article we develop duality principles for non-convex variational optimization. In the final sections we propose dual convex formulations suitable for a large class of models in physics and engineering. In the last section, we present an advance in the computation of a solution of a partial differential equation through the generalized method of lines. In particular, in its previous versions, we used to truncate the series in ; however, we have realized that the results are much better when the line solutions are taken as series in and its derivatives, as indicated in the present software.

This is a small change relative to the previous procedure, but it greatly improves the results when the parameter is small.

Indeed, with a sufficiently large N (number of lines), we may obtain very good qualitative results even when is very small.

References

1. R.A. Adams and J.J.F. Fournier, Sobolev Spaces, 2nd edn. (Elsevier, New York, 2003).

2. W.R. Bielski, A. Galka, J.J. Telega, The Complementary Energy Principle and Duality for Geometrically Nonlinear Elastic Shells. I. Simple case of moderate rotations around a tangent to the middle surface. Bulletin of the Polish Academy of Sciences, Technical Sciences, Vol. 38, No. 7-9, 1988.

3. W.R. Bielski and J.J. Telega, A Contribution to Contact Problems for a Class of Solids and Structures, Arch. Mech., 37, 4-5, pp. 303-320, Warszawa 1985.

4. J.F. Annett, Superconductivity, Superfluids and Condensates, 2nd edn. (Oxford Master Series in Condensed Matter Physics, Oxford University Press, Reprint, 2010).

5. F.S. Botelho, Functional Analysis, Calculus of Variations and Numerical Methods in Physics and Engineering, CRC Taylor and Francis, Florida, 2020.

6. F.S. Botelho, Variational Convex Analysis, Ph.D. thesis, Virginia Tech, Blacksburg, VA, USA, (2009).

7. F. Botelho, Topics on Functional Analysis, Calculus of Variations and Duality, Academic Publications, Sofia, (2011).

8. F. Botelho, Existence of solution for the Ginzburg-Landau system, a related optimal control problem and its computation by the generalized method of lines, Applied Mathematics and Computation, 218, 11976-11989, (2012).

9. F. Botelho, Functional Analysis and Applied Optimization in Banach Spaces, Springer Switzerland, 2014.

10. J.C. Strikwerda, Finite Difference Schemes and Partial Differential Equations, SIAM, second edition (Philadelphia, 2004).

11. L.D. Landau and E.M. Lifshitz, Course of Theoretical Physics, Vol. 5 - Statistical Physics, part 1. (Butterworth-Heinemann, Elsevier, reprint 2008).

12. R.T. Rockafellar, Convex Analysis, Princeton Univ. Press, (1970).

13. J.J. Telega, On the complementary energy principle in non-linear elasticity. Part I: Von Karman plates and three dimensional solids, C.R. Acad. Sci. Paris, Serie II, 308, 1193-1198; Part II: Linear elastic solid and non-convex boundary condition. Minimax approach, ibid, pp. 1313-1317 (1989).

14. A. Galka and J.J. Telega, Duality and the complementary energy principle for a class of geometrically non-linear structures. Part I. Five parameter shell model; Part II. Anomalous dual variational principles for compressed elastic beams, Arch. Mech. 47 (1995) 677-698, 699-724.

15. J.F. Toland, A duality principle for non-convex optimisation and the calculus of variations, Arch. Rat. Mech. Anal., 71, No. 1 (1979), 41-61.

Figure 1.

solution for the case .

Figure 2.

solution for the case .

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Copyright: This open access article is published under a Creative Commons CC BY 4.0 license, which permits free download, distribution, and reuse, provided that the author and preprint are cited in any reuse.
