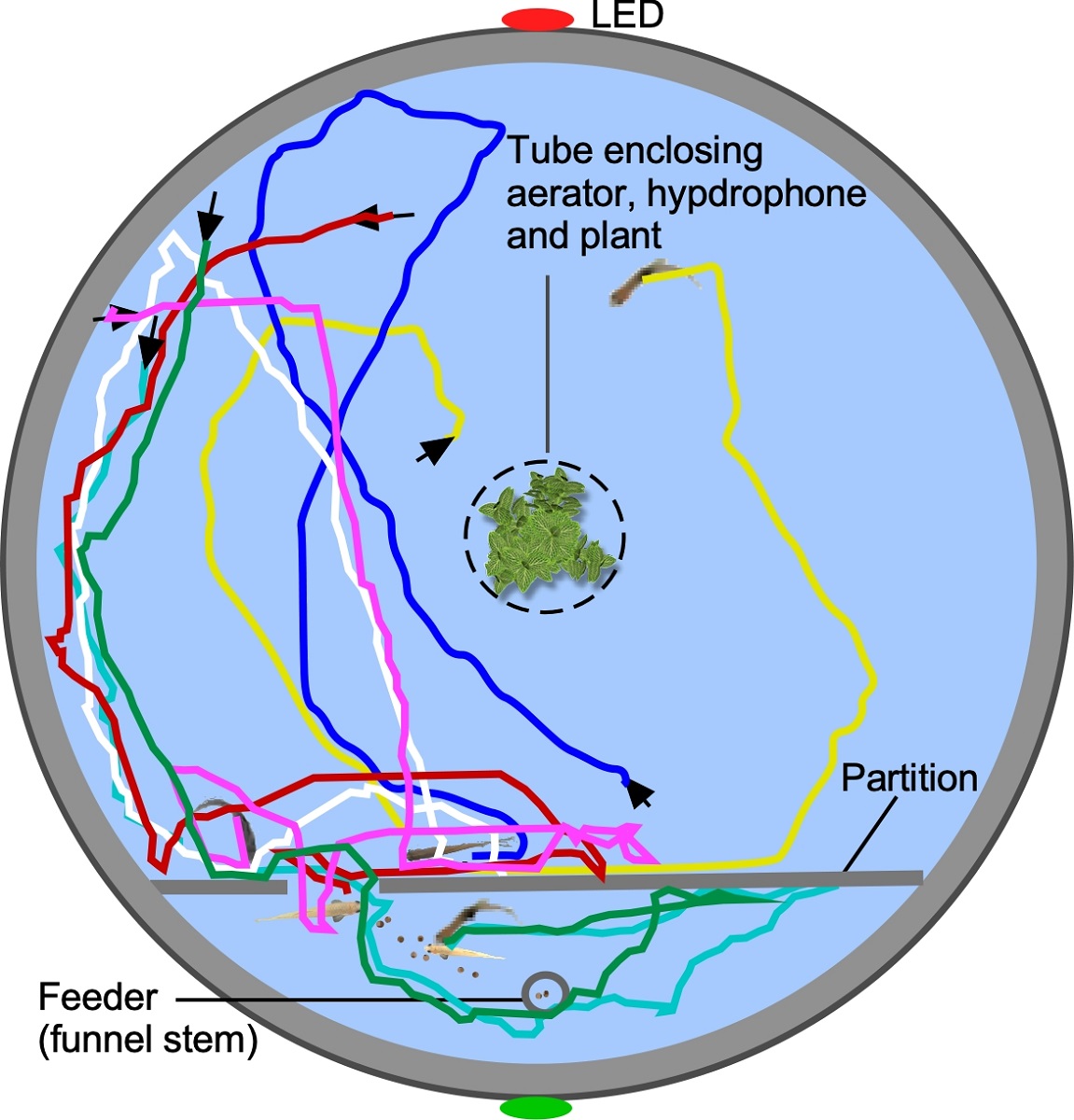

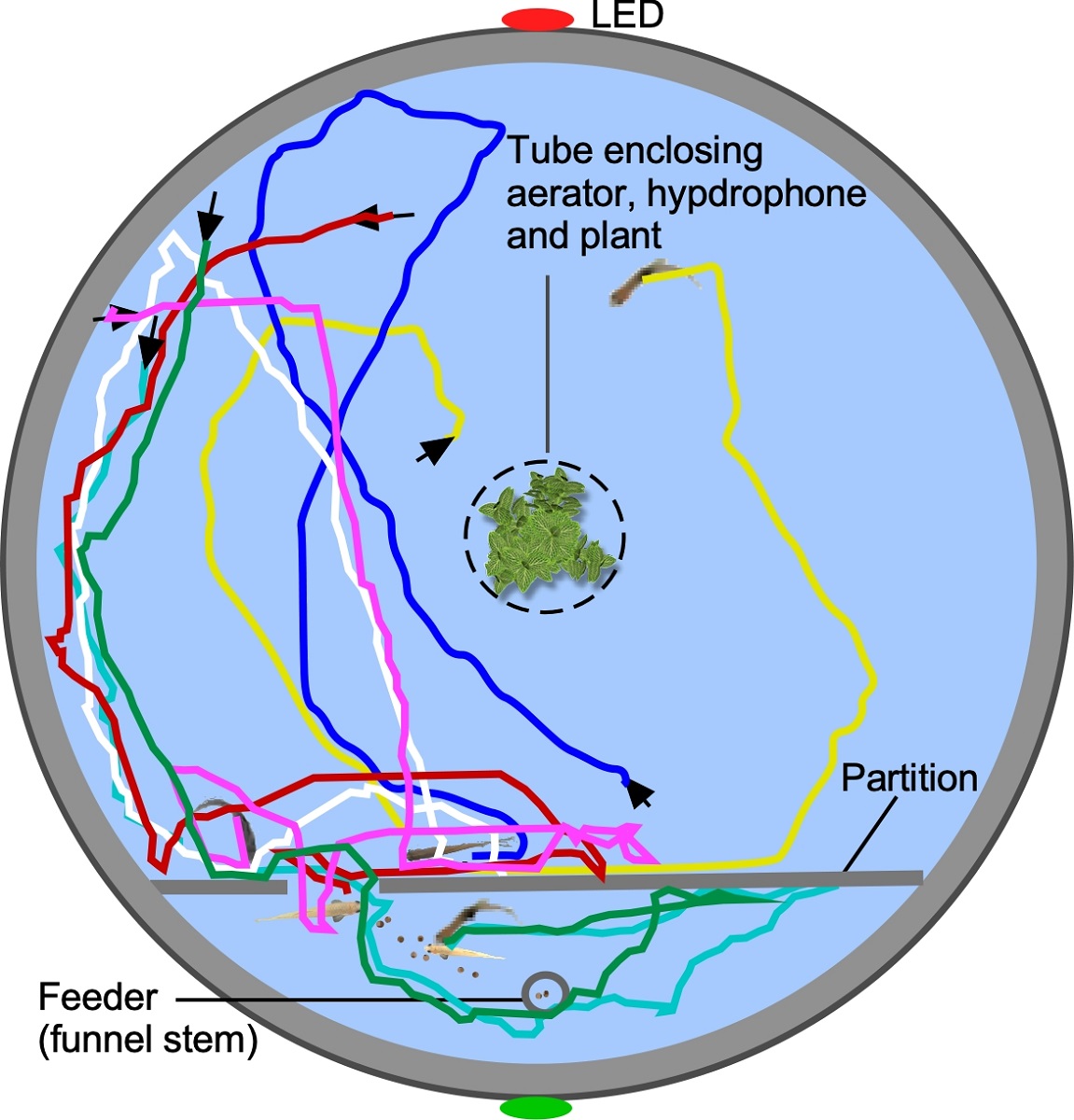

Directed movement towards a target requires spatial working memory as well as the processing of sensory inputs and motivational drive. In a stimulus-driven operant conditioning paradigm designed to train zebrafish, we present a pulse of light via LEDs and/or sounds via an underwater transducer. A webcam placed below a glass tank records the swimming behavior of the fish. During operant conditioning, a fish must interrupt an infrared beam at one location to obtain a small food reward at the same or a different location. A timing-gated interrupt activates the robotic-arm and feeder stepper motors via custom software controlling a microcontroller (Arduino). “Ardulink”, a Java library, implements the Arduino-computer communication protocols. In this way, stimulus-conditioned directional swimming is fully automated. A user-friendly interface provides precise multiday scheduling of training, including the timing, location and intensity of stimuli, as well as feeder control. Our training paradigm permits tracking of learning by monitoring the turning, location, response times and directional swimming of individual fish. This facilitates comparison of performance within and across a cohort of animals. We demonstrate the ability to train and test zebrafish using visual and auditory stimuli. Current methods for associative conditioning often involve human intervention, which is labor intensive, stressful to the animals, and introduces noise into the data. Our flexible paradigm requires only a simple apparatus and minimal human intervention. Our scheduling and control software and apparatus (NemoTrainer) can be used to screen neurologic drugs and to test the effects of CRISPR-based and optogenetic modifications of neural circuits on sensation, locomotion, learning and memory.
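The timing-gated reward decision and the per-fish response-time tracking described above can be illustrated with a minimal, hardware-free sketch. It assumes that stimulus onset and infrared beam-break events are available as timestamps in seconds and that a break counts only if it falls within a fixed response window after the stimulus. The names `gate_trial`, `summarize`, `TrialResult` and `window_s` are hypothetical and are not part of NemoTrainer; the Arduino/Ardulink step that would actually drive the feeder stepper motors is deliberately omitted.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class TrialResult:
    rewarded: bool
    latency_s: Optional[float]  # stimulus onset -> first valid beam break


def gate_trial(stimulus_onset_s: float,
               window_s: float,
               beam_breaks_s: Sequence[float]) -> TrialResult:
    """Hypothetical timing gate: reward only if the IR beam is broken
    within `window_s` seconds after stimulus onset.

    In a real rig a rewarded result would trigger the feeder motors;
    here we only return the decision and the response latency.
    """
    for t in sorted(beam_breaks_s):
        if t < stimulus_onset_s:
            continue  # breaks before the stimulus do not count
        latency = t - stimulus_onset_s
        if latency <= window_s:
            return TrialResult(True, latency)
        break  # first post-stimulus break was too late; later ones are too
    return TrialResult(False, None)


def summarize(results: List[TrialResult]) -> Tuple[float, Optional[float]]:
    """Fraction of rewarded trials and mean latency of rewarded trials."""
    if not results:
        return 0.0, None
    rewarded = [r for r in results if r.rewarded]
    rate = len(rewarded) / len(results)
    mean_latency = (sum(r.latency_s for r in rewarded) / len(rewarded)
                    if rewarded else None)
    return rate, mean_latency


if __name__ == "__main__":
    session = [
        gate_trial(10.0, 5.0, [8.0, 12.5]),  # valid break 2.5 s after onset
        gate_trial(30.0, 5.0, [36.0]),       # break 6 s after onset: too late
        gate_trial(50.0, 5.0, []),           # no response
    ]
    print(summarize(session))
```

Keeping the gate a pure function of timestamps makes it easy to test offline and to compare success rates and latencies within and across fish. The hardware layer then only needs to forward beam-break times and act on the returned decision.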