Submitted: 01 November 2023

Posted: 02 November 2023


Abstract

Keywords:

1. Introduction

1.1. Related Work

1.2. Contributions

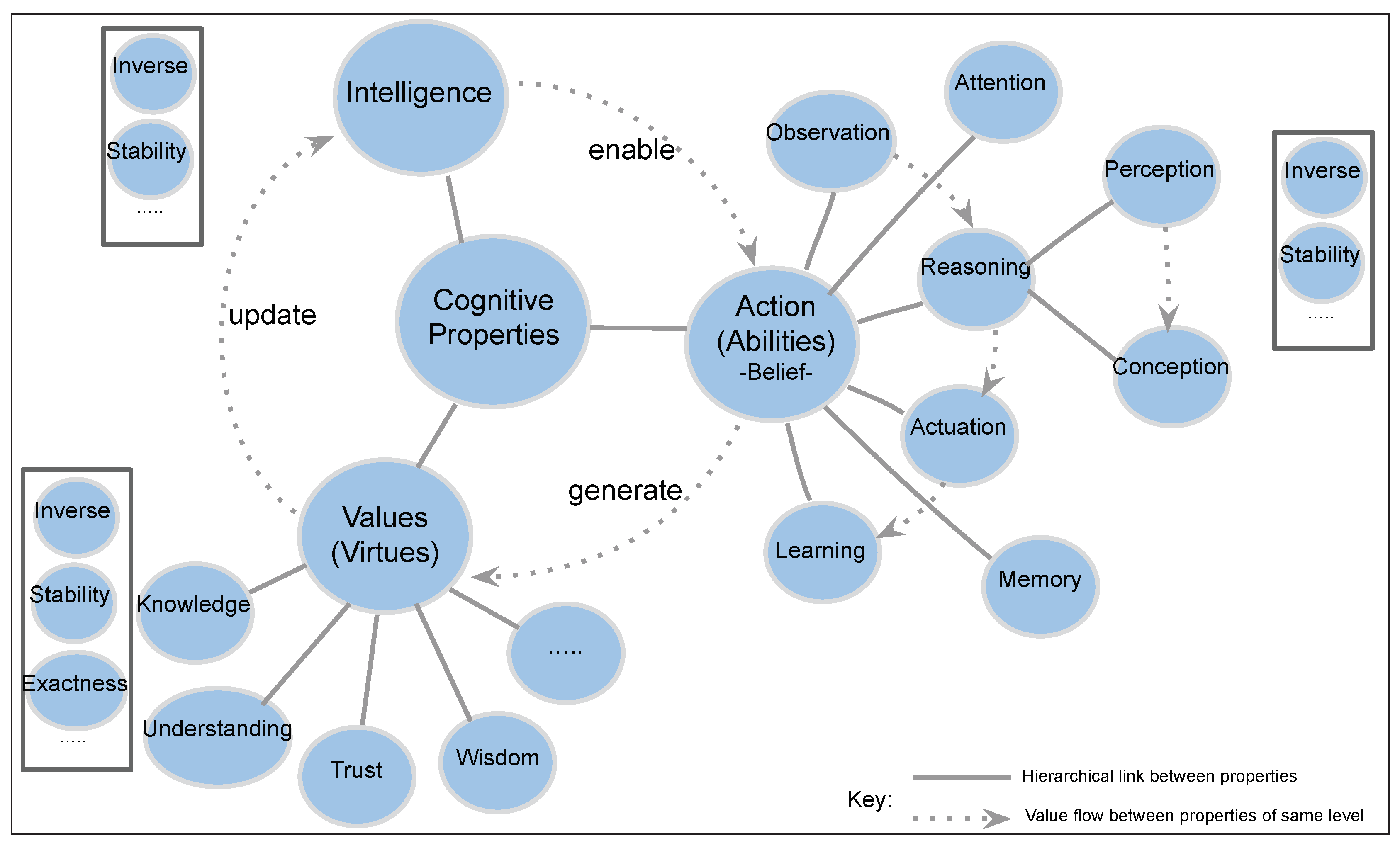

- A detailed abstraction of cognitive properties and their interrelationships in a cognitive intelligent agent.

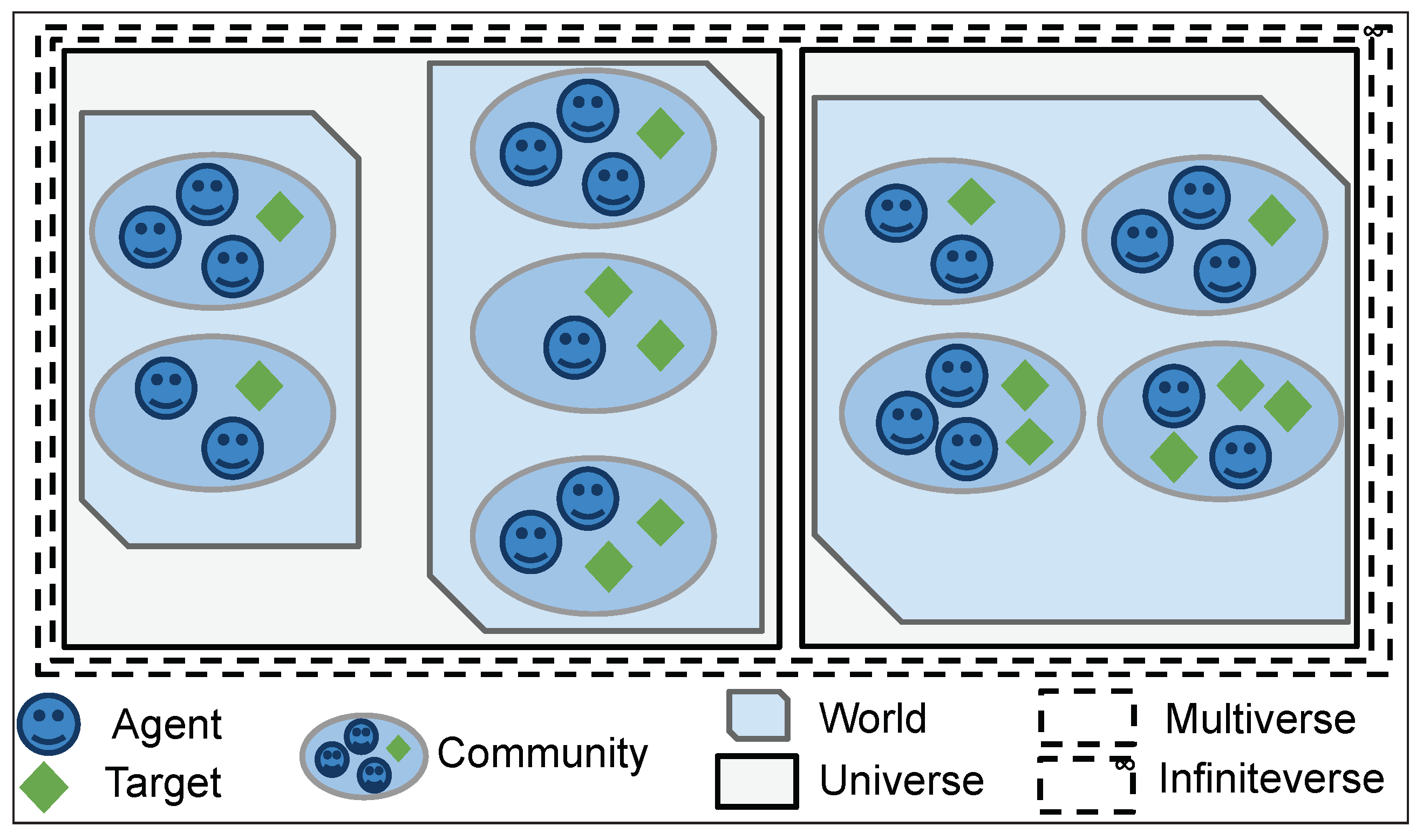

- A classification scheme for intelligent agents.

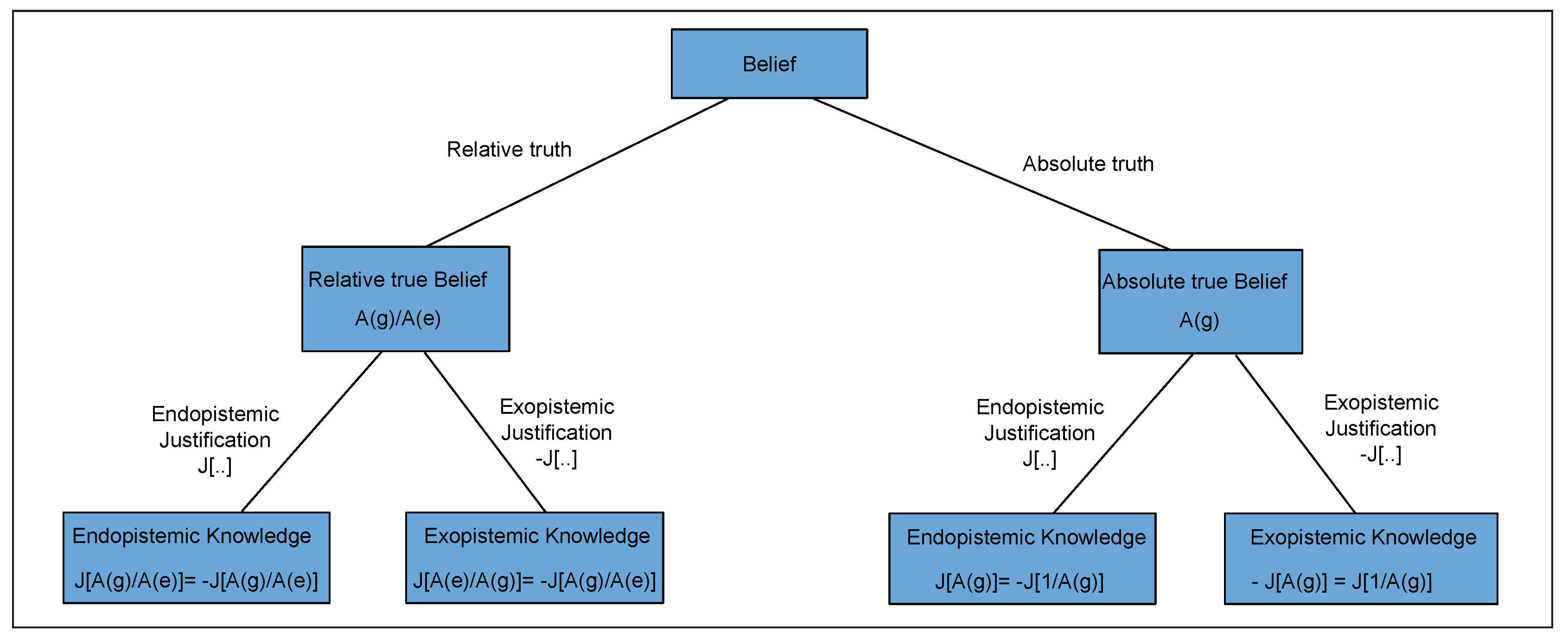

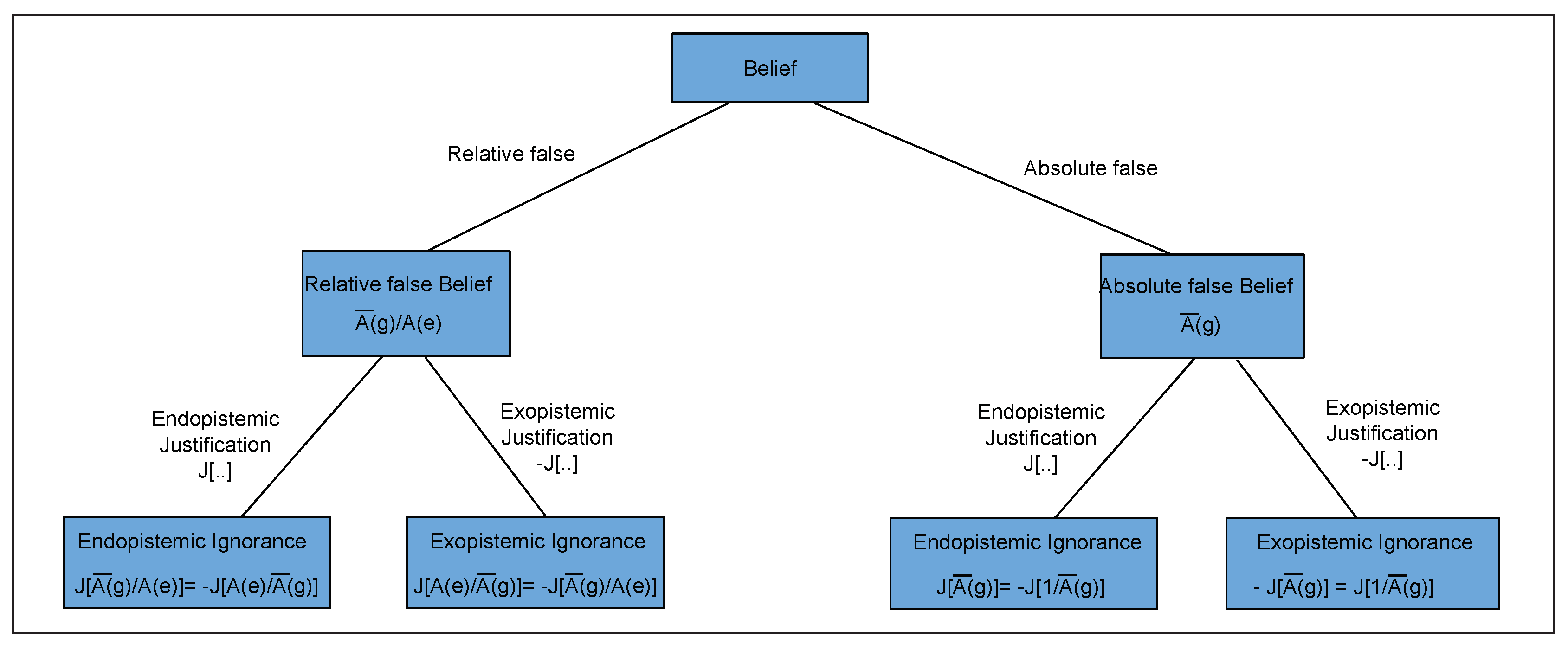

- A concise mathematical definition of belief, knowledge, ignorance, stability, and exactness properties in relation to cognition and epistemology.

1.3. Organization

2. Definition of Entities and Properties

2.1. Cognitive Property

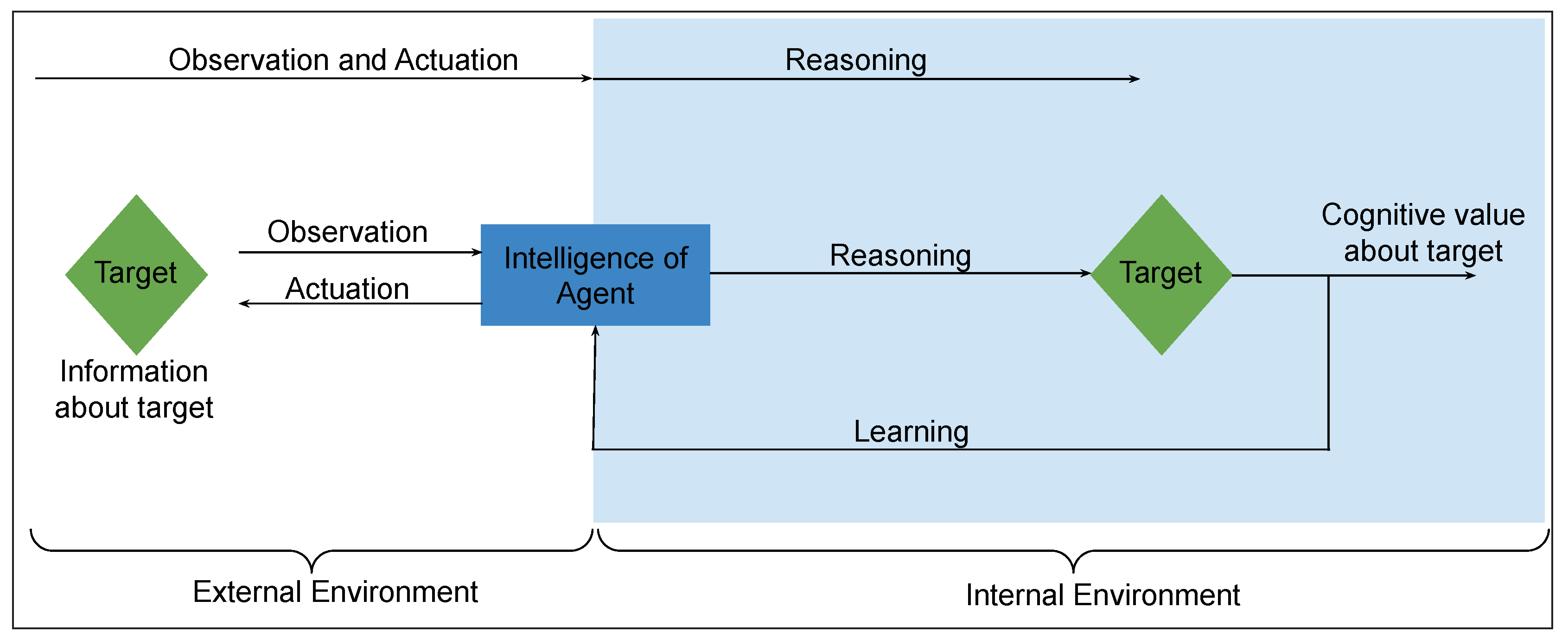

2.1.1. Knowledge, Action, and Intelligence

2.1.2. Observation, Reasoning, and Actuation

Main abilities of each agent type:

| Types | Observation | Actuation | Learning | Reasoning | Examples |
|---|---|---|---|---|---|
| Type 0 | no | no | no | no | non-intelligent agent |
| Type 1 | no | no | no | yes | clock |
| Type 2 | no | no | yes | yes | learning clock |
| Type 4 | no | yes | no | yes | controllers |
| Type 5 | no | yes | yes | yes | learning controllers |
| Type 6 | yes | no | no | yes | sensors |
| Type 7 | yes | no | yes | yes | learning sensors |
| Type 8 | yes | yes | no | yes | automata, computers |
| Type 9 | yes | yes | yes | yes | AI bots, humans |
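The classification scheme in the table above can be read as a lookup from four yes/no ability flags to an agent type. The following is a minimal Python sketch under that assumption; the names `Abilities`, `AGENT_TYPES`, and `classify_agent` are hypothetical and chosen only for illustration. The type labels and examples are taken from the table, so an ability combination that the table does not list simply returns `None`.

```python
from typing import NamedTuple, Optional


class Abilities(NamedTuple):
    """Yes/no flags for the four main abilities in the classification table."""
    observation: bool
    actuation: bool
    learning: bool
    reasoning: bool


# Rows of the classification table: ability flags -> (type label, example).
AGENT_TYPES = {
    Abilities(False, False, False, False): ("Type 0", "non-intelligent agent"),
    Abilities(False, False, False, True): ("Type 1", "clock"),
    Abilities(False, False, True, True): ("Type 2", "learning clock"),
    Abilities(False, True, False, True): ("Type 4", "controllers"),
    Abilities(False, True, True, True): ("Type 5", "learning controllers"),
    Abilities(True, False, False, True): ("Type 6", "sensors"),
    Abilities(True, False, True, True): ("Type 7", "learning sensors"),
    Abilities(True, True, False, True): ("Type 8", "automata, computers"),
    Abilities(True, True, True, True): ("Type 9", "AI bots, humans"),
}


def classify_agent(abilities: Abilities) -> Optional[str]:
    """Return the type label for an ability combination, or None if the
    combination is not listed in the table (e.g. learning without reasoning)."""
    entry = AGENT_TYPES.get(abilities)
    return entry[0] if entry is not None else None


if __name__ == "__main__":
    robot = Abilities(observation=True, actuation=True, learning=False, reasoning=True)
    print(classify_agent(robot))  # Type 8
```

Keeping the table as data rather than branching logic makes it easy to check the sketch row by row against the classification above.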

2.2. Definitions of Entities

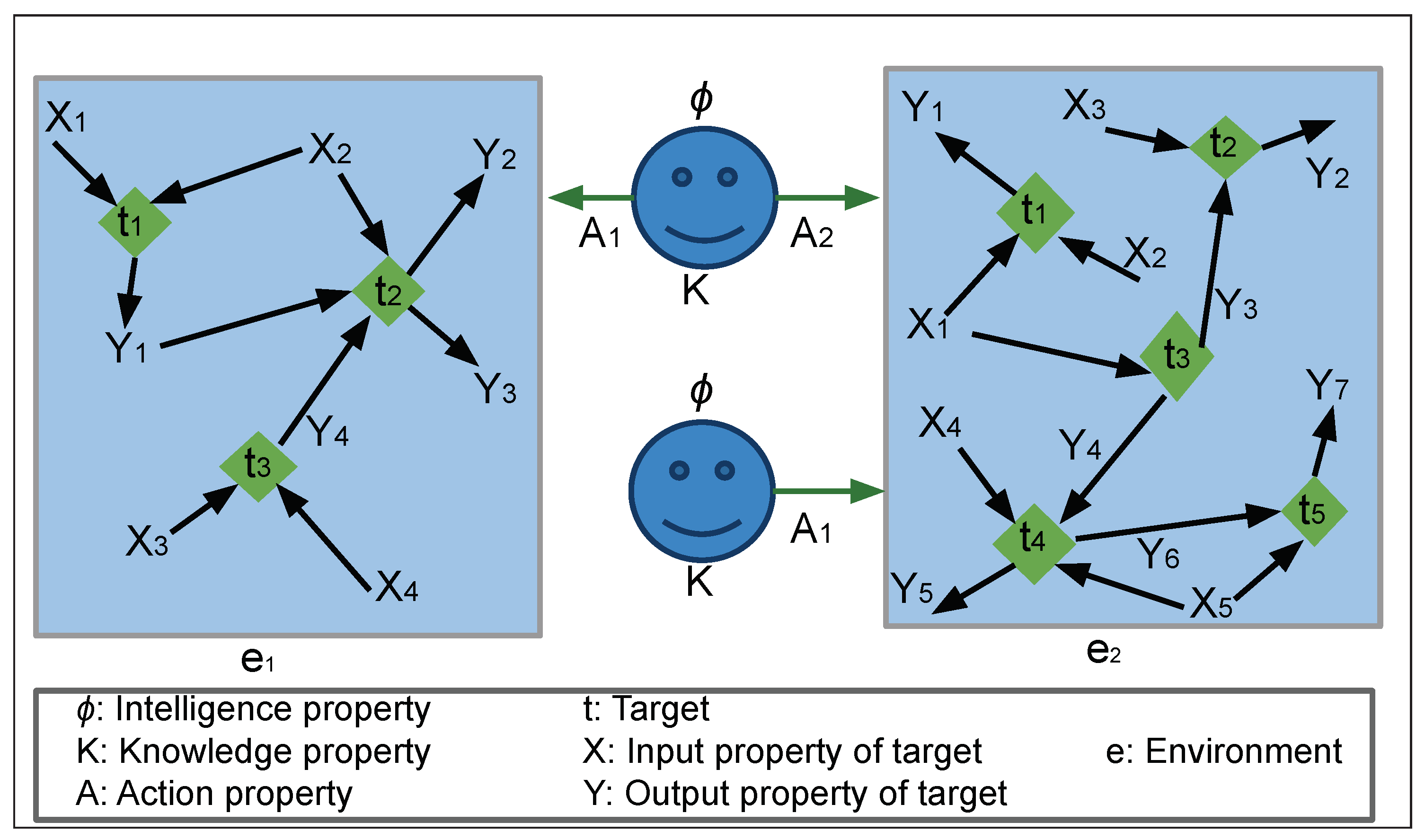

2.2.1. Description and Properties

2.2.2. Logical Relationships Between Entities

2.2.3. Logical operations between entities

3. Cognitive Property Quantification

3.1. Action Property

3.1.1. Action Quantification

3.1.2. Types of Action Values

3.1.3. Logical Operations on Action Values

3.2. Intelligence Property

3.2.1. Intelligence Quantification

3.3. Cognitive Value Property

3.3.1. Knowledge Quantification

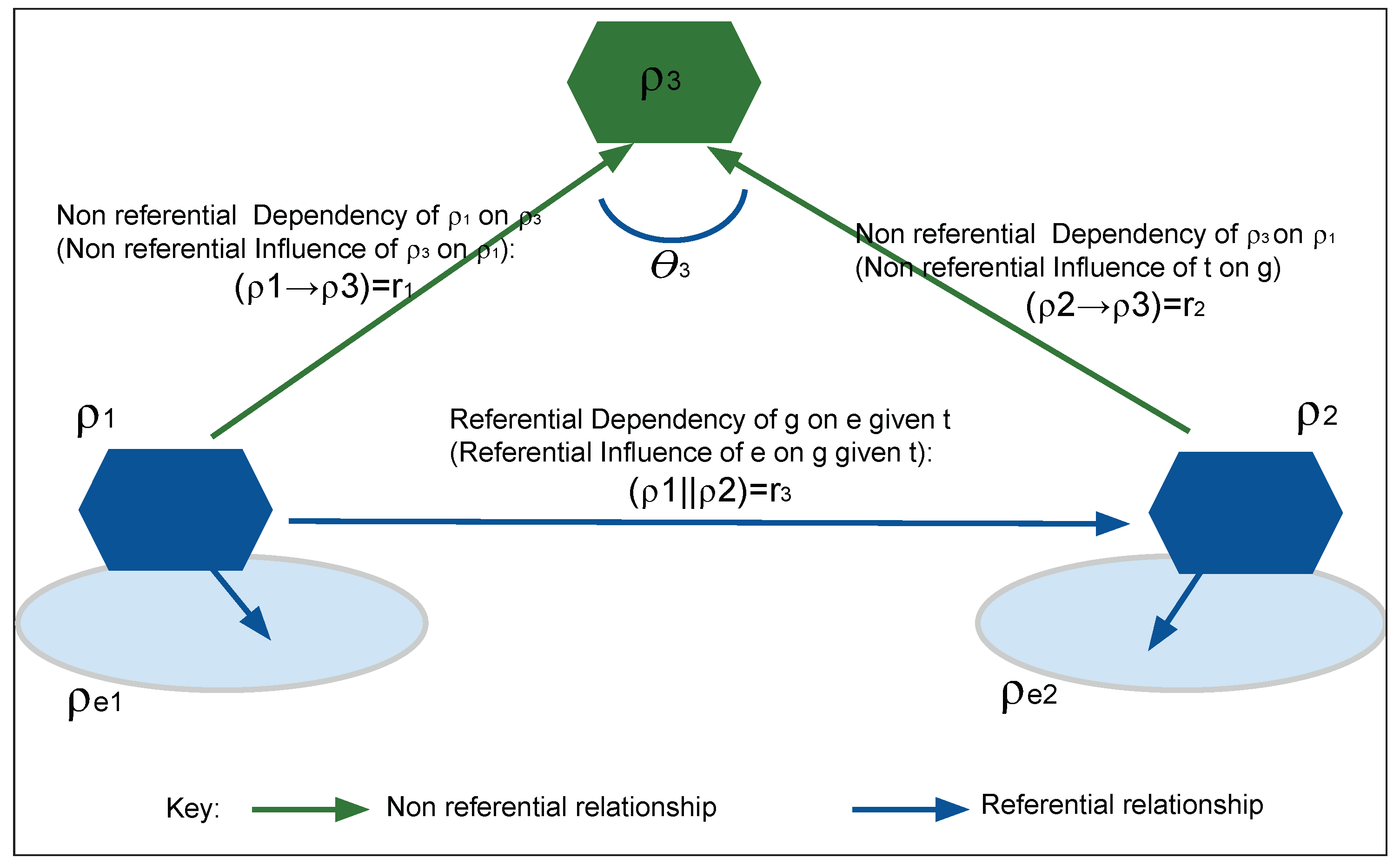

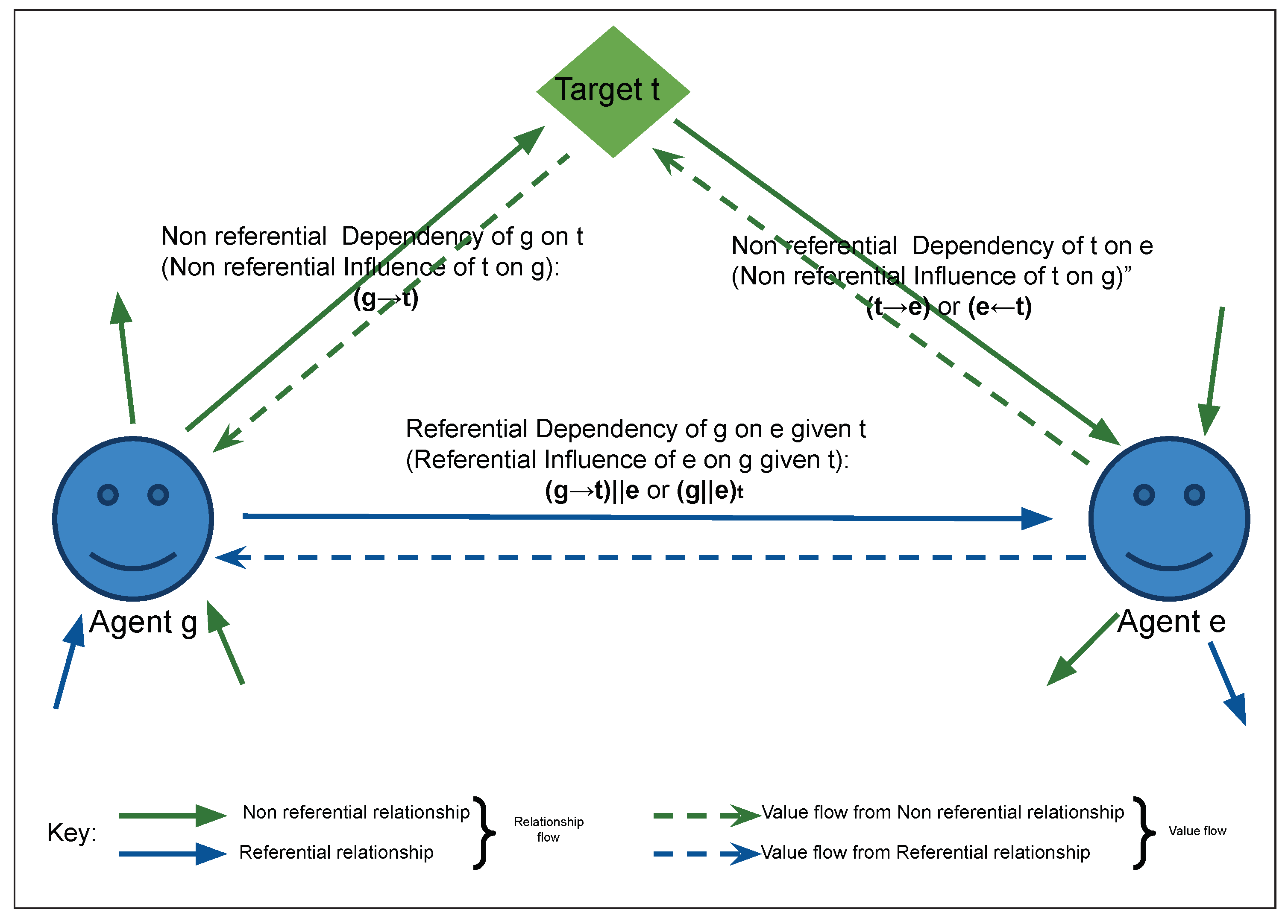

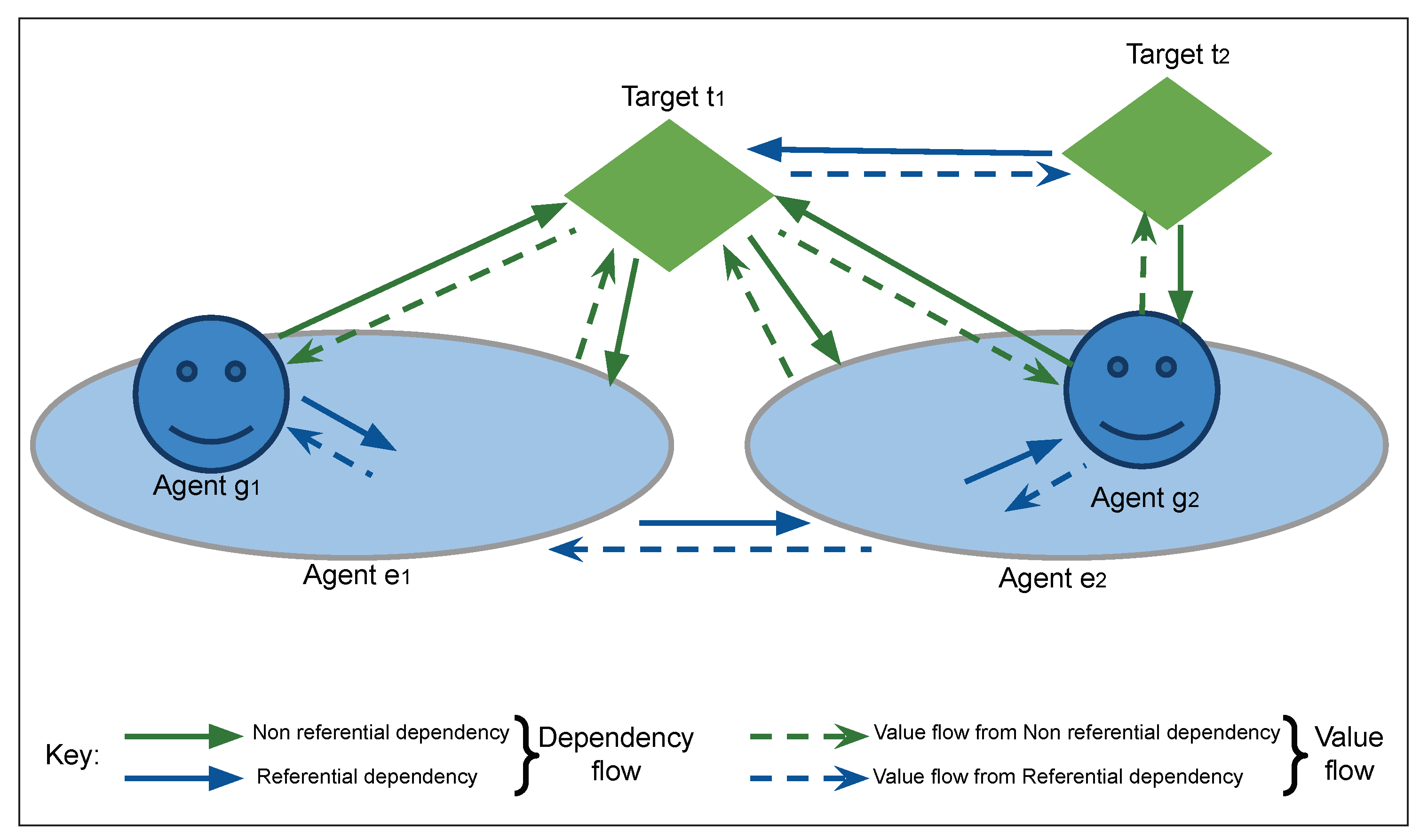

- For any relationship and at any time instant, every entity is of one of two types: agent or target.

- The target is the center (purpose) of all of an agent's cognitive actions and value generation.

- All value generated during cognition flows from the influencer entity to the dependent entity, opposite to the direction of dependency.

- All relationships between entities of the same type are dependent relationships: self, conditional, mutual, joint, referencing, etc.

- The relationship between targets is defined by an agent (action), and the relationship between agents is defined by a target (state).

- The relationship between an agent and its environment is referential, whereas the relationship between an agent and its target is non-referential.
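The value-flow principle listed above (value moves from the influencer to the dependent entity, against the direction of dependency) can be illustrated by reversing the edges of a dependency graph. The following Python sketch is a hypothetical illustration, not the authors' formalism: the `Kind`, `Entity`, and `Dependency` types, the helper names `value_flow` and `value_flow_edges`, and the example entities are all assumptions made here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Kind(Enum):
    """The two entity types an entity can take in a given relationship."""
    AGENT = "agent"
    TARGET = "target"


@dataclass(frozen=True)
class Entity:
    name: str
    kind: Kind


@dataclass(frozen=True)
class Dependency:
    """A dependency edge: `dependent` depends on `influencer`."""
    dependent: Entity
    influencer: Entity


def value_flow(dep: Dependency) -> Tuple[Entity, Entity]:
    """Return (source, sink) for the value carried by a dependency.

    The dependency points dependent -> influencer; value flows the other
    way, influencer -> dependent.
    """
    return dep.influencer, dep.dependent


def value_flow_edges(deps: List[Dependency]) -> List[Tuple[str, str]]:
    """Reverse every dependency edge to obtain the value-flow graph (by name)."""
    return [(src.name, dst.name) for src, dst in map(value_flow, deps)]


if __name__ == "__main__":
    a = Entity("a", Kind.AGENT)
    t = Entity("t", Kind.TARGET)
    # Hypothetical example: agent `a` depends on target `t`.
    deps = [Dependency(dependent=a, influencer=t)]
    print(value_flow_edges(deps))  # [('t', 'a')]
```

The sketch only captures the direction of value flow; it does not attempt to model the referential and non-referential distinction or the quantification of value introduced later in the paper.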

3.3.2. Cognitropy: Expected Cognitive Property Value

3.3.3. Resultant Values

3.3.4. Dissimilarities of Cognitropy from Other Quantities

3.3.5. Types of Knowledge

3.3.6. Knowledge Structures

3.3.7. Logical Operations on Knowledge

3.3.8. Properties of the Knowledge Value

4. Conclusion

Appendix A. Proofs

Appendix A.1

Appendix B. List of Symbols


| Symbols | Dependency types | Action values | Knowledge values |
|---|---|---|---|
| | non-referential | | |
| | referential | | |
| | non-referential | | |
| | non-referential | | |
| | referential | | |
| | non-referential | | |
| | non-referential | | |
| | referential | | |
| | referential | | |
| | non-referential | | |

Note: Other non-referential dependencies, such as mutual (;) and joint (,), can be used in addition to conditional dependency (|).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).