1. Introduction

Unmanned aerial vehicles (UAVs) have several applications in mobile communication, academia, and vertical industries. Beyond these applications, the uncontrolled use of UAVs can pose serious security threats to public and private organizations [1]. The Federal Aviation Administration (FAA) forecast that the fleet of small UAVs would more than triple, increasing from 1.1 million units in 2016 to 3.5 million by 2021 [2,3]. The wide availability of drones has also raised significant privacy and security dilemmas. To emphasize the significance of the problem, we note that the uncontrolled use of UAVs has already caused security incidents that severely damaged infrastructure [4]. Drones were initially developed for defense and counterinsurgency and were controlled by the aerospace and defense industries. The most common types of UAVs adopted in global military applications are multi-rotor, fixed-wing, and single-rotor UAVs, shown in Figure 1. Based on these facts, we conclude that it is critical to have a drone detection system capable of classifying and localizing types of drones, particularly those that pose security threats.

UAV detection is an object detection problem in which significant progress has recently been made. According to Lykou et al., 6% of UAV detection systems are based on acoustic sensors, 26% on radio frequency (RF), 28% on radar, and 40% on visual sensing [5,6,7]. YOLO (You Only Look Once) is a single-shot, deep-learning object detection algorithm that has attracted attention because of its robustness, accuracy, and speed, which enable real-time detection [8]. YOLO consumes fewer computational resources than many deep-CNN detectors, which often demand 4 GB of RAM and a graphics card [9]. In this paper, we perform multi-class and multi-scale UAV identification based on the most recent versions of the YOLO detector. The main contributions of this paper are as follows:

- We gathered a custom multi-class UAV dataset with multi-size, multi-type targets in challenging weather conditions and complex backgrounds.

- We trained the YOLOv5l and YOLOv7 models on the custom dataset starting from weights pre-trained on the COCO dataset, thereby applying transfer learning, and named these models TransLearn-YOLOv5l and TransLearn-YOLOv7.

- To the best of our knowledge, this is the first effort to compare YOLOv5 and YOLOv7 with transfer learning for the task of multi-class drone detection from visual images.

Figure 1. Military UAVs: (a) multi-rotor [10]; (b) fixed-wing [11]; (c) single-rotor [12].


2. Literature Review

Drones have been adopted for both educational and commercial purposes in a wide range of disciplines, and the last decade has seen a surge in research on effective and precise methods for UAV recognition. However, because of the kinds of locations in which drones often operate, identification can be challenging. As a result, sophisticated methods are required to identify UAVs, whether they fly alone or in a swarm. Singha et al. [13] developed a YOLOv4-based automatic drone detection system and tested it on drone and bird footage. The architecture was trained on 479 bird images and 1916 drone images from publicly available sources and achieved an F1 score of 79%, an mAP of 74.36%, a recall of 68%, and a precision of 95%. In [10], YOLOv4 was used to detect and identify UAVs in visual images of helicopters, multirotors, and birds; the network achieved an mAP of 84% and an accuracy of 83%. This work addressed the detection problem well but identified only multirotor and helicopter drones, and it does not perform well on other UAV types.
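As a consistency check on the figures reported for [13], recall that the F1 score is the harmonic mean of precision P and recall R, F1 = 2PR/(P + R). The short Python sketch below (the function name `f1_score` is ours, not from the cited work) reproduces the reported 79% from the 95% precision and 68% recall.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; returns 0.0 if both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Precision and recall reported for the YOLOv4 detector in [13]
    print(f"F1 = {f1_score(0.95, 0.68):.3f}")  # F1 = 0.793
```

Because the harmonic mean is pulled toward the smaller of its two inputs, the comparatively low recall (68%) dominates the F1 score despite the high precision.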

In [14], researchers tackled the problem of distinguishing drones from birds by proposing a YOLOv5-based visual drone detector and an air-to-air UAV dataset containing small objects and complex backgrounds. They also trained a model combining Faster R-CNN (faster region-based convolutional neural network) with a feature pyramid network (FPN). YOLOv5 outperformed Faster R-CNN + FPN in both simple and complex settings, with a recall of 0.96 and an mAP of 0.98. Coluccia et al. classified multirotor and fixed-wing UAVs in video clips using the YOLOv3 and YOLOv5 architectures. Their monitoring system is linked to a warning algorithm that triggers an alarm whenever it detects a drone. The detection rate and average accuracy improved, but the approach still needs additional data captured in complex weather conditions [15]. In [16], a neural network was trained, tested, and evaluated on datasets containing different kinds of UAVs (multirotor, fixed-wing aircraft, helicopters, and vertical takeoff and landing aircraft) and birds, achieving an mAP of 83%.
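The alarm logic described for [15] is not specified in detail, so the following is only a hypothetical Python sketch of how per-frame detector output could drive such a warning. The class names, the 0.5 confidence threshold, and the `AlarmMonitor` class with its `min_consecutive` parameter are our own assumptions; setting `min_consecutive=1` corresponds to raising the alarm whenever a drone is detected.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable

# Assumed class names, matching the three UAV classes used in this paper.
DRONE_CLASSES: FrozenSet[str] = frozenset({"multi-rotor", "fixed-wing", "single-rotor"})


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float


class AlarmMonitor:
    """Hypothetical alarm: fires once a drone is seen in enough consecutive frames.

    min_consecutive=1 alarms on every drone detection; larger values trade
    reaction time for fewer false alarms caused by single-frame errors.
    """

    def __init__(self, conf_threshold: float = 0.5, min_consecutive: int = 1) -> None:
        self.conf_threshold = conf_threshold
        self.min_consecutive = min_consecutive
        self.streak = 0  # number of consecutive frames containing a drone

    def update(self, detections: Iterable[Detection]) -> bool:
        drone_seen = any(
            d.label in DRONE_CLASSES and d.confidence >= self.conf_threshold
            for d in detections
        )
        self.streak = self.streak + 1 if drone_seen else 0
        return self.streak >= self.min_consecutive


if __name__ == "__main__":
    monitor = AlarmMonitor(conf_threshold=0.5, min_consecutive=2)
    frames = [
        [Detection("bird", 0.90)],
        [Detection("multi-rotor", 0.80)],
        [Detection("multi-rotor", 0.70)],
        [],
    ]
    print([monitor.update(f) for f in frames])  # [False, False, True, False]
```

Requiring a short streak of detections is one simple way to suppress alarms from isolated misclassifications such as a bird labeled as a drone in a single frame.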

The authors in [12] proposed YOLOv5-based multirotor UAV target detection. They replaced the baseline model's backbone with EfficientLite to reduce parameters and computation, introduced adaptive feature fusion to facilitate the fusion of feature maps at various scales, and added angle as a constraint to the baseline loss function. The results showed that EfficientLite struck an optimal balance between the number of parameters and detection accuracy, with better target identification than the baseline model. In [17], the authors proposed a one-stage deep learning detector with simplified filtering layers. To lower complexity, SSD-AdderNet was proposed to reduce the multiplication operations performed in the convolutional layers. The video data contained drones of varying sizes. When trained on RGB images, SSD-AdderNet was less accurate than other well-known methods, but it achieved a noteworthy reduction in complexity. On infrared (IR) images, however, SSD-AdderNet performed much better than competing algorithms. In [18], real-time image classification was performed by training a deep learning model on stereoscopic images. This research confirmed that synthetic images can be used to speed up solving image classification problems involving imbalanced, skewed, or missing image data.
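The complexity reduction in [17] comes from the AdderNet idea: instead of the multiply-accumulate of a convolution, each output is the negative L1 distance between the input window and the filter, which needs only subtractions, absolute values, and additions. The toy single-channel Python sketch below contrasts the two responses; the helper names (`slide`, `mac`, `adder`) are ours, and it illustrates only the general AdderNet formulation, not the SSD-AdderNet implementation of [17].

```python
from typing import Callable, List

Grid = List[List[float]]


def slide(x: Grid, w: Grid, op: Callable[[Grid, Grid, int, int], float]) -> Grid:
    """Apply `op` at every valid window position (no padding, stride 1)."""
    kh, kw = len(w), len(w[0])
    return [
        [op(x, w, i, j) for j in range(len(x[0]) - kw + 1)]
        for i in range(len(x) - kh + 1)
    ]


def mac(x: Grid, w: Grid, i: int, j: int) -> float:
    """Standard convolution (cross-correlation): multiply-accumulate."""
    return sum(x[i + u][j + v] * w[u][v] for u in range(len(w)) for v in range(len(w[0])))


def adder(x: Grid, w: Grid, i: int, j: int) -> float:
    """AdderNet-style response: negative L1 distance, no multiplications."""
    return -sum(abs(x[i + u][j + v] - w[u][v]) for u in range(len(w)) for v in range(len(w[0])))


if __name__ == "__main__":
    x = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    w = [[1, 0], [0, 1]]
    print(slide(x, w, mac))    # [[6, 8], [12, 14]]
    print(slide(x, w, adder))  # [[-10, -14], [-22, -26]]
```

The adder response is largest (closest to zero) where the input window most resembles the filter, so it still acts as a similarity measure, just without multiplications.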

The convolutional neural network (CNN)-based model presented in [19] detects UAVs in video footage. It was trained on computer-generated images and tested on a real-world drone dataset, classifying drones as DJI Mavic, DJI Phantom, or DJI Inspire with an average accuracy of 92.4%. In [11], researchers used multi-stage feature fusion with multi-cascaded auto-encoders to remove rain patterns from input images and used ResNet as a feature extractor. This system can help block UAVs from entering the airspace, with an average identification accuracy of 82% at 24 frames per second (FPS).

After screening the literature, we note that significant improvement in drone detection technology and solutions is still required. Multirotor UAVs (quadcopters) hold a substantial market share, so they need to be closely watched to ensure safe operation, and detecting small drones poses multiple difficulties. Consumer-grade UAVs often fly at low altitudes, where backgrounds are complex and changeable and the drones are frequently obscured by objects such as trees and houses. Regular aircraft, such as planes and helicopters, may often fly over locations like airports or hospitals, so the detection technique should be able to distinguish them from the different types of UAVs. UAVs may emerge from any direction, so monitoring systems should be capable of detecting drones in multiple directions simultaneously. Our problem statement is therefore the multi-class detection and classification of UAVs under complex weather conditions. To address it, we adopted two of the latest and fastest object detection algorithms, YOLOv5 and YOLOv7, combined with transfer learning.

3. Proposed TransLearn-YOLOx: Improved-YOLO with Transfer Learning

3.1. YOLOv5

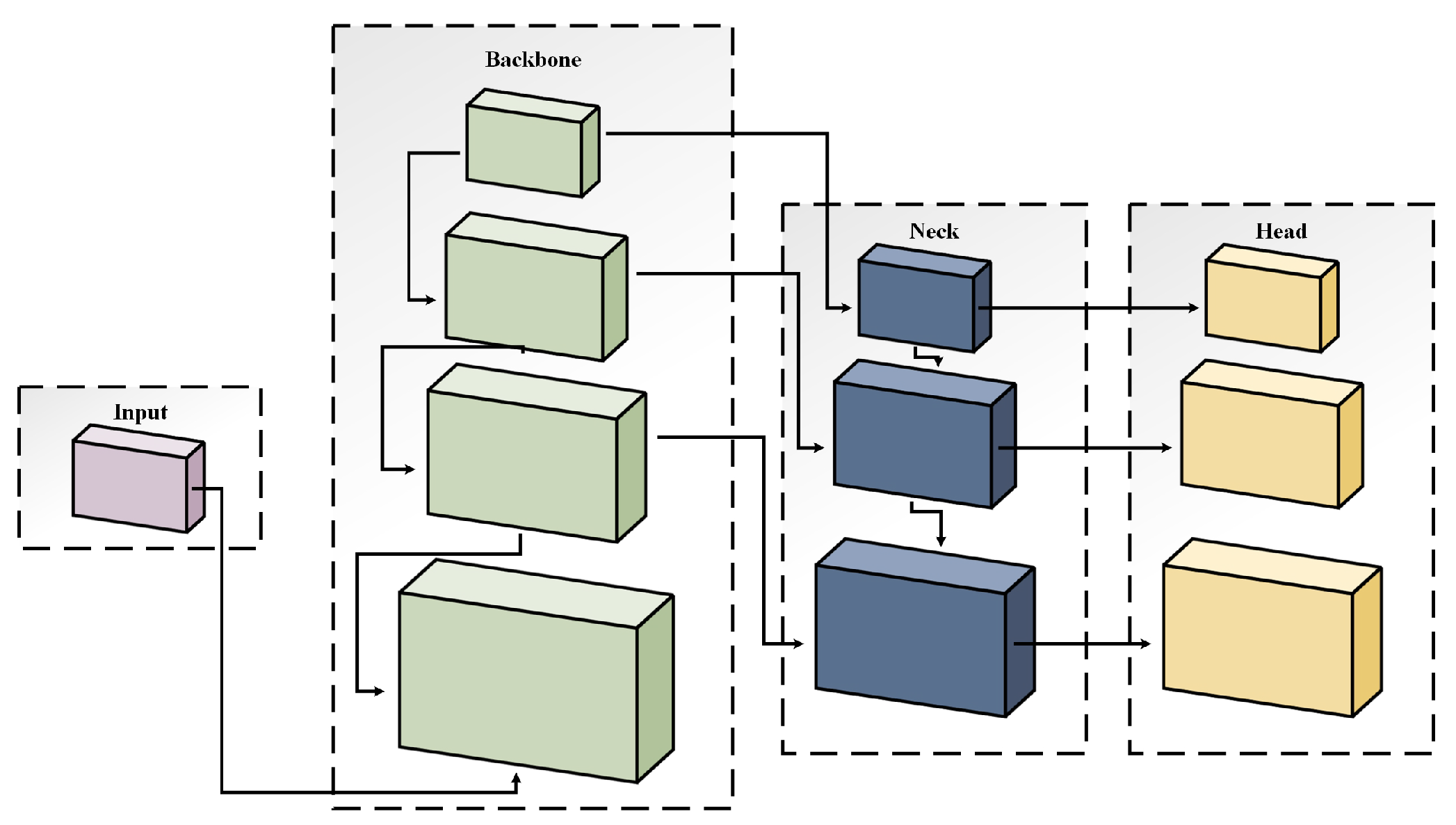

YOLOv5, one of the most recent versions of the YOLO family, is presented in Figure 2. It differs from earlier releases in that it is implemented in PyTorch instead of Darknet. Its backbone is built on CSPDarknet53, which eliminates the redundant gradient information present in large backbones. It also integrates gradient changes into the feature maps, which speeds up inference, improves accuracy, and shrinks the model by reducing the number of parameters. In the neck, it boosts information flow with a path aggregation network (PANet). PANet adopts a feature pyramid network (FPN) with top-down and bottom-up paths, which improves the propagation of low-level features through the model [20].
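The FPN + PANet neck can be pictured as two passes over a pyramid of feature maps: a top-down pass that carries semantic information from coarse to fine levels, and a bottom-up pass that carries localization detail back up. The pure-Python sketch below shows only this data flow on single-channel maps. As simplifying assumptions, element-wise addition stands in for YOLOv5's concatenation followed by convolution, and 2x2 max pooling stands in for its stride-2 convolution; the names `c3`/`c4`/`c5` and `fpn_pan` are ours.

```python
from typing import List, Tuple

Grid = List[List[float]]  # one single-channel feature map


def upsample2x(x: Grid) -> Grid:
    """Nearest-neighbour 2x upsampling."""
    out: Grid = []
    for row in x:
        wide = [v for v in row for _ in range(2)]
        out.extend([wide, list(wide)])
    return out


def downsample2x(x: Grid) -> Grid:
    """2x2 max pooling, a stand-in for the stride-2 convolution of the real neck."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1]) for j in range(0, len(x[0]), 2)]
        for i in range(0, len(x), 2)
    ]


def fuse(a: Grid, b: Grid) -> Grid:
    """Element-wise sum, a stand-in for concatenation followed by convolution."""
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def fpn_pan(c3: Grid, c4: Grid, c5: Grid) -> Tuple[Grid, Grid, Grid]:
    # Top-down (FPN): propagate semantic information from coarse to fine levels.
    p5 = c5
    p4 = fuse(c4, upsample2x(p5))
    p3 = fuse(c3, upsample2x(p4))
    # Bottom-up (PANet): propagate low-level detail from fine back to coarse levels.
    n3 = p3
    n4 = fuse(p4, downsample2x(n3))
    n5 = fuse(p5, downsample2x(n4))
    return n3, n4, n5


if __name__ == "__main__":
    c3 = [[1.0] * 4 for _ in range(4)]  # finest level (stride 8)
    c4 = [[1.0] * 2 for _ in range(2)]  # stride 16
    c5 = [[1.0]]                        # coarsest level (stride 32)
    n3, n4, n5 = fpn_pan(c3, c4, c5)
    print(n4, n5)  # [[5.0, 5.0], [5.0, 5.0]] [[6.0]]
```

The growing values show that every output level receives contributions from all three input levels, which is the point of combining the two paths.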

3.2. YOLOv7

YOLOv7 is a real-time, single-stage object detection algorithm whose authors claim that it outperforms all previous YOLO models in accuracy and speed, reaching a maximum average precision of 56.8% on the COCO dataset [8]. It consists of a backbone, a neck, and a head, as shown in Figure 2. The head produces the model's predicted outputs. Inspired by deep supervision, YOLOv7 is not limited to a single head: the lead head produces the final output, while the auxiliary head assists the training of the middle layers. YOLOv7 also embeds a label assigner that generates soft labels from both the ground truth and the network's predictions. The extended efficient layer aggregation network (E-ELAN) performs the main computation in the YOLOv7 backbone. By using "expand, shuffle, and merge cardinality" operations, E-ELAN continuously enhances the learning ability of the network without breaking the original gradient path.
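The "shuffle" and "merge cardinality" steps of E-ELAN are, at heart, bookkeeping over groups of channels. The Python sketch below shows a ShuffleNet-style group shuffle and an element-wise merge of equal-sized groups on plain lists that stand for channels. It is an illustration under our own simplifying assumptions (no group convolutions, channels as list entries, hypothetical function names), and the exact channel ordering inside YOLOv7's E-ELAN may differ.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def shuffle_groups(channels: Sequence[T], groups: int) -> List[T]:
    """Channel shuffle: view as (groups, per_group), transpose, then flatten."""
    if groups <= 0 or len(channels) % groups:
        raise ValueError("channel count must be divisible by a positive group count")
    per_group = len(channels) // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


def merge_groups(values: Sequence[float], groups: int) -> List[float]:
    """Merge cardinality: split into `groups` equal slices and add them element-wise."""
    if groups <= 0 or len(values) % groups:
        raise ValueError("channel count must be divisible by a positive group count")
    per_group = len(values) // groups
    return [sum(values[g * per_group + i] for g in range(groups)) for i in range(per_group)]


if __name__ == "__main__":
    print(shuffle_groups(["a0", "a1", "a2", "b0", "b1", "b2"], groups=2))
    # ['a0', 'b0', 'a1', 'b1', 'a2', 'b2']
    print(merge_groups([1, 2, 3, 10, 20, 30], groups=2))  # [11, 22, 33]
```

Shuffling interleaves channels produced by different groups so that later layers see a mix of them, and merging by addition returns to the original channel count.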

3.3. Transfer learning

Transfer learning is a method that uses a trained model as the starting point for training a model on a different but related task [21]. On our custom UAV dataset, we trained the YOLOv5l and YOLOv7 models with three classes, starting from YOLOv5 and YOLOv7 weights pre-trained on the 80 classes of the COCO dataset. This approach allowed the model weights to converge and the loss to decrease within a short training time.
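Moving from 80 COCO classes to 3 UAV classes changes only the class-dependent part of the network. In YOLOv5/YOLOv7-style detection heads, each anchor predicts 4 box offsets, 1 objectness score, and one score per class, so with 3 anchors per scale the output convolution has 3 × (5 + 80) = 255 channels for COCO but 3 × (5 + 3) = 24 for our dataset. The Python sketch below keeps only pre-trained tensors whose name and shape match the new model; the YOLOv5 training script performs a comparable name-and-shape intersection (its `intersect_dicts` helper), but the functions here are our own simplified stand-ins operating on shapes only, and the layer names are hypothetical.

```python
from typing import Dict, Tuple

Shape = Tuple[int, ...]


def head_out_channels(num_classes: int, anchors_per_scale: int = 3) -> int:
    """Per anchor: 4 box offsets + 1 objectness score + one score per class."""
    return anchors_per_scale * (5 + num_classes)


def transferable(pretrained: Dict[str, Shape], target: Dict[str, Shape]) -> Dict[str, Shape]:
    """Keep pre-trained tensors whose name and shape both match the new model;
    everything else (here, the class-dependent head) stays freshly initialised."""
    return {k: s for k, s in pretrained.items() if target.get(k) == s}


if __name__ == "__main__":
    # Hypothetical layer names and shapes, chosen only for illustration.
    coco = {
        "backbone.stem.conv.weight": (64, 3, 6, 6),
        "head.det.weight": (head_out_channels(80), 256, 1, 1),
    }
    uav = {
        "backbone.stem.conv.weight": (64, 3, 6, 6),
        "head.det.weight": (head_out_channels(3), 256, 1, 1),
    }
    print(head_out_channels(80), head_out_channels(3))  # 255 24
    print(sorted(transferable(coco, uav)))  # ['backbone.stem.conv.weight']
```

Since the backbone and neck carry over unchanged, only the small output layers must be learned from scratch, which is why training converges quickly.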

4. Dataset and Model Training

In this paper, we evaluated the two most recent models of YOLO, named YOLOv5l and YOLOv7, with a transfer learning approach, TransLearn-YOLOv5l and TransLearn-YOLOv7. Both of these models require the dataset to be available with the class category, bounding boxes, and annotation files. We used Roboflow an open-sourced dataset platform, to create a customized dataset of 11733 images that had three different classes of UAV, i.e., multi-rotor (3911 images), single-rotor (3911 images), and fixed-wing (3911 images). We use 93% of the dataset for training (11,000 images), 3% for validation (343 images), and 4% for testing (423 images). Before training, the images underwent pre-processing, resizing, saturation + exposure adjustment, and then model training. For smooth data training, we set the initial learning rate (lr0) for the SGD optimizer at 0.01. For TransLearn-YOLOv7, the one-cycle learning rate (lrf) is 0.1, and for TransLearn-YOLOv5, it is 0.01 at the end. with a weight decay of 0.0005 and an optimizer momentum of 0.937. The first warmup momentum is 0.8 and the initial warmup bias is 0.1 at 3.0 warmup epochs. The box loss gain is 0, the class loss gain is 0, the object loss gain is 1, the focal loss gamma is 0, the anchor-multiple threshold is 4.0, and the IoU training threshold is 0.20. The dataset was trained on Google Colab with a python-3.8.16 and torch-1.13.0 environment and a Tesla T4 GPU. TransLearn-YOLOv5 took 1 hour, 49 minutes, and 22 seconds and TransLearn-YOLOv7 took 3 hours, and 3 minutes with the transfer learning approach. After training, we evaluated the models’ performance using standard evaluation metrics like precision, recall, mAP, f1-score, and detection accuracy. The box loss of TransLearn-YOLOv5 during training went from 0.06–0.02, while for TransLearn-YOLOv7 it was 0.05–0.021, as shown in

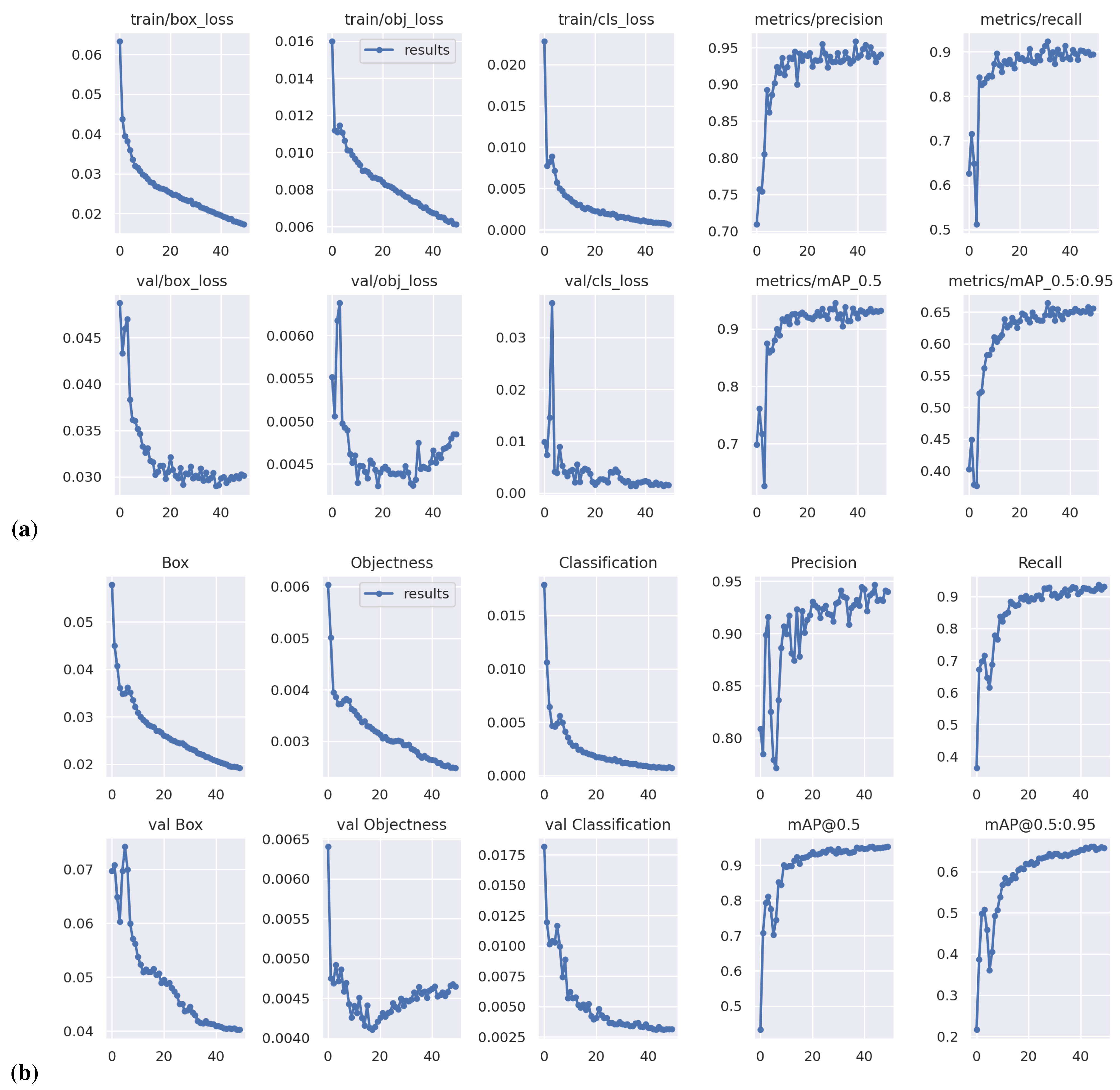

Figure 3. TransLearn-YOLOv7 has low box loss, which means that it has excellent capability to locate an object’s center point and a predicted bounding box that covers the specific object quite well. The objectness loss for TransLearn-YOLOv5l is 0.016–0.006 during training, and TransLearn-YOLOv7 has 0.006-0.003. Each box has an associated prediction called "objectivity." TransLearn-YOLOv7 performs quite well in scoring objects with high precision values. That’s why its object loss is quite low as compared to YOLOv5. A classification loss is applied to train the classifier head to determine the type of target object. Its values are 0.020–0.000 for TransLearn-YOLOv5l and 0.015–0.000 for TransLearn-YOLOv7. TransLearn-YOLOv5l shows an increased classification loss as compared to TransLearn-YOLOv7, which means that YOLOv7 will have high threshold detection accuracy in unknown scenarios. The precision, recall, mAP@0.5, and mAP@0.5:0.95 graphs over 50 epochs for both models show an increasing trend, which implies that both models’ learning patterns are going well with the transfer learning approach. These results still have room for improvement, which will be addressed in future work.
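The optimizer settings listed above (lr0, lrf, momentum, warmup momentum, warmup bias learning rate, and warmup epochs) can be illustrated with a small schedule calculator. The Python sketch below assumes the cosine one-cycle factor used in the YOLOv5/YOLOv7 training scripts, which falls from 1 at the first epoch to lrf at the last, and a linear warmup; the number of batches per epoch is a hypothetical value, and the real scripts apply warmup separately to several parameter groups, which is omitted here.

```python
import math
from typing import Callable


def one_cycle(y1: float, y2: float, steps: int) -> Callable[[float], float]:
    """Cosine ramp of a multiplier from y1 at x=0 to y2 at x=steps."""
    return lambda x: ((1 - math.cos(x * math.pi / steps)) / 2) * (y2 - y1) + y1


def lr_at_epoch(epoch: int, lr0: float = 0.01, lrf: float = 0.1, epochs: int = 50) -> float:
    """Epoch learning rate: lr0 scaled by the one-cycle factor (ends at lr0 * lrf)."""
    return lr0 * one_cycle(1.0, lrf, epochs)(epoch)


def warmup_value(it: int, warmup_iters: int, start: float, end: float) -> float:
    """Linear warmup from `start` to `end` over the first `warmup_iters` iterations."""
    if it >= warmup_iters:
        return end
    return start + (end - start) * it / warmup_iters


if __name__ == "__main__":
    batches_per_epoch = 100  # hypothetical; depends on dataset size and batch size
    warmup_iters = int(3.0 * batches_per_epoch)  # 3.0 warmup epochs
    for it in (0, 150, 300):
        momentum = warmup_value(it, warmup_iters, start=0.8, end=0.937)
        bias_lr = warmup_value(it, warmup_iters, start=0.1, end=lr_at_epoch(0))
        print(f"iter {it}: momentum={momentum:.4f}, bias_lr={bias_lr:.4f}")
    for lrf in (0.1, 0.01):  # TransLearn-YOLOv7, TransLearn-YOLOv5
        print(lrf, [round(lr_at_epoch(e, lrf=lrf), 5) for e in (0, 25, 50)])
```

With lr0 = 0.01 and 50 epochs, the final learning rate is 0.001 for lrf = 0.1 and 0.0001 for lrf = 0.01, so TransLearn-YOLOv5 ends training with a learning rate ten times smaller than TransLearn-YOLOv7.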

5. Evaluation and Comparison

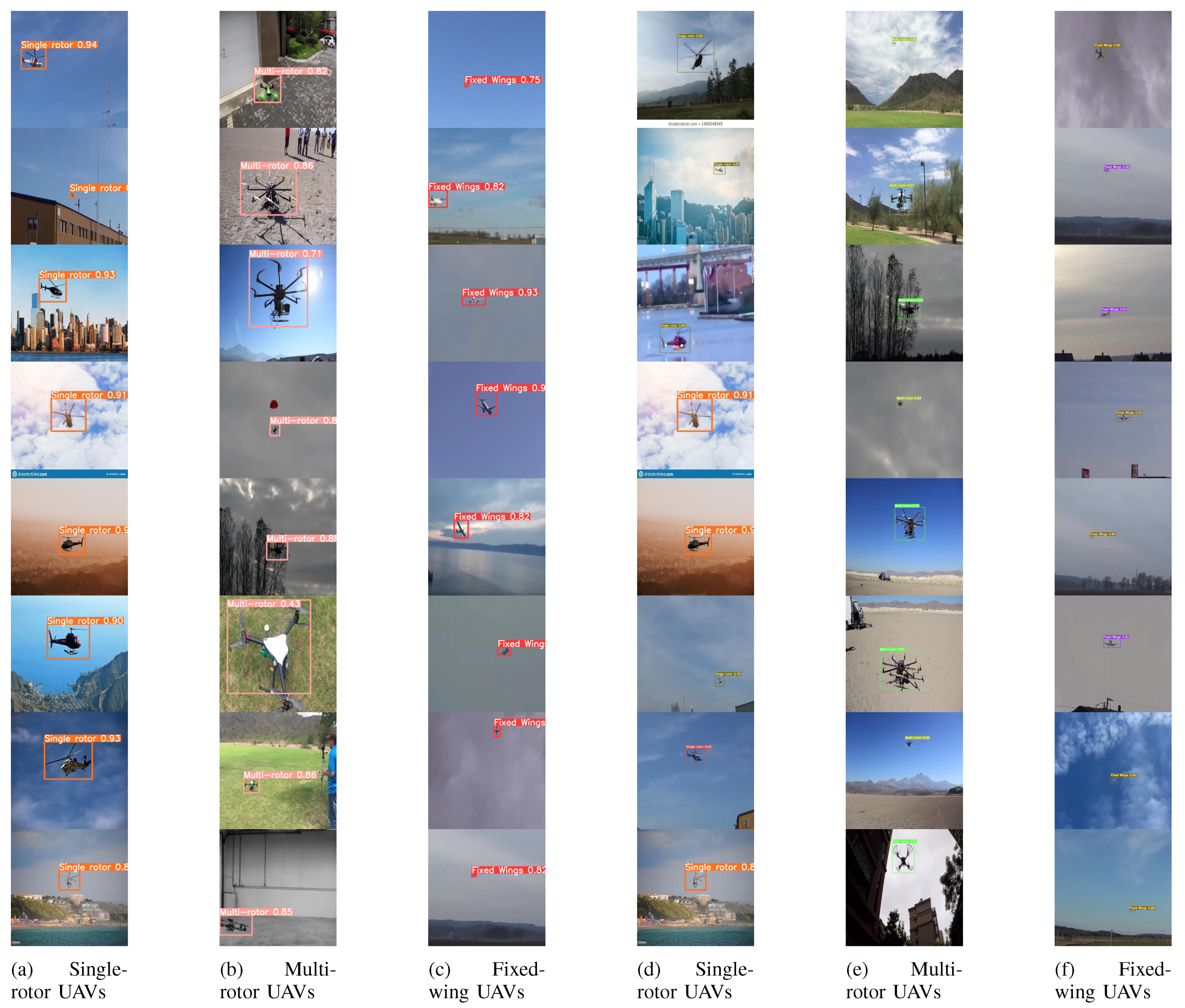

Table 1 presents a detailed performance evaluation for each target class. TransLearn-YOLOv5l achieved its highest precision (95.7%) for single-rotor UAVs, and its highest recall (98%), mAP (99%), and F1 score (95.96%) for multi-rotor UAVs. This indicates that YOLOv5 is best suited to real-time multi-rotor UAV detection in challenging conditions, as shown in Figure 3b. TransLearn-YOLOv7 achieved its highest precision (96.4%) and F1 score (93.50%) for single-rotor UAVs, and its highest recall (95.7%) and mAP (97.1%) for multi-rotor UAVs. This indicates that YOLOv7 has improved detection competence for both single- and multi-rotor UAVs in challenging and complex conditions. For fixed-wing UAVs, TransLearn-YOLOv5l achieved 90.6% precision and TransLearn-YOLOv7 achieved 92.2% precision, indicating that fixed-wing UAVs are the hardest class for both models to detect.
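Because Table 1 reports per-class mAP, a brief reminder of how AP is obtained may help: the detections of one class are sorted by confidence, cumulative precision and recall are computed after each detection, and AP is the area under the monotone precision envelope; mAP averages AP over classes, and mAP@0.5:0.95 additionally averages over ten IoU thresholds. The Python sketch below uses all-point interpolation as an assumption; the YOLOv5 and YOLOv7 evaluation code may use a different interpolation scheme, so its numbers can differ slightly. The toy detections are invented and are not taken from Table 1.

```python
from typing import List, Sequence, Tuple


def pr_points(detections: Sequence[Tuple[float, bool]], num_gt: int) -> Tuple[List[float], List[float]]:
    """Cumulative recall/precision after each detection, highest confidence first.
    Each detection is (confidence, is_true_positive)."""
    tp = fp = 0
    recall, precision = [], []
    for _, is_tp in sorted(detections, key=lambda d: d[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        recall.append(tp / num_gt)
        precision.append(tp / (tp + fp))
    return recall, precision


def average_precision(recall: Sequence[float], precision: Sequence[float]) -> float:
    """All-point interpolated AP: area under the precision envelope."""
    r = [0.0] + list(recall) + [1.0]
    p = [1.0] + list(precision) + [0.0]
    for i in range(len(p) - 2, -1, -1):  # make precision non-increasing in recall
        p[i] = max(p[i], p[i + 1])
    return sum((r[i] - r[i - 1]) * p[i] for i in range(1, len(r)))


def mean_ap(per_class_ap: Sequence[float]) -> float:
    return sum(per_class_ap) / len(per_class_ap)


# mAP@0.5:0.95 repeats the AP computation at these IoU thresholds and averages.
COCO_IOU_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]

if __name__ == "__main__":
    dets = [(0.9, True), (0.8, False), (0.7, True)]  # toy detections for one class
    rec, prec = pr_points(dets, num_gt=2)
    print(f"AP@0.5 = {average_precision(rec, prec):.4f}")  # AP@0.5 = 0.8333
```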

Comparing the overall (average) metrics, YOLOv7 achieved the best average precision (94%), recall (93.1%), mAP (95.3%), and F1 score (92.44%) for multi-class detection and classification of UAVs. This makes YOLOv7 the better choice for multi-size, multi-class UAV target detection in challenging backgrounds and complex weather conditions. Given its overall precision [22], we recommend YOLOv7 for real-time recognition of UAVs operating inside a specified territory. Recall indicates the model's ability to find all positive instances, whereas precision assesses the correctness of its positive predictions [23]. YOLOv7 has a higher overall recall, which indicates a stronger ability to detect and classify the UAVs present in an image. YOLOv5 reached its highest true positive rate (TPR) of 98% on multi-rotor UAVs, while the highest TPRs for single-rotor (92%) and fixed-wing (93%) UAVs were obtained with YOLOv7. Because TPR is also called sensitivity, this means that YOLOv7 is the more sensitive model for single-rotor and fixed-wing UAVs.
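To make the precision/recall distinction concrete: a prediction counts as a true positive (TP) when it overlaps a not-yet-matched ground-truth box with an intersection over union (IoU) at or above a threshold (0.5 for mAP@0.5); unmatched predictions are false positives (FP), and unmatched ground-truth boxes are false negatives (FN). Precision is TP/(TP + FP), and recall, i.e., the TPR or sensitivity, is TP/(TP + FN). The Python sketch below uses greedy matching within a single class and image, which is a simplifying assumption; it is not the evaluation code used to produce Table 1, and the boxes are invented.

```python
from typing import Sequence, Set, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds: Sequence[Box], gts: Sequence[Box], thr: float = 0.5) -> Tuple[int, int, int]:
    """Greedy one-to-one matching; `preds` must be sorted by confidence (highest first).
    Returns (TP, FP, FN)."""
    matched: Set[int] = set()
    tp = 0
    for p in preds:
        best_j, best_iou = -1, thr
        for j, g in enumerate(gts):
            if j not in matched:
                v = iou(p, g)
                if v >= best_iou:
                    best_j, best_iou = j, v
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    return tp, len(preds) - tp, len(gts) - tp


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # recall = TPR = sensitivity
    return precision, recall


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(1, 1, 10, 10), (50, 50, 60, 60)]  # one good box, one spurious box
    tp, fp, fn = count_matches(preds, gts)
    print(tp, fp, fn, precision_recall(tp, fp, fn))  # 1 1 1 (0.5, 0.5)
```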

Figure 4 shows the results of the models when tested on very small targets for detection and classification. YOLOv7 achieved the best test accuracy for single-rotor (98%) and fixed-wing (90%) UAVs, while YOLOv5 achieved the best test accuracy for multi-rotor UAVs (87%). YOLOv5l has 468 layers, 46,149,064 trainable parameters (and as many gradients), and 108.3 GFLOPs, whereas YOLOv7 has 415 layers, 37,207,344 trainable parameters (and as many gradients), and 105.1 GFLOPs; both models were trained for 50 epochs.
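The parameter and gradient counts are identical because every parameter is trainable, and each trainable parameter receives a gradient. For intuition about where such totals come from, the Python sketch below counts the trainable parameters of one YOLO-style convolution block (a bias-free convolution followed by batch normalization, whose scale and shift add 2 parameters per output channel) and computes the relative size difference between the two reported totals. The helper names are ours, and the layer sizes in the example are illustrative.

```python
def conv_bn_params(c_in: int, c_out: int, k: int) -> int:
    """Trainable parameters of a bias-free k x k convolution + batch norm:
    k*k*c_in*c_out weights plus 2*c_out BN scale/shift parameters."""
    return k * k * c_in * c_out + 2 * c_out


def relative_reduction(baseline: int, other: int) -> float:
    return (baseline - other) / baseline


if __name__ == "__main__":
    print(conv_bn_params(3, 64, 6))  # 7040 (6*6*3*64 weights + 128 BN parameters)
    yolov5l, yolov7 = 46_149_064, 37_207_344  # trainable parameters reported above
    print(f"{relative_reduction(yolov5l, yolov7):.1%}")  # 19.4%
```

YOLOv7 thus uses roughly a fifth fewer parameters than YOLOv5l while requiring only slightly fewer GFLOPs (105.1 versus 108.3).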

6. Comparison with the State of the Art

Table 2 compares TransLearn-YOLOv5l and TransLearn-YOLOv7 with the state-of-the-art schemes discussed in the literature review. With the transfer learning approach, both YOLOv5 and YOLOv7 achieve a higher mAP than the work reported in [10,13,14,15,16], with a reduced amount of training. TransLearn-YOLOv7 also performed well in terms of F1 score, yielding the highest value compared with the existing YOLOv4- and YOLOv5-based schemes. Moreover, to our knowledge, no prior work has considered YOLOv7 with transfer learning for drone detection and classification.

7. Conclusion

In this paper, we showed how a single-stage object detector (YOLOv5/v7) with a transfer learning approach can detect and identify multi-rotor, fixed-wing, and single-rotor UAVs. To this end, we built a multi-class UAV dataset with automatic annotation and ensured that all classes had the same number of images for model training. This step removed class imbalance and helped reduce the risk of model over-fitting. The dataset contains images with varied, complex, and challenging backgrounds, which increases the trained models' credibility for real-time detection. The results showed that the trained models can perform multi-class classification and detection with high precision and mAP. In future work, we intend to demonstrate the feasibility of detecting small flying objects in camera images with our improved drone detector in real time, implemented on edge devices.

Acknowledgments

This work was supported by the Higher Education Commission (HEC) Pakistan under the NRPU 2021 Grant#15687.

References

- Wang, C.; Tian, J.; Cao, J.; Wang, X. Deep Learning-Based UAV Detection in Pulse-Doppler Radar. IEEE Transactions on Geoscience and Remote Sensing 2021, 60, 1–12.

- Yavariabdi, A.; Kusetogullari, H.; Celik, T.; Cicek, H. FastUAV-net: A multi-UAV detection algorithm for embedded platforms. Electronics 2021, 10, 724.

- Xue, W.; Qi, J.; Shao, G.; Xiao, Z.; Zhang, Y.; Zhong, P. Low-Rank Approximation and Multiple Sparse Constraint Modeling for Infrared Low-Flying Fixed-Wing UAV Detection. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2021, 14, 4150–4166.

- Casabianca, P.; Zhang, Y. Acoustic-based UAV detection using late fusion of deep neural networks. Drones 2021, 5, 54.

- Lykou, G.; Moustakas, D.; Gritzalis, D. Defending airports from UAS: A survey on cyber-attacks and counter-drone sensing technologies. Sensors 2020, 20, 3537.

- Khan, M.U.; Alam, M.Z.; Orakazi, F.A.; Kaleem, Z.; Yuen, C.; et al. SafeSpace MFNet: Precise and Efficient MultiFeature Drone Detection Network. arXiv preprint, 2022; arXiv:2211.16785.

- Misbah, M.; Khan, M.U.; Yang, Z.; Kaleem, Z. TF-Net: Deep Learning Empowered Tiny Feature Network for Night-time UAV Detection. arXiv preprint, 2022; arXiv:2211.16317.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv preprint, 2022; arXiv:2207.02696.

- Unlu, E.; Zenou, E.; Riviere, N.; Dupouy, P.E. Deep learning-based strategies for the detection and tracking of drones using several cameras. IPSJ Transactions on Computer Vision and Applications 2019, 11, 1–13.

- Samadzadegan, F.; Dadrass Javan, F.; Ashtari Mahini, F.; Gholamshahi, M. Detection and Recognition of Drones Based on a Deep Convolutional Neural Network Using Visible Imagery. Aerospace 2022, 9, 31.

- Wisniewski, M.; Rana, Z.A.; Petrunin, I. Drone model classification using convolutional neural network trained on synthetic data. Journal of Imaging 2022, 8, 218.

- Liu, B.; Luo, H. An Improved Yolov5 for Multi-Rotor UAV Detection. Electronics 2022, 11, 2330.

- Singha, S.; Aydin, B. Automated Drone Detection Using YOLOv4. Drones 2021, 5, 95.

- Samadzadegan, F.; Dadrass Javan, F.; Ashtari Mahini, F.; Gholamshahi, M. Detection and Recognition of Drones Based on a Deep Convolutional Neural Network Using Visible Imagery. Aerospace 2022, 9, 31.

- Coluccia, A.; Fascista, A.; Schumann, A.; Sommer, L.; Dimou, A.; Zarpalas, D.; Méndez, M.; De la Iglesia, D.; González, I.; Mercier, J.P.; et al. Drone vs. bird detection: Deep learning algorithms and results from a grand challenge. Sensors 2021, 21, 2824.

- Dadrass Javan, F.; Samadzadegan, F.; Gholamshahi, M.; Ashatari Mahini, F. A Modified YOLOv4 Deep Learning Network for Vision-Based UAV Recognition. Drones 2022, 6, 160.

- Zitar, R.A. A Less Complexity Deep Learning Method for Drones Detection. IRC: International Conference on Radar 2023, 2023.

- Öztürk, A.E.; Erçelebi, E. Real UAV-bird image classification using CNN with a synthetic dataset. Applied Sciences 2021, 11, 3863.

- Yang, T.W.; Yin, H.; Ruan, Q.Q.; Da Han, J.; Qi, J.T.; Yong, Q.; Wang, Z.T.; Sun, Z.Q. Overhead power line detection from UAV video images. 2012 19th International Conference on Mechatronics and Machine Vision in Practice (M2VIP). IEEE, 2012, pp. 74–79.

- Bai, F.; Sun, H.; Cao, Z.; Zhang, H. Low-altitude drone detection method based on environmental antiinterference. ICMLCA 2021; 2nd International Conference on Machine Learning and Computer Application. VDE, 2021, pp. 1–5.

- Liu, W.; Quijano, K.; Crawford, M.M. Yolov5-tassel: Detecting tassels in rgb uav imagery with improved yolov5 based on transfer learning. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2022, 15, 8085–8094.

- Kaleem, Z.; Rehmani, M.H. Amateur drone monitoring: State-of-the-art architectures, key enabling technologies, and future research directions. IEEE Wireless Communications 2018, 25, 150–159.

- Anwar, M.Z.; Kaleem, Z.; Jamalipour, A. Machine learning inspired sound-based amateur drone detection for public safety applications. IEEE Transactions on Vehicular Technology 2019, 68, 2526–2534.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).