2. Structural Models for Pion, Muon and Neutrino

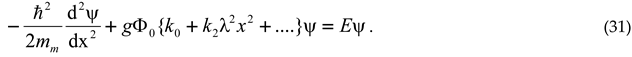

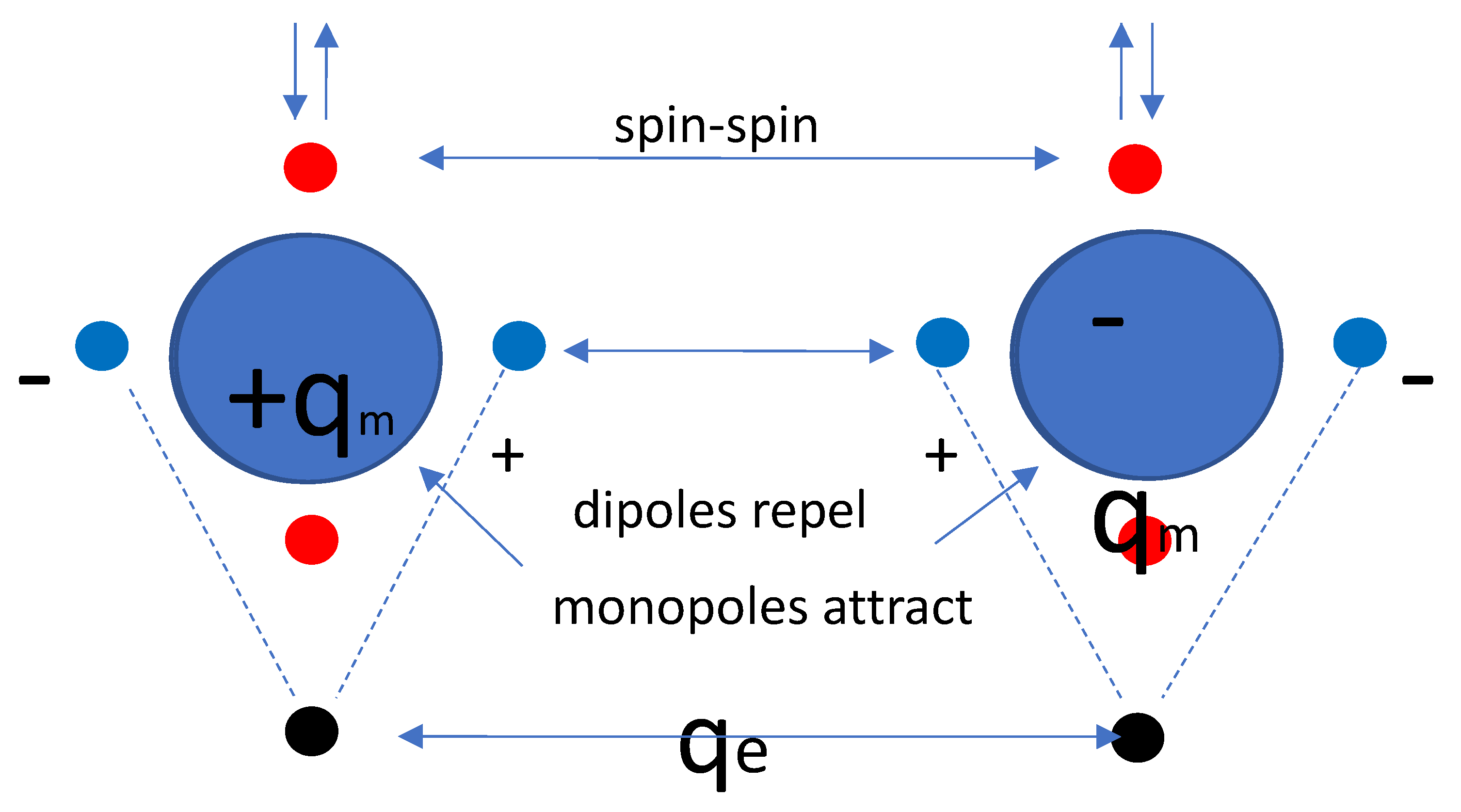

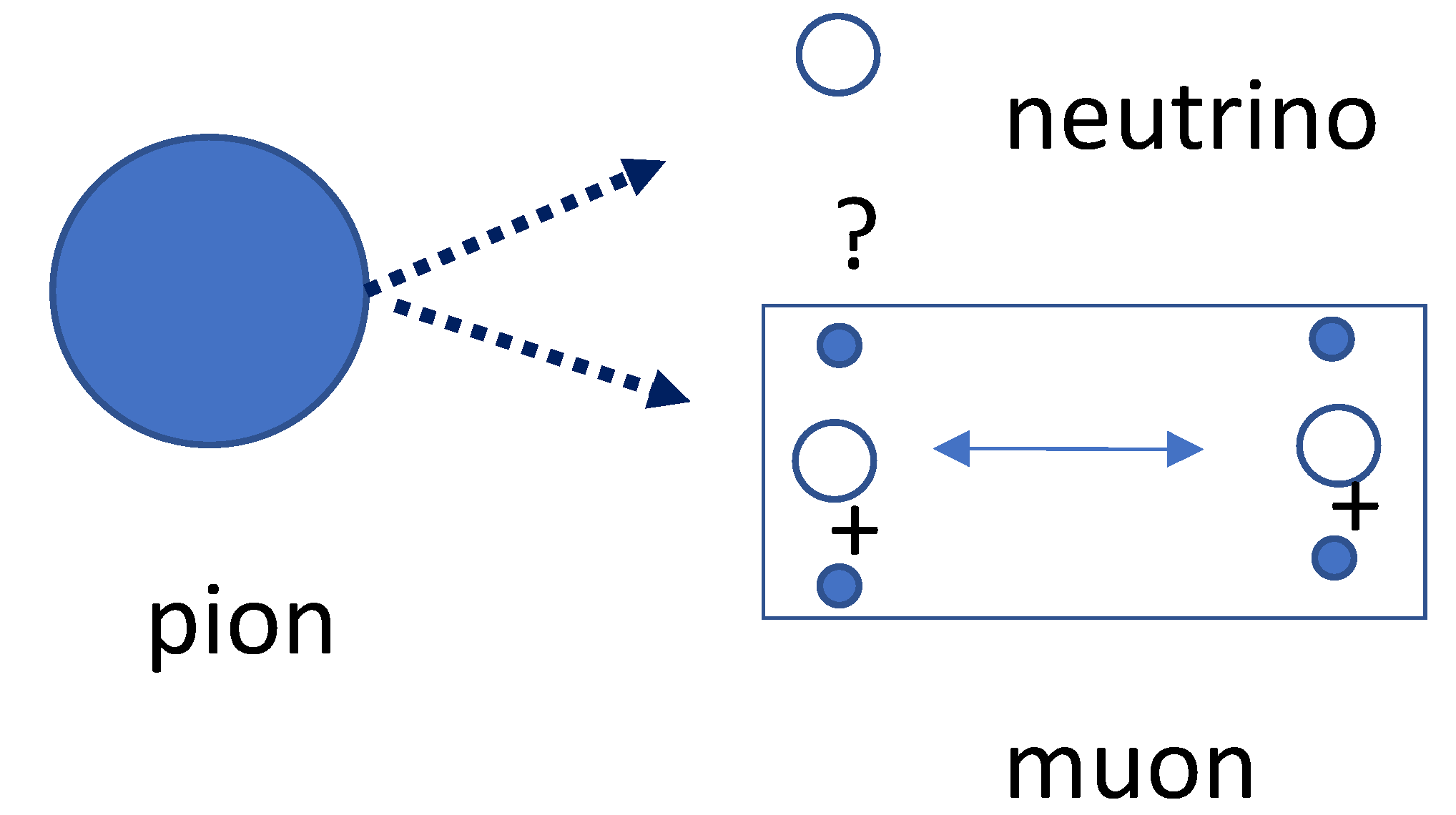

Figure 2 illustrates the structural model for a pion as developed by the author over the years [1]. It shows that two quarks are structured by a balance of two nuclear forces and two sets of dipoles. The two quarks are described as Dirac particles with two real dipole moments by virtue of particular gamma matrices. The vertical one is the equivalent of the magnetic dipole moment of an electron. The (real-valued) horizontal dipole moment is the equivalent of the (imaginary-valued) electric dipole moment of an electron [11,12].

In a later description, after recognizing that this structure shows properties that match a Maxwellian description, the quarks have been described as magnetic monopoles in Comay’s Regular Charge Monopole Theory (RCMT) [13,14]. This makes it possible to explain the quark’s electric charge by assuming that the quarks’ second dipole moments (the horizontal ones) coincide with the magnetic dipole moments of electric kernels. This description allows the nuclear force to be conceived as the cradle of baryonic mass (the ground state energy of the resulting anharmonic oscillator) as well as the cradle of electric charge.

The model allows a fairly accurate calculation of the mass spectrum of mesons. It also allows the development of a structural model of baryons, including an accurate calculation of their mass spectrum. This calculation relies upon the recognition that the structure can be modelled as a quantum mechanical anharmonic oscillator. Such anharmonic oscillators are subject to excitation, thereby producing heavier hadrons with larger constituent masses of their quarks. The increase of baryonic energy under excitation is accompanied by a loss of binding energy between the quarks. This sets a limit to the maximum constituent mass of the quarks. It is the reason why quarks heavier than the bottom quark cannot exist and why the top quark has to be interpreted differently from being the isospin sister of the bottom quark [1].

Because lepton generations beyond the tauon have not been found, they probably do not exist for the same reason. In such a picture the charged lepton structure would result from the loss, under decay, of the magnetic charges of the kernels in the pion structure, with simultaneous replacement by their electric charges. This structure is bound together by an equilibrium between the repelling force between the electric charges and the attractive force between the scalar dipole moments. In spite of its resemblance to the pion structure, its properties are fundamentally different. Whereas the pion consists of a particle and an antiparticle making a boson, the charged lepton consists of two kernels making a fermion.

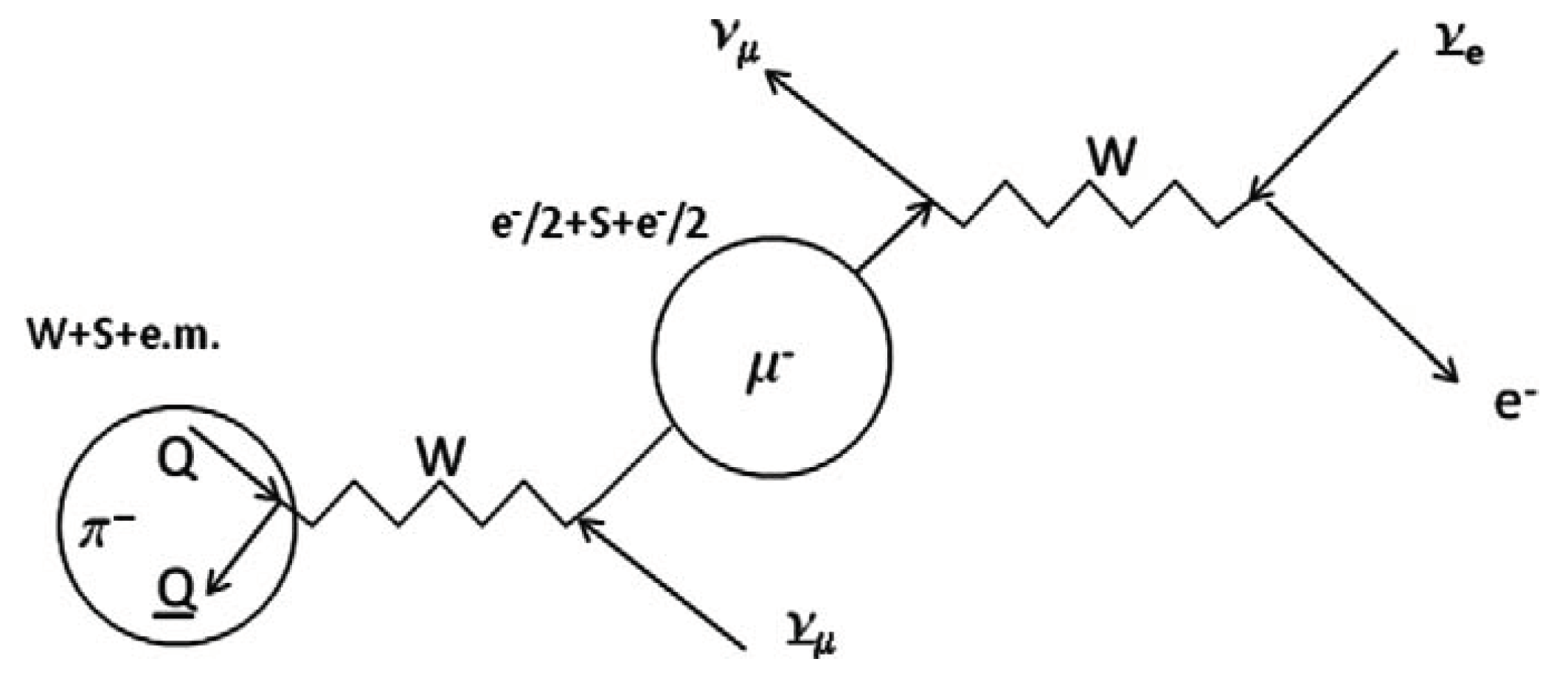

Figure 3 shows a naive picture of the decay process. Under decay, the pion will be split up into a muon and a neutrino. In the rest frame of the muon, the muon will obviously contain the electric kernels and some physical mass. The remaining energy will fly away as a neutrino with kinetic energy and some remaining physical mass.

Figure 3 shows the model, in which a structureless neutrino is shown next to a muon with a hypothetical substructure.

In this picture the muon is considered to be a half-spin fermion in spite of the appearance of two identical kernels in the same structure. Assigning the fermion state to the structure seems to conflict with the convention of distinguishing the boson state from the fermion state by a naive spin count. Instead, a true boson state for particles in conjunction should be based upon the state of the temporal part of the composite wave function. In this particular case, the reversal of one of the quarks from the particle state into the antiparticle state marks a transformation from the bosonic pion state into the fermionic muon state under conservation of the weak interaction bond.

Let us proceed from the observation that there is no compelling reason why the weak interaction mechanism between a particle and an antiparticle kernel would not hold for two subparticle kernels. In such a model, the structure of the charged lepton is similar to that of the pion. It can therefore be described by a similar analytical model. Hence, conceiving the muon as a structure in which a kernel couples to the field of another kernel with the generic quantum mechanical coupling factor

, the muon can be modeled as a one-body equivalent of a two-body oscillator, described by the equation for its wave function

,

in which $\hbar$ is Planck’s reduced constant, 2 the kernel spacing, is the effective mass of the center, its potential energy, and the generic energy constant, which is subject to quantization. The potential energy can be derived from a potential . As in the case of the pion quarks, this potential is a measure of the energetic properties of the kernels. It is characterized by a strength (in units of energy) and a range (in units of length: the dimension of is [m⁻¹]).

The potential

of a pion quark has been determined as,

These quantities have more than a symbolic meaning, because in the structural model for particle physics developed so far [

1],

has been quantified by

, in which

(

126 GeV) is the energy of the Higgs particle as the carrier of the energetic background field. The quantity

has been quantified by

, in which

(

80.4 GeV) is the energy of the weak interaction boson. An equal expression for the potential

would make the muon model an exact copy of the pion model.

Instead, we wish to describe the potential

of the muon kernels as,

The rationale for this modification is twofold. In the structural model for the pion, the exponential decay is due to the shielding effect of an energetic background field. If the muon is a true electromagnetic particle, there is no reason why its potential field would be shielded. This may explain why an additional energetic particle (the neutrino) is required to compensate for the difference between the shielded and the unshielded potential. As shown in the Appendix of ref. [9], the format of the wave function equation holds along the dipole moment axis of two-body structures with energetic monopole sources that spread a radial potential field

and a dipole moment field

.

Similarly as in the pion case, it will make sense to normalize the wave function equation to,

and

In which

and

are dimensionless coefficients that depend on the spacing 2

between the quarks. To proceed it is imperative to establish a numerical value for the constant

. This touches an essential element of the theory as outlined in this essay. In a classical field theory, the baryonic mass

has no relation with the potential field parameters in terms of strength

, range

and coupling constant

. In this case the bare masses of the two kernels do not contribute to the baryonic energy built up in the anharmonic oscillator that they compose. This baryonic energy, distributed over the two kernels, can be conceived as the constituent mass that composes

. In previous work [15,16] it has been shown that Einstein’s theory of General Relativity (GR) allows this baryonic energy to be calculated from the potential field of the two kernels. This requires the recognition that the kernels are Dirac particles with particular gamma matrices giving the kernels a real-valued second dipole moment, thereby giving a quasi-harmonic nature to the quantum mechanical oscillator that they build.

This view makes it possible to identify

as [1,15,16],

I wish to emphasize that these two elements (i.e., the application of Einstein’s GR and the recognition of the particular nature of the quarks as Dirac particles) have not been recognized, and hence not been credited, in the Standard Model of particle physics. They make a structured model as outlined in this essay possible, thereby revealing a number of unrecognized relationships between quantities and concepts that are taken as axioms in the present state of particle physics theory.

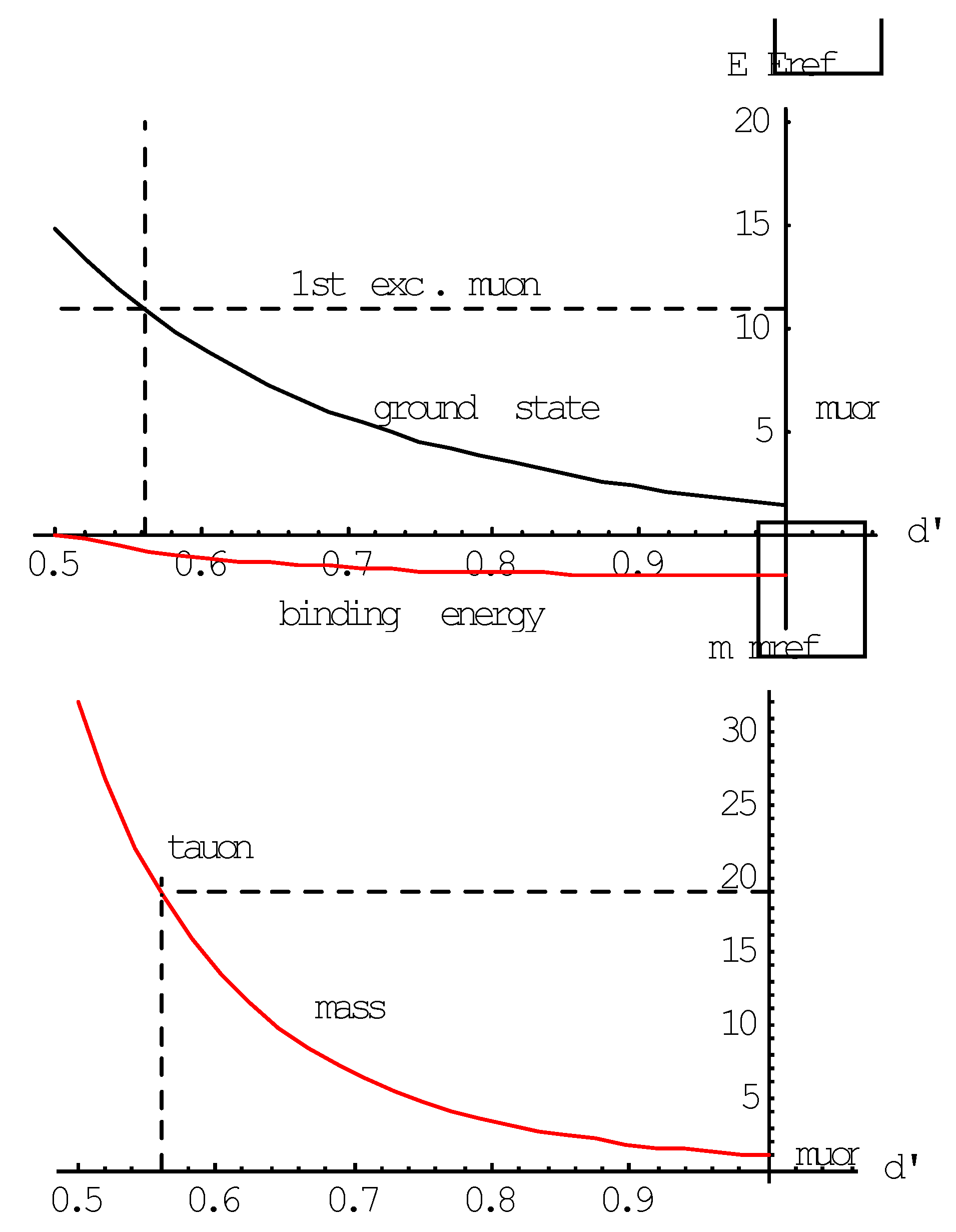

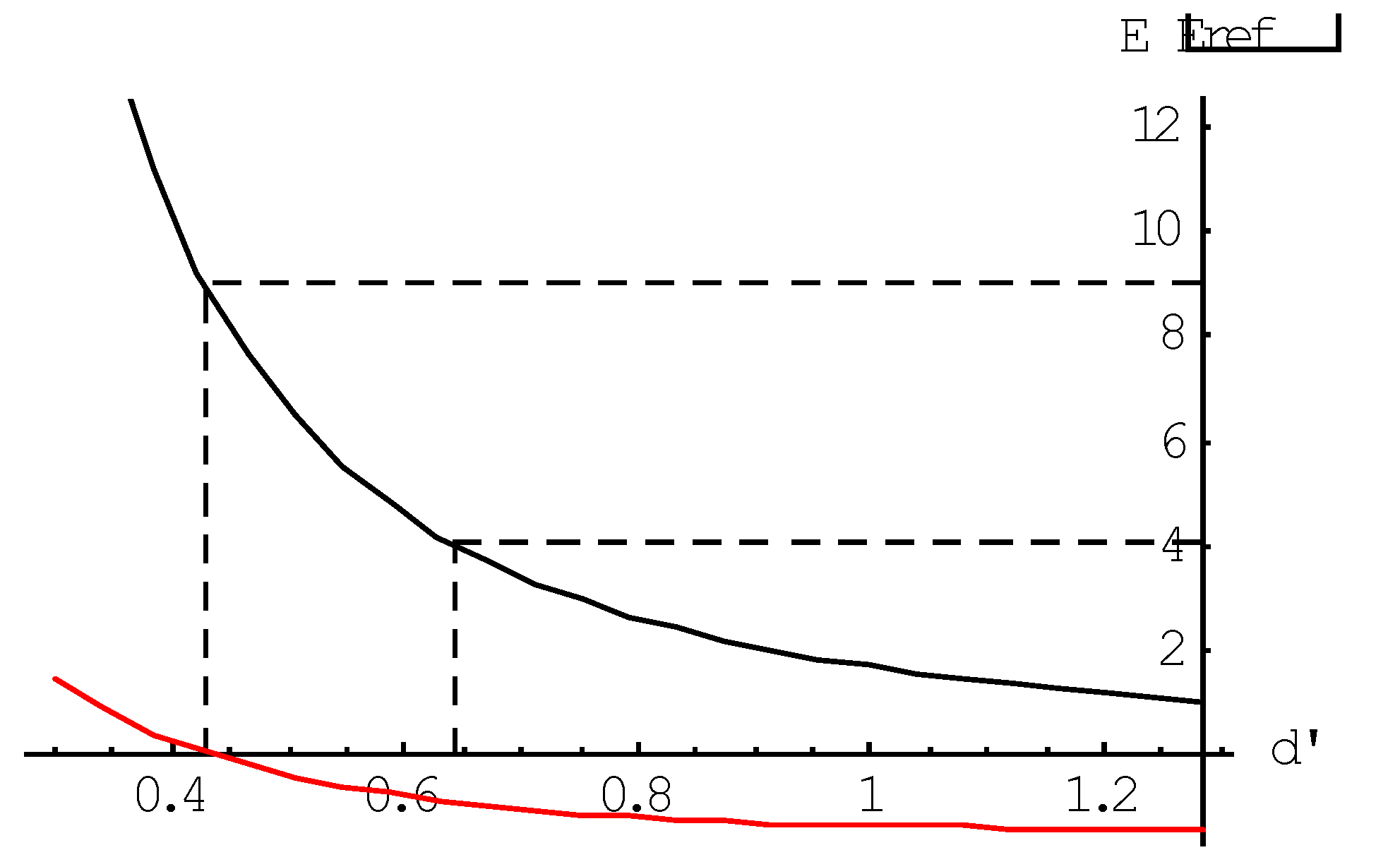

The muon model shown by (4) and (5) is the same as the model of the pion discussed in [1]. The potential function of the kernels shown in (3), though, is slightly different. This has an impact on the constants

and

, but that is all. It means that, like the pion, the muon as a charged lepton is subject to excitation. It explains why the tauon can be conceived as a scaled muon. The muon and the tauon are charged leptons in different eigenstates. The kernel spacing in the tauon is smaller than in the muon, thereby increasing its rest mass. Details can be found in [9].

Figure 4 shows the result. The calculated tau mass is 1.89 GeV/c². This is rather close to the tau particle’s PDG (Particle Data Group) rest mass (1.78 GeV/c²) [17]. The difference is due to a slight inaccuracy of the calculation. The picture shows that the loss of binding energy prevents the existence of other charged leptons.
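As a rough check on the phrase "rather close", the relative deviation between the calculated and the tabulated tau mass can be computed directly. The sketch below uses only the two values quoted above; the function and variable names are illustrative and not part of the model.

```python
def relative_deviation(calculated, reference):
    """Return the signed relative deviation (calculated - reference) / reference."""
    return (calculated - reference) / reference


tau_model_gev = 1.89  # calculated tau mass quoted in the text, in GeV/c^2
tau_pdg_gev = 1.78    # PDG rest mass as rounded in the text, in GeV/c^2

deviation = relative_deviation(tau_model_gev, tau_pdg_gev)
print(f"relative deviation: {deviation:.1%}")
```

With these inputs the deviation comes out at roughly 6%.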

The pion model shown in Figure 2 illustrates that the polarity of the real-valued second dipole moments of the quarks is fixed by the structure. It implies that the polarity of the magnetic dipole moments of the two electric kernels is fixed by the structure as well. This loss of freedom in the orientation of the dipole moments is the reason why the initial spatial orientation of the dipole moment of a muon that results from the pion decay is not a free choice. Once the muon is free, the orientation of its dipole moment (= spin) can be changed by external influences. This, however, cannot be done for the accompanying neutrino in the decay process, because of its insensitivity to external influences. It explains why neutrinos are left-handed.

3. Fermi’s Theory

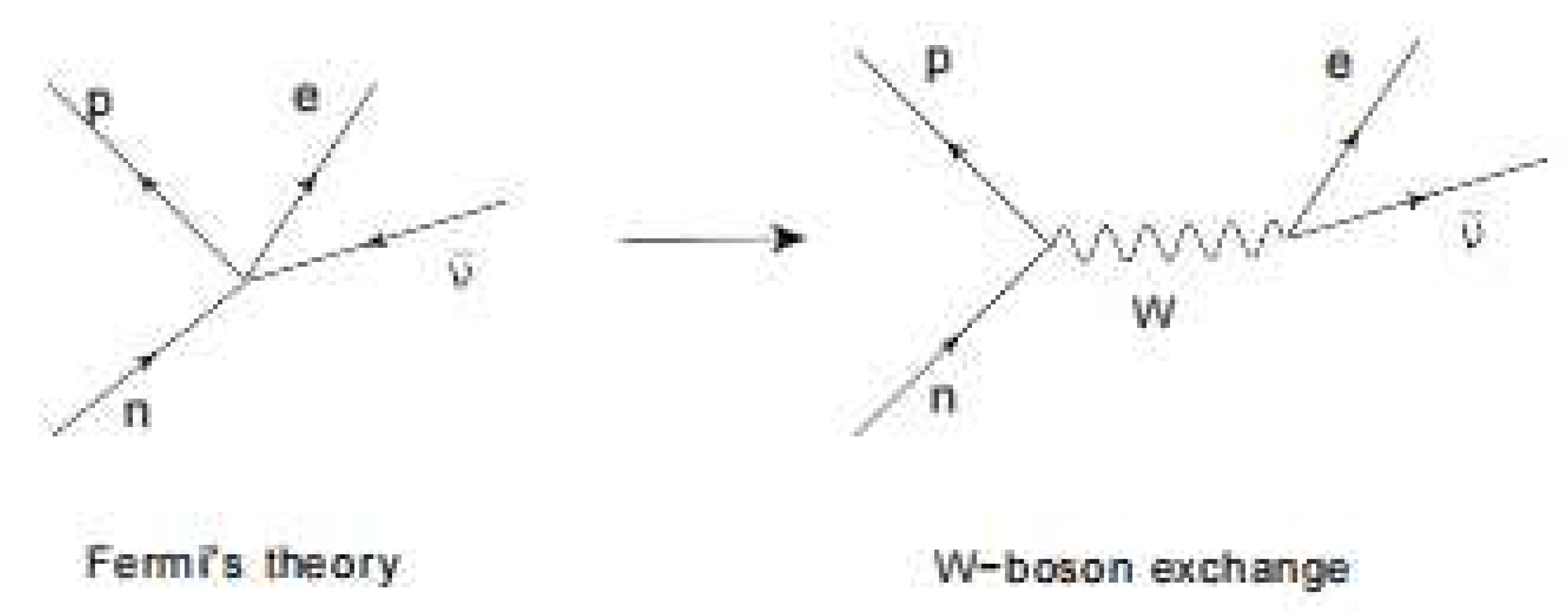

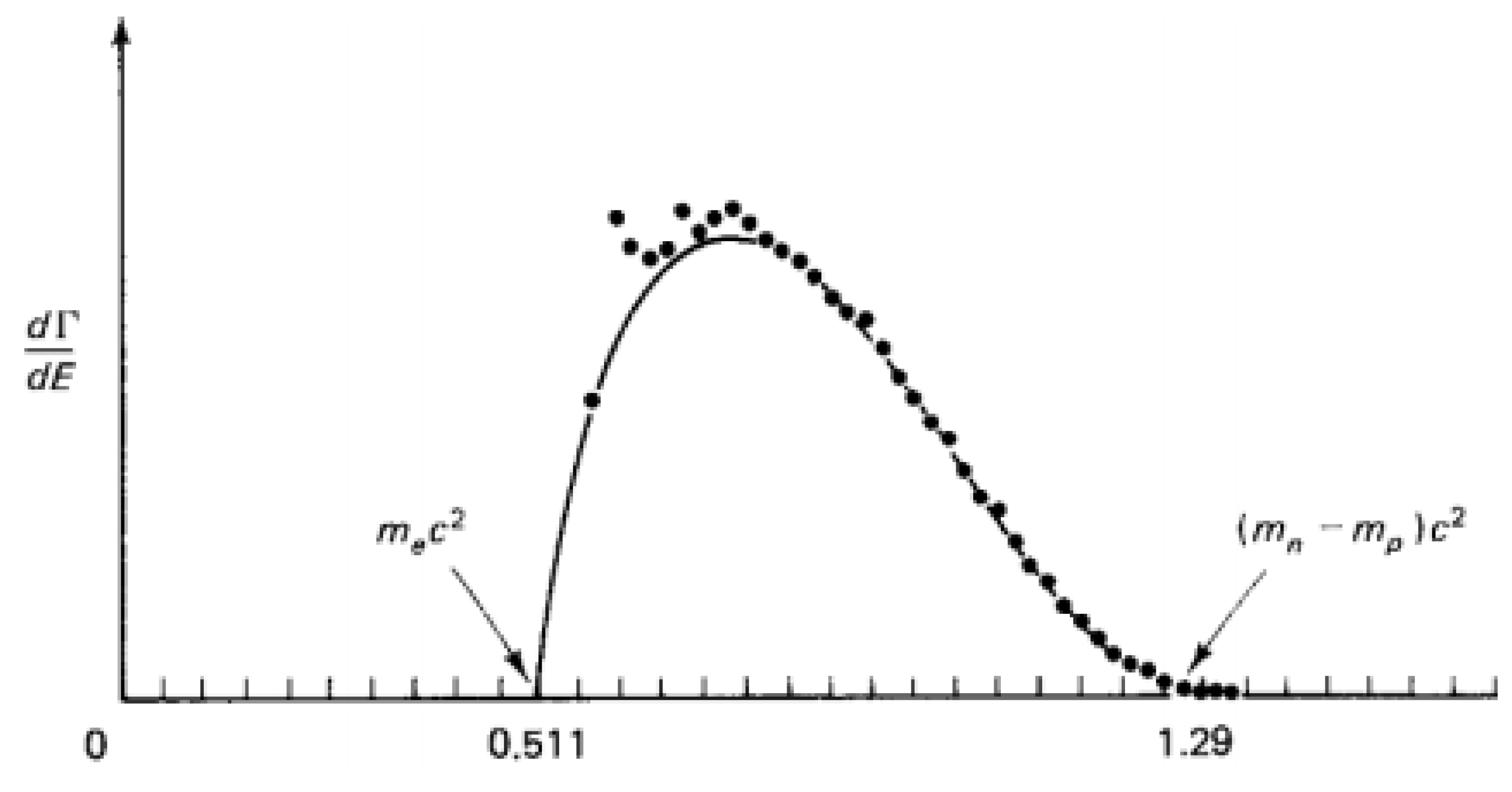

Unfortunately, the muon-tauon excitation model does not hold for the electron-muon relationship. Whereas the muon and the tauon are interrelated because of their internal weak interaction bond, such a bond does not exist for the electron, because the electron is produced as a decay product of the muon through the loss of this bond. It is instructive to analyze this decay process on the basis of Fermi’s theory, which, as the forerunner of the theory of weak interaction by bosons, describes the origin of the neutrinos as the inevitable consequence of the shape of the energy spectrum of electrons measured in beta decay.

Fermi's model is conceptually simple, but rather complex in its implementation. For the purposes of this essay, a simplified representation will suffice, illustrated in the left-hand part of Figure 5. This shows the decay of a neutron into a proton under release of an electron. To satisfy the conservation of energy, the energy difference

between the neutron and the proton must be absorbed by the energy

of the electron and the energy

of the neutrino moving at about the speed of light. To satisfy momentum conservation, the momentum

of the nucleon must be taken over by the momenta

and

of the electron and neutrino, respectively, so that

To explain the observed energy spectrum of the electron, Fermi developed the concept of density of states. This is based on the assumption that fermions, such as the electron and the neutrino, despite their pointlike character, require a certain volume of space for their wave function that does not admit a second wave function of a similar particle in the same space. That comes down to respecting the exclusion principle that was posited by Pauli in 1925 in the further development of Niels Bohr's atomic theory from 1913.

In a simple way, that spatial space is a small cube whose sides are determined by half the wavelength (

) of the fermion. A volume

contains

fermions. So,

For a particle in a relativistic state holds

. In that case, after differentiating,

A somewhat more fundamental derivation in terms of a spheric volume split up by solid angles

expresses this differential density of states as,

The difference is about a factor of 2. The difference can be explained, because the roughly derived quantity admits two particles in the interval by taking into account a difference in spin. The differential density of states that can be attributed to the decay product of neutron to proton is equal to the product of the contributions of the electron and of the neutrino, so that

For the differentiated density of states holds,

For the electron, which is not necessarily in a relativistic state, holds

Since the neutrinos do not contribute to the spectrum of the electrons, the spectral density of the electrons can be calculated by integrating over the momenta of the neutrinos. Hence,

Since the decay occurs from the nucleon in a state of rest, the conservation of momentum prescribes

. For the neutrino it then follows that,

It will be clear that the first term in

is infinitesimally small with respect to the second term and that therefore,

So here is the number of electrons per m3 in an energy interval between and . In terms of energy, therefore,

, so that

This expression can be rewritten as,

, with

The relationship takes on a simple form if the decay product is relativistic. This is usually true when muons decay into electrons, because in the major part of the spectrum

. In that case,

The relevance of this expression becomes clear from Fermi's "Golden Rule", which relates the half-life

(in which half of a nuclear particle decays) and the integral

of the decay spectrum,

This expression shows that the lifetime of a nuclear particle is determined by the time it takes to build up the decay spectrum with the total energy value

. Note that the expression resembles Heisenberg's uncertainty relation,

The rule is based on a statistical analysis of the decay process, the details of which can be found in literature [

18,

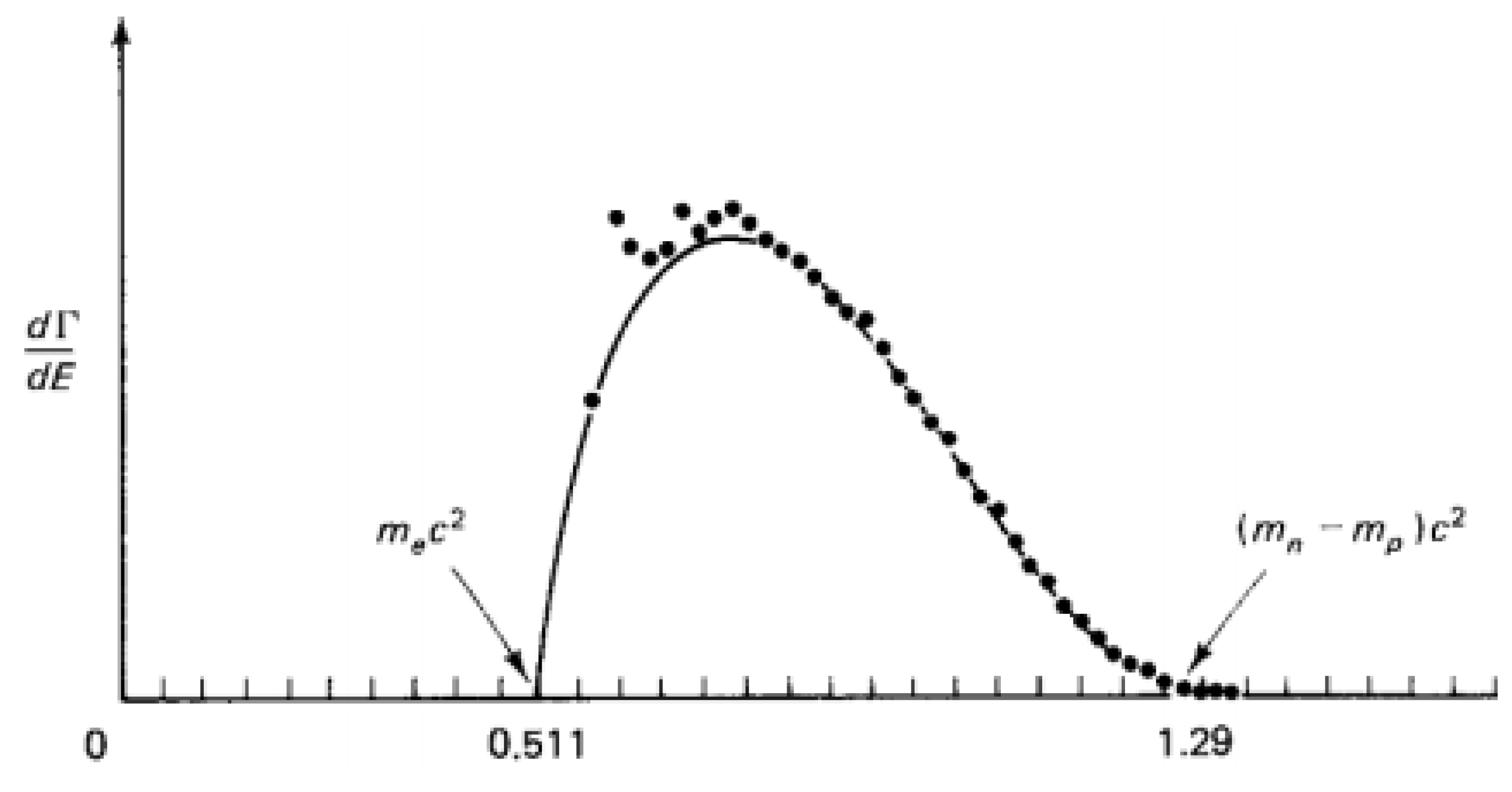

19]. The calculated energy density spectrum is shown in

Figure 6. The fifth-power shape, which relates the massive energy

of a nuclear particle to its decay half-life, is known as (Bernice Weldon) Sargent's law, as formulated empirically in 1933.
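Two numerical statements in this section can be checked with a few lines of code: the "factor of about 2" between the cube-counting and the solid-angle density of states, and the fifth-power dependence of the integrated spectrum on the available energy. The sketch below is a minimal illustration under stated assumptions, not the author's calculation: it assumes the de Broglie relation λ = h/p, so that the cube count gives dN = 24 V p² dp / h³ against 4π V p² dp / h³ for the spherical count, and it assumes a relativistic electron (p = E/c) with a massless neutrino carrying the remaining energy, so that the spectrum shape is E²(E₀ − E)². All function names are illustrative.

```python
import math


def simpson(f, a, b, n=1000):
    """Composite Simpson rule on [a, b] with an even number n of sub-intervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def relativistic_spectrum(E, E0):
    """Unnormalised spectrum shape: electron phase space E^2 times neutrino phase space (E0 - E)^2."""
    return E**2 * (E0 - E)**2


def spectrum_integral(E0):
    """Integrate the spectrum shape over the electron energy from 0 to E0."""
    return simpson(lambda E: relativistic_spectrum(E, E0), 0.0, E0)


# Ratio between the cube-counting and solid-angle prefactors (assumes lambda = h/p).
CUBE_TO_SPHERE_RATIO = 24 / (4 * math.pi)

print(f"prefactor ratio: {CUBE_TO_SPHERE_RATIO:.2f}")
print(f"integral for E0 = 1: {spectrum_integral(1.0):.6f} (analytic value E0^5/30)")
print(f"integral(2 E0) / integral(E0): {spectrum_integral(2.0) / spectrum_integral(1.0):.2f}")
```

Doubling the available energy multiplies the integral by 2⁵ = 32, which is the fifth-power behaviour referred to as Sargent's law.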

It would be valuable to derive by theory an estimate for the numerical value of . Let us see if this can be done.

If we would know , the constant can be calculated. Since the calculation of the density of states assumes the spatial boundary of the wave function of the decay products, it is reasonable to assume that the volume is determined by the spatial boundary of the wave function that fits the energy from which the decay products originate, so that

, and therefore

in which

the energy that determines the formation of the decay products. As illustrated in the right-hand part of Figure 5, Fermi’s point contact model has been revised after the discovery of $W$ bosons as carriers of the weak force, which is seen as the cause of the decay process. This insight led to the model shown in the right half. Zooming in on it shows that the neutron decay is caused by the change in composition of the nucleon from $udd$ to $uud$. This is shown in the left-hand part of Figure 7. This picture is symbolic, because it suggests that the energy difference between a $d$ quark and a $u$ quark is determined by a $W$ boson, while we know that the free state energy of a $W$ boson is 80.4 GeV and there is only a 1.29 MeV mass difference between the neutron and the proton.

The figure in the middle gives a more realistic picture of the $W$ boson. A positive pion decays in its entirety via a $W$ boson into a (positive) muon and a neutrino. It is more realistic, because earlier in this essay we established that the pion moving at about the speed of light has a rest mass of 140 MeV, while it behaves relativistically as 80.4 GeV prior to its decay into the muon's rest mass of 100 MeV plus a neutrino energy (which in the rest frame of the muon corresponds to a value of about 40 MeV). A (negative) muon in turn decays into an electron and (anti)neutrinos, as shown in the right part of the figure. It will be clear from these figures that one $W$ boson is not the other. Only the middle image of Figure 7 justifies its energetic picture. Hence, as discussed earlier in the essay, the 140 MeV rest mass of the pion is the non-relativistic equivalent of the 80.4 GeV value of the boson.
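The value of about 40 MeV quoted for the neutrino energy in the rest frame of the muon can be compared with textbook two-body decay kinematics. The sketch below assumes a massless neutrino and plain four-momentum conservation; it is a standard relativistic calculation, not a result of the structural model, and the rounded PDG rest energies and function names are choices made for this illustration.

```python
M_PION_MEV = 139.57  # charged pion rest energy in MeV (PDG, rounded)
M_MUON_MEV = 105.66  # muon rest energy in MeV (PDG, rounded)


def neutrino_energy_in_muon_frame(m_pi, m_mu):
    """Neutrino energy in the rest frame of the muon, from m_pi^2 = m_mu^2 + 2 m_mu E_nu."""
    return (m_pi**2 - m_mu**2) / (2.0 * m_mu)


def neutrino_energy_in_pion_frame(m_pi, m_mu):
    """Neutrino energy in the rest frame of the decaying pion."""
    return (m_pi**2 - m_mu**2) / (2.0 * m_pi)


e_nu_muon_frame = neutrino_energy_in_muon_frame(M_PION_MEV, M_MUON_MEV)
e_nu_pion_frame = neutrino_energy_in_pion_frame(M_PION_MEV, M_MUON_MEV)
print(f"muon rest frame: {e_nu_muon_frame:.1f} MeV")
print(f"pion rest frame: {e_nu_pion_frame:.1f} MeV")
```

In the muon rest frame the neutrino energy comes out close to 39 MeV, in line with the figure of about 40 MeV used in the text; in the pion rest frame it is close to 30 MeV.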

These considerations lead us to consider the decay process from pion to muon to be the most suitable one to calculate the constant

. Because the pion decays to a muon, the rest mass of the muon (= 100 MeV) takes the place of the electron before. The energy

from which the decay product arises is the energy of the free state

boson, so that

This makes = 2.38 × 10⁻⁶ GeV⁻².

This result has predictive value, because it allows the decay time of any radioactive nuclear particle to be calculated from the reference values of, respectively, the weak interaction boson (80.4 GeV) and the massive energy of the muon (105 MeV). The result thus calculated for the muon itself is 2.56 × 10⁻⁶ s. This compares rather well with the experimentally established value of 2.2 × 10⁻⁶ s reported by the Particle Data Group (PDG) [17].

Let us emphasize that in this text the result is obtained by theory. The canonical theory is less predictive. Instead, the empirically established value of the muon’s half-life is invoked to give an accurate value for Fermi’s constant. The analytical model described in Griffiths' book [19] gives as canonically defined result,

The factor 192 (= 2 × 8 × 12) is the result of integer numerical values that play a role in the analysis. Unlike the predictive model described in this paragraph, this relationship does not allow the decay time of the muon to be calculated. Instead, taking the experimentally measured decay time (= 2.1969811 × 10⁻⁶ s) as a reference, the quantity $G_F$, dubbed Fermi’s constant, is empirically established as 1.166 × 10⁻⁵ GeV⁻². It confirms the predictive merit of the constant derived above.
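The canonical relation between the muon lifetime and Fermi's constant can be evaluated numerically in both directions. The sketch below assumes the tree-level textbook form τ = 192π³ħ / (G_F² m_μ⁵) in natural units (G_F in GeV⁻², m_μ in GeV, ħ in GeV·s) and neglects the electron mass and radiative corrections. The constants are PDG and CODATA values inserted for this illustration and the function names are hypothetical.

```python
import math

HBAR_GEV_S = 6.582119569e-25  # reduced Planck constant in GeV*s
M_MU_GEV = 0.1056583755       # muon rest energy in GeV (PDG)
G_F_GEV2 = 1.1663787e-5       # Fermi constant G_F/(hbar c)^3 in GeV^-2 (PDG)
TAU_MEASURED_S = 2.1969811e-6  # measured muon lifetime quoted in the text


def muon_lifetime(g_f=G_F_GEV2, m_mu=M_MU_GEV):
    """Tree-level muon lifetime in seconds from the Fermi constant and the muon mass."""
    width_gev = g_f**2 * m_mu**5 / (192.0 * math.pi**3)
    return HBAR_GEV_S / width_gev


def fermi_constant_from_lifetime(tau_s, m_mu=M_MU_GEV):
    """Invert the same relation: Fermi constant in GeV^-2 from a measured lifetime."""
    return math.sqrt(192.0 * math.pi**3 * HBAR_GEV_S / (tau_s * m_mu**5))


tau = muon_lifetime()
g_f = fermi_constant_from_lifetime(TAU_MEASURED_S)
print(f"lifetime from G_F: {tau:.3e} s")
print(f"G_F from lifetime: {g_f:.4e} GeV^-2")
```

At this level of approximation the lifetime comes out near 2.19 × 10⁻⁶ s and the inverted constant near 1.16 × 10⁻⁵ GeV⁻²; the small residual differences from the tabulated values come from the neglected corrections.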

In Griffiths' book the factor

is deduced as,

Herein

is an unknown quantum mechanical coupling factor. In the Standard Model this is related to the weak force boson

and the vacuum expectation value

determined by the parameters of the background field, such that

This establishes a link between

and

such that

Unlike in the predictive model just described, these relations do not allow the decay time of the muon to be calculated. Instead, taking the experimentally measured decay time (= 2.1969811 × 10⁻⁶ s) as a reference, the quantities are numerically assigned,

For the sake of completeness it should be noted that in the weak force theory of Glashow, Salam and Weinberg (GSW), this coupling factor is further nuanced.

In the first part of this essay it has been noted that the structural model described there has a different definition for the coupling factor and what it is based on. Whereas in the Standard Model the coupling is defined as , in the structural model the semantics are somewhat different. In the latter model we have , in which is taken as the square root of the electromagnetic fine-structure constant, $(\sqrt{137})^{-1}$. This gives a different value for . While maintaining the vacuum expectation relationship , we have different values for the Higgs parameters and . In both models, however, we have the same semantics for , because of its relationship with the Higgs boson ([1], [19] p. 364). This makes the value different.

The theory as described in this text does not allow the rest masses of the muon and the tauon to be related to the rest mass of the electron. Within the Standard Model the three charged leptons are considered as elementary particles. Hence, their rest masses are taken for granted. What has been shown in this text, though, is that the rest masses of the muon and the tauon are related by theory. Further detailing of the rest mass values requires a link with gravity. In this text it has been shown that the rest mass of the muon is related by theory to the rest mass of the pion. The pion’s rest mass relies upon the rest mass of the quark. Here too, a link with gravity is needed to reveal the true value of the quark’s rest mass. It will be discussed in Section 5 of this article.

What can be done for the mass of the neutrino, though, is a measurement on the basis of a careful analysis of the spectrum of beta radiation produced by the radioactivity of a suitably chosen element.

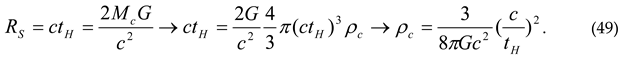

Figure 6 is suited to explaining the principle. It shows that the endpoint of the graph is characteristic of the maximum energy that an electron may obtain in the decay process. The difference between the measured energy of the fastest electrons and the theoretical endpoint must be equal to the rest mass of the electron neutrino. The accuracy obtained with the KATRIN (KArlsruhe TRItium Neutrino) experiment has made it possible to establish an upper limit for the mass of the electron neutrino, such that $m_\nu < 0.8$ eV/c² [21].
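The endpoint principle can be made concrete with the usual phase-space factor near the end of a beta spectrum. The sketch below is a simplified illustration, assuming a single neutrino mass state and ignoring the Fermi function, detector resolution and final-state effects; the endpoint value of roughly 18 574 eV for tritium and the function names are assumptions introduced here, not numbers taken from the text.

```python
import math

TRITIUM_ENDPOINT_EV = 18574.0  # approximate tritium endpoint energy in eV (assumed for illustration)


def endpoint_shape(E, E0, m_nu):
    """Unnormalised phase-space factor near the endpoint; all energies in eV."""
    eps = E0 - E  # energy left over for the neutrino
    if eps < m_nu:
        return 0.0  # not enough energy to create the neutrino rest mass
    return eps * math.sqrt(eps**2 - m_nu**2)


def max_electron_energy(E0, m_nu):
    """Largest electron energy allowed when the neutrino has rest energy m_nu."""
    return E0 - m_nu


m_nu_limit_ev = 0.8  # upper limit quoted in the text
print(max_electron_energy(TRITIUM_ENDPOINT_EV, m_nu_limit_ev))
print(endpoint_shape(TRITIUM_ENDPOINT_EV - 1.0, TRITIUM_ENDPOINT_EV, m_nu_limit_ev))
```

A non-zero neutrino mass cuts the spectrum off at E₀ − m_ν rather than at E₀, which is the shift that an endpoint measurement looks for.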

4. The Eigenstates of Neutrinos

Considering that the tauon is the result of the excitation of the muon modelled as an anharmonic oscillator built up by two kernels, it is worthwhile to consider the possibility that the muon neutrino is subject to excitation as well. Considering that the potential is a measure of energy and that the break-up of a pion into a muon and a neutrino takes place under conservation of energy, it is fair to conclude that the neutrino can be described in terms of a potential function as well, such that

We may even go a step further by supposing that, like the muon, the neutrino can be modelled as a composition of two kernels as well. If so, each of these neutrino kernels has a potential function

, such that

It is instructive to emphasize that the potential function of a particle, be it a quark, a charged lepton or a neutrino, does not contain any information about its mass. In that respect it is not different from the potential function of a charged particle like an electron. Furthermore it is of interest to emphasize that, like mentioned before, the quantities and have a physical meaning in quantitative terms. Similarly as in the muon case, this equation can be written as,

The only difference so far is that the expansion of the potential Function (4) will result in other values for the dimensionless constants

and

. Doing the same as with pions and muons we find three possible eigenstates before the binding energy is lost. The result is shown in

Figure 8.

This eigenstate model for neutrinos shares quite a few characteristics with the presently adopted model of neutrinos, as heuristically proposed by Pontecorvo in 1957 [8]. Several questions, though, still remain. It is for instance not clear why neutrinos should interact on their own, making different flavour states, without being influenced by their charged partners. One of the issues to be taken into consideration is the statistical character of particle physics. Taking as an example the weak-interaction breakdown of muons into electrons and neutrinos, one might expect that the produced neutrinos are not only subject to a statistical distribution of their kinetic energy, but that their distribution over the three possible eigenstates is subject to statistical behaviour as well. While in this view each neutrino will physically be in a definite one of the three eigenstates, one may adopt a mathematical description captured in a single matrix, common for all neutrinos in the decay of a specific neutrino flavour, as done in the now commonly accepted theory of neutrinos [10]. In fact, however, this is no more than a projection of the statistical behaviour of a multi-particle system on a single virtual particle.

Keeping this in mind, let us consider the decay process of the pion, as shown in Figure 9. There is no reason why the decay shown there would not be reversible, as all quantum mechanical processes are. It means that the neutrinos may change flavour, but not on their own. An electron neutrino with high energy may interact with an electron to produce a weak interaction boson that subsequently disintegrates into a muon and a muon antineutrino. This observation, however, does not solve the solar neutrino problem mentioned before. That problem is usually tackled by assuming that neutrinos may, for whatever reason, change flavour on their own under the influence of their eigenstate behaviour. But why should they do so?

In the author’s view it is quite probable that the answer to this question has to do with the interpretation of experimental evidence. A neutrino can only be detected if it produces its charged lepton partner. Such a production can be understood from the reverse process just described. As compared to the forward mode, the reverse mode is not impossible, but unlikely. This implies that the instrumentation for neutrino detection is based on the counting of rare events over considerable time. A beautiful example is the method used in the Super-Kamiokande experiment, which is based upon the detection of Cherenkov radiation [22]. This radiation is produced by electrons and muons that propagate in water at a speed faster than that of light in water. The radiation profiles from electrons and muons produced by the interaction between neutrinos and (heavy) water molecules are slightly different. This difference makes it possible to distinguish between electron-neutrinos and muon-neutrinos. The experimental evidence that the sum of the electron-neutrino counts and the muon-neutrino counts is equal to the corresponding calculated amount of solar electron-neutrinos is presently taken as proof that neutrinos change flavours on their own, thereby solving the mystery of the missing solar neutrinos.

Another explanation could be that the production of neutrinos in the reverse decay process is somewhat different from the production of neutrinos in the forward process. Whereas in the forward process the neutrinos are emitted in a certain flavour-dependent distribution over the three eigenstates, the production of charged leptons in the reverse process might be selective on eigenstates. This would mean that flavour changes of neutrinos on their own do not exist. It also implies that the oscillation phenomenon as observed in instrumentation based upon the detection of beat frequencies of propagating neutrino wave functions is not due to physical interaction between the neutrino flavours, but results from non-interacting physical eigenstates propagating at slightly different speeds. This means that the observed phenomenon is incorrectly interpreted as an oscillation between flavour states. Although this view does not allow the mass of the eigenstates to be calculated, it does allow the mass ordering between the eigenstates to be calculated. It is shown in Table 1.

It also allows the calculation of the spacing between the two poles (kernels) in the neutrinos. For the ground state neutrino it amounts to

The size of the other two eigenstates is even smaller. Hence, this tiny size of the neutrinos allows to consider them within the scope of particle theory as pointlike particles, in spite of their two-pole substructure. This also holds for the muon and the tauon for which .

5. The Energetic Background Field (“Dark Matter”)

It has been shown so far that the view set out in the first part and in this second part of the essay allows the masses of the hadrons to be calculated from the mass of the archetype quark, the masses of the charged leptons from the mass of the electron and the mass of the neutrinos from the eigenstate of the electron neutrino. This has been possible through the identification of the potential fields of these truly basic elementary particles. However, the masses of these basic particles could not be assessed by theory. This would not be a problem if the masses of these three particles could be obtained from a direct measurement. Unfortunately, only for the electron have means been found to do so. The Lorentz force law and Newton’s second law of motion allow equipment to be developed that measures the charge-to-mass ratio, and Millikan’s oil drop experiment allows the elementary electric charge to be determined.

The quark mass can only be retrieved from the particles that quarks compose. This is so in spite of the claim in the Standard Model that all masses can be derived from the mass of the Higgs particle. Apart from the fact that the Higgs mass in the Standard Model needs an empirical assessment, the “naked” mass of the quark is derived in lattice QCD not from “first principles” but, instead, by retrieving this mass from the rest masses of the pion and the kaon [23], under the assumption that lattice QCD is a failure-free theory.

In this essay the existence of the Higgs particle has been interpreted as the consequence of an energetic background field. This background field is responsible for shielding the potential field of quarks, such that the field, like that of an electric charge in an ionic plasma [24], decays as

. In this essay it has been shown that the range parameter

is related with the massive energy of the Higgs boson by

. It is as if this background energy consists of a space field

consisting of tiny polarisable dipoles. In that case, the quark's static field can be written as a Poisson equation,

An analysis of the energetic dipole background field to be explained below shows that

can be written as,

Applying it, the Poisson equation can be rewritten to

The modification of Poisson’s equation is allowed by virtue of the relationship between the dipole moment density

and the space charge

[25],

Since in the static state eventually all dipoles within the range of the source will be polarized, the dipole moment density within this range is constant with value

. Hence,

and thus shows the same radial dependence as the regular potential profile at small

. Hence,

It implies that the dipole moment density is fixed by the parameter and the source strength of the quark. Within the range of the source the dipoles will be polarized; outside this range the dipoles will no longer be aligned, but will be randomly oriented in all directions by entropy. The number of dipoles per unit of volume will remain the same.
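The shielding invoked in this section, by analogy with a charge in an ionic plasma, corresponds in textbook electrostatics to a screened Poisson equation, ∇²Φ = λ²Φ outside the source, which is solved by a field of the form exp(−λr)/r. The sketch below checks this numerically with a finite-difference radial Laplacian. It is a generic illustration under that assumption only: it does not include the dipole term of the quark potential used in the structural model, and `lam`, `phi0` and the function names are placeholders introduced here.

```python
import math


def screened_potential(r, lam, phi0=1.0):
    """Monopole potential shielded by a polarisable background: phi0 * exp(-lam r) / (lam r)."""
    return phi0 * math.exp(-lam * r) / (lam * r)


def radial_laplacian(f, r, h=1e-4):
    """Laplacian of a spherically symmetric function, (1/r) d^2(r f)/dr^2, by central differences."""
    def g(x):
        return x * f(x)
    return (g(r + h) - 2.0 * g(r) + g(r - h)) / (h * h * r)


lam = 2.0  # inverse range in arbitrary units (placeholder value)
r = 1.0
laplacian = radial_laplacian(lambda x: screened_potential(x, lam), r)
expected = lam**2 * screened_potential(r, lam)
print(laplacian, expected)
```

Away from the origin the two printed numbers agree to within the finite-difference error, which is the defining property of the shielded field.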

Let us proceed by discussing the potential nature of the energetic background field. If an elementary component of the background energy is a Dirac particle of the special type indeed, then it has two real dipole moments. One of the dipole moments can be polarized by the quark’s static potential. Its polarisable dipole moment

depends on an unknown charge

and an unknown small mass

. So,

The energetic background field will contain an unknown amount

of such particles per unit volume. With these quantities the density

can be expressed as

We may relate this ratio to the ratio of

to the "naked mass"

of the base quark according to,

These last two expressions allow us to express the bare mass of the quark as,

As noted above, the decay parameter

is related to the value of the Higgs boson. This value is derived in the frame of the pion. This frame flies almost at the speed of light. This imposes a relativistic correction on

. To this end, we first write

as,

We do so because we not only know the relativistic value

(80.4 GeV) of the weak force boson, but also its non-relativistic value, because (as described in the first part of this essay) this boson condenses as the rest mass of the pion (

140 MeV). This makes it possible to correct

to,

The energy value of the “naked” quark mass then becomes

If we knew the quark mass, the density of energy carriers per unit volume of the background energy could be calculated. On the other hand, if we knew , we would be able to assess the quark’s “naked” mass.

Now it so happens that vacuum polarization is known in cosmology as well. There is an energetic background field that is responsible for the “dark matter” phenomenon. That dark matter causes objects at the edges of galaxies to orbit their centres with a significantly higher orbital velocity than calculated from Newton's law of gravitation, even when corrections based on Einstein's Field Equation are included. In 1983, Mordehai Milgrom concluded that this occurs to the same extent in every known galaxy. In the absence of an explanation, he formulated an empirical adaptation of Newton's law of gravitation. This adaptation is characterized by an acceleration constant that is equal for all systems. Its value is 1.25 × 10⁻¹⁰ m/s². In 1998, supernova observations revealed another unexpected cosmological phenomenon: an expansion rate of the universe that is more aggressive than the constant rate assumed on the basis of Einstein's Field Equation. Scientists reconciled the latter phenomenon with theory by assigning a finite value to Einstein’s Lambda ($\Lambda$) in the famous Field Equation, which until then had been assumed to have a value of zero. The consequence of this is that the universe must have an energetic background field. Based on cosmological observations of these two phenomena, it has finally been empirically established that the matter in the universe is divided up into three components, 0.0486 + 0.210 + 0.741 = 1, which are, respectively, the ordinary baryonic matter, the unknown dark matter and the unknown dark energy [26]. In [27], it is described how the relationship between these components can be theoretically determined.

The key to this is to revise the view that Einstein's Lambda is the cosmological constant in the sense of a constant of nature. In Einstein's 1916 work on General Relativity, this quantity appears only as a footnote with the remark that an integration constant in the derivation is set to zero. See the footnote on p. 804 in [28]. Steven Weinberg in 1972 valued this footnote and promoted this integration constant to the Cosmological Constant [29]. Strictly speaking, though, this quantity is a constant in terms of space-time coordinates. It may depend, in theory at least, on coordinate-independent properties of a cosmological system under consideration (a solar system, a galaxy, the universe) like, for example, its mass. In that case, it is justified only at the level of the universe to qualify Einstein’s Λ as the Cosmological Constant. In [13] the relationship is derived that,

in which Einstein's Λ is found to depend on the gravitational constant G, the baryonic mass and Milgrom's acceleration constant. Moreover, it turns out that the latter depends on the relative amount of the baryonic mass in the universe and on its Hubble age (13.6 Gigayears), such that

This implies that it is not Einstein's Λ that is to be regarded as the true cosmological constant, but that this qualification rather applies to Milgrom's acceleration constant. In this theory, the distribution of the three energy elements of the universe can be traced back to a background energy consisting of elementary components with a “gravitational” dipole moment. Within the sphere of influence of the baryonic mass these components are polarized; outside it they are randomly oriented by entropy.

Considering that both the nuclear background energy and the cosmological background energy may consist of elementary polarizable components, it would be strange if these components were not the same. The cosmology theory developed in [13] allows us to determine the density

of these particles as,

Although at the gravitational level this is an extremely high density, at the nuclear femtometer level (10⁻¹⁵ m) it seems to fall short of sustaining the hypothesis. Nevertheless, if

is used to calculate the bare mass of the quark as deduced above, it turns out that

The dilemma disappears by remembering that volumes should be considered at wavelength level. That, at least, is what Fermi's theory described earlier in this text has taught us. A mass of 1.34 MeV/c² corresponds to a de Broglie wavelength of 885 fm. That brings us to the picometer level. This is wide enough to consider the background energy effectively uniformly distributed at the quark level. The “naked” mass of the archetype quark as derived in this text depends on six physical quantities. Two of them are generic constants of nature: the vacuum light velocity and Planck’s constant. Two of them are particle physics related: the mass ratio of the Higgs boson and the weak interaction boson, and the rest mass of the pion. Two of them are cosmological: the gravitational constant (a constant of nature) and Milgrom’s acceleration constant (possibly a constant of nature as well).
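The scale argument can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming that the wavelength meant is h/(mc) and that the density of background particles is the 1.7 × 10⁴¹ per m³ used further on in this text; the function names are illustrative only.

```python
# Physical constants in SI units (CODATA values)
H_PLANCK = 6.62607015e-34   # Planck constant, J s
C_LIGHT = 2.99792458e8      # vacuum light velocity, m/s
EV = 1.602176634e-19        # one electronvolt, J

# Assumed density of background particles per cubic metre (value quoted in the text)
DENSITY = 1.7e41


def wavelength_m(rest_energy_mev):
    """Wavelength h/(m c) in metres for a particle with the given rest energy in MeV."""
    rest_energy_joule = rest_energy_mev * 1e6 * EV
    return H_PLANCK * C_LIGHT / rest_energy_joule


def particles_in_cube(density_per_m3, edge_m):
    """Average number of particles inside a cube with the given edge length."""
    return density_per_m3 * edge_m ** 3


lam = wavelength_m(1.34)
print("wavelength in fm:", lam / 1e-15)
print("particles per cubic femtometre:", particles_in_cube(DENSITY, 1e-15))
print("particles per cubic wavelength:", particles_in_cube(DENSITY, lam))
```

Under these assumptions a femtometer-sized cube holds far less than one particle on average, whereas a cube whose edge equals the wavelength holds of the order of 10⁵ particles. With h/(mc) the sketch gives a wavelength slightly above 900 fm, the same sub-picometer scale as the 885 fm quoted above.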

The value thus calculated is of the same order of magnitude as the “naked” mass for the quarks, derived in lattice quantum chromodynamics (lattice QCD) [30] from the rest masses of the pion and kaon as 2.3 MeV and 4.6 MeV for, respectively, the u quark and the d quark. “Naked” mass has to be distinguished from “constituent” mass, which can be traced back to the distribution of the hadron’s rest mass over the quarks. Because these rest masses are mainly determined by the binding energy between the quarks, the relatively large constituent mass hides the relatively small amount of the true physical “naked” mass that shows up after removal of the binding energy component.

Lattice QCD is based on the Standard Model with (the heuristic) gluons as the carrier of the color force, the (heuristic) Higgs particle as the carrier of the background energy and an interaction model based on Feynman's path-integral methodology. This methodology is based on the premise that in principle all possible interaction paths between the quarks in space-time matter and that the phase difference in transit time of “probability particles” (as a model of quantum mechanical wave functions) over those paths ultimately determines the interaction effect. Such paths can make more or less coherent contributions or completely incoherent contributions. In lattice QCD, the paths are discretized through a grid. This discretization is not a matter of principle, but is only intended to limit the computational work that is performed with supercomputers. The strength of this model is its unambiguity, because it assumes the application of the principle of least action to a Lagrangian (some authors therefore call lattice QCD a “simple” theory). But it is also the weakness of the model, because the (now very complex) Lagrangian is “tuned” to all phenomena observed in particle physics and has been given a heuristic mathematical formulation. It is quite conceivable that physical relationships have remained hidden under a mathematical mask. A number of examples have been discussed in the two parts of this essay [1].

It might therefore well be that the definition of “naked” mass is model dependent. In the structural model the pion rest mass is the mass reference. In lattice QCD both the rest mass of the pion and the rest mass of the kaon serve as reference (in the structural model it is shown that these two rest masses are interrelated). The lattice QCD “naked” mass values are validated from calculation of the proton mass, but, strictly speaking, this validation only shows the mass relation between the pion and the proton. In [17], it is shown that the structural model is even more accurate in this respect, particularly taking into account its capability for an accurate calculation of the mass difference between a proton and a neutron. It is quite curious that in the PDG listing of quark masses, the formerly used constituent masses of the u and d quarks are replaced by “naked” masses, while for the other quarks (

and

) the constituent values are maintained. Another curiosity is the mass assignment to the u quark and the d quark in the same ratio as their presumed electric charge. This presupposes that the mass origin is electric. But why should it? And if so, why are these mass values not related by an integer factor with the mass of an electron?

The fact that two different models don’t yield exactly the same value for the indirectly determined unobservable "naked" mass of a quark does not necessarily prove the correctness or incorrectness of one of the two. In neither of the two models is the “naked” mass used as “the first principle” for mass calculations, because the true mass reference is the rest mass of the pion. As long as comparable precisions are obtained, the value of the naked mass is in fact irrelevant. In the end, Occam's razor is decisive for making a choice.

Because the “naked” mass value of the quarks as calculated from the dark matter view as developed in [31] and summarized in this text rather well corresponds with the mass value of quarks calculated by lattice QCD supports the view that the particle density of the energetic background field constituents is the same for particle physics and for cosmology. Denoting these

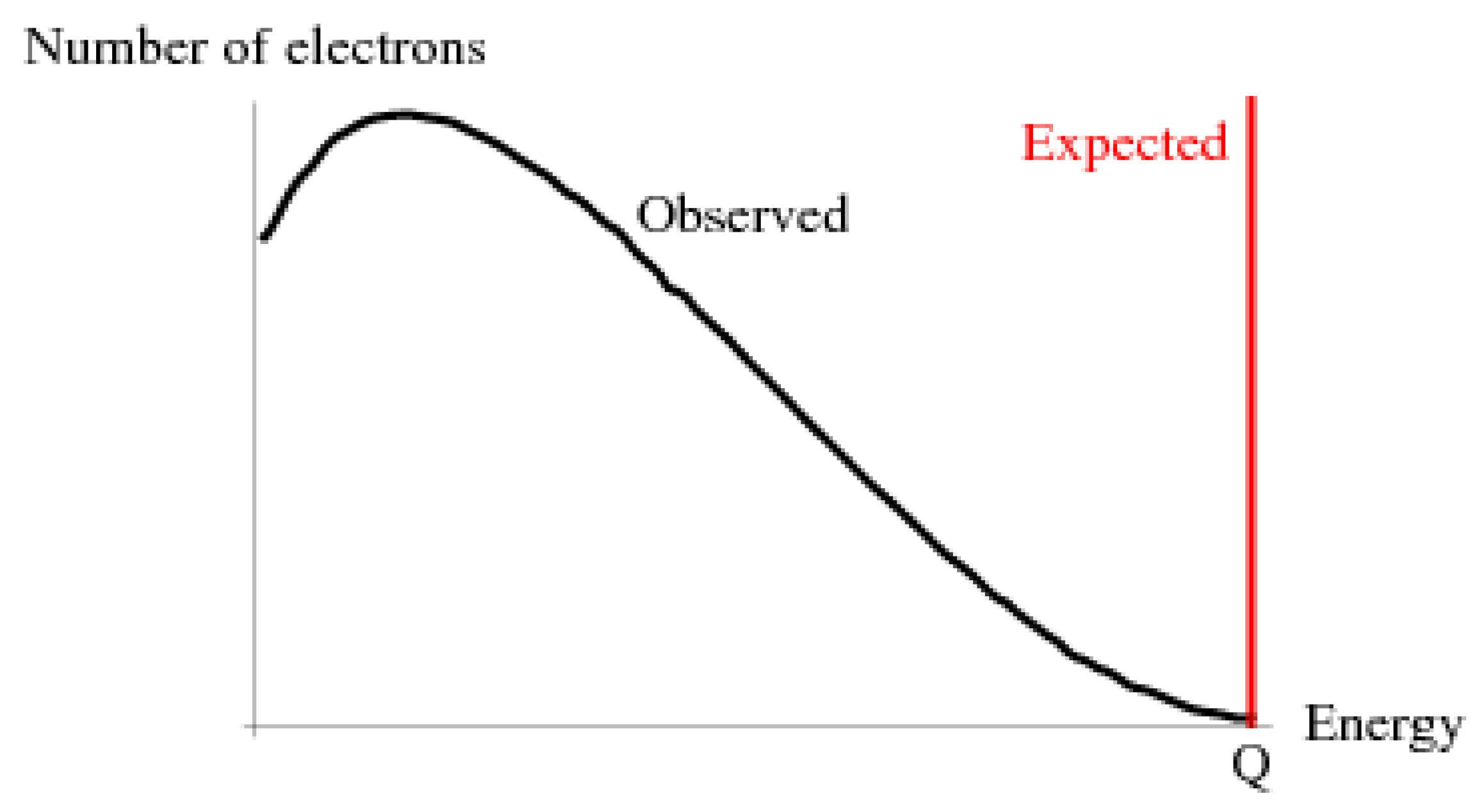

constituents as “darks”, an estimate for their gravitational mass can be derived from the critical mass density of the universe. The critical mass density can be expressed in terms of Hubble time from the consideration that the universe is a bubble from which light cannot escape: once a black hole, now expanding, where we still live in. Hence it has a (Schwarzschild) radius , such that

It gives 9.4 × 10⁻²⁷ kg/m³. Divided over 1.7 × 10⁴¹ particles per m³, it gives a mass of 5.55 × 10⁻⁶⁸ kg per particle, which corresponds to a mass energy of 3 × 10⁻³² eV. This makes the darks virtually massless. These particles (“darks”) are polarized (dark matter), randomly oriented (dark energy) or condensed (baryonic mass).
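The arithmetic of this estimate can be reproduced in a few lines. The sketch below assumes that the critical density takes the standard form ρ = 3/(8πG t²), which follows from setting the Schwarzschild radius 2GM/c² equal to c·t, with t the Hubble time; the Hubble time is left as a parameter, and all names are illustrative.

```python
import math

# Physical constants in SI units (CODATA values)
G_NEWTON = 6.67430e-11      # gravitational constant, m^3 kg^-1 s^-2
C_LIGHT = 2.99792458e8      # vacuum light velocity, m/s
EV = 1.602176634e-19        # one electronvolt, J
YEAR = 365.25 * 86400.0     # Julian year, s

# Assumed density of "darks" per cubic metre (value quoted in the text)
DENSITY = 1.7e41


def critical_density(hubble_time_gyr):
    """Critical mass density 3 / (8 pi G t^2) in kg/m^3 for a Hubble time in Gyr."""
    t = hubble_time_gyr * 1e9 * YEAR
    return 3.0 / (8.0 * math.pi * G_NEWTON * t ** 2)


def mass_per_particle(rho, density_per_m3):
    """Mass in kg per particle when rho is shared by density_per_m3 particles."""
    return rho / density_per_m3


def rest_energy_ev(mass_kg):
    """Rest energy m c^2 expressed in electronvolt."""
    return mass_kg * C_LIGHT ** 2 / EV


for t_gyr in (13.6, 13.8):
    rho = critical_density(t_gyr)
    m = mass_per_particle(rho, DENSITY)
    print(t_gyr, "Gyr:", rho, "kg/m^3,", m, "kg,", rest_energy_ev(m), "eV")
```

With a Hubble time of 13.8 Gyr the sketch gives about 9.4 × 10⁻²⁷ kg/m³; with the 13.6 Gyr mentioned earlier it gives about 9.7 × 10⁻²⁷ kg/m³. In both cases the mass per particle is between 5.5 and 5.8 × 10⁻⁶⁸ kg and the rest energy is close to 3 × 10⁻³² eV.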

6. Conclusions

The theory as reviewed in the first part and the second part of this essay gives an unorthodox and anti-dogmatic view on particle physics. It is based on three fundamental issues that have not been recognized and therefore have not been taken into consideration during the development of the Standard Model of particle physics.

The first one is the recognition that Dirac’s theory of the electron allows the existence of a particular particle type, which, under a modified set of gamma matrices, shows two real dipole moments, instead of the real one and the imaginary one shown by electrons.

The second one is the recognition that two of those particles may compose a bond in which their non-baryonic potential energy is converted into baryonic mass that, under application of Einstein’s General Relativity Theory, can be conceived as constituent mass of the two particles.

The third one is the awareness of a background field with a uniformly distributed energetic space charge that shields the non-baryonic energy emitted from nuclear wells (quarks). The awareness that the vacuum is not empty has been inherited from a similar awareness in cosmology that, since 1998 [32, 33], has been invoked to explain the accelerated expansion of the universe under interpretation of Einstein’s Lambda in his Field Equation.

Whereas the Standard Model is essentially descriptive, with the aim of giving an accurate match between experimental evidence and theory, the structural view as described in the two parts of this essay aims to show a physical relationship between the many axioms that have been introduced in the Standard Model for mathematical reasons. Among these are concepts such as isospin, the Higgs field, the gluon, SU(2) and SU(3) gauging, etc. In the Standard Model, questions like “why are there so many elementary particles?”, “why is there such a big mass gap between the (u/d, s, c, b) quarks and the top quark?” and “why do the leptons stop at the tauon level?” have remained unanswered. The usual answer is “it is as it is”.

The reputation of the Standard Model is based upon the claimed accuracy between theory and experiment. That is certainly true for the QED part. But is it true for the QCD part as well? Let us take into consideration that “the more axioms, the more accurate a theory is” and that “experimental evidence is usually not hard proof”, because many nuclear particles are not observable and can only be detected as “signatures with a theory in mind”.

The structural view set out in this review allows many axioms in the theory to be removed by giving it a physical basis, thereby considerably reducing the number of elementary particles.

In spite of the fact that mass is one of the most relevant attributes of particles, it is probably fair to say that the Standard Model shows a weakness in calculating masses and mass relationships. It is particularly here that the structural view shows its strength. Furthermore, the structural model allows particle physics to be connected with gravity. In the first part of this essay it has been shown that the gravitational constant can be verifiably expressed in quantum mechanical quantities. In this second part it has been shown how the “dark matter” phenomenon in cosmology is related to the Higgs field phenomenon in particle physics.

Another strength of the structural model, shown in this second part of the essay, is its ability to include neutrinos within its theoretical framework while explaining the present experimental evidence of their features. How to do so in the Standard Model is an unsolved problem. It is probably fair to say that whereas the Standard Model of particle physics describes the “what”, the structural model describes the “why”.