1. Introduction

Vehicle surveillance is an essential task in public security. Distinctive vehicle attributes such as make, model, and license plate are typically used for traffic surveillance. Traffic cameras are installed at street junctions, at the entrances of high-security buildings, in parking lots, and in public places. They create an opportunity to monitor roads and track vehicles, supporting a smart city perspective.

The captured images and videos enable scene understanding, object detection, recognition, and segmentation through automated approaches such as image processing, machine learning, and deep learning techniques [1,3,5]. Further subtasks built on these approaches include re-identification [8], tracking, and similarity matching [4,6,7]. Transfer learning reuses existing pretrained models and has been widely adopted in video surveillance for its computational efficiency [2].

Robust vehicle identification is required for public safety and security. Accuracy and real-time performance are the prime concerns for this application. Privacy is another concern that instance segmentation must address.

For vehicle surveillance in particular, machine learning and deep learning models have been applied to vehicle data to infer the make, model, and license plate region [13]. In each case, two options were used for analysis. Either the whole image was analyzed, or a rectangular region of interest was cropped to locate the contextual features needed to categorize or re-identify the vehicle [8]. For cars, the make is most prominently defined by the frontal view [26]. The region of interest can be extracted from this view to identify the car's make and model, which better represents the car's unique characteristics. The license plate can also be extracted and fed to an ALPR (automatic license plate recognition) system for digit recognition, further strengthening vehicle identification.

In computer vision, region of interest extraction is typically accomplished by segmentation. The cropped region of interest is sometimes used as a pre-processing step for both deep learning and machine learning approaches [18]. For machine learning algorithms, a pre-defined set of distinctive features is extracted from these images, whereas deep learning models extract features automatically.

The training data is key to the performance and validity of the algorithms for a given task, so it requires rigorous labeling and review. The captured images and videos vary in illumination, background, and viewpoint, which makes the data challenging to learn from [14]. Learning can improve when the region of interest is extracted and labeled with its key features. This effect appears in many state-of-the-art works on segmentation and classification [17].

Instance segmentation is often used in tracking, where it forms part of the processing pipeline. Each instance of a segmented region of interest (ROI) can be marked and identified. This enables not only detection but also tracking of individual objects in a scene [12].

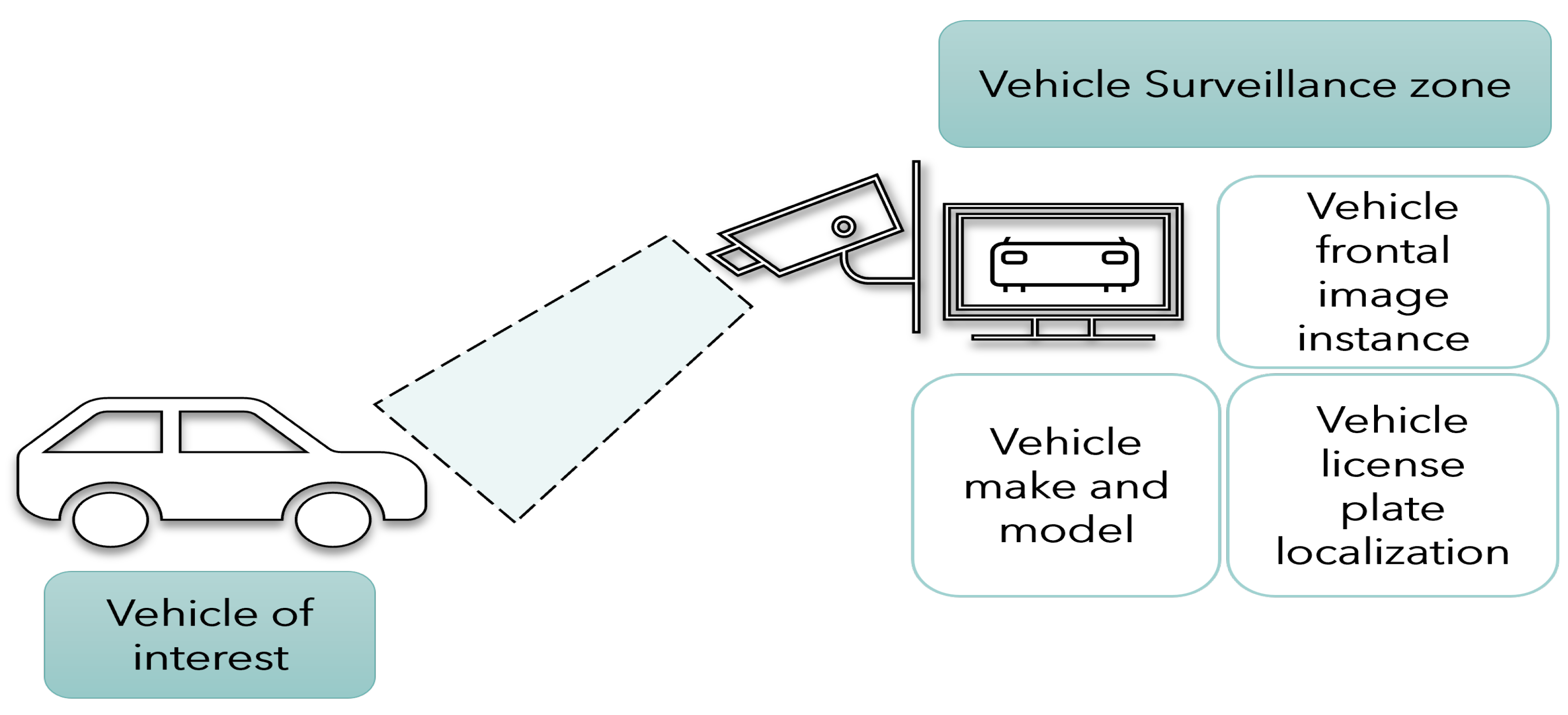

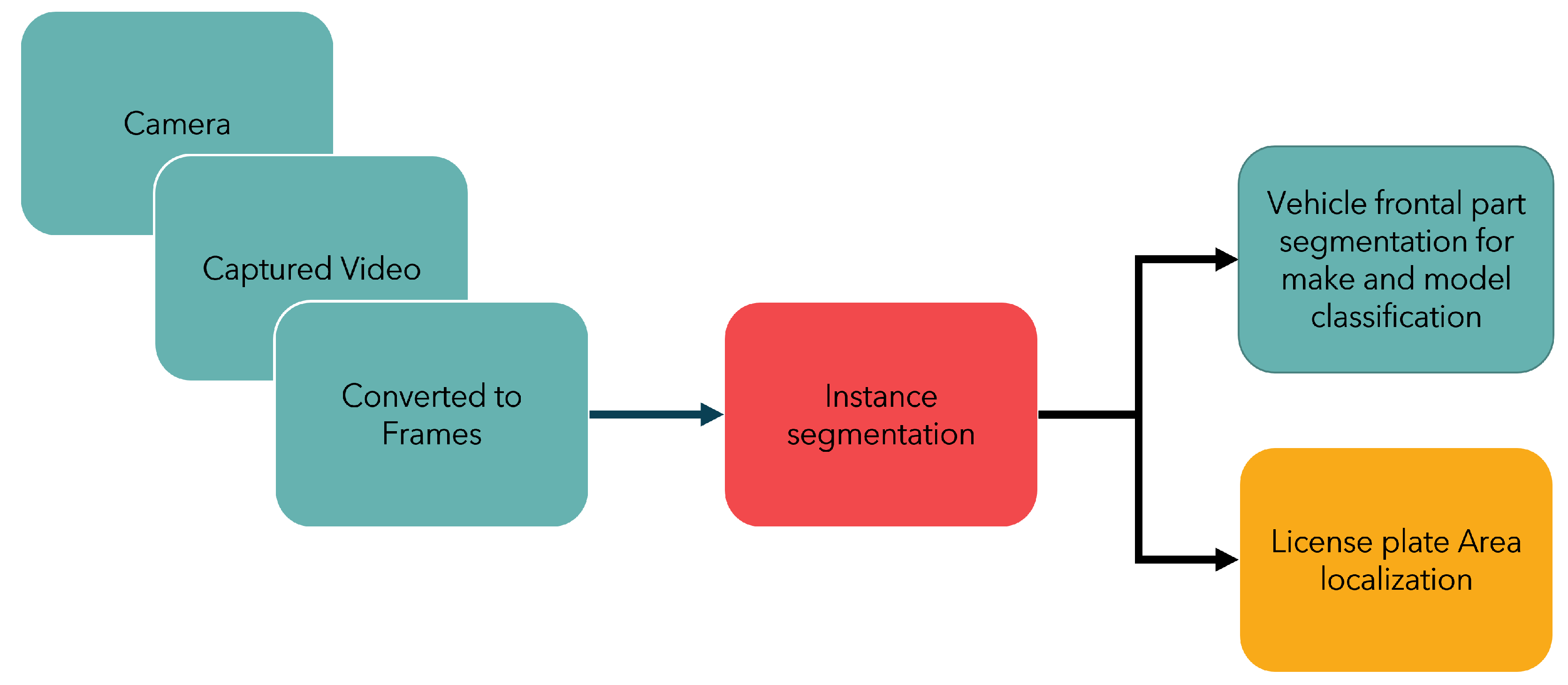

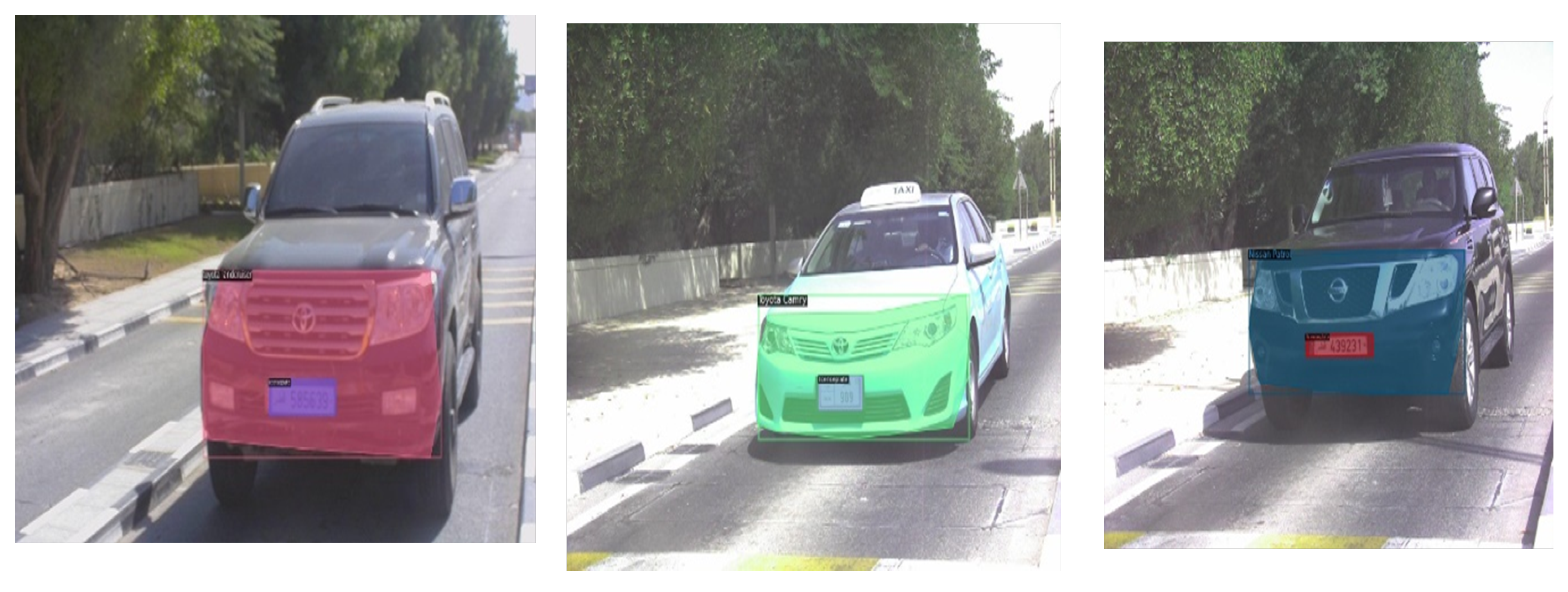

In this context, we propose a multi-class instance segmentation model for vehicle make and model recognition combined with license plate recognition, as presented in Figure 1 and Figure 2. Existing approaches mainly address recognition and classification. However, they need a multi-step pipeline that first segments the frontal part of the vehicle and then classifies it. We instead propose a single-shot segmentation network. It identifies the vehicle make and model under varying conditions and also segments the frontal part of the car as a single instance, which is essential for uniquely identifying and tracking individual vehicles. For this purpose, we use a uniquely labeled region-of-interest dataset for instance segmentation, together with a deep learning model for car make and license plate identification.

Within this framework, higher accuracy is achieved for the same task on the same dataset. Inference time is reduced compared with running two separate approaches, because the vehicle type and license plate are identified simultaneously. To address class imbalance, the dataset is augmented using different representations, and each representation is evaluated. The result is a robust and accurate model for identifying vehicles in traffic, on security-sensitive roads, and at entrances to high-security areas.

The contributions of this paper are as follows:

- An instance segmentation model for vehicle recognition through segmentation and classification: a single model that detects a vehicle and identifies its make together with its license plate.

- Higher detection mAP achieved with a deformable convolutional network on a small dataset augmented by the mosaic tiling method.

- An analysis of several augmentation techniques and their effect on vehicle make recognition and detection. The analysis uses a feature pyramid network with a deep residual network and with a deformable deep residual network.

2. Literature Review

Vehicle recognition is a widely researched area in computer vision. It comprises tasks such as vehicle make and model recognition, license plate recognition, vehicle classification, and vehicle re-identification, each performed individually or consecutively. Applications include traffic regulation systems, smart city automation, public security, and even non-civilian use cases. In this paper, we consider the requirements of a privacy-preserving and efficient automated vehicle make recognition system. Recent literature in this domain addresses dataset diversity through multiple large-scale datasets with many classes [21,26]. Several datasets focus on the parts and frontal area of the car, enabling more fine-grained classification. Datasets also vary in illumination, exposure, and environment. Large-scale datasets have been used to classify vehicle make and model by detecting vehicle parts, specifically the frontal part, which provides distinctive features for classification. One of the latest frontal-image datasets is a large-scale, fine-grained dataset with 103 classes. It is annotated with make, model, and year of manufacture, providing a hierarchical representation of each vehicle, and it contains high-resolution, high-quality images. CNN-based methods were trained on this dataset. The baselines included large models such as ResNet-50 as well as AlexNet, VGG-16, and VGG-19, and each achieved an accuracy above 85%, showing robust classification [26].

The changing vehicle ecosystem, with new manufacturers and new models, keeps this an open research domain. However, no segmentation datasets annotated in polygonal format are available yet, and such annotations would capture richer contextual features of a vehicle. This paper targets privacy in public security applications. It therefore uses a dataset from [18] for instance segmentation of the frontal part of the car, covering segmentation, detection, and classification.

Make classification has traditionally been performed with rule-based approaches, which dominate this field owing to the popularity of the problem. Several approaches use local and global cues for classification, and structural and edge-based features are also a common choice. Machine learning is then applied to these features to enhance classification. Among feature extraction techniques, edge-based extractors such as HOG and the Harris corner detector perform well for detecting car parts like the logo, the grille, and the headlights [16]. Robust keypoint-based feature detectors such as SIFT and SURF have been employed in several state-of-the-art works. In addition, detectors based on the Hessian matrix and the DoG (Difference of Gaussians) were implemented in [18]. They produced considerably higher accuracy for a small number of classes but fail to achieve similar accuracy with a larger number of classes. Further, a Bag of Features (Bag of Words) approach was combined with feature detectors for unsupervised clustering, producing a histogram of features for matching [19]. A feature detector is typically paired with a matching technique such as Hamming distance, Euclidean distance, or cosine similarity to identify similar vehicles for recognition and classification. This matching is further used for the re-identification task.
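The matching step described above can be illustrated with a minimal sketch. It assumes each vehicle is already summarized by a fixed-length descriptor, such as a Bag of Features histogram, or by a binary descriptor in the Hamming case. The function names and the toy descriptors are hypothetical and are not taken from the cited works.

```python
import numpy as np

def euclidean_distance(a, b):
    # Straight-line distance between real-valued descriptors (lower = more similar).
    return float(np.linalg.norm(np.asarray(a, float) - np.asarray(b, float)))

def cosine_similarity(a, b):
    # Cosine of the angle between descriptors (higher = more similar).
    a, b = np.asarray(a, float), np.asarray(b, float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def hamming_distance(a, b):
    # Number of differing bits between binary descriptors stored as 0/1 arrays.
    return int(np.count_nonzero(np.asarray(a) != np.asarray(b)))

def best_match(query, gallery):
    """Index of the gallery descriptor with the highest cosine similarity to the query."""
    scores = [cosine_similarity(query, g) for g in gallery]
    return int(np.argmax(scores))

# Hypothetical 3-D descriptors for three known vehicles and one query vehicle.
gallery = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]]
query = [0.9, 0.1, 0.0]
print(best_match(query, gallery))  # -> 0
```

Cosine similarity ignores descriptor magnitude, which suits histogram-style descriptors. Hamming distance is the usual choice for binary descriptors.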

Naïve Bayes [22], SVM [23], LBP [23], and KNN were common machine learning techniques for vehicle make and model classification. CNN architectures for this task rely on transfer learning from prominent pretrained models such as AlexNet, VGG, ResNet, and MobileNet [15]. Modified CNNs were also introduced, such as a residual SqueezeNet [24], which achieved a higher rank-5 accuracy of 99.38%. In that work, segmentation was applied as a pre-processing step to remove the background. In another work, a compound scaling approach was applied to an EfficientNet pretrained on ImageNet, with the aim of presenting an app for vehicle make and model classification. Unsupervised deep learning techniques such as auto-encoders have also been used for this purpose [23]. Features may be generated automatically by CNN-based approaches or engineered through edge or geometric descriptors. In either case, higher accuracy is still needed for real-time use. In most cases, the cropped frontal region of the car is used for identification, and segmentation of car parts is also employed, as in [23].

Segmentation approaches are often used to remove the background and extract the vehicle before classifying it [25]. In a real-time use case, cropped images must first be generated from the full frame before part detection. Almost all approaches therefore require an extra vehicle detection step, which increases computation time. A one-step approach to vehicle identification is therefore needed. License plate detection adds to the vehicle's unique features, which can then be fed into the identification system to assign a unique ID for re-identification. A robust model is therefore required that can detect the region of interest and classify it, identifying each instance of the vehicle's make.

We address this challenge in this paper and propose instance segmentation for vehicle identification via segmentation and classification. We use a two-stage approach. Feature extraction is performed by an FPN (feature pyramid network, which extracts features at multiple scales), and classification is performed by Mask R-CNN. Further experiments are performed with a modified CNN to improve network performance. Image augmentation techniques are also explored to improve the existing dataset.

3. Methods

Convolutional neural networks have been the keystone of computer vision applications and are among the most commonly used types of artificial neural networks. Applying convolution operations in neural networks enables better feature extraction and classification. CNNs have evolved to meet requirements of accuracy, generalization, and optimization. The need for generalization and domain adaptation led to several large-scale models trained on large-scale data, which can then be adapted to other applications. Examples of convolutional neural networks include AlexNet [28], LeNet [29], ResNet [31], GoogLeNet [30], and SqueezeNet [27]. In this paper, we use ResNet, a deep residual network consisting of multiple convolutional layers. It extracts deep features, and its residual skip connections mitigate the vanishing gradient problem.

Convolutional neural networks have four key features: weight sharing, local connections, pooling, and a large number of layers [9]. The convolutional layer performs the convolution operation on small local patches of the input, so that an input x convolved with a filter f produces a feature map of x. The convolution over the whole input is computed as shown in Equation (1):

$$Y(k) = (x * f)(k) = \sum_{n=0}^{N-1} x(n)\, f(k-n) \tag{1}$$

where x, f, and N are the input image, the filter, and the number of elements in x, respectively, and Y is the output vector.
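The following minimal sketch makes the convolution concrete. It assumes a 1D input, the standard discrete convolution Y(k) = Σ x(n) f(k − n), and "full" output length, and it evaluates the sum literally so it can be checked against NumPy's `np.convolve`. The function name `conv1d_direct` is ours, not the paper's.

```python
import numpy as np

def conv1d_direct(x, f):
    """Evaluate Y(k) = sum_n x(n) * f(k - n) directly, with "full" output length."""
    x = np.asarray(x, dtype=float)
    f = np.asarray(f, dtype=float)
    N, M = len(x), len(f)
    Y = np.zeros(N + M - 1)
    for k in range(N + M - 1):
        for n in range(N):
            # f(k - n) is zero outside the filter's support [0, M).
            if 0 <= k - n < M:
                Y[k] += x[n] * f[k - n]
    return Y

x = [1.0, 2.0, 3.0, 4.0]
f = [1.0, 0.0, -1.0]          # a simple difference (edge-like) filter
print(conv1d_direct(x, f))    # same values as np.convolve(x, f)
```

Deep learning libraries usually implement "convolution" as cross-correlation, without flipping f. Because the filter weights are learned, the two forms are equivalent in practice.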

The convolution is followed by an activation function such as tanh, sigmoid, or ReLU [32]. Activation functions introduce non-linearity into the network. The sub-sampling (pooling) layers reduce the feature map resolution, lowering complexity and the number of parameters. The extracted features are then mapped to the labels in the fully connected layer, for which all neurons are flattened into a 1D vector [10]. The outputs of the convolutional and sampling layers are connected to every neuron, producing a fully connected layer. The fully connected layer is spatially aware, combining locational features with high-level complex features. Its result is linked to the output layer, which produces the output using a thresholding process. For multi-class classification, a final dense layer is often used, with the same number of neurons as classes. A softmax activation then maps the dense layer outputs to a vector of per-class probabilities.
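A minimal sketch of the classification head described above: ReLU, flattening to 1D, a dense layer with one neuron per class, and softmax. The feature-map shape, the random weights, and the class count of 13 (a hypothetical figure, e.g. 12 makes plus a license plate class) are illustrative assumptions.

```python
import numpy as np

def relu(z):
    # Non-linearity: negative activations are set to zero.
    return np.maximum(z, 0.0)

def softmax(z):
    # Subtracting the max improves numerical stability without changing the result.
    e = np.exp(z - np.max(z))
    return e / e.sum()

rng = np.random.default_rng(0)
feature_maps = rng.standard_normal((4, 3, 3))   # e.g. 4 pooled channels of size 3x3
flat = relu(feature_maps).reshape(-1)           # flatten to a 1D vector of 36 values
num_classes = 13                                # hypothetical class count
W = rng.standard_normal((num_classes, flat.size)) * 0.1
b = np.zeros(num_classes)
probs = softmax(W @ flat + b)                   # one probability per class, summing to 1
print(probs.argmax(), probs.sum())
```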

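The accuracy of this prediction is measured by a loss function that compares the output with the ground truth (labeled data). A common choice is the categorical cross-entropy loss L, shown in Equation (2):

$$L = -\sum_{i=1}^{C} y_i \log \hat{y}_i \tag{2}$$

where C is the number of classes, $y_i$ is the one-hot ground-truth label for class i, and $\hat{y}_i$ is the predicted softmax probability for class i.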
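To show how the loss penalizes confident mistakes, the sketch below evaluates the categorical cross-entropy for a single sample. It assumes one-hot labels and softmax probabilities, and the small `eps` clip, which avoids log(0), is our addition.

```python
import numpy as np

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    # y_true: one-hot label vector; y_pred: softmax probabilities of the same shape.
    y_pred = np.clip(y_pred, eps, 1.0)
    return float(-np.sum(y_true * np.log(y_pred)))

y_true = np.array([0.0, 1.0, 0.0])
print(categorical_cross_entropy(y_true, np.array([0.1, 0.8, 0.1])))  # -ln(0.8) ≈ 0.223
print(categorical_cross_entropy(y_true, np.array([0.6, 0.2, 0.2])))  # -ln(0.2) ≈ 1.609
```

Only the probability assigned to the true class contributes, so the loss shrinks as that probability approaches 1.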

This setup is trained with back-propagation. Hyper-parameters such as the learning rate, regularization, and momentum are set before training and adjusted by brute-force search. Evolutionary algorithms can further automate hyper-parameter tuning. During back-propagation, the weights and biases are updated. The loss L in Equation (2) must be minimized to produce an accurate model, so parameters such as the kernels (filters) and biases are optimized toward the minimum loss. After each update, the feed-forward pass is repeated with the updated weights, and the model converges when the loss reaches its minimum.
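The forward-pass, loss, gradient, and update cycle can be sketched on the smallest possible network: a single dense layer with softmax and cross-entropy, trained by plain gradient descent. For this loss, the gradient with respect to the logits is P − Y. The toy two-cluster data, learning rate, and epoch count are illustrative assumptions, not the paper's training settings.

```python
import numpy as np

def softmax_rows(z):
    z = z - z.max(axis=1, keepdims=True)      # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_softmax_classifier(X, Y, lr=0.1, epochs=200):
    """Gradient descent on one dense layer with softmax + cross-entropy.
    Returns the learned weights, bias, and the loss recorded at every epoch."""
    n, d = X.shape
    c = Y.shape[1]
    W = np.zeros((d, c))
    b = np.zeros(c)
    losses = []
    for _ in range(epochs):
        P = softmax_rows(X @ W + b)                              # forward pass
        losses.append(-np.mean(np.sum(Y * np.log(P + 1e-12), axis=1)))
        G = (P - Y) / n                                          # dL/dlogits
        W -= lr * (X.T @ G)                                      # update weights
        b -= lr * G.sum(axis=0)                                  # update biases
    return W, b, losses

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2, 1, (50, 2)), rng.normal(2, 1, (50, 2))])
labels = np.array([0] * 50 + [1] * 50)
Y = np.eye(2)[labels]                         # one-hot targets
W, b, losses = train_softmax_classifier(X, Y)
print(losses[0], losses[-1])                  # starts at ln(2) ≈ 0.693 and decreases
```

In a CNN, the same update is applied to every kernel and bias, with the gradients propagated backward through each layer by the chain rule.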

Deep residual networks are used as the backbone. They are large networks whose skip connections carry a block's input forward and add it to the block's output, preserving information across many layers. The methodology in this framework performs instance segmentation using a CNN. Instance segmentation detects and delineates each object in an image or video. Each object instance is tagged with an ID, enabling unique detection of every object in the scene.
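A minimal sketch of a residual connection, using dense layers in place of convolutions for brevity. The block computes ReLU(F(x) + x), the form used in the basic ResNet block. When the residual branch F outputs zero, the input passes through unchanged, which is why deep stacks of such blocks remain trainable. The weights here are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def residual_block(x, W1, W2):
    """y = ReLU(F(x) + x), with residual branch F(x) = W2 @ ReLU(W1 @ x)."""
    return relu(W2 @ relu(W1 @ x) + x)   # skip connection adds the input back

x = np.array([1.0, 2.0, 3.0])
zero = np.zeros((3, 3))
print(residual_block(x, zero, zero))     # -> [1. 2. 3.]  (identity when F(x) = 0)
```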

3.0.1. Deformable Convolution:

Despite the advantages of convolutional neural networks, the geometric structures of their building modules are fixed. In most CNNs, augmentation transforms the images as a pre-processing step, so geometric variations such as rotation and orientation are handled only by modifying the training data. The filters also use a fixed rectangular sampling window. Pooling uses kernels of a fixed size to reduce spatial resolution, so all objects within the same receptive field are convolved and passed to the activation function in the same way. As a result, the network identifies objects well only at that scale. Deformable convolution improves the modeling of geometric transformations and scale by adding a 2D offset to each sampling location of the regular grid. The convolution then samples from a deformed receptive field instead of a fixed one, so the offsets augment the spatial sampling locations automatically. The offsets are themselves learned. An additional convolutional layer predicts them from the input feature map, and they are trained jointly with the rest of the network.
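The sketch below shows deformable convolution at a single output location p0 on a single-channel input. It implements y(p0) = Σ w(p_n) · x(p0 + p_n + Δp_n) over a 3x3 grid, where fractional sample positions are read with bilinear interpolation. To keep it short, the offsets Δp_n are passed in directly rather than predicted by an offset layer, and all names are hypothetical.

```python
import numpy as np

def bilinear(img, y, x):
    """Sample a 2D array at fractional (y, x); points outside the image read as 0."""
    h, w = img.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    val = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                val += weight * img[yy, xx]
    return val

def deformable_conv_at(img, weights, p0, offsets):
    """y(p0) = sum_n w(p_n) * x(p0 + p_n + dp_n) over the regular 3x3 grid R."""
    grid = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    out = 0.0
    for n, (dy, dx) in enumerate(grid):
        sy = p0[0] + dy + offsets[n][0]   # regular grid position plus learned offset
        sx = p0[1] + dx + offsets[n][1]
        out += weights[n] * bilinear(img, sy, sx)
    return out

img = np.arange(25, dtype=float).reshape(5, 5)
w = np.arange(9, dtype=float)
print(deformable_conv_at(img, w, (2, 2), [(0.0, 0.0)] * 9))  # zero offsets: ordinary 3x3 window
print(deformable_conv_at(img, w, (2, 2), [(0.0, 0.5)] * 9))  # every sample shifted half a pixel right
```

With all offsets set to zero, the result reduces to a standard 3x3 convolution (cross-correlation) window.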

To further improve detection at lower levels, a feature pyramid is built with a feature pyramid network (FPN), and objects and segmentation regions are represented at different scale levels of the pyramid. Proportionally sized feature maps at multiple levels are generated from a single input, and features from adjacent scales are fused at each level. FPNs are used with CNNs as a generic solution for building feature maps. They combine a bottom-up pathway (the backbone) with a top-down pathway and lateral connections. For deep residual networks, the feature activations are taken from the last residual block of each stage.
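A minimal NumPy sketch of the FPN top-down pathway. It assumes backbone outputs ordered from fine to coarse, with each level halving the spatial size. Each coarser merged map is upsampled 2x by nearest neighbour and added to a 1x1 lateral projection of the next finer backbone map. The 3x3 smoothing convolution that FPN applies after each merge is omitted, and the function names and channel sizes are hypothetical.

```python
import numpy as np

def upsample2x(feat):
    # Nearest-neighbour 2x upsampling of a (C, H, W) feature map.
    return feat.repeat(2, axis=1).repeat(2, axis=2)

def lateral_1x1(feat, W):
    # A 1x1 convolution is a per-pixel linear map over channels: (C_out, C_in) x (C_in, H, W).
    return np.einsum("oc,chw->ohw", W, feat)

def fpn_top_down(c_feats, lateral_weights):
    """c_feats: backbone maps ordered fine -> coarse. Returns merged maps, fine -> coarse,
    all with the same channel count."""
    p = lateral_1x1(c_feats[-1], lateral_weights[-1])        # start from the coarsest level
    outputs = [p]
    for feat, W in zip(reversed(c_feats[:-1]), reversed(lateral_weights[:-1])):
        p = upsample2x(p) + lateral_1x1(feat, W)             # top-down + lateral fusion
        outputs.append(p)
    return outputs[::-1]

rng = np.random.default_rng(0)
# Hypothetical backbone outputs resembling ResNet stages C3, C4, C5.
c_feats = [rng.standard_normal((64, 32, 32)),
           rng.standard_normal((128, 16, 16)),
           rng.standard_normal((256, 8, 8))]
lat = [rng.standard_normal((32, c)) * 0.1 for c in (64, 128, 256)]
outs = fpn_top_down(c_feats, lat)
print([o.shape for o in outs])   # every level now has 32 channels
```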

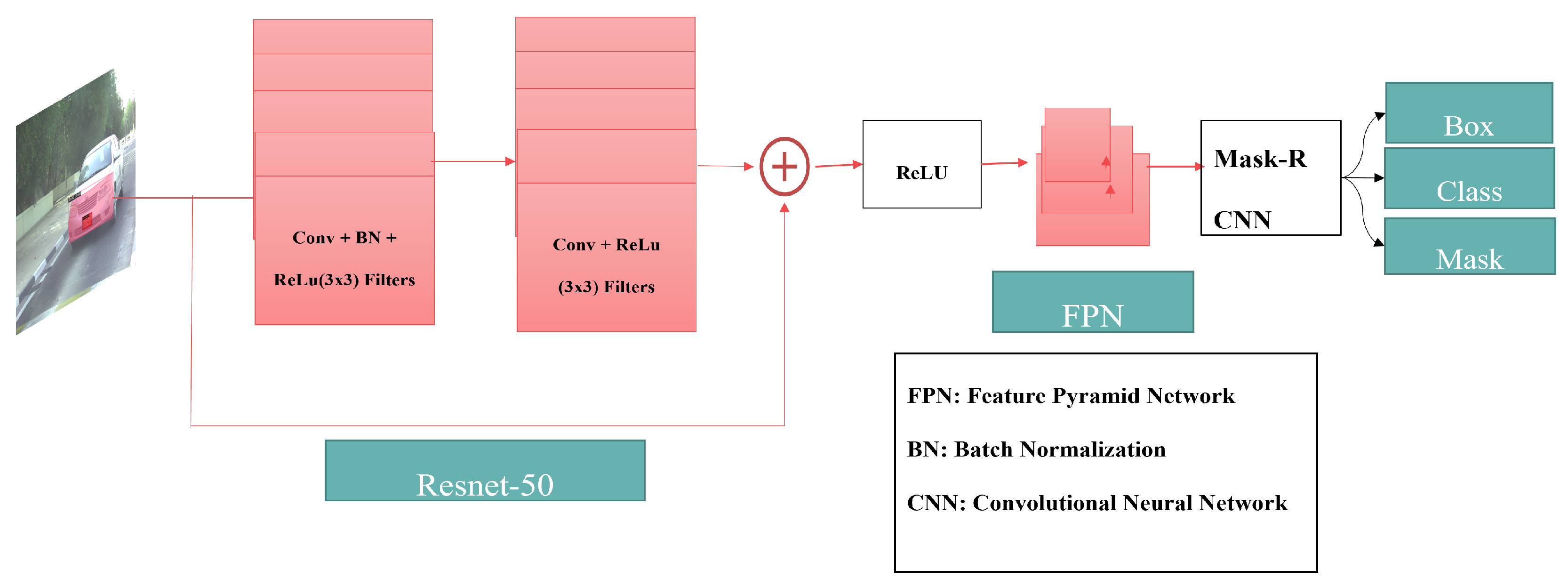

In this paper, we implement Mask R-CNN with a ResNet backbone and a feature pyramid network. We chose this network for its accuracy in object detection and segmentation. It is pretrained on several large datasets and has shown superior performance over other models. However, the model's complexity increases inference time, so we also measure the trade-off between accuracy and time to evaluate its suitability for real-time use.

Figure 3 depicts the architecture of Mask R-CNN with FPN used for instance segmentation.

Mask R-CNN is a region-based CNN that performs object detection and classification together with mask generation. Detection is performed on regions of interest (RoIs), and evaluation is based on these regions. A multi-task loss is defined on each sampled RoI as the sum of the classification loss, the bounding box (detection) loss, and the mask loss: $L = L_{cls} + L_{box} + L_{mask}$.
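The multi-task loss for one RoI can be sketched as follows. The sketch assumes cross-entropy for classification and smooth L1 for the box term, as in Fast R-CNN. The mask term is the average per-pixel binary cross-entropy on the mask predicted for the ground-truth class only. The function names are hypothetical, and real implementations (for example, how box deltas are encoded) differ in detail.

```python
import numpy as np

def cls_loss(class_probs, gt_class):
    # Cross-entropy on the softmax probability of the ground-truth class.
    return float(-np.log(class_probs[gt_class] + 1e-12))

def smooth_l1(pred, target, beta=1.0):
    # Quadratic for small errors, linear for large ones.
    d = np.abs(np.asarray(pred, float) - np.asarray(target, float))
    return float(np.sum(np.where(d < beta, 0.5 * d ** 2 / beta, d - 0.5 * beta)))

def mask_loss(mask_logits, gt_class, gt_mask):
    """Average per-pixel binary cross-entropy on the mask channel of the ground-truth class."""
    p = 1.0 / (1.0 + np.exp(-mask_logits[gt_class]))   # per-pixel sigmoid
    eps = 1e-12
    bce = -(gt_mask * np.log(p + eps) + (1 - gt_mask) * np.log(1 - p + eps))
    return float(bce.mean())

def roi_loss(class_probs, box_pred, mask_logits, gt_class, gt_box, gt_mask):
    # L = L_cls + L_box + L_mask for a single sampled RoI.
    return (cls_loss(class_probs, gt_class)
            + smooth_l1(box_pred, gt_box)
            + mask_loss(mask_logits, gt_class, gt_mask))

probs = np.array([0.25, 0.5, 0.25])                      # 3 hypothetical classes
mask_logits = np.zeros((3, 4, 4))                        # one 4x4 mask per class
gt_mask = (np.arange(16).reshape(4, 4) % 2).astype(float)
print(roi_loss(probs, [0, 0, 1, 1], mask_logits, 1, [0, 0, 1, 1], gt_mask))  # 2 ln 2 ≈ 1.386
```

Because only the ground-truth class's mask is penalized, the mask branch does not compete across classes. Class prediction is left to the classification branch.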

Complex hierarchical features are extracted from the images. Given this capacity, the models are susceptible to overfitting, especially on a small dataset, and regularization techniques are required to reduce it.

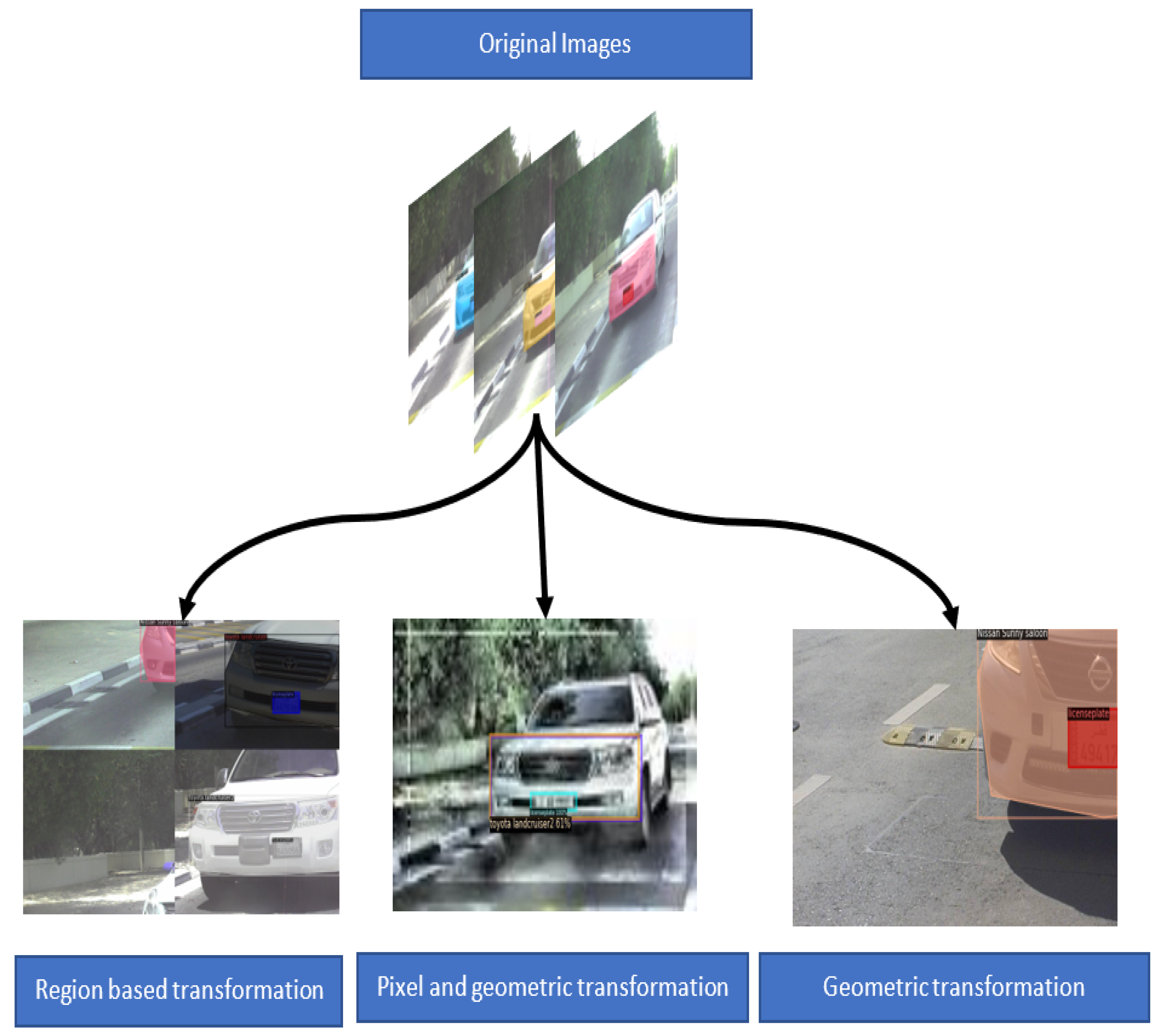

3.0.2. Data Augmentation

Augmentation techniques are often applied to reduce this overfitting. They include image transformations such as scaling, translation, rotation, and random flipping. Augmentation not only increases the data size but also diversifies its representation. Augmentation techniques can be divided into pixel-level, region-based, and geometric augmentation. Pixel-level augmentation changes pixel values: adjusting contrast, brightness, or color changes the pixel intensities of the image. Region-based augmentation masks or alters selected regions of the image, and motion blur and cutout are common techniques. Geometric transformations include flipping, reflection, rotation, and cropping. In this paper, we augment the data at different levels, including geometric and region-based transformations, as seen in Figure 4. This both enlarges the dataset and improves its diversity. One particular approach used in this model is the mosaic tiling method proposed in [20]. In this method, several training images (four in this case) from different contexts are stitched into one image as a mosaic. Random cropping is then applied to reduce the result to the original training image size.
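The sketch below illustrates the augmentation levels described above. It covers a geometric flip and a pixel-level brightness shift, each applied consistently to an image and its class-id mask, and a simplified mosaic. The mosaic tiles four equally sized images in a fixed 2x2 grid and then randomly crops back to the original size. This is an assumption-laden simplification, and the method in [20] may place and scale tiles differently. Polygon or box annotations would need the same shifts applied. All function names are hypothetical.

```python
import numpy as np

def hflip(image, mask):
    # Geometric augmentation: mirror image and mask together so the labels stay aligned.
    return image[:, ::-1].copy(), mask[:, ::-1].copy()

def adjust_brightness(image, delta):
    # Pixel-level augmentation: shift intensities and clip to the 8-bit range; mask unchanged.
    return np.clip(image.astype(np.int16) + delta, 0, 255).astype(np.uint8)

def mosaic(images, masks, rng):
    """Simplified mosaic tiling: place four equally sized images in a 2x2 grid,
    then randomly crop a window of the original size from the stitched canvas."""
    assert len(images) == 4 and len(masks) == 4
    h, w = images[0].shape[:2]
    canvas = np.concatenate([np.concatenate(images[:2], axis=1),
                             np.concatenate(images[2:], axis=1)], axis=0)
    mcanvas = np.concatenate([np.concatenate(masks[:2], axis=1),
                              np.concatenate(masks[2:], axis=1)], axis=0)
    # The same random offset for image and mask keeps pixel labels aligned.
    y0 = int(rng.integers(0, h + 1))
    x0 = int(rng.integers(0, w + 1))
    return canvas[y0:y0 + h, x0:x0 + w], mcanvas[y0:y0 + h, x0:x0 + w]

rng = np.random.default_rng(0)
# Toy 4x6 RGB images with constant intensities 0, 50, 100, 150 and matching class-id masks.
imgs = [np.full((4, 6, 3), 50 * i, dtype=np.uint8) for i in range(4)]
msks = [np.full((4, 6), i, dtype=np.uint8) for i in range(4)]
tile_img, tile_mask = mosaic(imgs, msks, rng)
print(tile_img.shape, tile_mask.shape)  # (4, 6, 3) (4, 6)
```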

Figure 5 is an illustration of mosaic tiled images of the dataset.

Thus, a baseline method is used for instance segmentation and then modified and evaluated with respect to data augmentation, different feature extractors, and deformable convolution. This identifies the effect of each change and leads to the optimal configuration for vehicle instance segmentation.

4. Experimental Setup

The experimental setup involves three stages. The vehicle images with their masks are fed as training data. The data is augmented separately in three formats, based on geometric and pixel-based augmentation. The transformed data is then used to train a Mask R-CNN-FPN network. Further experiments were performed on Mask R-CNN-FPN with deformable convolutional layers. ResNet-101 and ResNet-50 are used as feature extractor backbones to establish baselines on the dataset. The setup is shown in Figure 3. The experiments were performed on an Ubuntu machine with an Intel(R) Xeon(R) CPU @ 2.30GHz using a GPU instance.

4.1. Dataset:

An existing dataset was modified for instance segmentation by annotating polygonal regions of the frontal part of the vehicle. These regions capture not only the front of the vehicle but also its curvature. The dataset contains 12 vehicle makes captured under different camera exposures, ranging from extremely sunny weather to evening sunset. Because the dataset is imbalanced, augmentation was performed to increase the data count. In addition, the license plate is treated as a single class with a rectangular bounding box.

Figure 6 are samples of the vehicle with their annotations. A total of 225 images were split for training, testing and validation with the 157 images for training, 44 images for validation and 24 images for testing with a 70-20-10 ratio from the original format. This split is utilized to match the split of the reference paper in [

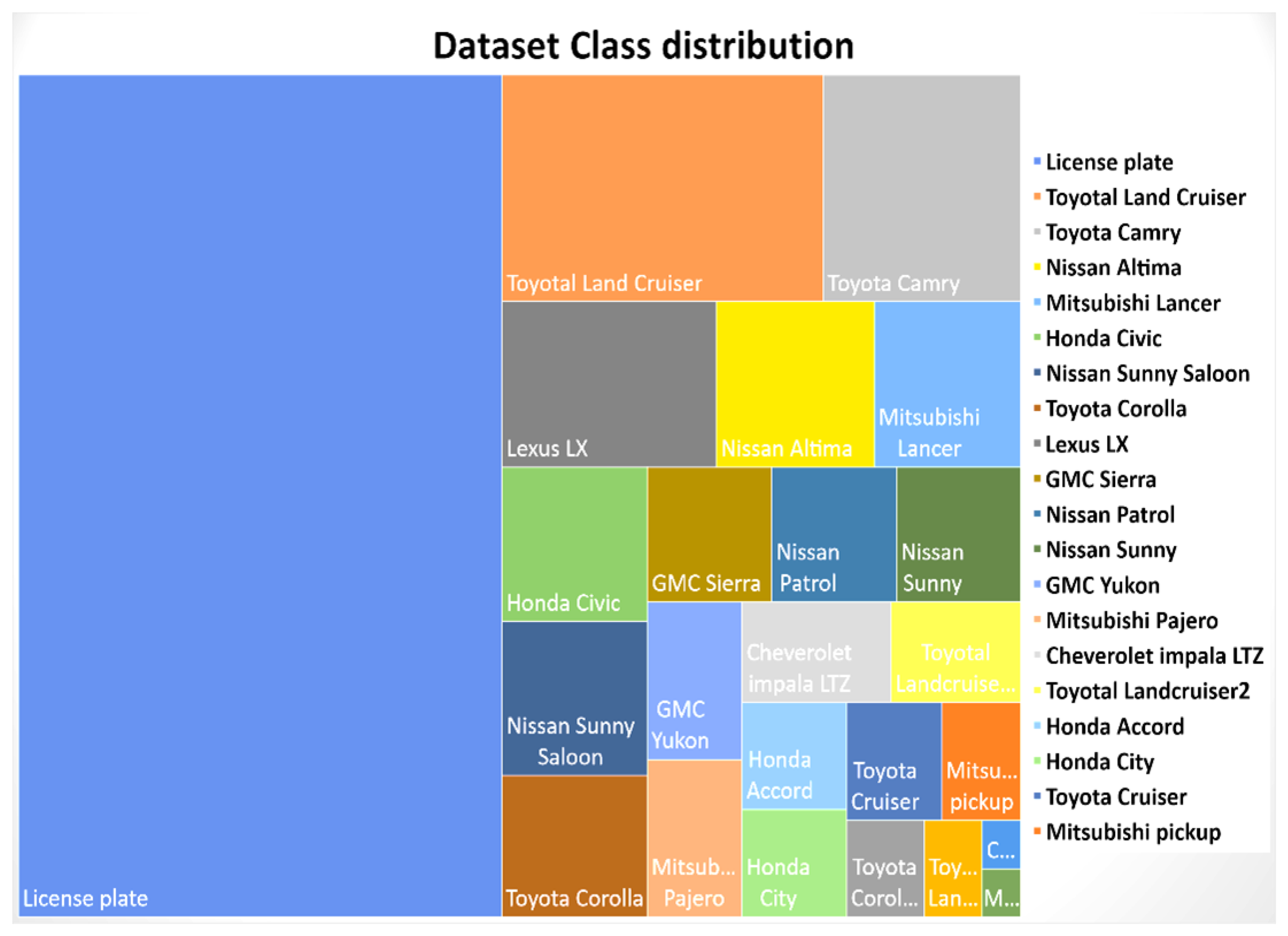

18]. The classes are very imbalanced and require further augmentation which is performed as per the methodology stated earlier. The image below displays class distribution of the dataset. This dataset contains vehicles that belong to the middle east region specifically Qatar.

The experiments were conducted by augmenting the dataset to mimic different camera orientations and noise conditions. Both the original and the partly augmented datasets were evaluated. The pixel- and geometric-based augmentation parameters include exposure, resizing with auto-orientation, noise, and rotation. The second type is patch-based augmentation, which is also a geometric augmentation. The third type is the mosaic tiling approach. The dataset with annotations is available at [36].

Figure 5 shows an example of the data augmentation performed on the dataset, and Figure 7 shows the class distribution across the whole dataset.

4.2. Performance Metrics:

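To calculate the average accuracy, precision and recall must be computed for each image from the counts of TP (true positives), FP (false positives), FN (false negatives), and TN (true negatives). Equations (3), (4), and (5) compute the accuracy, precision, and recall, respectively:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{3}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{4}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{5}$$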
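As a worked example, the function below computes accuracy, precision, and recall from confusion-matrix counts. The counts are hypothetical, and the zero-denominator guards are our addition.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, and recall from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0   # guard: no positive predictions
    recall = tp / (tp + fn) if (tp + fn) else 0.0      # guard: no positive ground truth
    return accuracy, precision, recall

# Hypothetical counts for one class on a test set.
acc, prec, rec = classification_metrics(tp=40, fp=10, fn=5, tn=45)
print(acc, prec, rec)  # accuracy 0.85, precision 0.8, recall about 0.889
```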

mAP (mean Average Precision): Average precision (AP) measures how well the model detects each class. Mean average precision (mAP) measures performance over all classes in the test dataset and serves as a measure of identification accuracy. AP is computed at each IoU (intersection over union) threshold from 0.50 to 0.95 with a step of 0.05, and the results are averaged over these thresholds. For different queries, the evaluation metrics are APS, APM, APL, AP50, AP75, and mAP. Subscripts “S,” “M,” and “L” refer to “small,” “medium,” and “large” objects, respectively, and subscripts “50” and “75” denote IoU thresholds of 0.5 and 0.75. The mAP is the mean of the per-class AP values and is reported for each experiment.
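The sketch below shows how IoU and threshold-averaged AP fit together. It is deliberately simplified. Each detection is assumed to be already paired with a ground-truth object and its IoU, and AP uses all-point interpolation rather than the COCO evaluator's 101-point interpolation and duplicate-matching rules. The reported numbers in this paper come from the COCO evaluation tooling, not from this code.

```python
import numpy as np

def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

def mask_iou(m1, m2):
    """IoU of two boolean masks (used for segmentation AP)."""
    union = np.logical_or(m1, m2).sum()
    return float(np.logical_and(m1, m2).sum() / union) if union else 0.0

def average_precision(scores, ious, num_gt, thr):
    """AP for one class at one IoU threshold (all-point interpolation, simplified matching)."""
    order = np.argsort(-np.asarray(scores, dtype=float))      # highest confidence first
    tp = (np.asarray(ious, dtype=float)[order] >= thr).astype(float)
    tp_cum, fp_cum = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)
    precision = np.maximum.accumulate(precision[::-1])[::-1]  # make precision non-increasing
    recall_prev = np.concatenate(([0.0], recall[:-1]))
    return float(np.sum((recall - recall_prev) * precision))

IOU_THRESHOLDS = np.linspace(0.50, 0.95, 10)  # 0.50, 0.55, ..., 0.95

def ap_over_thresholds(scores, ious, num_gt):
    """Mean AP over IoU thresholds 0.50:0.05:0.95 (COCO-style averaging)."""
    return float(np.mean([average_precision(scores, ious, num_gt, t) for t in IOU_THRESHOLDS]))

# Hypothetical detections for one class: confidences, IoU with matched ground truth, 2 GT objects.
scores, ious = [0.9, 0.8, 0.7], [0.92, 0.62, 0.3]
print(average_precision(scores, ious, 2, 0.5), ap_over_thresholds(scores, ious, 2))
```

At IoU 0.5 both ground-truth objects are found before the false positive, so AP50 is perfect. Averaging over the stricter thresholds lowers the score, which is why the 0.50:0.95 average is the more demanding metric.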

Inference time:

The inference time is the time taken to classify and generate masks for a single input. In this application, that input is a single frame of a video.
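A minimal sketch of how per-frame inference time can be measured. It runs a few warm-up calls, then takes the median wall-clock latency over repeated runs. The `dummy_model` below is a hypothetical stand-in for a trained predictor. On a GPU, execution is asynchronous, so the device should be synchronized before each timer read (for example, `torch.cuda.synchronize()` in PyTorch). This sketch does not do that.

```python
import statistics
import time

def time_inference(model, frame, warmup=3, runs=20):
    """Median wall-clock latency in milliseconds of model(frame), after warm-up calls."""
    for _ in range(warmup):
        model(frame)                      # exclude one-off costs such as lazy initialization
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        model(frame)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)

def dummy_model(frame):
    # Hypothetical stand-in for a trained Mask R-CNN predictor.
    return sum(frame)

print(f"{time_inference(dummy_model, list(range(10000))):.3f} ms per frame")
```

The median is less sensitive than the mean to occasional slow runs caused by other processes.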

5. Results and Discussion

Several experiments were conducted with different augmentation methods on the dataset. A ResNet-50 backbone was used for the Mask R-CNN with deformable receptive fields. The experiments used a batch size of 2, ran for 1000 iterations, and used a ResNet backbone pretrained on the COCO dataset. Evaluation was performed using the COCO trainer module. The results without segmentation are listed in Table 1. An ablation study of different backbones and feature extractors, using the original dataset size, resolution, and clarity, is tabulated in Table 3.

For a varied analysis, different baselines were experimented on for the purpose of evaluation and identifying the trade-off in the reliability and accuracy of an instance segmentation approach for the purpose of vehicle recognition. Mask RCNN was used as baseline with a Resnet-50 backbone with Feature pyramid network

Further modelled with a Resnet-101 backbone with Feature Pyramid Network. The original dataset was augmented in multiple methods to improve the dataset description. The results of the experimentation with original dataset is displayed in

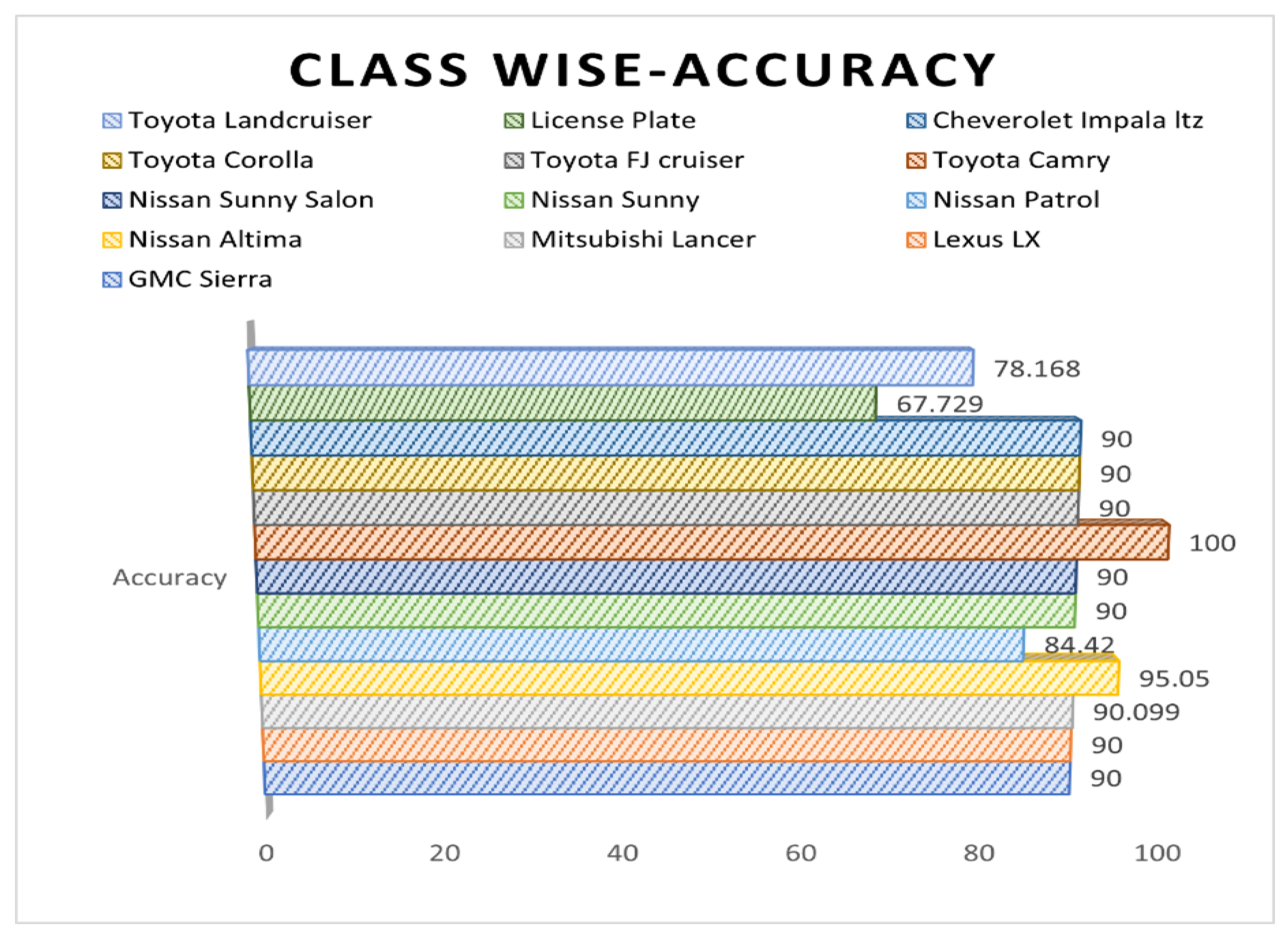

Table 1. The table describes the classification accuracy of mask RCNN with that of instance segmentation accuracy with the mean average precision metric. The execution time for inference of a single image from the test set is also presented. The resent-50 back bone without FPN with base RCNN produces a high mAP of 99.670. Although Resnet-50 backbone with FPN is hypothesized to produce higher accuracy, it lags 1% but produces faster inference with 174ms faster than base-RCNN. With further experimentation on the CNN module with a deformed convolutional operation the accuracy dropped to 90% which is significantly lesser than expected. This could be due to the added complexity and generalization of the network. It can be noted that the models are inferred on a test set with imbalanced data and thus not reliable for certain classes. With class wise precision, it can be noted that the largest class, the license plate has the poorest accuracy, license plate covers a smaller area and is similar in semantic to rectangular shapes which can be a reason for the poor performance. Class wise performance is depicted in

Figure 8.

The test data over-represents some classes and under-represents others, so it had to be balanced for reliable results. Multiple augmentation techniques are therefore applied to improve data representation, using three types of augmentation. Table 3 describes the approaches used and their results. A large network and a smaller network were tested to evaluate how augmentation affects data size and model accuracy. The table reports the results of each augmentation type on the baseline models. It shows that mosaic augmentation performs considerably better than the other augmentation types. However, mosaic augmentation does not surpass the results on the original images at the same resolution. Patch-based augmentation yields much lower accuracy than expected, even though the number of images increases. This could be because many patches contain no labeled class, as each class appears only once in each original image.

In the per-class evaluation, each class performed well on every model, averaging around 80%. However, license plate detection was a challenge for many models, and the highest license plate mAP across all networks was 62.813. The number of images did not affect the performance of this class. This may be attributed to the small size of the license plate and to its location in the image for models such as Lexus. Figure 8 shows the per-class results of Mask R-CNN with a ResNet-50 backbone, with Toyota Corolla achieving the highest accuracy among the classes.

5.0.1. Benchmarking:

Table 4 benchmarks the classification accuracy on the existing dataset against existing literature. It shows a substantial increase in accuracy compared with traditional methods using SIFT and DoG. The change in model complexity and technique accounts for this difference, because distinctive features are extracted globally rather than from fixed local feature points. On the same dataset, recognition accuracy on the test data increases considerably. Although the model outperforms the others, the figure above shows that classes with few images were not part of the test data, indicating an imbalance.

6. Conclusion

Instance segmentation of the vehicle's frontal region is an effective tool for vehicle classification and identification. Existing techniques require multiple steps to detect the vehicle, segment it, and then identify the make and model. These steps use multiple algorithms or a separately trained network for each task. In this approach, all tasks are achieved with one model. Time complexity was measured, and the fastest configuration was Mask R-CNN with ResNet-50 and a feature pyramid network, at 136 ms. An overall evaluation is presented using an enhanced dataset annotated for instance segmentation and further data augmentation. For further improvement, new and recent vehicle models need to be added to the data, and the dataset imbalance needs to be reduced. A lightweight model such as CenterMask [37], an anchor-free approach that could further improve inference time, also remains to be evaluated. The instances produced by this model can also be used for re-identification, since a unique instance is created for each vehicle. Privacy could be further improved by processing in a blockchain network rather than centralized storage, since only each instance of the vehicle's frontal part needs to be saved rather than the whole image. This supports the privacy and reliability of an automatic vehicle recognition system.

Author Contributions

Conceptualization, Najmath Ottakath and Somaya Al Maadeed; methodology, Najmath Ottakath; software, Najmath Ottakath; validation, Najmath Ottakath and Somaya Al Maadeed; data curation, Najmath Ottakath; writing–original draft preparation, Najmath Ottakath; writing–review and editing, Najmath Ottakath and Somaya Al Maadeed; visualization, Najmath Ottakath; supervision, Somaya Al Maadeed; project administration, Somaya Al Maadeed; funding acquisition, Somaya Al Maadeed.

Funding

This research work was made possible by research grant support (QUHI-CENG-22/23-548) from Qatar University Research Fund in Qatar.

Institutional Review Board Statement

Not Applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| CNN | Convolutional Neural Network |
| D-CONV | Deformable Convolution |
| TL | Transfer Learning |
| RESNET | Residual Network |
| FPN | Feature Pyramid Network |

References

- Elharrouss, O.; Al-Maadeed, S.; Subramanian, N.; Ottakath, N.; Almaadeed, N.; Himeur, Y. Panoptic segmentation: a review. arXiv 2021, arXiv:2111.10250. [Google Scholar]

- Himeur, Y.; Al-Maadeed, S.; Kheddar, H.; Al-Maadeed, N.; Abualsaud, K.; Mohamed, A.; Khattab, T. Video surveillance using deep transfer learning and deep domain adaptation: Towards better generalization. Engineering Applications of Artificial Intelligence 2023, 119, 105698. [Google Scholar] [CrossRef]

- Elharrouss, O.; Almaadeed, N.; Al-Maadeed, S. A review of video surveillance systems. Journal of Visual Communication and Image Representation 2021, 77, 103116. [Google Scholar] [CrossRef]

- Akbari, Y.; Almaadeed, N.; Al-Maadeed, S.; Elharrouss, O. Applications, databases and open computer vision research from drone videos and images: a survey. Artificial Intelligence Review 2021, 54(5), 3887–3938.

- McCann, J.; Quinn, L.; McGrath, S.; Flanagan, C. Video Surveillance Architecture at the Edge (No. 9362). EasyChair 2022.

- Alshaikhli, M.; Elharrouss, O.; Al-Maadeed, S.; Bouridane, A. Face-Fake-Net: The Deep Learning Method for Image Face Anti-Spoofing Detection: Paper ID 45. 2021 9th European Workshop on Visual Information Processing (EUVIP); IEEE, 2021; pp. 1–6.

- Elharrouss, O.; Almaadeed, N.; Al-Maadeed, S.; Bouridane, A.; Beghdadi, A. A combined multiple action recognition and summarization for surveillance video sequences. Applied Intelligence 2021, 51(2), 690–712.

- Elharrouss, O.; Almaadeed, N.; Al-Maadeed, S.; Bouridane, A. Gait recognition for person re-identification. The Journal of Supercomputing 2021, 77(4), 3653–3672.

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: analysis, applications, and prospects. IEEE Transactions on Neural Networks and Learning Systems 2021.

- Akbari, Y.; Britto, A.S.; Al-Maadeed, S.; Oliveira, L.S. Binarization of degraded document images using convolutional neural networks based on predicted two-channel images. 2019 International Conference on Document Analysis and Recognition (ICDAR); 2019; pp. 973–978.

- Akbari, Y.; Al-Maadeed, S.; Adam, K. Binarization of degraded document images using convolutional neural networks and wavelet-based multichannel images. IEEE Access 2020, 8, 153517–153534.

- Mohanapriya, S. Instance segmentation for autonomous vehicle. Turkish Journal of Computer and Mathematics Education (TURCOMAT) 2021, 12(9), 565–570.

- Ottakath, N.; Al-Ali, A.; Al Maadeed, S. Vehicle identification using optimised ALPR. 2021.

- Tian, B.; Morris, B. T.; Tang, M.; Liu, Y.; Yao, Y.; Gou, C.; Tang, S. Hierarchical and networked vehicle surveillance in ITS: a survey. IEEE Transactions on Intelligent Transportation Systems 2014, 16(2), 557–580.

- Elharrouss, O.; Akbari, Y.; Almaadeed, N.; Al-Maadeed, S. Backbones-review: Feature extraction networks for deep learning and deep reinforcement learning approaches. arXiv 2022, arXiv:2206.08016.

- Lu, W.; Zhang, H.; Lan, K.; Guo, J. Detection of vehicle manufacture logos using contextual information. In Asian Conference on Computer Vision; Springer: Berlin, Heidelberg, 2009; pp. 546–555.

- Ali, M.; Tahir, M. A.; Durrani, M. N. Vehicle images dataset for make and model recognition. Data in Brief 2022, 42.

- Saadouli, G.; Elburdani, M. I.; Al-Qatouni, R. M.; Kunhoth, S.; Al-Maadeed, S. Automatic and Secure Electronic Gate System Using Fusion of License Plate, Car Make Recognition and Face Detection. 2020 IEEE International Conference on Informatics, IoT, and Enabling Technologies (ICIoT); 2020; pp. 79–84.

- Das, J.; Shah, M.; Mary, L. Bag of feature approach for vehicle classification in heterogeneous traffic. 2017 IEEE International Conference on Signal Processing, Informatics, Communication and Energy Systems (SPICES); 2017; pp. 1–5.

- Bochkovskiy, A.; Wang, C. Y.; Liao, H. Y. M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Yang, L.; Luo, P.; Change Loy, C.; Tang, X. A large-scale car dataset for fine-grained categorization and verification. In Proceedings of the IEEE conference on computer vision and pattern recognition; 2015; pp. 3973–3981.

- Pearce, G.; Pears, N. Automatic make and model recognition from frontal images of cars. 2011 8th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS); IEEE, 2011; pp. 373–378.

- Gao, Y.; Lee, H. J. Local tiled deep networks for recognition of vehicle make and model. Sensors 2016, 16(2), 226.

- Lee, H. J.; Ullah, I.; Wan, W.; Gao, Y.; Fang, Z. Real-time vehicle make and model recognition with the residual SqueezeNet architecture. Sensors 2019, 19(5), 982.

- Wu, M.; Zhang, Y.; Zhang, T.; Zhang, W. Background segmentation for vehicle re-identification. In International Conference on Multimedia Modeling; Springer: Cham, 2020; pp. 88–99.

- Lu, L.; Wang, P.; Huang, H. A Large-Scale Frontal Vehicle Image Dataset for Fine-Grained Vehicle Categorization. IEEE Transactions on Intelligent Transportation Systems 2022, 23(3), 1818–1828.

- Iandola, F. N.; Han, S.; Moskewicz, M. W.; Ashraf, K.; Dally, W. J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Zahangir Alom, M.; Taha, T. M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Shamima Nasrin, M.; Asari, V. K. The history began from AlexNet: a comprehensive survey on deep learning approaches. arXiv 2018, arXiv:1803.

- LeCun, Y. LeNet-5, convolutional neural networks. 2015, 20, p. 14. Available online: http://yann.lecun.com/exdb/lenet.

- Ballester, P.; Araujo, R. M. On the performance of GoogLeNet and AlexNet applied to sketches. Thirtieth AAAI conference on artificial intelligence; 2016.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition; 2016; pp. 770–778.

- Karlik, B.; Olgac, A. V. Performance analysis of various activation functions in generalized MLP architectures of neural networks. International Journal of Artificial Intelligence and Expert Systems 2011, 1(4), 111–122.

- He, H.; Shao, Z.; Tan, J. Recognition of Car Makes and Models From a Single Traffic-Camera Image. IEEE Transactions on Intelligent Transportation Systems 2015, 16(6), 3182–3192.

- Bhatti, H. M. A.; Li, J.; Siddeeq, S.; Rehman, A.; Manzoor, A. Multi-detection and Segmentation of Breast Lesions Based on Mask RCNN-FPN. 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM); 2020; pp. 2698–2704.

- Lin, T. Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition; 2017; pp. 2117–2125.

- Available online: https://drive.google.com/drive/folders/1zqR1s9YiTxAfjfF213WbiH3Xc-SHPIPs?usp=sharing.

- Lee, Y.; Park, J. Centermask: Real-time anchor-free instance segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition; 2020; pp. 13906–13915.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).