1. Introduction

With the rise of the digital age in the 21st century, the use of online platforms (e.g. social media) has grown exponentially alongside the Internet. Online platforms have enabled people to share information and express themselves freely. However, these networks are also used to support sexually abusive behaviours, such as grooming, sexual exploitation, indecent exposure, forced intercourse, sexual torture and the coordination of sex trafficking [1]. Online sexual abuse is a global issue, but techniques for detecting and categorising it are still limited in their availability and application [2]. The production and consumption of child sexual abuse material (CSAM) has many lasting negative effects on victims and impacts society as a whole. Victims of child sexual abuse (CSA) can live with both short- and long-term physical and psychological effects, such as anxiety, depression, self-harm, suicide, sexually transmitted infections and/or pregnancy.

Abusers can create, consume or distribute CSAM in the form of text, images or video. The number of unreported sexual abuse incidents is typically far higher than the number reported; U.S. figures indicate that 69% of incidents go unreported versus 31% reported¹. Victims are often afraid to tell anyone, and the legal procedure for validating an incident is complex and traumatising for the victim. Detecting sexual predators and CSAM is critical not only to protect child victims from being abused online but also to break the cycle of abuse that is repeated each time CSAM is shared or viewed. Our aim in this paper is to provide an up-to-date analysis of research in the domain of online CSAM investigation and detection.

Related work: Similar to our paper, there are eight survey papers in the domain of CSAM: Ali et al. [3], Christensen et al. [4], Lee et al. [1], Russell et al. [5], Sanchez et al. [2], Slavin et al. [6], Steel et al. [7] and Steel et al. [8]. In [3], the authors reviewed 42 papers to study the Internet's role in the development of CSA for commercial and non-commercial purposes. In [4], six law enforcement strategies for combating CSAM were selected, and the paper explained how each strategy works and how successful it has been. In [1], 21 CSAM research papers were reviewed with respect to policy and legal frameworks, distribution channels and applied technologies. The distribution channels covered include P2P networks, the darknet, web search engines and websites, mobile devices and social media; the applied technologies comprise image hash databases, web crawlers, visual detection algorithms and machine learning. In [5], 8 research papers were reviewed to identify the nature of CSA prevention strategies and interventions in developing countries; the paper also analysed the typical settings and population groups targeted by the intervention strategies. In [2], a survey of practitioners was used to analyse the functions, accuracy, importance and effectiveness of the tools used by child exploitation investigators. In [6], 21 papers were reviewed to identify potential risk factors and clinical correlates of compulsive sexual behaviour that may help to prevent abuse and treat victims more effectively. In [7], 20 papers were reviewed to examine the cognitive distortions associated with sexual touching among CSAM offenders. In [8], 33 papers were reviewed to identify the technology used by CSAM offenders.

Our contributions: the review papers above focus almost exclusively on papers published before 2019. Our review covers 43 papers, the vast majority published between 2018 and 2022. Our focus also differs from that of the previous reviews: this paper provides a comprehensive survey of online CSAM investigation and detection from both information technology and social science perspectives, analysing the datasets used, the tasks addressed and the statistical and AI techniques applied in the state-of-the-art CSAM literature. To the best of our knowledge, this is the first survey to focus exclusively on online CSA detection and prevention with no geographic boundaries, and the first to review papers published after 2018. As a scoping review, it identifies the literature on online CSAM and provides the first assessment of the size of that literature since 2018. Researchers can use it to identify gaps in knowledge and publicly available datasets that may be useful for their work.

The rest of this paper is organised as follows. Section 2 details the methodology used to select papers from the literature. Section 3 presents and analyses current CSAM research under the following headings: the tasks addressed, the datasets used and the analysis/modelling techniques applied. Section 4 discusses the main findings of the survey. Finally, Section 5 presents conclusions and future work.

2. Method

We focused on reviewing research papers in the area of online CSAM. Our methodology for identifying relevant papers is based on the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA)², as used in previous review papers such as [1,6,7,8]. The following criteria were used:

- Published by Springer, Elsevier, IEEE or ACM, whose respective libraries are Springer Nature³, ScienceDirect⁴, IEEE Xplore⁵ and ACM DL⁶.

- Published from 2018 to 2022.

- Written in English, without restriction on geographical area or dataset language.

- The title, keywords or abstract of each paper contains the terms (Child OR Children) AND (Sex OR Sexual) AND (Abuse OR Exploitation OR Material OR CSAM OR CSEM). This Boolean query was adapted to the syntax required by each library.
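As a rough illustration of the screening rule above, the following Python sketch evaluates the Boolean query against a paper's title, keywords and abstract. It is a minimal sketch under stated assumptions: it treats the three fields as one text, matches whole words case-insensitively, and ignores the stemming, phrase and field-specific rules that each publisher library applies, so it will not reproduce the libraries' actual result counts. The function name `matches_query` and the example titles are hypothetical.

```python
import re

# Each set is an OR-group; the query is the AND of all groups.
# Assumption: whole-word, case-insensitive matching over the combined
# title, keywords and abstract (real libraries apply their own rules).
QUERY_GROUPS = [
    {"child", "children"},
    {"sex", "sexual"},
    {"abuse", "exploitation", "material", "csam", "csem"},
]


def matches_query(title: str, keywords: str, abstract: str) -> bool:
    """Return True if every OR-group has at least one term in the text."""
    text = " ".join((title, keywords, abstract)).lower()
    words = set(re.findall(r"[a-z]+", text))
    return all(group & words for group in QUERY_GROUPS)


# Hypothetical records: (title, keywords, abstract)
records = [
    ("Protecting children from sexual exploitation online", "", ""),
    ("Abusive language detection in social media comments", "", ""),
]
print([matches_query(*r) for r in records])  # [True, False]
```

Using sets for each OR-group keeps the check to a simple intersection test per group.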

As shown in Table 1, the search criteria above returned 2,761 papers through the advanced search functions of the publisher libraries. After reviewing the titles and abstracts, we identified 119 relevant papers. We then reviewed the full text of each of these carefully and selected the 36 papers most relevant to the purpose of this survey. To avoid missing other relevant papers, we also searched Google Scholar⁷ without limiting the publisher or publication year. This search yielded an additional 7 relevant papers, giving a total of 43: 35 research papers and 8 review papers in the domain of CSAM investigation and detection. The 8 review papers were presented and compared as related work in Section 1. The 35 research papers are analysed, classified and discussed in detail in the following sections.

3. Classification and Analysis

Table 2 classifies current research in terms of the datasets used, the CSAM tasks addressed and the techniques applied. Each paper is listed under its publisher's name together with its year of publication. Datasets are categorised by accessibility and origin. CSAM tasks are categorised by the type of CSAM media involved: text, image or video. Finally, applied techniques are categorised as statistical, natural language processing (NLP) or machine learning (ML)/deep learning (DL). Additional categories are highlighted in the rare cases where papers do not fit the main ones.

3.1. CSAM Tasks Addressed

CSAM-related activities manifest as text-based communications, images and videos.

3.1.1. Working on Text

Current social networks are regularly misused to post or spread CSA messages, comments and chat conversations. Accordingly, 24 research papers [9,10,11,12,13,14,15,16,17,18,19,24,25,27,28,29,30,31,32,37,38,40,41,43] proposed methods to detect, prevent and/or process CSAM in text format.

Research works in this category involve two expert domains: computer science and the social sciences. Sociologists studied: (1) CSAM offenders [11,17]; (2) the effect of perceived informal social control on physical CSA [12]; (3) CSA prevention involving parents [14,19]; (4) the association of CSA with culture, race/ethnicity, psychological health and/or risk behaviours [13,15,18]; (5) ‘slut pages’ as a social form of CSA images [16]; (6) the correlation between Greek Hotline reports and dark web forum logs [24]; (7) how to support victims and identify places unsafe for children [37]; and (8) dark web operations [43].

To provide a safe environment for children on online networks, computer scientists applied artificial intelligence algorithms to: (1) detect abusive comments, chat conversations and/or messages [9,10,31,38,41]; (2) analyse responses and posts on Twitter and Facebook in India [27]; (3) process chat logs to discover the behaviours of potential predators [25], or to identify predatory conversations and sexual predators [28,29,30]; (4) analyse complex adult service websites to determine the human trafficking organisations operating them [32]; and (5) investigate CSAM collected on the Tor dark net⁸ [40].

3.1.2. Working on Images

In 2021, 29.3 million cases of CSAM were detected online and removed⁹. The sheer volume and distribution of CSA images is such that human experts can no longer inspect them manually. Seven papers [20,21,22,23,26,35,36] processed CSA images. In [20], the authors proposed how to detect physical signs on victims' bodies that can help general practitioners or hospital workers detect and investigate CSA. The works in [21,22,26,35,36] detected CSA images with the goal of removing them from social media sites; a CSA image is also often considered evidence of a crime in progress. In [23], to help professionals identify victims of CSA when there is no forensic evidence, the authors detected CSA from self-figure drawings.

3.1.3. Working on Videos or Sensor Data

Three papers [34,39,42] focused on processing video, while a fourth [33] used sensor data to detect child abuse activities. In [34,42], the authors proposed methods to detect and prevent the distribution of CSA images and videos on online sharing platforms. In [39], Hole et al. developed a software prototype that used both faces and voices to match victims and offenders across CSA videos. The sensor-based work [33] designed and implemented a system that used a smartwatch and two pressure sensors, one on a belt and one on underclothes, to detect sexual abuse behaviours. The smartwatch also contains sensors that measure body temperature, heart rate and skin conductance.

3.2. Datasets Used

The datasets supporting the research fall into two groups: data gathered directly by the research group (self-built) and third-party datasets.

3.2.1. Self-Built

Twenty-one research works [10,11,12,14,15,16,20,21,23,24,27,31,32,33,34,37,39,40,41,42,43] created their own datasets to evaluate and discuss their systems. For example, Quayle [17] collected CSA text from social networks and media sources, namely Facebook, BBC News, Skype, WhatsApp and Instagram. Westlake and Bouchard [42] created a dataset of over 4.8 million web pages crawled from 10 networks of 300 websites each. In [43], the authors used descriptions of 53 anonymous CSAM suspects in the United Kingdom who were active on the dark web and had come to the attention of the police. The amount of data available per suspect varied, ranging from 1 to 12 transcripts and from 1 to 411 pages.

With the exception of [21], none of the self-built group have published their datasets. In [21], the authors released the Pornography-2M and Juvenile-80k datasets¹⁰, which contain 2 million pornography images and 80 thousand age-group images, respectively.

3.2.2. Third-Party

The 14 papers [9,13,17,18,19,22,25,26,28,29,30,35,36,38] used third-party datasets to evaluate and analyse their methods. Of these, 10 papers used open datasets, namely [9,13,18,19,25,28,29,30,35,38].

In [9], the dataset was collected from YouTube; the raw comments were cleaned and manually labelled as abusive or non-abusive¹¹. In [13], Graham et al. used the 2012 National Child Abuse and Neglect Data System Child File data¹². In [18], the dataset is from the National Health and Social Life Survey¹³, which included more than 3,000 men and women aged 18–59. In [19], Shaw et al. used data from the National Health and Social Life Survey¹⁴ with a sample size of over 1,500 U.S. participants.

In [25,28,29,30], the authors used the PAN-2012 dataset [44], which contains a total of 222,055 conversations with nearly 3 million online messages from sexual predators and non-malicious users. The conversations contain short-text abbreviations, emoticons, slang, digits, symbols and character repetitions. They were collected from various sources, including regular conversations without any sexual content and sexual conversations between consenting adults from Omegle¹⁵. In [35], the authors created a dataset of 2,138 CSAM, adult pornography and sensitive images drawn from a benchmark child pornography dataset built by [45]. Their data includes 836 CSA images and 285 adult pornography images annotated with body parts (e.g. head, breast and buttocks) and demographic attributes (e.g. age, gender and ethnicity). In [38], the authors used a dataset published by the National Software Reference Library¹⁶ that contains more than 32 million file names.

3.3. Applied Methods

3.3.1. Statistical

Statistical analysis is a common approach for discerning patterns and trends in large datasets, enabling researchers to extract meaningful information from large amounts of raw, unstructured data. The 14 papers [12,13,15,16,17,18,19,24,33,34,37,40,42,43] used statistical methods to analyse their datasets.

In [12], each participant (100 fathers from Seoul and 102 parents from Novosibirsk) completed a questionnaire, and random effects regression models were used to examine the relationship between child abuse and informal social control. In [13], generalized linear mixed models were used to analyse CSA reports from the child welfare system. In [15], the authors used bivariate statistics and stepwise multiple logistic regression models.
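[15] reports using bivariate statistics alongside logistic regression. One common bivariate test for two categorical variables is Pearson's chi-square test of independence; the sketch below computes its statistic in plain Python. It is an illustration only: the counts are hypothetical, the function name `chi_square_2x2` is ours, and we do not know which bivariate tests [15] actually applied.

```python
def chi_square_2x2(table):
    """Pearson chi-square statistic for a 2x2 contingency table.

    `table` is [[a, b], [c, d]] of observed counts; no continuity
    (Yates) correction is applied.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat


# Hypothetical counts: rows = factor present/absent, columns = outcome yes/no.
observed = [[10, 20], [30, 40]]
stat = chi_square_2x2(observed)
CRITICAL_1DF_005 = 3.841  # chi-square critical value, 1 df, alpha = 0.05
print(round(stat, 3), stat > CRITICAL_1DF_005)  # 0.794 False
```

Here the statistic falls below the critical value, so these hypothetical counts would not show a significant association; in practice a statistics package would also report the p-value.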

Research works [16,17,18,19] used SPSS to analyse their datasets. Paper [16] used features covering age, gender, pornography use, social media use and team sport participation. Paper [17] used features including the scale of the online CSA problem, cybercrime and the avoidance of digital technology. In [18,19], the authors used features such as being unhealthy in the last year, being unhappy in the last year, same-sex age groups, emotional problems interfering with sex, specific sexual problems and/or having ever forced a woman sexually.

In [24], the illegal online content reports of the Greek Hotline and the ATLAS dark web dataset of Web-IQ were analysed to discover the relationship between the open web and the dark web; for example, the authors found that more than 50% of the Hotline reports were discussed on the dark web. In [33], the system applied a simple algorithm based on thresholds on the values returned by the worn sensors. The authors report that the system could detect and prevent most acts of sexual abuse or assault against children by calling the parents, taking a picture or sounding an alarm. In [34], the authors studied, measured and analysed CSAM distribution and the exponential increase in CSAM reports. In [37], data from an online survey and semi-structured interviews were analysed, revealing among other things: (1) the locations and types of abuse incidents in Bangladesh; (2) the relationship between abusers and victims; and (3) the challenges in combating CSA.
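The threshold-based detection described for [33] can be sketched as follows. This is a minimal sketch, not the authors' system: the sensor names, units and threshold values, and the rule that at least two sensors must fire before alerting, are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str
    value: float


# Hypothetical sensor names and limits; the values used in [33] are not given here.
THRESHOLDS = {
    "belt_pressure": 50.0,
    "underclothes_pressure": 50.0,
    "heart_rate_bpm": 140.0,
    "skin_conductance_us": 10.0,
}


def triggered_sensors(readings, thresholds=THRESHOLDS):
    """Names of sensors whose current value exceeds their threshold."""
    return [r.sensor for r in readings
            if r.sensor in thresholds and r.value > thresholds[r.sensor]]


def should_alert(readings, min_triggers=2):
    """Alert only when at least `min_triggers` sensors fire at once
    (a hypothetical rule to limit false alarms from a single sensor)."""
    return len(triggered_sensors(readings)) >= min_triggers


readings = [
    Reading("belt_pressure", 72.0),
    Reading("underclothes_pressure", 10.0),
    Reading("heart_rate_bpm", 150.0),
]
print(triggered_sensors(readings), should_alert(readings))
# ['belt_pressure', 'heart_rate_bpm'] True
```

In a deployed system, a positive result would trigger the responses described for [33], such as notifying the parents; that wiring is omitted here.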

Applying simple statistical methods, Owen and Savage [40] analysed the type and popularity of content, Woodhams et al. [43] analysed the characteristics and behaviours of anonymous users of dark web platforms, and Westlake and Bouchard [42] studied the hyperlinks of websites distributing CSAM.

3.3.2. Natural Language Processing

NLP techniques can be used to extract features and content from CSAM, e.g. abusive chats and grooming conversations. Two papers [22,27] applied NLP to detect CSAM. In [22], the authors analysed patterns in the locations and folder/file naming practices of CSA websites in order to detect non-hashed CSA images. The motivation is that, as the volume of CSA images being created and distributed grows, an increasing percentage of images are not classified in the hash value databases used for automatic detection. In [27], a list of keywords about emotions and sentiments expressed on social media was built, and the authors then analysed the tweets and Facebook pages of selected individuals in India based on this keyword list.
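Keyword-list analysis of the kind used in [27] amounts to counting how often terms from each category of a lexicon appear in a post. The Python sketch below shows that idea under stated assumptions: the emotion categories and words in `LEXICON` are hypothetical placeholders, not the keyword list built in [27], and tokenisation is a simple lowercase regular expression.

```python
import re
from collections import Counter

# Hypothetical emotion lexicon; the keyword list built in [27] is not
# reproduced in this survey.
LEXICON = {
    "fear": {"afraid", "scared", "frightened"},
    "sadness": {"sad", "alone", "hopeless"},
}


def emotion_counts(post: str) -> Counter:
    """Count how many tokens of a post fall into each lexicon category."""
    tokens = re.findall(r"[a-z']+", post.lower())
    counts = Counter()
    for token in tokens:
        for emotion, words in LEXICON.items():
            if token in words:
                counts[emotion] += 1
    return counts


print(emotion_counts("I feel so alone and scared, really scared"))
# Counter({'fear': 2, 'sadness': 1})
```

Such per-category counts can then be aggregated per account or compared against a threshold; how [27] combined them is not specified here.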

3.3.3. Machine Learning (ML) & Deep Learning (DL)

ML/DL techniques are often used to classify or cluster CSA text or images. The 14 papers [9,10,21,23,25,26,28,30,31,35,36,38,39,41] used or proposed ML/DL techniques for CSAM.

Some papers [21,23,26,35] applied deep convolutional neural networks (CNNs) to detect CSA images. In [21], a visual attention mechanism, age-group scoring and pornographic image classification were exploited. In [26], data-driven concepts and characterisation aspects of images were used. In [23], the authors detected CSA from self-figure drawings. In [35], the model combined object categories, pornography detection, age estimation and image metrics (i.e. luminance and sharpness).

The work of [9] used DL models, namely CNN, Long Short-Term Memory (LSTM), Bidirectional LSTM (BLSTM) and Contextual LSTM. Ngejane et al. [25] used ML models, namely Logistic Regression (LR), XGBoost and Multilayer Perceptrons (MLP). In [28,30,31,38,41], the authors fed Bag of Words, TF-IDF and/or Word2Vec features into Support Vector Machine (SVM), Naive Bayes, Decision Tree, LR, Random Forest and/or K-Nearest Neighbors classifiers.
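To make the TF-IDF features mentioned above concrete, the sketch below computes TF-IDF weights for a toy corpus in plain Python. It is an illustrative assumption rather than the configuration of any cited paper: TF is the term count divided by document length, and IDF uses the smoothed form ln((1 + N) / (1 + df)) + 1, which is the IDF formula scikit-learn's `TfidfVectorizer` uses by default (that library additionally uses raw counts and L2-normalises rows). The toy documents are hypothetical.

```python
import math
from collections import Counter


def tf_idf(docs):
    """TF-IDF weights for a list of tokenised documents.

    TF  = term count / document length.
    IDF = ln((1 + N) / (1 + df)) + 1 (smoothed form).
    """
    n_docs = len(docs)
    # Document frequency: number of documents containing each term.
    df = Counter(term for doc in docs for term in set(doc))
    idf = {t: math.log((1 + n_docs) / (1 + d)) + 1 for t, d in df.items()}
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        length = len(doc) or 1  # avoid division by zero on empty documents
        vectors.append({t: c / length * idf[t] for t, c in counts.items()})
    return vectors


# Hypothetical toy corpus of already-tokenised messages.
docs = [["hello", "there", "friend"], ["hello", "again"], ["see", "you", "there"]]
vectors = tf_idf(docs)
print(round(vectors[0]["friend"], 3), round(vectors[0]["hello"], 3))
# 0.564 0.429
```

The rarer term `friend` receives a higher weight than `hello`, which appears in two documents. Sparse vectors like these would then be passed to a classifier such as SVM or Naive Bayes; in practice a library implementation would normally be used.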

Cecillon et al. [10] used the DeepWalk model and graph embedding representations, while in [36], a deep perceptual hashing algorithm based on Apple's NeuralHash was used. In [39], Hole et al. proposed that combining facial recognition with another biometric modality, namely speaker recognition, can reduce both false positive and false negative matches and could enhance analytical capabilities during investigations.
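Perceptual hashing, used in [36] via NeuralHash and underlying the image hash databases noted in [1], maps visually similar images to nearby bit strings, so matching is done by Hamming distance rather than by exact equality. NeuralHash is a learned model and is not reproduced here. The sketch below swaps in the much simpler, non-learned average hash (aHash) on an 8x8 grayscale grid, supplied as nested lists so that no imaging library is needed. The function names, the synthetic image and the 5-bit distance threshold are illustrative assumptions.

```python
def average_hash(pixels):
    """aHash of a grayscale image given as a 2-D list of intensities.

    Assumes the image was already downscaled to a small fixed grid
    (8x8 here, giving a 64-bit hash). Bit = 1 if pixel > mean intensity.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def matches_known(h, known_hashes, max_distance=5):
    """True if `h` is within `max_distance` bits of any known hash."""
    return any(hamming(h, k) <= max_distance for k in known_hashes)


# Synthetic 8x8 gradient and a slightly altered copy (one pixel brightened).
image = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
altered = [row[:] for row in image]
altered[0][0] = 255

known = {average_hash(image)}
print(hamming(average_hash(image), average_hash(altered)))  # 2
print(matches_known(average_hash(altered), known))          # True
```

An exact cryptographic hash would treat the altered copy as a completely different file, whereas the perceptual hash differs by only 2 bits and still matches the known entry.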

3.3.4. Combined Methods

Both [29] and [32] combine ML/DL and NLP. In [29], Bours and Kulsrud applied CNN and Naive Bayes based on message, author and conversation features, while Li et al. [32] used Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN) to cluster text from adult service websites, applying a phrase detection algorithm to find template signatures reused across clusters.
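[29] combined message-, author- and conversation-level features with CNN and Naive Bayes classifiers. For readers unfamiliar with the latter, the sketch below is a minimal multinomial Naive Bayes text classifier with add-one (Laplace) smoothing in plain Python. It is not the configuration used in [29]; the class name, toy tokens and class labels are hypothetical placeholders.

```python
import math
from collections import Counter, defaultdict


class MultinomialNB:
    """Minimal multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.classes = sorted(set(labels))
        self.vocab = {t for doc in docs for t in doc}
        self.log_prior = {}
        self.log_likelihood = defaultdict(dict)
        for c in self.classes:
            class_docs = [d for d, y in zip(docs, labels) if y == c]
            self.log_prior[c] = math.log(len(class_docs) / len(docs))
            counts = Counter(t for d in class_docs for t in d)
            total = sum(counts.values())
            for t in self.vocab:
                # Smoothed P(term | class) so unseen pairs never get zero.
                self.log_likelihood[c][t] = math.log(
                    (counts[t] + 1) / (total + len(self.vocab)))
        return self

    def predict(self, doc):
        scores = {}
        for c in self.classes:
            score = self.log_prior[c]
            for t in doc:
                if t in self.vocab:  # ignore words never seen in training
                    score += self.log_likelihood[c][t]
            scores[c] = score
        return max(scores, key=scores.get)


# Hypothetical toy training data.
docs = [["a", "b", "a"], ["a", "c"], ["d", "e"], ["d", "f", "e"]]
labels = ["class_1", "class_1", "class_2", "class_2"]
model = MultinomialNB().fit(docs, labels)
print(model.predict(["a", "a"]), model.predict(["d"]))  # class_1 class_2
```

Working in log space avoids numerical underflow when many per-word probabilities are multiplied together.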

4. Results and Discussion

In this survey we synthesised the state of the art in the online CSAM detection and prevention literature, focusing on the datasets used, the tasks considered and the analysis/modelling techniques applied. This section discusses each of these categories in detail.

4.1. Datasets

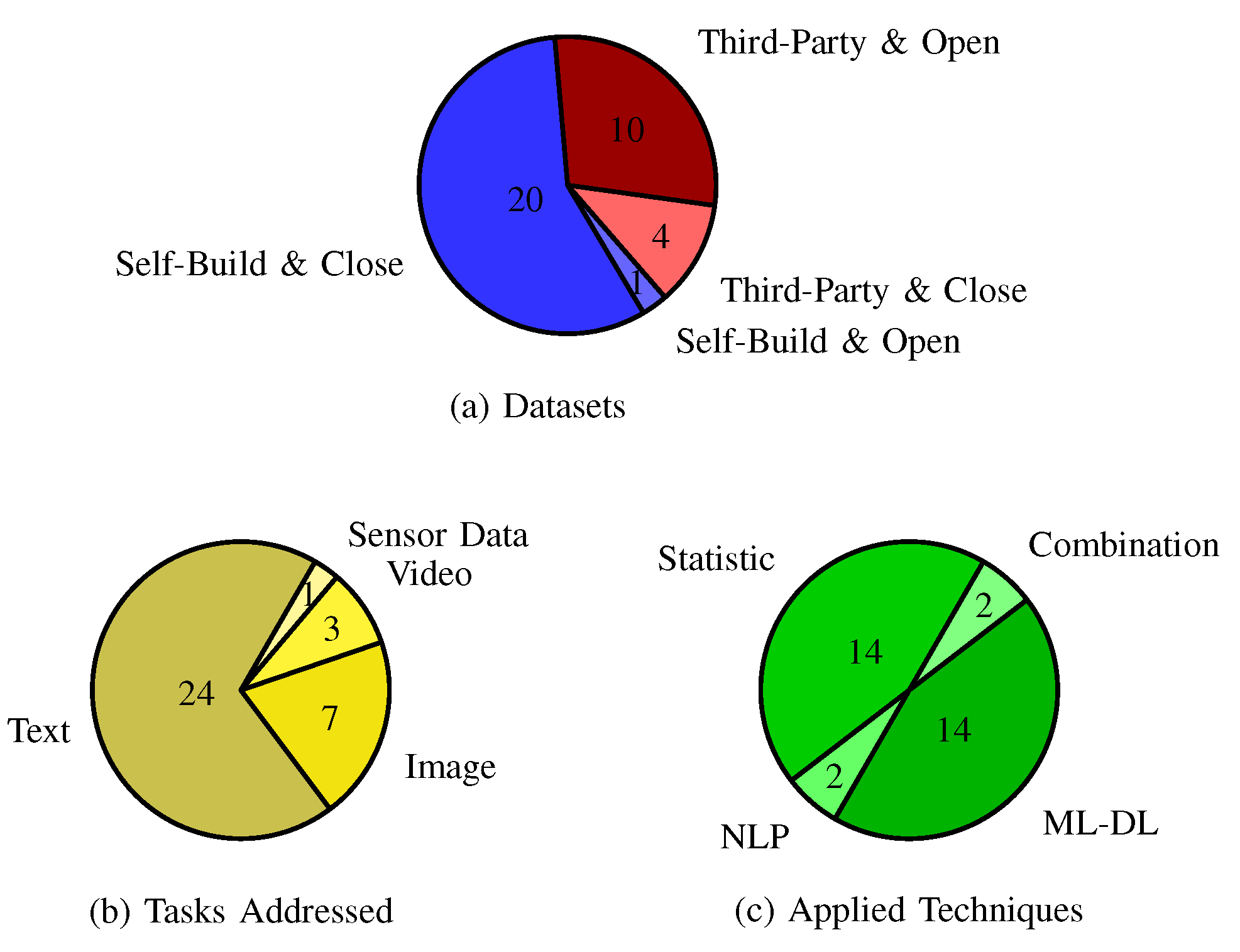

Figure 1(a) presents the breakdown of the datasets used in the selected papers. The majority (approximately 57%) of the papers used self-generated, closed data that is not publicly available to the research community. A smaller share (approximately 29%) used datasets generated by a third party, such as social media, and made publicly available to the community. There are 7 open datasets created by third parties: 6 contain CSA text and 1 contains CSA images. Much smaller percentages of papers used datasets that were self-generated and then made available (approximately 3%) or generated by a third party and kept private (approximately 11%).

Typical pre-processing included removing personal data before information was extracted and used.

4.2. Tasks Addressed

Figure 1(b) presents the breakdown of the types of task addressed in the selected papers. The tasks focused on: (1) detecting sexually abusive comments or chat conversations; (2) understanding abusers' behaviours; and (3) detecting CSA images based on pornographic content, face detection and age estimation.

The majority (approximately 69%) of the research analysed text-based CSAM, with less focus on image-based CSAM (approximately 20%) and video (approximately 8%), and only one paper (approximately 3%) using sensor data. One explanation for this breakdown may be the highly sensitive and illegal nature of image- and video-based CSAM, which requires special permission to access in many jurisdictions. Moreover, such graphic content may be too traumatising for many researchers (particularly technologists, as opposed to specially trained sociologists) to work with willingly. The small number of sensor-based papers may be due to the relatively recent development of such devices and the novelty of using sensor data to detect abusive activities.

4.3. Applied Analysis/Modelling Techniques

Figure 1(c) presents the breakdown of the analysis and modelling techniques proposed in the selected literature. In papers applying statistical methods, the authors often used information from questionnaires and national hotlines to derive features about victims and/or abusers, such as age, gender, race, pornography use and emotional problems interfering with sex. The ML/DL papers typically applied SVM, Naive Bayes and/or CNN. Keyword lists were used in the NLP papers and combined with ML/DL models in the combined approaches.

The most popular techniques are statistical and ML/DL methods (approximately 44% each of the 32 papers assigned to a technique category in Section 3.3). NLP and combined approaches are much less common in the domain, each accounting for only approximately 6% of these papers. One explanation for this breakdown may be that sociologists are more familiar with statistical algorithms and tools than with NLP and ML/DL algorithms and tools. Comparing NLP with ML/DL, ML/DL can process CSA images and videos, whereas NLP is limited to text. Moreover, NLP tools may be harder to apply to CSAM than ML/DL tools, even for computer scientists and even for CSA text. As a result, researchers tend to use statistical and ML/DL methods to process and detect CSAM.
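The technique percentages can be checked against the paper counts in Section 3.3 (14 statistical, 14 ML/DL, 2 NLP and 2 combined). The short Python check below, using a hand transcription of those counts, shows that approximately 44% and 6% correspond to a denominator of 32, the papers assigned to one of these categories, rather than all 35 research papers; papers [11], [14] and [20] are not listed under any technique in Section 3.3.

```python
# Paper counts per technique category, as listed in Section 3.3.
technique_counts = {"statistical": 14, "ML/DL": 14, "NLP": 2, "combined": 2}

classified = sum(technique_counts.values())
shares_of_classified = {k: round(100 * v / classified)
                        for k, v in technique_counts.items()}
shares_of_all_35 = {k: round(100 * v / 35) for k, v in technique_counts.items()}

print(classified)            # 32
print(shares_of_classified)  # {'statistical': 44, 'ML/DL': 44, 'NLP': 6, 'combined': 6}
print(shares_of_all_35)      # {'statistical': 40, 'ML/DL': 40, 'NLP': 6, 'combined': 6}
```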

5. Conclusions

This paper presented a comprehensive survey of the investigation, detection and prevention of online CSAM. We focused on research in the CSA domain published by Springer, Elsevier, IEEE or ACM from 2018 to 2022, supplemented by a Google Scholar search. The techniques used in the research papers include statistics, NLP and ML/DL, applied to CSAM problems across text, image and video content. The types of research datasets and their availability were also addressed, including the open CSAM datasets. This information gives a broad perspective on applying statistical and AI methods to problems in the CSA domain on social networks.

This survey can be a valuable resource for the CSAM research community, as it establishes the scope and size of the literature in the domain since 2018. It can help researchers identify gaps in knowledge and potential datasets for the analysis or validation of proposed solutions.

CSAM detection and reduction is a complex task. In future, multiple methods could be integrated into a combined method, for example by combining deep learning methods with NLP technologies. In addition, a benchmark dataset needs to be created to evaluate CSAM detection models. We will reuse open CSAM datasets and collect more CSAM data from hotlines and the dark web.


Acknowledgments

The survey paper is a part of the N-Light project which is funded by the Safe Online Initiative of End Violence and the Tech Coalition through the Tech Coalition Safe Online Research Fund (Grant number: 21-EVAC-0008-Technological University Dublin).

References

- Lee, H.; Ermakova, T.; Ververis, V.; Fabian, B. Detecting Child Sexual Abuse Material: A Comprehensive Survey. Forensic Science International: Digital Investigation 2020, 34, 301022. doi:10.1016/j.fsidi.2020.301022. [CrossRef]

- Sanchez, L.; Grajeda, C.; Baggili, I.; Hall, C. A practitioner survey exploring the value of forensic tools, ai, filtering, & safer presentation for investigating child sexual abuse material (csam). Digital Investigation 2019, 29, S124–S142. doi:10.1016/j.diin.2019.04.005. [CrossRef]

- Ali, S.; Haykal, H.A.; Youssef, E.Y.M. Child sexual abuse and the internet—a systematic review. Human Arenas 2021, pp. 1–18. doi:10.1007/s42087-021-00228-9. [CrossRef]

- Christensen, L.S.; Rayment-McHugh, S.; Prenzler, T.; Chiu, Y.N.; Webster, J. The theory and evidence behind law enforcement strategies that combat child sexual abuse material. International Journal of Police Science & Management 2021, 23, 392–405. doi:10.1177/14613557211026935. [CrossRef]

- Russell, D.; Higgins, D.; Posso, A. Preventing child sexual abuse: A systematic review of interventions and their efficacy in developing countries. Child abuse & neglect 2020, 102, 104395. doi:10.1016/j.chiabu.2020.104395. [CrossRef]

- Slavin, M.N.; Scoglio, A.A.; Blycker, G.R.; Potenza, M.N.; Kraus, S.W. Child sexual abuse and compulsive sexual behavior: A systematic literature review. Current addiction reports 2020, 7, 76–88. doi:10.1007/s40429-020-00298-9. [CrossRef]

- Steel, C.M.; Newman, E.; O’Rourke, S.; Quayle, E. A systematic review of cognitive distortions in online child sexual exploitation material offenders. Aggression and violent behavior 2020, 51, 101375. doi:10.1016/j.avb.2020.101375. [CrossRef]

- Steel, C.M.; Newman, E.; O’Rourke, S.; Quayle, E. An integrative review of historical technology and countermeasure usage trends in online child sexual exploitation material offenders. Forensic Science International: Digital Investigation 2020, 33, 300971. doi:10.1016/j.fsidi.2020.300971. [CrossRef]

- Akhter, M.; Jiangbin, Z.; Naqvi, I.; AbdelMajeed, M.; Zia, T. Abusive Language Detection from Social Media Comments Using Conventional Machine Learning and Deep Learning Approaches. Multimedia Systems 2021, pp. 1–16. doi:10.1007/s00530-021-00784-8. [CrossRef]

- Cecillon, N.; Labatut, V.; Dufour, R.; Linares, G. Graph embeddings for abusive language detection. SN Computer Science 2021, 2, 1–15. doi:10.1007/s42979-020-00413-7. [CrossRef]

- Christensen, L.S.; Pollard, K. Room for Improvement: How does the Media Portray Individuals Who Engage in Material Depicting Child Sexual Abuse? Sexuality & Culture 2022, pp. 1–13. doi:10.1007/s12119-022-09945-x. [CrossRef]

- Emery, C.R.; Wu, S.; Eremina, T.; Yoon, Y.; Kim, S.; Yang, H. Does informal social control deter child abuse? A comparative study of Koreans and Russians. International journal on child maltreatment: research, policy and practice 2019, 2, 37–54. doi:10.1007/s42448-019-00017-6. [CrossRef]

- Graham, L.M.; Lanier, P.; Finno-Velasquez, M.; Johnson-Motoyama, M. Substantiated reports of sexual abuse among Latinx children: Multilevel models of national data. Journal of family violence 2018, 33, 481–490. doi:10.1007/s10896-018-9967-2. [CrossRef]

- Guastaferro, K.; Zadzora, K.M.; Reader, J.M.; Shanley, J.; Noll, J.G. A parent-focused child sexual abuse prevention program: Development, acceptability, and feasibility. Journal of child and family studies 2019, 28, 1862–1877. doi:10.1007/s10826-019-01410-y. [CrossRef]

- Jonsson, L.S.; Fredlund, C.; Priebe, G.; Wadsby, M.; Svedin, C.G. Online sexual abuse of adolescents by a perpetrator met online: a cross-sectional study. Child and adolescent psychiatry and mental health 2019, 13, 1–10. doi:10.1186/s13034-019-0292-1. [CrossRef]

- Maas, M.K.; Cary, K.M.; Clancy, E.M.; Klettke, B.; McCauley, H.L.; Temple, J.R. Slutpage use among US college students: the secret and social platforms of image-based sexual abuse. Archives of sexual behavior 2021, 50, 2203–2214. doi:10.1007/s10508-021-01920-1. [CrossRef]

- Quayle, E. Prevention, disruption and deterrence of online child sexual exploitation and abuse. Era Forum. Springer, 2020, Vol. 21, pp. 429–447. doi:10.1007/s12027-020-00625-7. [CrossRef]

- Rind, B. First postpubertal male same-sex sexual experience in the National Health and Social Life Survey: Current functioning in relation to age at time of experience and partner age. Archives of Sexual Behavior 2018, 47, 1755–1768. doi:10.1007/s10508-017-1025-2. [CrossRef]

- Shaw, S.; Cham, H.J.; Galloway, E.; Winskell, K.; Mupambireyi, Z.; Kasese, C.; Bangani, Z.; Miller, K. Engaging Parents in Zimbabwe to Prevent and Respond to Child Sexual Abuse: A Pilot Evaluation. Journal of Child and Family Studies 2021, 30, 1314–1326. doi:10.1007/s10826-021-01938-y. [CrossRef]

- Borg, K.; Snowdon, C.; Hodes, D. A resilience-based approach to the recognition and response of child sexual abuse. Paediatrics and child health 2019, 29, 6–14. doi:10.1016/j.paed.2018.11.006. [CrossRef]

- Gangwar, A.; González-Castro, V.; Alegre, E.; Fidalgo, E. AttM-CNN: Attention and metric learning based CNN for pornography, age and Child Sexual Abuse (CSA) Detection in images. Neurocomputing 2021, 445, 81–104. doi:10.1016/j.neucom.2021.02.056. [CrossRef]

- Guerra, E.; Westlake, B.G. Detecting child sexual abuse images: traits of child sexual exploitation hosting and displaying websites. Child Abuse & Neglect 2021, 122, 105336. doi:10.1016/j.chiabu.2021.105336. [CrossRef]

- Kissos, L.; Goldner, L.; Butman, M.; Eliyahu, N.; Lev-Wiesel, R. Can artificial intelligence achieve human-level performance? A pilot study of childhood sexual abuse detection in self-figure drawings. Child Abuse & Neglect 2020, 109, 104755. doi:10.1016/j.chiabu.2020.104755. [CrossRef]

- Kokolaki, E.; Daskalaki, E.; Psaroudaki, K.; Christodoulaki, M.; Fragopoulou, P. Investigating the dynamics of illegal online activity: The power of reporting, dark web, and related legislation. Computer Law & Security Review 2020, 38, 105440. doi:10.1016/j.clsr.2020.105440. [CrossRef]

- Ngejane, C.H.; Eloff, J.H.; Sefara, T.J.; Marivate, V.N. Digital forensics supported by machine learning for the detection of online sexual predatory chats. Forensic science international: Digital investigation 2021, 36, 301109. doi:10.1016/j.fsidi.2021.301109. [CrossRef]

- Vitorino, P.; Avila, S.; Perez, M.; Rocha, A. Leveraging deep neural networks to fight child pornography in the age of social media. Journal of Visual Communication and Image Representation 2018, 50, 303–313. doi:10.1016/j.jvcir.2017.12.005. [CrossRef]

- Andrews, D.; Alathur, S.; Chetty, N.; Kumar, V. Child online safety in Indian context. 2020 5th International Conference on Computing, Communication and Security (ICCCS). IEEE, 2020, pp. 1–4. doi:10.1109/ICCCS49678.2020.9277038. [CrossRef]

- Borj, P.R.; Raja, K.; Bours, P. Detecting Sexual Predatory Chats by Perturbed Data and Balanced Ensembles. 2021 International Conference of the Biometrics Special Interest Group (BIOSIG). IEEE, 2021, pp. 1–5. doi:10.1109/BIOSIG52210.2021.9548303. [CrossRef]

- Bours, P.; Kulsrud, H. Detection of cyber grooming in online conversation. 2019 IEEE International Workshop on Information Forensics and Security (WIFS). IEEE, 2019, pp. 1–6. doi:10.1109/WIFS47025.2019.9035090. [CrossRef]

- Fauzi, M.A.; Bours, P. Ensemble method for sexual predators identification in online chats. 2020 8th International Workshop on Biometrics and Forensics (IWBF). IEEE, 2020, pp. 1–6.

- Islam, M.M.; Uddin, M.A.; Islam, L.; Akter, A.; Sharmin, S.; Acharjee, U.K. Cyberbullying detection on social networks using machine learning approaches. 2020 IEEE Asia-Pacific Conference on Computer Science and Data Engineering (CSDE). IEEE, 2020, pp. 1–6. doi:10.1109/CSDE50874.2020.9411601. [CrossRef]

- Li, L.; Simek, O.; Lai, A.; Daggett, M.; Dagli, C.K.; Jones, C. Detection and characterization of human trafficking networks using unsupervised scalable text template matching. 2018 IEEE International Conference on Big Data (Big Data). IEEE, 2018, pp. 3111–3120. doi:10.1109/BigData.2018.8622189. [CrossRef]

- Samra, S.; Alshouli, M.; Alaryani, S.; Aasfour, B.; Shehieb, W.; Mir, M. Shield: Smart Detection System to Protect Children from Sexual Abuse. 2021 13th Biomedical Engineering International Conference (BMEiCON). IEEE, 2021, pp. 1–6. doi:10.1109/BMEiCON53485.2021.9745227. [CrossRef]

- Bursztein, E.; Clarke, E.; DeLaune, M.; Elifff, D.M.; Hsu, N.; Olson, L.; Shehan, J.; Thakur, M.; Thomas, K.; Bright, T. Rethinking the detection of child sexual abuse imagery on the internet. The World Wide Web Conference, 2019, pp. 2601–2607. doi:10.1145/3308558.3313482. [CrossRef]

- Laranjeira, C.; Macedo, J.; Avila, S.; Santos, J. Seeing without Looking: Analysis Pipeline for Child Sexual Abuse Datasets. Proceedings of 2022 ACM Conference on Fairness, Accountability, and Transparency (FAccT’22). ACM, 2022, pp. 2189–2205. doi:10.1145/3531146.3534636. [CrossRef]

- Struppek, L.; Hintersdorf, D.; Neider, D.; Kersting, K. Learning to break deep perceptual hashing: The use case NeuralHash. 2022 ACM Conference on Fairness, Accountability, and Transparency, 2022, pp. 58–69. doi:10.1145/3531146.3533073. [CrossRef]

- Sultana, S.; Pritha, S.T.; Tasnim, R.; Das, A.; Akter, R.; Hasan, S.; Alam, S.R.; Kabir, M.A.; Ahmed, S.I. ‘ShishuShurokkha’: A Transformative Justice Approach for Combating Child Sexual Abuse in Bangladesh. CHI Conference on Human Factors in Computing Systems, 2022, pp. 1–23. doi:10.1145/3491102.3517543. [CrossRef]

- Al-Nabki, M.W.; Fidalgo, E.; Alegre, E.; Alaiz-Rodríguez, R. Short Text Classification Approach to Identify Child Sexual Exploitation Material. arXiv preprint 2020.

- Hole, M.; Frank, R.; Logos, K.; Westlake, B.; Michalski, D.; Bright, D.; Afana, E.; Brewer, R.; Ross, A.; Swearingen, T.; others. Developing automated methods to detect and match face and voice biometrics in child sexual abuse videos. Trends and Issues in Crime and Criminal Justice 2022, pp. 1–15. doi:10.52922/ti78566. [CrossRef]

- Owen, G.; Savage, N. The Tor dark net. Chatham House 2015.

- Pereira, M.; Dodhia, R.; Anderson, H.; Brown, R. Metadata-based detection of child sexual abuse material. arXiv preprint arXiv:2010.02387 2020.

- Westlake, B.G.; Bouchard, M. Liking and hyperlinking: Community detection in online child sexual exploitation networks. Social Science Research 2016, 59, 23–36. doi:10.1016/j.ssresearch.2016.04.010. [CrossRef]

- Woodhams, J.; Kloess, J.A.; Jose, B.; Hamilton-Giachritsis, C.E. Characteristics and behaviors of anonymous users of dark web platforms suspected of child sexual offenses. Frontiers in Psychology 2021, 12, 623668. doi:10.3389/fpsyg.2021.623668. [CrossRef]

- Inches, G.; Crestani, F. Overview of the International Sexual Predator Identification Competition at PAN-2012. CLEF 2012 Evaluation Labs and Workshop, Online Working Notes. CEUR-WS.org, 2012, Vol. 1178.

- Macedo, J.; Costa, F.; A. dos Santos, J. A Benchmark Methodology for Child Pornography Detection. 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), 2018, pp. 455–462. doi:10.1109/SIBGRAPI.2018.00065. [CrossRef]


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).