This paper is a response to the EPR paper [1], titled “Can quantum-mechanical description of physical reality be considered complete?” and published in Physical Review in 1935. The development below shows how a QM measurement function is completed by including the precision determined by calibration to a non-local unit standard in both QM measurement functions and metrology measurement processes.

I. A Measurement Result Quantity

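L. Euler identified that all measurement results are mutual relations [2] (Elements of Algebra, first published in 1770; third English edition, 1822). J. C. Maxwell [3] (A Treatise on Electricity and Magnetism, 3rd ed., 1891) proposed that a measurement result is:

$$q = n\,u \qquad (1)$$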

In eq. (1), n is a numerical value and u is a unit (“taken as a standard of reference” [4]); together they form a mutual relation. Equation (1) is the basis of quantity calculus [5]. From Maxwell’s usage and quote, u is a mean that is equal (without precision) to a fixed standard unit. Equation (1), which predates Heisenberg’s uncertainty principle [6], assumes that perfect precision is possible; Heisenberg identified such precision as impossible. This paper develops how Heisenberg’s quantum uncertainty, or quantization, requires a precision function in eq. (1).

In a Euclidean space, the International Vocabulary of Metrology (VIM) [7] represents a measurement result as a quantity with population variation, where instrument calibration establishes a mean u with a precision. In a normed vector space, a QM measurement function describes each measurement state as an eigenvalue of unity eigenvectors. A QM measurement function cannot be compared with eq. (1) because each eigenvector (uncalibrated) and u (empirically calibrated) are not correlated with each other. This discrepancy can be resolved by determining the precision of each eigenvector relative to a mean u or a standard. To accomplish this, another quantity calculus function is proposed:

$$Q = \sum_{1}^{n} u_n \qquad (2)$$

Because eq. (1) assumes all u are equal, calibration (which equalizes the un to a standard) appears to be empirical. In eq. (2), each un is the smallest interval of an additive measure scale without any un calibration, including during the design or construction of empirical measuring instruments. Therefore, the property, relative size and precision of each un are comparable only locally when each un is calibrated to a local reference scale, or non-locally when each un is calibrated to U, a non-local standard Unit.

Equation (1) is often expedient for experimental measurements. As eq. (2) describes a proper superset of eq. (1) results, this paper proposes eq. (2) as the basis for all mathematical descriptions (functions) and empirical measurements (processes) that describe or produce all measurement results.
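To make the contrast between eq. (1) and eq. (2) concrete, the sketch below computes a product-style quantity n·u and a sum-style Quantity over individual intervals. It assumes, purely for illustration, that each interval is the mean u shifted by +1/m or -1/m with equal probability; the function names are hypothetical and are not defined in this paper.

```python
import random

def quantity_product(n, u):
    # Eq. (1): a numerical value n times a unit u; every interval is taken to equal u.
    return n * u

def quantity_sum(intervals):
    # Eq. (2): the Quantity is the sum of the individual intervals u_n.
    return sum(intervals)

def quantized_intervals(n, u, m, rng):
    # Hypothetical model: each interval is the mean u offset by +1/m or -1/m.
    return [u + rng.choice((1, -1)) / m for _ in range(n)]

rng = random.Random(42)          # fixed seed so the example is repeatable
n, u, m = 50, 1.0, 10
q = quantity_product(n, u)
Q = quantity_sum(quantized_intervals(n, u, m, rng))
print(f"q = {q}, Q = {Q:.1f}, Q - q = {Q - q:+.1f}")
```

With equal intervals the two forms agree; once the intervals carry individual offsets, Q can differ from q by up to n/m in either direction.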

II. Model of a Relative Measurement System

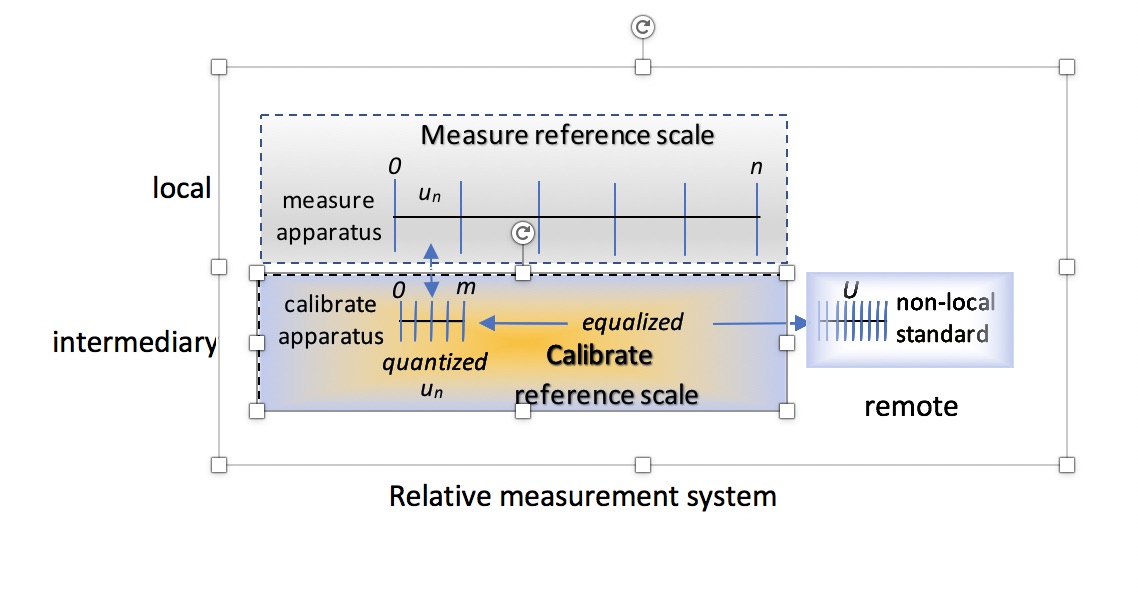

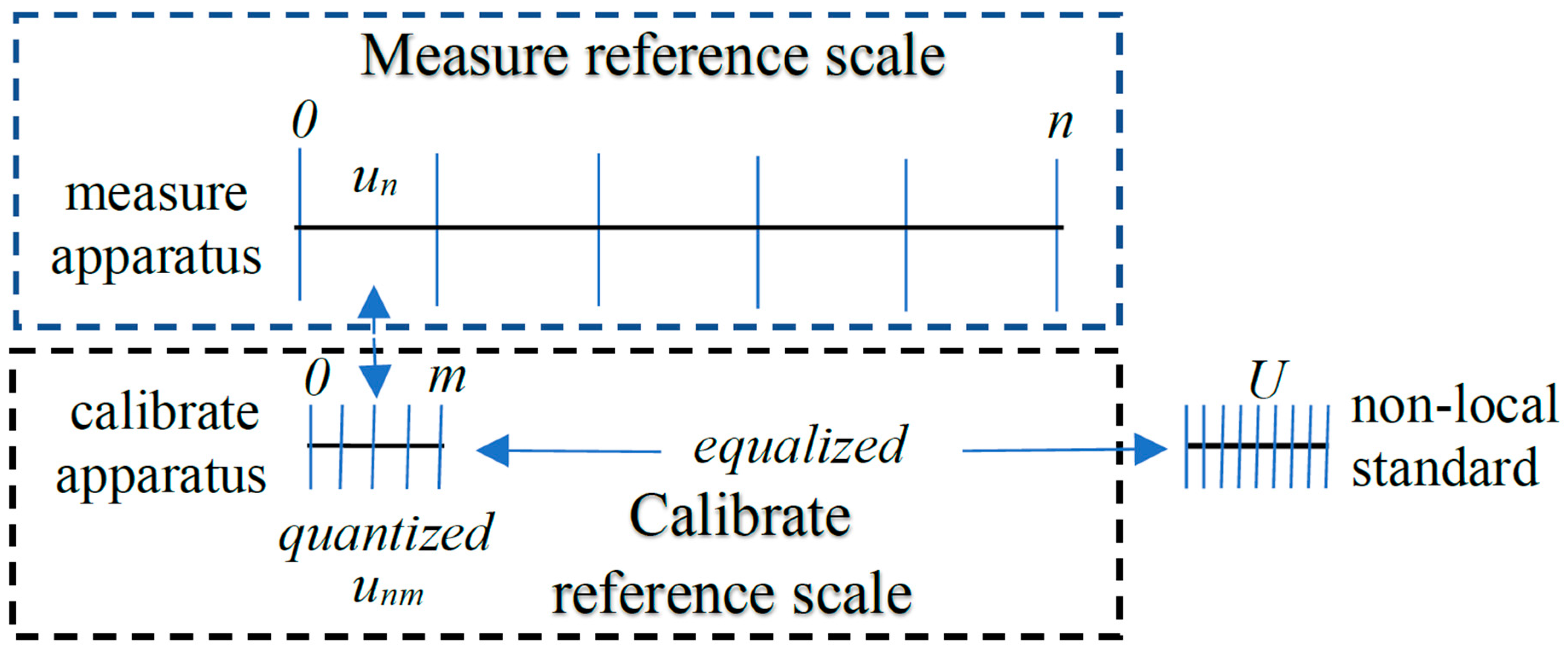

The measurement system in Figure 1 applies to both functions and processes, without noise or distortion. The apparatus in Figure 1 may be descriptions (e.g., an additive scale), functions (e.g., a measurement equation) or empirical instruments.

Figure 1 applies eq. (2) to the measure and calibrate reference scales, measures an observable with a fixed numerical value ( of 1/m states), shows a Unit standard (U), and shows the quantization of each apparatus, including the standard.

Figure 1 brings together the measure reference scale (a representational measurement [8]) with a calibrate reference scale, which establishes the smallest equal states.

In Figure 1, the un intervals, where the mean interval is 1/n, quantify the measure reference scale; the m equal states, each defined to be 1/m, quantify the calibrate reference scale. Notice that the use of the measure and calibrate reference scales increases a continuous distribution’s entropy by log n and log m, respectively [9]. The calibrate reference scale quantizes and equalizes each un, which determines the precision of each un to U. Quantizing and equalizing each un to U is termed un calibration. Then:

On the measure reference scale, both n and un may vary due to noise, distortion or quantization. In this paper, a measure is correlated to a reference scale without un calibration, a measurement is correlated to a reference scale with un calibration, and unm includes only quantization, not noise or distortion. When a measure apparatus with the mean

un =

1/n is applied repetitively to an

numerical value observable, eq. (2) produces a distribution:

Changing a quantity (product) to a Quantity (summation) makes clear that the un, each of which varies statistically plus or minus, are summed. This is the functional significance of eq. (2) versus eq. (1). In statistically rare cases, the population variation created by the summed ±1/m is large (see V.A, V.B and V.C below). The unm precision is determined by the calibrate reference scale and applies in all measurement functions/processes. When calibration is treated as empirical, this functional effect is not accounted for and measurement discrepancies emerge.
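The summation in eq. (2) can be illustrated with a short simulation. Under the assumption (ours, not the paper's) that each of the n quantization offsets is independently +1/m or -1/m with probability 1/2, the summed offset of repeated measurements follows a binomial distribution that approaches a bell shape, while the extreme sums ±n/m appear only rarely. Offsets are counted in units of 1/m, so they are integers; the function name is hypothetical.

```python
import random
from collections import Counter

def repeated_offsets(n, trials, seed=0):
    # Each trial sums n offsets of +1 or -1 (in units of 1/m), assumed independent
    # and equally likely; the result is an integer between -n and +n.
    rng = random.Random(seed)
    return [sum(rng.choice((1, -1)) for _ in range(n)) for _ in range(trials)]

n, trials = 20, 20_000
hist = Counter(repeated_offsets(n, trials))

# Text histogram: one '#' per 100 measurements at each summed offset.
for k in sorted(hist):
    print(f"{k:+3d}/m {'#' * (hist[k] // 100)}")
```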

Quantization (i.e., 1/m) also causes n uncertainty. W. Heisenberg formally presented quantum uncertainty in QM. Relative Measurement Theory (RMT) [10] proved that the quantization of n at Planck scales causes Heisenberg’s quantum uncertainty.

Process measurement result Quantity population variation is due to distortion (from inside the measurement system) and noise (from outside the measurement system). In both functions and processes, Quantity population variation is also caused by the quantization of the calibrate reference scale.

A measurement function/process describes successive granularity. The measure reference scale has a mean unit of 1/n, where 1/n > 1/m, and 1/m is the quantization of the calibrate reference scale. 1/m is the physical resolution of an instrument’s transducer, which converts the property of an observable’s Quantity to a property of a reference scale.

As an example, an analog voltmeter has a transducer which converts voltage (a property) into the position (another property) of a needle on a reference scale, with an identified n uncertainty and a unm precision of 1/m. If the resolution of the transducer is a Planck (the most precise resolution possible), then the absolute value of the n uncertainty or unm precision is a Planck.

It is commonly assumed in QM measurement functions that each eigenvector relates to a reference measurement standard, U in metrology. When the unm precision relative to U approaches the size of a measurement result Quantity, only unm is valid to apply in a reference scale, because such unm precision produces an identifiable change in such a measurement result Quantity.

III. Units Have Multiple Definitions

In a normed vector space an eigenvector equals a unity property (e.g., one length). In metrology, u represents a standard or a factor of a standard. In this paper, un represents an uncalibrated interval (which has a local property, undetermined relative size and undetermined precision) and unm represents a unit calibrated to U. Each unm has a non-local property, relative size and relative precision. The defined equal 1/m states may be treated as QM unity eigenvectors.

The U standard, U (capitalized), is a non-local standard with a defined property and a defined numerical value. U represents one of the seven SI base units (properties) listed by the BIPM, or one of their derivations [11]. U may be without precision (i.e., exact). This paper recognizes that a non-local U defines the relative precision of unm and makes comparable measurement results possible, and that U’s numerical value is arbitrary only in its first usage.

un identifies each of the smallest intervals of the measure reference scale before any calibration.

unm is the calibrated numerical value of each un expressed in 1/m. The 1/m are the smallest states of the calibrate reference scale. n and m may be represented as integers (counts) when 1/n and 1/m represent the smallest intervals or states of their respective reference scales.

IV. Additional Definitions

The International Vocabulary of Metrology (VIM) provides definitions of current metrology terminology. The following additional definitions, which do not include any noise or distortion, are related, where possible, to VIM definitions.

Quantity may be a product (q) as shown in eq. (1) or a sum (Q) as shown in eq. (2). In VIM a quantity is a product, because instrument calibration establishes sufficient unm precision when 1/m is not close in size to a measurement result Quantity.

Reference scale is the scale of a measure or calibrate reference apparatus local to an observable (i.e., properties are locally comparable) and non-local to a U standard. A reference scale has equal (i.e., additive) uncalibrated intervals, where both the size relative to U and the precision of the intervals are not determined. A reference scale has a reference or zero point. VIM applies the term reference measurement standard for both concepts, similar to Maxwell’s usage.

A measure apparatus has a reference scale and may have a transducer which converts an observable’s property to a property of this reference scale. A measure apparatus determines the n of an observable.

A calibrate apparatus has a reference scale which is calibrated to a U whose property is consistent with this reference scale. The calibrate apparatus determines unm precision, 1/m. un calibration does not average the unm precision.

Calibration, or instrument calibration, is defined in VIM and generates a mean un. Calibration averages unm and commonly includes an adjustment of the n of a quantity, as adjusting un in an empirical instrument is usually not practical.

V. Empirical examples

A. Physical metre stick

A physical metre stick is

100 centimetres, or

100 un. Then

n (e.g., 50) is a numerical value of

un (a centimetre) and each

un is calibrated to

unm. When calibrated to

U (a standard metre)

1/m precision and

n uncertainty =

n 1/m, where each

1/m is one millimetre. The largest portion of the

population variation is determined by

un calibration. In the rarest two cases, when

n of the

unm, all with a precision of +

1/m, are summed and in another measurement of

n of the

unm, all with a precision of -

1/m, are summed, the greatest population variance (

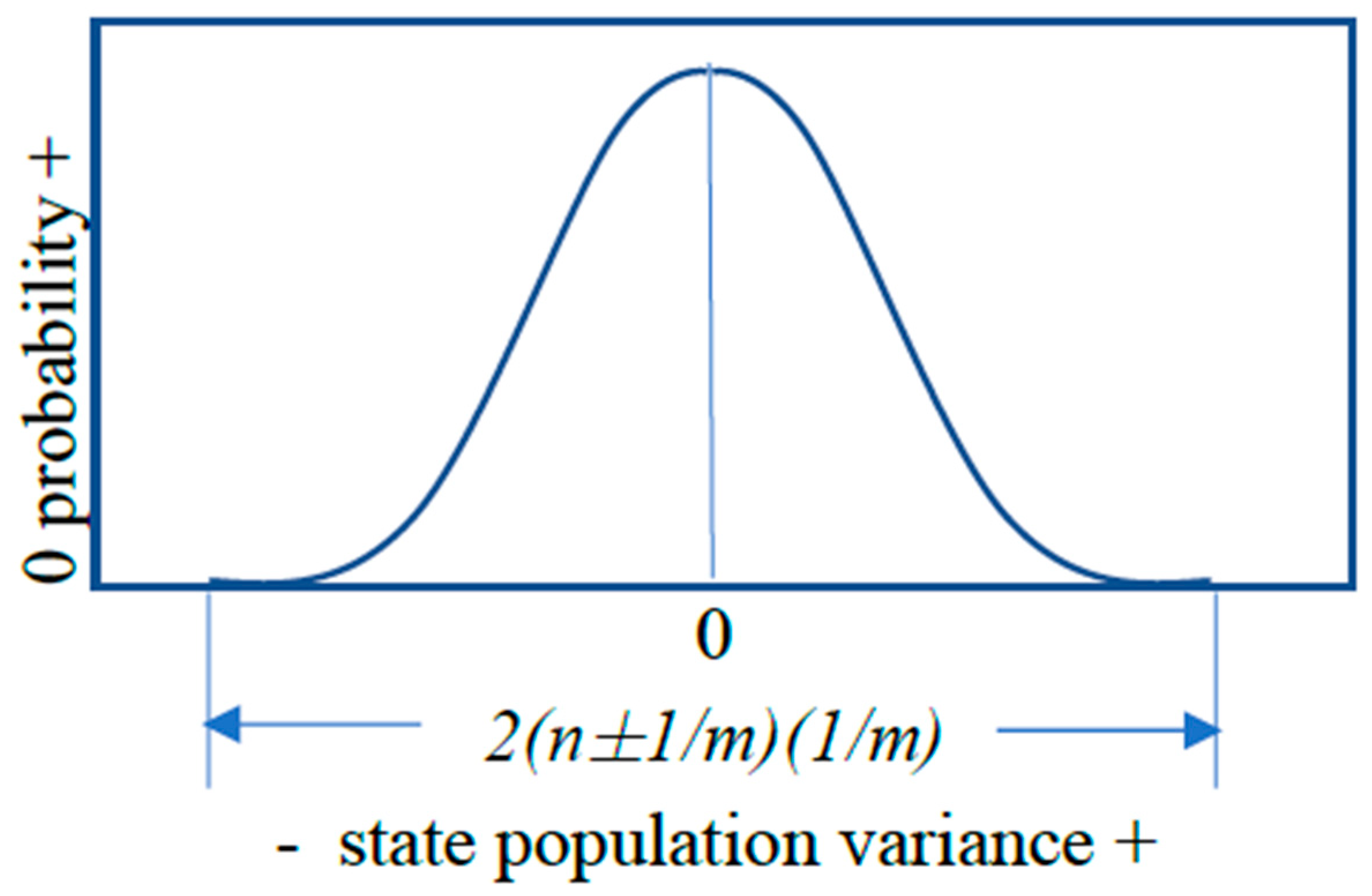

) occurs, as shown in

Figure 2 below.
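The two rarest cases in the metre-stick example can be checked with simple arithmetic. The sketch takes n = 50 centimetre intervals with 1/m = 1 mm and, as an added assumption, treats each +1 mm or -1 mm offset as independent and equally likely, so that each extreme case has probability (1/2)^n. The helper names are illustrative only.

```python
from fractions import Fraction

def extreme_cases(n, resolution_mm):
    # The two rarest measurements: every calibrated interval at +1/m, or every one at -1/m.
    high = n * resolution_mm
    low = -n * resolution_mm
    return low, high, high - low   # the spread between them is 2n times 1/m

def extreme_probability(n):
    # Assumed model: each offset independently +1/m or -1/m with probability 1/2.
    return Fraction(1, 2 ** n)

low, high, spread = extreme_cases(n=50, resolution_mm=1)
print(f"{low:+d} mm, {high:+d} mm, spread {spread} mm")
print(f"probability of each extreme: {float(extreme_probability(50)):.3e}")
```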

B. Bell shaped normal measurement distributions

Figure 2 presents the characteristic bell shape of a large distribution of repetitive experimental measurement results. This shape has been verified in many different forms of measurement results where noise and distortion have been minimized [12]. The bell-shaped (widening) unm precision population variance of the normal distribution in Figure 2 is further demonstrated in V.C below.

Figure 2.

Normal distribution.


C. Additive reference scale

An example of an additive reference scale is a thermometer which measures the property of thermodynamic temperature. Rather than marking the freezing and boiling points of water (points on a reference scale) and dividing the distance between into equal units, this example demonstrates how additive intervals statistically increase population variance, producing a bell shaped measurement result distribution.

An instrument consists of a hollow glass tube with a mercury-filled reservoir at one end, which fits inside a second hollow glass tube that slides over the first. The two glass tubes are held together and placed in an adjustable-temperature oven with a resolution of 0.1°. The outside glass tube is then marked at the level of mercury that appears for the zero-degree state and at each 1.0° un above the zero mark. n + 1 marks (e.g., n = 100 in the Celsius system), or 101 marks, are made to quantize the outside glass tube. The oven correlates each of the 100 un to 1/m = 0.1° (m = 1/0.1 = 10 states per degree), so each unm has a precision of ±0.1°.

After 101 marks are made, the instrument is removed from the oven and an ice water bath is applied to the tube with mercury. The outside glass tube is now slid over the inside glass tube until the top of the inside mercury column lines up with the first mark on the outside glass tube. One mark on the outside glass tube is now referenced to the temperature of ice water (0 °C), which is a reference point for thermodynamic temperature.

Consider the temperature of a glass of water in contact with the reservoir of the referenced measuring instrument. If the temperature of the water is 70°, the 71st mark on the outside glass tube represents 70° ± 0.1° nominal precision, or ±7° worst-case population variation. The ±0.1° nominal precision occurs when the ±0.1° unm precision of all 70 un is uniformly distributed.

The ±7° population variation occurs (very, very rarely) when each of the 70 un has the same +0.1° or -0.1° state precision, which then sums. This statistical effect is ignored when defined equal units are applied (e.g., in an eigenvector representation of a measurement result). Notice that there is also a 70° ± 0.1° numerical value n uncertainty in this case.
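The thermometer figures can be reproduced exactly under the same illustrative assumption that each of the 70 intervals independently carries a +0.1° or -0.1° offset with probability 1/2. The sketch below enumerates the exact binomial distribution of the summed offset with Python's `fractions` module and `math.comb`; it confirms the ±7° worst case and shows how rarely it occurs. The function name is hypothetical.

```python
from fractions import Fraction
from math import comb

def offset_distribution(n, step):
    # Exact distribution of the summed offset of n intervals, each +step or -step
    # with probability 1/2 (assumed); k counts the intervals with a +step offset.
    total = 2 ** n
    return {(2 * k - n) * step: Fraction(comb(n, k), total) for k in range(n + 1)}

dist = offset_distribution(n=70, step=Fraction(1, 10))   # step in degrees
worst = max(dist)                                       # largest summed offset
p_extreme = dist[worst] + dist[-worst]                  # all +0.1 or all -0.1
print(f"worst case: ±{float(worst)}°, probability {float(p_extreme):.2e}")
```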

D. Comparison of two measuring instrument results

The comparison of two measurement results (qa and qb) from two measuring instruments (a and b) is a ratio of their numerical values (na and nb) and the numerical values of their un (i.e., ua and ub), shown as:

$$\frac{q_a}{q_b} = \frac{n_a\,u_a}{n_b\,u_b}$$

The calibration of qa and qb to U refines ua into uam and ub into ubm. Then uam and ubm cancel because they are equalized by calibration, which allows accurate na/nb comparisons. That is, when calibration equalizes the numerical values of uam and ubm, a factor change in the numerical value of either uam or ubm need not affect the ratio na/nb. In this manner, a numerical value of centimetres (e.g., a 1/100 factor of a metre) is compared with a numerical value of a metre in V.A above. Calibration must occur in a measurement process to allow accurate numerical value comparisons [13].
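The cancellation of calibrated units in the ratio (na·ua)/(nb·ub) can be shown with exact rational arithmetic. In this sketch each unit is expressed as a factor of U (one metre), and the readings (50 centimetres on instrument a, 2 metres on instrument b) are hypothetical values chosen for illustration.

```python
from fractions import Fraction

def ratio(n_a, u_a, n_b, u_b):
    # q_a / q_b = (n_a * u_a) / (n_b * u_b), with each unit expressed as a factor of U.
    return (Fraction(n_a) * u_a) / (Fraction(n_b) * u_b)

centimetre = Fraction(1, 100)   # u_am as a 1/100 factor of U (one metre)
metre = Fraction(1)             # u_bm equal to U

print(ratio(50, centimetre, 2, metre))    # 1/4: the units differ by a factor of 100
print(ratio(50, metre, 2, metre))         # 25: equal units cancel, leaving n_a / n_b
```

Scaling both units by the same factor leaves the ratio unchanged, which is the sense in which calibration equalizing uam and ubm lets na/nb be compared directly.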

J. S. Bell wrote in his seminal paper [14]: “...there must be a mechanism whereby the setting of one measuring device can influence the reading of another instrument, however remote.” In this example, the a and b measuring instruments are independent, but the measurement results qa and qb, whose units (uam and ubm) are equalized by calibration, appear to “influence the reading of another instrument” (i.e., they are remotely entangled) across any distance.

VI. The effect of U on precision and population variation

Reviewing eq. (3), the numerical value of the observable in

Figure 2 is

of

1/m states. Each un is quantized and equalized to U by the calibrate reference scale, becoming unm:

A common reference scale provides a common local property and a common local un between measurement functions or processes. The non-local precision of un is then determined by calibration to U. Notice that the n of U does not appear in eq. (3) but is required to determine unm. The n of U is arbitrary in its first usage but is required to determine precision. Equation (4) is modified to include n uncertainty, producing:

Equation (5) identifies how different numerical values of Quantities will occur when the 1/m state is near Planck size. Equation (5) is a measurement function that applies to measurement processes as well. From eq. (3):

Assuming the un in eq. (5) are fixed, eq. (7) presents:

Equation (8) shows that the lowest-probability measurement results have a population variation of ~2n times 1/m. Equation (8) also explains how the bell shape of the normal distribution in Figure 2 occurs.

Equation (8) shows that every calibrated measurement result Quantity has a population variation, which is understood when both n and u are treated. In eq. (5), when n is large, the sum of the ±1/m offsets cancels, or nearly cancels, very often (see V.C), because by the central limit theorem the summed offsets approach a symmetric, normal distribution of measurement results.

Conversely, when n is small (n = 2 in neutron spin measurements [15]), the sum of the ±1/m offsets is not likely to cancel. Thus two repetitive measurement result Quantities of the same observable will likely be different when 1/m is near Planck size. This appears in QM as repetitive measurement results that do not commute.

VII. Quantity calculus explains perplexing experiments

A. Heisenberg’s quantum uncertainty

In his quantum uncertainty analysis [16], Heisenberg identifies two properties, p = MV = momentum and q = position, each with a precision (i.e., un precision), p1 and q1 respectively, that exhibit this relationship:

$$p_1\,q_1 \sim h$$

(h = a Planck). That is, when p1 increases, q1 decreases. In his example, p1 and q1 must be determined by comparing p and q at two or more different times. Notice that the comparison of p1 and q1 is a local comparison.
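The inverse relationship can be illustrated numerically. The sketch treats p1 q1 ~ h as an equality, which is a simplification because Heisenberg's relation is an order-of-magnitude statement, and uses the SI value of the Planck constant; the position precisions are hypothetical inputs.

```python
# Planck constant in J*s; exact by definition in the SI since 2019.
H = 6.62607015e-34

def momentum_precision(q1):
    # Treat Heisenberg's order-of-magnitude relation p1 * q1 ~ h as an equality
    # and solve for p1, given a position precision q1 in metres.
    return H / q1

for q1 in (1e-9, 1e-10, 1e-11):   # hypothetical position precisions
    print(f"q1 = {q1:.0e} m  ->  p1 ~ {momentum_precision(q1):.2e} kg*m/s")
```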

The time difference between two repetitive position measurements is: tn . Converting Heisenberg’s precision notation (below in brackets) into this paper’s notation:

The position’s qn precision at .

The velocity (V)’s vn precision .

The momentum’s pn precision where M = mass = constant.

This quantity calculus identifies that tn inversely changes q and p. This applies to all such measure comparisons.

Heisenberg recognizes this inverse precision relationship: “Thus, the more precisely the position is determined, the less precisely the momentum is known, and conversely.” However, QM does not recognize that the two times ( and ), when a wavelength of light is applied to observe the q position, establish a measure reference scale interval of time, tn. In any local measurement an interval is determined by a reference scale.

Heisenberg also observes that an energy increase occurs when one measurement is made of an observable. Shannon [17] showed that an entropy/energy increase occurs when a continuous distribution is linearly transformed, in this case by a measure reference scale.
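Shannon's result for a linear change of coordinates can be checked for one simple case. For a one-dimensional scaling y = a·x, the differential entropy shifts by log|a|; the sketch verifies this for a normal distribution, whose entropy has the closed form ½·ln(2πeσ²). Treating a measure reference scale with mean interval 1/n as multiplying the observable's value by n is an illustrative assumption; with it, the shift equals ln n, matching the log n increase stated in Section II.

```python
import math

def gaussian_entropy(sigma):
    # Differential entropy, in nats, of a normal distribution with standard deviation sigma.
    return 0.5 * math.log(2 * math.pi * math.e * sigma ** 2)

def entropy_change_under_scaling(sigma, scale):
    # Entropy change when the variable is multiplied by `scale`,
    # i.e. a one-dimensional linear change of coordinates.
    return gaussian_entropy(scale * sigma) - gaussian_entropy(sigma)

n = 100   # hypothetical number of intervals per unit on the measure reference scale
print(entropy_change_under_scaling(1.0, n), math.log(n))
```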

B. Double slit experiments

In the double-slit experiments [18], two properties of each particle are measured. One measurement result quantity represents a frequency property; the other represents an energy property. The slits provide the reference scale, while the sensing plate is both the frequency and the energy transducer. An operator’s selection of a pattern on the sensing plate determines which property is measured. Most particles have multiple properties, e.g., time, mass and energy. In non-local measurements, the selection of a property occurs by calibration of the measuring apparatus to a U. When calibration is assumed to be empirical, this property selection function is not recognized.

VIII. Relating this paper to other measurement theories

In the 20th century, QM offered a new measurement function, von Neumann’s Process 1, which includes a statistical eigenvector operator [19]. Both von Neumann’s Process 1 and Dirac’s bra-ket notation treat a quantity as an inner product of eigenvalues and eigenvectors. The comparison of eq. (5) to Process 1 is straightforward when the equal 1/m states are treated as eigenvectors.

A Planck represents a very, very small quantization limit, and the ~2n-times effect of quantization on population variation [see eq. (7)] is not usually recognized. On this basis, Maxwell’s assumption that the mean un = U appears to have been acceptable [20]. This masks the quantized and statistical nature that U exhibits at all scales. QM also currently assumes, incorrectly, that the precision of repetitive measurement results can in theory be within a Planck of each other [21]. As presented in this paper, these assumptions break down when the unm precision is close in size to a measurement result Quantity.

In representational measurement theory [22], a measure and a measurement are not differentiated. This theory does not recognize a quantity mutual relation; assumes measure result comparisons can occur without a reference scale or standard; treats a unit as arbitrary [23], which requires any calibration to be empirical [24]; and indicates that all measure result population variation is due to noise, distortion and errors in the measurement system [25].

Because a Quantity consisting of a numerical value and a calibrated un has not been applied in QM for almost 90 years, many perplexing effects have been noted. Measurement Unification, 2021 [26] explains how, in the Stern-Gerlach experiments that J. S. Bell considered, the calibration of each instrument to the other is not recognized. It also explains quantum teleportation experiments, Mach-Zehnder interferometer experiments, Mermin’s device (also based upon the Stern-Gerlach experiments) and the Schrödinger’s Cat thought experiment. These explanations identify how un calibration unifies metrology processes and QM measurement functions.

IX. Conclusion

Perhaps Maxwell’s quantity, which assumed that the mean un = U, misled measurement theorists into treating calibration as an empirical process. QM evolved away from Maxwell’s single-dimensional quantity with n and u towards a richer coordinate system with a magnitude and defined eigenvectors in multiple dimensions. However, the precise relationship of u and eigenvectors, determined by calibration, was not included in QM measurement functions. When calibration is not part of a QM measurement function, the entropy change caused by calibration is not understood. The EPR paper is shown to be correct: a QM measurement function is completed by including the precision determined by calibration to a non-local unit standard in both QM mathematical functions and metrology measurement processes.

Acknowledgments

The author acknowledges Luca Mari, Chris Field, Elaine Baskin and Richard Cember for their valuable discussions and detailed comments on drafts of this work.

References

- A. Einstein, B. Podolsky, N. Rosen, Can quantum-mechanical description of physical reality be considered complete?, Physical Review, Vol. 47, May 15, 1935. This paper is often referred to as the EPR paper.

- L. Euler, Elements of Algebra, Chapter I, Article I, #3. Third ed., Longman, Hurst, Rees, Orme and Co., London England, 1822. “Now, we cannot measure or determine any quantity, except by considering some other quantity of the same kind as known, and pointing out their mutual relation.”.

- J. C. Maxwell, A Treatise on Electricity and Magnetism, 3rd Ed. (1891), Dover Publications, New York, 1954, p. 1.

- Ibid., The quote is Maxwell’s.

- J. de Boer, On the History of Quantity Calculus and the International System, Metrologia, Vol. 31, page 405, 1995.

- W. Heisenberg, The physical content of quantum kinematics and mechanics, J.A. Wheeler, W.H. Zurek (Eds.), Quantum Theory and Measurement, Princeton University Press, Princeton, NJ (1983).

- International Vocabulary of Metrology (VIM), third ed., BIPM JCGM 200:2012, quantity 1.1. <http://www.bipm.org/en/publications/guides/vim.html> 03 December 2022.

- D. H. Krantz, R. D. Luce, P. Suppes, A. Tversky, Foundations of Measurement, Academic Press, New York, 1971, Vol. 1, page 3, 1.1.2, Counting of Units. This three volume work is the foundational text on representational measure.

- C. E. Shannon, The Mathematical Theory of Communication, University of Illinois Press, Urbana, IL, 1963, page 91, para. 9. Shannon describes the entropy change due to a linear transformation of coordinates.

- K. Krechmer, Relative measurement theory (RMT), Measurement, 116 (2018), pp. 77-82.

- BIPM, the intergovernmental organization through which governments act together on matters related to measurement science and measurement standards, SI base units. <https://www.bipm.org/en/measurement-units/si-base-units> 03 December 2022.

- A. Lyon, Why are Normal Distributions Normal?, The British Journal for the Philosophy of Science, 65 (2014), 621–649.

- E. Buckingham, On physically similar systems; illustrations of the use of dimensional equations, Physical Review, Vol. IV, No. 4, pages 345-376, 1914.

- J. S. Bell, Speakable and Unspeakable in Quantum Mechanics, Cambridge University Press, Cambridge, England, 1987, page 20, On the EPR paradox.

- G. Sulyok, S. Sponar, J. Erhart, G. Badurek, M. Ozawa and Y. Hasegawa, Violation of Heisenberg’s error disturbance uncertainty relation in neutron-spin measurements, Physical Review A, 88, 022110 (2013).

- W. Heisenberg, p. 64. “Let q1 be the precision with which the value of q is known (q1 is, say, the mean error of q), therefore here the wavelength of light”.

- C. E. Shannon, The Mathematical Theory of Communication.

- R. Feynman, The Feynman Lectures on Physics, Addison-Wesley Publishing Co., Reading, MA, 1966, page 1.1–1.8.

- J. von Neumann, Mathematical Foundations of Quantum Mechanics, Princeton University Press, Princeton NJ, USA, 1955, page 351, Process 1.

- N. Campbell, Foundations of Science, Dover Publications, New York, NY, 1957, page 454.

- J. von Neumann, Mathematical Foundations, page 221.

- D. H. Krantz, Foundations of Measurement.

- Ibid., page 3.

- Ibid., page 32. “The construction and calibration of measuring devices is a major activity, but it lies rather far from the sorts of qualitative theories we examine here”.

- Ibid., Section 1.5.1.

- K. Krechmer, Measurement Unification, Measurement, Vol. 182, September 2021.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).