Introduction

Motivation is a driving force of human achievement. It has been shown to positively influence the academic performance, study strategies, and mental health of students. [1] If students are stripped of motivation, e.g., when a student gets hold of an exam key, there is no extrinsic reward for putting time and effort into studying the material. Expectancy-value theory is a psychological model that suggests that an individual’s motivation is affected by the expectancy of success at a task and the value that the individual assigns to the outcome. [2]

College is academically challenging even for the most gifted high school students with strong GPAs, experience in AP classes, and high ACT/SAT scores. The transition from high school to university often exposes gaps between students’ expectations and the realities of university life. [3] Expectancy-value theory comes into play when students believe that their prior experience and study habits are sufficient and that extra preparation will have no effect on their grades. [4] When this happens, students’ motivation diminishes, and grade outcomes are poor. [5]

However, failure often needs to occur before success can begin. Psychologist K. Anders Ericsson identified the importance of deliberate practice, that is, practice focused on specific elements that lead to expertise. To achieve success after failure, deliberate reflection that investigates and evaluates the root of the failure is needed to shape future actions. [6] For example, students who did not achieve their desired grade on an exam can reflect on their study habits so that later assessments in the course meet their target grade.

This article focuses on an observational analysis of college freshmen who took an entry-level mechanical engineering course titled mechanical engineering problem solving with computer application. The students were tested on introductory statics and mechanics topics in exams that are referred to here as the static body exam and the mechanics of materials exam, respectively. During the semester, they were also assigned assessments that used these topics in programs that computed forces and stresses through GUIs. It is hypothesized that the poor results of the first exam motivated students to achieve their desired grade outcome.

Materials and Methods

The first exam tested students’ ability to solve for static body forces using moments and free body diagrams. Students were provided with schematics that detailed the angles and forces applied to the bodies. They were asked to compute reaction forces at supports as well as resultant forces with direction. To solve the problems, students had to sum forces, take moments, and apply relevant trigonometric relations such as the law of cosines or the law of sines. The static body exam covered both topics that should have been covered in high school physics and trigonometry and new static body material. Students were given 50 minutes to solve three problems.
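The kind of calculation described above can be illustrated with a short sketch. The exam problems themselves are not reproduced in this article, so the beam, load, and dimensions below are hypothetical values chosen only to show how summing moments and summing forces yields the support reactions of a simply supported beam.

```python
def support_reactions(span, loads):
    """Vertical reactions (r_a, r_b) of a simply supported beam.

    span  -- distance between support A (x = 0) and support B (x = span)
    loads -- list of (magnitude, position) pairs for downward point loads
    """
    # Sum of moments about A equals zero, which gives the reaction at B.
    moment_about_a = sum(p * x for p, x in loads)
    r_b = moment_about_a / span
    # Sum of vertical forces equals zero, which gives the reaction at A.
    r_a = sum(p for p, _ in loads) - r_b
    return r_a, r_b


# Hypothetical example: a 100 N load placed 1 m from A on a 4 m span.
r_a, r_b = support_reactions(4.0, [(100.0, 1.0)])
print(r_a, r_b)
```

The two equilibrium equations are applied in the same order a student would use on paper: moments first to isolate one unknown, then the force balance for the other.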

The second exam in the mechanical engineering problem solving with computer application course tested new mechanics of materials topics such as factor of safety, shear stress, and tensile stress. As in the static body exam, the mechanical systems had forces applied to a static body, and the problems tested students’ knowledge of material stress equations and factors of safety. The problems required students to understand how the applied force affects the resulting force on specific supports. They also made use of free body diagrams and taking moments about points, which had been covered in the static body exam. The mechanics of materials exam was taken in a 50-minute period and had three problems.

The engineering approach assessment tested students’ knowledge of static bodies and mechanics of materials, continuing the material assessed in the exams. This project assessed students’ ability to approach multi-faceted engineering problems and draw conclusions from computational results. The assessment asked students to create four designs and evaluate their factors of safety under the worst-case loading conditions of a bridge repair system. They were given a set of material and loading requirements and tasked with computing the tensile stress and factors of safety of the cables in their bridge repair system designs. Following their computations, they were asked to analyze their results and recommend which design should be used.
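As a rough illustration of the computation described above, the sketch below evaluates the tensile stress and factor of safety of a round cable. The load, yield strength, and cable diameters are hypothetical, since the assignment's actual material and loading requirements are not given here, and the factor of safety is assumed to be the ratio of yield strength to working stress.

```python
import math


def cable_tensile_stress(force_n, diameter_m):
    """Axial tensile stress (Pa) in a solid round cable."""
    area = math.pi * diameter_m ** 2 / 4.0
    return force_n / area


def factor_of_safety(strength_pa, working_stress_pa):
    """Ratio of material strength to the stress actually carried."""
    return strength_pa / working_stress_pa


# Hypothetical worst-case load and material strength (not from the course).
load_n = 50_000.0
yield_strength_pa = 250e6

# Four hypothetical candidate designs, differing only in cable diameter.
for diameter_m in (0.010, 0.015, 0.020, 0.025):
    sigma = cable_tensile_stress(load_n, diameter_m)
    fos = factor_of_safety(yield_strength_pa, sigma)
    print(f"d = {diameter_m * 1000:.0f} mm: stress = {sigma / 1e6:.1f} MPa, FoS = {fos:.2f}")

fos_20mm = factor_of_safety(yield_strength_pa, cable_tensile_stress(load_n, 0.020))
```

A design with a factor of safety below 1 would be expected to yield under the worst-case load, so a comparison like this gives a simple basis for recommending one design over another.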

Throughout the course, students used Matlab to solve computer application assessments. The GUI assessment introduced the power of computer applications in engineering. Students were assessed on their understanding of how to implement a GUI to execute functions. They were presented with a scenario of a truck that remained in equilibrium while forces were applied to it. They were asked to use their knowledge of introductory physics and statics to find the resultant force on the vehicle when force is applied to the truck engine. This involved summing forces in the x and y directions to yield a resultant force. Students then modeled the engineering application by writing a Matlab script that outputs the truck’s resultant force after a GUI prompts the user for the drag, mass, and wind force on the truck.
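The component-summing step can be sketched in a few lines. The students worked in Matlab with a GUI; the version below is written in Python without a GUI, and it uses generic hypothetical forces rather than the truck model from the assignment, whose exact geometry is not described here.

```python
import math


def resultant(forces):
    """Resultant of planar forces.

    forces -- list of (magnitude, angle_deg) pairs, with angles measured
              counterclockwise from the positive x axis
    Returns (magnitude, direction_deg).
    """
    # Sum the x and y components of every force.
    fx = sum(f * math.cos(math.radians(a)) for f, a in forces)
    fy = sum(f * math.sin(math.radians(a)) for f, a in forces)
    # Combine the component sums into a magnitude and a direction.
    return math.hypot(fx, fy), math.degrees(math.atan2(fy, fx))


# Hypothetical example: 3 N along +x and 4 N along +y.
magnitude, direction = resultant([(3.0, 0.0), (4.0, 90.0)])
print(magnitude, direction)
```

In a GUI version, the hard-coded list would simply be replaced by values collected from the user's input fields.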

The control structure assessment introduced students to control statements and plots. They were tasked with asking the user for the inputs of a linear equation and outputting the x-intercept. To complete it, students relied on their knowledge of basic algebra while integrating stepwise control statements to identify the x-intercept. The project assessed students’ understanding of for loops and plot generation. The nested function assessment required a similar mathematical background, this time using a quadratic equation and finding its roots. Students used menus to collect the user’s values for the quadratic equation and output the plot and data. Correct scripts combined if statements with while loops, for loops, and plots. The nested function assessment tested students’ ability to add if statements to their prior knowledge of while loops and GUIs.
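The mathematics behind these two assessments can be sketched as follows. This is not the students' solution: it uses closed-form expressions in Python rather than the stepwise loops, menus, and plots of the Matlab assignments, and the function names are hypothetical. It does show the kind of branching with if statements that the assessments targeted.

```python
import math


def x_intercept(slope, intercept):
    """x-intercept of y = slope * x + intercept, or None for a horizontal line."""
    if slope == 0:
        return None
    return -intercept / slope


def quadratic_roots(a, b, c):
    """Real roots of a*x**2 + b*x + c = 0 as a tuple (empty if none)."""
    if a == 0:
        raise ValueError("a must be nonzero for a quadratic equation")
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        return ()                      # no real roots
    if discriminant == 0:
        return (-b / (2 * a),)         # one repeated root
    root = math.sqrt(discriminant)
    return ((-b - root) / (2 * a), (-b + root) / (2 * a))


print(x_intercept(2.0, -4.0))
print(quadratic_roots(1.0, -3.0, 2.0))
```

Each branch on the discriminant corresponds to a distinct case a student's script would need to handle before plotting.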

Finally, the computer application to mechanics assessment solved for stress, strain, and material response based on user inputs and their units. The assignment required students to create functions that solve for the stress, strain, and response and that are then called by the main function. Students relied on their mechanics of materials knowledge to obtain correct results. They also had to create separate control statements depending on the user’s unit input. This assignment was the final test of students’ knowledge of Matlab and mechanics of materials.
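A minimal sketch of this structure is shown below: small functions for stress and strain, called from a main function that first branches on the user's units. The unit labels, function names, and input values are assumptions made for illustration, and the "material response" part of the assignment is left out because the article does not define it.

```python
# Conversion factors to SI units; the supported unit labels are hypothetical.
FORCE_TO_N = {"N": 1.0, "kN": 1e3, "lbf": 4.4482216152605}
AREA_TO_M2 = {"m2": 1.0, "mm2": 1e-6, "in2": 0.00064516}


def stress(force_n, area_m2):
    """Normal stress in Pa."""
    return force_n / area_m2


def strain(elongation, original_length):
    """Engineering strain (dimensionless); both lengths share one unit."""
    return elongation / original_length


def main(force, force_unit, area, area_unit, elongation, original_length):
    """Convert the inputs to SI based on their units, then call the helpers."""
    if force_unit not in FORCE_TO_N:
        raise ValueError(f"unsupported force unit: {force_unit}")
    if area_unit not in AREA_TO_M2:
        raise ValueError(f"unsupported area unit: {area_unit}")
    force_n = force * FORCE_TO_N[force_unit]
    area_m2 = area * AREA_TO_M2[area_unit]
    return stress(force_n, area_m2), strain(elongation, original_length)


# Hypothetical inputs: 10 kN on 100 mm^2, stretching a 1000 mm bar by 0.5 mm.
sigma, epsilon = main(10.0, "kN", 100.0, "mm2", 0.5, 1000.0)
print(sigma, epsilon)
```

Using lookup tables for the conversions keeps the unit handling in one place; a chain of if and elseif statements, as the assignment appears to have required, would achieve the same result.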

The observational analysis of the course focused on the assignments that were directly related to statics and mechanics of materials principles. The assignments analyzed against the static body exam scores were the mechanics of materials exam and the engineering approach, GUI, control structures, nested functions, and computer application to mechanics assessments. Students who did poorly on the static body exam, scoring below the required passing score (60%), were tracked through these assignments to compile data for the study. The data were then analyzed to see whether there had been any effect on student performance.
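The tracking procedure can be sketched as a simple grouping step. The scores below are invented for illustration and are not the study data. The sketch also makes two assumptions that the text does not state explicitly: that a score of exactly 60 counts as passing, and that improvement is measured as the difference between the two exam scores in percentage points.

```python
from collections import defaultdict


def grade_band(first_exam_score):
    """Assign a first-exam score (in percent) to a tracking band."""
    if first_exam_score <= 20:
        return "0-20"
    if first_exam_score <= 40:
        return "21-40"
    if first_exam_score < 60:      # assumption: exactly 60 is a passing score
        return "41-60"
    return "passing"


def mean_improvement_by_band(records):
    """Average (second exam - first exam) per band.

    records -- list of (first_exam, second_exam) score pairs in percent
    """
    gains = defaultdict(list)
    for first, second in records:
        gains[grade_band(first)].append(second - first)
    return {band: sum(values) / len(values) for band, values in gains.items()}


# Invented scores, for illustration only.
records = [(15, 55), (18, 62), (35, 70), (55, 85), (75, 90)]
improvement = mean_improvement_by_band(records)
print(improvement)
```

The same grouping could be repeated for each tracked assessment by swapping the second value in each pair.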

Results and Discussion

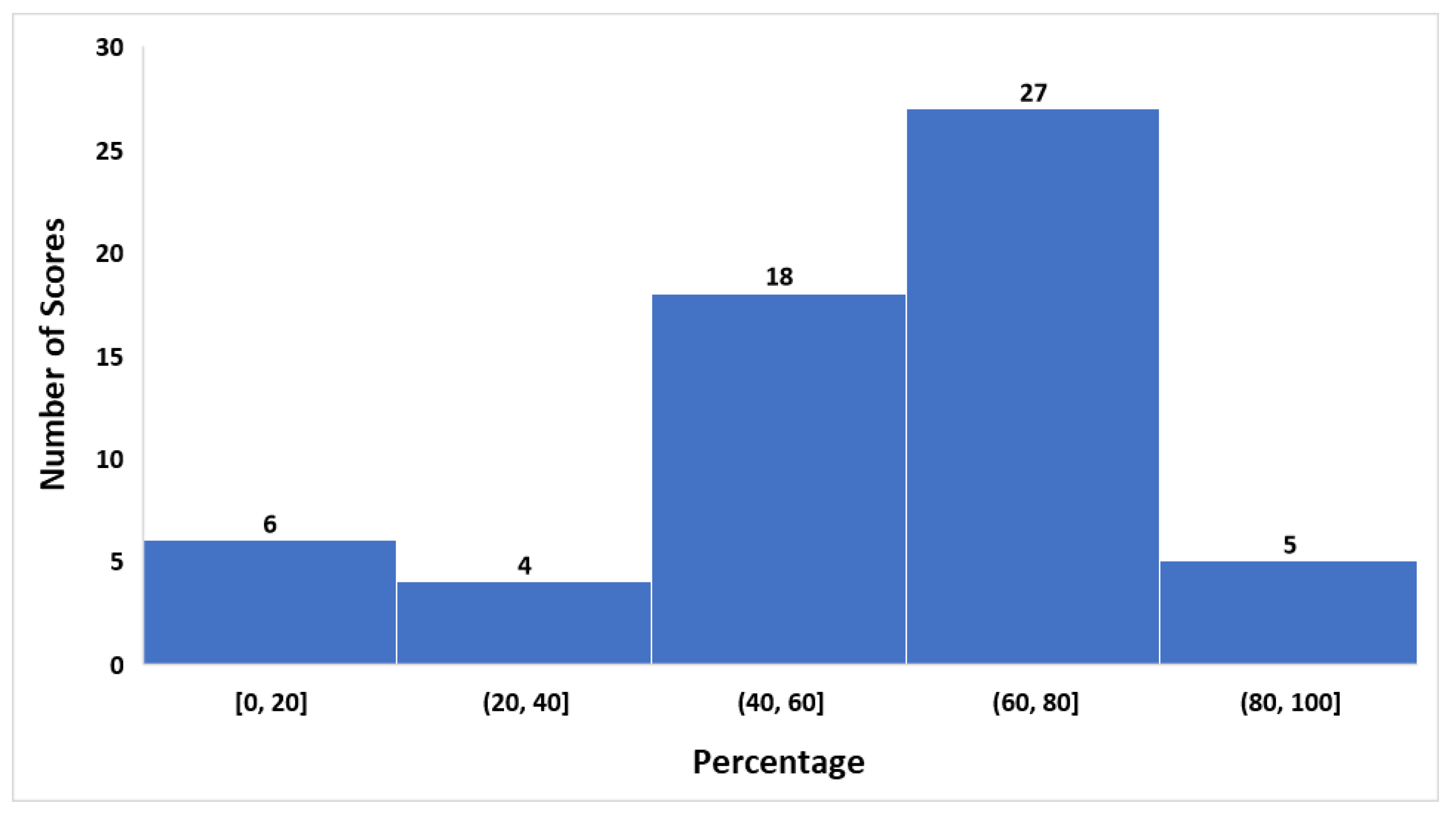

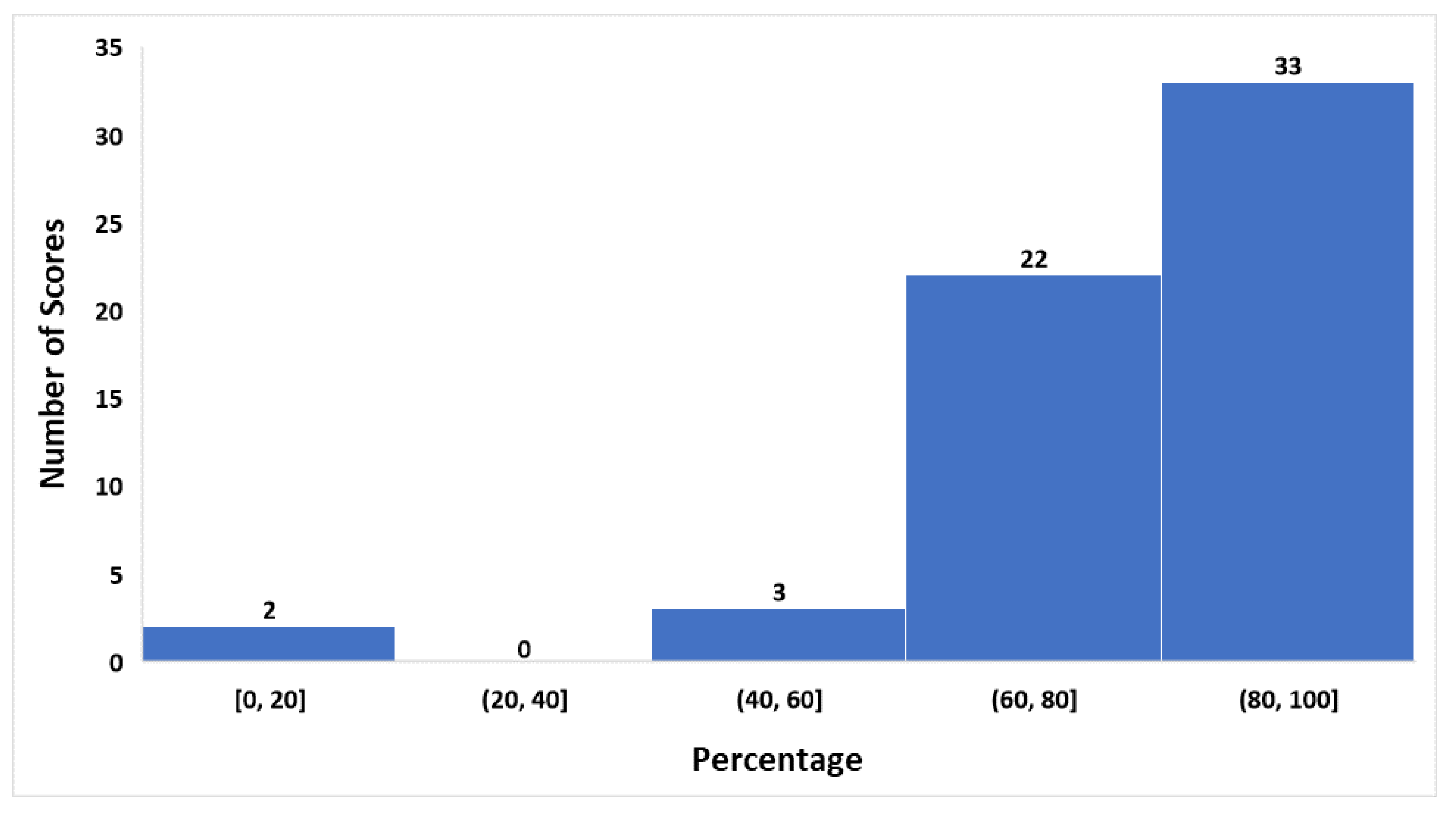

The fall 2022 mechanical engineering problem solving with computer application course focused on preparing students for future statics and mechanics courses that are part of the core engineering curriculum at Iowa State University. The first exam, which focused on introductory statics material, showed disappointing results, with the exam average falling below passing at 58.075%. A breakdown of the statics exam scores is shown in Figure 1. The statistics show that nearly half of the class did not receive a passing grade. Table 1 details the grade breakdown of the exam, showing that 28 of the 60 students did not pass.

Following the first statics exam, students were placed in grade bands to track their results for the remainder of the course. The highlighted assignments, which focused on preparing students for future engineering coursework, are detailed above and were analyzed relative to the first exam taken in the course. Scores on the remaining coursework increased dramatically. The average of the second exam, on mechanics of materials, showed great progress in student preparation and performance.

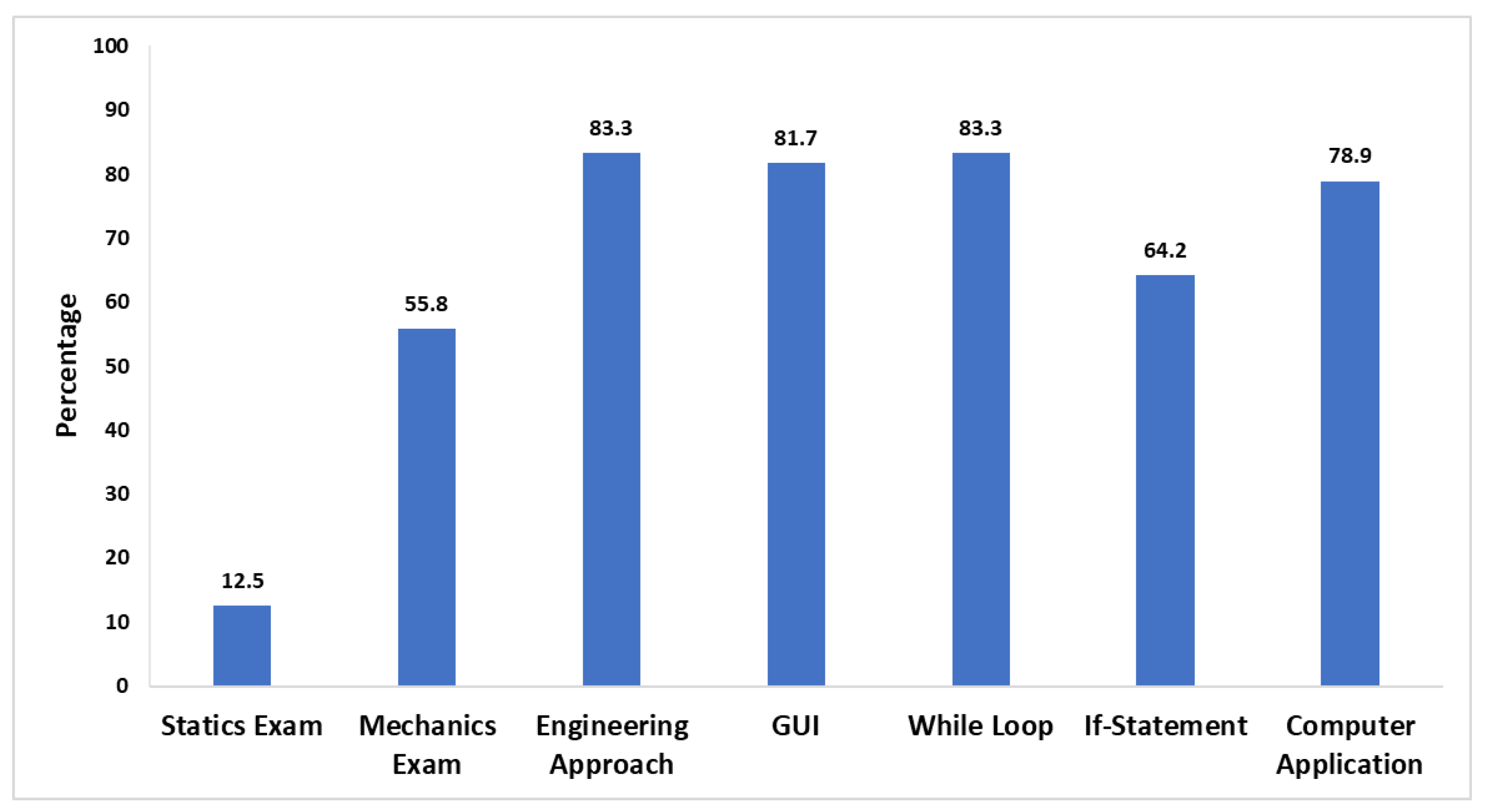

Figure 2 below details grade improvement in students who received a score between 0 and 20 percent.

The results above display students receiving a grade between 0 to 20 percent on the first statics exam increased their second exam score by 43.333% on average, however still averaging an exam grade that does not pass the course. The remaining assessments increased significantly too, with all following assessments reaching higher than a passing grade. The next grade band from the first statics exam, 21 to 40 percent, was tracked and detailed in

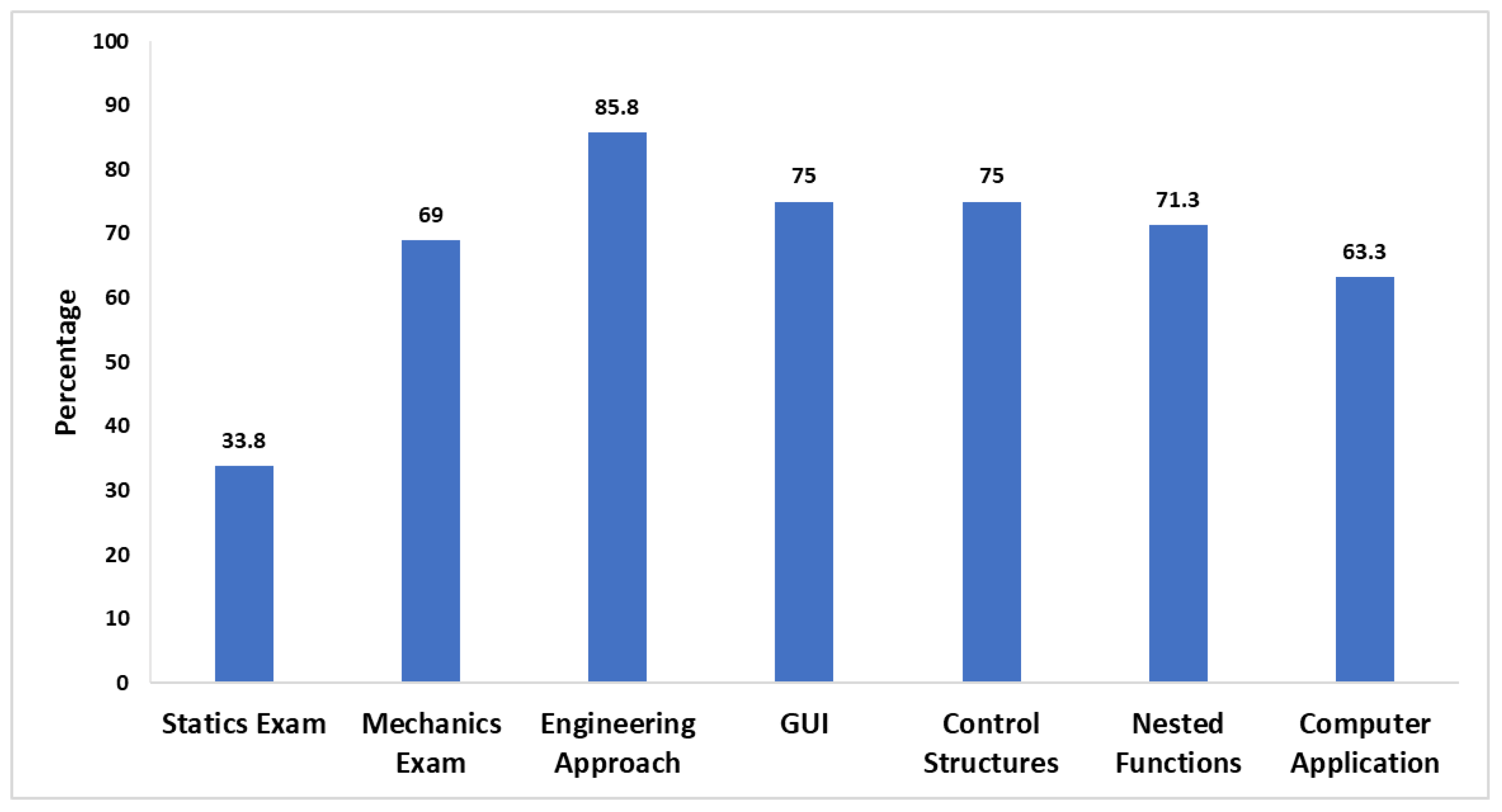

Figure 3.

Results from this band are similar to the previous figure with noted increases in the second mechanics exam yielding positive grade increases. The increase on average was 35.25% for the second mechanics exam. The assessment grades also improved however the averages were skewed by a student in the band not completing the final assessments, the nested functions and computer application. The next and final grade band that did not receive a passing score from the first statics exam, 41 to 60 percent, was tracked and detailed in

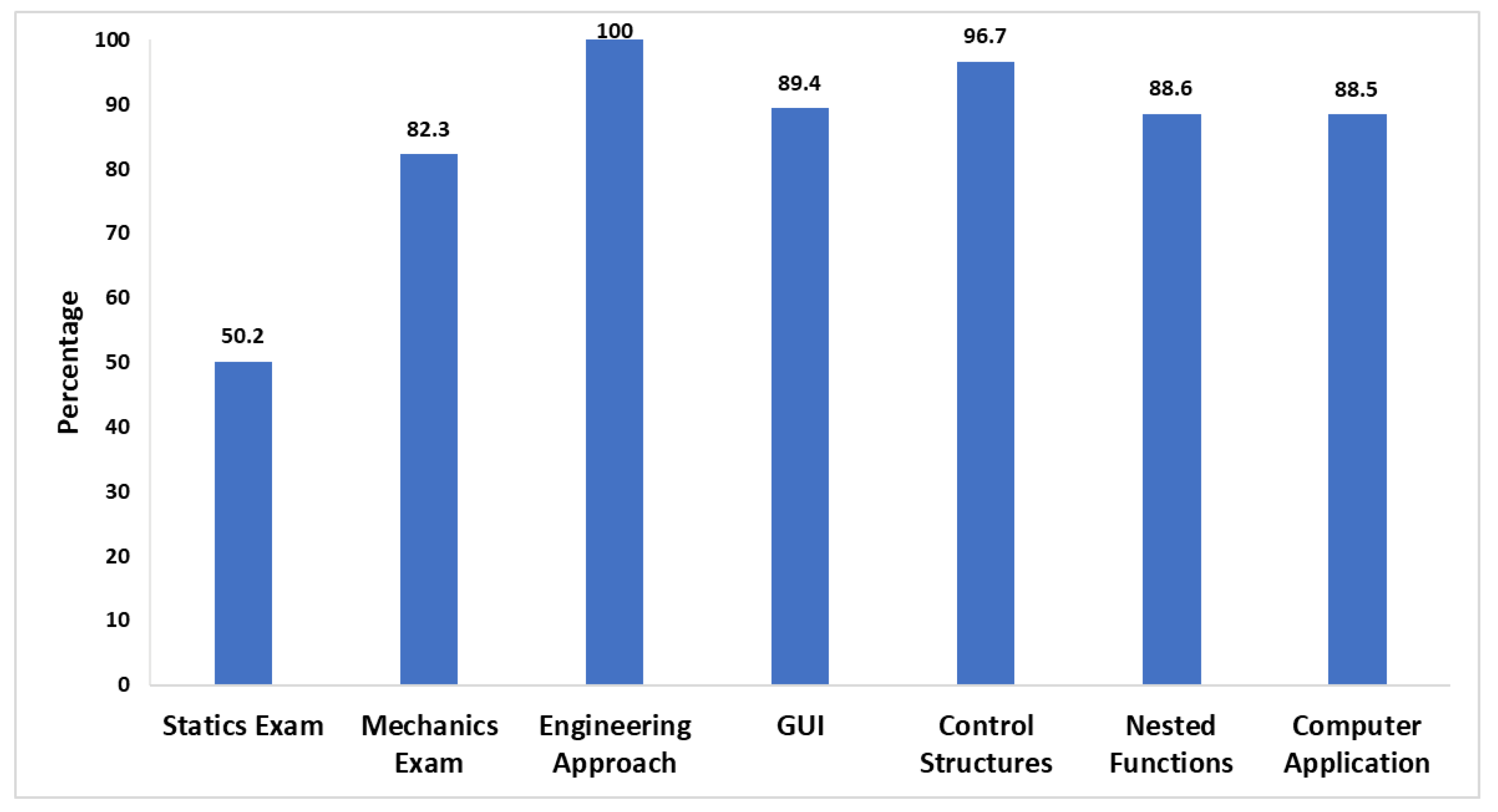

Figure 4.

Figure 4 tells a similar story. Students who received a failing grade in the range of 41 to 60 percent significantly increased their grades on the remaining curriculum-focused work, by 30.0555% on average for the second mechanics exam. Their assessment grades increased as well, averaging 92.64% across the remaining assessments.

Overall, students who faced failing grades on the first statics exam did significantly better in the rest of the course. The second mechanics exam results were higher across the board, with the class average of 82.74% being roughly 1.4 times the first statics exam average. The score and grade distribution of the exam is broken down in Figure 5 and Table 2.

The results of this exam were accompanied by an increase in student engagement with the teaching assistant. By his account, students began coming to office hours only after the first exam was taken. In preparation for the second mechanics exam, students asked him for extra practice material, and he supplied textbook problems to study from. The uptick in exam scores and student engagement supports the hypothesis that students were alarmed by the first exam scores and took the course more seriously; however, it is important to address confounding variables.

First, students entering Iowa State University’s mechanical engineering department are placed into learning communities to gain familiarity with the students they will be taking classes with until graduation. These communities often become a vital source of academic improvement because they group together students with the same professors and class load. Students use these communities to form study groups, which can be highly correlated with growth in academic performance. [7,8] The community meets once a week, and by the time the second exam was taken, students were likely more comfortable reaching out to one another outside of class. This is consistent with the hypothesis that the first statics exam startled students into preparing better, but it also blurs the connection between the statics exam and students’ later performance, because building relationships helps students succeed regardless of what occurred on the first exam.

The second confounding variable is that the material in the remaining assessments and exams did not build directly on the first statics exam but introduced other engineering topics. Statics and mechanics are closely related; however, students can perform well in mechanics with a baseline knowledge of statics. In the second mechanics exam, students needed to rely on basic concepts such as summing forces to find a resultant, but they did not need to master the material from the first exam to do well. [9] The assessments tell a similar story: students needed the underlying knowledge, but the assessments were computer applications and were graded as such. Even though the topics were different, students who learned how to think about and approach an engineering problem in one topic should have been able to approach the rest of the coursework similarly. This negates the significance of the change in material, but the variable still needs to be addressed for a full picture of class performance.

The final confounding variable is that both the students and the professor adapted to the course. Many students’ experience of high school is of a teaching environment where they acquire facts and skills, whereas college requires students to take responsibility for thinking through and applying what they have learned. [10,11] This tends to be difficult for college freshmen, but as the course continued and students built relationships in their communities, their learning styles adjusted and their exam performance rose. The professor also adjusted the course to benefit students’ learning. Following the first statics exam, the professor analyzed the results and took time to discuss with students the material they wanted to spend more time on. Understanding what students needed help with closed gaps in knowledge after the first exam, which promoted success with later material.

Conclusions

The results of the class support the hypothesis that the poor results of the first exam motivated students to achieve their desired grade outcome. Students who received a grade between 0 and 20 percent on the first statics exam improved significantly on the second mechanics of materials exam, by 43.333% on average. This trend continued for students who scored 21 to 40 percent and 41 to 60 percent, who improved on average by 35.25% and 30.055%, respectively. Assessment scores jumped across the class, with a class average of 91.095% on the assessments and each grade band scoring higher than on the first statics exam. The scores point toward the hypothesis that students were motivated to significantly improve their performance; however, the confounding variables must be taken into account for a full picture.

The variables that affected students’ performance involved changes both outside and inside the classroom. Students in the learning community had the support of their classmates when preparing for exams and assessments. They were able to reach out to others for help, which improved their academic performance. The slightly different material also had an effect on scores. Students could improve their scores on the remaining material without mastering the topics of the first statics exam. Although the material did not build directly on the statics exam, students who learned how to think about and approach an engineering problem in the first topic were able to approach the rest of the coursework successfully, which negates the effect of the change in material. Finally, adapting the course to fit the needs of all may have been critical to success in the rest of the course. Students entering college have often been taught facts and skills without needing to take responsibility for thinking through and applying their knowledge. As the semester continued, the students and the professor were able to adjust their ways of learning and teaching to make the semester as successful as possible for each party. For the professor, this entailed analyzing the strengths and weaknesses of the class’s performance on the first exam and devoting extra time to improving students’ learning. For the students, it involved taking responsibility for their learning. The study could be improved to reduce the influence of confounding variables. It is difficult to isolate the effect without an entrance and exit exam. The study could also be improved by asking students who did poorly on the first exam what factors influenced their performance for the rest of the semester.

Ultimately, the poor performance on the first statics exam set the tone for the course. Students realized that they needed to approach the class more seriously in order to achieve their desired grade. Following the first statics exam, attendance at office hours increased, with students asking for extra preparation material. In combination with the internal and external factors, students dedicated more effort to achieving success. Since the grades increased dramatically, the hypothesis that students were motivated to achieve their desired grade by a reality check on the first statics exam is accepted.

Author Contributions

Conceptualization, N.N.H.; methodology, C.T.B. and N.N.H.; validation, C.T.B. and N.N.H.; formal analysis, C.T.B. and N.N.H.; investigation, C.T.B. and N.N.H; resources, N.N.H.; data curation, C.T.B.; writing—original draft preparation, C.T.B.; writing—review and editing, C.T.B. and N.N.H.; visualization, C.T.B.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kusurkar, R.A.; Ten Cate, T.J.; Vos, C.M.; Westers, P.; Croiset, G. How motivation affects academic performance: a structural equation modelling analysis. Advances in Health Sciences Education: Theory and Practice 2013, 18, 57–69.

- Rowley, C.; Harry, W. (2014, March 27). Introduction: HRM context, development and scope. Managing People Globally. Retrieved January 8, 2023, from https://www.sciencedirect.com/science/article/pii/B9781843342236500018.

- Hassel, S.; Ridout, N. (2017, December 7). An investigation of first-year students’ and lecturers’ expectations of university education. Frontiers. Retrieved January 8, 2023, from https://www.frontiersin.org/articles/10.3389/fpsyg.2017.02218/full.

- Rowley, C.; Harry, W. (2014, March 27). Conclusion. Managing People Globally. Retrieved January 8, 2023, from https://www.sciencedirect.com/science/article/pii/B9781843342236500067.

- Rosenzweig, E.Q.; Wigfield, A.; Eccles, J.S. (2019, February 15). Expectancy-value theory and its relevance for student motivation and learning (Chapter 24). The Cambridge Handbook of Motivation and Learning. Cambridge Core. Retrieved January 8, 2023, from https://www.cambridge.org/core/books/abs/cambridge-handbook-of-motivation-and-learning/expectancyvalue-theory-and-its-relevance-for-student-motivation-and-learning/3BED2523308E8BBC6BC1C6F359628BC2.

- Laksov, K.B.; McGrath, C. (2020, February 10). Failure as a catalyst for learning: Towards deliberate reflection in academic development work. Taylor & Francis. Retrieved January 8, 2023, from https://www.tandfonline.com/doi/full/10.1080/1360144X.2020.1717783.

- Johnson, M.D.; Margell, S.T.; Goldenberg, K.; Palomera, R.; Sprowles, A.E. (2022). Impact of a First-Year Place-Based Learning Community on STEM Students’ Academic Achievement in their Second, Third, and Fourth Years. Innovative Higher Education.

- Dean, S.R.; Dailey, S.L. Understanding Students’ Experiences in a STEM Living-Learning Community. Journal of College and University Student Housing 2020, 46, 28–44.

- Smith, N.A.; Myose, R.Y.; Raza, S.J.; Rollins, E. (2020). Correlating Mechanics of Materials Student Performance with Scores of a Test over Prerequisite Material. ASEE North Midwest Section Annual Conference 2020 Publications. 10. https://openprairie.sdstate.edu/asee_nmws_2020_pubs/10.

- Worsley, J.D.; Harrison, P.; Corcoran, R. Bridging the Gap: Exploring the Unique Transition From Home, School or College Into University. Frontiers in Public Health 2021, 9.

- Richardson, A.; King, S.; Garrett, R.; Wrench, A. Thriving or just surviving? Exploring student strategies for a smoother transition to university. A practice report. The International Journal of the First Year in Higher Education 2012, 3, 87–93.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).