1. Introduction

The modern era is characterized by rapid technological development, which has produced a new global economy in which the most critical asset is data. The era of "big data" gave rise to the need to analyze this data and extract the valuable hidden knowledge it contains [1]. More generally, maximizing the output of production processes in sectors such as construction, especially as promoted by the Industry 4.0 standard, requires the widespread use of cyber-physical systems that monitor and supervise physical processes and take optimal decisions autonomously and in a decentralized manner [2].

The decisions in question are based on information collection and analysis procedures, which come from the continuous flow of data, giving an increasingly accurate picture of the system's effectiveness in production processes. This fact implies requirements for constant collection and analysis of large-scale data from heterogeneous sources.

Visualizing information and diagnosing whether it is accurate, incomplete or inaccurate (veracity), and thereby determining its final value, is a highly complex and demanding process, especially when real-time decision-making is required [3]. Large-scale data is data that grows rapidly as information arrives from multiple sources at high speed (velocity), which implies a change in the ways this data is collected and stored (volume). It also includes various unstructured or semi-structured data forms, which are characterized by variability, as they change meaning or status over time and with the environment in which they are found.

Intelligent large-scale data analysis systems based on artificial intelligence methods have the potential to provide machine-readable formats suitable for handling complex tasks by demonstrating logic, experiential learning and optimal decision-making capabilities without human intervention.

Artificial intelligence is an umbrella term to describe a machine's ability to mimic human cognitive functions, such as problem-solving, pattern recognition, learning, and adapting to a dynamic, ever-changing environment. Computational Intelligence (CI) is the primary subdivision of Artificial Intelligence (AI) that deals with the theory, design, implementation, and development of physiologically and linguistically inspired computational paradigms.

2. Computational Intelligence

CI is a developing area that, in addition to its three major parts (neural networks, fuzzy systems, and evolutionary computation), includes computing paradigms such as ambient intelligence, artificial life, social learning, artificial immune systems, social reasoning, and artificial hormone networks. CI is critical in creating effective, intelligent systems, such as cognitive developmental systems. A subset of CI is Machine Learning (ML), including Deep Learning (DL), which uses algorithmic techniques to enable information systems to learn from data without being explicitly programmed. CI is at the heart of some of the most effective AI systems, as there has been a surge in research on DL, which has been the primary approach to AI in recent years. The ability of these systems improves continually as they receive more data, which requires the continuous collection of information from each production stage in order to examine both the current and the historical state of the processes being performed from multiple angles [4].

In CI, the concept of a function $f$, which maps each element of a set $X$ to a single element of a set $Y$, is of fundamental importance, because it can be implemented in practice and yields tangible results. Given an input datum to a system that implements a function, one and only one output datum is mapped to it. Under this view, the goal of a computational intelligence algorithm is to estimate a function $f: X \to Y$, where the domain $X$ consists of real numbers, while the codomain $Y$ can be either $\mathbb{R}$ in regression problems or a set of labels in classification problems.

Computing the function $f$ from a set of labelled pairs $(x_i, y_i)$, and then computing the value $f(x)$ for a new input $x$, is called supervised learning. The average classification error over the training set points can be measured by an error function [5, 6].
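A minimal sketch of such an average training error, assuming it is the mean of a per-point loss over the labelled pairs. The function names, the two loss functions and the threshold classifier are hypothetical and chosen only for illustration; they are not taken from the cited works.

```python
import numpy as np

def zero_one_loss(target, prediction):
    """Classification loss: 1 for a wrong label, 0 for a correct one."""
    return 0.0 if target == prediction else 1.0

def squared_loss(target, prediction):
    """Regression loss: squared difference between target and prediction."""
    return (target - prediction) ** 2

def average_training_error(predict, inputs, targets, loss):
    """Mean of loss(y_i, f(x_i)) over all training pairs (x_i, y_i)."""
    losses = [loss(y, predict(x)) for x, y in zip(inputs, targets)]
    return float(np.mean(losses))

# Hypothetical classifier: label 1 when the input is at least 0.5.
def threshold_classifier(x):
    return 1 if x >= 0.5 else 0

X_train = np.array([0.1, 0.4, 0.6, 0.9])
y_train = np.array([0, 1, 1, 1])
error = average_training_error(threshold_classifier, X_train, y_train, zero_one_loss)
print(f"average training error: {error:.2f}")
```

Swapping `zero_one_loss` for `squared_loss` gives the corresponding average error for a regression problem.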

In unsupervised learning, the algorithm looks for patterns in an input stream; that is, the training data has unknown classes, and the system makes predictions based on some distribution or some quantitative measure that evaluates and characterizes the similarity of the data to the respective groups. Formal descriptions of this kind of process are given in [7, 8].

One hybrid type of algorithm is semi-supervised learning, which searches for a decision boundary that has a maximum margin over the labelled data and also over the more general (unlabelled) data set. Separate loss functions are defined for the labelled and the unlabelled data, and the algorithm calculates the function $f$ by minimizing the regularized empirical risk [4, 9].
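One common formulation that matches this description is the semi-supervised support vector machine. The expression below is a sketch under that assumption, not a reconstruction of the original equation: it assumes $l$ labelled points with labels $y_i \in \{-1, +1\}$, $u$ unlabelled points, a hinge loss on the labelled data, a "hat" loss on the unlabelled data, and two illustrative weighting constants $\lambda_1$ and $\lambda_2$.

$$\min_{f} \; \sum_{i=1}^{l} \max\bigl(1 - y_i f(x_i),\, 0\bigr) + \lambda_1 \lVert f \rVert^{2} + \lambda_2 \sum_{j=l+1}^{l+u} \max\bigl(1 - \lvert f(x_j) \rvert,\, 0\bigr)$$

The first sum penalizes labelled points that fall inside the margin or on the wrong side of the boundary, and the last sum penalizes unlabelled points that lie close to the boundary regardless of side.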

In On-Line/Sequential Learning, the available data is delivered progressively in sequential order and used for training and prediction, with the error computed at each iteration. This approach aims to reduce the cumulative error over all iterations [4, 10].
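The sequential scheme can be illustrated with a short sketch. It assumes a linear model, a squared error per round and a plain gradient step, none of which are specified in the text; the function name, the learning rate and the synthetic data stream are hypothetical.

```python
import numpy as np

def online_linear_regression(stream, n_features, learning_rate=0.1):
    """Process (x, y) pairs one at a time and accumulate the per-round error."""
    weights = np.zeros(n_features)
    cumulative_error = 0.0
    for x, y in stream:
        prediction = float(weights @ x)        # predict before the label is used
        cumulative_error += (prediction - y) ** 2
        # Gradient step on the squared error of this single example.
        weights -= learning_rate * 2.0 * (prediction - y) * x
    return weights, cumulative_error

# Synthetic stream generated from a known linear rule (illustration only).
rng = np.random.default_rng(0)
true_weights = np.array([2.0, -1.0])
inputs = rng.uniform(-1.0, 1.0, size=(500, 2))
stream = [(x, float(true_weights @ x)) for x in inputs]

weights, cumulative_error = online_linear_regression(stream, n_features=2)
print(weights, cumulative_error)
```

Because each example is seen once and then discarded, the quantity being controlled is the sum of the errors made along the way rather than the error on a fixed training set.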

In reinforcement learning, the algorithm learns to make decisions based on rewards or punishments. The method accepts as input the states $s$ of the agent and maintains an action-state value function $Q(s, a)$ for each action $a$, so as to maximize the rewards and, correspondingly, minimize the punishments. The basic idea of the algorithm is the repeated update of these values until they are equivalent to the optimal ones, $Q^{*}(s, a)$.
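The repeated update can be sketched as follows, assuming the standard tabular Q-learning rule, which the surviving text does not spell out. The learning rate `alpha`, the discount factor `gamma`, their default values and the two-state example are illustrative assumptions.

```python
import numpy as np

def q_learning_update(Q, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the new value.

    Q[state, action] moves towards reward + gamma * max_a' Q[next_state, a'].
    """
    td_target = reward + gamma * np.max(Q[next_state])
    Q[state, action] += alpha * (td_target - Q[state, action])
    return Q[state, action]

# Hypothetical problem with 2 states and 2 actions, all values starting at zero.
Q = np.zeros((2, 2))
first_value = q_learning_update(Q, state=0, action=1, reward=1.0, next_state=1)
second_value = q_learning_update(Q, state=0, action=1, reward=1.0, next_state=1)
print(first_value, second_value)
```

Repeating the update over many observed transitions drives the table towards the optimal action values, provided every state-action pair keeps being visited.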

A basic goal of any learning process is an acceptable ability to generalize [4, 11].

The three primary foundations of CI have traditionally been Neural Networks, Fuzzy Systems, and Evolutionary Computation. However, several nature-inspired computer models have emerged throughout time.

2.1. Neural Networks

Neural networks are an attempt to simulate the human brain and, by extension, the central nervous system. They form an architecture built on the processing of information (stimuli), on communication between neurons through parallel and distributed processing, and on learning, recognition and inference capabilities that are integrated and carried out in real time [10, 12].

Neurons are the building blocks and nodes of neural networks. Each node receives a set of numerical inputs, either from other neurons or from the environment, performs a calculation based on these inputs, and produces an output; the nodes are organized into an input layer, one or more hidden layers, and an output layer. Each neuron multiplies its inputs by the matching synaptic weights and sums the results. This sum is fed as an argument to the activation function, which each node implements internally. The value the function returns for that argument is the neuron's output for the current inputs and weights [11, 13].

More specifically, neural networks are clusters of neurons that have transfer functions and are hierarchically structured according to the layers described above. They implement an $f$ function using various architectures depending on the intended effect. The numerical inputs $x_1, \dots, x_n$ are multiplied by the weights $w_1, \dots, w_n$, respectively, and then summed, taking into account the bias constant $w_0$, which is an additional weight of the artificial neuron. Therefore, the output ($\sigma$) is calculated as follows [11, 14, 15]:

$$\sigma = \varphi\left(\sum_{i=1}^{n} w_i x_i + w_0\right) = \varphi\left(\mathbf{w} \cdot \mathbf{x} + w_0\right)$$

where $\mathbf{w} \cdot \mathbf{x}$ represents the inner product of the input vector $\mathbf{x}$ of the artificial neuron and the weight vector $\mathbf{w}$. The weighted linear sum $z = \mathbf{w} \cdot \mathbf{x} + w_0$ of the neuron's inputs is then fed into a non-linear distortion component $\varphi$, called the Transfer Function. Some of the more popular functions $\varphi$ that have been proposed in the literature are presented below [4, 12]:

- Sigmoid: $\varphi(z) = \dfrac{1}{1 + e^{-z}}$

- ReLU: $\varphi(z) = \max(0, z)$
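The computation performed by a single artificial neuron can be sketched in a few lines: a weighted sum of the inputs plus a bias, passed through a transfer function. The function names and the example numbers are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    """Sigmoid transfer function: squashes z into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """ReLU transfer function: passes positive values, zeroes out the rest."""
    return np.maximum(0.0, z)

def neuron_output(inputs, weights, bias, transfer):
    """Weighted sum of the inputs plus bias, fed through the transfer function."""
    z = np.dot(weights, inputs) + bias
    return transfer(z)

x = np.array([1.0, 2.0])
w = np.array([0.5, -0.25])

# Weighted sum is 0.5 - 0.5 = 0 here, so the sigmoid sits at its midpoint.
out_sigmoid = neuron_output(x, w, bias=0.0, transfer=sigmoid)
# With a bias of -1 the weighted sum is negative, so ReLU suppresses it.
out_relu = neuron_output(x, w, bias=-1.0, transfer=relu)
print(out_sigmoid, out_relu)
```

A layer is simply many such neurons sharing the same inputs, which is why the weighted sums of a whole layer are usually written as one matrix-vector product.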

Depending on how the layers are interconnected and how the nodes communicate with each other, various architectures arise, the most important being the modern deep architectures that can solve particularly complex problems. Convolutional neural networks (CNN or ConvNet) are one of the most common forms of deep neural networks. A CNN convolves learnt features with the input data using 2D convolutional layers, which makes this architecture well suited to processing 2D data such as images. CNNs reduce the need for manual feature extraction, so the features used to classify images do not have to be identified by hand; the CNN extracts features directly from the images. The relevant features are not pre-trained; instead, they are learnt while the network trains on a set of images. Because of automatic feature extraction, deep learning models are very accurate for computer vision applications such as object classification [4, 12].
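To make the idea of convolving a filter with 2D input concrete, the sketch below slides a small kernel over an image and records the response at each position. It assumes no padding and a stride of one, and it computes the cross-correlation form that deep learning libraries commonly call convolution. The hand-written edge kernel is illustrative; in a CNN the kernel values are learnt during training.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kernel_h, kernel_w = kernel.shape
    out_h = image.shape[0] - kernel_h + 1
    out_w = image.shape[1] - kernel_w + 1
    output = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            window = image[i:i + kernel_h, j:j + kernel_w]
            output[i, j] = np.sum(window * kernel)
    return output

# A 4x4 image that is dark on the left half and bright on the right half.
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1]], dtype=float)

# Hand-written kernel that responds where intensity increases from left to right.
edge_kernel = np.array([[-1.0, 1.0]])

feature_map = conv2d_valid(image, edge_kernel)
print(feature_map)
```

The resulting feature map is non-zero only along the vertical edge, which is the kind of local feature that the first layers of a CNN tend to pick up.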

Figure 1. Example of a network with several convolutional layers. Filters are applied at varying resolutions to each training picture, and each convolved image's result serves as the next layer's input (https://www.mathworks.com/).


2.2. Fuzzy Systems

Fuzzy logic is a distinct form of intelligence related to decision-making methodology [16]. It is based on an extension of the concept of the classical binary set $A$, in which the relation "belongs to" ($\in$), expressed by a function $\chi_A$, is generalized so that, instead of two values, the function takes infinitely many values in the closed interval $[0, 1]$. In other words, it concerns the creation of a new fuzzy set $\tilde{A}$, where the transition from the elements of a universe $X$ that belong to the fuzzy set $\tilde{A}$ to the elements of $X$ that do not belong to $\tilde{A}$ is not abrupt but gradual, as is usually the case in reality [16, 17]. In this sense, the characteristic two-valued function $\chi_A$, which expresses a crisp set $A$ in the two-member domain $\{0, 1\}$, is included in the concept of the membership function $\mu_{\tilde{A}}$, which expresses a fuzzy set over the infinite range $[0, 1]$ and coincides with the characteristic function at its extreme values. Membership functions $\mu$ commonly take forms such as:

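- Trapezoid: $\mu(x; a, b, c, d) = \max\left(\min\left(\dfrac{x - a}{b - a},\; 1,\; \dfrac{d - x}{d - c}\right),\; 0\right)$, with $a < b \le c < d$

- Gauss: $\mu(x; c, \sigma) = \exp\left(-\dfrac{(x - c)^2}{2\sigma^2}\right)$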
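The two membership functions named above can be sketched as follows, assuming their usual textbook parameterizations (four breakpoints for the trapezoid, a centre and a width for the Gaussian). The parameter names and the "comfortable temperature" example are illustrative assumptions.

```python
import numpy as np

def trapezoid_membership(x, a, b, c, d):
    """Rises from 0 at a to 1 at b, stays at 1 until c, falls back to 0 at d."""
    x = np.asarray(x, dtype=float)
    rising = (x - a) / (b - a)
    falling = (d - x) / (d - c)
    return np.clip(np.minimum(np.minimum(rising, 1.0), falling), 0.0, 1.0)

def gaussian_membership(x, centre, width):
    """Bell-shaped membership that equals 1 at the centre."""
    x = np.asarray(x, dtype=float)
    return np.exp(-((x - centre) ** 2) / (2.0 * width ** 2))

# Degree to which each temperature (in degrees Celsius) counts as "comfortable".
temperatures = np.array([10.0, 17.5, 22.0, 30.0])
comfortable = trapezoid_membership(temperatures, a=15.0, b=20.0, c=25.0, d=30.0)
near_22 = gaussian_membership(temperatures, centre=22.0, width=3.0)
print(comfortable, near_22)
```

Unlike a crisp set, where 17.5 degrees would be either inside or outside, the trapezoid assigns it a partial degree of membership.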

Among fuzzy sets (sets whose elements have degrees of membership), it is possible to perform certain operations, such as [16, 18, 19]:

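- Fuzzy Conjunction: $\mu_{A \cap B}(x) = \min\left(\mu_A(x),\, \mu_B(x)\right)$

- Fuzzy Product: $\mu_{A \cdot B}(x) = \mu_A(x)\, \mu_B(x)$

- Fuzzy Complement: $\mu_{\bar{A}}(x) = 1 - \mu_A(x)$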
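The operations listed above act element by element on membership degrees, as the sketch below shows. It assumes the standard definitions (minimum for conjunction, algebraic product, and one minus the degree for the complement); the function names and sample degrees are illustrative.

```python
import numpy as np

def fuzzy_conjunction(mu_a, mu_b):
    """Intersection of two fuzzy sets: element-wise minimum of the degrees."""
    return np.minimum(mu_a, mu_b)

def fuzzy_product(mu_a, mu_b):
    """Algebraic product of two fuzzy sets: element-wise multiplication."""
    return mu_a * mu_b

def fuzzy_complement(mu_a):
    """Complement of a fuzzy set: one minus each membership degree."""
    return 1.0 - mu_a

# Membership degrees of three elements in two hypothetical fuzzy sets A and B.
mu_a = np.array([0.2, 0.7, 1.0])
mu_b = np.array([0.5, 0.4, 0.0])

print(fuzzy_conjunction(mu_a, mu_b))
print(fuzzy_product(mu_a, mu_b))
print(fuzzy_complement(mu_a))
```

When every degree is either 0 or 1, these operations reduce to the ordinary set intersection and complement.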

Fuzzy reasoning is the process of deriving fuzzy conclusions. It is based on three fundamental concepts of the theory of fuzzy logic [20, 21], namely fuzzy variables, inference rules and fuzzy relations, which can be combined through the process of composition with operations such as:

- Fuzzy Composition Max-Prod: $\mu_{R \circ S}(x, z) = \max_{y} \left[\mu_R(x, y)\, \mu_S(y, z)\right]$
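A max-prod composition of two fuzzy relations can be sketched as a matrix-like operation in which the sum of an ordinary matrix product is replaced by a maximum. The sketch assumes finite relations stored as arrays of membership degrees; the relation values are made up for illustration.

```python
import numpy as np

def max_prod_composition(R, S):
    """Compose fuzzy relations R (x by y) and S (y by z).

    For every pair (x, z), take the largest product R[x, y] * S[y, z]
    over all intermediate elements y.
    """
    products = R[:, :, None] * S[None, :, :]   # shape: (n_x, n_y, n_z)
    return products.max(axis=1)

# Hypothetical relations between two elements on each side.
R = np.array([[0.2, 0.8],
              [1.0, 0.5]])
S = np.array([[0.5, 1.0],
              [0.9, 0.3]])

composed = max_prod_composition(R, S)
print(composed)
```

Replacing the product inside the maximum with a minimum would give the max-min variant of the same composition.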

2.3. Evolutionary Computation

Evolutionary Systems [22, 23] are based on the Darwinian theory of natural selection, the mechanism through which evolution occurs, given that all life forms come from common ancestors and have been shaped over time. The mechanisms they use are inspired by the biological evolution of species, such as reproduction, mutation, recombination, natural selection and, ultimately, survival of the fittest. Technically, they belong to the family of systems that operate by trial and error and can be considered stochastic optimization methods. The characteristic that sets these systems apart, and makes them preferable to other classical optimization methods, is that they need little or no knowledge of the problem or function they are asked to solve. The solution methods do not depend on complex calculated parameters. These systems can evolve and adapt in a manner analogous to that of the organisms they imitate, which makes them an optimal solution in dynamic and rapidly changing environments [22, 24].

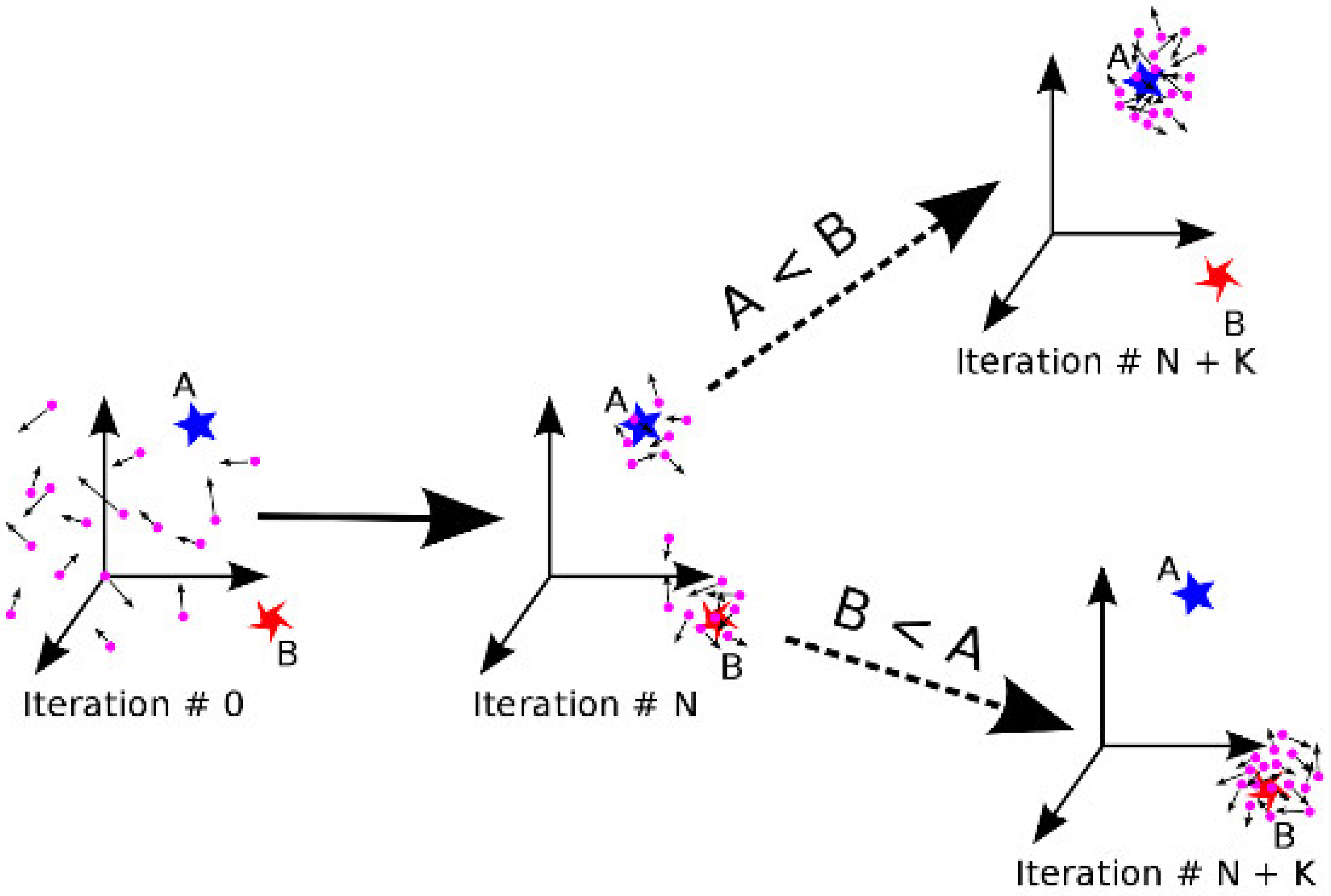

The Particle Swarm Optimization (PSO) algorithm [23, 25, 26] is a typical case of evolutionary algorithms. It is straightforward because it does not use crossover and mutation mechanisms, and it can be applied to many problems since it requires few parameters to be adjusted. It is also, in many cases, very fast, as it uses random real numbers and communication between the entities of a swarm. Specifically, PSO searches the space of an objective function by adjusting the trajectories of individual agents known as particles. These trajectories form piecewise, semi-stochastic paths. A swarm particle's motion is governed by a stochastic and a deterministic component. Each particle is drawn to the overall best location found by the swarm and to the best location it has encountered itself, while also tending to wander randomly. When a particle discovers a better location than the previous ones, it makes it the current best for particle $i$. There is a current best for all $n$ particles at each time $t$ throughout the iterations. The aim is to find the overall best position until it can no longer be improved.

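Let $x_i$ and $v_i$ be the position and velocity of particle $i$, respectively. The following formula gives the new velocity vector [25, 26]:

$$v_i^{t+1} = v_i^{t} + \alpha \epsilon_1 \left(g^{*} - x_i^{t}\right) + \beta \epsilon_2 \left(x_i^{*} - x_i^{t}\right)$$

where $v_i^{t}$ is the velocity of the particle, $\epsilon_1$ and $\epsilon_2$ are independent random numbers, $\alpha$ and $\beta$ are learning parameters, $x_i^{*}$ is the locally optimal solution and $g^{*}$ is the overall optimal solution.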

The PSO algorithm updates each particle's velocity and then adds the velocity to the particle's position. This update is determined by the best position found by the particle itself and the best one discovered by the whole population of particles. If a particle's best solution is better than the population's, it replaces the population's best. The starting positions of all particles are distributed uniformly so that they sample most of the search space. An entity's initial velocity vector can also be set to zero. The new position is given by the equation below [26]:

$$x_i^{t+1} = x_i^{t} + v_i^{t+1}$$

with $v_i$ usually bounded to a range $[0, v_{\max}]$.
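The velocity and position updates described above can be combined into a short sketch. It is an illustration under stated assumptions rather than the cited authors' implementation: the function name, the default parameter values, the per-component velocity clamp to `[-v_max, v_max]`, the clipping of positions to the search bounds and the sphere objective are all choices made here for the example.

```python
import numpy as np

def particle_swarm_optimize(objective, lower, upper, n_particles=30,
                            n_iterations=200, alpha=2.0, beta=2.0,
                            v_max=1.0, seed=0):
    """Minimize `objective` over the box [lower, upper] with a basic PSO."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size

    # Uniformly distributed starting positions, zero starting velocities.
    positions = rng.uniform(lower, upper, size=(n_particles, dim))
    velocities = np.zeros((n_particles, dim))

    personal_best = positions.copy()
    personal_best_value = np.array([objective(p) for p in positions])
    best_index = int(np.argmin(personal_best_value))
    global_best = personal_best[best_index].copy()
    global_best_value = float(personal_best_value[best_index])

    for _ in range(n_iterations):
        eps1 = rng.random((n_particles, dim))
        eps2 = rng.random((n_particles, dim))
        # Pull towards the swarm's best and towards each particle's own best.
        velocities = (velocities
                      + alpha * eps1 * (global_best - positions)
                      + beta * eps2 * (personal_best - positions))
        velocities = np.clip(velocities, -v_max, v_max)
        positions = np.clip(positions + velocities, lower, upper)

        values = np.array([objective(p) for p in positions])
        improved = values < personal_best_value
        personal_best[improved] = positions[improved]
        personal_best_value[improved] = values[improved]

        best_index = int(np.argmin(personal_best_value))
        if personal_best_value[best_index] < global_best_value:
            global_best = personal_best[best_index].copy()
            global_best_value = float(personal_best_value[best_index])

    return global_best, global_best_value

def sphere(x):
    """Simple test objective with its minimum of 0 at the origin."""
    return float(np.sum(x ** 2))

best_position, best_value = particle_swarm_optimize(
    sphere, lower=[-5.0, -5.0], upper=[5.0, 5.0])
print(best_position, best_value)
```

Many practical variants add an inertia weight that damps the previous velocity; it is omitted here to stay close to the update described in the text.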

Figure 2. Particle Swarm Optimization algorithm.


3. Applications of Computational Intelligence in Civil Engineering Research Domains

The potential applications of CI and its recent developments in civil engineering are enormous, since the busy everyday life of a construction site involves constant requests for information dissemination, the handling of open issues and the management of the construction of technical projects. Artificial intelligence can be the intelligent assistant that optimally controls and manages the vast amounts of data generated and alerts managers to all the critical points that need their attention. In this way, the manufacturing sector within the framework of Industry 4.0 can make substantial innovative leaps, acquire significant extroversion and develop previously impossible activities [2, 27]. This, and more generally the use of artificial intelligence in civil engineering, is demonstrated by the relevant research that has been published in the global literature.

3.2. Relevant Research Studies

In recent years, the need to model the increasingly complex technical projects that the modern civil engineer is called upon to handle has highlighted the need to exploit artificial intelligence and integrate it more and more into engineering practice. Research in this direction and in related fields shows a constantly growing trend, reinforced by interdisciplinary work, and continually offers new implementations that advance the field. Indicative works of applied research in the areas of civil engineering science are presented below.

3.2.1. Architectural Compositions, Building Technologies and Materials

The domain of architectural compositions, building technologies and materials covers architectural designs of building units or ensembles, construction art and systems and methods of construction works, the technology of construction materials, structural physics and microclimate control and maintenance and restoration of old buildings, and monuments.

The field in question can benefit significantly from using physics-based artificial intelligence models and implementing analytical differential equations or other mathematical models used to solve structural physics problems or simulations [13]. Concrete is the most widely used construction material, but it is also a recognised pollutant that causes significant sustainability issues in terms of resource depletion, energy use, and greenhouse gas emissions; AI can lessen the environmental impact of concrete and increase its long-term sustainability. For example, the authors of the paper [28] developed a model to forecast the compressive strength of various eco-friendly concrete mixtures, which may be used in the design process. The concrete is made from a combination of recycled concrete and blast furnace slag. The resulting machine learning model accurately predicts the compressive strength of green concrete.

Despite a wealth of literature, self-healing concepts have yet to reliably yield design solutions that can quantify their positive effects on structural performance. Concrete and other cement-based materials have an innate self-healing property. The effectiveness of the concrete's strengthening and self-healing has been shown to depend on several factors, the most important of which are the kind of exposure, the width of the crack, and the presence of healing stimulants such as crystalline impurities. Autogenous self-healing is largely unaffected by other factors such as fibre content and supplementary cementitious materials. A related study [29] proposes, through properly constructed neural network design and analysis diagrams, a simple input-output model for rapid prediction and evaluation of the self-healing effectiveness of cement-based materials. In particular, it uses advanced AI techniques to quantify the recovery of material performance by displaying the quantitative correlations between mix ratios, exposure type and duration, and initial crack width. In terms of assessing structural performance deterioration and significantly extending the life of reinforced concrete structures, this is the first systematic incorporation of self-healing principles into durability-based design methodologies.

3.2.2. Geotechnical Engineering

The domain of geotechnical engineering covers soil dynamics, geotechnical earthquake engineering, soil-foundation-structure interaction, soil improvement and reinforcement, analysis of the behavior of geostructures with simulations, deep foundations, geotechnical engineering of mining projects and environmental geotechnical engineering.

Soil classification based on shared characteristics is a cornerstone of geotechnical engineering. This classification relies on laboratory and field testing, both of which may be expensive and time-consuming. Each construction site requires its own ground investigation, which must be completed before any technical project can be designed. Artificial intelligence may play a crucial role in cutting down on the time and money needed for a proper site investigation programme. For example, the study [30] evaluates the ability of machine learning models to classify soils based on Cone Penetration Tests (CPT). A dataset of 1339 representative CPTs is used to test 24 machine learning models; the input variables include tip resistance, sleeve friction, friction ratio, and depth; the output variables include total vertical stresses, effective vertical stresses, and hydrostatic pore pressure. Soil classes based on grain size distributions and soil types based on soil behaviour are often used as reference points in the literature. The accuracy of each model's predictions and the time it takes to train are compared. Notably, the algorithm with the highest predictive ability for grain size distribution soil classes obtained around 75% accuracy, while the algorithm with the best predictive power for soil behaviour types reached about 97-99% accuracy.

Evaluating soil liquefaction is a challenging problem in geotechnical earthquake engineering. Capacity energy is related to initial soil factors such as relative density, initial effective confining pressure, fines content, and soil textural properties, which have been the focus of several liquefaction evaluation processes and approaches. Traditional methods used to assess the liquefaction risk of sand deposits fall into one of three broad categories: stress-based, strain-based, or energy-based. The energy-based approach has the edge over the other two because, unlike the strain- or stress-based methods, it accounts for the effects of stress and strain concurrently. In the study [31], the amount of energy needed to cause liquefaction in sand and silty sand is estimated by conducting comparative analyses of state-of-the-art artificial intelligence systems on suitable data sets. The results demonstrate the efficacy of the suggested models and of capacity energy in gauging the liquefaction resistance of soils.

3.2.3. Structural Constructions

The domain of structural constructions covers applied methods of analysis and design of linear and surface carriers, reinforced concrete structures for everyday and seismic actions, prestressed concrete structures for everyday and seismic actions, special reinforced and prestressed concrete structures, control and interventions in structures, metal structures, metal bridges, wooden structures, light structures and masonry structures for everyday and seismic actions.

Advanced machine learning algorithms have been successfully applied in many areas of modelling seismic structures and, more generally, in predicting structural damage from single earthquakes, ignoring the effect of seismic sequences. In the study [32], a neural network approach is applied to predict the ultimate structural damage of a reinforced concrete frame under natural and artificial ground motion sequences. Sequential earthquakes consisting of two seismic events are used. Specifically, 16 known measures of ground motion intensity and the structural damage caused by the first earthquake were used as the input features of the problem, while the final structural damage was the target. The actual values of the damage indices after the first seismic events and after the seismic sequences are calculated through nonlinear time history analysis. The machine learning model is trained using the dataset generated from the artificial sequences, while the predictive ability of the neural network is evaluated using the natural seismic sequences. The study in question is a promising application of modelling multiple seismic sequences for the final prediction of the structural damage of a building, offering highly accurate results.

Multiple nonlinear time history analyses with various incidence angles are necessary to determine the angle at which the possible seismic damage is maximum (critical angle). Thus, the incidence angle of the seismic excitation is a crucial consideration in assessing the seismic response of structures. In addition, several accelerograms should be used to analyse the seismic response, as advised by seismic codes. As a result, the analysis requires considerable computation time. The study [33] presents a technique for critical angle estimation that uses multi-layer neural networks to cut down drastically on computation time. The general concept is to identify situations in which the seismic damage category is higher at the critical angle than it would be if the seismic motion were applied along the structural axes of the structure. This is accomplished by formulating and solving the issue as a pattern recognition problem. The inputs to the networks were the ratios of seismic parameter values along the two horizontal components of the seismic records, together with suitably chosen structural parameters. The findings demonstrate that neural networks can accurately identify situations in which a critical angle computation is required.

3.2.4. Computer Programming and Mathematics

The domain of computer programming and mathematics covers mathematics, natural sciences, informatics, system analysis and optimization methods, economic analysis and technical economics, project organization and planning, management and human relations, the mechanization of constructions, the management of the social and natural environment and the security and protection of complex systems.

Within this field, multiple areas of application have been explored that have a serious impact on civil engineering. For example, in the study [34], a thorough risk identification and assessment is carried out for the construction of an underground tunnel that will pass under a river. Numerical simulation is used to discover an initial link between the indicators affecting the construction in question; field measurements then validate the findings, and a collection of representative samples is developed. Fuzzy logic and a feed-forward neural network are then applied to these samples to assess the risk level in light of changes to the relevant indicators. The system is applied to the risk assessment of Line 5 of the Hangzhou Metro in China, and modifications to the concrete strength, grouting pressure, and soil chamber pressure are recommended based on the findings.

In addition to safeguarding against physical hazards, there is an ongoing need to secure critical infrastructure from digital hazards [35, 36]. Accordingly, big data analysis for the detection of online threats [37], and more generally the protection of the sensitive information present in big data, is a constant concern of the research community. In particular, the analysis of big data related to civil engineering [38], as well as the development of intelligent methods for monitoring the implementation of large-scale technical projects [39], is an important area of research in this field.

A characteristic example that incorporates current expertise in the specific field is the 3-year postdoctoral research carried out in the Department concerning the design and development of innovative intelligent information systems, management and analysis of big data with the aim of digital security of critical urban infrastructures [9, 40].

3.2.5. Mechanics Engineering

The mechanics engineering domain covers continuum mechanics, solid body kinematics and dynamics, the strength of materials, experimental mechanics, fracture mechanics, the theory of plasticity and viscoelasticity, and theoretical methods for calculating linear and surface vectors.

In particular, modelling the fracture energy used to describe the fracture performance of concrete structures and beams is a hot topic of study because of its critical relevance to the practical implementation of concrete engineering works. Estimating the fracture energy and predicting the fracture behaviour of various concrete structures is not always possible, owing to the material's inherent properties and the intricacy of the fracture process. In the study [41], the authors use various experimental methodologies, AI, and associated optimisation techniques to find a workable solution to fracture energy prediction problems. Multiple factors that influence the fracture energy and compressive strength of concrete were studied, and critical conclusions were drawn for further study and experimental assessment.

One of the most basic composite materials with excellent properties is fibre-reinforced concrete, the application of which is constantly expanding in multiple technical projects. However, its mix design is mainly based on extensive experimentation. Accordingly, one of the main priorities of the field in question is the study of the mechanical properties of composite materials used in constructions, their conventional failure criteria and their possible deformation states. The researchers in [42] implemented a machine learning model capable of predicting the fracture behavior of all possible subclasses of fibre-reinforced concrete, especially strain-hardening cementitious composites. Specifically, the study evaluates 15 input parameters that include mix design components and fibre properties to predict the fracture behaviour of concrete fibre matrices. The results demonstrate that machine learning models, with their inherent ability to simulate and explain material behaviour, significantly improve the design of adequate fibre-reinforced concrete formulations.

3.2.6. Transportation Engineering

The domain of transportation engineering covers the subject of road construction, pavements, traffic engineering, transport and terminal economics, transport statistics and error theory, airport planning, transport planning, railway, public transport evaluation, spatial planning, urban planning, city history, expropriations, cartography data, photogrammetry data and environmental impacts from the construction and operation of roads.

One of the central planning priorities of transportation projects is modelling short-term demand forecasts, which usually focus on a horizon of less than one hour and are necessary for implementing dynamic transit control strategies. If airlines and other service providers have a good idea of how much demand they may anticipate, they can better prepare for demand spikes and mitigate their adverse effects on service quality and the customer experience by using real-time management tactics. Predicting platform congestion and vehicle overcrowding is one of the most beneficial uses of transport demand forecasting models. These require knowledge of origin-destination demand, which gives a comprehensive picture of when and where customers join and leave the service. While some research has been done in this area, it is limited and primarily concerned with forecasting passenger arrivals at stations. For many real-world uses, this data falls short.

In the work [43], a scalable, real-time framework for demand forecasting in transportation systems is created using advanced AI models. The proposed model is divided into three distinct sections: a multi-resolution spatial feature extraction section for capturing local spatial dependencies, an auxiliary coding section for external information, and a section for tracking the temporal development of demand. Specifically, the demand at any given time is represented as a square matrix that is processed along two different branches. In the first branch, the demand is decomposed into its time and frequency components, revealing patterns that are not apparent in the raw demand data. In the second branch, a three-layer convolutional neural network is used to learn the spatial relationships in the demand. The temporal development of the demand is then captured using a convolutional long short-term memory network. Two months of automated fare collection data from the Hong Kong mass transit railway system are used in a case study to assess the methodology, demonstrating the suggested model's clear superiority over the other benchmark approaches.

Traffic flow changes from moment to moment. The notion of Dynamic Lane Reversal (DLR), which can quickly switch lane directions to reflect these dynamics, has been tested on a broad scale in autonomously driving public transportation in recent years. DLR is intended to eliminate traffic bottlenecks, maximize the efficiency of road space, and prevent unused capacity. The effects of DLR and the feasibility of implementing it are, however, still unknown.

In work [44], the optimal DLR strategy for a road segment with bidirectional stochastic traffic flow was investigated using a lane-based directional cell transmission model, in order to explore DLR's efficacy, practicality, and applicability. Regression analysis on the collected data was used to determine the influence of the directional flow rate and the number of lanes on DLR-induced delay reductions. The findings suggest that, compared to conventional reversible lane strategies, deploying DLR may drastically cut the overall queuing time. DLR also performed better on longer, multi-lane segments and in situations where the flows in the two opposing directions were relatively close to each other. It is also worth highlighting that the suggested method helped identify a previously undetectable pattern boundary.
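For readers unfamiliar with cell transmission models, the following minimal sketch shows one update step of the basic single-direction formulation, in which the flow between adjacent cells is limited by upstream supply, cell capacity, and downstream free space. It is not the lane-based directional model of [44]; the function name `ctm_step`, the parameter names, and the numbers are illustrative assumptions.

```python
def ctm_step(n, inflow, q_max, n_max, delta=1.0):
    """Advance a basic cell transmission model by one time step.

    n      : vehicles currently in each cell, upstream to downstream
    inflow : vehicles wishing to enter the first cell during this step
    q_max  : maximum vehicles that can cross a cell boundary per step
    n_max  : maximum vehicles a cell can hold
    delta  : ratio of backward wave speed to free-flow speed (assumed 1.0 here)
    """
    cells = len(n)
    # y[i] is the flow entering cell i; y[cells] is the flow leaving the last cell.
    y = [0.0] * (cells + 1)
    y[0] = min(inflow, q_max, delta * (n_max - n[0]))
    for i in range(1, cells):
        y[i] = min(n[i - 1], q_max, delta * (n_max - n[i]))
    y[cells] = min(n[-1], q_max)  # free outflow at the downstream end
    return [n[i] + y[i] - y[i + 1] for i in range(cells)]


# Hypothetical segment: a platoon of five vehicles in the first of three cells.
state = [5.0, 0.0, 0.0]
for step in range(4):
    print(step, state)
    state = ctm_step(state, inflow=0.0, q_max=2.0, n_max=10.0)
```

In such a sketch, reversing a lane could be represented by raising `q_max` and `n_max` for one direction and lowering them for the other, which is one way to compare queuing under different lane allocations.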

Assessing the distribution of travel times across lanes and vehicle types, in addition to their expected values, is crucial for high-level traffic control and management of urban roadways with distinct lane-to-lane conditions, yet it has received relatively little attention. In the paper [45], the authors present a novel approach for estimating the lane-based distribution of travel times for different vehicle types by matching vehicles across low-resolution video images from conventional traffic surveillance cameras. The system uses deep neural architectures in conjunction with bipartite graph matching. The approach is demonstrated in a case study of a congested urban street in Hong Kong. According to the findings, the suggested technique effectively estimates the travel time distributions along linked lanes by vehicle type.
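The bipartite matching step can be pictured with a small sketch: given a dissimilarity score between every vehicle seen at an upstream camera and every vehicle seen downstream, choose the one-to-one assignment with the lowest total cost and read travel times off the matched pairs. The cost values, timestamps, and the function name `match_vehicles` are hypothetical, and the exhaustive search is only practical for tiny examples; it does not reproduce the method of [45].

```python
from itertools import permutations


def match_vehicles(cost):
    """Return the minimum-cost one-to-one assignment for a square cost matrix.

    cost[i][j] is the dissimilarity between upstream vehicle i and downstream
    vehicle j. The result lists, for each upstream vehicle, its matched
    downstream index. Exhaustive search, so suitable only for small inputs.
    """
    n = len(cost)
    best = min(
        permutations(range(n)),
        key=lambda p: sum(cost[i][p[i]] for i in range(n)),
    )
    return list(best)


# Hypothetical appearance dissimilarities (lower means more alike).
cost = [
    [0.9, 0.1, 0.8],
    [0.2, 0.7, 0.9],
    [0.8, 0.9, 0.3],
]
upstream_times = [0.0, 5.0, 9.0]       # seconds at the upstream camera
downstream_times = [32.0, 30.0, 41.0]  # seconds at the downstream camera

assignment = match_vehicles(cost)
travel_times = [downstream_times[j] - upstream_times[i] for i, j in enumerate(assignment)]
print(assignment)    # [1, 0, 2]
print(travel_times)  # [30.0, 27.0, 32.0]
```

Collecting such matched travel times per lane and per vehicle type over many vehicles yields the empirical distributions discussed above.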

3.2.7. Hydraulics and Water Resources Engineering

The domain of hydraulics and water resources engineering covers the disciplines of fluid mechanics, experimental and computational hydraulics, environmental hydraulics, marine and port engineering, river hydraulics, hydrology and water resources management, hydraulic and hydrological engineering, water supply and sanitation engineering, sanitary engineering, water and urban wastewater treatment facilities, and ecology and aquatic ecosystems.

Research in this field has been ongoing for several years on hydraulic devices [46,47], water resources management [48,49] and environmental hydraulics [50,51]. Beyond this, significant progress has recently been made in more specialized research fields. Cavitation, air entrainment, and foaming are all processes in which the deformation of air bubbles in a fluid flow field is of interest. Under complicated conditions this problem cannot be solved theoretically, and a solution based on computational fluid dynamics is generally not sufficiently accurate. The study in [52] proposes and describes a novel approach to the problem that combines a hybrid technique for collecting experimental data with a comparison of machine learning algorithms for building prediction models. Three different models, each using its own set of input variables, were used to predict the equivalent diameter and aspect ratio of air bubbles moving near a plunging jet. Five variants of each model were built, based on the Additive Regression of Decision Stump, Bagging, K-Star, Random Forest, and Support Vector Regression algorithms with adjusted hyperparameters, and all five were found to converge steadily.

Two of the models produced accurate estimates of the equivalent diameter, as assessed with four distinct metrics, while the third model was less accurate in all of its configurations. When forecasting the bubble aspect ratio, differences in the input variables of the prediction models have a more substantial effect on the precision of the results. The suggested method shows promise for tackling complex problems in the investigation of multiphase flows.
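Comparisons of this kind rest on scoring each model's predictions against measurements. The sketch below computes four commonly used regression metrics; which four metrics were used in [52] is not stated here, so the selection (MAE, RMSE, R2, relative absolute error), the function name `regression_metrics`, and the sample values are assumptions for illustration.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, RMSE, coefficient of determination, and relative absolute error."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    abs_err = [abs(t - p) for t, p in zip(y_true, y_pred)]
    sq_err = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    abs_dev = sum(abs(t - mean_true) for t in y_true)
    return {
        "mae": sum(abs_err) / n,
        "rmse": math.sqrt(sum(sq_err) / n),
        "r2": 1.0 - sum(sq_err) / ss_tot,
        "rae": sum(abs_err) / abs_dev,
    }


# Hypothetical measured and predicted equivalent diameters (arbitrary units).
measured = [1.0, 2.0, 3.0, 4.0]
predicted = [2.0, 2.0, 3.0, 3.0]
print(regression_metrics(measured, predicted))
```

Lower MAE, RMSE and relative absolute error, and an R2 closer to one, indicate a closer fit, so the same function can be applied to each model variant to rank them.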

A typical application of artificial intelligence in coastal engineering involves methods such as artificial neural networks combined with fuzzy models, which are used to improve prediction efficiency and reduce the time and cost of the experimental work behind empirical formulas for breakwater stability. Specifically, in work [53], the least squares support vector machine (LSSVM) method is used to predict the stability number of breakwaters. It takes seven independent variables as input (breakwater permeability, damage level, wave rate, slope angle, water depth, wave height, wave peak period) and predicts breakwater stability with a reported accuracy of 0.997.
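To show what distinguishes the least squares variant, the sketch below fits an LSSVM regressor by solving a single linear system in the bias and the dual weights, rather than a quadratic program. It is a toy, one-input example under stated assumptions: the RBF kernel, the values of `gamma` and `sigma`, the helper names, and the data are all illustrative and are not taken from [53], which uses seven input variables.

```python
import math


def rbf(u, v, sigma=1.0):
    """Gaussian (RBF) kernel between two feature vectors."""
    d2 = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-d2 / (2.0 * sigma ** 2))


def solve(a, rhs):
    """Solve a dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [r] for row, r in zip(a, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def lssvm_fit(xs, ys, gamma=1e6, sigma=1.0):
    """Fit LSSVM regression: solve [[0, 1^T], [1, K + I/gamma]] [b, alpha] = [0, y]."""
    n = len(xs)
    a = [[0.0] + [1.0] * n]
    for i in range(n):
        row = [1.0]
        for j in range(n):
            k = rbf(xs[i], xs[j], sigma)
            row.append(k + (1.0 / gamma if i == j else 0.0))
        a.append(row)
    sol = solve(a, [0.0] + list(ys))
    return sol[0], sol[1:]  # bias b, dual weights alpha


def lssvm_predict(x, xs, b, alpha, sigma=1.0):
    """Predict f(x) = b + sum_i alpha_i * K(x, x_i)."""
    return b + sum(a_i * rbf(x, x_i, sigma) for a_i, x_i in zip(alpha, xs))


# Hypothetical one-feature training set (not breakwater data).
xs = [[0.0], [1.0], [2.0], [3.0]]
ys = [1.0, 3.0, 5.0, 7.0]
b, alpha = lssvm_fit(xs, ys)
print(lssvm_predict([1.5], xs, b, alpha))
```

The regularization parameter `gamma` trades training fit against smoothness: a large value, as used here, reproduces the training targets almost exactly, while smaller values tolerate larger residuals.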

4. Future Research

Conceptually, Industry 4.0 can be seen as a new organizational level of automated value chain management, covering the entire life cycle of processes, from raw materials to the final product. With its widespread use of modern technologies such as artificial intelligence, data analysis, cyber-physical systems, the internet of things and the industrial internet of things, cloud computing, blockchain and cognitive computing systems, it represents a significant upgrade of the modern production process.

Indicatively, the use of artificial intelligence in the context of Industry 4.0 can extend the applications of civil engineering science as follows [54]:

Prevent cost overruns by analyzing factors such as the size of a project and the type of contracts and improving the skills of project managers and workers.

Improvement of the design and management of the construction of technical projects through Building Information Modelling (BIM).

Reduction of the risk that can occur in a project's quality, safety, cost and construction duration.

Rational and realistic planning of a project with the development of algorithms that will learn from previous related projects.

More productive operation by assigning repetitive tasks to machines, freeing human resources.

Increase construction site safety by using algorithms that aggregate data and images of the construction site and predict potential hazards.

Dealing with shortages of human resources and machinery through the proper management of the resources in question, depending on the progress of the individual contracts.

Implement a predictive maintenance plan based on real-time analysis from various parts of the construction site and with various means such as sensors, mobile devices, drones, information systems, etc.

Monitor engineering works in real-time, giving warnings about when and where repair is required, predicting damage and identifying conditions that may occur, their location and their extent.

Improve productivity by using intelligent methods of scheduling, material requisitioning and implementing idle time reduction plans.

Determination of optimum concrete mix properties such as maximum dry density or ideal moisture content in concrete.

Management of technical projects with the ability to predict changes in costing based on raw material market prices and available stocks.

Modelling, analyzing and predicting destructive factors such as foundation subsidence, slope stability, seismic resistance, tidal events, etc.

Reduce project errors with automatic multivariate data analysis.

Solving complex problems at different project stages, such as design decision-making, foundation engineering, construction waste management, intelligent material handling, etc.

Design and development of innovative, intelligent information systems aimed at the digital security (cybersecurity) of critical urban infrastructures.

5. Conclusions

Considered a branch of computer science, artificial intelligence refers to the construction of intelligent machines capable of performing human tasks by imitating human characteristics, intelligence and logic, but without direct human intervention. It is considered the pinnacle of modern science, which makes it a promising subject for civil engineering, where it is called for by the current needs of planning and managing large-scale technical projects. From this point of view, knowledge of the methodologies and ways of applying artificial intelligence aligns with the multiple requirements of handling large technical projects. In light of this, the modern civil engineer should be able to define the specifications, design constraints, preparation, operational procedures, testing and evaluation of intelligent solutions derived from artificial intelligence.

In the future, robotics, the internet, and artificial intelligence can significantly reduce manufacturing costs and time. This will be achieved through camera-based monitoring of work, more accurate planning of the routing of electromechanical networks in modern buildings, the development of more effective safety systems on construction sites and, above all, the real-time interaction of workers, materials and machines, so that supervisors are warned in time about potential manufacturing defects, productivity issues, and safety issues.

Author Contributions

Conceptualization, K.D. and S.D.; methodology, K.D. and S.D.; software, K.D. and S.D.; validation, K.D., S.D. and L.I.; formal analysis, K.D., S.D. and L.I.; investigation, K.D. and S.D.; resources, K.D. and S.D.; data curation, K.D. and S.D.; writing - original draft preparation, K.D. and S.D.; writing - review and editing, K.D., S.D. and L.I.; visualization, S.D.; supervision, L.I.; project administration, K.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

[1] A. Cuzzocrea, “Big Data Lakes: Models, Frameworks, and Techniques,” in 2021 IEEE International Conference on Big Data and Smart Computing (BigComp), Jan. 2021, pp. 1–4.

[2] N. Velásquez Villagrán, P. Pesado, and E. Estevez, “Cloud Robotics for Industry 4.0 - A Literature Review,” in Cloud Computing, Big Data & Emerging Topics, Cham, 2020, pp. 3–15.

[3] M. S. Mahmud, J. Z. Huang, S. Salloum, T. Z. Emara, and K. Sadatdiynov, “A survey of data partitioning and sampling methods to support big data analysis,” Big Data Min. Anal., vol. 3, no. 2, pp. 85–101, Jun. 2020.

[4] L. Alzubaidi et al., “Review of deep learning: concepts, CNN architectures, challenges, applications, future directions,” J. Big Data, vol. 8, no. 1, p. 53, Mar. 2021.

[5] S. Raschka, “An Overview of General Performance Metrics of Binary Classifier Systems,” ArXiv14105330 Cs, Oct. 2014, Accessed: Nov. 09, 2021. [Online]. Available: http://arxiv.org/abs/1410.5330.

[6] Z. Yang, T. Zhang, and J. Yang, “Research on classification algorithms for attention mechanism,” in 2020 19th International Symposium on Distributed Computing and Applications for Business Engineering and Science (DCABES), Jul. 2020, pp. 194–197.

[7] I. Kononenko and M. Kukar, “Chapter 12 - Cluster Analysis,” in Machine Learning and Data Mining, I. Kononenko and M. Kukar, Eds. Woodhead Publishing, 2007, pp. 321–358.

[8] “Chapter 14. Clustering Algorithms III: Schemes Based on Function Optimization - Pattern Recognition, 4th Edition [Book].” https://www.oreilly.com/library/view/pattern-recognition-4th/9781597492720/kindle_split_151.html (accessed Oct. 24, 2021).

[9] K. Demertzis, L. Iliadis, and I. Bougoudis, “Gryphon: a semi-supervised anomaly detection system based on one-class evolving spiking neural network,” Neural Comput. Appl., vol. 32, no. 9, pp. 4303–4314, May 2020.

[10] J. L. Lobo, J. Del Ser, A. Bifet, and N. Kasabov, “Spiking Neural Networks and Online Learning: An Overview and Perspectives,” ArXiv190808019 Cs, Jul. 2019, Accessed: Oct. 23, 2021. [Online]. Available: http://arxiv.org/abs/1908.08019.

[11] B. Deng, X. Zhang, W. Gong, and D. Shang, “An Overview of Extreme Learning Machine,” in 2019 4th International Conference on Control, Robotics and Cybernetics (CRC), Sep. 2019, pp. 189–195.

[12] A. Khan, A. Sohail, U. Zahoora, and A. S. Qureshi, “A Survey of the Recent Architectures of Deep Convolutional Neural Networks,” Artif. Intell. Rev., vol. 53, no. 8, pp. 5455–5516, Dec. 2020.

[13] S. R. Vadyala, S. N. Betgeri, D. J. C. Matthews, and D. E. Matthews, “A Review of Physics-based Machine Learning in Civil Engineering,” ArXiv211004600 Cs, Nov. 2021, Accessed: Feb. 10, 2022. [Online]. Available: http://arxiv.org/abs/2110.04600.

[14] T. F. de Lima et al., “Machine Learning With Neuromorphic Photonics,” J. Light. Technol., vol. 37, no. 5, pp. 1515–1534, Mar. 2019.

[15] K. Demertzis, L. Iliadis, S. Avramidis, and Y. A. El-Kassaby, “Machine learning use in predicting interior spruce wood density utilizing progeny test information,” Neural Comput. Appl., vol. 28, no. 3, pp. 505–519, Mar. 2017.

[16] P. Venkata Subba Reddy, “Generalized fuzzy logic for incomplete information,” in 2013 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Jul. 2013, pp. 1–6.

[17] H.-P. Chen and Z.-M. Yeh, “Extended fuzzy Petri net for multi-stage fuzzy logic inference,” in Ninth IEEE International Conference on Fuzzy Systems. FUZZ-IEEE 2000 (Cat. No.00CH37063), May 2000, vol. 1, pp. 441–446.

[18] P. V. Subba Reddy, “Fuzzy predicate logic for Knowledge Representation,” in 2013 International Conference on Fuzzy Theory and Its Applications (iFUZZY), Dec. 2013, pp. 43–48.

[19] K. Zhou, A. M. Zain, and L. Mo, “Dynamic properties of fuzzy Petri net model and related analysis,” J. Cent. South Univ., vol. 22, no. 12, pp. 4717–4723, Sep. 2015.

[20] J.-S. R. Jang, “ANFIS: adaptive-network-based fuzzy inference system,” IEEE Trans. Syst. Man Cybern., vol. 23, no. 3, pp. 665–685, May 1993.

[21] M. Salleh and K. Hussain, “A review of training methods of ANFIS for applications in business and economics,” 2016.

[22] K. Demertzis and L. Iliadis, “Adaptive Elitist Differential Evolution Extreme Learning Machines on Big Data: Intelligent Recognition of Invasive Species,” in Advances in Big Data, Cham, 2017, pp. 333–345.

[23] J. Lü and P. Wang, “Evolutionary Mechanisms of Network Motifs in PPI Networks,” in Modeling and Analysis of Bio-molecular Networks, J. Lü and P. Wang, Eds. Singapore: Springer, 2020, pp. 295–313.

[24] G. Peters, M. Lampart, and R. Weber, “Evolutionary Rough k-Medoid Clustering,” in Transactions on Rough Sets VIII, J. F. Peters and A. Skowron, Eds. Berlin, Heidelberg: Springer, 2008, pp. 289–306.

[25] M. Anantathanavit and M.-A. Munlin, “Radius Particle Swarm Optimization,” in 2013 International Computer Science and Engineering Conference (ICSEC), Sep. 2013, pp. 126–130.

[26] X. Wu, “A density adjustment based particle swarm optimization learning algorithm for neural network design,” in 2011 International Conference on Electrical and Control Engineering, Sep. 2011, pp. 2829–2832.

[27] R. Bogue, “Cloud robotics: a review of technologies, developments and applications,” Ind. Robot Int. J., vol. 44, no. 1, pp. 1–5, Jan. 2017.

[28] F. Farooq et al., “A Comparative Study for the Prediction of the Compressive Strength of Self-Compacting Concrete Modified with Fly Ash,” Materials, vol. 14, no. 17, Art. no. 17, Jan. 2021.

[29] S. Gupta, S. Al-Obaidi, and L. Ferrara, “Meta-Analysis and Machine Learning Models to Optimize the Efficiency of Self-Healing Capacity of Cementitious Material,” Materials, vol. 14, no. 16, Art. no. 16, Jan. 2021.

[30] S. Rauter and F. Tschuchnigg, “CPT Data Interpretation Employing Different Machine Learning Techniques,” Geosciences, vol. 11, no. 7, Art. no. 7, Jul. 2021.

[31] Z. Chen, H. Li, A. T. C. Goh, C. Wu, and W. Zhang, “Soil Liquefaction Assessment Using Soft Computing Approaches Based on Capacity Energy Concept,” Geosciences, vol. 10, no. 9, Art. no. 9, Sep. 2020.

[32] P. C. Lazaridis et al., “Structural Damage Prediction Under Seismic Sequence Using Neural Networks”.

[33] K. Morfidis and K. Kostinakis, “Rapid Prediction of Seismic Incident Angle’s Influence on the Damage Level of RC Buildings Using Artificial Neural Networks,” Appl. Sci., vol. 12, no. 3, Art. no. 3, Jan. 2022.

[34] X. Liang, T. Qi, Z. Jin, S. Qin, and P. Chen, “Risk Assessment System Based on Fuzzy Composite Evaluation and a Backpropagation Neural Network for a Shield Tunnel Crossing under a River,” Adv. Civ. Eng., vol. 2020, p. e8840200, Nov. 2020.

[35] K. Stoddart, “UK cyber security and critical national infrastructure protection,” Int. Aff., vol. 92, no. 5, pp. 1079–1105, Jun. 2016.

[36] “Toward a Safer Tomorrow: Cybersecurity and Critical Infrastructure,” springerprofessional.de. https://www.springerprofessional.de/en/toward-a-safer-tomorrow-cybersecurity-and-critical-infrastructur/11962790 (accessed Feb. 10, 2022).

[37] “Big Data Analytics for Network Intrusion Detection: A Survey.” http://article.sapub.org/10.5923.j.ijnc.20170701.03.html (accessed Feb. 10, 2022).

[38] Y. Liang et al., “Civil Infrastructure Serviceability Evaluation Based on Big Data,” in Guide to Big Data Applications, S. Srinivasan, Ed. Cham: Springer International Publishing, 2018, pp. 295–325.

[39] Z. Sabeur et al., “Large Scale Surveillance, Detection and Alerts Information Management System for Critical Infrastructure,” in Environmental Software Systems. Computer Science for Environmental Protection, Cham, 2017, pp. 237–246.

[40] L. Xing, K. Demertzis, and J. Yang, “Identifying data streams anomalies by evolving spiking restricted Boltzmann machines,” Neural Comput. Appl., vol. 32, no. 11, pp. 6699–6713, Jun. 2020.

[41] Q. Xiao et al., “Using Hybrid Artificial Intelligence Approaches to Predict the Fracture Energy of Concrete Beams,” Adv. Civ. Eng., vol. 2021, p. e6663767, Feb. 2021.

[42] S. A. Khokhar, T. Ahmed, R. A. Khushnood, S. M. Ali, and Shahnawaz, “A Predictive Mimicker of Fracture Behavior in Fiber Reinforced Concrete Using Machine Learning,” Materials, vol. 14, no. 24, Art. no. 24, Jan. 2021.

[43] P. Noursalehi, H. Koutsopoulos, and J. Zhao, “Dynamic Origin-Destination Prediction in Urban Rail Systems: A Multi-resolution Spatio-Temporal Deep Learning Approach,” IEEE Trans. Intell. Transp. Syst., 2020.

[44] Q. Fu, Y. Tian, and J. Sun, “Modeling and simulation of dynamic lane reversal using a cell transmission model,” J. Intell. Transp. Syst., vol. 0, no. 0, pp. 1–13, Jun. 2021.

[45] C. Zhang, H. W. Ho, W. H. K. Lam, W. Ma, S. C. Wong, and A. H. F. Chow, “Lane-based estimation of travel time distributions by vehicle type via vehicle re-identification using low-resolution video images,” J. Intell. Transp. Syst., vol. 0, no. 0, pp. 1–20, Jan. 2022.

[46] R. Daneshfaraz, E. Aminvash, A. Ghaderi, J. Abraham, and M. Bagherzadeh, “SVM Performance for Predicting the Effect of Horizontal Screen Diameters on the Hydraulic Parameters of a Vertical Drop,” Appl. Sci., vol. 11, no. 9, Art. no. 9, Jan. 2021.

[47] H.-Q. Yang, X. Chen, L. Zhang, J. Zhang, X. Wei, and C. Tang, “Conditions of Hydraulic Heterogeneity under Which Bayesian Estimation is More Reliable,” Water, vol. 12, no. 1, Art. no. 1, Jan. 2020.

[48] M. El Baba, P. Kayastha, M. Huysmans, and F. De Smedt, “Evaluation of the Groundwater Quality Using the Water Quality Index and Geostatistical Analysis in the Dier al-Balah Governorate, Gaza Strip, Palestine,” Water, vol. 12, no. 1, Art. no. 1, Jan. 2020.

[49] H. Tu, X. Wang, W. Zhang, H. Peng, Q. Ke, and X. Chen, “Flash Flood Early Warning Coupled with Hydrological Simulation and the Rising Rate of the Flood Stage in a Mountainous Small Watershed in Sichuan Province, China,” Water, vol. 12, no. 1, Art. no. 1, Jan. 2020.

[50] N. Kimura, I. Yoshinaga, K. Sekijima, I. Azechi, and D. Baba, “Convolutional Neural Network Coupled with a Transfer-Learning Approach for Time-Series Flood Predictions,” Water, vol. 12, no. 1, Art. no. 1, Jan. 2020.

[51] M. Neumayer, S. Teschemacher, S. Schloemer, V. Zahner, and W. Rieger, “Hydraulic Modeling of Beaver Dams and Evaluation of Their Impacts on Flood Events,” Water, vol. 12, no. 1, Art. no. 1, Jan. 2020.

[52] F. Di Nunno et al., “Deformation of Air Bubbles Near a Plunging Jet Using a Machine Learning Approach,” Appl. Sci., vol. 10, no. 11, Art. no. 11, Jan. 2020.

[53] N. Gedik, “Least Squares Support Vector Mechanics to Predict the Stability Number of Rubble-Mound Breakwaters,” Water, vol. 10, no. 10, Art. no. 10, Oct. 2018.

[54] J. M. G. Giraldo and L. G. Palacio, “The fourth industrial revolution, an opportunity for Civil Engineering,” in 2020 15th Iberian Conference on Information Systems and Technologies (CISTI), Jun. 2020, pp. 1–7.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).