Submitted: 27 January 2023

Posted: 28 January 2023


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Experimental Design

2.1.1. Driver Test Design

- The Biographic Questionnaire identifies key facts about the subject, such as gender, age, and driving record.

- The Driver Behaviour Questionnaire (DBQ) collects self-reported data from the drivers, as there are no objective records of driving behaviour and previous traffic violations. The original DBQ consists of 50 items and is used to score the following three underlying factors: errors, violations, and lapses.

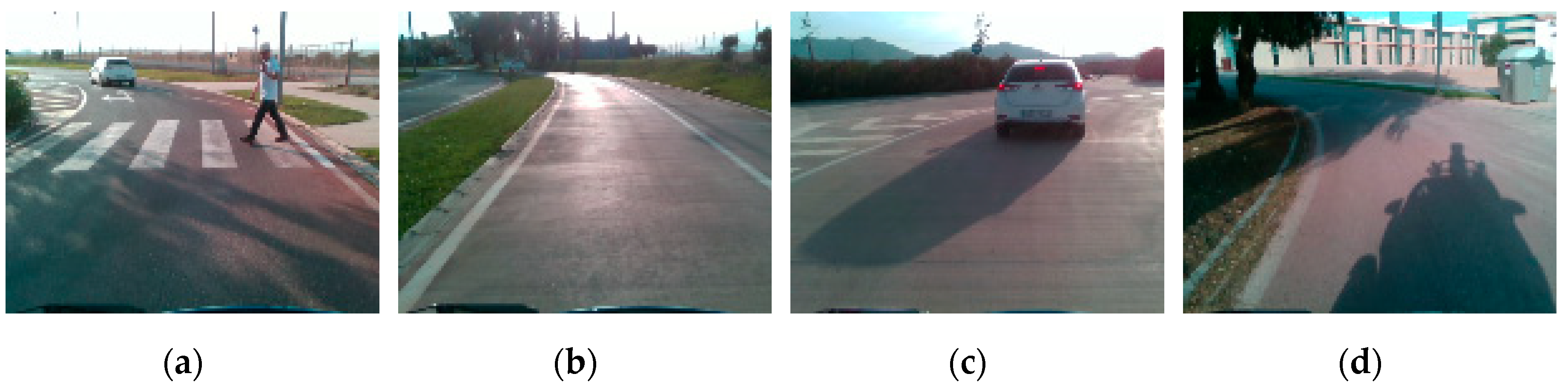

2.1.2. Driving Test Design

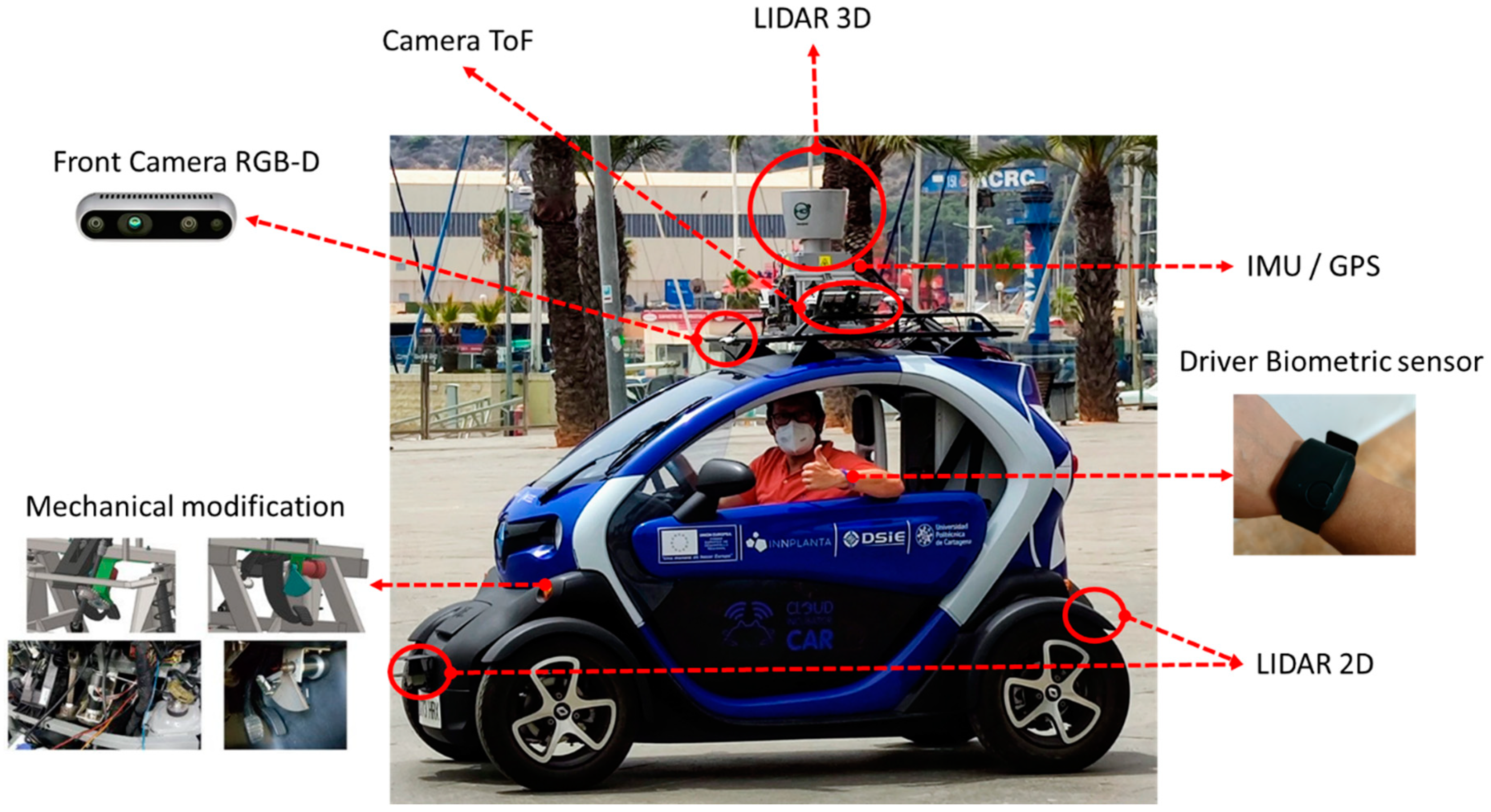

2.1.3. Platform Setup

3. Results

3.1. Driver Directory

- (TIME): The first column is the timestamp, expressed as a Unix timestamp in UTC.

- (TEMP): Data from the temperature sensor, expressed in degrees Celsius (°C).

- (EDA): Electrodermal activity, measured as the electrical conductance (inverse of resistance) across the skin. The values are raw sensor output expressed in microsiemens (μS).

- (BVP): Blood volume pulse, the raw output of the PPG sensor. The PPG/BVP signal is the input to the algorithms that calculate the inter-beat interval (IBI) and heart rate (HR).

- (HR): Average heart rate values calculated at 10-second intervals. They are not derived from a real-time reading but are computed after the data is loaded into a session.

- (ACC_X, ACC_Y, ACC_Z): Data from the 3-axis accelerometer. The accelerometer is configured to measure acceleration in the range [-2g, 2g], and the values are expressed in units of 1/64 g. The x, y, and z axes are in the sixth, seventh, and eighth columns, respectively.
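As a minimal sketch of how one row of a driver file might be decoded, the snippet below maps the eight columns listed above to names, converts the Unix timestamp to a UTC datetime, and rescales the accelerometer counts from 1/64 g to g. The function name `parse_driver_row`, the output keys, and the sample values are illustrative assumptions; the file delimiter and the presence of a header row are not specified here and would need to be checked against the actual files.

```python
from datetime import datetime, timezone

# Column order as listed above (TIME first, ACC in columns six to eight).
COLUMNS = ["TIME", "TEMP", "EDA", "BVP", "HR", "ACC_X", "ACC_Y", "ACC_Z"]
ACC_UNITS_PER_G = 64  # one raw accelerometer unit is 1/64 g


def parse_driver_row(fields):
    """Convert one row of raw text fields into named values (hypothetical helper)."""
    if len(fields) != len(COLUMNS):
        raise ValueError(f"expected {len(COLUMNS)} fields, got {len(fields)}")
    raw = dict(zip(COLUMNS, (float(f) for f in fields)))
    return {
        "time_utc": datetime.fromtimestamp(raw["TIME"], tz=timezone.utc),
        "temp_c": raw["TEMP"],   # degrees Celsius
        "eda_us": raw["EDA"],    # microsiemens
        "bvp": raw["BVP"],       # raw PPG output
        "hr": raw["HR"],         # average heart rate
        # Rescale accelerometer counts to g.
        "acc_g": tuple(raw[axis] / ACC_UNITS_PER_G
                       for axis in ("ACC_X", "ACC_Y", "ACC_Z")),
    }


# Made-up sample row, for illustration only.
row = parse_driver_row(["1674777600", "31.5", "0.25", "-3.2", "72.0", "64", "-32", "0"])
```

With the sample row, a raw reading of 64 on the x axis corresponds to 1 g, which is half of the configured ±2 g range.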

3.2. Perception Directory

- 20 .bin type files, called perceptionXX.bin, where XX corresponds to the identifier number assigned to each driver at the time of the test.

- 20 images from the RGB-D camera (front view).

- head[0]: indicates the size in bytes of the packet received by the sensor.

- head[1]: contains a sensor identifier which shows the source of the data packet received.

3.3. Position Directory

- (TIME): The first column contains the session timestamp, expressed as a Unix timestamp in UTC.

- (LATITUDE): Latitude values obtained by the GPS.

- (LONGITUD): Longitude values obtained by the GPS.

- (ALTITUDE): Altitude values obtained by the GPS.

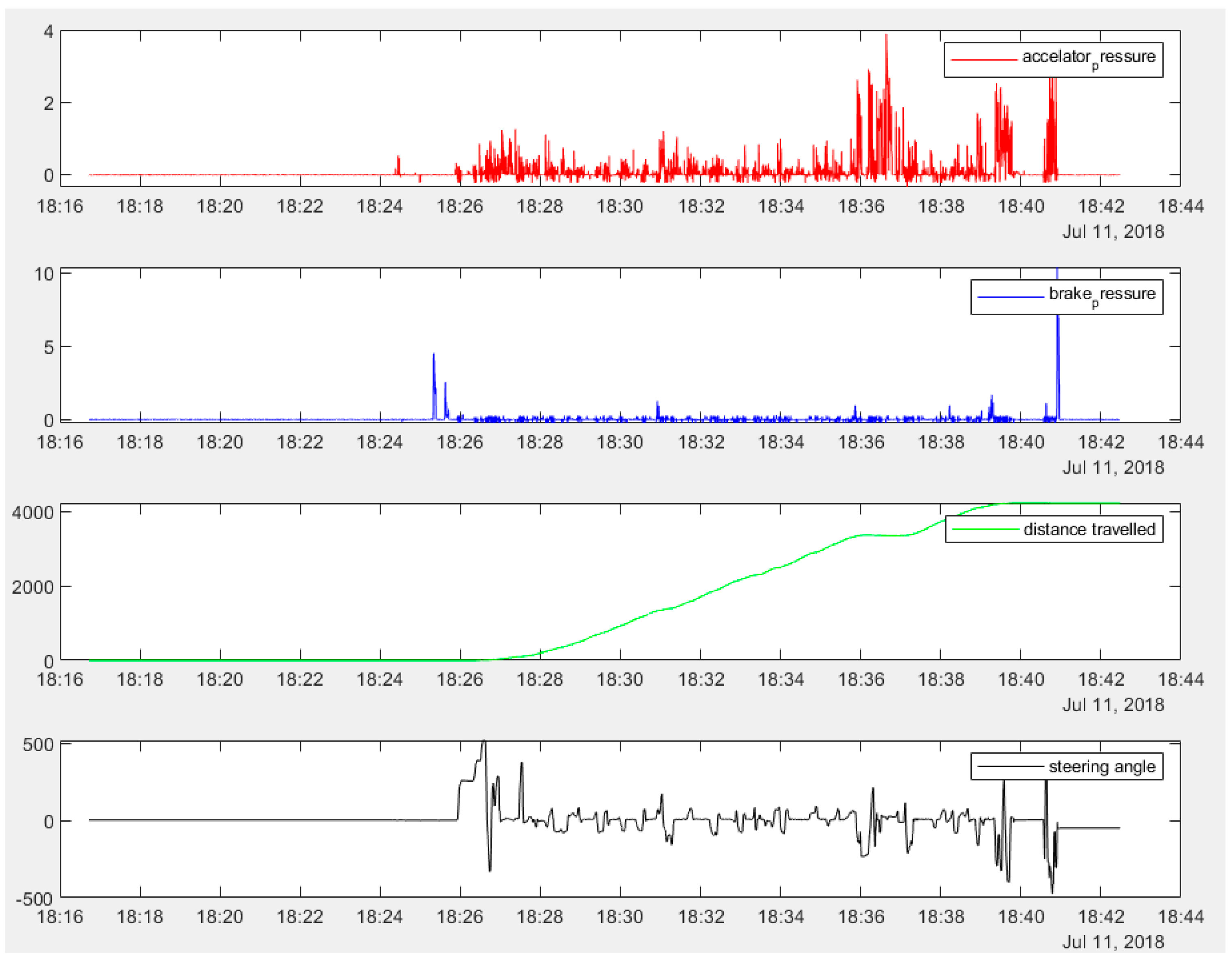

- (STERING_ANGLE): Steering wheel angle.

- (SPEED): Speed, in m/s.

- (DISTANCE_TRAVELLED): Distance travelled.

- (LIN_ACEL_X): Linear acceleration along the x-axis, in g.

- (LIN_ACEL_Y): Linear acceleration along the y-axis, in g.

- (LIN_ACEL_Z): Linear acceleration along the z-axis, in g.

- (ANG_VEL_X): Angular velocity around the x-axis, in degrees/second.

- (ANG_VEL_Y): Angular velocity around the y-axis, in degrees/second.

- (ANG_VEL_Z): Angular velocity around the z-axis, in degrees/second.
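Because the inertial columns above are given in g and in degrees/second, downstream code often needs them in SI units. The following is a small sketch of that conversion; the function name `imu_to_si` and the sample values are assumptions made for illustration, and the conversion uses the standard gravity constant 9.80665 m/s².

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2 per g


def imu_to_si(lin_acc_g, ang_vel_deg_s):
    """Convert (x, y, z) acceleration in g and angular velocity in deg/s to SI units."""
    acc_ms2 = tuple(a * STANDARD_GRAVITY for a in lin_acc_g)
    omega_rad_s = tuple(math.radians(w) for w in ang_vel_deg_s)
    return acc_ms2, omega_rad_s


# Made-up sample: 1 g along z, turning at 90 degrees/second around z.
acc, omega = imu_to_si((0.0, 0.0, 1.0), (0.0, 0.0, 90.0))
```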

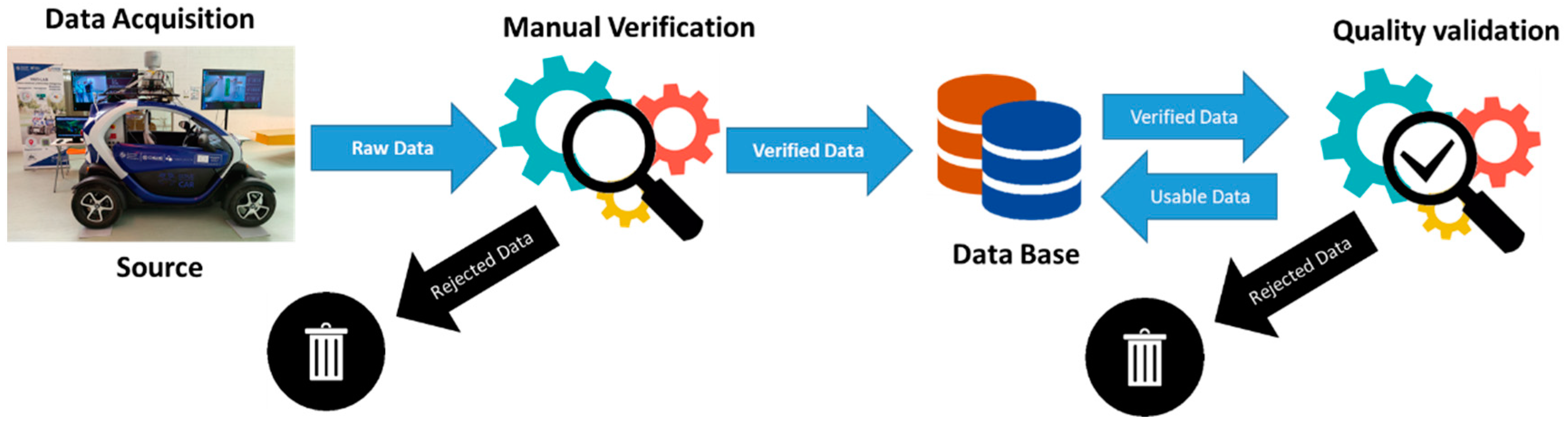

4. Technical Validation

4.1. Driver Test Validation

- A first validation measures the reliability of the data obtained from the DBQ tests completed by the drivers. Reliability was computed over the entire sample using Cronbach's alpha and the two Guttman split-half coefficients. The values were interpreted as follows: < 0.50, unacceptable; 0.50 to < 0.60, poor; 0.60 to < 0.70, questionable/doubtful; 0.70 to < 0.80, acceptable; 0.80 to < 0.90, good; and ≥ 0.90, excellent. The Cronbach's alpha coefficient is 0.796, which falls in the acceptable band and indicates that the DBQ data set in this experiment is reliable [22].

- Missing data. E4 data of 7 participants (driver 1, driver 5, driver 17, driver 21, driver 30, driver 42, driver 45) were excluded due to a device malfunction during data collection. The physiological signals in the dataset are mostly error-free, with most files more than 95% complete, but a portion of the data is missing because of device issues or human error.
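For readers who want to reproduce the reliability check, the sketch below computes Cronbach's alpha with the standard formula α = k/(k−1) · (1 − Σ item variances / variance of total scores) and maps the result onto the interpretation bands given above, reading the "good" band as 0.80 to 0.90. The function names and the toy score matrix are illustrative assumptions and are not taken from the dataset.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    if len(scores) < 2 or len(scores[0]) < 2:
        raise ValueError("need at least 2 respondents and 2 items")
    k = len(scores[0])
    item_vars = [variance(item) for item in zip(*scores)]  # per-item sample variance
    total_var = variance([sum(resp) for resp in scores])   # variance of total scores
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def interpret_alpha(alpha):
    """Map alpha onto the reliability bands used in the text."""
    bands = [(0.90, "excellent"), (0.80, "good"), (0.70, "acceptable"),
             (0.60, "questionable"), (0.50, "poor")]
    for lower, label in bands:
        if alpha >= lower:
            return label
    return "unacceptable"


# Toy data: three respondents, two perfectly consistent items.
toy_alpha = cronbach_alpha([[1, 2], [2, 3], [3, 4]])
label = interpret_alpha(0.796)  # the coefficient reported above
```

Under these bands, the reported value of 0.796 is classified as acceptable.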

4.2. Driving Test Validation

- Checking for abnormalities during the test. The completion time of each test was checked, and tests whose duration was either too short or too long were discarded. Data of 5 participants (driver 4, driver 17, driver 23, driver 29, driver 40) were excluded.

- Checking for errors in reading the sensors or writing to the disk. For each test, it was verified that the sensors sent their data correctly throughout the test. Tests in which a total or partial failure was detected were discarded. To detect these failures, the following aspects were checked:

- All files exist on the disk. At the end of each test, the number of files generated was checked. A missing file implies that a read or write failure occurred, so the test was discarded completely. Data of 4 participants (driver 1, driver 10, driver 31, driver 34) were excluded.

- Empty files. All generated files were verified to contain data, and tests with empty files were discarded. Data of 2 participants (driver 35, driver 36) were excluded.

- Exploratory data analysis. Different descriptive analyses were chosen according to the type of data: (1) Analysis of data deviation. A standard deviation analysis was applied to data with discrete values (average speed, time travelled, etc.), discarding data with a sharp deviation. Data of 2 participants (driver 11, driver 38) were excluded. (2) Time series analysis. Most of the data are time series with some variation in speed, so the Dynamic Time Warping (DTW) technique was used.

- Checking for driving route failures. For each test, the route taken by the driver was verified to make sure the driver kept to the stipulated route. Tests in which even a small deviation from the route occurred were discarded. Two checks were made: (1) Steering wheel rotation pattern: for the same trajectory, the rotation pattern must be similar across all tests. (2) GPS trajectory: the trajectory was plotted, and tests that did not follow the marked route were eliminated.
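The time series analysis mentioned above relies on Dynamic Time Warping, which compares two signals that follow the same pattern at different speeds. The snippet below is a textbook dynamic-programming sketch of the DTW distance with an absolute-difference cost. It is not the authors' implementation; the function name `dtw_distance` and the sample signals are assumptions made for illustration.

```python
def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) DTW distance between two numeric sequences."""
    if not a or not b:
        raise ValueError("both sequences must be non-empty")
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(cost[i - 1][j],      # a advances, b repeats
                                    cost[i][j - 1],      # b advances, a repeats
                                    cost[i - 1][j - 1])  # both advance
    return cost[n][m]


# Made-up signals: the same shape driven at two different speeds.
fast = [0, 1, 2, 1, 0]
slow = [0, 0, 1, 2, 2, 1, 0]
same_shape = dtw_distance(fast, slow)
offset = dtw_distance([0, 0, 0], [1, 1, 1])
```

A distance near zero indicates the same pattern regardless of pace, which is why DTW suits recordings of the same route driven at different speeds; any threshold for discarding a test would have to be chosen from the data.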

4.2.1. Quality Control of Variables

4.2.2. Quality Control of Support Media

4.2.3. Experimental Validation

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- C. Olaverri-Monreal, "Autonomous vehicles and smart mobility related technologies", Infocommunications Journal, vol. 8, no. 2, pp. 17-24, 2016.

- M. Alawadhi, J. Almazrouie, M. Kamil, and K. A. Khalil, "A systematic literature review of the factors influencing the adoption of autonomous driving", International Journal of Systems Assurance Engineering and Management, vol. 11, no. 6, pp. 1065-1082, Dec. 2020.

- F. Rosique, P. J. Navarro, C. Fernández, and A. Padilla, "A Systematic Review of Perception System and Simulators for Autonomous Vehicles Research", Sensors, vol. 19, no. 3, p. 648, Jan. 2019.

- R. Kesten et al., "Lyft Level 5 AV Dataset 2019", 2019.

- H. Caesar et al., "Nuscenes: A multimodal dataset for autonomous driving", in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2020, pp. 11618-11628.

- Scale AI and Hesai, "Pandaset Open Dataset", 2019.

- P. Sun et al., "Scalability in Perception for Autonomous Driving: Waymo Open Dataset", 2019.

- J. Geyer et al., "A2D2: Audi Autonomous Driving Dataset", arXiv:2004.06320 [cs, eess], Apr. 2020. Accessed: 11 May 2021. [Online]. Available: http://arxiv.org/abs/2004.06320.

- E. Alberti, A. Tavera, C. Masone, and B. Caputo, "IDDA: A Large-Scale Multi-Domain Dataset for Autonomous Driving", IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 5526-5533, Oct. 2020.

- Z. Yang, Y. Zhang, J. Yu, J. Cai, and J. Luo, "End-to-end Multi-Modal Multi-Task Vehicle Control for Self-Driving Cars with Visual Perceptions", in Proceedings - International Conference on Pattern Recognition, Nov. 2018, vol. 2018-Augus, pp. 2289-2294.

- B. Hurl, K. Czarnecki, and S. Waslander, "Precise Synthetic Image and LiDAR (PreSIL) Dataset for Autonomous Vehicle Perception", in 2019 IEEE Intelligent Vehicles Symposium (IV), Jun. 2019, pp. 2522-2529.

- D. Wang, J. Wen, Y. Wang, X. Huang, and F. Pei, "End-to-End Self-Driving Using Deep Neural Networks with Multi-auxiliary Tasks", Automotive Innovation, vol. 2, no. 2, pp. 127-136, Jun. 2019.

- A. Geiger, P. Lenz, C. Stiller, and R. Urtasun, "Vision meets robotics: The KITTI dataset", The International Journal of Robotics Research, vol. 32, no. 11, pp. 1231-1237, Sep. 2013.

- X. Huang, P. Wang, X. Cheng, D. Zhou, Q. Geng, and R. Yang, "The ApolloScape Open Dataset for Autonomous Driving and Its Application", IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 10, pp. 2702-2719, Oct. 2020.

- "Dataset Overview – Cityscapes Dataset". https://www.cityscapes-dataset.com/dataset-overview/ (accessed 30 November 2022).

- A. Mimouna et al., "OLIMP: A Heterogeneous Multimodal Dataset for Advanced Environment Perception", Electronics, vol. 9, no. 4, Art. no. 4, Apr. 2020.

- P. Koopman and M. Wagner, "Challenges in Autonomous Vehicle Testing and Validation", SAE Int. J. Trans. Safety, vol. 4, no. 1, pp. 15-24, Apr. 2016.

- P. J. Navarro, L. Miller, F. Rosique, C. Fernández-Isla, and A. Gila-Navarro, "End-to-End Deep Neural Network Architectures for Speed and Steering Wheel Angle Prediction in Autonomous Driving", Electronics, vol. 10, no. 11, Art. no. 11, Jan. 2021.

- J. Cai, W. Deng, H. Guang, Y. Wang, J. Li, and J. Ding, "A Survey on Data-Driven Scenario Generation for Automated Vehicle Testing", Machines, vol. 10, no. 11, Art. no. 11, Nov. 2022.

- E. L. de Cózar, J. Sanmartín, J. G. Molina, J. M. Aragay, and A. Perona, "Behaviour Questionnaire (SDBQ).", p. 15.

- R. Borraz, P. J. Navarro, C. Fernández, and P. M. Alcover, "Cloud Incubator Car: A Reliable Platform for Autonomous Driving", Applied Sciences, vol. 8, no. 2, Art. no. 2, Feb. 2018.

- Z. Deng, D. Chu, C. Wu, Y. He, and J. Cui, "Curve safe speed model considering driving style based on driver behaviour questionnaire", Transportation Research Part F: Traffic Psychology and Behaviour, vol. 65, pp. 536-547, Aug. 2019.

- "Kubios HRV - Heart rate variability", Kubios. https://www.kubios.com/ (accessed 7 December 2022).

- "How is IBI.csv obtained?", Empatica Support. https://support.empatica.com/hc/en-us/articles/201912319-How-is-IBI-csv-obtained- (accessed 7 December 2022).

| Ref. / Year | Samples | Image Type | LiDAR | RADAR | IMU / GPS | Control Actions | Raw data | Driver data | Real data | Biometrics data | Driver Behaviour |
|---|---|---|---|---|---|---|---|---|---|---|---|
| UPCT | 78 K | RGB, Depth | Yes | No | Yes | Steering wheel, Speed | Yes | Yes | Yes | Yes | Yes |
| Lyft L5 [4] / 2019 | 323 K | RGB | Yes | No | Yes | - | Yes | No | Yes | No | No |
| nuScenes [5] / 2019 | 1.4 M | RGB | Yes | Yes | Yes | - | Partial | No | Yes | No | No |
| Pandaset [6] / 2019 | 48 K | RGB | Yes | No | Yes | - | Partial | No | Yes | No | No |
| Waymo [7] / 2019 | 1 M | RGB | Yes | No | Yes | - | Yes | No | Yes | No | No |
| A2D2 [8] / 2020 | 392 K | RGB | Yes | No | Yes | Steering angle, brake, accelerator | Partial | No | Yes | No | No |
| IDDA [9] / 2020 | 1 M | RGB, Depth | No | No | No | - | No | No | No | No | No |
| Udacity [10] / 2016 | 34 K | RGB | Yes | No | Yes | Steering wheel | Yes | No | No | No | No |
| PreSIL [11] / 2019 | 50 K | RGB | Yes | No | No | - | No | No | No | No | No |
| GAC [12] / 2019 | 3.24 M | RGB | No | No | No | Steering wheel, Speed | N/A | No | Yes | No | No |
| KITTI [13] / 2012 | 15 K | RGB | Yes | No | Yes | - | Yes | No | Yes | No | No |
| ApolloScape [14] / 2020 | 100 K | RGB | Yes | No | No | - | No | No | Yes | No | No |
| Cityscapes [15] / 2020 | 25 K | RGB | No | No | Yes | - | No | No | Yes | No | No |
| OLIMP [16] / 2020 | 47 K | RGB | No | Yes | No | - | Yes | No | Yes | No | No |

| Category | Group | n Initial | % Initial | n Final | % Final |
|---|---|---|---|---|---|
| Gender | Male | 26 | 52 | 11 | 55 |
| | Female | 24 | 48 | 9 | 45 |
| Age | 18-24 | 6 | 12 | 3 | 15 |
| | 25-44 | 22 | 44 | 10 | 50 |
| | 45-64 | 17 | 34 | 5 | 25 |
| | >=65 | 5 | 10 | 2 | 10 |

| Device | Variable | Details |
|---|---|---|
| LiDAR 3D | Scene | Long-range sensor: 3D high-definition LiDAR (HDL64SE, supplied by Velodyne). Its 64 laser beams spin at 800 rpm and can detect objects up to 120 m away with an accuracy of 2 cm. 1.3 million points per second. Vertical FOV: 26.9° |
| 2 x LiDAR 2D | Scene | Short-range sensors: Sick 2D laser TIM551. Operating range: 0.05 m to 10 m. Horizontal FOV: 270°. Frequency: 15 Hz. Angular resolution: 1°. Range at 10% reflectance: 8 m |
| 2 x ToF | Scene | Short-range sensors: ToF Sentis3D-M420Kit camera. Range: 7 m indoor, 4 m outdoor. Horizontal FOV: 90° |
| RGB-D | Scene | Short-range sensor: Intel RealSense D435 depth camera. Range: 3 m. Up to 90 fps. Depth FOV: 87° × 58°. RGB FOV: 69° × 42° |
| IMU | Localization, longitudinal and transversal acceleration | NAV440CA-202 Inertial Measurement Unit (IMU). 3-axis accelerometer. Bandwidth: 25 Hz. Pitch and roll accuracy: < 0.4°. Position accuracy: < 0.3 m |
| GPS | Localization | EMLID RTK GNSS receiver. 7 mm positioning precision |
| Encoder | Distance | |
| Biometric sensors | Driver biometric signals | Empatica E4: EDA sensor (GSR sensor), PPG sensor, infrared thermopile, 3-axis accelerometer |

| Variable | Sampling Frequency | Signal range [min, max] | Details |
|---|---|---|---|
| ACC | 32 Hz | [-2g, 2g] | Accelerometer data for the 3 axes (x, y, z). |
| EDA | 4 Hz | [0.01 µS, 100 µS] | Electrodermal activity, measured as the electrical conductance (inverse of resistance) across the skin. |
| BVP | 64 Hz | n/a | Blood volume pulse. |
| IBI | 64 Hz | n/a | Inter-beat interval (obtained from the BVP signal). |
| HR | 1 Hz | n/a | Average heart rate (obtained from the BVP signal). Values calculated at 10-second intervals. |
| TEMP | 4 Hz | [−40 °C, 115 °C] | Skin temperature. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).