Submitted: 24 January 2023

Posted: 30 January 2023


Abstract

Keywords:

1. Introduction

- What factors influence the performance of the OSM building completeness classification?

- How reliable are the results of the completeness feature, and are they reliable enough for risk-assessment applications such as exposure modeling?

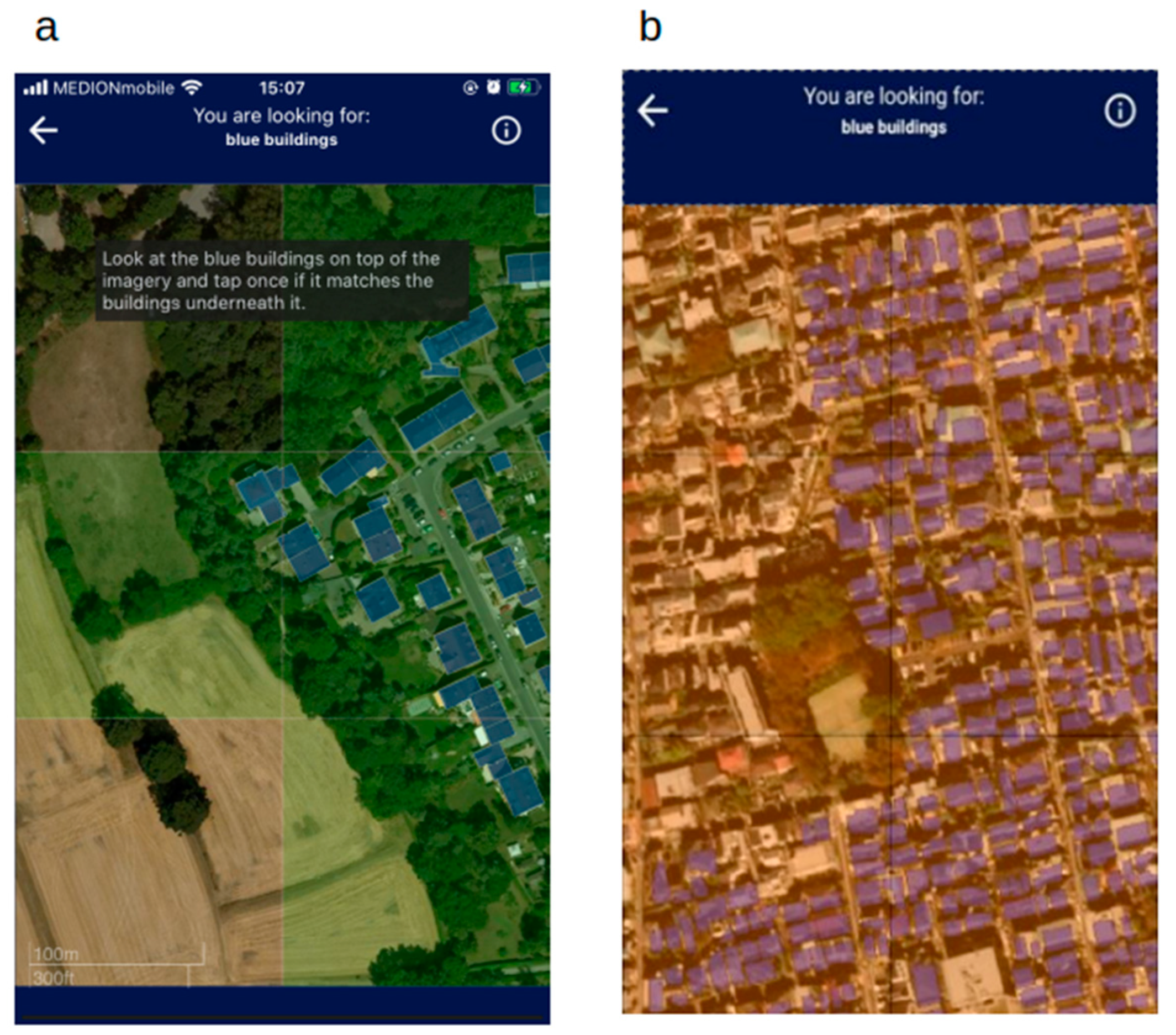

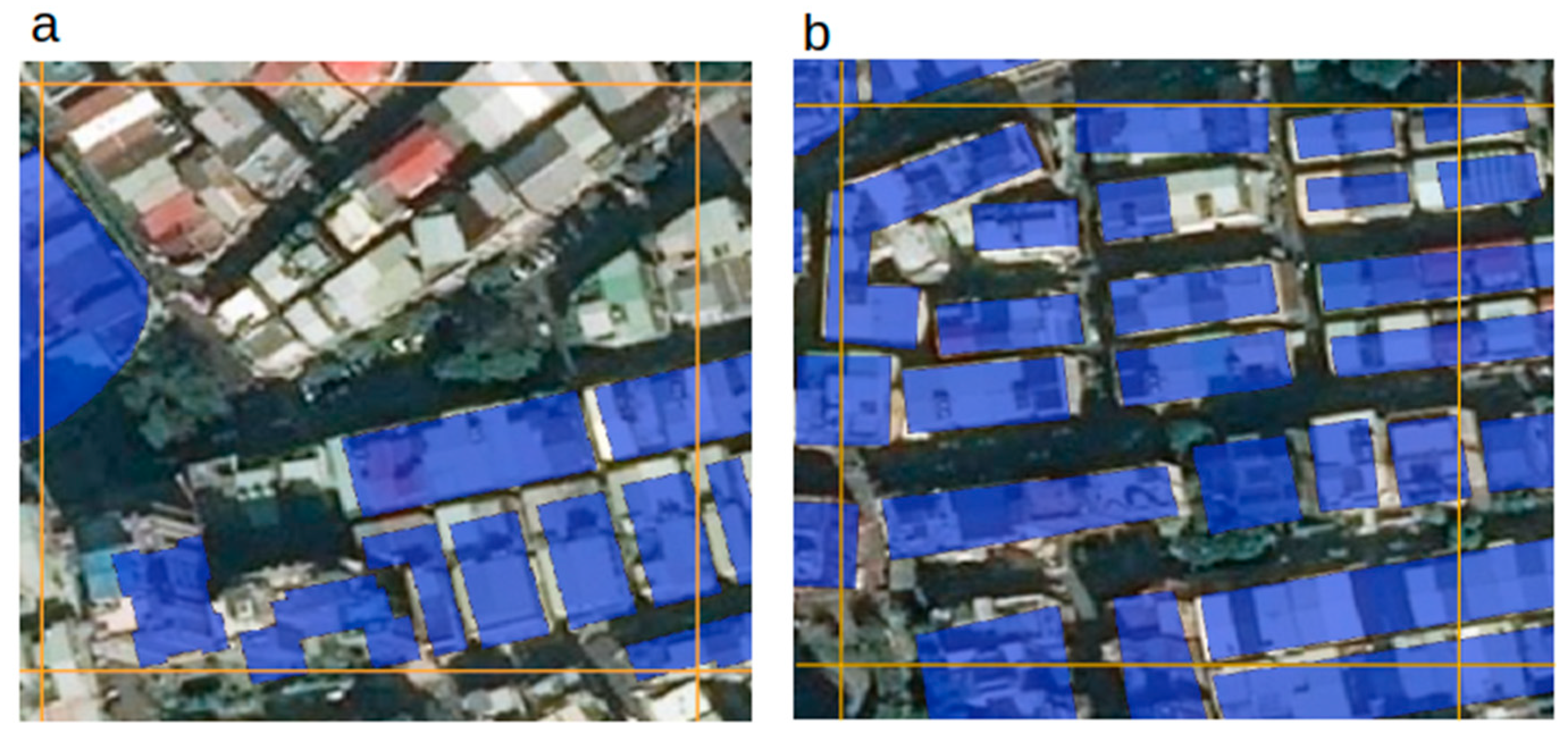

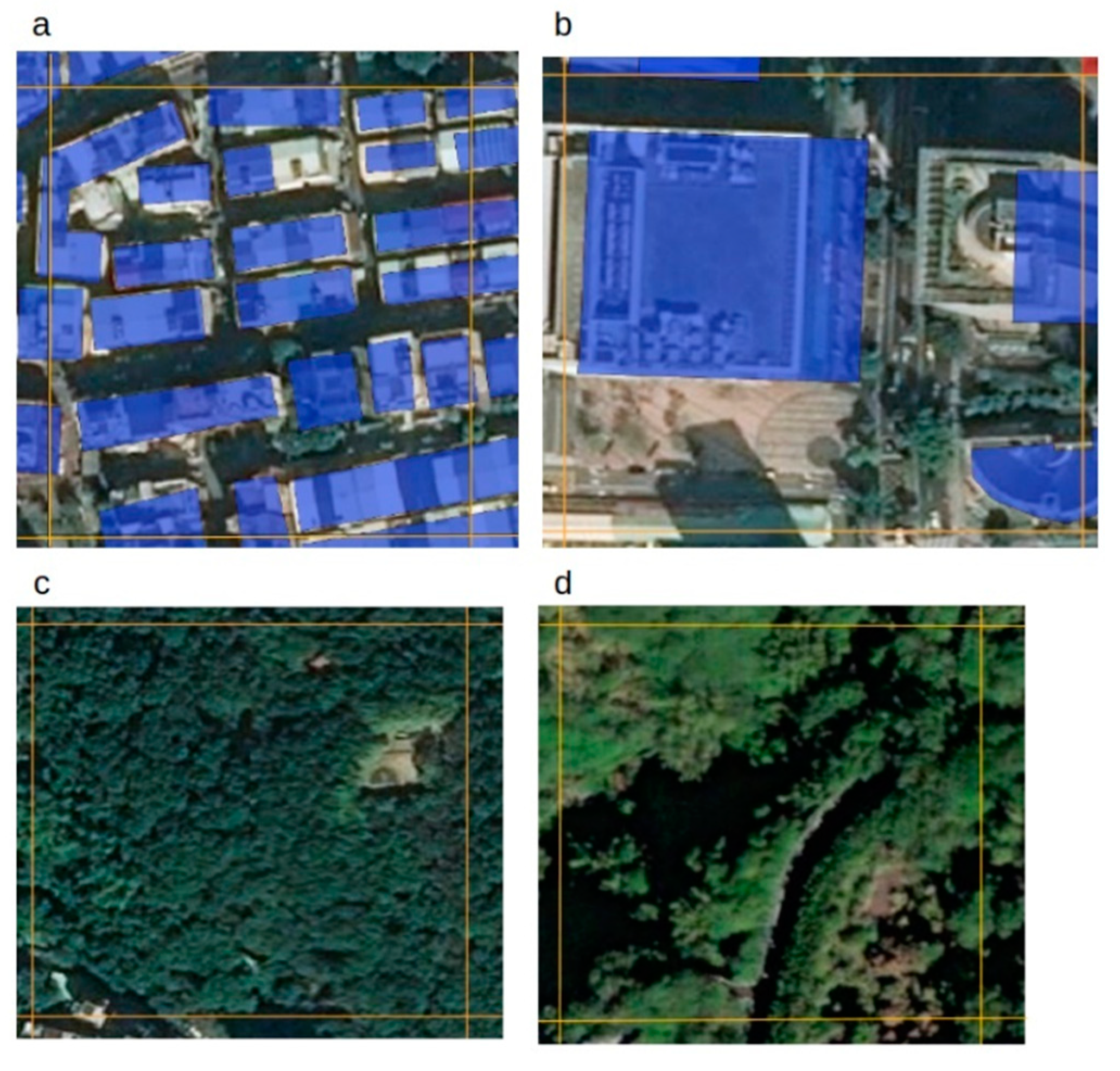

2. MapSwipe Data Model

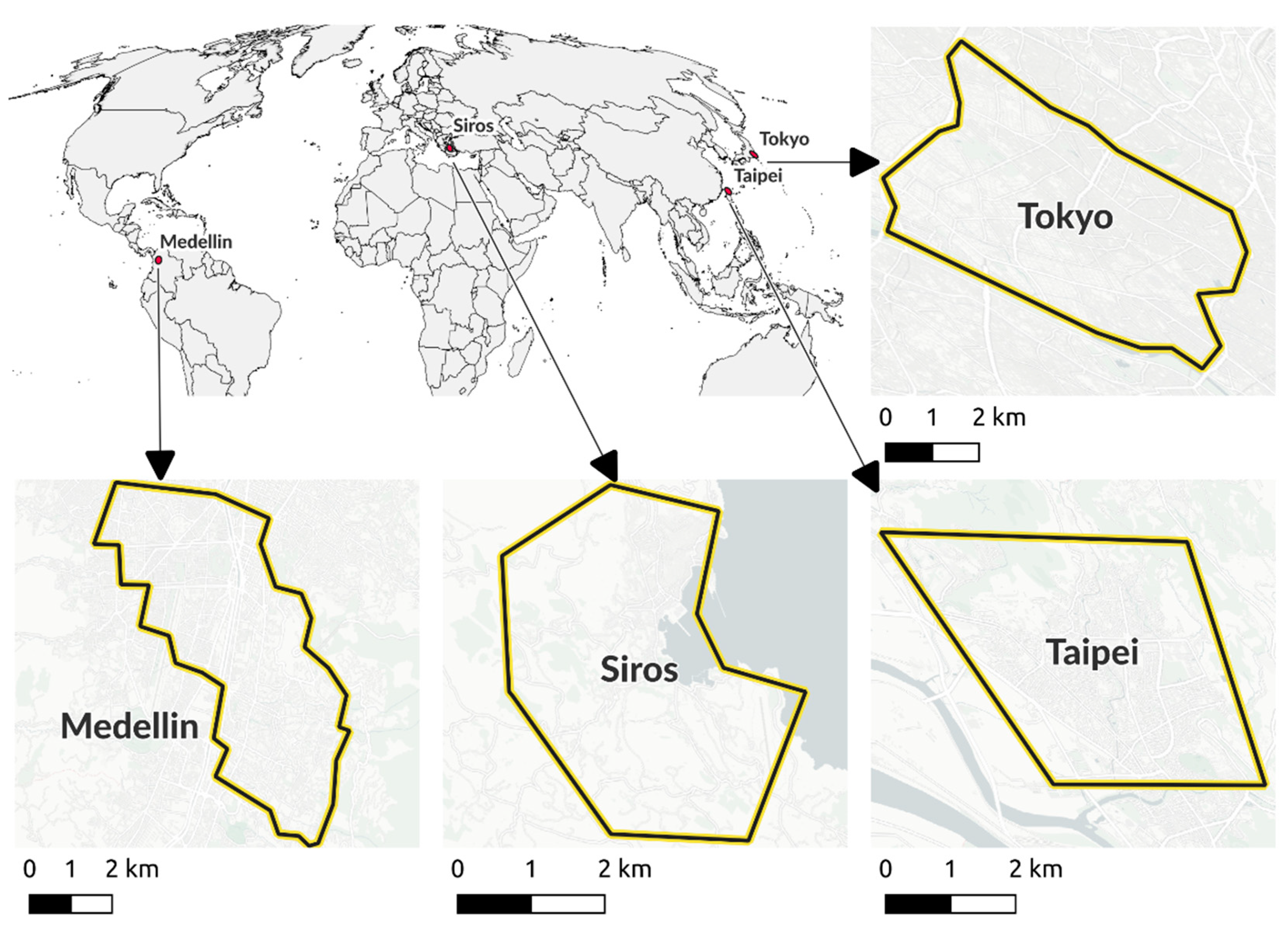

3. Case Study

4. Methods and Data

4.1. Data

4.2. Data Pre-Processing

4.3. Analysis: Performance Evaluation

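$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{2}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{3}$$

$$\text{F1 Score} = \frac{2 \cdot \text{Precision} \cdot \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}} \tag{4}$$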

4.4. Analysis of Geographic Factors Influencing Crowd-Sourced Classification Performance

5. Results

5.1. Overall Classification Performance

5.2. Classification Performance for Each Site

5.3. Factors That Influenced the Crowd-Sourced Classification Performance

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Name | Area [km²] | Tasks | OSM Building Coverage | Number of OSM Buildings per Task [1/ha] | OSM Building Footprint Area per Task [%] |
|---|---|---|---|---|---|
| Tokyo | 27.5 | 1914 | Urban area including fully mapped, partly mapped and unmapped areas | 23.6 (24.4) | 21.0 (17.8) |
| Taipei | 13.7 | 792 | Urban area including fully mapped, partly mapped and unmapped areas | 3.6 (5.2) | 11.5 (15.2) |
| Siros | 25.0 | 981 | Island accompanied by smaller patches of agricultural land including fully mapped and partly mapped areas | 7.1 (15.3) | 5.7 (11.4) |
| Medellin | 23.1 | 1110 | Northern part including high building density with almost completely mapped areas, less densely populated southern part consisting of single-family homes with partly mapped areas | 4.8 (8.0) | 13.4 (16.3) |
| Total | 89.3 | 4797 | | | |

| Majority Rule | Criteria | Aggregated Result |
|---|---|---|
| Clear majority | Si(x = “no building”) ≥ 0.5 | “no building” |
| | Si(x = “complete”) ≥ 0.5 | “complete” |
| | Si(x = “incomplete”) ≥ 0.5 | “incomplete” |
| Unclear majority | Si(x = “no building”) == Si(x = “incomplete”) | “incomplete” |
| | Si(x = “incomplete”) == Si(x = “complete”) | “incomplete” |
| | Si(x = “no building”) == Si(x = “complete”) | “incomplete” |
| | Si(x = “incomplete”) == Si(x = “complete”) == Si(x = “no building”) | “incomplete” |
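The aggregation rules in the table above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `aggregate_task` and the list-of-labels input are hypothetical. The table does not say what happens when a single class leads with a share below 0.5; the sketch assumes such tasks also fall back to “incomplete”.

```python
from collections import Counter

CLASSES = ("no building", "complete", "incomplete")


def aggregate_task(votes):
    """Aggregate the individual classifications of one task into one label.

    `votes` is a list of class labels, one per contributing user
    (hypothetical input format).
    """
    if not votes:
        raise ValueError("a task needs at least one classification")
    counts = Counter(votes)
    unknown = set(counts) - set(CLASSES)
    if unknown:
        raise ValueError(f"unknown class labels: {sorted(unknown)}")

    total = len(votes)
    top = max(counts.values())
    # Classes that share the highest vote count (more than one means a tie).
    leaders = [c for c in CLASSES if counts[c] == top]

    # Clear majority: exactly one leading class with a share of at least 0.5.
    # Integer arithmetic (2 * top >= total) avoids float comparisons.
    if len(leaders) == 1 and 2 * top >= total:
        return leaders[0]

    # Unclear majority (any tie) -> "incomplete", as in the table.
    # ASSUMPTION: a single leader with a share below 0.5 is not covered by
    # the table; this sketch also maps it to "incomplete".
    return "incomplete"


if __name__ == "__main__":
    print(aggregate_task(["complete", "complete", "incomplete"]))
    print(aggregate_task(["no building", "complete"]))
```

Resolving every tie to “incomplete” flags ambiguous tasks as needing further mapping instead of treating them as finished.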

| | Class | TP | TN | FN | FP | Accuracy | Sensitivity | Precision | F1 Score |
|---|---|---|---|---|---|---|---|---|---|
| Overall performance | no building | 562 | 4144 | 34 | 57 | 0.98 | 0.94 | 0.91 | 0.93 |
| | complete | 1516 | 2837 | 72 | 372 | 0.91 | 0.95 | 0.80 | 0.87 |
| | incomplete | 2201 | 2095 | 412 | 89 | 0.90 | 0.84 | 0.96 | 0.90 |

| Reference dataset | Crowd classification: “no building” | Crowd classification: “complete” | Crowd classification: “incomplete” | Total |
|---|---|---|---|---|
| “no building” | 562 | 4 | 30 | 596 |
| “complete” | 13 | 1516 | 59 | 1588 |
| “incomplete” | 44 | 368 | 2201 | 2613 |
| Total | 619 | 1888 | 2290 | |
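As a worked check, the per-class counts and performance measures can be derived from the confusion matrix above by treating each class one-vs-rest. The sketch below uses the standard definitions of accuracy, sensitivity, precision and F1 score. The function and variable names are illustrative only and do not come from the original analysis.

```python
CLASSES = ["no building", "complete", "incomplete"]

# Rows: reference dataset, columns: crowd classification (values from the table).
CONFUSION = [
    [562, 4, 30],
    [13, 1516, 59],
    [44, 368, 2201],
]


def one_vs_rest_metrics(matrix, k):
    """Return counts and performance measures for class index k (one-vs-rest)."""
    total = sum(sum(row) for row in matrix)
    tp = matrix[k][k]
    fn = sum(matrix[k]) - tp                 # reference is k, crowd chose another class
    fp = sum(row[k] for row in matrix) - tp  # crowd chose k, reference is another class
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn)
    precision = tp / (tp + fp)
    return {
        "TP": tp, "TN": tn, "FN": fn, "FP": fp,
        "accuracy": (tp + tn) / total,
        "sensitivity": sensitivity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


if __name__ == "__main__":
    for k, name in enumerate(CLASSES):
        m = one_vs_rest_metrics(CONFUSION, k)
        print(name, {key: round(val, 2) for key, val in m.items()})
```

Rounded to two decimals, these values agree with the overall performance figures reported for the three classes.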

| Site | Class | TP | TN | FN | FP | Accuracy | Sensitivity | Precision | F1 Score |
|---|---|---|---|---|---|---|---|---|---|
| Siros | no building | 318 | 634 | 13 | 16 | 0.97 | 0.96 | 0.95 | 0.96 |
| | complete | 447 | 448 | 24 | 62 | 0.91 | 0.95 | 0.88 | 0.91 |
| | incomplete | 108 | 772 | 71 | 30 | 0.90 | 0.60 | 0.78 | 0.68 |
| Medellin | no building | 52 | 1049 | 3 | 6 | 0.99 | 0.95 | 0.90 | 0.92 |
| | complete | 225 | 813 | 15 | 57 | 0.94 | 0.94 | 0.80 | 0.86 |
| | incomplete | 755 | 280 | 60 | 15 | 0.93 | 0.93 | 0.98 | 0.95 |
| Taipei | no building | 117 | 644 | 15 | 16 | 0.96 | 0.89 | 0.88 | 0.88 |
| | complete | 219 | 517 | 11 | 45 | 0.93 | 0.95 | 0.83 | 0.89 |
| | incomplete | 373 | 340 | 57 | 22 | 0.90 | 0.87 | 0.94 | 0.90 |
| Tokyo | no building | 75 | 1815 | 3 | 19 | 0.98 | 0.96 | 0.80 | 0.87 |
| | complete | 625 | 1057 | 22 | 208 | 0.88 | 0.97 | 0.75 | 0.84 |
| | incomplete | 963 | 703 | 224 | 22 | 0.87 | 0.81 | 0.98 | 0.89 |

| | Coefficient | Std. Error | 95% CI | z-value | p-value |
|---|---|---|---|---|---|
| GLMM using building area share as predictor | | | | | |
| Intercept | 2.73 | 0.75 | [0.83, 4.65] | 3.62 | 0.00029 |
| OSM building area [%] | -9.11 | 0.54 | [-10.19, -8.07] | -16.83 | < 2 × 10⁻¹⁶ |
| AIC: 1341.0, BIC: 1357.6; random intercept: σ² = 2.20 (95% CI = [0.82–3.67]); R²GLMM(m) = 0.24, R²GLMM(c) = 0.55 | | | | | |
| GLMM using buildings per area as predictor | | | | | |
| Intercept | 2.05 | 0.55 | [0.65, 3.45] | 3.71 | 0.00021 |
| OSM building count [1/m²] | -744.9 | 42.4 | [-845.68, -649.57] | -17.57 | < 2 × 10⁻¹⁶ |
| AIC: 1398.1, BIC: 1414.6; random intercept: σ² = 1.19 (95% CI = [0.60–2.70]); R²GLMM(m) = 0.26, R²GLMM(c) = 0.46 | | | | | |
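The z- and p-values in the table above can be related to the coefficients and standard errors with a short sketch. It assumes the reported z-values are Wald statistics (estimate divided by standard error) with two-sided p-values from the standard normal distribution. That assumption is not stated in the table itself, and the helper names are illustrative.

```python
import math


def wald_z(estimate, std_error):
    """Wald z statistic: the estimate divided by its standard error."""
    return estimate / std_error


def two_sided_p(z):
    """Two-sided p-value P(|Z| >= |z|) for a standard normal variable Z."""
    return math.erfc(abs(z) / math.sqrt(2))


# (label, coefficient, standard error, reported z-value), copied from the table.
ROWS = [
    ("area model: intercept", 2.73, 0.75, 3.62),
    ("area model: OSM building area", -9.11, 0.54, -16.83),
    ("count model: intercept", 2.05, 0.55, 3.71),
    ("count model: OSM building count", -744.9, 42.4, -17.57),
]

if __name__ == "__main__":
    for label, coef, se, z_reported in ROWS:
        z = wald_z(coef, se)
        p = two_sided_p(z_reported)
        print(f"{label}: z from rounded inputs = {z:.2f}, "
              f"reported z = {z_reported}, p from reported z = {p:.2g}")
```

Because the table reports rounded coefficients and standard errors, the recomputed z-values can differ from the reported ones in the second decimal place.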

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).