Submitted: 02 February 2023

Posted: 06 February 2023

Abstract

Keywords:

1. Introduction

- Cannot handle mixed pixels, which occur when features from multiple classes are present in a single pixel.

- Do not take advantage of the content of adjacent pixels or of their contextual information.
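
To make the second limitation concrete, the following minimal sketch contrasts the input a purely pixel-based classifier sees (one spectral vector) with a feature vector that also includes the surrounding 3×3 neighbourhood. The toy image, the two-band layout and the helper names `pixel_features` and `window_features` are illustrative assumptions, not part of the study.

```python
from typing import List

# Toy image indexed as img[row][col][band]; the values are hypothetical.
Image = List[List[List[float]]]


def pixel_features(img: Image, r: int, c: int) -> List[float]:
    """Features of a pixel-based classifier: only the pixel's own bands."""
    return list(img[r][c])


def window_features(img: Image, r: int, c: int, radius: int = 1) -> List[float]:
    """Concatenate the bands of every pixel in a (2*radius+1)^2 window.

    Coordinates outside the image are clamped to the nearest edge pixel.
    """
    rows, cols = len(img), len(img[0])
    feats: List[float] = []
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            rr = min(max(r + dr, 0), rows - 1)
            cc = min(max(c + dc, 0), cols - 1)
            feats.extend(img[rr][cc])
    return feats


# 3x3 image with 2 bands per pixel.
img = [[[float(r * 3 + c), float(100 + r * 3 + c)] for c in range(3)]
       for r in range(3)]

center_only = pixel_features(img, 1, 1)     # 2 values
with_context = window_features(img, 1, 1)   # 9 pixels x 2 bands = 18 values
```

The longer vector is one simple way to expose spatial context to a classical model; convolutional models obtain a similar effect through their receptive field.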

2. Related Work

3. Methodology

3.1. BigEarthNet Dataset

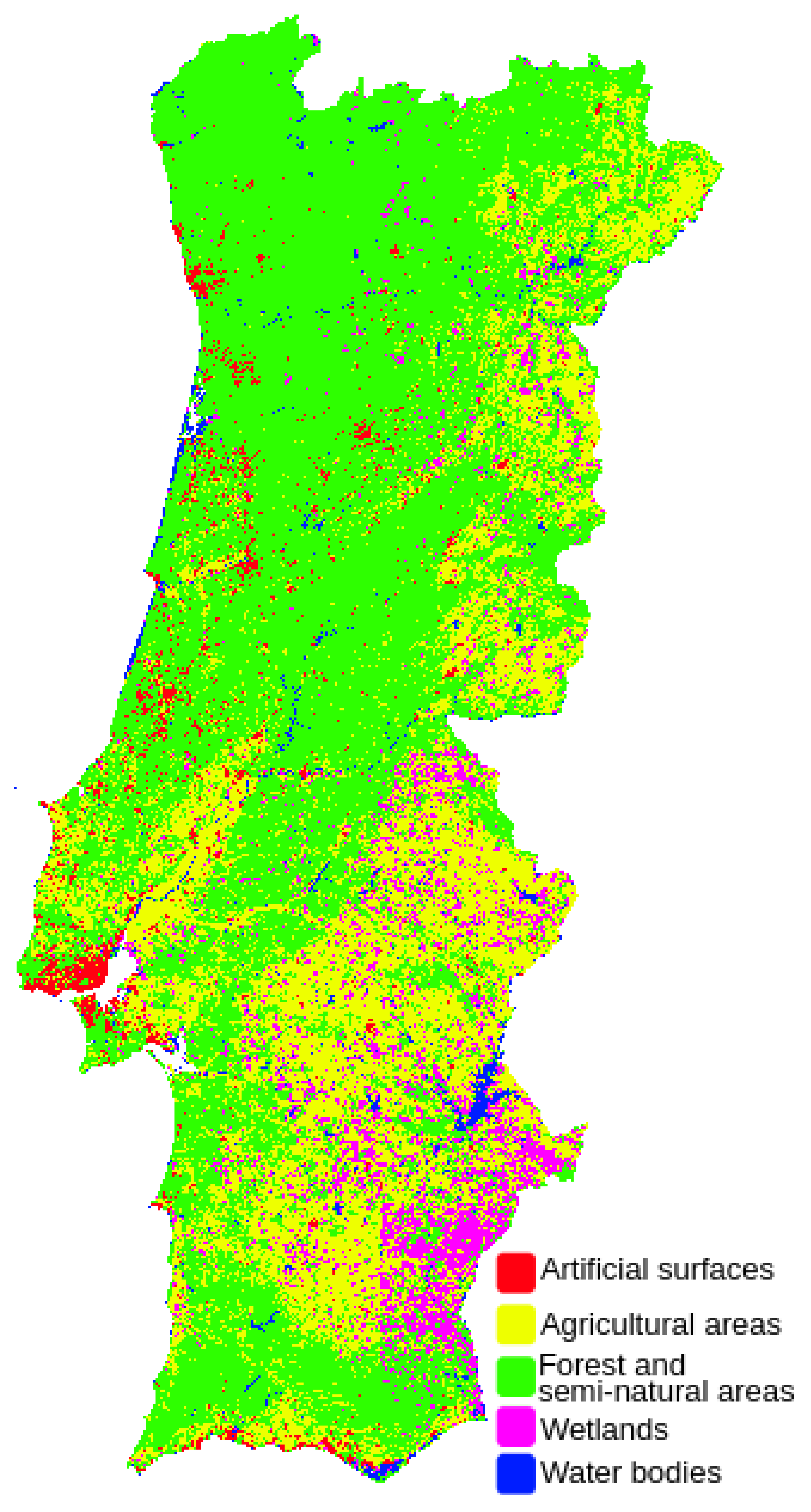

3.2. LandCoverPT Dataset

- It does not include seasonal variety.

- No thorough cloud screening was carried out, so a residual amount of cloud missed by manual inspection may remain.

- Some level 3 CLC classes are missing, since they do not exist in Portuguese territory.
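
As a hedged illustration of how level 3 CLC classes relate to the five level 1 classes used in the result tables, the sketch below relies on the fact that the first digit of a three-digit CLC code is its level 1 class. The function names and the example code lists are assumptions made for illustration; the source does not state which level 3 classes are absent from LandCoverPT.

```python
from typing import Iterable, List

# CLC level 1 names, indexed 0-4 as in the five-class tables.
LEVEL1_NAMES = [
    "Artificial surfaces",
    "Agricultural areas",
    "Forest and semi-natural areas",
    "Wetlands",
    "Water bodies",
]


def clc_level3_to_level1(code: int) -> int:
    """Map a three-digit CLC level 3 code (e.g. 311) to a 0-based level 1 index.

    Only the hundreds digit is checked; the function does not verify that
    the full code exists in the CLC nomenclature.
    """
    level1 = code // 100
    if not 1 <= level1 <= 5:
        raise ValueError(f"not a CLC level 3 code: {code}")
    return level1 - 1


def missing_classes(expected: Iterable[int], observed: Iterable[int]) -> List[int]:
    """Return the expected codes that never occur in the observed labels."""
    return sorted(set(expected) - set(observed))


# Hypothetical usage: 311 (broad-leaved forest) belongs to level 1 class 2.
forest_index = clc_level3_to_level1(311)
absent = missing_classes(expected=[111, 311, 335], observed=[111, 311])
```

A check such as `missing_classes` makes it explicit which classes a model trained on one dataset cannot be evaluated on in another.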

3.3. Models

4. Experiments and Results

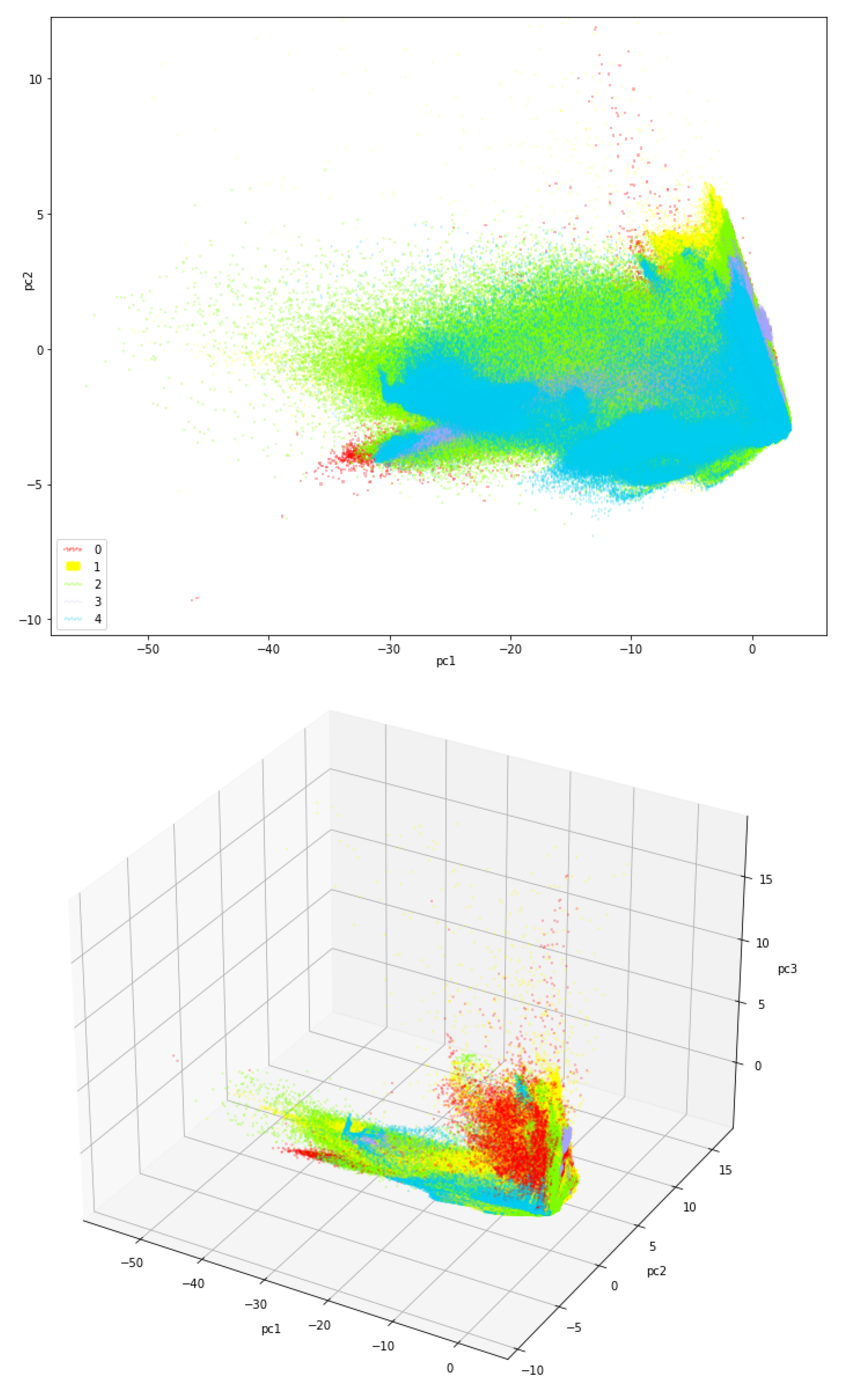

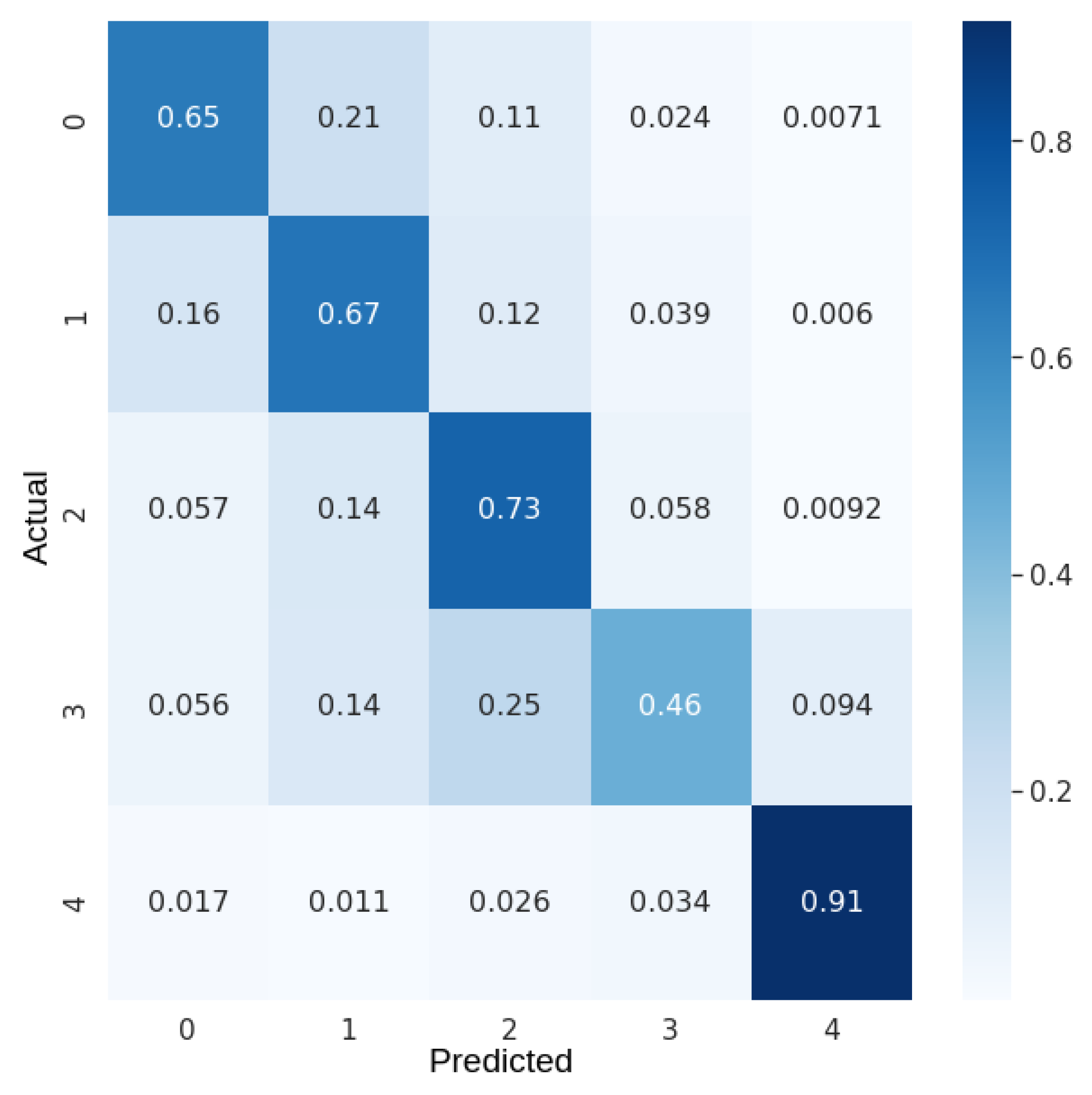

4.1. Support Vector Machine Classifier

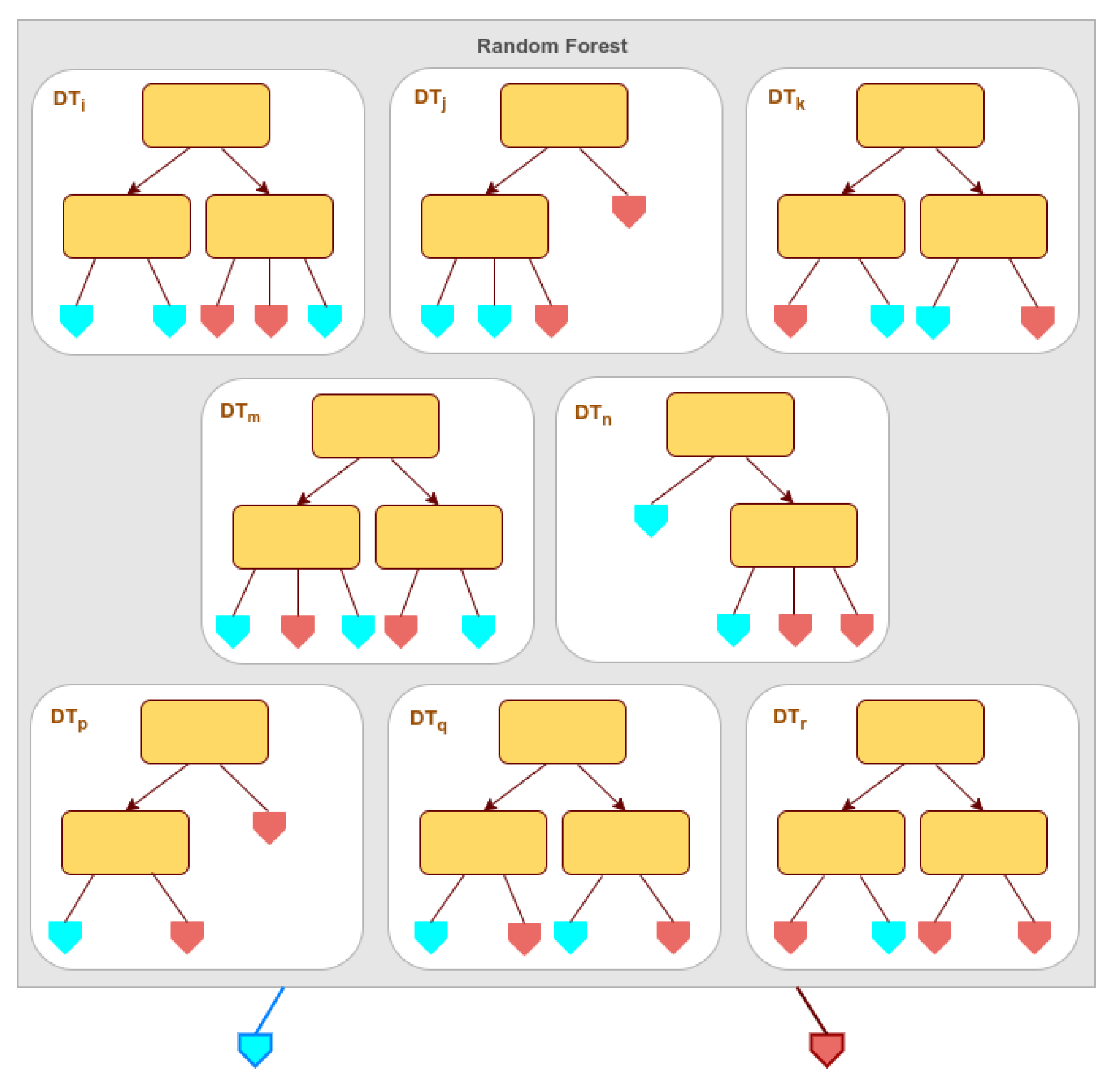

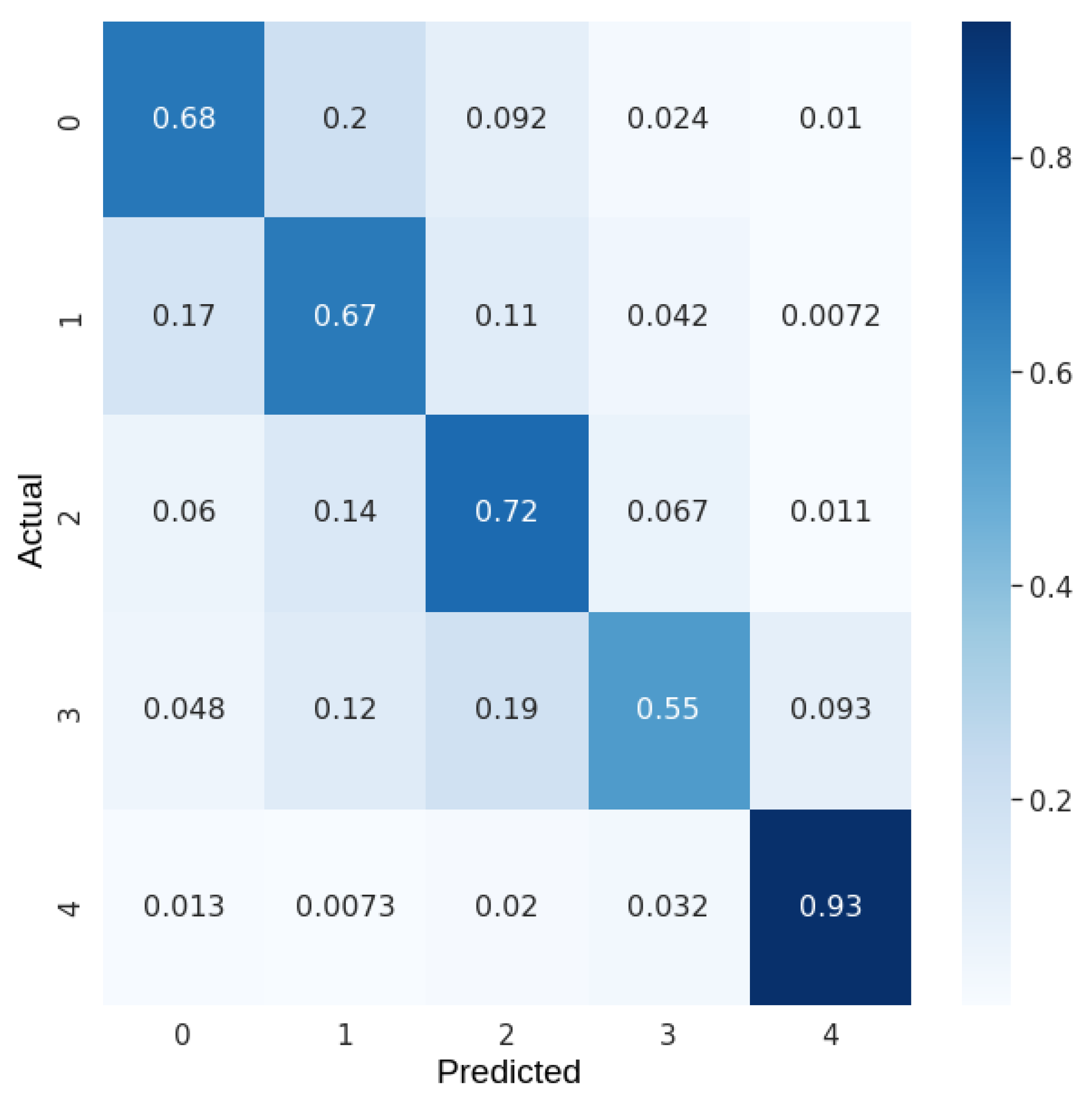

4.2. Random Forest Classifier

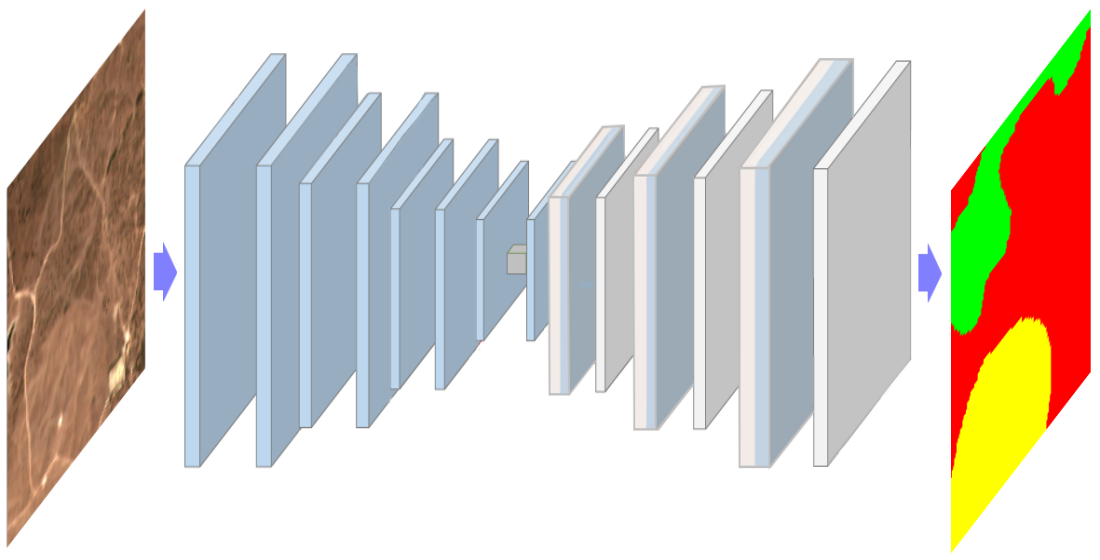

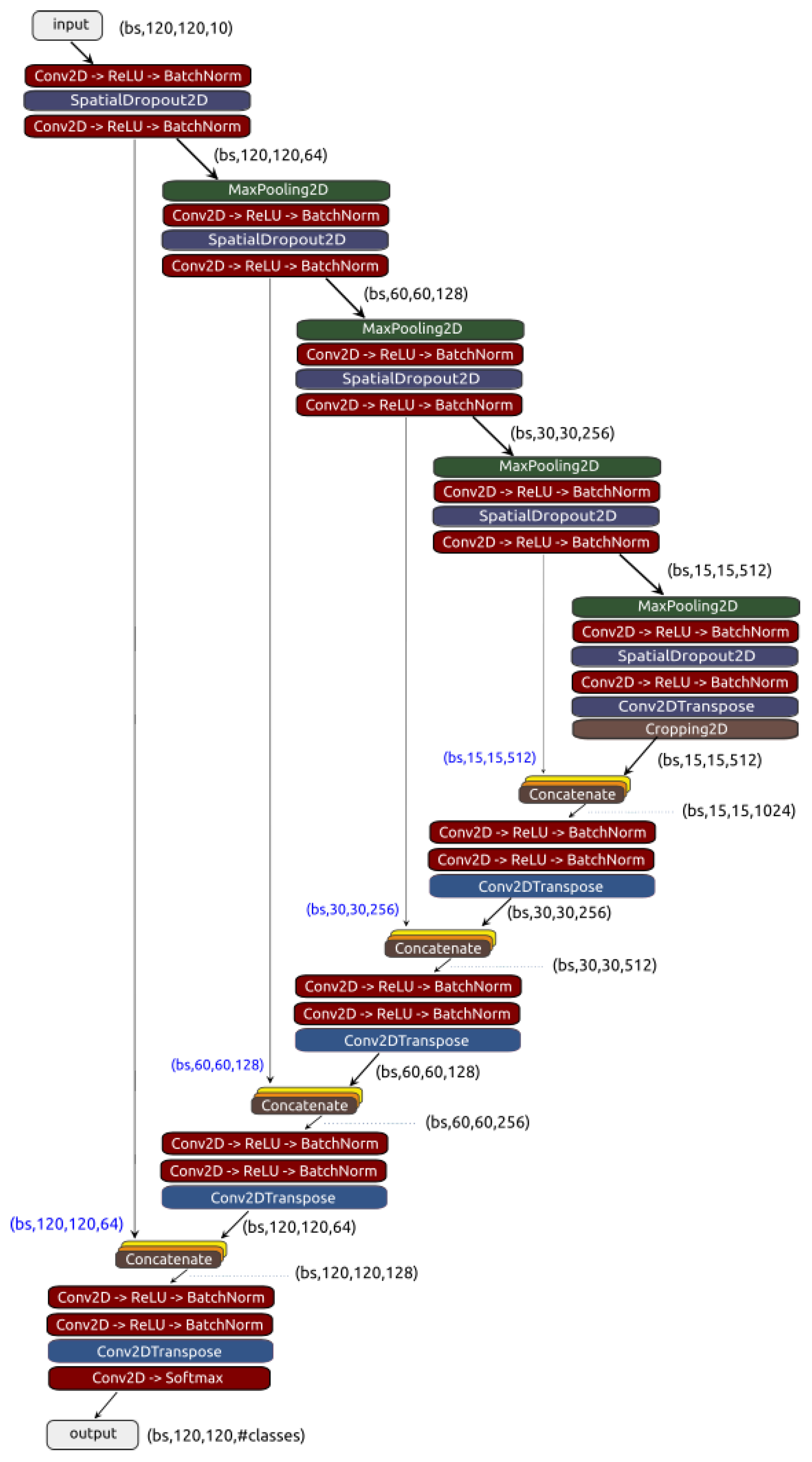

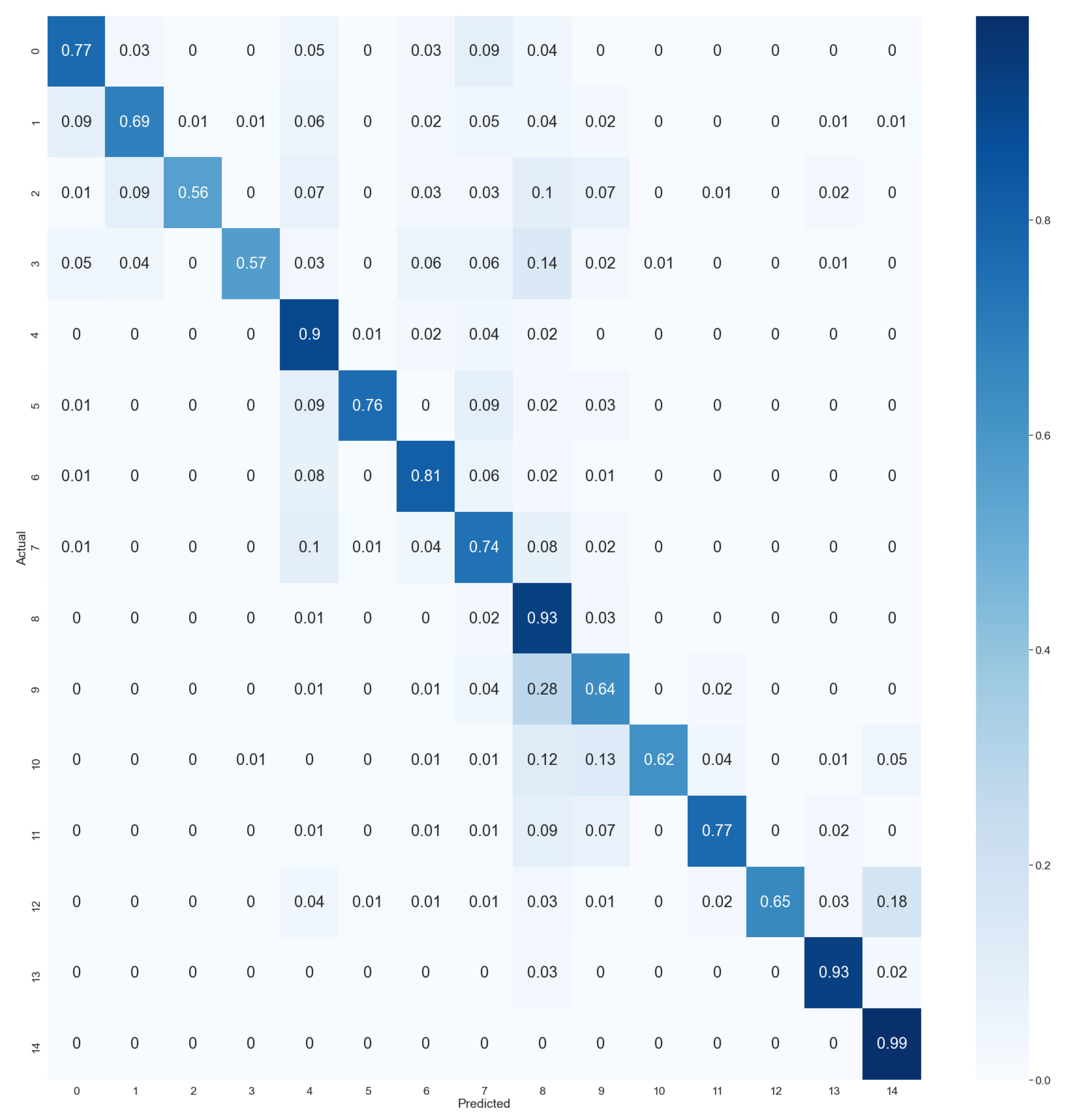

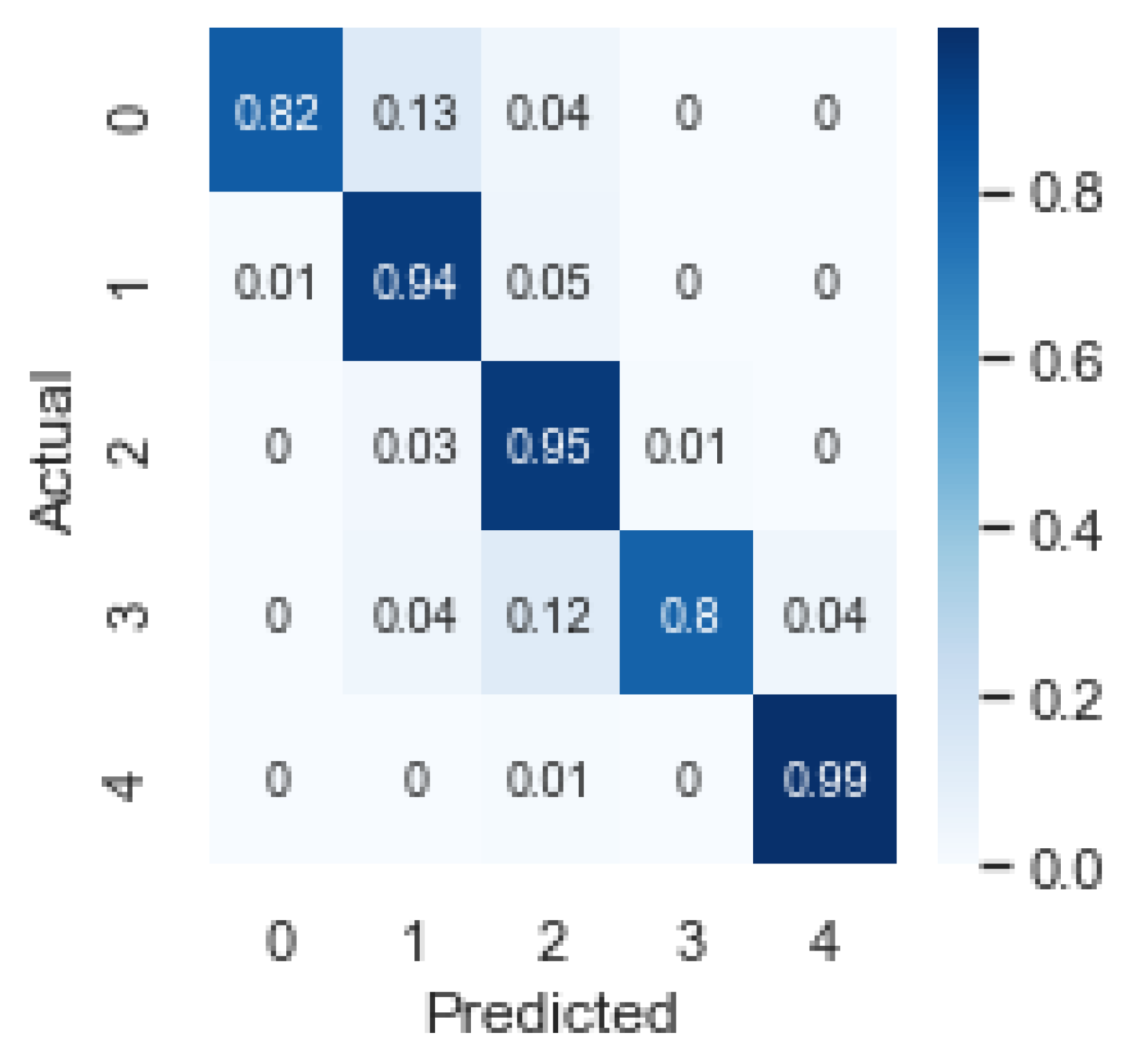

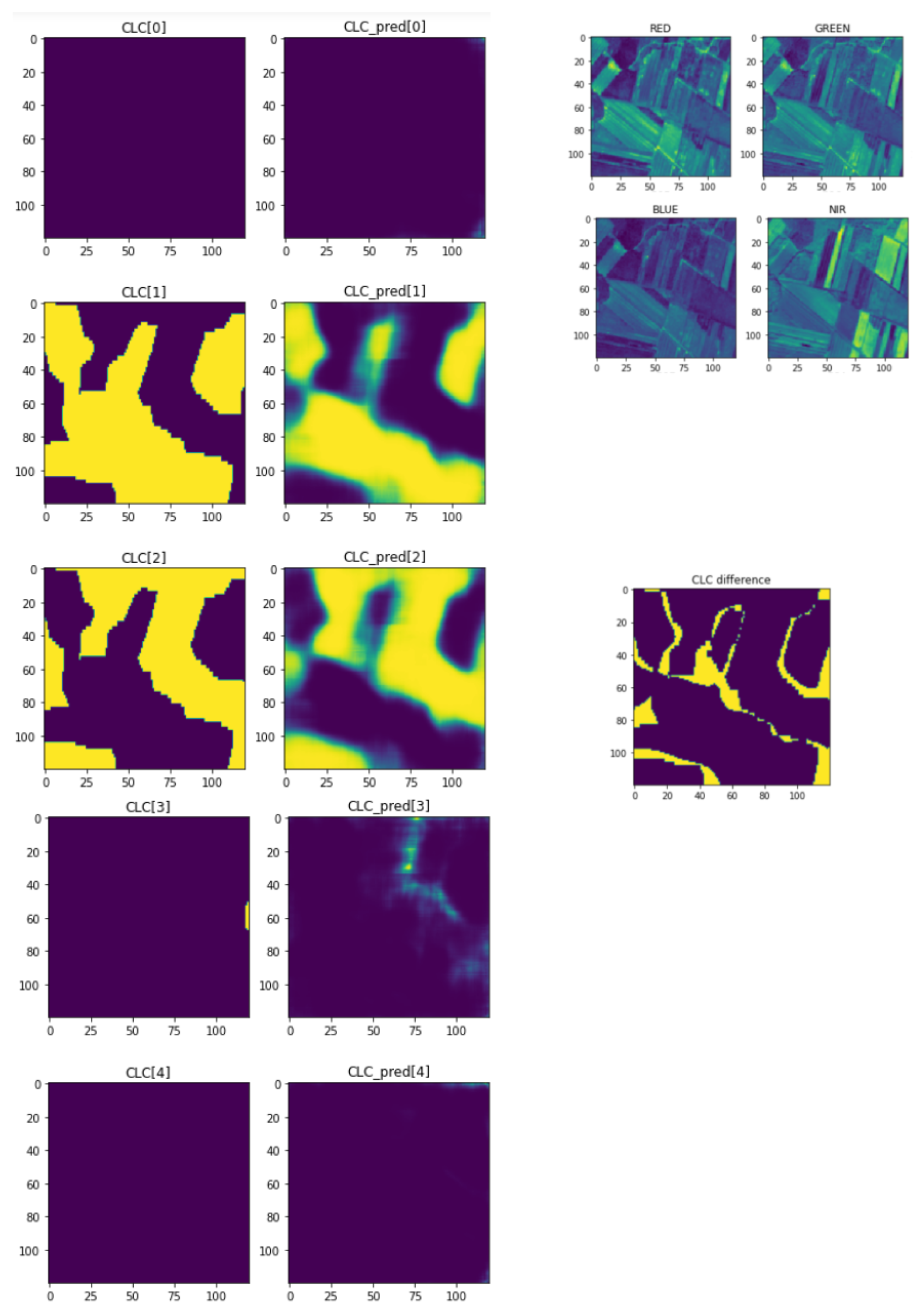

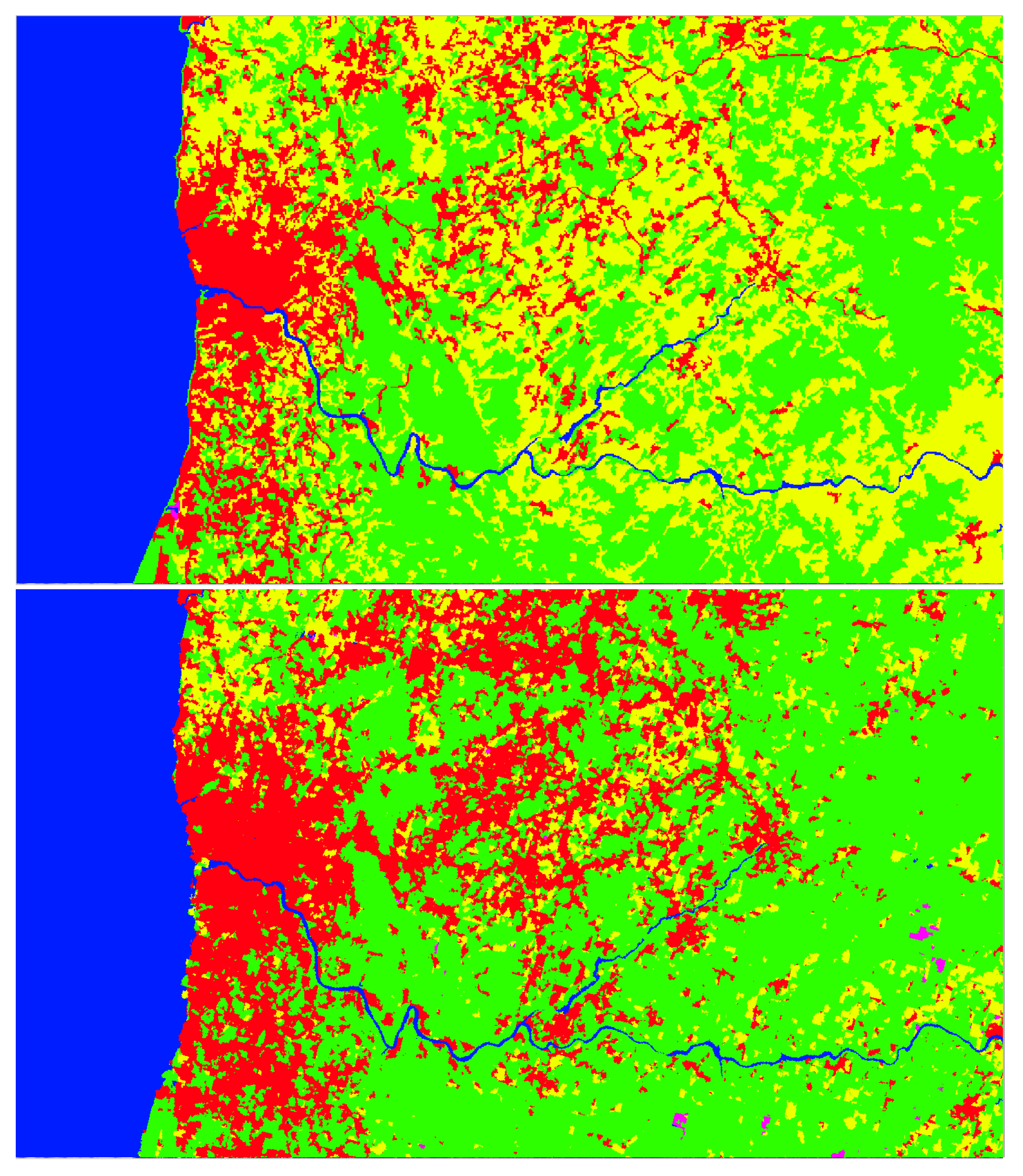

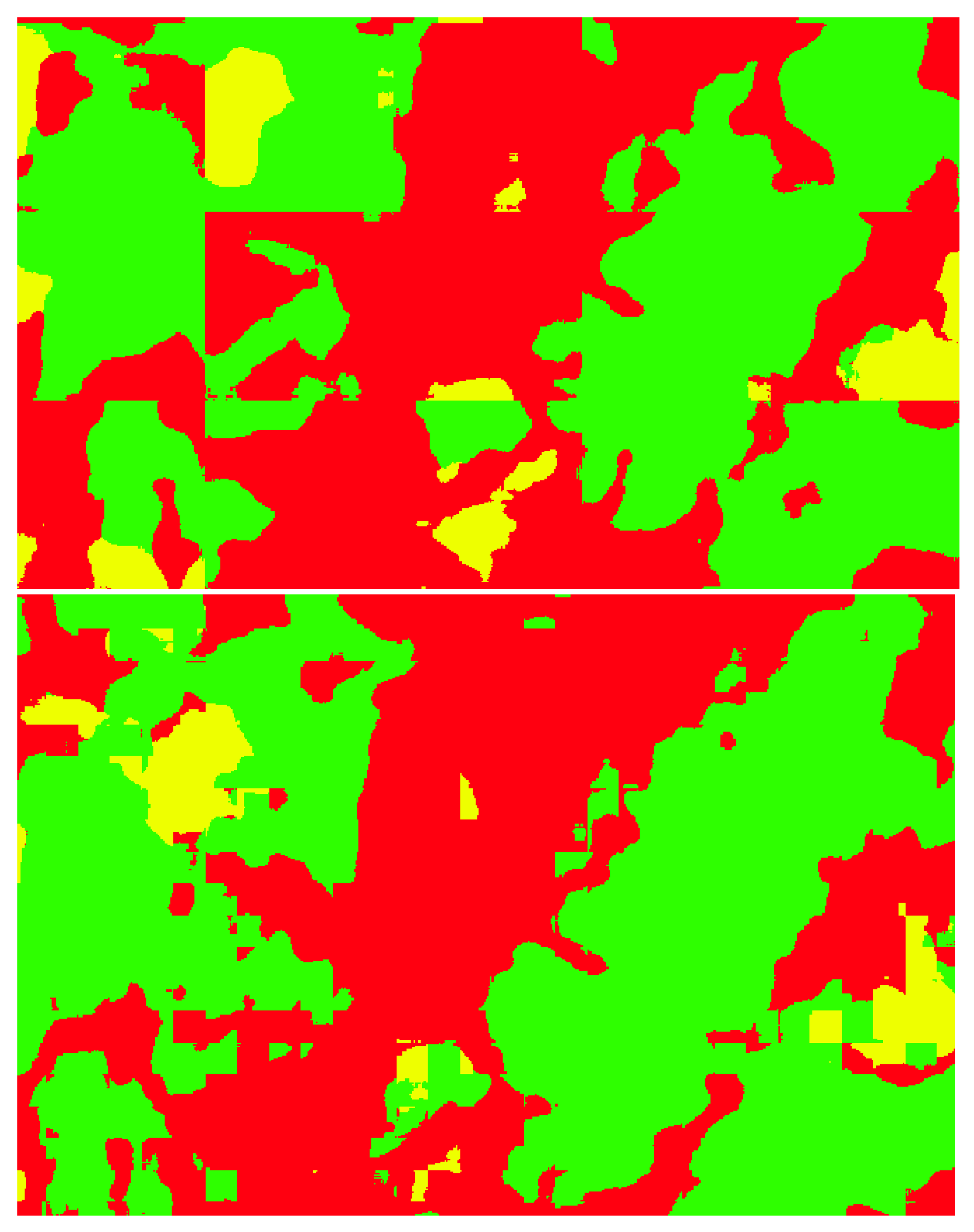

4.3. U-Net

5. Conclusions and Future Work

- Test other datasets, and improve and enlarge the LandCoverPT dataset, whose limitations currently prevent optimal results. One possible improvement is to optimize the size of the patches into which the Sentinel-2 products were divided.

- Implement other strategies to minimize the segmentation problem at the periphery of patches.

- Take a more consistent approach to optimizing model hyperparameters, for example by using a library such as Optuna or TPOT.

- Add other types of data to the optical images, such as radar images collected by the Sentinel-1 satellite.

- Test spectral indices with the random forest model.
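
The last item can be sketched as follows, taking NDVI (the index that appears in the results table) as the example. NDVI is computed as (NIR − Red) / (NIR + Red); for Sentinel-2 the red and near-infrared bands are B04 and B08. The dictionary layout of a pixel and the function `add_ndvi_feature` are assumptions made for this illustration, not the authors' pipeline.

```python
from typing import Dict, List


def ndvi(red: float, nir: float, eps: float = 1e-9) -> float:
    """NDVI = (NIR - Red) / (NIR + Red); returns 0.0 if the denominator is ~0."""
    denom = nir + red
    if abs(denom) < eps:
        return 0.0
    return (nir - red) / denom


def add_ndvi_feature(pixel: Dict[str, float]) -> List[float]:
    """Return the pixel's bands in sorted band-name order, followed by NDVI.

    Assumes the pixel dict holds reflectances keyed by Sentinel-2 band name
    and contains at least "B04" (red) and "B08" (near infrared).
    """
    bands = [pixel[name] for name in sorted(pixel)]
    return bands + [ndvi(pixel["B04"], pixel["B08"])]


# Hypothetical reflectance values for a vegetated pixel.
pixel = {"B02": 0.04, "B03": 0.06, "B04": 0.1, "B08": 0.5}
features = add_ndvi_feature(pixel)
```

A feature vector built this way could then be passed to any random forest implementation in place of the raw band values.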

Author Contributions

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Class | Precision | Recall | F1-score |
|---|---|---|---|
| 0 - Artificial surfaces | 0.69 | 0.65 | 0.67 |
| 1 - Agricultural areas | 0.57 | 0.67 | 0.62 |
| 2 - Forest and semi-natural areas | 0.59 | 0.73 | 0.66 |
| 3 - Wetlands | 0.75 | 0.46 | 0.57 |
| 4 - Water bodies | 0.89 | 0.91 | 0.90 |
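
As a reading aid for the per-class tables, the sketch below recomputes the F1-score as the harmonic mean of precision and recall, using the rows of the table above. Because the published precision and recall are already rounded to two decimals, the recomputed value can differ from the reported one by about 0.01. The variable names are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 if both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
rows = {
    "Artificial surfaces": (0.69, 0.65, 0.67),
    "Agricultural areas": (0.57, 0.67, 0.62),
    "Forest and semi-natural areas": (0.59, 0.73, 0.66),
    "Wetlands": (0.75, 0.46, 0.57),
    "Water bodies": (0.89, 0.91, 0.90),
}

# Largest absolute gap between the recomputed and the reported F1-score.
max_gap = max(abs(f1_score(p, r) - f1) for p, r, f1 in rows.values())

for name, (p, r, f1) in rows.items():
    print(f"{name}: recomputed {f1_score(p, r):.3f}, reported {f1:.2f}")
```

The Wetlands row shows why F1 is reported alongside precision: a precision of 0.75 is pulled down to an F1 of 0.57 by the low recall of 0.46.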

| Class | Precision | Recall | F1-score |
|---|---|---|---|
| 0 - Artificial surfaces | 0.70 | 0.68 | 0.69 |
| 1 - Agricultural areas | 0.59 | 0.67 | 0.62 |
| 2 - Forest and semi-natural areas | 0.63 | 0.72 | 0.67 |
| 3 - Wetlands | 0.77 | 0.55 | 0.64 |
| 4 - Water bodies | 0.88 | 0.93 | 0.91 |

| Model | Classes | Dataset | Overall Accuracy |
|---|---|---|---|
| SVM | 5 | BigEarthNet | 68.6% |
| RF | 5 | BigEarthNet | 70.6% |
| U-Net | 43 | BigEarthNet | 82.32% |
| U-Net + NDVI | 43 | BigEarthNet | 77.95% |
| U-Net | 15 | BigEarthNet | 87.11% |
| U-Net | 11 | BigEarthNet | 86.88% |
| U-Net | 8 | BigEarthNet | 92.37% |
| U-Net | 5 | BigEarthNet | 94.75% |
| U-Net | 5 | LandCoverPT | 87.26% |
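
For readers unfamiliar with the metric in the table above, overall accuracy is the share of correctly classified samples, that is, the diagonal of the confusion matrix divided by its total. The minimal sketch below uses a hypothetical three-class confusion matrix; none of the numbers come from the study.

```python
from typing import List


def overall_accuracy(confusion: List[List[int]]) -> float:
    """Sum of the diagonal divided by the total number of samples."""
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("empty confusion matrix")
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


def per_class_recall(confusion: List[List[int]]) -> List[float]:
    """Recall per true class (row); 0.0 for classes without samples."""
    return [row[i] / sum(row) if sum(row) else 0.0
            for i, row in enumerate(confusion)]


# Hypothetical matrix: rows are true classes, columns are predicted classes.
confusion = [
    [50, 5, 0],
    [10, 30, 0],
    [0, 0, 5],
]

oa = overall_accuracy(confusion)        # 85 correct out of 100 samples
recalls = per_class_recall(confusion)   # one value per true class
```

Overall accuracy is dominated by the most frequent classes, which is why it is useful to read it together with the per-class precision, recall and F1 tables.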

| Class | Precision | Recall | F1-score |
|---|---|---|---|
| 0 - Artificial surfaces | 0.86 | 0.82 | 0.84 |
| 1 - Agricultural areas | 0.94 | 0.94 | 0.94 |
| 2 - Forest and semi-natural areas | 0.95 | 0.95 | 0.95 |
| 3 - Wetlands | 0.77 | 0.80 | 0.78 |
| 4 - Water bodies | 0.98 | 0.99 | 0.98 |

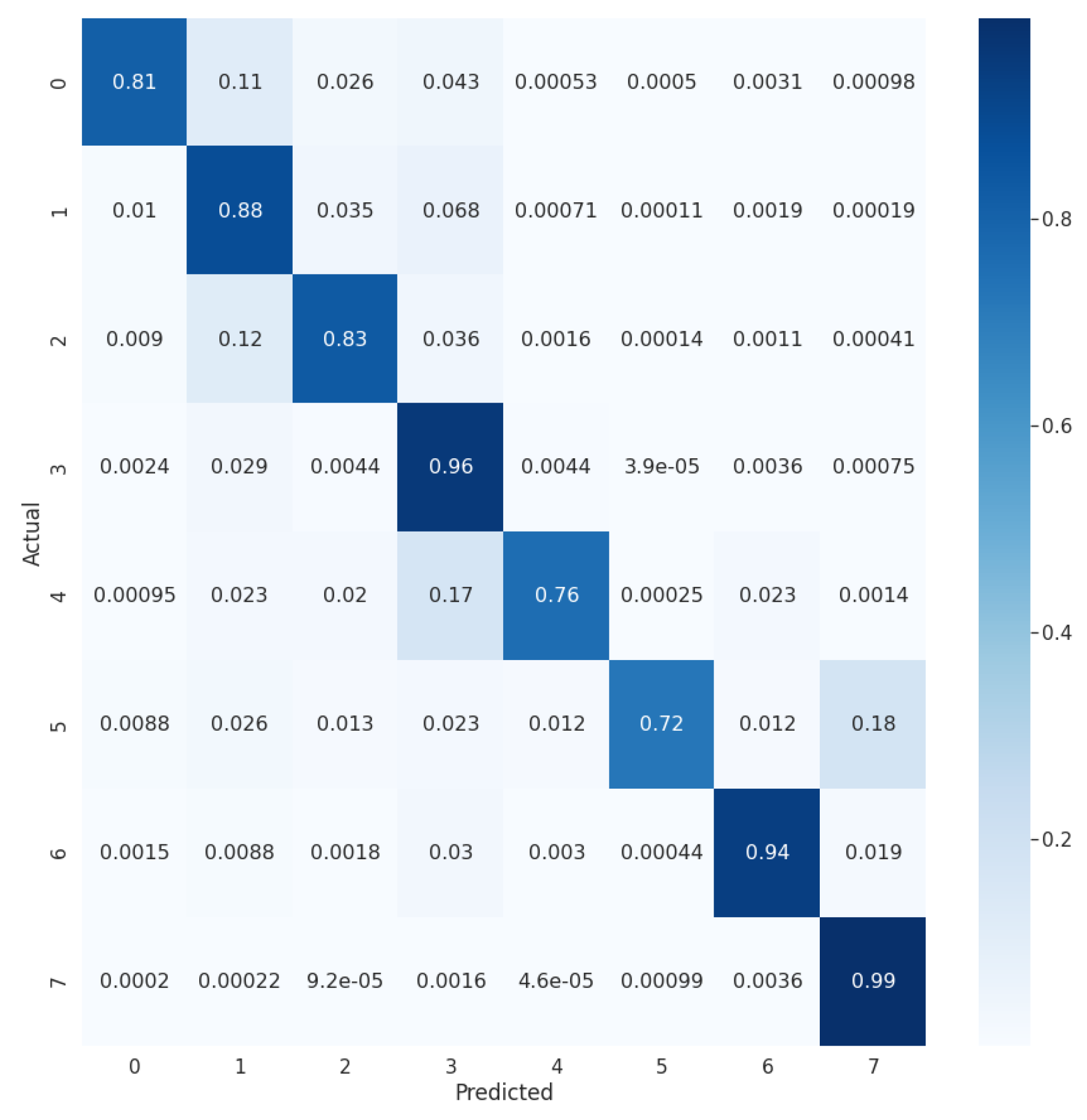

| Class | Precision | Recall | F1-score |
|---|---|---|---|
| 0 - Artificial surfaces | 0.84 | 0.81 | 0.83 |
| 1 - Agricultural areas | 0.91 | 0.88 | 0.90 |
| 2 - Pastures | 0.81 | 0.83 | 0.82 |
| 3 - Forest and semi-natural areas | 0.94 | 0.96 | 0.95 |
| 4 - Inland wetlands | 0.78 | 0.76 | 0.77 |
| 5 - Maritime wetlands | 0.79 | 0.72 | 0.76 |
| 6 - Inland waters | 0.94 | 0.94 | 0.94 |
| 7 - Maritime waters | 0.99 | 0.99 | 0.99 |

| Size of the area used on each patch | 43 CLC classes | 15 CLC classes | 5 CLC classes |
|---|---|---|---|
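
The header above suggests that only part of each patch was used when computing results; the data rows are not available here. Purely as an illustration of that idea, the sketch below scores a prediction on the central square of a patch, so that errors at the periphery are excluded. The helpers `center_crop` and `accuracy`, the patch size and the label values are all hypothetical.

```python
from typing import List

Grid = List[List[int]]


def center_crop(grid: Grid, size: int) -> Grid:
    """Return the central size x size square of a 2-D label grid."""
    rows, cols = len(grid), len(grid[0])
    if size <= 0 or size > min(rows, cols):
        raise ValueError("crop size must be between 1 and the patch size")
    top = (rows - size) // 2
    left = (cols - size) // 2
    return [row[left:left + size] for row in grid[top:top + size]]


def accuracy(pred: Grid, truth: Grid) -> float:
    """Fraction of positions where the predicted label equals the true label."""
    pairs = [(p, t) for pr, tr in zip(pred, truth) for p, t in zip(pr, tr)]
    return sum(p == t for p, t in pairs) / len(pairs)


# Hypothetical 4x4 patch: the prediction is wrong only on the border.
truth = [[1] * 4 for _ in range(4)]
pred = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

full_acc = accuracy(pred, truth)                                   # whole patch
center_acc = accuracy(center_crop(pred, 2), center_crop(truth, 2))  # centre only
```

In this toy case the accuracy rises from 0.25 on the whole patch to 1.0 on the central 2×2 area, which is the kind of effect a table indexed by used area would quantify.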

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).