1. State of the Art

The field of aerial robots that physically interact with the environment, especially aerial manipulators, has grown steadily. Diverse prototypes, features, and capabilities have been designed and tested in realistic indoor and outdoor environments, demonstrating the ability to execute various tasks, including pick-and-place, point contact, pulling and pushing, sliding, peg-in-hole insertion, and manipulation while flying. Rather than building a general-purpose aerial robot, designing and developing an aerial manipulator requires selecting the specific components or modules needed to complete the predefined task as effectively and safely as possible. For this reason, several advanced configurations have emerged, including 1) tiltable-propeller platforms, which consist of a primary rigid frame and several propellers attached to movable parts actuated by servo motors, whose additional actuation allows each propeller to be directed independently in many directions; and 2) multi-rotor morphing platforms, which consist of a primary body composed of actuated links, where each joint, or portion of a link, is outfitted with one (or more) tilted or tiltable propellers. Multi-rotor morphing aerial manipulators offer extremely high adaptability to the environment and task requirements [1].
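To illustrate why tiltable propellers add actuation authority, the following Python sketch sums the forces and torques of rotors mounted on evenly spaced arms, each tilted by a servo about its own arm axis. The geometry (four arms, 0.25 m long), the thrust and drag coefficients, and the function names are hypothetical and do not describe any specific platform surveyed in [1]; aerodynamic interactions are ignored.

```python
import numpy as np

def rot_x(angle):
    """Rotation matrix about the x-axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_z(angle):
    """Rotation matrix about the z-axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def body_wrench(tilts, speeds_sq, arm=0.25, kf=1e-5, km=1e-7):
    """Total body-frame force and torque of n rotors on evenly spaced arms.

    tilts: servo tilt of each rotor about its own arm axis [rad]
    speeds_sq: squared rotor speeds [rad^2/s^2]
    kf, km: hypothetical thrust and drag-torque coefficients
    """
    force, torque = np.zeros(3), np.zeros(3)
    n = len(tilts)
    for i in range(n):
        R_arm = rot_z(2.0 * np.pi * i / n)          # arm direction in the body plane
        R_rotor = R_arm @ rot_x(tilts[i])           # servo tilts the rotor about the arm
        position = R_arm @ np.array([arm, 0.0, 0.0])
        spin = 1.0 if i % 2 == 0 else -1.0          # alternating rotor spin directions
        f_i = R_rotor @ np.array([0.0, 0.0, kf * speeds_sq[i]])
        drag_i = R_rotor @ np.array([0.0, 0.0, -spin * km * speeds_sq[i]])
        force += f_i
        torque += np.cross(position, f_i) + drag_i
    return force, torque

# Opposite rotors tilted in opposite directions vs. all rotors untilted.
lateral, _ = body_wrench([0.2, 0.0, -0.2, 0.0], [4e5] * 4)
vertical, _ = body_wrench([0.0] * 4, [4e5] * 4)
```

In this example, tilting two opposite rotors in opposite directions produces a net sideways force while the body stays level, something a conventional quadrotor with fixed, coplanar rotors cannot do without tilting its whole body.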

Push-and-slide non-destructive inspection of curved surfaces with an eddy-current probe is the application that motivated the design of an 8-degree-of-freedom (DoF) aerial manipulator in [2]. In this study, the inspection arm cannot accurately correct high-frequency end-effector errors because of its flexibility, so the feedback action is assigned to the aerial platform. Since the platform is fully actuated, it can compensate for errors in all 6 DoFs of the end-effector using a PID controller. In this context, [3] uses a force-torque sensor mounted at the end-effector to make the feedback loop more robust against model uncertainties and external disturbances. Quadratic Programming (QP) optimization was used in the inner loop, for both fully actuated and under-actuated models, and a PID controller was applied in the outer loop. However, wind effects, blade flapping, propeller interference, and ground/wall effects are neglected, which limits outdoor applications.
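A minimal sketch of assigning the end-effector feedback to a fully actuated platform: an independent PID law acts on each of the six pose-error components (position and small-angle orientation) and commands a 6-DoF wrench. The gains, time step, and the unit-inertia double-integrator plant are assumptions for illustration; [2] and [3] use full platform models, and the QP allocation and force feedback of [3] are not shown.

```python
import numpy as np

class PID6:
    """Independent PID on a 6-vector error (x, y, z, roll, pitch, yaw)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd = (np.asarray(g, dtype=float) for g in (kp, ki, kd))
        self.dt = dt
        self.integral = np.zeros(6)
        self.prev_error = None

    def update(self, error):
        error = np.asarray(error, dtype=float)
        self.integral += error * self.dt
        if self.prev_error is None:
            derivative = np.zeros(6)                 # no derivative kick on the first call
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target, steps=4000, dt=0.005):
    """Drive a unit-inertia double integrator (hypothetical stand-in for the
    fully actuated platform) so that the end-effector pose reaches `target`."""
    pid = PID6(kp=[4.0] * 6, ki=[0.5] * 6, kd=[3.0] * 6, dt=dt)
    pose, vel = np.zeros(6), np.zeros(6)
    for _ in range(steps):
        wrench = pid.update(target - pose)           # 6-DoF command: force + torque
        vel += wrench * dt                           # unit mass / inertia
        pose += vel * dt
    return pose

target = np.array([0.2, -0.1, 0.5, 0.05, 0.0, -0.1])
final_pose = simulate(target)
```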

A different approach to coping with parametric uncertainties, instead of robust or adaptive control, was considered in [4]. The main concern of that study was to generate desired trajectories that minimize the sensitivity of the closed-loop system to parametric uncertainties, using gradient-based optimization of the trajectory with respect to this sensitivity. Its contributions include extending the method to closed-loop control and validating it with Monte Carlo simulations. As a follow-up, [5] investigates how the input sensitivity, in conjunction with the state sensitivity, can be minimized to achieve high tracking performance with low input deviations. A large number of initial trajectories were then independently modified to evaluate the validity of the proposed scheme.
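The notion of closed-loop state sensitivity used in [4] and [5] can be illustrated numerically: it is the derivative of the closed-loop state with respect to an uncertain model parameter. The sketch below is a toy example, not the method of [4] or [5]: a 1-D point mass tracks a quintic rest-to-rest trajectory with PD feedback plus feedforward computed from the nominal mass, and the sensitivity of the tracking error to the true mass is approximated by central finite differences rather than by integrating the sensitivity dynamics. All numerical values are assumptions.

```python
import numpy as np

def reference(t, T, dist):
    """Quintic rest-to-rest profile from 0 to `dist` in time T (position, velocity, acceleration)."""
    s = min(max(t / T, 0.0), 1.0)
    pos = dist * (10 * s**3 - 15 * s**4 + 6 * s**5)
    vel = dist * (30 * s**2 - 60 * s**3 + 30 * s**4) / T
    acc = dist * (60 * s - 180 * s**2 + 120 * s**3) / T**2
    return pos, vel, acc

def tracking_errors(true_mass, T, nominal_mass=1.0, dist=1.0, kp=25.0, kd=10.0, dt=1e-3):
    """Simulate the closed loop and return the tracking error at every time step."""
    x, v, errors = 0.0, 0.0, []
    for k in range(int(1.5 * T / dt)):
        pos, vel, acc = reference(k * dt, T, dist)
        # Feedforward uses the nominal mass; PD feedback acts on the tracking error.
        u = nominal_mass * acc + kp * (pos - x) + kd * (vel - v)
        v += (u / true_mass) * dt                    # semi-implicit Euler integration
        x += v * dt
        errors.append(pos - x)
    return np.array(errors)

def peak_sensitivity(T, nominal_mass=1.0, h=1e-4):
    """Peak |d e(t) / d m| at the nominal mass, via central finite differences."""
    e_plus = tracking_errors(nominal_mass + h, T, nominal_mass)
    e_minus = tracking_errors(nominal_mass - h, T, nominal_mass)
    return float(np.max(np.abs(e_plus - e_minus) / (2 * h)))

for T in (1.0, 2.0, 3.0):
    print(f"T = {T:.1f} s, peak sensitivity = {peak_sensitivity(T):.4f}")
```

Only the trajectory duration is varied here; a gradient-based method in the spirit of [4] would instead adjust the trajectory's shape parameters to reduce this sensitivity.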

Using parallel rather than serial manipulators offers benefits such as reduced inertia, effort distributed over all motors, and reduced joint errors. A dual-arm configuration also has advantages: for instance, one arm can act as a position sensor relative to a grasping point while the second arm performs the manipulation. The objective is to use the information supplied by the joint servos to determine the position of the aerial unit relative to the grasping point. Note that the positioning error of the aerial unit should be less than 10% of the manipulator's reach for the aerial manipulation operation to be completed reliably [6]. In this regard, [7] proposed an aerial manipulation platform consisting of a parallel 3-DoF manipulator mounted on an omnidirectional tilt-rotor aerial vehicle. Treating the flying base and the arm as dynamically decoupled usually causes instability when rapid end-effector motions are required. To cope with this problem, that study implements standard inverse-dynamics control plus a linear control action, which properly compensates for the dynamic coupling between the flying base and the arm, although the limits of this dynamic compensation for fast trajectories call for more advanced control methods. Dependency on an analytical model was eliminated in [8] by combining Model Predictive Path Integral (MPPI) control with an impedance controller (which regulates the relation between interaction force and displacement), despite the computational cost of the Model Predictive Control (MPC) optimization.
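The inverse dynamics plus linear control action described for [7] follows the classical computed-torque pattern: the commanded torque cancels the modeled dynamics and imposes linear PD error dynamics. The sketch below applies it to a single hypothetical link (mass, length, and gains are made up) and ignores the flying base, so it shows the control structure only, not the base-arm coupling compensation of [7].

```python
import math

# Hypothetical single link: point mass M at distance L from the joint.
M, L, G = 0.3, 0.25, 9.81
INERTIA = M * L**2

def gravity_torque(q):
    """Gravity torque with the joint angle q measured from the horizontal."""
    return M * G * L * math.cos(q)

def computed_torque(q, dq, q_des, dq_des, ddq_des, kp=100.0, kd=20.0):
    """Inverse dynamics evaluated at a PD-corrected desired acceleration."""
    a = ddq_des + kd * (dq_des - dq) + kp * (q_des - q)   # linear control action
    return INERTIA * a + gravity_torque(q)                # inverse dynamics

def simulate(duration=2.0, dt=1e-3):
    """Track q_des(t) = 0.5 sin(2t) starting from rest; return the peak tracking error."""
    q, dq, worst = 0.0, 0.0, 0.0
    for k in range(int(duration / dt)):
        t = k * dt
        q_des, dq_des, ddq_des = 0.5 * math.sin(2 * t), math.cos(2 * t), -2.0 * math.sin(2 * t)
        worst = max(worst, abs(q_des - q))
        tau = computed_torque(q, dq, q_des, dq_des, ddq_des)
        ddq = (tau - gravity_torque(q)) / INERTIA          # true link dynamics
        dq += ddq * dt
        q += dq * dt
    return worst

peak_error = simulate()
```

With a perfect model the error obeys e'' + kd e' + kp e = 0, so the only error here is the start-up transient caused by the reference moving while the link starts at rest. Using a different mass in the plant than in `computed_torque` would show how model mismatch degrades tracking.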

2. Challenges

The literature identifies several open challenges for aerial manipulators:

1) Range and Endurance: flight time and range are short and must be extended for practical applications such as beyond-line-of-sight operations; doing so involves platform configuration, energy sources, control, and task and motion planning.

2) Safety in human and object interaction: some practical applications, such as aerial co-working that involves physical interaction, require dedicated safety considerations.

3) Precision: precision is determined by the sensitivity of the position and orientation sensors and is limited by inevitable disturbances such as wind gusts and the aerodynamic effects of nearby objects.

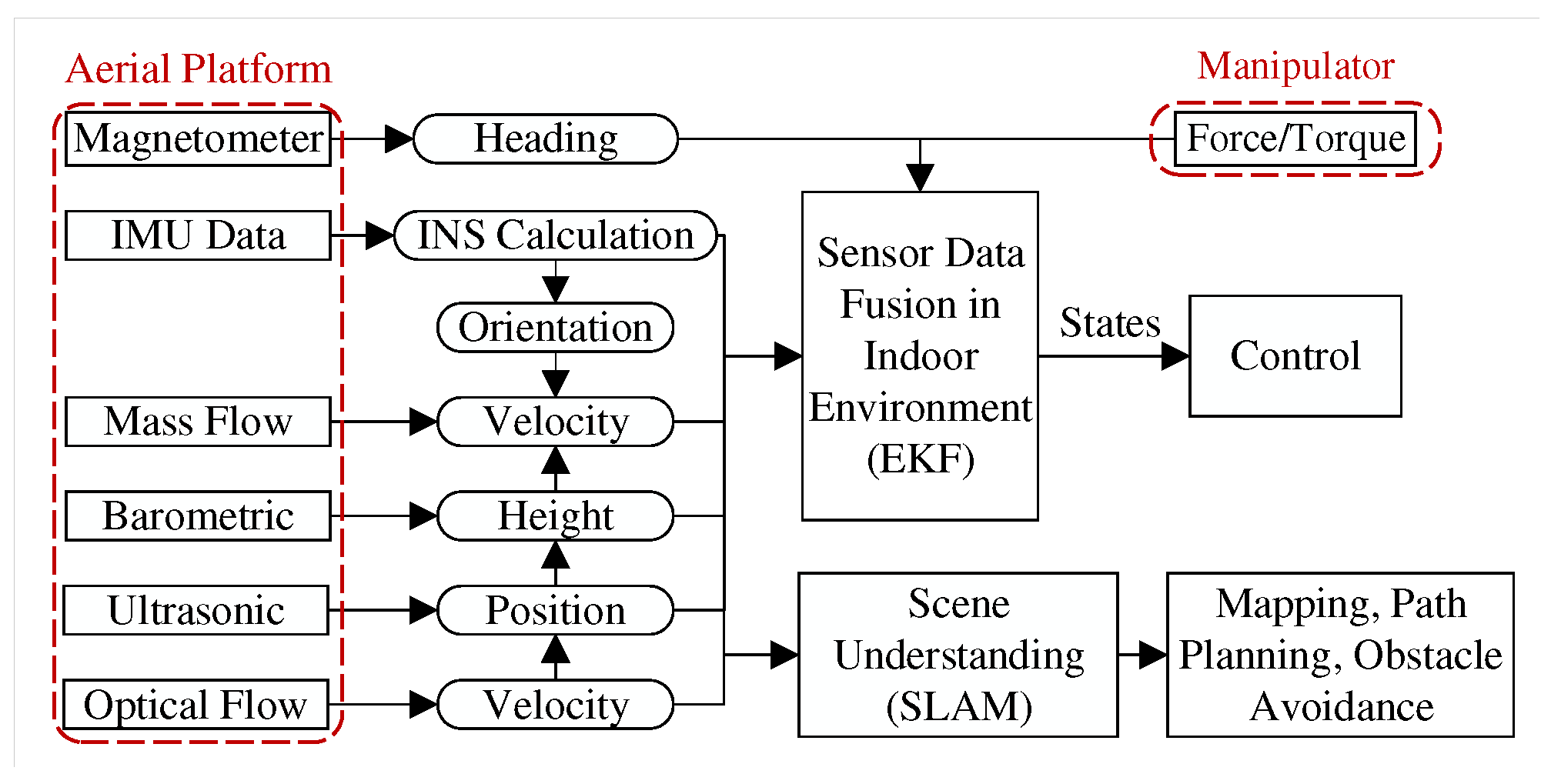

Figure 1 outlines a comprehensive scheme for indoor sensor data fusion.

4) Reliability: in the event of thrust or actuator faults, it is essential to maintain stability by appropriately adjusting the manipulator configuration and the thrust vector, thereby relocating the center of gravity [9].

5) Security: autonomous applications are exposed to adversarial threats, which can be classified by attack type (influence, specificity, security violation), attack frequency (iterative, one-time), adversarial falsification (false positive/negative), adversarial knowledge (white-, gray-, or black-box attack), and adversarial specificity (targeted, non-targeted); these are explained in detail in [10].
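The reliability challenge rests on the fact that reconfiguring the arm moves the vehicle's center of gravity. As a worked example with hypothetical masses and lengths (a planar two-link arm attached at the platform's center of mass, not the system of [9]), the combined center of mass is the mass-weighted average p_com = (m_b p_b + Σ m_i p_i) / (m_b + Σ m_i):

```python
import math

def combined_com(q1, q2, body_mass=1.5, link_masses=(0.2, 0.1), link_lengths=(0.3, 0.25)):
    """Planar (x, z) center of mass of a platform plus a 2-link arm.

    The platform CoM is at the origin and the arm is attached there; joint
    angles are measured from the downward vertical. All values are hypothetical.
    """
    m1, m2 = link_masses
    l1, l2 = link_lengths
    # Uniform links are assumed, so each link's CoM is at its mid-length.
    c1 = (0.5 * l1 * math.sin(q1), -0.5 * l1 * math.cos(q1))
    elbow = (l1 * math.sin(q1), -l1 * math.cos(q1))
    c2 = (elbow[0] + 0.5 * l2 * math.sin(q1 + q2),
          elbow[1] - 0.5 * l2 * math.cos(q1 + q2))
    total = body_mass + m1 + m2
    x = (m1 * c1[0] + m2 * c2[0]) / total   # the body term is zero (CoM at the origin)
    z = (m1 * c1[1] + m2 * c2[1]) / total
    return x, z

hanging = combined_com(0.0, 0.0)             # arm straight down
horizontal = combined_com(math.pi / 2, 0.0)  # arm stretched sideways
```

Swinging the arm from hanging straight down to horizontal shifts the combined center of mass sideways by 0.0725 / 1.8 ≈ 0.040 m in this example; a fault-tolerant controller can use such a shift as an additional control input.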

3. Approaches

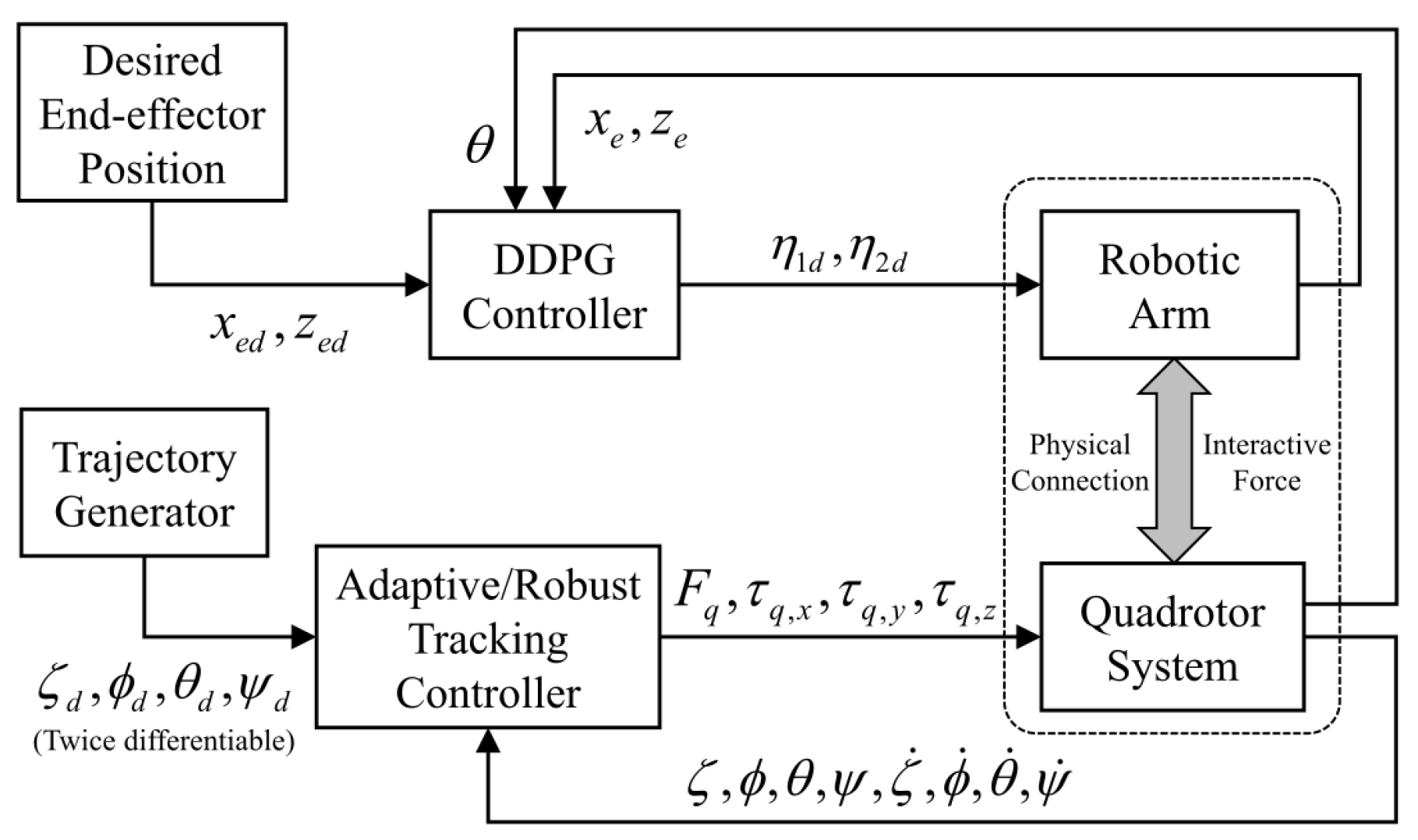

As the previous sections show, designing accurate and robust controllers for aerial manipulators with robot arms mounted in various configurations remains a major concern, and few studies have used learning-based controllers, whether with dynamic coupling or decoupling approaches. In [11], a Deep Deterministic Policy Gradient (DDPG) approach was used for the robot arm alongside an adaptive/robust control method for the aerial platform (a quadrotor).
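In the decoupled scheme of [11], the residual interaction force/torque from the arm acts on the quadrotor as a disturbance that the platform controller must reject. A minimal sketch of that idea, assuming a 1-D altitude model, a known bound on the disturbance, and a boundary-layer sliding-mode law (a standard robust technique chosen here for illustration, not the specific control law of [11]):

```python
import math

def sat(x):
    """Saturation function used inside the boundary layer."""
    return max(-1.0, min(1.0, x))

def simulate(steps=6000, dt=1e-3, mass=1.2, g=9.81, d_max=2.0,
             lam=4.0, eta=1.0, phi=0.05):
    """Altitude regulation under a bounded, unknown disturbance (hypothetical
    stand-in for the residual arm force). Returns the final altitude error."""
    z, dz = 0.0, 0.0
    z_des = 1.0                          # constant set-point: desired velocity and acceleration are 0
    gain = d_max + mass * eta            # robust gain must dominate the disturbance bound
    for k in range(steps):
        t = k * dt
        e, de = z - z_des, dz
        s = de + lam * e                 # sliding variable
        u = mass * (g - lam * de) - gain * sat(s / phi)
        d = d_max * math.sin(3.0 * t)    # hypothetical residual disturbance, |d| <= d_max
        ddz = (u - mass * g + d) / mass
        dz += ddz * dt
        z += dz * dt
    return z - z_des

final_error = simulate()
```

The gain `d_max + mass * eta` makes the sliding variable decrease whenever it lies outside the boundary layer, for any disturbance within the assumed bound; the boundary layer `phi` trades a small residual error for a continuous control signal.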

In this work, DDPG reduces the interaction force/torque to a minuscule but non-negligible quantity. The residual force/torque is therefore regarded as a disturbance in the quadrotor dynamics, and a robust control law is presented to compensate for it. The flowchart of the proposed method is depicted in Figure 2.

On the other hand, [12] followed a coupled approach. The point-contact robot is modeled as a single rigid body that measures the contact wrench with a force/torque sensor and controls it with an impedance controller whose gains are adjusted by a learned policy. A teacher policy with access to ground-truth information determines the required stiffness of the impedance controller. A student policy is then trained to imitate the teacher and may be refined further using RL. The trained policy can be transferred directly to the physical system without any additional sim-to-real adaptation. In an RL setting, the idea behind student-teacher learning is that a teacher with access to privileged (ground-truth) information is much easier to train.
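The variable-impedance and student-teacher ideas of [12] can be reduced to a 1-D toy. A spring-like impedance law with stiffness K that presses a reference penetration DELTA into an elastic surface of stiffness k_env produces a static force K k_env DELTA / (K + k_env), since the two stiffnesses act in series. In the sketch below, a hypothetical teacher knows k_env and solves for the K that yields a target force; the student only sees the force measured during a probing contact with a fixed stiffness and is fit to imitate the teacher by linear least squares. All constants, the probing step, and the feature choice are assumptions, and RL fine-tuning is not shown.

```python
import numpy as np

DELTA = 0.02      # reference penetration commanded to the impedance controller [m]
K_PROBE = 500.0   # fixed stiffness used for a short probing contact [N/m]
F_TARGET = 4.0    # desired contact force [N]

def contact_force(k_imp, k_env, delta=DELTA):
    """Static force of a spring-like impedance law pressing on an elastic surface
    (impedance and surface stiffness act as two springs in series)."""
    return k_imp * k_env * delta / (k_imp + k_env)

def teacher(k_env):
    """Privileged policy: knows the true surface stiffness and solves for the
    impedance stiffness that yields F_TARGET (requires k_env * DELTA > F_TARGET)."""
    return F_TARGET * k_env / (k_env * DELTA - F_TARGET)

def features(probe_force):
    """Student observation: only the force measured during the probing contact."""
    inv = 1.0 / np.atleast_1d(np.asarray(probe_force, dtype=float))
    return np.column_stack([np.ones_like(inv), inv, inv**2])

# Teacher demonstrations on randomly sampled surfaces (hypothetical stiffness range).
rng = np.random.default_rng(0)
k_train = rng.uniform(300.0, 3000.0, size=200)
probe_train = contact_force(K_PROBE, k_train)
# The student regresses the compliance 1/K on the features by least squares.
weights, *_ = np.linalg.lstsq(features(probe_train), 1.0 / teacher(k_train), rcond=None)

def student(probe_force):
    """Non-privileged policy that imitates the teacher from the probe force only."""
    return 1.0 / (features(probe_force) @ weights)
```

Because the compliance 1/K happens to be affine in 1/F_probe for this toy contact model, the student matches the teacher essentially exactly; in [12] the student must instead infer surface properties from proprioceptive and tactile sensing.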

There are other approaches to controlling a full-body aerial manipulator without decoupling or gain-adjusting techniques. In [

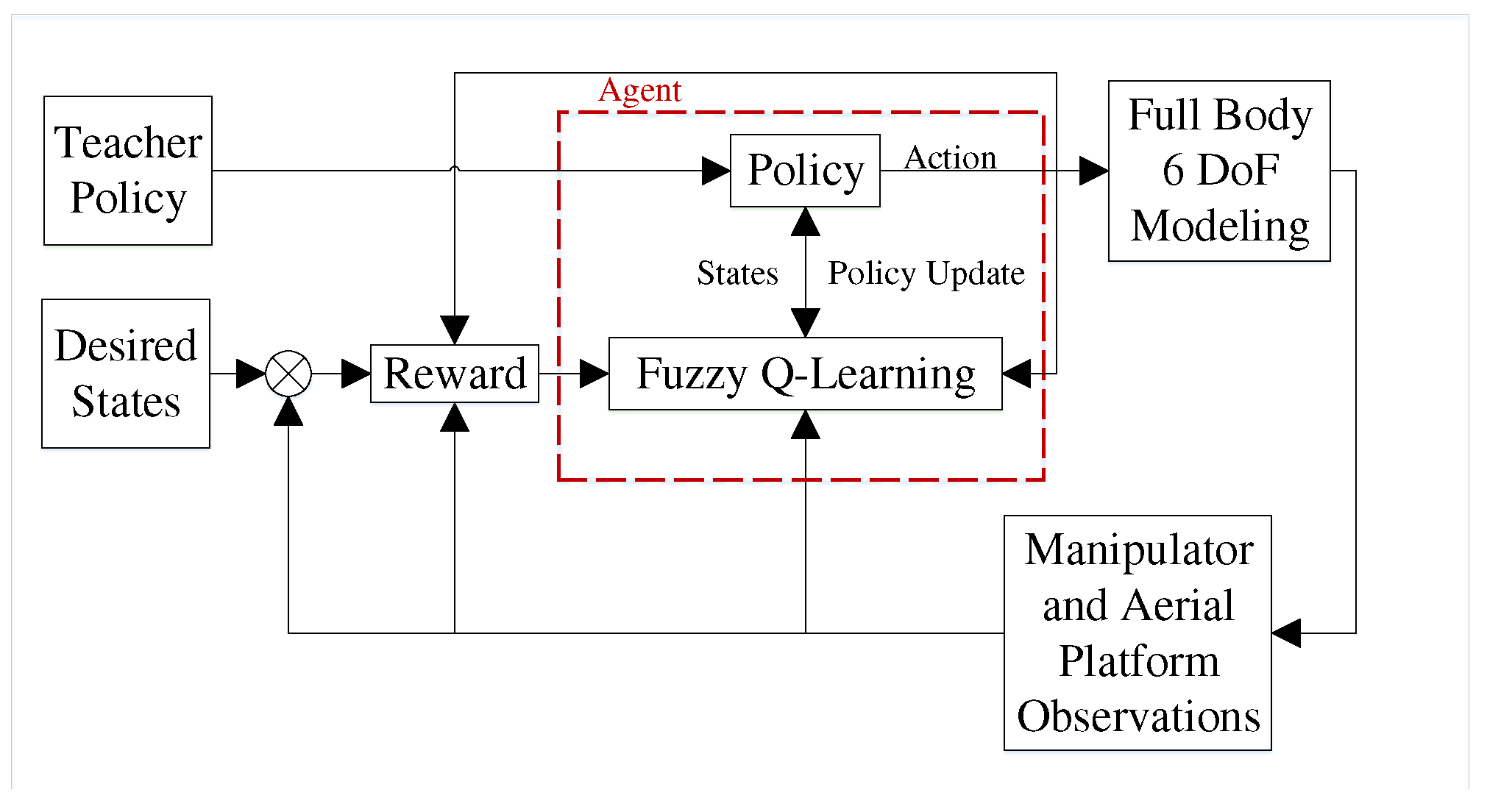

13] attitude control of a novel aircraft with low stability characteristics is addressed using Fuzzy Q-learning (FQL). Although the reward function definition in [

13], and [

12] are analogous, the robustness performance of the proposed FQL was outstanding against actuator faults, atmospheric disturbances, model parameter uncertainties, and sensor measurement errors in comparison with well-known PID and Dynamic Inversion methods. Furthermore, the FQL eliminates the problem of computational resources that are needed for Deep Reinforcement Learning algorithms.
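A compact sketch of the generic fuzzy Q-learning scheme (not the improved variant of [13]) on a toy first-order attitude model: each fuzzy rule keeps a q-value per candidate action and picks an action epsilon-greedily, the applied command is the firing-strength-weighted sum of the rules' choices, and the temporal-difference error is credited back to each rule in proportion to its firing strength. The membership functions, candidate actions, reward -|theta|, and all hyperparameters are assumptions. The small q-table, rather than a deep network, is what keeps the computational cost low.

```python
import numpy as np

CENTERS = np.array([-1.0, -0.5, 0.0, 0.5, 1.0])   # triangular fuzzy sets on the angle error [rad]
ACTIONS = np.array([-1.0, 0.0, 1.0])               # candidate rate commands per rule [rad/s]
DT = 0.2                                           # control period [s]

def firing(theta):
    """Firing strengths of the rules; the triangles form a partition of unity on [-1, 1]."""
    x = np.clip(theta, -1.0, 1.0)
    return np.maximum(0.0, 1.0 - np.abs(x - CENTERS) / 0.5)

def greedy_action(q, theta):
    """Defuzzified action: firing-strength-weighted sum of each rule's best action."""
    return firing(theta) @ ACTIONS[np.argmax(q, axis=1)]

def train(episodes=600, steps=40, gamma=0.9, eps=0.2, seed=0):
    rng = np.random.default_rng(seed)
    q = np.zeros((len(CENTERS), len(ACTIONS)))      # one q-value per (rule, candidate action)
    rows = np.arange(len(CENTERS))
    for episode in range(episodes):
        alpha = 0.2 / (1.0 + 0.02 * episode)        # decaying learning rate
        theta = rng.uniform(-1.0, 1.0)
        for _ in range(steps):
            phi = firing(theta)
            chosen = np.argmax(q, axis=1)           # each rule picks its own action...
            explore = rng.random(len(CENTERS)) < eps
            chosen[explore] = rng.integers(len(ACTIONS), size=explore.sum())  # ...epsilon-greedily
            u = phi @ ACTIONS[chosen]               # global action
            q_sa = phi @ q[rows, chosen]            # fuzzy Q-value of the applied action
            theta = float(np.clip(theta + DT * u, -2.0, 2.0))  # toy first-order attitude model
            reward = -abs(theta)
            td = reward + gamma * (firing(theta) @ q.max(axis=1)) - q_sa
            q[rows, chosen] += alpha * td * phi     # credit each rule by its firing strength
    return q

q_table = train()
```

After training, the greedy fuzzy policy should command corrective rates toward zero for large angle errors on either side; the exact q-values depend on the random seed and hyperparameters.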

Figure 3 proposes a road map that combines the advantages of FQL and student-teacher learning for future implementations. According to this block diagram, a full-body 6-DoF model including the manipulator arm and the aerial platform will first be developed. Next, a comprehensive reward function is defined in terms of the position of the manipulator end-effector, its rate of change, and the rate of change of the aerial platform position. The action set is defined as a weighted sum of the manipulator end-effector and aerial platform positions, and the state set as the position and velocity of the manipulator end-effector. The action set can be varied depending on the end-effector application; for instance, in some complex tasks, the orientation of the aerial platform can be added as a third term of the action vector.
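One possible reading of the proposed reward, state, and action definitions, written as plain functions: the reward penalizes the end-effector position error, the end-effector speed, and the platform speed; the state stacks the end-effector position and velocity; and the action is a weighted sum of end-effector and platform position commands. All weights and names are hypothetical placeholders for the road map, not results.

```python
import numpy as np

def reward(p_ee, p_ee_des, v_ee, v_base, w_pos=1.0, w_vel=0.1, w_base=0.05):
    """Negative weighted cost on end-effector position error, end-effector speed,
    and platform speed. The weights are hypothetical tuning parameters."""
    return -(w_pos * np.linalg.norm(np.subtract(p_ee, p_ee_des))
             + w_vel * np.linalg.norm(v_ee)
             + w_base * np.linalg.norm(v_base))

def state(p_ee, v_ee):
    """State vector: end-effector position and velocity (6 entries)."""
    return np.concatenate([np.asarray(p_ee, float), np.asarray(v_ee, float)])

def action(p_ee_cmd, p_base_cmd, w_ee=0.7, w_base=0.3):
    """Action: weighted sum of end-effector and platform position commands."""
    return w_ee * np.asarray(p_ee_cmd, float) + w_base * np.asarray(p_base_cmd, float)

r = reward([0.1, 0.0, 0.5], [0.0, 0.0, 0.5], [0.0, 0.2, 0.0], [0.05, 0.0, 0.0])
```

Extending the action with the platform orientation, as suggested for complex tasks, would add a further weighted term whose form depends on the chosen attitude representation.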

References

[1] Ollero, A.; Tognon, M.; Suarez, A.; Lee, D.; Franchi, A. Past, Present, and Future of Aerial Robotic Manipulators. IEEE Transactions on Robotics 2022, 38, 626–645.

[2] Tognon, M.; Chávez, H.A.T.; Gasparin, E.; Sablé, Q.; Bicego, D.; Mallet, A.; Lany, M.; Santi, G.; Revaz, B.; Cortés, J.; Franchi, A. A Truly-Redundant Aerial Manipulator System With Application to Push-and-Slide Inspection in Industrial Plants. IEEE Robotics and Automation Letters 2019, 4, 1846–1851.

[3] Nava, G.; Sablé, Q.; Tognon, M.; Pucci, D.; Franchi, A. Direct Force Feedback Control and Online Multi-Task Optimization for Aerial Manipulators. IEEE Robotics and Automation Letters 2020, 5, 331–338.

[4] Giordano, P.R.; Delamare, Q.; Franchi, A. Trajectory Generation for Minimum Closed-Loop State Sensitivity. 2018 IEEE International Conference on Robotics and Automation (ICRA), 2018, pp. 286–293.

[5] Brault, P.; Delamare, Q.; Giordano, P.R. Robust Trajectory Planning with Parametric Uncertainties. 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 11095–11101.

[6] Suarez, A.; Vega, V.M.; Fernandez, M.; Heredia, G.; Ollero, A. Benchmarks for Aerial Manipulation. IEEE Robotics and Automation Letters 2020, 5, 2650–2657.

[7] Bodie, K.; Tognon, M.; Siegwart, R. Dynamic End Effector Tracking With an Omnidirectional Parallel Aerial Manipulator. IEEE Robotics and Automation Letters 2021, 6, 8165–8172.

[8] Brunner, M.; Rizzi, G.; Studiger, M.; Siegwart, R.; Tognon, M. A Planning-and-Control Framework for Aerial Manipulation of Articulated Objects. IEEE Robotics and Automation Letters 2022, 7, 10689–10696.

[9] Pose, C.; Giribet, J.; Mas, I. Adaptive Center-of-Mass Relocation for Aerial Manipulator Fault Tolerance. IEEE Robotics and Automation Letters 2022, 7, 5583–5590.

[10] Qayyum, A.; Usama, M.; Qadir, J.; Al-Fuqaha, A. Securing Connected and Autonomous Vehicles: Challenges Posed by Adversarial Machine Learning and the Way Forward. IEEE Communications Surveys and Tutorials 2020, 22, 998–1026.

[11] Liu, Y.C.; Huang, C.Y. DDPG-Based Adaptive Robust Tracking Control for Aerial Manipulators With Decoupling Approach. IEEE Transactions on Cybernetics 2022, 52, 8258–8271.

[12] Zhang, W.; Ott, L.; Tognon, M.; Siegwart, R. Learning Variable Impedance Control for Aerial Sliding on Uneven Heterogeneous Surfaces by Proprioceptive and Tactile Sensing. IEEE Robotics and Automation Letters 2022, 7, 11275–11282.

[13] Zahmatkesh, M.; Emami, S.A.; Banazadeh, A.; Castaldi, P. Robust Attitude Control of an Agile Aircraft Using Improved Q-Learning. Actuators 2022, 11.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).