1. Introduction

It is widely accepted in medicine that early detection of many diseases allows for more effective treatment [1,2]. Diabetes is among the most prevalent diseases, and its incidence has risen globally. It is normally related to the synthesis of insulin and excessive glucose levels in the body [3,4], leading to metabolic disruption and consequent health issues such as cardiovascular disease, kidney failure, mental impairment, and vision loss, among others. Diabetic retinopathy (DR) is a devastating eye disease that can lead to permanent blindness [5,6].

DR is classified into non-proliferative DR (NPD) and proliferative DR (PD), with PD being the significantly more severe phase of progressive deterioration [8,9]. NPD occurs when a blood vessel in the retina perforates and causes minor bleeding in the eye; PD occurs when new blood vessels sprout in front of the photoreceptors, causing significant bleeding into the eye [7,8].

Early detection of DR is challenging since it is asymptomatic or presents with only minor indications at first, later leading to impaired vision and ultimately blindness. Thus, early detection of DR is crucial for preventing the complications of this illness. Diagnosis requires experts (ophthalmologists) equipped with efficient technologies and approaches, which motivates advances in the diagnosis of this disease [7,10].

Feature extraction using machine learning (ML) approaches was the foundation of the vast bulk of DR research until the difficulty of manual feature extraction prompted researchers to shift their focus to deep learning (DL) [11].

Subsequent research in medicine opened the way for the development of numerous computer-assisted technologies, including data mining, image processing, ML, and DL. In recent years, DL has received attention in numerous disciplines, including sentiment classification, handwriting identification, and healthcare imaging analysis [12,13], among other fields [7].

Our goal was to build a rapid, highly automated, DL-based DR identification system that could assist ophthalmologists in evaluating potential DR. Early detection and treatment of DR can lessen its effects. To accomplish this, we developed a diagnostic model using the publicly accessible Asia Pacific Tele-Ophthalmology Society (APTOS) dataset [14], applying a unique image enhancement technique together with DenseNet-121 [15] for classification.

The main contributions of this study are as follows:

- To generate high-quality images for the APTOS dataset, we used the contrast limited adaptive histogram equalization (CLAHE) [16] filtering algorithm in conjunction with enhanced super-resolution generative adversarial networks (ESRGAN) [17], which is the primary contribution of this study.

- By using augmentation techniques, we ensured that the APTOS dataset contained the same number of samples in every class.

- Accuracy (Acc), confusion matrix (CM), Specificity, Sensitivity, top N accuracy, and the F1-score (F1sc) are the indicators used in a comprehensive comparative study to establish the viability of the suggested system.

- The pre-trained DenseNet-121 network is fine-tuned on the APTOS dataset.

- By employing a diverse training procedure supported by multiple permutations of training settings (e.g., data augmentation (DA), batch size, validation patience, and learning rate), the overall reliability of the proposed method is improved and overfitting is avoided.

The APTOS dataset was used in both the training and evaluation phases of model construction. Using 80:20 hold-out validation, the model obtained a classification accuracy of 98.7% with the enhancement approaches and 81.23% without them.

This research considers two scenarios: in scenario 1, the DR images are enhanced using the CLAHE and ESRGAN techniques, while in scenario 2, no enhancement is employed. In both scenarios, the model weights were trained using DenseNet-121, and images from the APTOS dataset were used to compare the outcomes of the two models. Due to the class imbalance in the dataset, augmentation techniques are required for oversampling.

The remainder of this paper is organized as follows. Section 2 provides background and reviews related work. Section 3 describes the proposed approach, Section 4 presents and analyzes the findings, and Section 5 concludes the investigation.

2. Related Work

Manual detection of DR from images suffers from a number of problems. Many people in developing countries are affected because there are not enough qualified ophthalmologists and examinations are expensive. Because early detection is so crucial in the fight against blindness, automated processing methods have been developed to make accurate and quick diagnosis and treatment more accessible. Machine Learning (ML) models trained on fundus images have recently been able to automate DR identification accurately. Considerable effort has gone into establishing automatic methods that are both effective and inexpensive [2,18].

As a result, these methods already outperform their earlier versions. In DR classification studies, there are two primary schools of thought: conventional, specialist methods and cutting-edge, ML-based methods. Below is a closer look at each of these techniques. For instance, Costa et al. [19] employed instance learning to detect lesions in the Messidor dataset. In [20], Wang et al. describe a two-step strategy based on handcrafted features for diagnosing DR and its severity. Using the histogram of oriented gradients, Leeza et al. [21] devised a collection of attributes for use in DR identification. Employing Haralick and multiresolution features, Gayathri et al. [22] performed binary and multiclass DR classification. Pires et al. [23] progressively built a larger CNN model, applying different forms of augmentation and multi-resolution training using the APTOS 2019 dataset. When tested on the Messidor-2 dataset, the model yielded an area under the receiver operating characteristic (ROC) curve of 98.2%. Furthermore, Zhang et al. [24] advocated using fundus images to identify and grade DR. Using ensemble learning over the Inception V3, Xception, and InceptionResNetV2 CNN models, they achieved 97.7% area under the curve, 97.5% sensitivity, and 97.7% specificity. Math et al. [25] proposed a learning model for DR prediction in which a pre-trained CNN estimated segment-level DR and identified all segment levels, reaching a 0.963 area under the ROC curve. Moreover, Maqsood et al. [26] introduced a new 3D CNN model to localize hemorrhages, an early indicator of DR, using a pre-trained VGG-19 model to extract features from the segmented hemorrhages. The study used 1509 images from multiple databases and averaged 97.71% accuracy.

Several studies have sought to identify and classify DR at its various stages simultaneously. Traditional image processing and DL both have their place in such image classification challenges [7,27,28,29,30,31,32]. The review of DR identification and diagnostic methods shows that a number of shortcomings remain to be addressed. For instance, due to a lack of relevant data, little effort has been put into creating and training a DL model from scratch. Many researchers, however, have reported high reliability when employing pre-trained models with transfer learning. Finally, almost all of these studies trained DL models only on raw images, not preprocessed ones, which made it hard to scale up the resulting classification infrastructure. In the present research, multiple layers are added to the architecture of pre-trained models in order to build a lightweight DR detection system, which makes the proposed system more efficient and useful.

3. Research Methodology

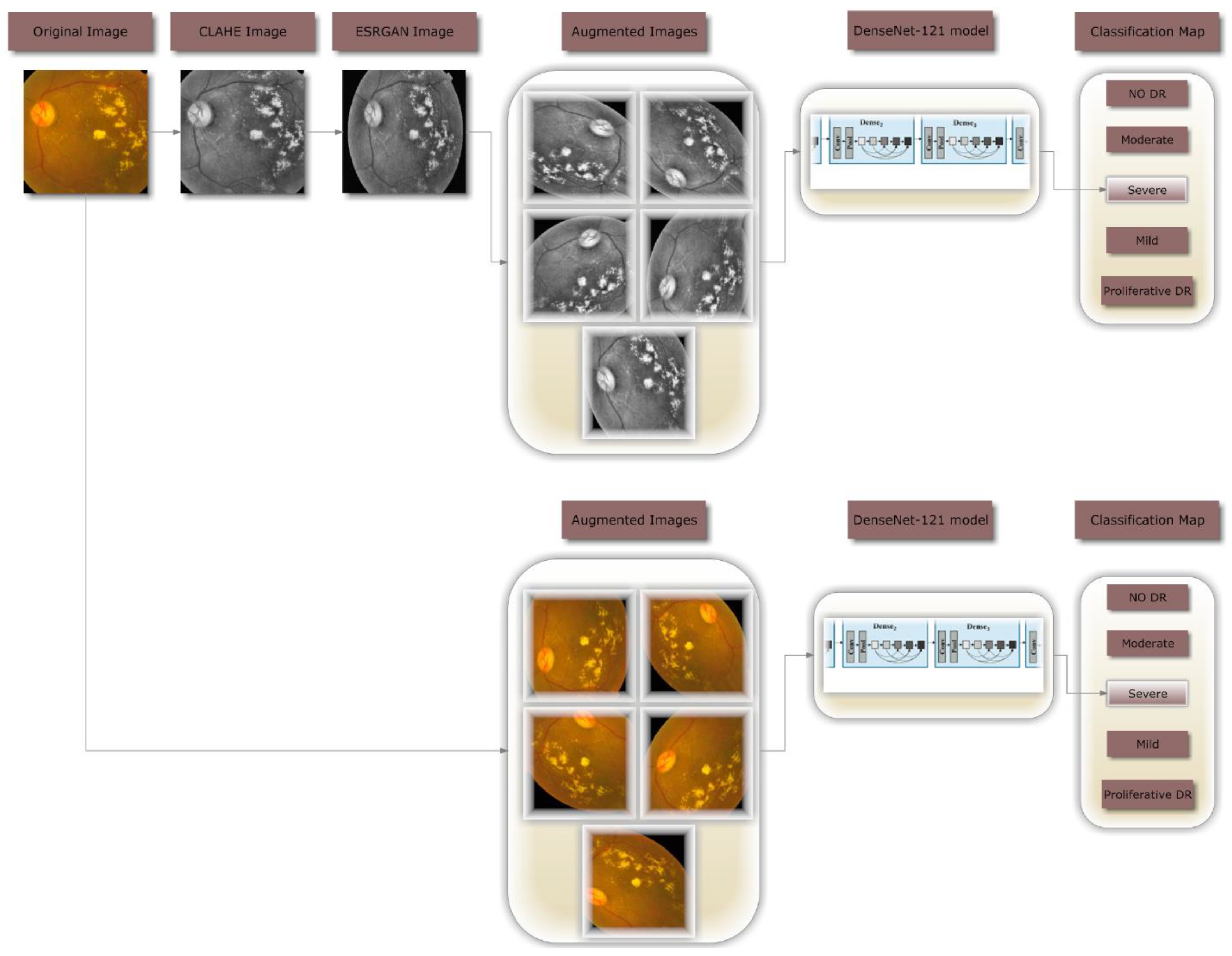

Figure 1 illustrates the complete procedure of the proposed methodology used to build a fully automated DR classification model from the dataset discussed in this paper. It covers two distinct scenarios: case 1 scenario, in which CLAHE is used as a preprocessing step followed by ESRGAN, and case 2 scenario, in which neither step is used. In both scenarios, the images are augmented to prevent overfitting. In the last step, the images are passed to the DenseNet-121 model for classification.

3.1. Dataset Description

It is vital to select a dataset with a sufficient number of high-quality photographs. This research utilizes the APTOS 2019 (Asia Pacific Tele-Ophthalmology Society) Blindness Detection Dataset [

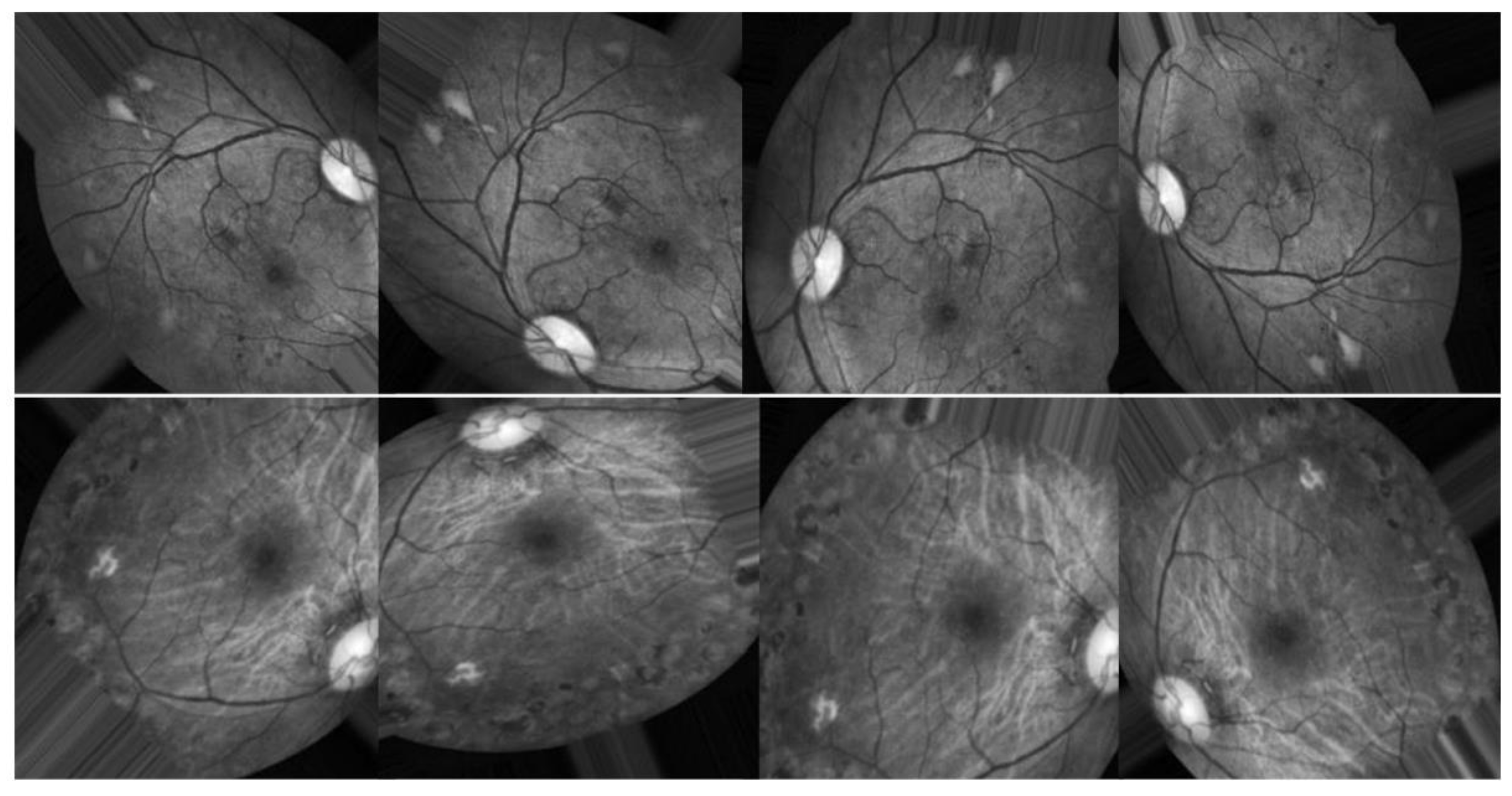

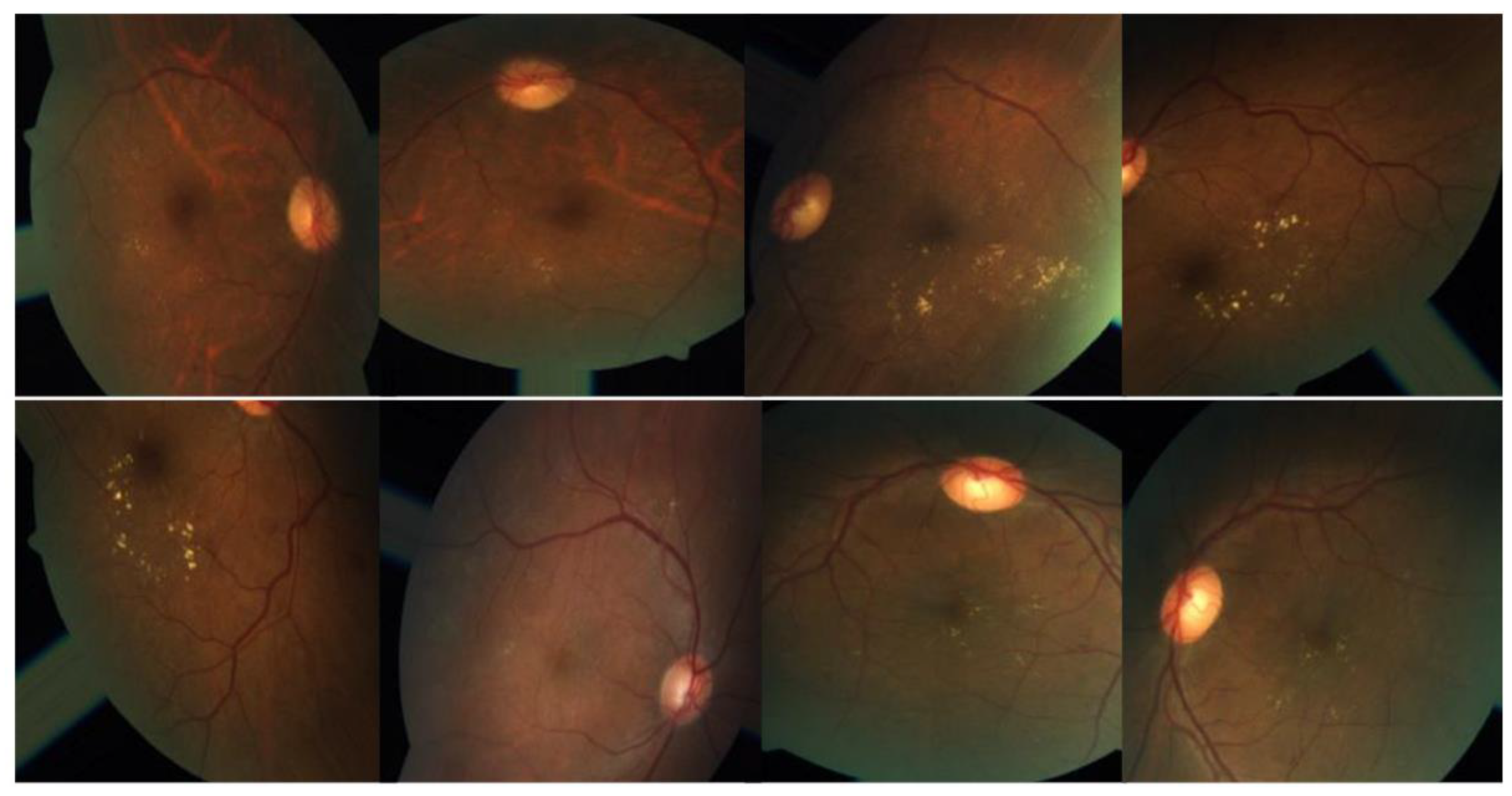

14], a publicly available Kaggle dataset including a great number of images. This collection contains high-resolution Retinal images for the five stages of DR, ranging from 0 (no DR) to 4 (proliferate DR), with labels 1-4 correlating to the four levels of severity. As shown in

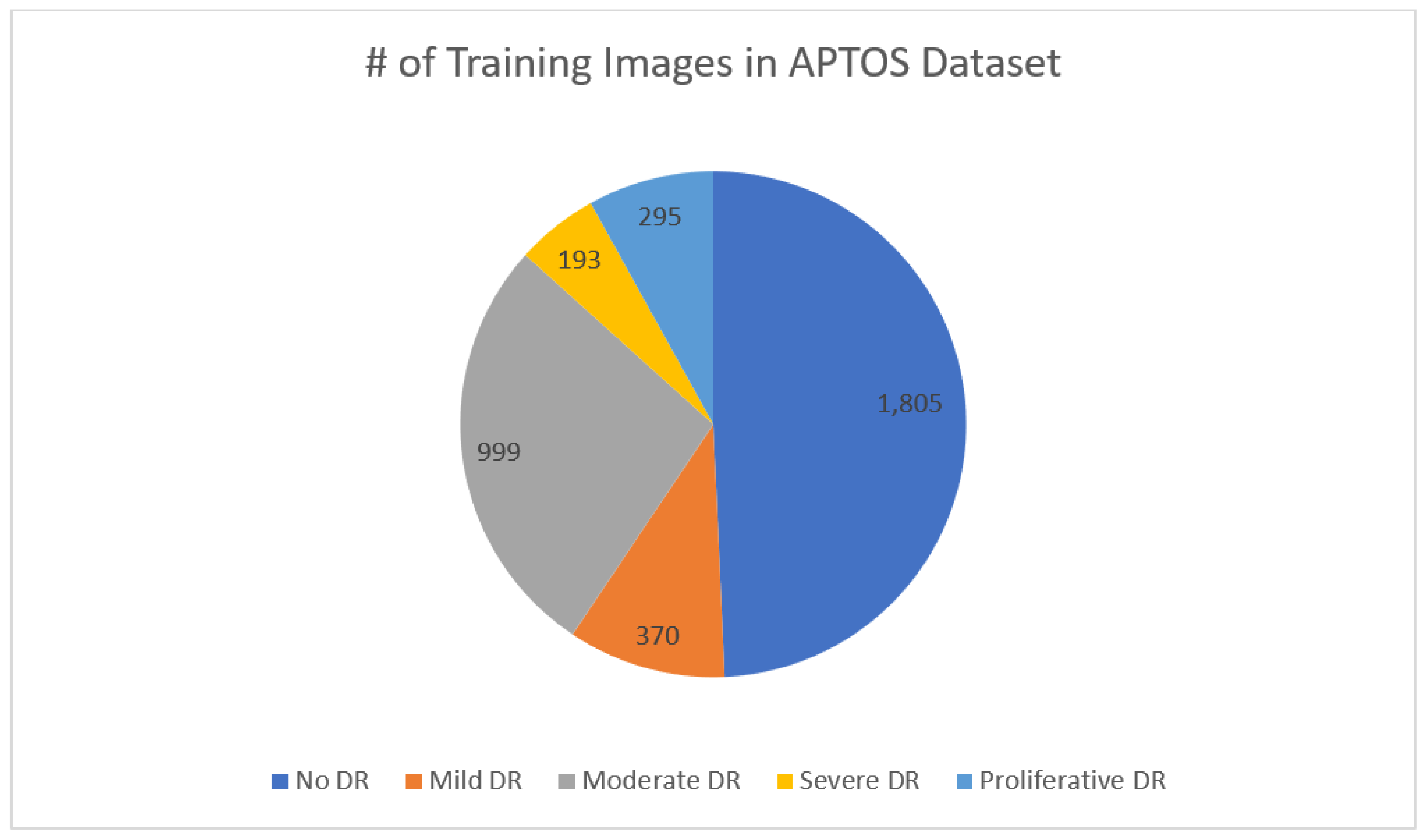

Figure 2, there are 3,662 retinal images; 1,805 are from the "no DR" group, 370 are from the "mild DR" group, 999 are from the "moderate DR" group, 193 are from the "severe DR" group, and 295 are from the "proliferate DR" group. Images are 3216 × 2136 pixels in size, and

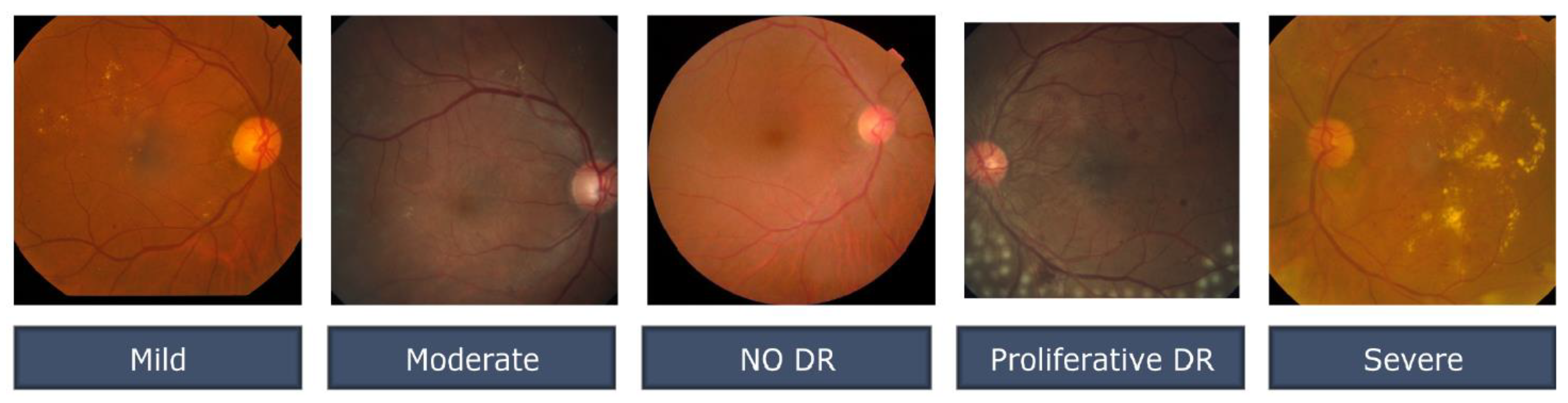

Figure 3 illustrates several examples of this format. Similar to any actual data set, the images and labels contain background noise. There is a chance that the given photographs will contain imperfections, such as blemishes, chromatic aberration, poor brightness, or another difficulty. The photographs were acquired over a lengthy period from a variety of clinics using a variety of equipment, which all add to the overall high diversity of the images.
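To make the degree of class imbalance concrete, the following minimal sketch computes each class's share of the dataset and the ratio between the largest and smallest class. The counts are the ones reported above; the function names are illustrative and not part of the original study.

```python
# Class counts as reported in the text for APTOS 2019 (labels 0-4).
CLASS_COUNTS = {
    "no DR": 1805,
    "mild DR": 370,
    "moderate DR": 999,
    "severe DR": 193,
    "proliferative DR": 295,
}


def class_shares(counts):
    """Return each class's share of the dataset as a percentage."""
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}


def imbalance_ratio(counts):
    """Ratio between the largest and the smallest class."""
    return max(counts.values()) / min(counts.values())


if __name__ == "__main__":
    print(f"total images: {sum(CLASS_COUNTS.values())}")
    for name, share in class_shares(CLASS_COUNTS).items():
        print(f"{name:>18}: {share:5.1f}%")
    print(f"largest/smallest class ratio: {imbalance_ratio(CLASS_COUNTS):.2f}")
```

The "no DR" class is roughly nine times larger than the "severe DR" class, which is why oversampling is needed before training.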

3.2. Preprocessing using CLAHE and ESRGAN

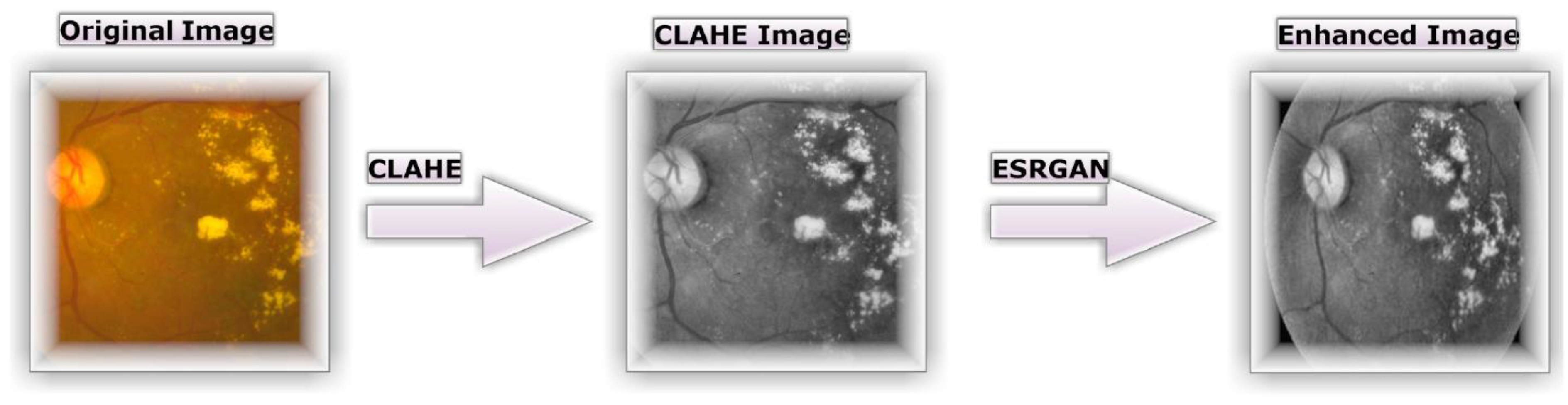

Retinal images are frequently captured by many institutions using diverse equipment. It was therefore necessary to enhance the contrast and clarity of the DR images and to reduce the various forms of noise in the images used by the proposed method. All scenario 1 images are preprocessed in multiple steps prior to augmentation and training.

Figure 4b depicts how the tiny features, textures, and poor resolution of the DR image were enhanced using CLAHE, which redistributes the brightness values of the source image [16]. This is accomplished by dividing the image into several non-overlapping regions of nearly identical size and equalizing each one. Consequently, this method simultaneously boosts local contrast and the discernibility of edges and curves throughout an image. Then, all images are resized to match the input size of the learning model, which is 224 × 224 × 3.
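The tile-wise, contrast-limited equalization described above can be sketched in plain Python as follows. This is a simplified illustration, not the authors' implementation: it omits the bilinear interpolation between neighboring tiles that full CLAHE uses to hide tile borders, and the function names, tile size, and clip limit are assumptions chosen for the example. In practice a library routine such as OpenCV's `cv2.createCLAHE` would normally be used.

```python
def clipped_equalization_map(pixels, clip_limit, levels=256):
    """Build a lookup table from a contrast-limited histogram of `pixels`."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Clip each bin and spread the clipped excess evenly over all bins.
    excess = sum(max(h - clip_limit, 0) for h in hist)
    hist = [min(h, clip_limit) + excess / levels for h in hist]
    # Cumulative distribution, rescaled to the output intensity range.
    total = sum(hist)
    lut, running = [], 0.0
    for h in hist:
        running += h
        lut.append(round((levels - 1) * running / total))
    return lut


def tilewise_clahe(image, tile_size, clip_limit):
    """Equalize each non-overlapping tile of a 2-D list of 0-255 integers."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            rows = range(top, min(top + tile_size, height))
            cols = range(left, min(left + tile_size, width))
            lut = clipped_equalization_map(
                [image[r][c] for r in rows for c in cols], clip_limit
            )
            for r in rows:
                for c in cols:
                    out[r][c] = lut[image[r][c]]
    return out


if __name__ == "__main__":
    # A low-contrast toy image: all intensities lie between 100 and 103.
    toy = [[100, 101, 102, 103] for _ in range(4)]
    for row in tilewise_clahe(toy, tile_size=4, clip_limit=4):
        print(row)
```

A lower clip limit flattens the histogram more aggressively before equalization, which limits how strongly noise in nearly uniform regions is amplified.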

Figure 4c shows the result of the second stage, in which ESRGAN is applied to the output of the previous stage. ESRGAN images can more accurately imitate the sharp edges of image artifacts [33]. Because each image's pixel intensity can vary greatly, it is normalized to the range [-1, 1] to keep the data within a fixed range and reduce noise. Normalization reduces weight sensitivity, making optimization easier. Consequently, the method represented in Figure 4 sharpens the image's edges and curves and enhances its contrast, leading to more precise results.
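The text does not give the exact normalization formula. A minimal sketch, assuming 8-bit inputs (0-255) and a linear mapping onto [-1, 1], could look like this; both function names are illustrative.

```python
def normalize_pixels(pixels):
    """Map 8-bit intensities (0-255) linearly onto the range [-1, 1]."""
    return [p / 127.5 - 1.0 for p in pixels]


def denormalize_pixels(values):
    """Inverse mapping, back to 8-bit intensities."""
    return [round((v + 1.0) * 127.5) for v in values]


if __name__ == "__main__":
    print(normalize_pixels([0, 64, 128, 255]))
```

Under this assumption, black maps to -1, white maps to 1, and mid-gray lands close to 0, so the network inputs are centered around zero.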

3.3. Data Augmentation

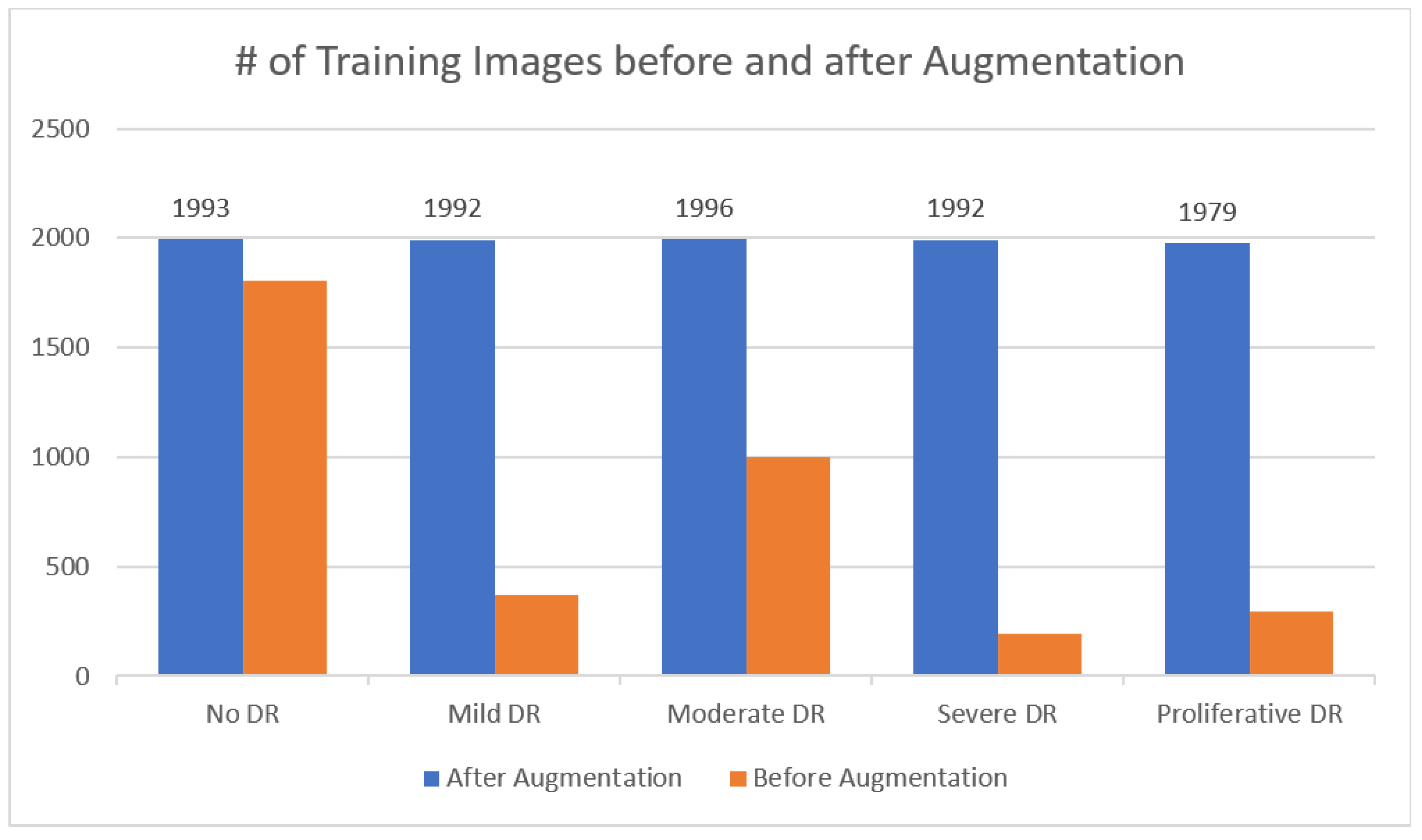

DA was performed on the training set before the dataset images were fed to DenseNet-121, in order to increase the number of images and resolve the class imbalance, as shown in Figure 5. In most cases, deep learning models perform better when given more data to train on. By applying a number of transformations to each image, we can exploit the characteristics of DR imaging: the deep neural network (DNN) retains its accuracy even after the image is enlarged, flipped vertically or horizontally, or rotated by a given angle. To avoid overfitting and rectify the imbalance in the dataset, DA operations (i.e., translation, rotation, and magnification) are used. Among the transformations used in this investigation is horizontal shifting, which shifts the pixels of an image horizontally while maintaining the image's aspect ratio, with the step size specified as a value between 0 and 1. Another transformation is rotation, in which the image is rotated by a randomly chosen angle between 0 and 180 degrees. All of these transformations are applied to the images in the training set to generate new samples for the network.
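As an illustration of the transformations described above, the following sketch implements horizontal shifting (with the step given as a fraction of the image width) and the two flips on a small 2-D list. It is a toy version under stated assumptions: arbitrary-angle rotation and magnification are omitted for brevity, the `max_shift` default of 0.2 is hypothetical, and the authors' actual augmentation code is not shown in the text. In a Keras pipeline, such transformations are commonly configured through `ImageDataGenerator` arguments such as `width_shift_range` and `rotation_range`.

```python
import random


def horizontal_shift(image, fraction, fill=0):
    """Shift columns right (fraction > 0) or left (fraction < 0).

    `fraction` is relative to the image width; vacated pixels get `fill`.
    """
    width = len(image[0])
    offset = int(round(fraction * width))
    shifted = []
    for row in image:
        if offset >= 0:
            new_row = [fill] * offset + row[: width - offset]
        else:
            new_row = row[-offset:] + [fill] * (-offset)
        shifted.append(new_row[:width])
    return shifted


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def random_augment(image, rng, max_shift=0.2):
    """Apply a random shift and random flips; the image size is unchanged."""
    out = horizontal_shift(image, rng.uniform(-max_shift, max_shift))
    if rng.random() < 0.5:
        out = flip_horizontal(out)
    if rng.random() < 0.5:
        out = flip_vertical(out)
    return out


if __name__ == "__main__":
    toy = [[1, 2, 3, 4], [5, 6, 7, 8]]
    print(random_augment(toy, random.Random(0)))
```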

In this research, DenseNet-121 was trained under two different scenarios: the first involved applying augmentation to the enhanced images (shown in Figure 5), and the second involved applying augmentation to the raw images (shown in Figure 6). The purpose of DA is to increase the amount of data by adding slightly modified copies of existing data or newly synthesized data derived from it. The same augmentation parameters were used in both scenarios, so the total number of images is the same in both.

Different techniques were used to address the issue of inconsistent sample numbers across classes.

Figure 7 shows that the APTOS dataset is an example of an "imbalanced class" problem, where samples are not distributed evenly among the classes. In both scenarios, the classes are balanced after the augmentation procedures have been applied to the dataset, as also shown in Figure 7.
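The balancing step can be illustrated with a short calculation of how many augmented images each class would need. This is a sketch under assumptions: it uses the full-dataset class counts reported in Section 3.1 rather than the (unreported) per-class counts of the training split, and it takes the size of the largest class as the balancing target, which the text does not specify.

```python
import math

# Full-dataset class counts from Section 3.1 (used here for illustration only).
CLASS_COUNTS = {
    "no DR": 1805,
    "mild DR": 370,
    "moderate DR": 999,
    "severe DR": 193,
    "proliferative DR": 295,
}


def oversampling_plan(counts, target=None):
    """For each class, how many augmented images must be generated.

    `target` defaults to the size of the largest class (an assumption).
    """
    if target is None:
        target = max(counts.values())
    plan = {}
    for name, n in counts.items():
        missing = max(target - n, 0)
        plan[name] = {
            "to_generate": missing,
            # Upper bound on augmented copies needed per original image.
            "copies_per_image": math.ceil(missing / n),
        }
    return plan


if __name__ == "__main__":
    for name, entry in oversampling_plan(CLASS_COUNTS).items():
        print(f"{name:>18}: {entry}")
```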

4. Experimental Results

4.1. Training and Setup of DenseNet-121

To demonstrate the DL system's efficacy and compare its outcomes with existing approaches, tests were carried out on the APTOS dataset. Per the proposed training scheme, the dataset was split into three groups: 80% for training (9,952 images), 10% for testing (1,012 images), and a random 10% for validation (1,025 images), the latter being used to assess the model during learning and keep the weights with the best accuracy. The training images were resized to 224 × 224 × 3 pixels. The proposed setup was implemented with TensorFlow Keras and tested on a Linux PC with an RTX 3060 GPU and 8 GB of RAM.
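An 80/10/10 split of this kind can be sketched as follows. The text only states that the validation part is random; splitting class by class (stratification), the fixed seed, and the function name are assumptions added here so that each subset keeps the same class proportions.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split sample indices into train/test/validation, class by class."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)
    train, test, val = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = int(round(fractions[0] * len(indices)))
        n_test = int(round(fractions[1] * len(indices)))
        train += indices[:n_train]
        test += indices[n_train:n_train + n_test]
        # Whatever remains after train and test goes to validation.
        val += indices[n_train + n_test:]
    return train, test, val


if __name__ == "__main__":
    toy_labels = [0] * 50 + [1] * 30 + [2] * 20
    parts = stratified_split(toy_labels)
    print([len(p) for p in parts])
```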

The proposed model is trained on the APTOS dataset with the Adam optimizer using the following hyperparameters: learning rates from 1E-3 to 1E-5, batch sizes from 2 to 64 (doubling at each step), 50 epochs, a patience of 10, and a momentum of 0.90.
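The hyperparameter search space and the patience rule can be sketched as below. Several points are assumptions: the learning-rate range is read as 1e-3 down to 1e-5, the intermediate value 1e-4 is added for illustration, and validation accuracy is taken as the monitored quantity. The `EarlyStopping` class here is a plain-Python illustration, not the Keras callback of the same name.

```python
from itertools import product


def doubling_range(start, stop):
    """start, 2*start, 4*start, ... up to and including stop."""
    values = []
    value = start
    while value <= stop:
        values.append(value)
        value *= 2
    return values


BATCH_SIZES = doubling_range(2, 64)      # 2, 4, 8, 16, 32, 64
LEARNING_RATES = [1e-3, 1e-4, 1e-5]      # 1e-4 is an assumed intermediate value
GRID = list(product(LEARNING_RATES, BATCH_SIZES))


class EarlyStopping:
    """Stop when validation accuracy has not improved for `patience` epochs."""

    def __init__(self, patience=10):
        self.patience = patience
        self.best = float("-inf")
        self.best_epoch = -1
        self.stale_epochs = 0

    def update(self, epoch, val_accuracy):
        """Record one epoch; return True if training should stop."""
        if val_accuracy > self.best:
            self.best, self.best_epoch = val_accuracy, epoch
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
        return self.stale_epochs >= self.patience


if __name__ == "__main__":
    print(f"{len(GRID)} configurations, e.g. {GRID[0]}")
```

With a patience of 10 and at most 50 epochs, a run ends early once ten consecutive epochs fail to improve on the best validation accuracy seen so far, and the weights from `best_epoch` would be the ones kept.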

4.2. Performance appraisal

This section describes the evaluation measures employed and their outcomes in detail. Accuracy (Acc) is a commonly used statistic for measuring classification performance. It is calculated by dividing the number of correctly classified instances (images) by the total number of instances (images) in the dataset, as shown in equation (1). Specificity and Sensitivity are two of the most frequently employed metrics for evaluating image classification systems. Specificity is the proportion of negative instances that are correctly identified, as in equation (2), while Sensitivity is the proportion of positive instances that are correctly identified, as shown in equation (3). Because the success of a system cannot be determined by its accuracy or Sensitivity alone, the F1-score (F1sc) is also reported; a system with a higher F1sc makes more reliable predictions than one with a lower value. Equation (4) shows how the F1sc is calculated. Top N accuracy is the final metric used in this study: the model's N highest-probability outputs from the softmax distribution are compared with the expected label, and a classification is deemed correct if at least one of the N predictions matches the desired label.
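Equations (1)-(4) are not reproduced in this version of the text. The standard definitions of these metrics, which we assume are the ones intended, are given below in terms of the per-class counts of true positives ($T_p$), true negatives ($T_n$), false positives ($F_p$), and false negatives ($F_n$).

```latex
\begin{align}
\mathrm{Acc} &= \frac{T_p + T_n}{T_p + T_n + F_p + F_n} \tag{1}\\
\mathrm{Specificity} &= \frac{T_n}{T_n + F_p} \tag{2}\\
\mathrm{Sensitivity} &= \frac{T_p}{T_p + F_n} \tag{3}\\
\mathrm{F1sc} &= \frac{2\,T_p}{2\,T_p + F_p + F_n} \tag{4}
\end{align}
```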

True positives, represented by the symbol (Tp), are successfully anticipated positive cases, and true negatives (Tn) are effectively predicted negative scenarios. False positives (Fp) are falsely predicted positive situations, whereas false negatives (Fn) are falsely projected negative situations.
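For a multiclass problem such as five-stage DR grading, these counts are usually derived per class from the confusion matrix in a one-vs-rest fashion. The sketch below shows one way to do this and to compute top N accuracy; the function names are illustrative and the confusion matrix in the usage example is made up, not taken from the paper's results.

```python
def per_class_counts(confusion, k):
    """One-vs-rest Tp, Tn, Fp, Fn for class k.

    confusion[i][j] = number of samples of true class i predicted as class j.
    """
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp
    fp = sum(row[k] for row in confusion) - tp
    tn = total - tp - fn - fp
    return tp, tn, fp, fn


def safe_div(numerator, denominator):
    """Division that returns 0.0 instead of failing on an empty denominator."""
    return numerator / denominator if denominator else 0.0


def class_metrics(confusion, k):
    """Accuracy, specificity, sensitivity, and F1-score for class k."""
    tp, tn, fp, fn = per_class_counts(confusion, k)
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "specificity": safe_div(tn, tn + fp),
        "sensitivity": safe_div(tp, tp + fn),
        "f1": safe_div(2 * tp, 2 * tp + fp + fn),
    }


def overall_accuracy(confusion):
    """Share of all samples that lie on the diagonal of the matrix."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return safe_div(correct, sum(sum(row) for row in confusion))


def top_n_accuracy(probabilities, labels, n):
    """Share of samples whose true label is among the n most probable classes."""
    hits = 0
    for probs, label in zip(probabilities, labels):
        ranked = sorted(range(len(probs)), key=lambda c: probs[c], reverse=True)
        hits += label in ranked[:n]
    return safe_div(hits, len(labels))


if __name__ == "__main__":
    toy_confusion = [[8, 2, 0], [1, 7, 2], [0, 1, 9]]  # made-up 3-class example
    print(class_metrics(toy_confusion, 0))
    print(overall_accuracy(toy_confusion))
```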

4.3. Performance of DenseNet-121 Model Outcomes:

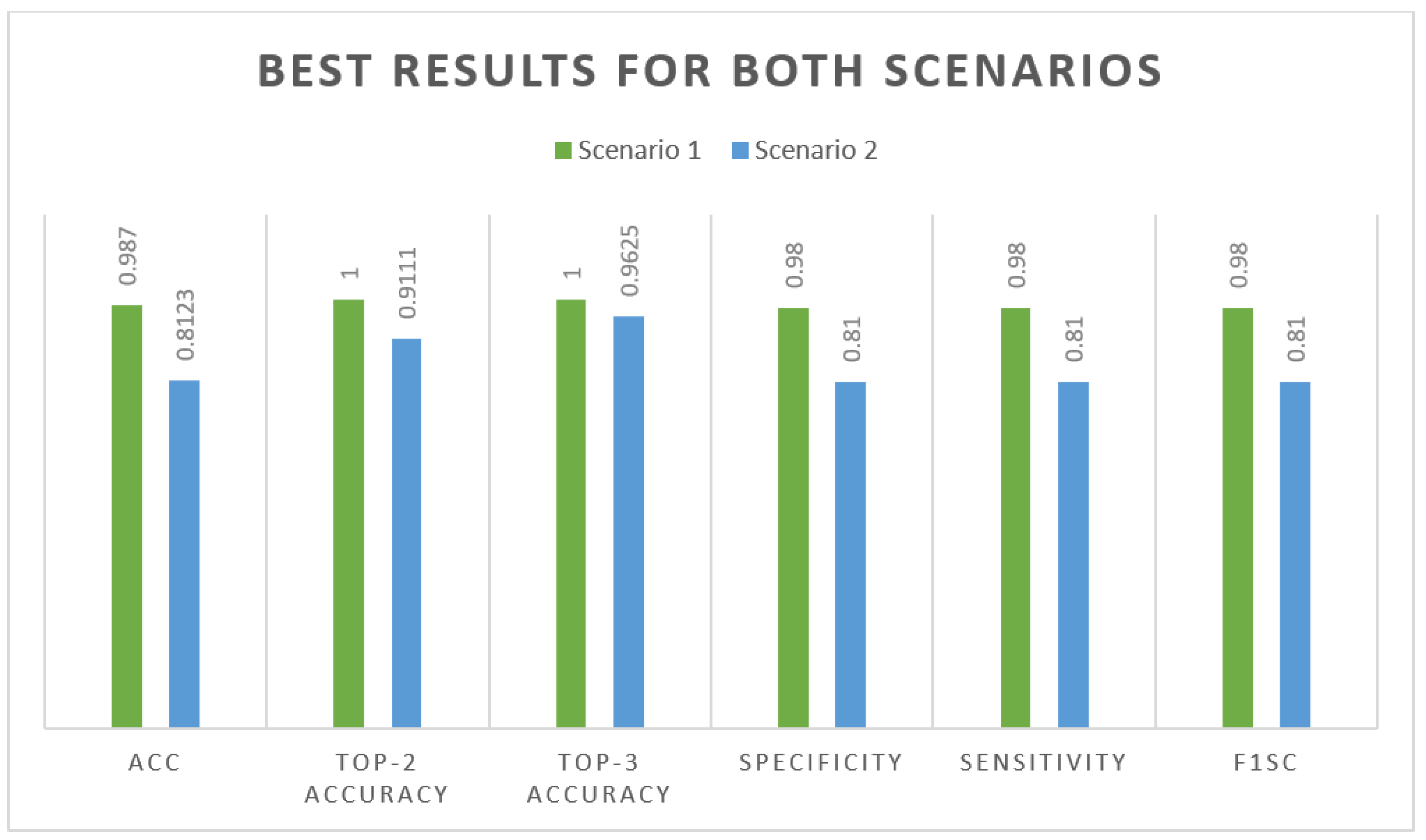

On the APTOS dataset, we consider two scenario configurations in which DenseNet-121 was applied in two different ways: once with enhancement (CLAHE + ESRGAN) and once without it, as shown in Figure 3. Models are trained for 50 epochs, with batch sizes ranging from 2 to 64 and learning rates from 1E-3 to 1E-5. DenseNet-121 was further tuned by freezing between 150 and 170 layers to make it as accurate as possible. The same model was run several times with the same parameters to form a model ensemble. Because the weights are initialized randomly in every run, the accuracy changes from run to run. In Table 1 and Table 2, for Scenarios 1 and 2 respectively, only the best run result is shown. The best results achieved in Scenario 1 (with CLAHE + ESRGAN) and Scenario 2 (without enhancement) are 98.7% and 81.23%, respectively.

Figure 8 compares the evaluation metrics of the best result in Scenario 1 (using CLAHE and ESRGAN) and in Scenario 2 (without them).

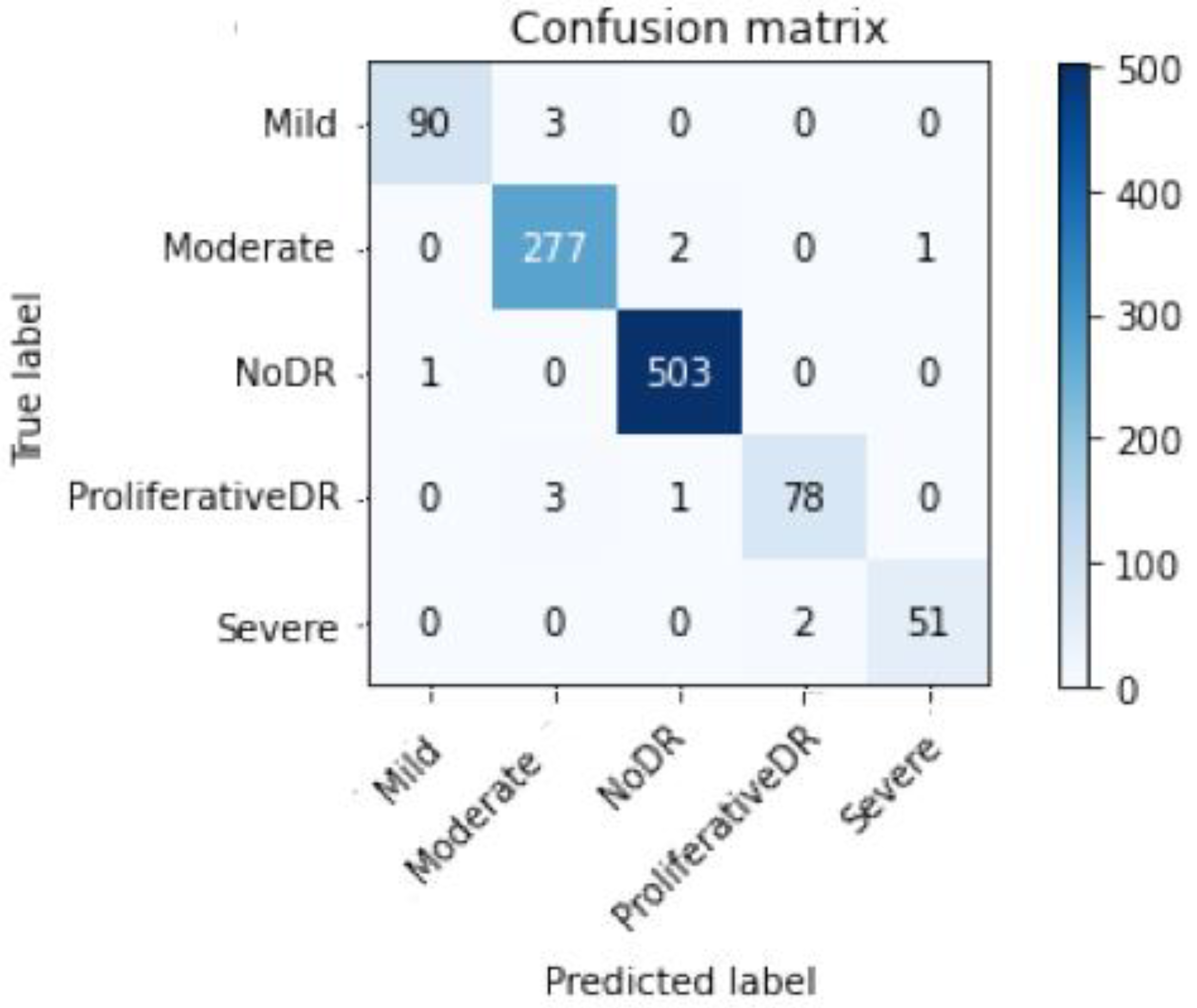

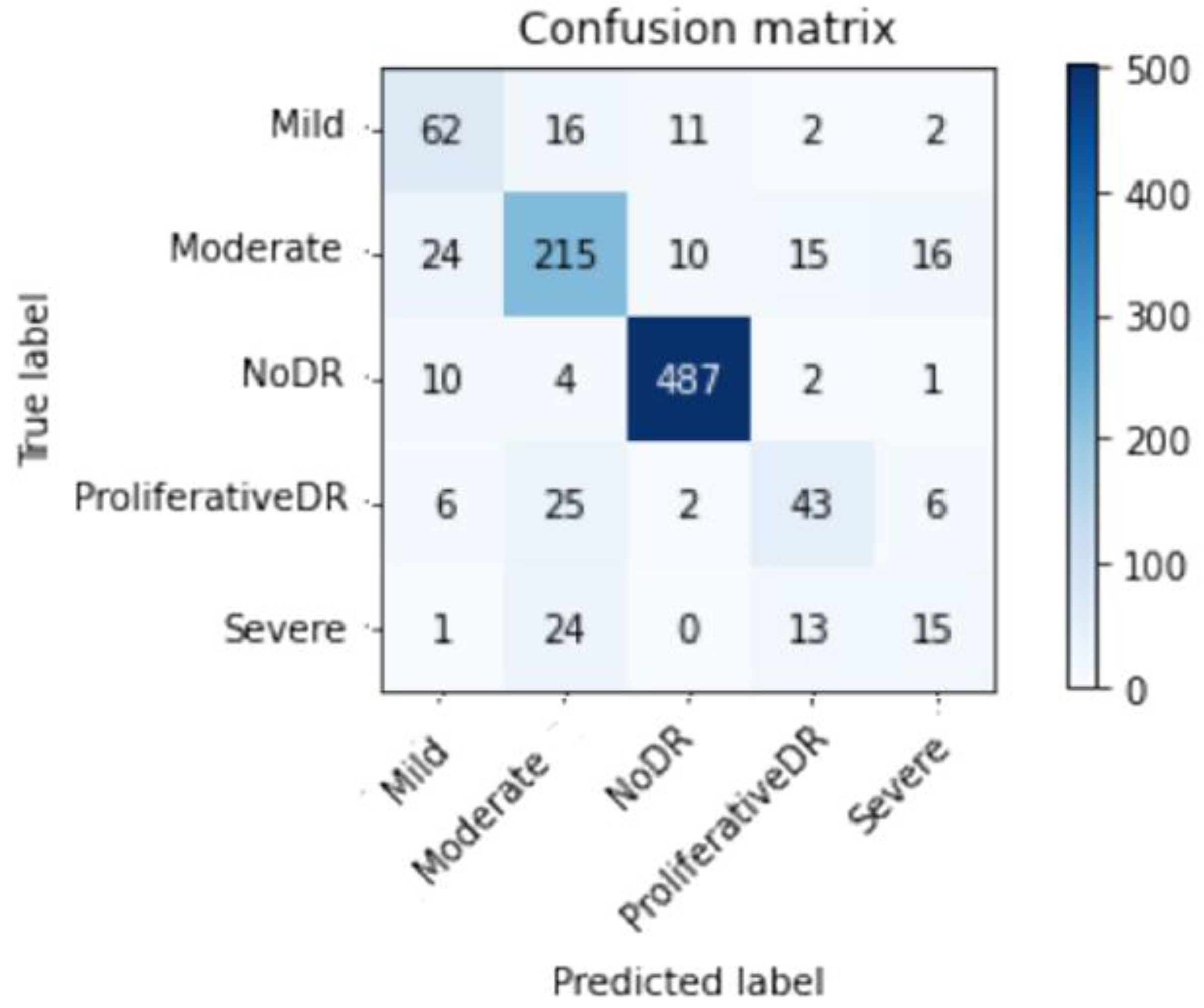

Figure 9 and Figure 10 depict the confusion matrices for Scenario 1 and Scenario 2. The Scenario 1 confusion matrix shows that the suggested technique can distinguish the retinal classes with 98.7% accuracy, which is promising for real-world application.

Table 3 and Table 4 list the APTOS test images by class. The No DR class contains the largest number of images (504); its Specificity, Sensitivity, and F1sc have the highest values: 99%, 100%, and 100% for Scenario 1, and 97%, 97%, and 97% for Scenario 2.

Table 3 and Table 4 demonstrate that the proposed method (Scenario 1) scored higher than the baseline without enhancement (Scenario 2) in terms of F1sc, accuracy, sensitivity, and specificity. These results indicate that retinal images can be used to help ophthalmologists detect DR more accurately and with less effort.

4.4. Evaluation Considering a Variety of Other Methodologies

The effectiveness of the proposed method is compared with that of other methods. According to Table 5, our method exceeds the alternatives in terms of effectiveness and performance. The proposed DenseNet-121 model achieves an overall accuracy of 98.7% for Scenario 1 and 81.23% for Scenario 2, surpassing existing methods.

4.5. Discussion

Relying on CLAHE and ESRGAN, this study proposed a new method for classifying DR. The resulting model was evaluated using DR images from the APTOS 2019 dataset. There are two training scenarios: case 1 scenario, with CLAHE + ESRGAN applied to the APTOS dataset, and case 2 scenario, without CLAHE + ESRGAN. Under 80:20 hold-out validation, the model achieved five-class accuracy rates of 98.7% and 81.23% for the case 1 and case 2 scenarios, respectively. In both cases, the proposed method used a pre-trained DenseNet-121 architecture fine-tuned on the dataset. During the development of the model, we compared its classification performance in the two scenarios and found that the enhancement techniques gave the best results. The overall resolution enhancement provided by CLAHE + ESRGAN is the most crucial component of our method, and it is responsible for a large improvement in accuracy.

5. Conclusion

This study presented a way to efficiently and accurately diagnose the five stages of DR by classifying retinal images from the APTOS dataset. The proposed method considers two scenarios: case 1 scenario, which uses CLAHE and ESRGAN to enhance the image, and case 2 scenario, which does not use enhancement. Case 1 scenario uses a four-step technique to improve the image's brightness and remove noise. Experiments show that CLAHE and ESRGAN are the two stages that have the greatest effect on accuracy. To prevent overfitting and improve the generalization of the suggested method, DenseNet-121 was trained on the preprocessed medical images using augmentation techniques. With DenseNet-121, the proposed model achieves an accuracy of 98.7% for the case 1 scenario and 81.23% for the case 2 scenario, which is similar to the prediction performance of professional ophthalmologists. The investigation is also novel in its use of CLAHE and ESRGAN in the preprocessing phase. The analysis demonstrates that the proposed approach outperforms conventional modeling approaches. To establish its practical usefulness, the suggested method needs to be tested on a sizable, complex dataset that includes numerous future DR cases. In the future, other models such as Inception, VGG, and ResNet, as well as other augmentation methods, may be used to examine new datasets.

Author Contributions

Data curation, Walaa Gouda; Formal analysis, Walaa Gouda and Ghadah Alwakid; Funding acquisition, Ghadah Alwakid; Investigation, Walaa Gouda; Methodology, Mamoona Humayun and Walaa Gouda; Project administration, Mamoona Humayun and Ghadah Alwakid; Supervision, Mamoona Humayun; Writing – original draft, Walaa Gouda; Writing – review & editing, Mamoona Humayun.

Funding

The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia for funding this research work through the project number 223202.

Institutional Review Board Statement

Not Applicable.

Informed Consent Statement

Not Applicable.

Data Availability Statement

Data will be furnished on request from the second author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Association, A.D. Diagnosis and classification of diabetes mellitus. Diabetes Care 2014, 37 (Suppl. 1), S81–S90.

- Al-Antary, M.T.; Arafa, Y. Multi-scale attention network for diabetic retinopathy classification. IEEE Access 2021, 9, 54190–54200.

- Humayun, M.; Alsayat, A. Prediction Model for Coronavirus Pandemic Using Deep Learning. Comput. Syst. Sci. Eng. 2022, 40, 947–961.

- Alex, S.A.; Jhanjhi, N.Z.; Humayun, M.; Ibrahim, A.O.; Abulfaraj, A.W. Deep LSTM Model for Diabetes Prediction with Class Balancing by SMOTE. Electronics 2022, 11, 2737.

- Alwakid, G.; Gouda, W.; Humayun, M. Deep Learning-based prediction of Diabetic Retinopathy using CLAHE and ESRGAN for Enhancement. Preprints 2023, 2023020097.

- Pandey, S.K.; Sharma, V. World diabetes day 2018: battling the emerging epidemic of diabetic retinopathy. Indian J. Ophthalmol. 2018, 66, 1652.

- Kobat, S.G.; et al. Automated Diabetic Retinopathy Detection Using Horizontal and Vertical Patch Division-Based Pre-Trained DenseNET with Digital Fundus Images. Diagnostics 2022, 12, 1975.

- Singh, A.; et al. Mechanistic Insight into Oxidative Stress-Triggered Signaling Pathways and Type 2 Diabetes. Molecules 2022, 27, 950.

- Wykoff, C.C.; et al. Risk of blindness among patients with diabetes and newly diagnosed diabetic retinopathy. Diabetes Care 2021, 44, 748–756.

- Alyoubi, W.L.; Shalash, W.M.; Abulkhair, M.F. Diabetic retinopathy detection through deep learning techniques: A review. Inform. Med. Unlocked 2020, 20, 100377.

- Humayun, M.; Khalil, M.I.; Almuayqil, S.N.; Jhanjhi, N.Z. Framework for Detecting Breast Cancer Risk Presence Using Deep Learning. Electronics 2023, 12, 403.

- Gouda, W.; et al. Detection of COVID-19 Based on Chest X-rays Using Deep Learning. Healthcare 2022, 10, 343.

- Alruwaili, M.; Gouda, W. Automated Breast Cancer Detection Models Based on Transfer Learning. Sensors 2022, 22, 876.

- APTOS 2019 Blindness Detection; Kaggle: San Francisco, CA, USA, 2019.

- Huang, G.; et al. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Reza, A.M. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004, 38, 35–44.

- Ledig, C.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Gargeya, R.; Leng, T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology 2017, 124, 962–969.

- Costa, P.; et al. A weakly-supervised framework for interpretable diabetic retinopathy detection on retinal images. IEEE Access 2018, 6, 18747–18758.

- Wang, J.; Bai, Y.; Xia, B. Feasibility of diagnosing both severity and features of diabetic retinopathy in fundus photography. IEEE Access 2019, 7, 102589–102597.

- Leeza, M.; Farooq, H. Detection of severity level of diabetic retinopathy using Bag of features model. IET Comput. Vis. 2019, 13, 523–530.

- Gayathri, S.; et al. Automated binary and multiclass classification of diabetic retinopathy using haralick and multiresolution features. IEEE Access 2020, 8, 57497–57504.

- Pires, R.; et al. A data-driven approach to referable diabetic retinopathy detection. Artif. Intell. Med. 2019, 96, 93–106.

- Zhang, W.; et al. Automated identification and grading system of diabetic retinopathy using deep neural networks. Knowl.-Based Syst. 2019, 175, 12–25.

- Math, L.; Fatima, R. Adaptive machine learning classification for diabetic retinopathy. Multimed. Tools Appl. 2021, 80, 5173–5186.

- Maqsood, S.; Damaševičius, R.; Maskeliūnas, R. Hemorrhage detection based on 3D CNN deep learning framework and feature fusion for evaluating retinal abnormality in diabetic patients. Sensors 2021, 21, 3865.

- Majumder, S.; Ullah, M.A. Feature extraction from dermoscopy images for melanoma diagnosis. SN Appl. Sci. 2019, 1, 1–11.

- Majumder, S.; Ullah, M.A. A computational approach to pertinent feature extraction for diagnosis of melanoma skin lesion. Pattern Recognit. Image Anal. 2019, 29, 503–514.

- Crane, A.; Dastjerdi, M. Effect of Simulated Cataract on the Accuracy of an Artificial Intelligence Algorithm in Detecting Diabetic Retinopathy in Color Fundus Photos. Investig. Ophthalmol. Vis. Sci. 2022, 63, 2100–F0089.

- Majumder, S.; Kehtarnavaz, N. Multitasking deep learning model for detection of five stages of diabetic retinopathy. IEEE Access 2021, 9, 123220–123230.

- Yadav, S.; Awasthi, P.; Pathak, S. Retina Image and Diabetic Retinopathy: A Deep Learning Based Approach. Int. Res. J. Mod. Eng. Technol. Sci. 2022, 4, 3790–3794.

- Majumder, S.; Ullah, M.A.; Dhar, J.P. Melanoma diagnosis from dermoscopy images using artificial neural network. In Proceedings of the 2019 5th International Conference on Advances in Electrical Engineering (ICAEE), Dhaka, Bangladesh, 26–28 September 2019; IEEE: New York, NY, USA.

- Jolicoeur-Martineau, A. The relativistic discriminator: a key element missing from standard GAN. arXiv 2018, arXiv:1807.00734.

- Maqsood, Z.; Gupta, M.K. Automatic Detection of Diabetic Retinopathy on the Edge. In Cyber Security, Privacy and Networking; Springer: Singapore, 2022; pp. 129–139.

- Saranya, P.; et al. Red Lesion Detection in Color Fundus Images for Diabetic Retinopathy Detection. In Proceedings of the International Conference on Deep Learning, Computing and Intelligence; Springer: Singapore, 2022.

- Lahmar, C.; Idri, A. Deep hybrid architectures for diabetic retinopathy classification. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2022, 11, 1–19.

- Oulhadj, M.; et al. Diabetic retinopathy prediction based on deep learning and deformable registration. Multimed. Tools Appl. 2022, 81, 1–19.

- Gangwar, A.K.; Ravi, V. Diabetic retinopathy detection using transfer learning and deep learning. In Evolution in Computational Intelligence; Springer: Singapore, 2021; pp. 679–689.

- Lahmar, C.; Idri, A. On the value of deep learning for diagnosing diabetic retinopathy. Health Technol. 2022, 12, 89–105.

- Canayaz, M. Classification of diabetic retinopathy with feature selection over deep features using nature-inspired wrapper methods. Appl. Soft Comput. 2022, 128, 109462.

- Escorcia-Gutierrez, J.; et al. Analysis of Pre-trained Convolutional Neural Network Models in Diabetic Retinopathy Detection through Retinal Fundus Images. In International Conference on Computer Information Systems and Industrial Management; Springer: Cham, Switzerland, 2022.

- Thomas, N.M.; Albert Jerome, S. Grading and Classification of Retinal Images for Detecting Diabetic Retinopathy Using Convolutional Neural Network. In Advances in Electrical and Computer Technologies; Springer: Singapore, 2022; pp. 607–614.

- Salluri, D.K.; Sistla, V.; Kolli, V.K.K. HRUNET: Hybrid Residual U-Net for automatic severity prediction of Diabetic Retinopathy. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2022, 11, 1–12.

- Deshpande, A.; Pardhi, J. Automated detection of Diabetic Retinopathy using VGG-16 architecture. Int. Res. J. Eng. Technol. 2021, 8, 2936–2940.

- Macsik, P.; et al. Local Binary CNN for Diabetic Retinopathy Classification on Fundus Images. Acta Polytech. Hung. 2022, 19, 27–45.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).