Submitted:

20 February 2023

Posted:

21 February 2023

Abstract

Keywords:

1. Introduction

| Notation | Meaning |
|---|---|
| QoI g | quantity of interest g |
| | computational spatial domain |
| | hierarchy of spatial meshes |
| | hierarchy of temporal meshes |
| L | number of levels |
| s | complexity |
| (or h), | spatial step size and number of spatial degrees of freedom on level ℓ |
| (or ), | time step size and number of time steps on level ℓ |
| | number of samples (scenarios) on level ℓ |
| , | expectation and variance |
| | multidimensional domain of integration in parametric space |
| , | random event and random vector |
| | porosity random field |
| | permeability random field |
| | density random field |
| | volumetric velocity |
| | tensor field : molecular diffusion and dispersion of salt |
| | expectation of |
| d | physical (spatial) dimension |
| | mass fraction of salt (solution of the problem) |

- How long can a particular drinking water well be used (i.e., when will the mass fraction of the salt exceed a critical threshold)?

- What regions have especially high uncertainty?

- What is the probability that the salt concentration is higher than a threshold at a certain spatial location and time point?

- What is the average scenario (and its variations)?

- What are the extreme scenarios?

- How do the uncertainties change over time?
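Several of these questions reduce to simple statistics over an ensemble of simulated scenarios. The following minimal sketch, which is an illustration and not the authors' code, estimates the mean, the variance, and an exceedance probability from sampled mass-fraction values at one fixed location and time. The function name `summarize_samples`, the threshold 0.5, and the synthetic Gaussian samples are hypothetical placeholders; in practice the samples would come from the flow solver.

```python
import random
import statistics


def summarize_samples(samples, threshold):
    """Return (mean, sample variance, exceedance probability) of QoI samples."""
    mean = statistics.mean(samples)
    variance = statistics.variance(samples)  # unbiased estimate (divides by n - 1)
    # Fraction of scenarios in which the value is strictly above the threshold.
    exceed = sum(1 for c in samples if c > threshold) / len(samples)
    return mean, variance, exceed


# Synthetic placeholder data (NOT results from the paper): 1000 made-up
# mass-fraction values, clipped to the physically meaningful range [0, 1].
rng = random.Random(0)
samples = [min(1.0, max(0.0, rng.gauss(0.3, 0.1))) for _ in range(1000)]

mean, variance, p_exceed = summarize_samples(samples, threshold=0.5)
print(f"mean={mean:.4f}, variance={variance:.6f}, P(c > 0.5)={p_exceed:.4f}")
```

Repeating the same computation for every grid point and time step would give maps of the mean, the variance, and the exceedance probability, which address the questions about regions of high uncertainty and about changes over time.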

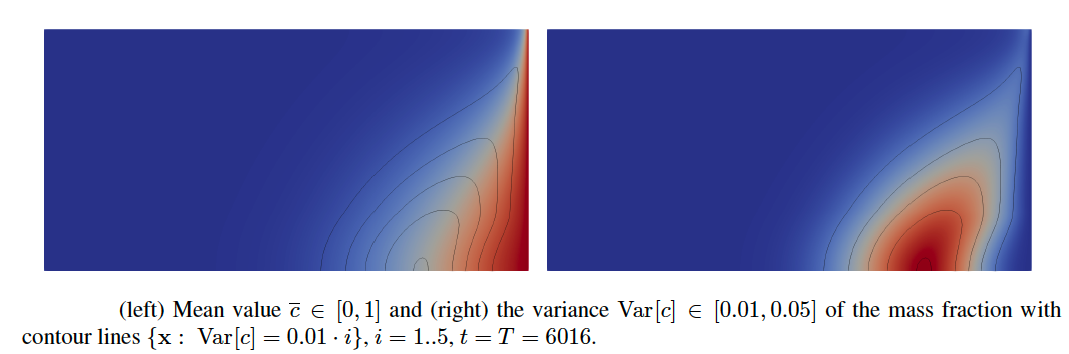

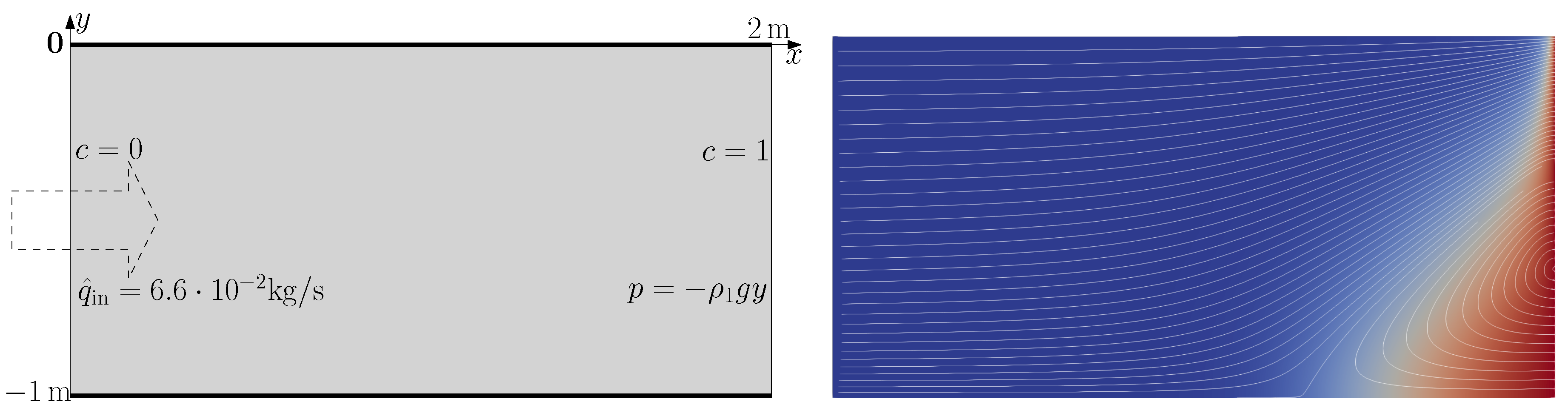

2. Henry Problem with Uncertain Porosity and Permeability

2.1. Problem setting

2.2. Modeling porosity, permeability, and mass fraction

2.3. Numerical methods for the deterministic problem

3. Multilevel Monte Carlo

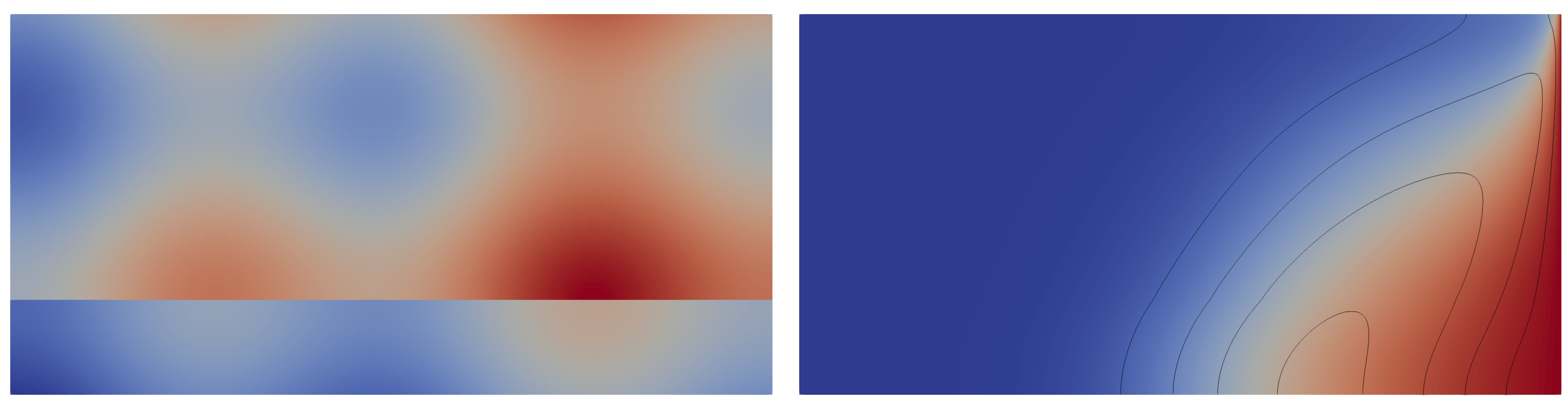

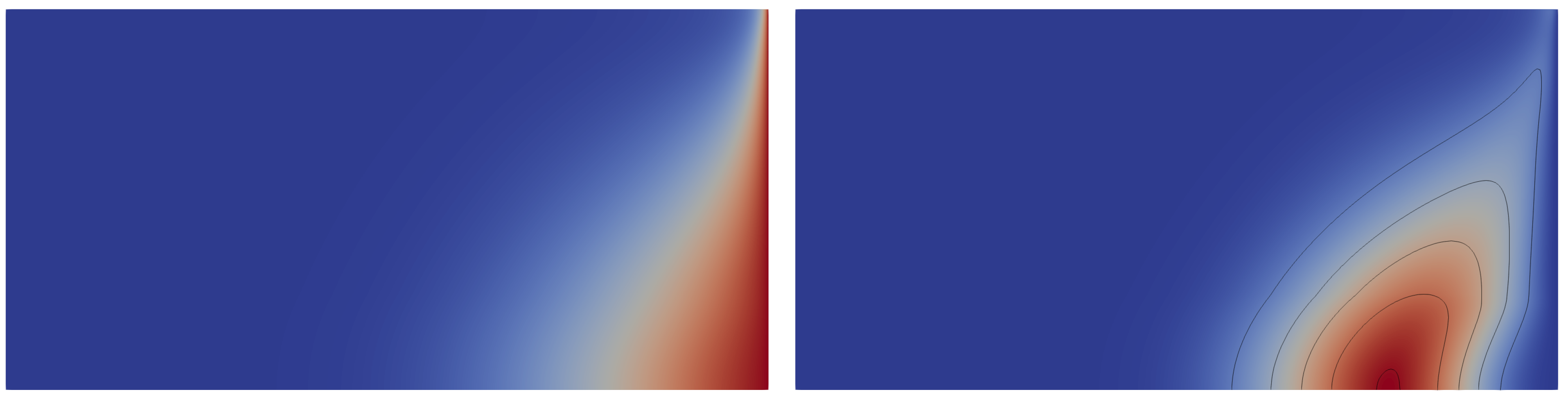

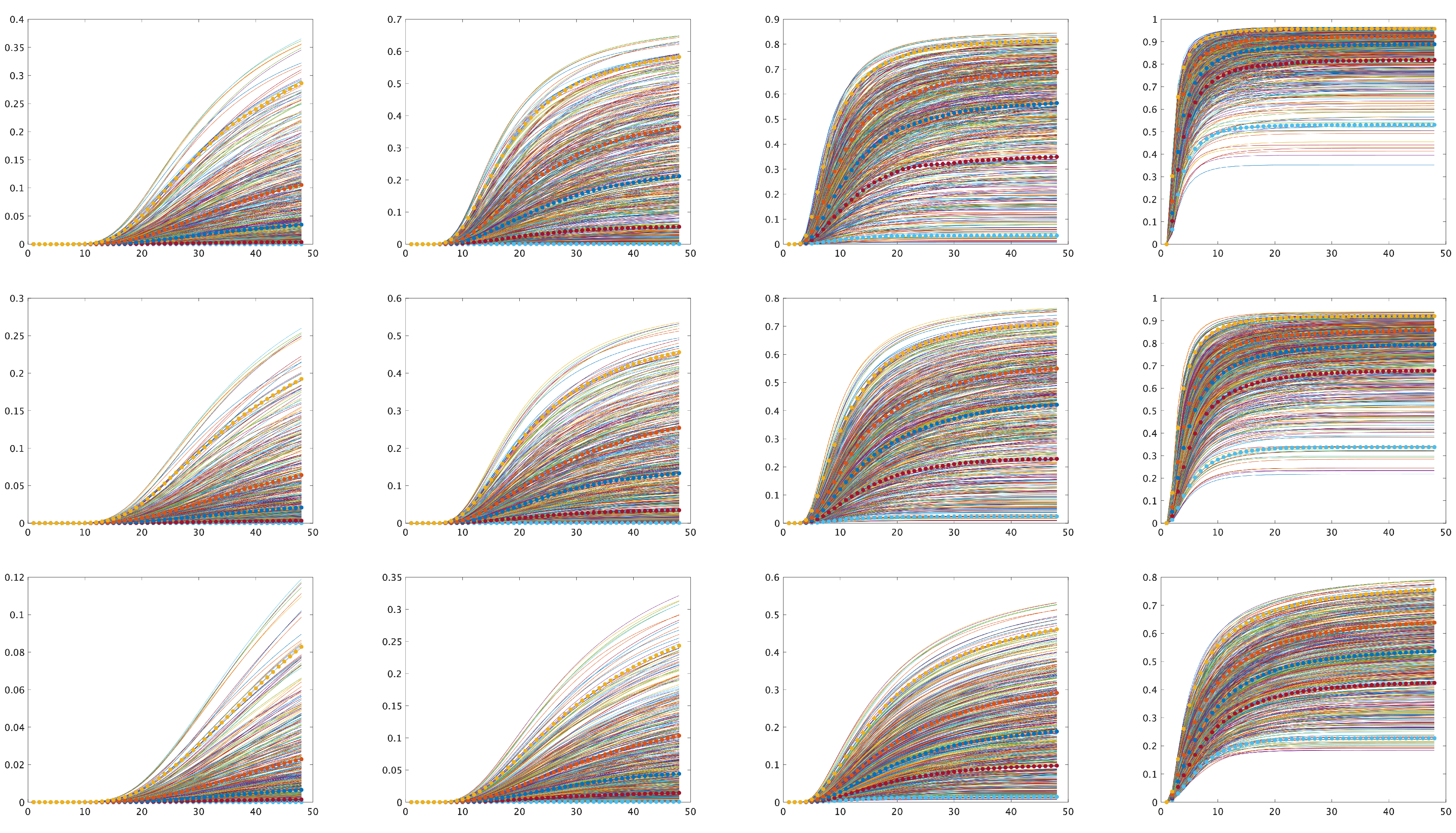

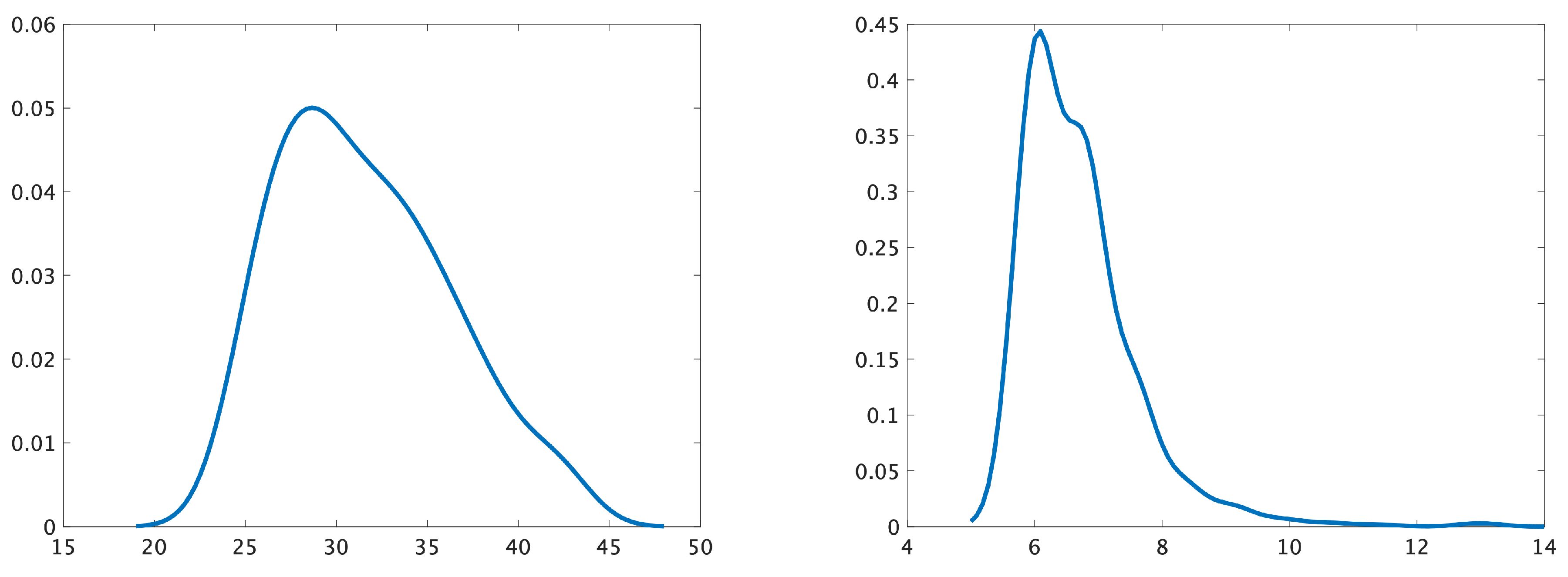

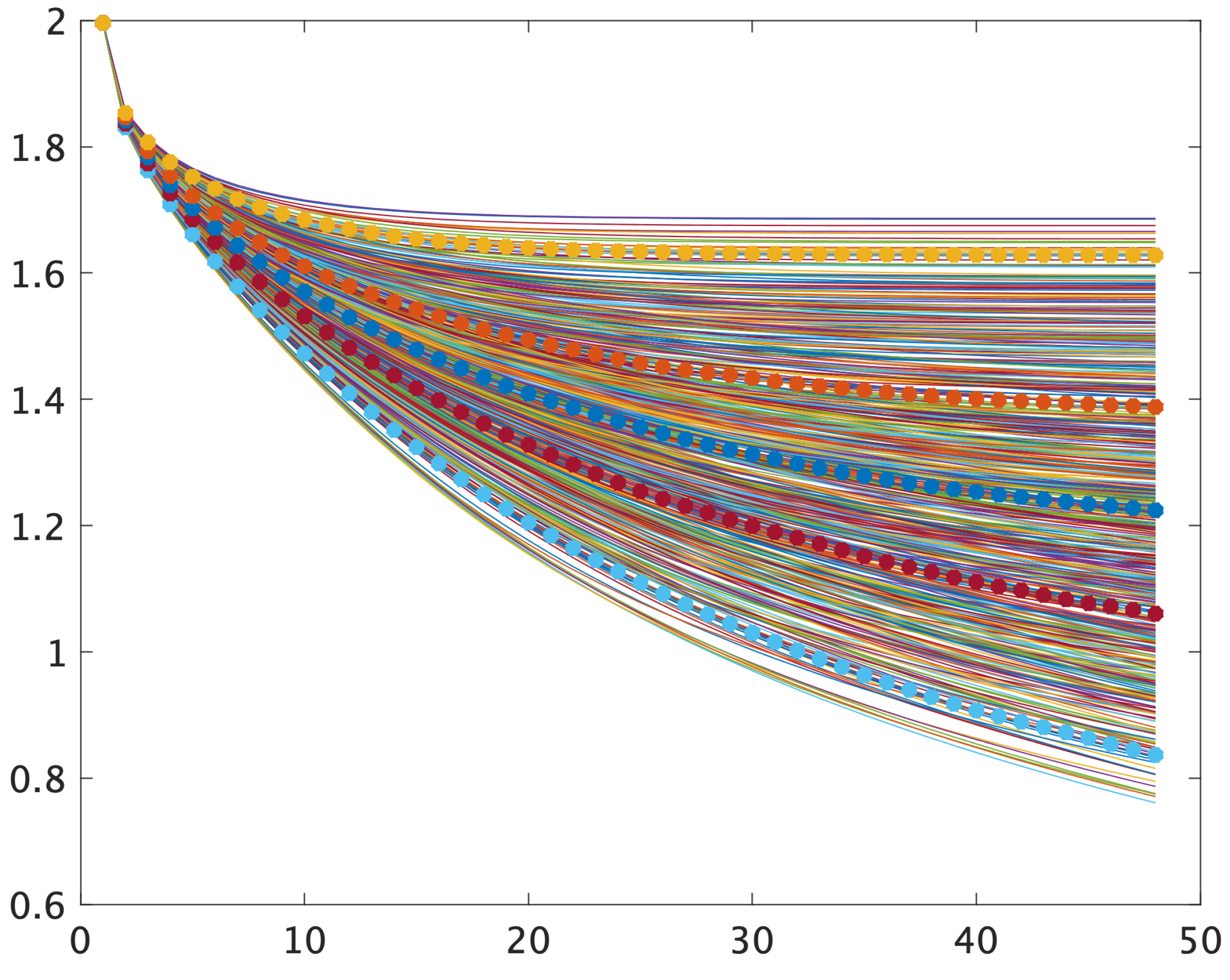

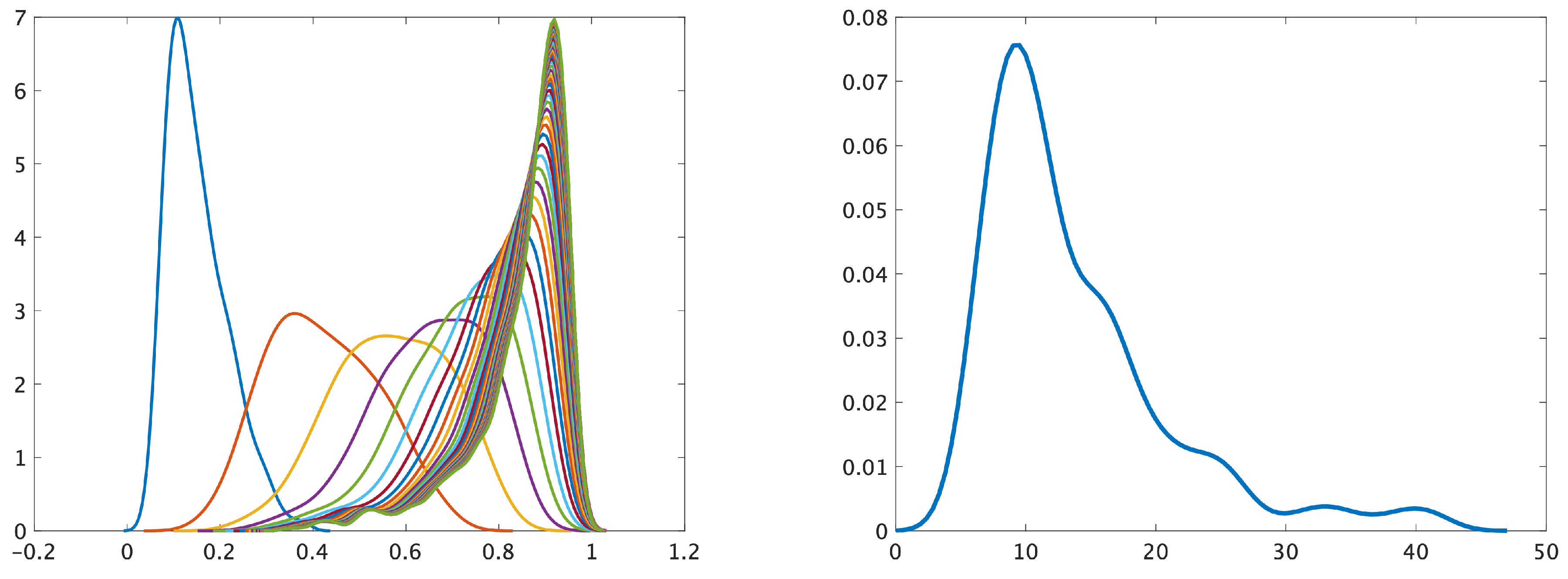

4. Numerical Experiments

| Level ℓ | | | | Computing time, average | Computing time, min. | Computing time, max. |
|---|---|---|---|---|---|---|
| 0 | 1122 | 188 | 32 | 1.15 | 0.88 | 1.33 |
| 1 | 4290 | 376 | 16 | 4.1 | 3.4 | 4.87 |
| 2 | 16770 | 752 | 8 | 19.6 | 17.6 | 22 |
| 3 | 66306 | 1504 | 4 | 136.0 | 128 | 144 |
| 4 | 263682 | 3008 | 2 | 1004.0 | 891 | 1032 |
| 5 | 1051650 | 6016 | 1 | 8138.0 | 6430 | 8480 |
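The growth of the computing time from level to level can be checked directly from the table. The sketch below assumes that the second and third columns hold the number of spatial degrees of freedom and the number of time steps on each level; this reading is an interpretation, since the column labels are missing here. Under that assumption, the product of the two columns is a rough measure of the work per sample, and its level-to-level ratio can be compared with the ratio of the average computing times.

```python
# Rows copied from the table above: (level, column 2, column 3, average time).
# Interpreting column 2 as spatial degrees of freedom and column 3 as the
# number of time steps is an assumption.
levels = [
    (0, 1122, 188, 1.15),
    (1, 4290, 376, 4.1),
    (2, 16770, 752, 19.6),
    (3, 66306, 1504, 136.0),
    (4, 263682, 3008, 1004.0),
    (5, 1051650, 6016, 8138.0),
]


def growth_ratios(values):
    """Ratio of each entry to its predecessor."""
    return [b / a for a, b in zip(values, values[1:])]


work = [n * r for _, n, r, _ in levels]   # unknowns times time steps
times = [t for *_, t in levels]           # average computing time per sample

work_ratios = growth_ratios(work)
time_ratios = growth_ratios(times)

for lvl, (w, t) in enumerate(zip(work_ratios, time_ratios), start=1):
    print(f"level {lvl - 1} -> {lvl}: work x{w:.2f}, average time x{t:.2f}")
```

If the time ratios approach the work ratios on the finer levels, the cost per sample is close to linear in the number of unknowns times the number of time steps.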

5. Conclusion

- Limitations. 1. The QoIs computed on different grid levels may coincide for the given random input parameters. In this case, standard (Q)MC on a coarse mesh is sufficient. 2. The time dependence is challenging: the optimal number of samples depends on the point at which the QoI is evaluated, and may be small for some points and large for others. 3. Twenty-four hours may not be sufficient to compute the solution at the sixth mesh level.

- Future work. Our model of porosity in Eq. (24) is quite simple. It would be beneficial to consider a more complicated, multiscale, and realistic model with more random variables. A more advanced version of MLMC may give better estimates of the number of levels L and the number of samples on each level. Another active topic is data assimilation and the identification of unknown parameters [73–76]. Known experimental data and measurements of porosity, permeability, velocity, or mass fraction could be used to reduce uncertainties.
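The first limitation above can be read off the standard MLMC telescoping identity. The notation below is generic and assumed for illustration: $g_\ell$ is the QoI computed on level $\ell$, $m_\ell$ is the number of samples on level $\ell$, and $g_\ell^{(i)}$ is the $i$-th sample.

```latex
\mathbb{E}[g_L] = \mathbb{E}[g_0] + \sum_{\ell=1}^{L} \mathbb{E}\bigl[g_\ell - g_{\ell-1}\bigr],
\qquad
\widehat{g}_{\mathrm{MLMC}} = \frac{1}{m_0}\sum_{i=1}^{m_0} g_0^{(i)}
  + \sum_{\ell=1}^{L} \frac{1}{m_\ell}\sum_{i=1}^{m_\ell}
    \bigl(g_\ell^{(i)} - g_{\ell-1}^{(i)}\bigr).
```

If $g_\ell^{(i)} = g_{\ell-1}^{(i)}$ for every sample and every level, all correction terms vanish and the estimator reduces to a plain Monte Carlo average on the coarsest level, so the finer levels add cost without adding information.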

Acknowledgments

References

- Abarca, E.; Carrera, J.; Sánchez-Vila, X.; Dentz, M. Anisotropic dispersive Henry problem. Advances in Water Resources 2007, 30, 913–926. [CrossRef]

- Schneider, A.; Zhao, H.; Wolf, J.; Logashenko, D.; Reiter, S.; Howahr, M.; Eley, M.; Gelleszun, M.; Wiederhold, H. Modeling saltwater intrusion scenarios for a coastal aquifer at the German North Sea. E3S Web Conf. 2018, 54, 00031. [CrossRef]

- Voss, C.; Souza, W. Variable density flow and solute transport simulation of regional aquifers containing a narrow freshwater-saltwater transition zone. Water Resources Research 1987, 23, 1851–1866. [CrossRef]

- Henry, H.R. Effects of dispersion on salt encroachment in coastal aquifers, in ’Seawater in Coastal Aquifers’. US Geological Survey, Water Supply Paper 1964, 1613, C70–C80.

- Simpson, M.J.; Clement, T.P. Improving the worthiness of the Henry problem as a benchmark for density-dependent groundwater flow models. Water Resources Research 2004, 40, W01504. [CrossRef]

- Simpson, M.J.; Clement, T.P. Theoretical analysis of the worthiness of Henry and Elder problems as benchmarks of density-dependent groundwater flow models. Advances in Water Resources 2003, 26, 17–31. [CrossRef]

- Dhal, L.; Swain, S., Understanding and modeling the process of seawater intrusion: a review; 2022; pp. 269–290. [CrossRef]

- Riva, M.; Guadagnini, A.; Dell’Oca, A. Probabilistic assessment of seawater intrusion under multiple sources of uncertainty. Advances in Water Resources 2015, 75, 93–104. [CrossRef]

- Reiter, S.; Logashenko, D.; Vogel, A.; Wittum, G. Mesh generation for thin layered domains and its application to parallel multigrid simulation of groundwater flow. submitted to Comput. Visual Sci. [CrossRef]

- Schneider, A.; Kröhn, K.P.; Püschel, A. Developing a modelling tool for density-driven flow in complex hydrogeological structures. Comput. Visual Sci. 2012, 15, 163–168. [CrossRef]

- Cremer, C.; Graf, T. Generation of dense plume fingers in saturated–unsaturated homogeneous porous media. Journal of Contaminant Hydrology 2015, 173, 69–82. [CrossRef]

- Carrera, J. An overview of uncertainties in modelling groundwater solute transport. Journal of Contaminant Hydrology 1993, 13, 23–48. Chemistry and Migration of Actinides and Fission Products. [CrossRef]

- Vereecken, H.; Schnepf, A.; Hopmans, J.; Javaux, M.; Or, D.; Roose, T.; Vanderborght, J.; Young, M.; Amelung, W.; Aitkenhead, M.; Allison, S.; Assouline, S.; Baveye, P.; Berli, M.; Brüggemann, N.; Finke, P.; Flury, M.; Gaiser, T.; Govers, G.; Ghezzehei, T.; Hallett, P.; Hendricks Franssen, H.; Heppell, J.; Horn, R.; Huisman, J.; Jacques, D.; Jonard, F.; Kollet, S.; Lafolie, F.; Lamorski, K.; Leitner, D.; McBratney, A.; Minasny, B.; Montzka, C.; Nowak, W.; Pachepsky, Y.; Padarian, J.; Romano, N.; Roth, K.; Rothfuss, Y.; Rowe, E.; Schwen, A.; Šimůnek, J.; Tiktak, A.; Van Dam, J.; van der Zee, S.; Vogel, H.; Vrugt, J.; Wöhling, T.; Young, I. Modeling Soil Processes: Review, Key Challenges, and New Perspectives. Vadose Zone Journal 2016, 15, vzj2015.09.0131. [CrossRef]

- Bode, F.; Ferré, T.; Zigelli, N.; Emmert, M.; Nowak, W. Reconnecting Stochastic Methods With Hydrogeological Applications: A Utilitarian Uncertainty Analysis and Risk Assessment Approach for the Design of Optimal Monitoring Networks. Water Resources Research 2018, 54, 2270–2287, [https://agupubs.onlinelibrary.wiley.com/doi/pdf/10.1002/2017WR020919]. [CrossRef]

- Rubin, Y. Applied stochastic hydrogeology; Oxford University Press, 2003.

- Tartakovsky, D. Assessment and management of risk in subsurface hydrology: A review and perspective. Advances in Water Resources 2013, 51, 247–260. 35th Year Anniversary Issue. [CrossRef]

- Post, V.; Houben, G. Density-driven vertical transport of saltwater through the freshwater lens on the island of Baltrum (Germany) following the 1962 storm flood. Journal of Hydrology 2017, 551, 689–702. Investigation of Coastal Aquifers. [CrossRef]

- Laattoe, T.; Werner, A.; Simmons, C., Seawater Intrusion Under Current Sea-Level Rise: Processes Accompanying Coastline Transgression. In Groundwater in the Coastal Zones of Asia-Pacific; Wetzelhuetter, C., Ed.; Springer Netherlands: Dordrecht, 2013; pp. 295–313. [CrossRef]

- Espig, M.; Hackbusch, W.; Litvinenko, A.; Matthies, H.; Waehnert, P. Efficient low-rank approximation of the stochastic Galerkin matrix in tensor formats. Computers and Mathematics with Applications 2014, 67, 818–829. High-order Finite Element Approximation for Partial Differential Equations. [CrossRef]

- Babuška, I.; Tempone, R.; Zouraris, G. Galerkin finite element approximations of stochastic elliptic partial differential equations. SIAM Journal on Numerical Analysis 2004, 42, 800–825. [CrossRef]

- Giraldi, L.; Litvinenko, A.; Liu, D.; Matthies, H.G.; Nouy, A. To Be or Not to Be Intrusive? The Solution of Parametric and Stochastic Equations—the “Plain Vanilla” Galerkin Case. SIAM Journal on Scientific Computing 2014, 36, A2720–A2744. [CrossRef]

- Espig, M.; Hackbusch, W.; Litvinenko, A.; Matthies, H.; Wähnert, P. Efficient low-rank approximation of the stochastic Galerkin matrix in tensor formats. Computers and Mathematics with Applications 2014, 67, 818–829. [CrossRef]

- Liu, D.; Görtz, S. Efficient Quantification of Aerodynamic Uncertainty due to Random Geometry Perturbations. In New Results in Numerical and Experimental Fluid Mechanics IX; Dillmann, A.; et al., Eds.; Springer International Publishing, 2014; pp. 65–73.

- Bompard, M.; Peter, J.; Désidéri, J.A. Surrogate models based on function and derivative values for aerodynamic global optimization. Fifth European Conference on Computational Fluid Dynamics, ECCOMAS CFD 2010; 2010.

- Loeven, G.J.A.; Witteveen, J.A.S.; Bijl, H. A probabilistic radial basis function approach for uncertainty quantification. Proceedings of the NATO RTO-MP-AVT-147 Computational Uncertainty in Military Vehicle design symposium, 2007.

- Giunta, A.A.; Eldred, M.S.; Castro, J.P. Uncertainty quantification using response surface approximation. 9th ASCE Specialty Conference on Probabilistic Mechanics and Structural Reliability; 2004.

- Chkifa, A.; Cohen, A.; Schwab, C. Breaking the curse of dimensionality in sparse polynomial approximation of parametric PDEs. Journal de Mathématiques Pures et Appliquées 2015, 103, 400–428. [CrossRef]

- Blatman, G.; Sudret, B. An adaptive algorithm to build up sparse polynomial chaos expansions for stochastic finite element analysis. Probabilistic Engineering Mechanics 2010, 25, 183–197. [CrossRef]

- Dolgov, S.; Khoromskij, B.; Litvinenko, A.; Matthies, H. Polynomial Chaos Expansion of Random Coefficients and the Solution of Stochastic Partial Differential Equations in the Tensor Train Format. SIAM/ASA Journal on Uncertainty Quantification 2015, 3, 1109–1135. [CrossRef]

- Najm, H. Uncertainty Quantification and Polynomial Chaos Techniques in Computational Fluid Dynamics. Annual Review of Fluid Mechanics 2009, 41, 35–52. [CrossRef]

- Conrad, P.; Marzouk, Y. Adaptive Smolyak Pseudospectral Approximations. SIAM Journal on Scientific Computing 2013, 35, A2643–A2670. [CrossRef]

- Xiu, D. Fast Numerical Methods for Stochastic Computations: A Review. Commun. Comput. Phys. 2009, 5, No. 2-4, 242–272.

- Smolyak, S.A. Quadrature and interpolation formulas for tensor products of certain classes of functions. Sov. Math. Dokl. 1963, 4, 240–243.

- Bungartz, H.J.; Griebel, M. Sparse grids. Acta Numer. 2004, 13, 147–269.

- Griebel, M. Sparse grids and related approximation schemes for higher dimensional problems. In Foundations of computational mathematics, Santander 2005; Cambridge Univ. Press: Cambridge, 2006; Vol. 331, London Math. Soc. Lecture Note Ser., pp. 106–161.

- Klimke, A. Sparse Grid Interpolation Toolbox, www.ians.uni-stuttgart.de/spinterp/, 2008.

- Novak, E.; Ritter, K. The curse of dimension and a universal method for numerical integration. In Multivariate approximation and splines (Mannheim, 1996); Birkhäuser: Basel, 1997; Vol. 125, Internat. Ser. Numer. Math., pp. 177–187.

- Gerstner, T.; Griebel, M. Numerical integration using sparse grids. Numer. Algorithms 1998, 18, 209–232. [CrossRef]

- Novak, E.; Ritter, K. Simple cubature formulas with high polynomial exactness. Constr. Approx. 1999, 15, 499–522. [CrossRef]

- Petras, K. Smolpack—a software for Smolyak quadrature with delayed Clenshaw-Curtis basis-sequence. http://www-public.tu-bs.de:8080/ petras/software.html.

- Eigel, M.; Gittelson, C.J.; Schwab, C.; Zander, E. Adaptive stochastic Galerkin FEM. Computer Methods in Applied Mechanics and Engineering 2014, 270, 247–269. [CrossRef]

- Beck, J.; Liu, Y.; von Schwerin, E.; Tempone, R. Goal-oriented adaptive finite element multilevel Monte Carlo with convergence rates. Computer Methods in Applied Mechanics and Engineering 2022, 402, 115582. A Special Issue in Honor of the Lifetime Achievements of J. Tinsley Oden. [CrossRef]

- Matthies, H. Uncertainty Quantification with Stochastic Finite Elements. In Encyclopedia of Computational Mechanics; Stein, E.; de Borst, R.; Hughes, T.R.J., Eds.; John Wiley & Sons: Chichester, 2007.

- Babuška, I.; Nobile, F.; Tempone, R. A stochastic collocation method for elliptic partial differential equations with random input data. SIAM J. Numer. Anal. 2007, 45, 1005–1034 (electronic). [CrossRef]

- Nobile, F.; Tamellini, L.; Tesei, F.; Tempone, R. An adaptive sparse grid algorithm for elliptic PDEs with log-normal diffusion coefficient. MATHICSE Technical Report 04, 2015.

- Radović, I.; Sobol, I.; Tichy, R. Quasi-Monte Carlo Methods for Numerical Integration: Comparison of Different Low Discrepancy Sequences. Monte Carlo Methods and Applications 1996, 2, 1–14. [CrossRef]

- Xiu, D.; Karniadakis, G.E. The Wiener-Askey polynomial chaos for stochastic differential equations. SIAM J. Sci. Comput. 2002, 24, 619–644.

- Litvinenko, A.; Logashenko, D.; Tempone, R.; Wittum, G.; Keyes, D. Propagation of Uncertainties in Density-Driven Flow. Sparse Grids and Applications — Munich 2018; Bungartz, H.J.; Garcke, J.; Pflüger, D., Eds.; Springer International Publishing: Cham, 2021; pp. 101–126. [CrossRef]

- Litvinenko, A.; Logashenko, D.; Tempone, R.; Wittum, G.; Keyes, D. Solution of the 3D density-driven groundwater flow problem with uncertain porosity and permeability. GEM - International Journal on Geomathematics 2020, 11, 10. [CrossRef]

- Oladyshkin, S.; Nowak, W. Data-driven uncertainty quantification using the arbitrary polynomial chaos expansion. Reliability Engineering & System Safety 2012, 106, 179–190. [CrossRef]

- Stoeckl, L.; Walther, M.; Morgan, L.K. Physical and Numerical Modelling of Post-Pumping Seawater Intrusion. Geofluids 2019, 2019. [CrossRef]

- Panda, M.; Lake, W. Estimation of single-phase permeability from parameters of particle-size distribution. AAPG Bull. 1994, 78, 1028–1039.

- Pape, H.; Clauser, C.; Iffland, J. Permeability prediction based on fractal pore-space geometry. Geophysics 1999, 64, 1447–1460. [CrossRef]

- Costa, A. Permeability-porosity relationship: A reexamination of the Kozeny-Carman equation based on a fractal pore-space geometry assumption. Geophysical Research Letters 2006, 33. [CrossRef]

- Frolkovič, P.; De Schepper, H. Numerical modelling of convection dominated transport coupled with density driven flow in porous media. Advances in Water Resources 2001, 24, 63–72. [CrossRef]

- Frolkovič, P. Consistent velocity approximation for density driven flow and transport. Advanced Computational Methods in Engineering, Part 2: Contributed papers; Van Keer, R.; et al., Eds.; Shaker Publishing: Maastricht, 1998; pp. 603–611.

- Frolkovič, P.; Knabner, P. Consistent Velocity Approximations in Finite Element or Volume Discretizations of Density Driven Flow. Computational Methods in Water Resources XI; Aldama, A.A.; et al., Eds.; Computational Mechanics Publication: Southampton, 1996; pp. 93–100.

- Barrett, R.; Berry, M.; Chan, T.F.; Demmel, J.; Donato, J.; Dongarra, J.; Eijkhout, V.; Pozo, R.; Romine, C.; van der Vorst, H. Templates for the Solution of Linear Systems: Building Blocks for Iterative Methods; Society for Industrial and Applied Mathematics, 1994; [https://epubs.siam.org/doi/pdf/10.1137/1.9781611971538]. [CrossRef]

- Hackbusch, W. Multi-Grid Methods and Applications; Springer, Berlin, 1985.

- Hackbusch, W. Iterative Solution of Large Sparse Systems of Equations; Springer: New York, 1994.

- Cliffe, K.; Giles, M.; Scheichl, R.; Teckentrup, A. Multilevel Monte Carlo methods and applications to elliptic PDEs with random coefficients. Computing and Visualization in Science 2011, 14, 3–15. [CrossRef]

- Collier, N.; Haji-Ali, A.L.; Nobile, F.; von Schwerin, E.; Tempone, R. A continuation multilevel Monte Carlo algorithm. BIT Numerical Mathematics 2015, 55, 399–432. [CrossRef]

- Giles, M.B. Multilevel Monte Carlo path simulation. Operations Research 2008, 56, 607–617. [CrossRef]

- Giles, M.B. Multilevel Monte Carlo methods. Acta Numerica 2015, 24, 259–328. [CrossRef]

- Haji-Ali, A.L.; Nobile, F.; von Schwerin, E.; Tempone, R. Optimization of mesh hierarchies in multilevel Monte Carlo samplers. Stoch. Partial Differ. Equ. Anal. Comput. 2016, 4, 76–112. [CrossRef]

- Teckentrup, A.; Scheichl, R.; Giles, M.; Ullmann, E. Further analysis of multilevel Monte Carlo methods for elliptic PDEs with random coefficients. Numerische Mathematik 2013, 125, 569–600. [CrossRef]

- Litvinenko, A.; Yucel, A.C.; Bagci, H.; Oppelstrup, J.; Michielssen, E.; Tempone, R. Computation of Electromagnetic Fields Scattered From Objects With Uncertain Shapes Using Multilevel Monte Carlo Method. IEEE Journal on Multiscale and Multiphysics Computational Techniques 2019, 4, 37–50. [CrossRef]

- Hoel, H.; von Schwerin, E.; Szepessy, A.; Tempone, R. Implementation and analysis of an adaptive multilevel Monte Carlo algorithm. Monte Carlo Methods and Applications 2014, 20, 1–41. [CrossRef]

- Hoel, H.; von Schwerin, E.; Szepessy, A.; Tempone, R. Adaptive multilevel Monte Carlo simulation. In Numerical Analysis of Multiscale Computations; Springer, 2012; pp. 217–234.

- Charrier, J.; Scheichl, R.; Teckentrup, A.L. Finite Element Error Analysis of Elliptic PDEs with Random Coefficients and Its Application to Multilevel Monte Carlo Methods. SIAM Journal on Numerical Analysis 2013, 51, 322–352. [CrossRef]

- Reiter, S.; Vogel, A.; Heppner, I.; Rupp, M.; Wittum, G. A massively parallel geometric multigrid solver on hierarchically distributed grids. Computing and Visualization in Science 2013, 16, 151–164. [CrossRef]

- Vogel, A.; Reiter, S.; Rupp, M.; Nägel, A.; Wittum, G. UG 4: A novel flexible software system for simulating PDE based models on high performance computers. Computing and Visualization in Science 2013, 16, 165–179. [CrossRef]

- Litvinenko, A.; Kriemann, R.; Genton, M.G.; Sun, Y.; Keyes, D.E. HLIBCov: Parallel hierarchical matrix approximation of large covariance matrices and likelihoods with applications in parameter identification. MethodsX 2020, 7, 100600. [CrossRef]

- Litvinenko, A.; Sun, Y.; Genton, M.G.; Keyes, D.E. Likelihood approximation with hierarchical matrices for large spatial datasets. Computational Statistics & Data Analysis 2019, 137, 115–132. [CrossRef]

- Matthies, H.G.; Zander, E.; Rosić, B.V.; Litvinenko, A. Parameter estimation via conditional expectation: a Bayesian inversion. Advanced Modeling and Simulation in Engineering Sciences 2016, 3, 24. [CrossRef]

- Rosić, B.; Kučerová, A.; Sýkora, J.; Pajonk, O.; Litvinenko, A.; Matthies, H. Parameter identification in a probabilistic setting. Engineering Structures 2013, 50, 179–196. Engineering Structures: Modelling and Computations (special issue IASS-IACM 2012). [CrossRef]

| Parameter | Values and Units | Description |
|---|---|---|
| | 0.35 [-] | mean value of porosity |
| D | [] | diffusion coefficient in the medium |
| | [] | permeability of the medium |
| g | [] | gravity |
| | 1000 [] | density of pure water |
| | [] | density of brine |
| | [] | viscosity |

| level, ℓ | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| | 1.156 | 4.113 | 20.382 | 139.0 | 993.0 | 8053.0 |
| | 1.4e-5 | 0.2e-5 | 0.5e-6 | 0.1e-6 | 0.5e-7 | 1e-7 |
| =5e-6) | 35 | 7 | 2 | 1 | 1 | 1 |
| =1e-6) | 172 | 35 | 8 | 2 | 1 | 1 |
| =5e-7) | 343 | 69 | 16 | 3 | 1 | 1 |
| =1e-7) | 1714 | 344 | 78 | 14 | 4 | 2 |
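The row labels of this table are incomplete here, so the following is a hedged reading rather than the authors' stated procedure. Assume that the first unlabeled row holds the average cost per sample on each level, that the second holds the estimated variance on each level, and that the value in each truncated label (for example 5e-6) plays the role of the squared tolerance. The helper `mlmc_samples` is a hypothetical name. Under these assumptions, the sample counts in the remaining rows agree with the standard MLMC allocation, in which the number of samples on a level is proportional to the square root of variance over cost, rounded up:

```python
import math

# First two unlabeled rows of the table above. Reading them as cost per sample
# and variance per level is an assumption.
costs = [1.156, 4.113, 20.382, 139.0, 993.0, 8053.0]
variances = [1.4e-5, 0.2e-5, 0.5e-6, 0.1e-6, 0.5e-7, 1e-7]


def mlmc_samples(costs, variances, eps2):
    """Standard MLMC allocation: m_l = ceil(sqrt(V_l / C_l) * sum_j sqrt(V_j C_j) / eps2)."""
    total = sum(math.sqrt(v * c) for v, c in zip(variances, costs))
    return [
        max(1, math.ceil(math.sqrt(v / c) * total / eps2))  # at least one sample
        for v, c in zip(variances, costs)
    ]


for eps2 in (5e-6, 1e-6, 5e-7, 1e-7):
    m = mlmc_samples(costs, variances, eps2)
    total_cost = sum(mi * ci for mi, ci in zip(m, costs))
    print(f"eps2={eps2:g}: samples={m}, total cost={total_cost:.0f}")
```

For the largest tolerance the allocation is dominated by the coarse levels, with a single sample on each of the three finest levels; tightening the tolerance adds samples on all levels.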

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).