Submitted: 25 April 2023

Posted: 26 April 2023


Abstract

Keywords:

0. Background

1. Preliminaries

1.1. Motivation

1.2. Preliminary Definitions

- (a)

- (b)

- For all , exists (when A is countably infinite, then for every , must be finite since would be a discrete uniform distribution of ; otherwise, when A is uncountable, is the normalized Lebesgue measure or some other uniform measure on (e.g. [8]) where, for every , either measure on exists and is finite).

- For all , is positive and finite such that is intrinsic. (For countably infinite A, would be the counting measure, where is positive and finite since is finite. For uncountable A, would be either the Lebesgue measure or the Radon-Nikodym derivative of some other uniform measure on (e.g. [8]), where either measure on is positive and finite.)

- For every , set is finite, meaning each term of the pre-structure has a discrete uniform distribution. Therefore, exists.

- For every , is finite, meaning is the counting measure. Furthermore, since and for all , is positive and finite, criterion (3) of def. 2 is satisfied.

- For every , set is finite, meaning each term of the pre-structure has a discrete uniform distribution. Therefore, exists.

- For every , is finite, meaning is the counting measure. Since (where is Euler’s totient function [15, pp. 239-249]) we have , and, if correct, is positive for all . Therefore, criterion (3) of def. 2 is satisfied.

- The element

- The set is arbitrary and uncountable.

- The element

- The set is arbitrary and uncountable.

- Arrange the x-values of the points in the sample of uniform ε-coverings from least to greatest. This is defined as:

- Take the multi-set of the absolute differences between all consecutive pairs of elements in (1). This is defined as:

- Normalize (2) into a probability distribution. This is defined as:

- which organizes elements in from least to greatest.

- Since we use this to normalize (2) into a probability distribution

- Hence we take the entropy of or:
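The four steps above (sort, take consecutive gaps, normalize, take the entropy) can be sketched as follows. This is a minimal illustration, not the author's implementation: it assumes the sample is given as a plain list of x-values, that step (3) divides each gap by the sum of all gaps (which needs at least two distinct x-values), and that the entropy uses the natural logarithm. The function name `gap_entropy` is hypothetical.

```python
import math
from typing import Sequence


def gap_entropy(xs: Sequence[float]) -> float:
    """Entropy of the normalized gaps between sorted x-values (hypothetical helper).

    Assumptions: gaps are normalized by their sum; natural logarithm.
    """
    ordered = sorted(xs)                                       # step (1): least to greatest
    gaps = [abs(b - a) for a, b in zip(ordered, ordered[1:])]  # step (2): consecutive differences
    total = sum(gaps)
    if total == 0:
        raise ValueError("need at least two distinct x-values to normalize the gaps")
    probs = [g / total for g in gaps]                          # step (3): probability distribution
    # step (4): Shannon entropy; zero gaps (repeated x-values) contribute 0 by convention
    return -sum(p * math.log(p) for p in probs if p > 0)


if __name__ == "__main__":
    print(gap_entropy([0.0, 0.25, 0.5, 0.75, 1.0]))  # evenly spaced: equals ln(4)
    print(gap_entropy([0.0, 0.1, 1.0]))              # uneven spacing: lower entropy
```

For n evenly spaced points every gap receives probability 1/(n-1), which gives the largest possible value, ln(n-1); clustering the points lowers it.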

- (a)

-

From def. 5 and 6, suppose we have: then (using equation 14) we have

- (b)

-

From def. 5 and 6, suppose we have: then (using equation 16) we get

-

If, using equations 15 and 17, we have that: we say converges uniformly to A at a superlinear rate relative to that of .

-

If, using equations 15 and 17, we have either:

- (a)

-

- (b)

-

- (c)

-

- (d)

-

we then say converges uniformly to A at a linear rate relative to that of .

-

If, using equations 15 and 17, we have that: we say converges uniformly to A at a sublinear rate relative to that of .

- (we choose this pre-structure since, if is the highest entropy (def. 6) that could be for every , we say has a higher entropy per element than that of if there exists a such that for all , ).

1.3. Question on Preliminary Definitions

- Are there “simpler” alternatives to either of the preliminary definitions? (Keep this in mind as we continue reading.)

2. Main Question

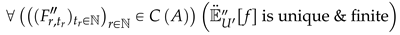

- The expected value of f on each term of the pre-structure is finite

- The pre-structure converges uniformly to A

- The pre-structure converges uniformly to A at a linear or superlinear rate relative to that of other non-equivalent pre-structures of A which satisfy (1) and (2).

- The generalized expected value of f on a pre-structure (i.e. an extension of def. 3 to answer the full question) has a unique and finite value, such that the pre-structure satisfies (1), (2), and (3).

- A choice function is defined which chooses a pre-structure from A where the following satisfies (1), (2), (3), and (4) for the largest possible subset of .

- If there is more than one choice function that satisfies (1), (2), (3), (4), and (5), we choose the choice function with the “simplest form”, meaning that, for a general pre-structure of A, when each choice function is fully expanded, we take the choice function with the fewest variables/numbers (excluding those with quantifiers).

3. Informal Attempt to Answer Main Question

3.1. Generalized Expected Values

3.2. Choice Function

3.3. Questions on Choice Function

-

Suppose we define the function . What unique pre-structure would contain (if it exists) for:

- where if and , we want

- where if and , we want

- where we’re not sure what would be if . What would be if it’s unique?

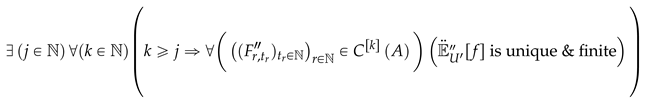

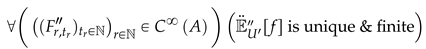

3.4. Increasing Chances of a Unique and Finite Expected Value

- For a worst-case f defined on countably infinite A (e.g. countably infinite “pseudo-random points” non-uniformly scattered across the real plane), one may need just one iteration of C (since most functions on countable sets need just one iteration of C for to be unique); otherwise, one may use equation 33 for finite iterations of C.

- For a worst-case f defined on uncountable A, we might have to use equation 34, as averaging such a function might be nearly impossible. We can imagine this function as an uncountable number of “pseudo-random” points non-uniformly generated on a subset of the real plane (see Section 4.1 for a visualization).

3.5. Questions Regarding The Answer

-

Using prevalence and shyness [11,14], can we say whether the set of f for which any of equations 32, 33, and 34 has a unique and finite forms either a prevalent subset or a neither prevalent nor shy subset of ? If the subset is prevalent, this implies that at least one of the generalized expected values is unique and finite for a “large” subset of ; however, if the subset is neither prevalent nor shy, we need more precise definitions of “size” which give “an exact probability that the expected values are unique and finite.” Some examples (shown in this answer [9]) are:

- (a) Fractal dimension notions

- (b) Kolmogorov entropy

- (c) Baire category and porosity

- There may be a total of 292 variables in the choice function C (excluding quantifiers). Is there a choice function (ignoring quantifiers) which satisfies criteria (1), (2), (3), and (4) of the main question in Section 2 for a “larger” subset of ? This might be impossible to answer, since such a solution cannot be shown with prevalence or shyness [11,14]; therefore, we need a more precise notion of “size”, again with some examples shown in [9].

- If the answer to question (2) is yes, what choice function C, using equation 32, 33, or 34, fully answers the question in Section 2?

- Can any of equations 32, 33, and 34 (when A is the set of all Liouville numbers [6] and ) give a finite value? If so, what would the value be?

- Similar to how definition 13 in §4 approximates the expected value in definition 1, how do we approximate equations 32, 33, and 34?

- Can programming be used to estimate equations 32, 33, and 34, respectively (if a unique and finite value of any of these expected values exists)?

3.6. Applications

-

In Quanta Magazine [3], Wood writes on Feynman path integrals: “No known mathematical procedure can meaningfully average¹ an infinite number of objects covering an infinite expanse of space in general. The path integral is more of a physics philosophy than an exact mathematical recipe.” Despite Wood’s statement, mathematicians Bottazzi and Eskew [5] found a constructive solution to the statement using integrals defined on filters over families of finite sets; however, the solution was not unique, as one has to choose a value in a partially ordered ring of infinite and infinitesimal elements.

- (a) Perhaps, if Bottazzi and Eskew’s filter integral [5] is not enough to resolve Wood’s statement, could we replace the path integral with the expected values from equations 32, 33, and 34, respectively (or a complete solution to Section 2)? (See, again, Section 4.1 for a visualization of Wood’s statement.)

-

As stated in Section 1.1, “when the Lebesgue measure of A, measurable in the Caratheodory sense, has zero or infinite volume (or undefined measure), there may be multiple, conflicting ways of defining a ‘natural’ uniform measure on A.” This is an example of Bertrand’s Paradox, which shows that “the principle of indifference (that allows equal probability among all possible outcomes when no other information is given) may not produce definite, well-defined results for probabilities if applied uncritically, when the domain of possibilities is infinite” [16]. Then might serve as a solution to Bertrand’s Paradox (unless there is a better and which completely solves the main question in ). Now consider the following:

- (a) How do we apply (or a better solution) to the usual example demonstrating Bertrand’s Paradox? For an equilateral triangle inscribed in a circle, suppose a chord of the circle is chosen at random: what is the probability that the chord is longer than a side of the triangle? [4] According to Bertrand’s Paradox, there are three arguments which correctly use the principle of indifference yet give different solutions to this problem [4]:

- The “random endpoints” method: Choose two random points on the circumference of the circle and draw the chord joining them. To calculate the probability in question, imagine the triangle rotated so that one of its vertices coincides with one of the chord endpoints. Observe that if the other chord endpoint lies on the arc between the endpoints of the triangle side opposite the first point, the chord is longer than a side of the triangle. The length of this arc is one-third of the circumference of the circle; therefore, the probability that a random chord is longer than a side of the inscribed triangle is 1/3.

- The “random radial point” method: Choose a radius of the circle, choose a point on the radius, and construct the chord through this point and perpendicular to the radius. To calculate the probability in question, imagine the triangle rotated so that a side is perpendicular to the radius. The chord is longer than a side of the triangle if the chosen point is nearer the center of the circle than the point where the side of the triangle intersects the radius. The side of the triangle bisects the radius; therefore, the probability that a random chord is longer than a side of the inscribed triangle is 1/2.

- The “random midpoint” method: Choose a point anywhere within the circle and construct a chord with the chosen point as its midpoint. The chord is longer than a side of the inscribed triangle if the chosen point falls within a concentric circle of radius 1/2 that of the larger circle. The area of the smaller circle is one-fourth the area of the larger circle; therefore, the probability that a random chord is longer than a side of the inscribed triangle is 1/4.
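A short Monte Carlo simulation makes the disagreement between the three methods concrete. The sketch below is an illustration only: it uses a unit circle, draws each method's random chord independently, and reports the fraction of chords longer than the inscribed triangle's side (length √3 for radius 1). The function name `bertrand_probabilities` is hypothetical. The estimates should land near 1/3, 1/2, and 1/4 respectively.

```python
import math
import random


def bertrand_probabilities(n: int, seed: int = 0) -> dict:
    """Estimate P(chord longer than the inscribed triangle's side) for each method."""
    rng = random.Random(seed)
    r = 1.0                    # unit circle (the probabilities do not depend on r)
    side = math.sqrt(3.0) * r  # side length of the inscribed equilateral triangle
    hits = {"random endpoints": 0, "random radial point": 0, "random midpoint": 0}

    for _ in range(n):
        # (1) Random endpoints: two independent uniform angles on the circumference.
        #     A chord whose endpoints differ by angle t has length 2r|sin(t/2)|.
        t1 = rng.uniform(0.0, 2.0 * math.pi)
        t2 = rng.uniform(0.0, 2.0 * math.pi)
        if 2.0 * r * abs(math.sin((t1 - t2) / 2.0)) > side:
            hits["random endpoints"] += 1

        # (2) Random radial point: uniform distance d from the center along a radius;
        #     the perpendicular chord through that point has length 2*sqrt(r^2 - d^2).
        d = rng.uniform(0.0, r)
        if 2.0 * math.sqrt(r * r - d * d) > side:
            hits["random radial point"] += 1

        # (3) Random midpoint: a point uniform in the disk (rejection sampling from
        #     the bounding square); the chord with that midpoint has length
        #     2*sqrt(r^2 - |p|^2).
        while True:
            x = rng.uniform(-r, r)
            y = rng.uniform(-r, r)
            if x * x + y * y <= r * r:
                break
        if 2.0 * math.sqrt(r * r - (x * x + y * y)) > side:
            hits["random midpoint"] += 1

    return {method: count / n for method, count in hits.items()}


if __name__ == "__main__":
    for method, p in bertrand_probabilities(100_000).items():
        print(f"{method:>20}: {p:.3f}")
```

Each branch is a faithful sampler for its own notion of “a random chord”; the three estimates differ because the three procedures induce different probability measures on the set of chords, not because of simulation error.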

4. Glossary

4.1. Example of Case (2) of Worst-Case Functions

4.2. Question Regarding Section 4.1

4.3. Approximating the Expected Value

- We need to know whether a point x is in set A or not

- We need to be able to generate points from a density g that is on a support that covers A but is not too much bigger than A

- We have to be able to compute and for each point
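The three requirements above are what a self-normalized importance-sampling estimator of the uniform mean of f over A needs. The sketch below is an illustration of that standard technique, not necessarily the approximation used in this paper or in [10]. It assumes A has finite, positive Lebesgue measure, and it assumes the two quantities in the third requirement are f(x) and g(x). The function name `uniform_mean_importance` and its parameters are hypothetical.

```python
import random
from typing import Callable, Tuple

Point = Tuple[float, float]


def uniform_mean_importance(
    f: Callable[[Point], float],
    in_a: Callable[[Point], bool],
    sample_g: Callable[[random.Random], Point],
    density_g: Callable[[Point], float],
    n: int,
    seed: int = 0,
) -> float:
    """Self-normalized importance-sampling estimate of the mean of f over A
    with respect to the uniform (normalized Lebesgue) measure on A."""
    rng = random.Random(seed)
    weighted_f = 0.0  # sum of f(x)/g(x) over samples in A (about n * integral of f over A)
    weight = 0.0      # sum of 1/g(x) over samples in A (about n * volume of A)
    for _ in range(n):
        x = sample_g(rng)         # requirement 2: draw a point from the density g
        if not in_a(x):           # requirement 1: membership test for A
            continue
        w = 1.0 / density_g(x)    # requirement 3 (assumed): evaluate g(x) > 0
        weighted_f += w * f(x)    # requirement 3 (assumed): evaluate f(x)
        weight += w
    if weight == 0.0:
        raise ValueError("no sample fell in A; the support of g must cover A")
    return weighted_f / weight


if __name__ == "__main__":
    # Illustration: A = closed unit disk, g = uniform density 1/4 on [-1, 1]^2,
    # f(x, y) = x^2 + y^2. The exact uniform mean of f over the disk is 1/2.
    estimate = uniform_mean_importance(
        f=lambda p: p[0] ** 2 + p[1] ** 2,
        in_a=lambda p: p[0] ** 2 + p[1] ** 2 <= 1.0,
        sample_g=lambda rng: (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)),
        density_g=lambda p: 0.25,
        n=100_000,
    )
    print(f"estimate = {estimate:.4f}")
```

Dividing by the summed weights means the unknown volume of A never has to be computed. When A has zero or infinite Lebesgue measure, the cases this paper is mainly concerned with, this estimator does not apply as written.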

References

- [1] Krishnan B. Finding expected value over uncountable number of pseudo-random points, non-uniformly distributed over the sub-space of R2. Mathematica Stack Exchange, 2023. https://mathematica.stackexchange.com/questions/283525/finding-expected-value-over-uncountable-number-of-pseudo-random-points-non-unif.

- [2] Billingsley P. Probability and Measure, 3rd ed. John Wiley & Sons, New York, 1995. https://www.colorado.edu/amath/sites/default/files/attached-files/billingsley.pdf.

- [3] Wood C. Mathematicians prove 2D version of quantum gravity really works. Quanta Magazine. https://www.quantamagazine.org/mathematicians-prove-2d-version-of-quantum-gravity-really-works-20210617.

- [4] Drory A. Failure and uses of Jaynes’ principle of transformation groups. Foundations of Physics, 45(4):439–460, Feb 2015. https://arxiv.org/pdf/1503.09072.pdf.

- [5] Bottazzi E. and Eskew M. Integration with filters. https://arxiv.org/pdf/2004.09103.pdf.

- [6] Grabowski A. and Kornilowicz A. Introduction to Liouville numbers. Formalized Mathematics, 25, 01 2017. https://sciendo.com/article/10.1515/forma-2017-0003.

- [7] Greinecker M. (https://mathoverflow.net/users/35357/michael greinecker). Demystifying the Caratheodory approach to measurability. MathOverflow. https://mathoverflow.net/q/34007.

- [8] McClure M. (https://mathoverflow.net/users/46214/mark mcclure). Integral over the Cantor set Hausdorff dimension. MathOverflow. https://mathoverflow.net/q/235609 (version: 2016-04-07).

- [9] Renfro D. L. (https://math.stackexchange.com/users/13130/dave-l renfro). Proof that neither “almost none” nor “almost all” functions which are Lebesgue measurable are non-integrable. Mathematics Stack Exchange. https://math.stackexchange.com/q/4623168 (version: 2023-01-21).

- [10] Ben (https://stats.stackexchange.com/users/173082/ben). In statistics how does one find the mean of a function w.r.t the uniform probability measure? Cross Validated. https://stats.stackexchange.com/q/602939 (version: 2023-01-24).

- [11] Hunt B. R. Prevalence: a translation-invariant “almost every” on infinite-dimensional spaces. 1992. https://arxiv.org/abs/math/9210220.

- [12] Gray R. M. Entropy and Information Theory, 2nd ed. Springer, New York, 2011. https://ee.stanford.edu/~gray/it.pdf.

- [13] Rokach L. and Maimon O. Springer, New York, 2nd ed., 2010.

- [14] Ott W. and Yorke J. A. Prevalence. Bulletin of the American Mathematical Society, 42(3):263–290, 2005. https://www.ams.org/journals/bull/2005-42-03/S0273-0979-05-01060-8/S0273-0979-05-01060-8.pdf.

- [15] Rosen K. H. Elementary Number Theory and Its Applications (6th ed.). Addison-Wesley, 1993. https://www.bibsonomy.org/bibtex/2bdf609bd9cb49ba96ef69ca99540db82/dblp.

- [16] Shackel N. Bertrand’s paradox and the principle of indifference. Philosophy of Science, 74(2):150–175, 2007. https://orca.cardiff.ac.uk/id/eprint/3803/1/Shackel%20Bertrand’s%20paradox%205.pdf.

- [17] Leinster T. and Roff E. The maximum entropy of a metric space. https://arxiv.org/pdf/1908.11184.pdf.

| Note | Text |
|---|---|
| 1 | Meaningful average: the average answers the main question in §2. |
| 2 | Wood wrote on Feynman path integrals: “No known mathematical procedure can meaningfully average¹ an infinite number of objects covering an infinite expanse of space in general.” |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).