Submitted: 14 February 2023

Posted: 22 February 2023


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Entropic Dynamics

2.2. Kullback Principle of Minimum Information Discrimination

- Uniqueness: The result of the inference should be unique.

- Invariance: The choice of coordinate system should not matter.

- System Independence: It should not matter whether one accounts for independent information about independent systems separately in terms of different densities or together in terms of a joint density.

- Subset Independence: It should not matter whether one treats an independent subset of system states in terms of a separate conditional density or in terms of the full system density.
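The four requirements above are the Shore and Johnson consistency axioms; Shore and Johnson showed that they single out minimization of the Kullback-Leibler divergence (relative entropy) D(p || q) = Σ p_i ln(p_i / q_i) as the rule for updating a prior density q into a posterior p. The Python sketch below is illustrative only and is not the author's method. It assumes a discrete state space, a strictly positive prior q, and a single expectation constraint Σ p_i f_i = c; the helper names (`kl_divergence`, `tilt`, `min_discrimination`, `product`) are hypothetical. Under these assumptions the constrained minimizer is an exponential tilt of the prior, and the System Independence property appears as additivity of the divergence over independent systems.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence D(p || q) = sum_i p_i ln(p_i / q_i).

    Assumes q_i > 0 wherever p_i > 0; terms with p_i == 0 contribute 0.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def tilt(q, f, lam):
    """Exponential tilt of the prior: p_i proportional to q_i * exp(lam * f_i)."""
    weights = [qi * math.exp(lam * fi) for qi, fi in zip(q, f)]
    total = sum(weights)
    return [w / total for w in weights]


def min_discrimination(q, f, target, lo=-50.0, hi=50.0, iters=200):
    """Minimise D(p || q) subject to sum_i p_i * f_i == target.

    The constrained minimiser is an exponential tilt of q. Its mean of f
    increases monotonically with lam (the derivative is the variance of f),
    so lam can be found by bisection. Assumes min(f) < target < max(f) and
    that the multiplier lies inside [lo, hi].
    """
    for _ in range(iters):
        lam = 0.5 * (lo + hi)
        mean = sum(pi * fi for pi, fi in zip(tilt(q, f, lam), f))
        if mean < target:
            lo = lam
        else:
            hi = lam
    return tilt(q, f, 0.5 * (lo + hi))


def product(p1, p2):
    """Joint distribution of two independent systems (flattened outer product)."""
    return [a * b for a in p1 for b in p2]


# Uniform prior over three states; constrain the mean of f to 1.5.
q = [1 / 3, 1 / 3, 1 / 3]
f = [0.0, 1.0, 2.0]
p = min_discrimination(q, f, 1.5)
print([round(x, 3) for x in p])  # [0.116, 0.268, 0.616]

# System Independence: for independent systems, the divergence between the
# joint densities equals the sum of the separate divergences.
p1, q1 = [0.2, 0.8], [0.5, 0.5]
p2, q2 = [0.1, 0.6, 0.3], [0.3, 0.3, 0.4]
joint = kl_divergence(product(p1, p2), product(q1, q2))
separate = kl_divergence(p1, q1) + kl_divergence(p2, q2)
print(math.isclose(joint, separate))  # True
```

Bisection is used here only because, for a single constraint, the tilted mean is monotone in the multiplier; with several constraints one would instead solve for a vector of multipliers, for example by convex optimization of the dual.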

2.3. Biological Continuum (Biocontinuum)

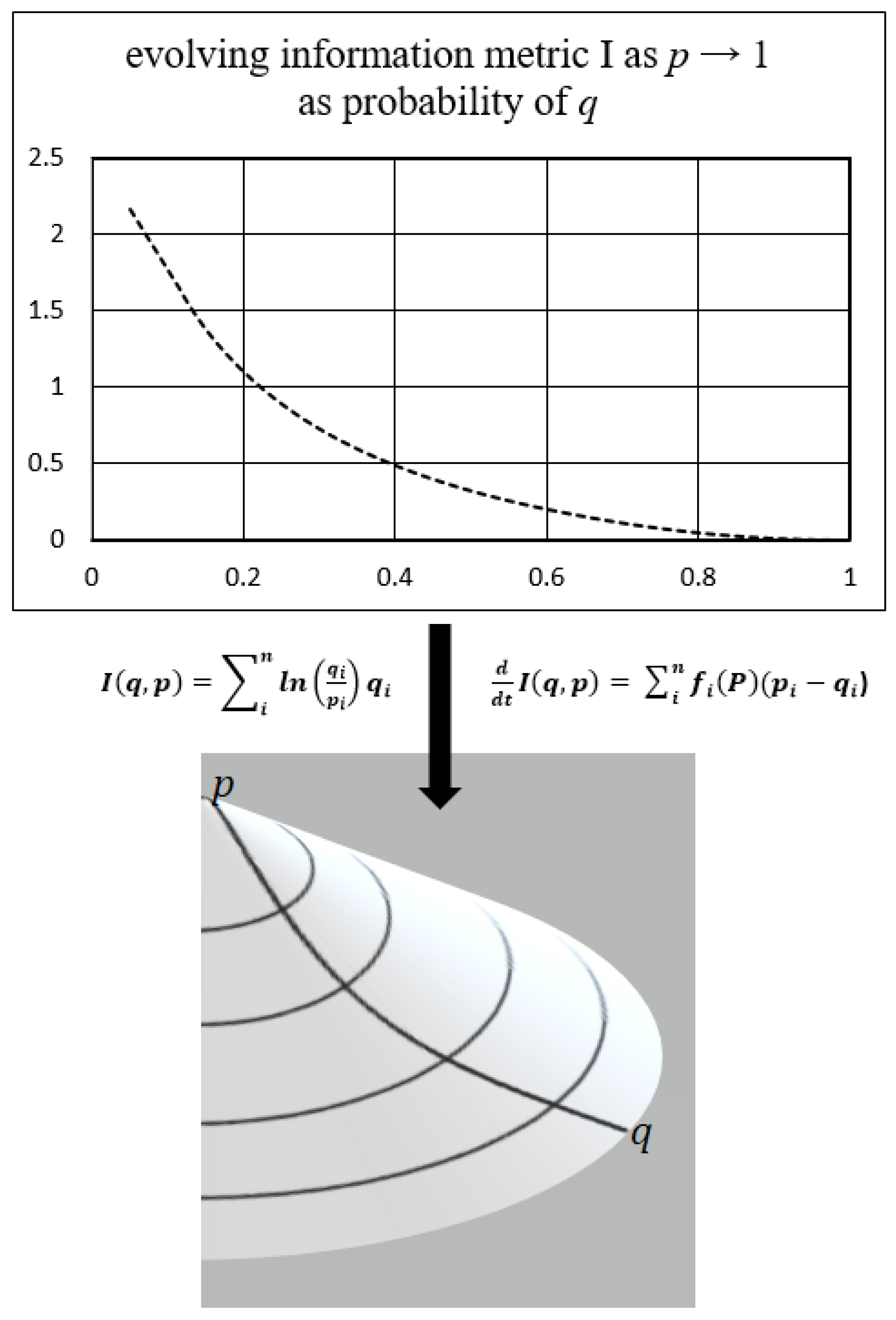

2.4. Information Geometry

2.5. Replicator Dynamics

3. Results

3.1. Derivation of Equations of Entropic Dynamics for the Biosystem

3.2. Information Geometry of the Biological Continuum (Biocontinuum)

4. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Schrödinger, E. What is Life? Cambridge, UK: Cambridge University Press, 2012 (reprint edition).

- Cafaro C. The Information Geometry of Chaos. VDM Verlag Dr. Mueller e.K., 2008.

- Caticha A. Entropic Dynamics, Entropy 17, 6110-6128, 2015. [CrossRef]

- Prigogine I, Stengers I. Order Out of Chaos. Brooklyn, NY: Bantam, 1984. ISBN: 0553343637.

- Morowitz, H. J. Energy Flow in Biology: Biological Organization as a Problem in Thermal Physics. Cambridge, MA: Academic Press, 1968. [CrossRef]

- Leff, H. S., Rex, A. Maxwell's Demon: Entropy, Information, Computing. Princeton, NJ: Princeton University Press, 2014.

- Hemmo, M., Shenker, O. R. The Road to Maxwell's Demon: Conceptual Foundations of Statistical Mechanics. Cambridge, UK: Cambridge University Press, 2012.

- Shannon, C. A Mathematical Theory of Communication. Bell System Tech. J. 27, 379–423, 1948. [CrossRef]

- Summers, RL. Experiences in the Biocontinuum: A New Foundation for Living Systems. Cambridge Scholars Publishing. Newcastle upon Tyne, UK, 2020, ISBN (10): 1-5275-5547-X, ISBN (13): 978-1-5275-5547-1.

- Jaynes ET, Rosenkrantz RD (Ed.), Papers on Probability, Statistics and Statistical Physics, Reidel Publishing Company, Dordrecht, 1983.

- Walker SI, Davies PCW. The algorithmic origins of life. J R Soc Interface, 2013, 10: 20120869. [CrossRef]

- Rovelli, Carlo. Meaning = Information + Evolution. arXiv:1611.02420 [physics.hist-ph].

- Caticha, Ariel, Cafaro, Carlo. 2007. From Information Geometry to Newtonian Dynamics. http://arxiv.org/abs/0710.1071v1.

- Caticha, Ariel. 2008. From Inference to Physics. The 28th International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering. Boraceia Beach, Sao Paulo, Brazil. http://arxiv.org/abs/0808.1260v1.

- Frieden, B. Roy. 2004. Science from Fisher Information: A Unification. Cambridge, UK: Cambridge University Press. ISBN 0-521-00911-1.

- Kullback S. Information Theory and Statistics. Dover, New York, 1968.

- Jaynes, E.T., Rosenkrantz, R.D. 1983. Papers on Probability, Statistics and Statistical Physics. Dordrecht, Holland: Reidel Publishing Company.

- Jaynes, E.T. 2003. Probability Theory: The Logic of Science. Cambridge, UK: Cambridge University Press. [CrossRef]

- Karev, G. 2010. “Replicator Equations and the Principle of Minimal Production of Information.” Bulletin of Mathematical Biology 72: 1124–1142. [CrossRef]

- Karev, G. 2010. “Principle of Minimum Discrimination Information and Replica Dynamics.” Entropy 12: 1673–1695. [CrossRef]

- Summers RL. An Action Principle for Biological Systems. J. Phys.: Conf. Series 2021, 2090 012109. [CrossRef]

- Shore JE, Johnson RW. Properties of cross-entropy minimization. IEEE Transactions on Information Theory, IT-27, 472-482, 1981. [CrossRef]

- Maturana, H.R., Varela, F.J. 1991. Autopoiesis and Cognition: The Realization of the Living. New York, NY: Springer Science & Business Media.

- Varela, F., Thompson, E., Rosch, E. 1991. The Embodied Mind: Cognitive Science and Human Experience. Cambridge, MA: MIT Press.

- Newby, G. B. 2001. Cognitive space and information space. Journal of the American Society for Information Science and Technology. No. 52:12. [CrossRef]

- Amari S, Nagaoka H. Methods of Information Geometry. Vol. 191 of Translations of Mathematical Monographs. Oxford, England: Oxford University Press, 1993.

- Caticha, Ariel. The Basics of Information Geometry. The 34th International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering 2014. Amboise, France. arXiv:1412.5633. [CrossRef]

- Fisher, R.A. 1930. The Genetical Theory of Natural Selection. Oxford, UK: Clarendon Press.

- Dawkins, Richard. 1976. The Selfish Gene. Oxford, UK: Oxford University Press.

- Haldane, JBS. 1927. "A Mathematical Theory of Natural and Artificial Selection, Part V: Selection and Mutation". Mathematical Proceedings of the Cambridge Philosophical Society. No. 23(7): 838–844. [CrossRef]

- Taylor, P.D., Jonker, L. 1978. Evolutionary Stable Strategies and Game Dynamics. Mathematical Biosciences, No. 40:145-156. [CrossRef]

- Lotka, A.J. 1922b. “Natural Selection as a Physical Principle”. Proceedings of the National Academy of Sciences of the United States of America, No. 8: 151-154. [CrossRef]

- Rutkowski, Leszek. Computational Intelligence. Methods and Techniques. New York, NY: Springer, 2010. ISBN 978-3-540-76287-4.

- Landauer R. 1961. Irreversibility and Heat Generation in the Computing Process. IBM Journal of Research and Development, No. 5 (3):183–191. [CrossRef]

- Fujiwara A, Amari S. Gradient Systems in View of Information Geometry. Physica D: Nonlinear Phenomena, 1995, No. 80: 317-327.

- Harper M, The replicator equation as an inference dynamic, 2009. arXiv:0911.1763.

- Harper M, Information geometry and evolutionary game theory, 2009, arXiv:0911.1383.

- Baez, JC, Pollard, B.S. Relative Entropy in Biological Systems. Entropy 18(2): 46-52, 2016. [CrossRef]

- Summers, R.L. Lyapunov Stability as a Metric for Meaning in Biological Systems. Biosemiotics (2022). [CrossRef]

- Summers, RL. Quantifying the Meaning of Information in Living Systems. Academia Letters 2022, Article 4874. [CrossRef]

- Friston K. The free-energy principle: a unified brain theory? Nature Reviews Neuroscience 11: 127–138, 2010. [CrossRef]

- Skarda, C., Freeman, W. 1990. Chaos and the New Science of the Brain. Concepts in Neuroscience. 1-2. 275-285.

- Skarda, C. 1999. “The Perceptual Form of Life.” In Reclaiming Cognition: The Primacy of Action, Intention, and Emotion. Journal of Consciousness Studies 6, No. 11-12: 79-93.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).