1. Introduction

Tele-rehabilitation has long been considered a promising way of providing rehabilitative therapies "at a distance" [1,2,3]. Digital sensing and artificial intelligence solutions enable patient-centred treatment by continuously monitoring and evaluating patient performance [4,5]. Over the past few years, the COVID-19 pandemic has accelerated this transition to a new era known as health 5.0 [6,7]. In this context, extended reality helped to provide an alternative therapy at a distance for a wide range of people. Notably, different solutions were proposed for older adults with neurodegenerative diseases [8,9,10].

Brain-computer interfaces (BCIs) based on the motor imagery paradigm have been extensively studied in patients with a variety of neuromuscular disorders in order to facilitate recovery of neural functions. Their effectiveness has been confirmed especially for stroke patients [11,12,13]. The combination of BCIs and extended reality can provide patients with neurofeedback on their mental tasks [14]. In particular, sensory feedback helps them to self-regulate brain rhythms and promotes neural plasticity.

To be suitable for tele-rehabilitation, a system including BCI and extended reality must be non-invasive, wearable, portable, comfortable, and generally ready for use outside controlled lab environments [15,16]. These requirements are often fulfilled by exploiting electroencephalography (EEG) to acquire brain signals [17]. EEG systems for "out-of-lab" acquisitions are increasingly being developed [18]. These are mainly wireless devices with a reduced number of sensors that allow freedom of movement and improve usability [19,20]. Moreover, instead of the standard wet sensors, dry sensing could be used to increase user comfort while attempting to keep high metrological performance [21,22,23].

Previous studies already proposed EEG devices relying on dry sensors. For example, new systems were proposed in [24,25,26], while consumer-grade dry electrodes were evaluated in [27,28]. A BCI with a soft robotic glove was proposed in [29] for stroke rehabilitation, but it adopted a medical EEG device with 24 wet sensors. Moreover, in [30], classification was attempted in different dry sensing setups (from 8 to 32 sensors) and with different signal processing approaches. A wireless, high-density, medical-grade EEG system was used, and a drop in performance was observed when 8 channels were used. However, neurofeedback was not investigated as a means to enhance motor imagery detection. Recently, the feasibility of a wearable BCI for neurorehabilitation at home was investigated in [31]. Healthy participants received remote instructions on the use of an EEG device with 16 dry sensors. Visual feedback consisted of a bar fluctuating vertically up or down from the midline. Half of the participants succeeded in controlling the BCI during six sessions.

On the contrary, in a previous related work [32], the authors investigated a motor imagery-based BCI with only three EEG acquisition channels. Three feedback modalities, namely visual, haptic, and visual-haptic, were compared to improve motor imagery detection, and the results highlighted a statistically significant improvement when using neurofeedback. In that study, participants generally preferred the visual and visual-haptic feedback modalities. Nonetheless, wet sensors were used and the number of participants in the experiments was limited. The present study tries to overcome these limitations by adopting a new version of the system. In particular, a ready-to-use medical device was adopted, so that the final system can be included in tele-rehabilitation programs.

Therefore, a fully wearable motor imagery-based BCI was implemented by relying on a Class IIA EEG device with 8 dry sensors, certified according to the Medical Device Regulation. The effectiveness of visual-haptic neurofeedback in discriminating between left hand and right hand motor imagery was also investigated over 5 experimental sessions for each of the 27 enrolled subjects. To this aim, the subjects were divided into a control group and a neurofeedback group. Preliminary results were presented in [33]; they are extended here by considering a larger subject cohort and the results of questionnaires administered to evaluate usability. The remainder of the paper is organised as follows:

Section 2 presents an overview of the proposed system, with a focus on the experimental protocol and outcome measures;

Section 3 shows system performance in experiments;

Section 4 concludes the manuscript by discussing the results.

2. Materials and methods

This section discusses the design, the implementation, and the validation methods for a wearable BCI relying on motor imagery, EEG with dry sensors, and online neurofeedback. An overview of the system is given together with the adopted hardware. Then, EEG processing is described in association with the experimental protocol. The questionnaires adopted to assess the usability of the system and the imaginative abilities of its users are also introduced. Finally, the tests considered within the statistical analysis are recalled.

2.1. System overview

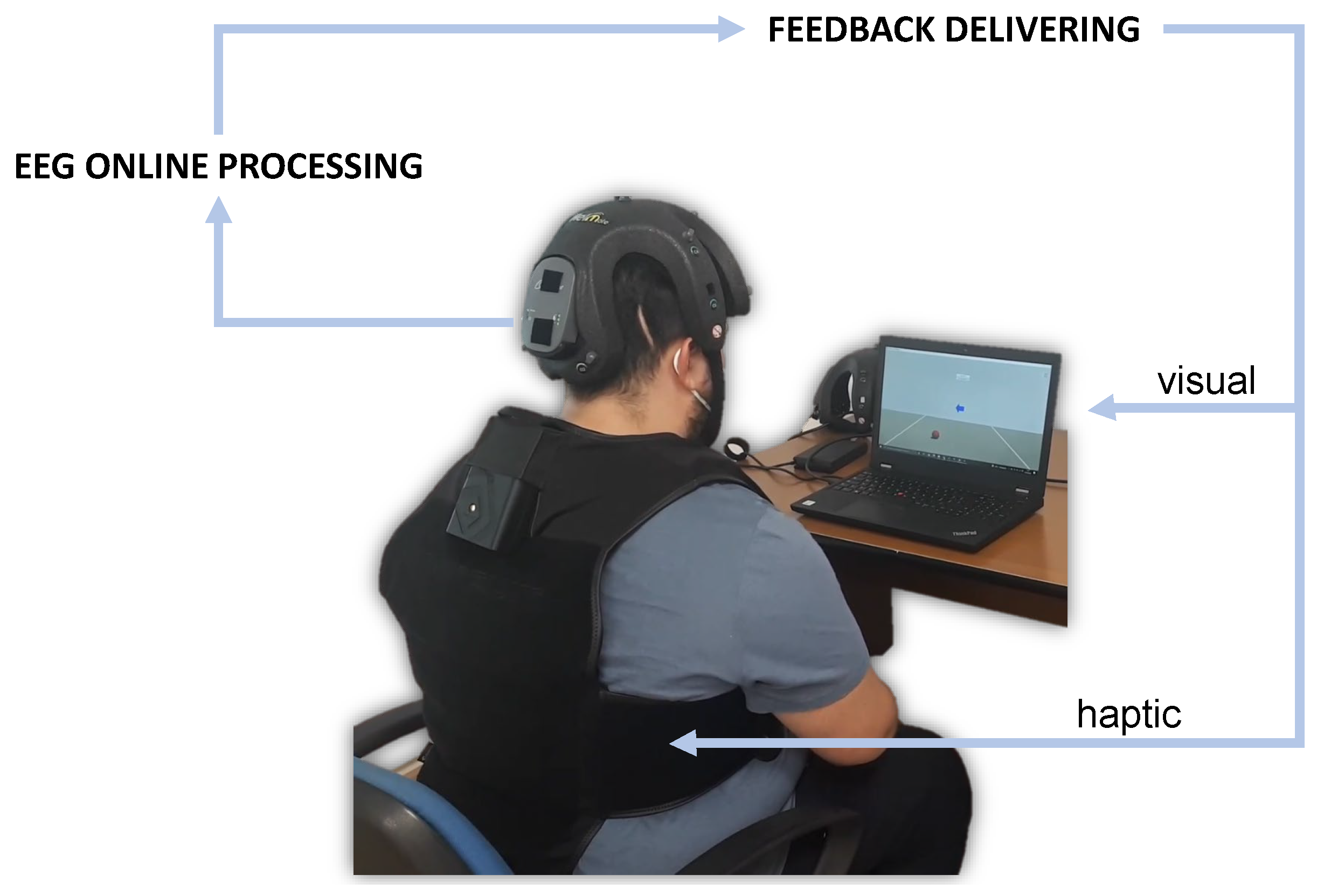

The present study proposes a new system integrating a BCI with neurofeedback in extended reality (Figure 1). The system is intended for daily-life applications, and notably for tele-rehabilitation purposes.

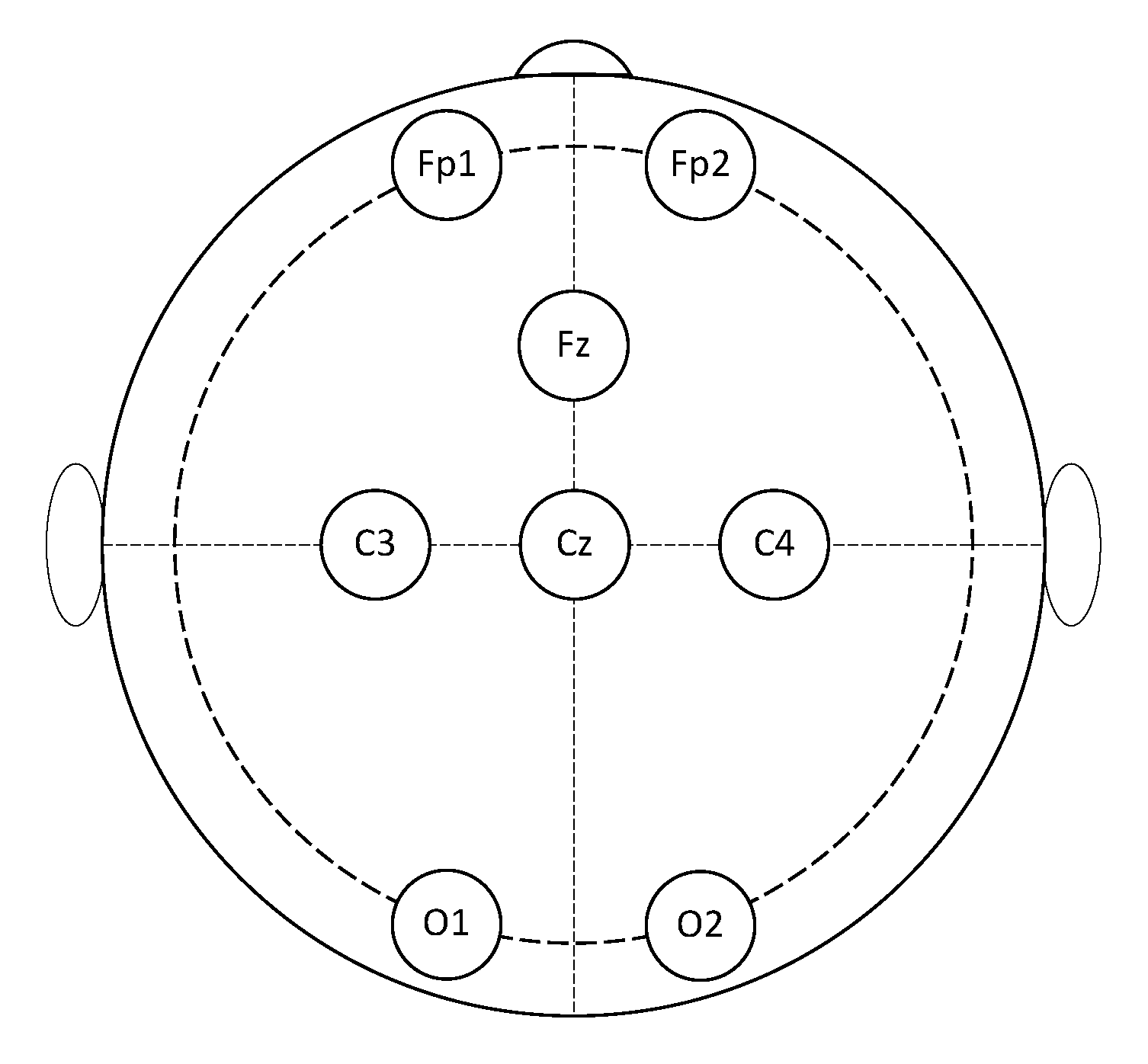

In the system, brain signals were acquired by using the Helmate EEG device by ab medica®. This is a Class IIA device certified according to the Medical Device Regulation (EU) 2017/745. It has eight measuring channels plus one reference channel and one bias channel. Ten dry sensors with different shapes can be chosen according to the zone of the scalp to reach. Moreover, different configurations for the channels’ location could be exploited. In this study, the eight measuring channels were located at FP1, FP2, Fz, Cz, C3, C4, O1, and O2, while the reference and bias sensors were placed in the frontal region at AFz and FPz, respectively (Figure 2).

Data were collected at a sampling rate of 512 Sa/s and transmitted via Bluetooth to a custom Simulink model for EEG processing. In Simulink, features from the EEG signal were extracted by means of the Filter Bank Common Spatial Pattern (FBCSP) [34] and classified by means of the Naive Bayesian Parzen Window (NBPW). The latter returns two outputs: the class to which the multi-channel EEG signal is assigned (right or left), and the probability associated with that class.

The classification outputs were used to drive multimodal feedback through a custom Unity application. The neurofeedback consisted of a combination of visual and haptic feedback. For the visual feedback, a virtual ball was shown on a display (Figure 3). The ball could roll to the left or to the right of the virtual environment according to the EEG classification. In detail, while the assigned class determined the direction, the related score determined its velocity. The TactSuit X40 from bHaptics Inc. was instead used for the haptic feedback. This is a wearable and portable vest equipped with 40 individually controllable vibrotactile motors. The vibration was again modulated by the classification outputs. More specifically, the pattern could move from the centre of the torso (front side) to the right or to the left in accordance with the assigned class. Meanwhile, the related score determined the vibration intensity. It is worth noting that only the bottom motors were used, in order to minimize vibration artifacts on the EEG signals.
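As an illustration of the mapping described above, the following Python sketch converts the two classifier outputs (class and associated probability) into a feedback command. It is only a minimal sketch and not the Unity implementation used in the study: the names `FeedbackCommand` and `map_classifier_output`, the `max_speed` parameter, and the linear scaling of speed and vibration with the probability are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class FeedbackCommand:
    direction: int     # -1 = left, +1 = right
    ball_speed: float  # virtual-ball speed (arbitrary units)
    vibration: float   # normalised vibration intensity in [0, 1]


def map_classifier_output(label: str, probability: float,
                          max_speed: float = 1.0) -> FeedbackCommand:
    """Map (class, probability) to a visual-haptic feedback command.

    Assumption: speed and vibration intensity scale linearly with the
    class probability; the text only states that the score modulates them.
    """
    if label not in ("left", "right"):
        raise ValueError("label must be 'left' or 'right'")
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    direction = -1 if label == "left" else 1
    return FeedbackCommand(direction=direction,
                           ball_speed=max_speed * probability,
                           vibration=probability)
```

In this sketch the assigned class only fixes the sign of the movement, while the probability acts as a gain shared by the visual and haptic channels.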

2.2. Experimental protocol

The described BCI was exploited within a cue-based (synchronous) paradigm. This implied that the user had to imagine a movement or be relaxed in accordance with given indications (the cues). The indications were delivered visually by means of the Unity3D platform. Two motor imagery tasks were possible, namely imagining the movement of the left hand or imagining the movement of the right hand. In case of neurofeedback, multimodal feedback was delivered to the user in response to the ongoing mental task. However, pure motor imagery (no feedback) was required to train the classifier adopted for the online processing.

In the experimental protocol, subjects were divided into two groups and involved in five one-hour experimental sessions over five weeks. The subjects assigned to the control group never received feedback. Instead, for the subjects of the neurofeedback group, pure motor imagery was recorded at the beginning of each session, and then neurofeedback was provided by means of the trained EEG classifier. The protocol for the two groups is described in detail in the following.

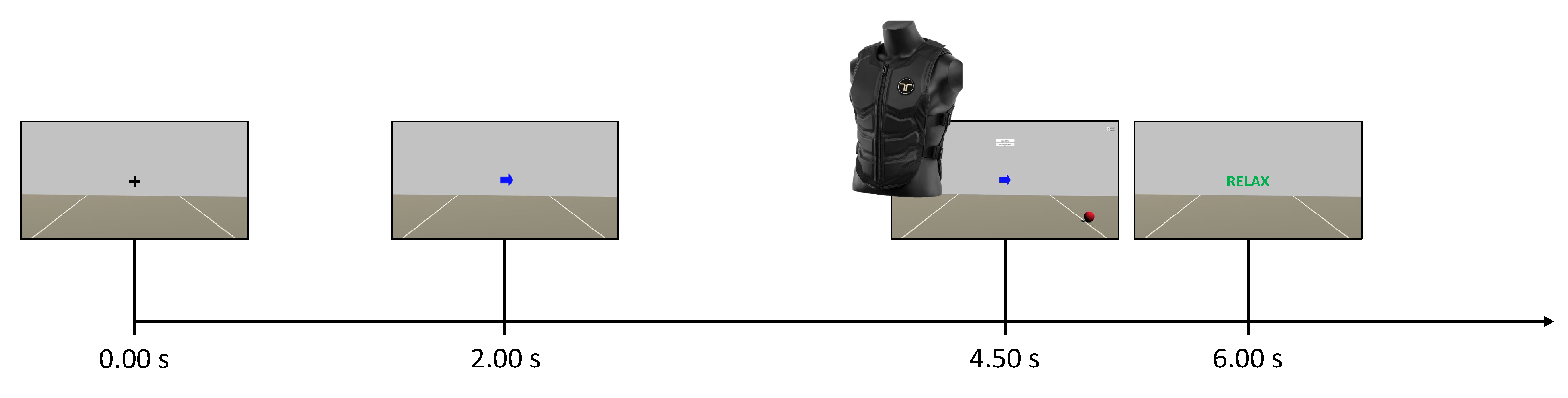

2.2.1. Control group

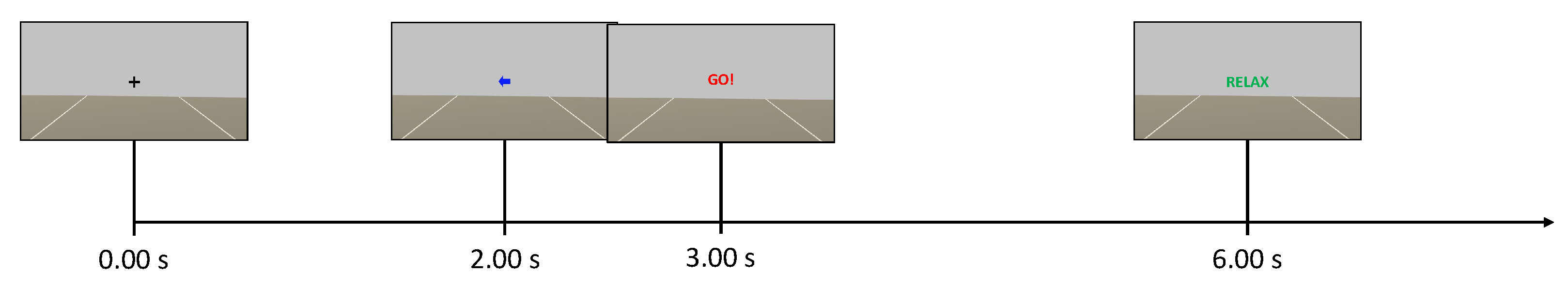

The Unity application dictated the timing within the experimental session. A total of six runs were recorded, and each run consisted of 30 trials. Each trial consisted of a fixation cross visualized from 0.00–2.00 s, a cue (left or right arrow) visualized from 2.00–3.25 s, the word "GO!" visualized from 3.00–6.00 s, and the word "RELAX" visualized for a random time window of 1.00–2.00 s (Figure 3). The sequence of left and right cues and the duration of the final "RELAX" were randomized across trials to avoid biases. The EEG was acquired as a continuous stream during each run, but it was never processed online, and thus the control group did not receive any feedback. The runs were separated by short breaks, with a longer break between the first three runs (phase 1) and the last three runs (phase 2) of a session.
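The trial structure described above can be sketched in Python as follows. This is a hedged illustration rather than the actual Unity logic: the function name `build_run` is hypothetical, times are assumed to be in seconds, the "RELAX" period is assumed to start when "GO!" ends, and the equal number of left and right cues per run is an assumption (the text only states that the sequence was randomized).

```python
import random


def build_run(n_trials: int = 30, seed=None):
    """Return a list of trials with a randomized cue order and RELAX duration."""
    rng = random.Random(seed)
    # Assumption: left and right cues are balanced within a run.
    cues = ["left"] * (n_trials // 2) + ["right"] * (n_trials - n_trials // 2)
    rng.shuffle(cues)
    trials = []
    for cue in cues:
        relax_duration = rng.uniform(1.00, 2.00)  # random RELAX window
        trials.append({
            "cue": cue,
            "fixation": (0.00, 2.00),    # fixation cross
            "cue_window": (2.00, 3.25),  # left or right arrow
            "go": (3.00, 6.00),          # "GO!" on screen
            # Assumption: RELAX starts when "GO!" disappears.
            "relax": (6.00, 6.00 + relax_duration),
        })
    return trials
```

With these assumptions, a single trial lasts between 7 and 8 seconds, so a run of 30 trials takes roughly four minutes.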

2.2.2. Neurofeedback group

The first three runs of each session (phase 1) were carried out as done for the control group. However, during the time break between the phases, the EEG data from phase 1 were used to train the online classifier. This classifier was trained from scratch for each subject and for each session. Subsequently, participants of this group performed three further runs (phase 2) during which they received online multimodal feedback in response to motor imagery. The goal of the participants in the neurofeedback group was to move the visual feedback ball over the white lines of the game environment and to maximally activate the motors of the vest on the back of the respective side (i.e., left or right). In this case, the timing was slightly changed because participants were asked to start imagining from the appearance of the cue at

. Then, they received the feedback from 4.50

–6.00

(

Figure 4). The instant

depended on the fact that the system actually started to classify at

, and the time window for online processing was

wide. Finally, the feedback could only move if the label obtained from the online classifier matched the assigned task (positive bias). Otherwise, no feedback was provided and the virtual ball was dragged towards the centre of the screen while the intensity of the vibration was interrupted. Further details on that are discussed in the next subsection.
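The positive bias rule can be summarised with a short Python sketch. The function name `biased_feedback` and the dictionary layout are hypothetical; the sketch only encodes the rule stated above, namely that feedback is delivered when the online label matches the assigned task and is withheld otherwise.

```python
def biased_feedback(assigned_task: str, predicted_label: str,
                    probability: float) -> dict:
    """Apply the positive bias: feedback only for correct classifications."""
    if predicted_label == assigned_task:
        # Correct label: feedback moves towards the assigned side,
        # modulated by the class probability.
        return {"active": True, "direction": predicted_label,
                "intensity": probability, "recentre_ball": False}
    # Wrong label: no feedback, ball dragged to the centre, vibration stopped.
    return {"active": False, "direction": None,
            "intensity": 0.0, "recentre_ball": True}
```

With this rule, the user never sees or feels movement towards the wrong side.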

2.3. EEG processing

The FBCSP with the NBPW classifier was used not only for online processing, but also for offline processing of EEG data. With reference to the neurofeedback group, after acquiring the EEG in the first half-session, data processing was needed to train the online classification algorithm. Details on the processing pipeline can be found in [32,33,34]. Specifically for online processing, the FBCSP-based approach was adapted so that the EEG stream was processed with a sliding window covering the motor imagery period.

Based on the results of previous studies [32,33], the time-width for the sliding windows was fixed at

, and this was used to span the interval from 0.00

–7.00

with a

shift. A five-fold cross-validation with five repetitions was used to identify the best portion of the EEG trials for training the algorithm. This best

-wide window was selected as the one associated with the highest mean classification accuracy and the lowest difference between classification accuracies per class. Possible windows were extracted from the motor imagery window by considering all trials of phase 1.
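The window selection step can be illustrated with the following Python sketch. It is written under explicit assumptions: the window width and shift used in the calls are placeholders (2.0 and 0.25), not the values of the study; the per-class accuracies are assumed to be already averaged over the cross-validation folds; and the two criteria are combined lexicographically (highest mean accuracy first, smallest difference between classes as tie-breaker), which is one possible reading of the criterion stated above. The function names are hypothetical.

```python
def window_starts(t_start: float, t_end: float,
                  width: float, shift: float) -> list:
    """Start times of all windows of a given width that fit in [t_start, t_end]."""
    starts = []
    k = 0
    # Small tolerance guards against floating-point rounding at the last window.
    while t_start + k * shift + width <= t_end + 1e-9:
        starts.append(round(t_start + k * shift, 6))
        k += 1
    return starts


def select_best_window(results: dict) -> float:
    """Pick the best window start from {start: (acc_left, acc_right)}.

    Assumption: maximise the mean accuracy, then minimise the absolute
    difference between the two per-class accuracies.
    """
    def ranking(item):
        _, (acc_left, acc_right) = item
        mean_acc = (acc_left + acc_right) / 2
        return (mean_acc, -abs(acc_left - acc_right))

    best_start, _ = max(results.items(), key=ranking)
    return best_start
```

For instance, with `results = {0.0: (0.5, 0.75), 1.0: (0.625, 0.625)}` both windows have the same mean accuracy, so the second one is selected because its per-class accuracies are balanced.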

Finally, at the end of the experiments, all data were processed offline to classify them and assess the related accuracy. Differently from above, the Artifact Subspace Reconstruction (ASR) technique was also applied in post-processing [35]. This is a relatively recent technique for artifact removal, exploited here to prepare the data prior to feature extraction and classification. ASR uses an artifact-free data segment as a baseline and then corrects the original data by calculating a covariance matrix and retrieving statistics to identify and remove artifacts. Notably, its usefulness for a setup with eight EEG channels is supported by previous studies [36].

2.4. Outcome measures

To evaluate the usability of the proposed system and the participants’ imaginative abilities, the following questionnaires were administered to participants of both groups:

- MIQ-3 [37]: this is the most recent version of the Movement Imagery Questionnaire [38] and of the Movement Imagery Questionnaire-Revised [39]. It is a 12-item questionnaire to assess an individual’s ability to imagine four movements using internal visual imagery, external visual imagery, and kinaesthetic imagery. The rating scales range from 1 (very difficult to see/feel) to 7 (very easy to see/feel). The MIQ-3 has good psychometric properties, internal reliability and predictive validity.

- SUS (System Usability Scale) [40]: this is one of the most robust and tested psychometric tools for user-perceived usability. The SUS score is a value between 0 and 100, with higher values indicating better usability. According to Bangor et al. [41], it is possible to adopt a 7-point adjectival scale (from "worst imaginable" to "best imaginable") for the SUS score. Another variation, proposed in [42], is to consider the score in terms of "acceptable" (value above 70) or "not acceptable" (value below 50). The range from 50–70 is instead "marginally acceptable".

- NASA-TLX (NASA Task Load Index) [43]: this is a subjective, multidimensional evaluation tool that assesses perceived workload while performing a task or an activity. The original version also includes a weighting scheme to account for individual differences. However, the most common change made to the questionnaire is the elimination of these weights in order to simplify its application [44]. In this work, it was administered without weights.

- UEQ-S (User Experience Questionnaire-short form) [45]: this is a standardised questionnaire to measure the user experience of interactive products. It distinguishes between pragmatic and hedonic quality aspects. The first describes interaction qualities that relate to the tasks or goals the user wants to achieve when using the product. The second describes aspects related to pleasure or enjoyment while using the product. Values between -0.8 and +0.8 represent a neutral evaluation of the corresponding scale, values greater than +0.8 represent a positive evaluation, while values lower than -0.8 represent a negative evaluation.
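The score interpretations listed above can be made concrete with a short Python sketch. The function names are hypothetical. The SUS computation follows the standard scoring rule of the scale (ten items rated from 1 to 5, odd items contributing the rating minus 1, even items contributing 5 minus the rating, sum multiplied by 2.5), which is not restated in this paper; the acceptability bands and the UEQ-S thresholds are those reported in the list.

```python
def sus_score(responses) -> float:
    """Standard SUS score (0-100) from ten ratings on a 1-5 scale."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten ratings between 1 and 5")
    total = 0
    for item, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    return total * 2.5


def sus_acceptability(score: float) -> str:
    """Acceptability bands as reported in the text (above 70, below 50)."""
    if score > 70:
        return "acceptable"
    if score < 50:
        return "not acceptable"
    return "marginally acceptable"


def ueq_s_evaluation(value: float) -> str:
    """UEQ-S scale interpretation with the +/-0.8 neutral band."""
    if value > 0.8:
        return "positive"
    if value < -0.8:
        return "negative"
    return "neutral"
```

For example, a participant answering 3 to every SUS item obtains a score of 50, which falls in the "marginally acceptable" band.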

The MIQ-3 was administered twice: before the first experimental session and at the end of the last experimental session. On the contrary, the other questionnaires were administered only at the end of the experimental sessions. In addition, during each experimental session, the participants were also given a short interview to assess their physical and mental state. This interview was adapted from the questionnaire proposed in [46], with some modifications needed to introduce aspects associated with neurofeedback [32].

2.5. Statistical Analysis

To compare classification accuracies between sessions and groups, a repeated-measures ANOVA test was used under the assumption of normally distributed data and homoscedasticity. The Jarque-Bera test was used to check the normality assumption, while homoscedasticity was tested by means of Bartlett’s test. In case of a violation of the homoscedasticity assumption, Welch’s correction could be applied before the ANOVA. Meanwhile, when data were not normally distributed, the Kruskal-Wallis non-parametric test was used instead of the ANOVA.

The comparison of MIQ-3 scores between the two groups at the two endpoints (before starting and at the end of the sessions) was conducted via the Mann-Whitney U test [47]. In addition, a Wilcoxon signed-rank test was used to compare paired data of the MIQ-3 scale within each group (control and neurofeedback). Similarly, a comparison between the two groups was carried out in terms of SUS, NASA-TLX, and UEQ-S scores at the end of the sessions. In each case, test-specific assumptions were checked before applying the test.

Table 1.

Summary of participants information for control and neurofeedback groups. BCI experience: experience with brain-computer interfaces in active paradigms, passive paradigms, reactive paradigms, multiple paradigms, or no experience. NF experience: previous experience with neurofeedback, no experience.


| | Control | Neurofeedback |
|---|---|---|
| sex | male: 31%, female: 69% | male: 57%, female: 43% |
| handedness | right: 85%, left: 15%, both: 0% | right: 79%, left: 14%, both: 7% |
| practicing sport | yes: 38%, no: 62%, professional: 0% | yes: 64%, no: 36%, professional: 0% |
| BCI experience | no: 38.5%, active: 8%, passive: 15%, reactive: 0%, multiple: 38.5% | no: 43%, active: 7%, passive: 21%, reactive: 0%, multiple: 29% |
| NF experience | yes: 46%, no: 54% | yes: 36%, no: 64% |

The statistical analyses were performed by using MATLAB (version 2021b), and the significance level was set to α = 5% (probability of a false positive, or type-I error).

3. Results

Results are reported in this section after commenting on the sample of participants in the experimental campaign. Experimental data were analysed in accordance with the methods of Section 2. Then, classification accuracies were exploited to assess the performance of the system and to describe its limits. The results are discussed in conjunction with the answers to the questionnaires, especially to address the usability of the system in tele-rehabilitation.

3.1. Participants

A sample of 27 healthy volunteers was enrolled in the study (mean age: 26, standard deviation: 2). The study was approved by the Ethical Committee of Psychological Research of the Department of Humanities of the University of Naples Federico II, and all the participants provided written informed consent before starting the experiments.

To investigate multimodal feedback, roughly half of the participants were assigned to the "control group" and half to the "neurofeedback group". The two groups were balanced by age. In the control group, four subjects were males and nine were females. Meanwhile, in the neurofeedback group, eight subjects were males and six were females. All participants used the wearable system with dry sensors while seated in front of a display for visual indications and, where applicable, feedback. Participants with affected motor and/or cognitive functions were excluded. However, it is worth mentioning that one subject (C08) reported past epileptic seizures during childhood.

Most subjects were right-handed, with the exception of two left-handed subjects in each group and one ambidextrous subject in the neurofeedback group. More than 60% of the participants in the neurofeedback group practised sport, while less than 40% of the participants in the control group did. No participant played sport at a professional level.

More than 50% of the participants had already experienced some BCI paradigms, and some subjects also had previous experience with neurofeedback. Such information is detailed in Table 1, along with a summary of the previous information about sex, handedness, and sport practice.

3.2. System performance

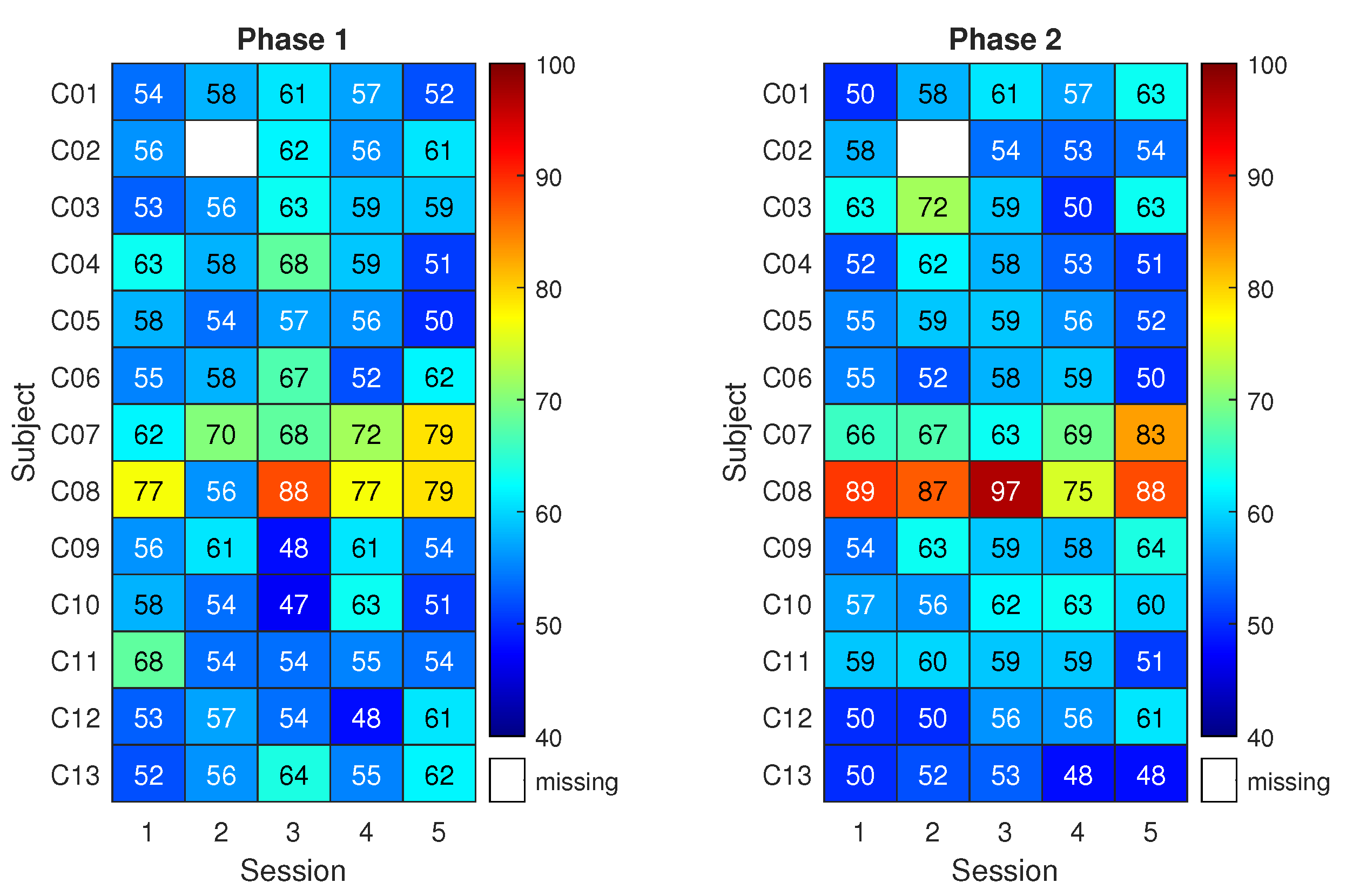

Classification results are shown in Figure 5 for the control group. The matrix on the left reports the classification accuracy obtained on the first three runs of pure motor imagery (phase 1) across the five sessions (x-axis) and for the 13 subjects (y-axis). The matrix on the right reports the analogous results for the last three runs of pure motor imagery (phase 2). Higher classification accuracy is indicated by red colour. Meanwhile, a white space refers to a missing result caused by corrupted data or a skipped session.

Given that 90 trials were used for each classification result, the classification accuracy of a random classifier would be modeled by a binomial distribution with mean equal to 50% (the well-known chance level) and a 95% coverage interval spanning from 40–59% (related to the number of trials) [48]. Notably, this implies that only classification accuracy values above 59% can be considered non-random with α = 5%. Therefore, for subjects in the control group, the classification accuracy was compatible with randomness except in a few cases. Overall, the highest mean classification accuracy across subjects was about 62%, with an associated type A uncertainty of 3%, and it was obtained both in phase 2 of session 2 and in phase 1 of session 3.
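The chance-level interval mentioned above can be checked with a short Python sketch that uses only the standard library. The function names are hypothetical, and the interval is taken between the 2.5% and 97.5% quantiles of the binomial distribution, which is one common convention; the exact bounds (in particular the upper one) may differ by about one trial depending on the convention adopted in [48].

```python
from math import comb


def binomial_cdf(k: int, n: int, p: float = 0.5) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))


def chance_interval(n_trials: int, coverage: float = 0.95, p: float = 0.5):
    """Central coverage interval of the accuracy of a random classifier."""
    tail = (1 - coverage) / 2
    # Smallest number of correct trials whose CDF reaches each quantile.
    lower = next(k for k in range(n_trials + 1)
                 if binomial_cdf(k, n_trials, p) >= tail)
    upper = next(k for k in range(n_trials + 1)
                 if binomial_cdf(k, n_trials, p) >= 1 - tail)
    return lower / n_trials, upper / n_trials
```

Calling `chance_interval(90)` gives bounds close to the interval quoted in the text, and the interval narrows as the number of trials increases, which is why the threshold for non-random accuracy depends on the number of trials per classification result.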

Only subjects C07 and C08 deviated from the general trend. Notably, their classification accuracies exceeded 70% in several cases, an empirical threshold for acceptable performance in motor imagery. Interestingly, C08 was the participant reporting past epileptic seizures.

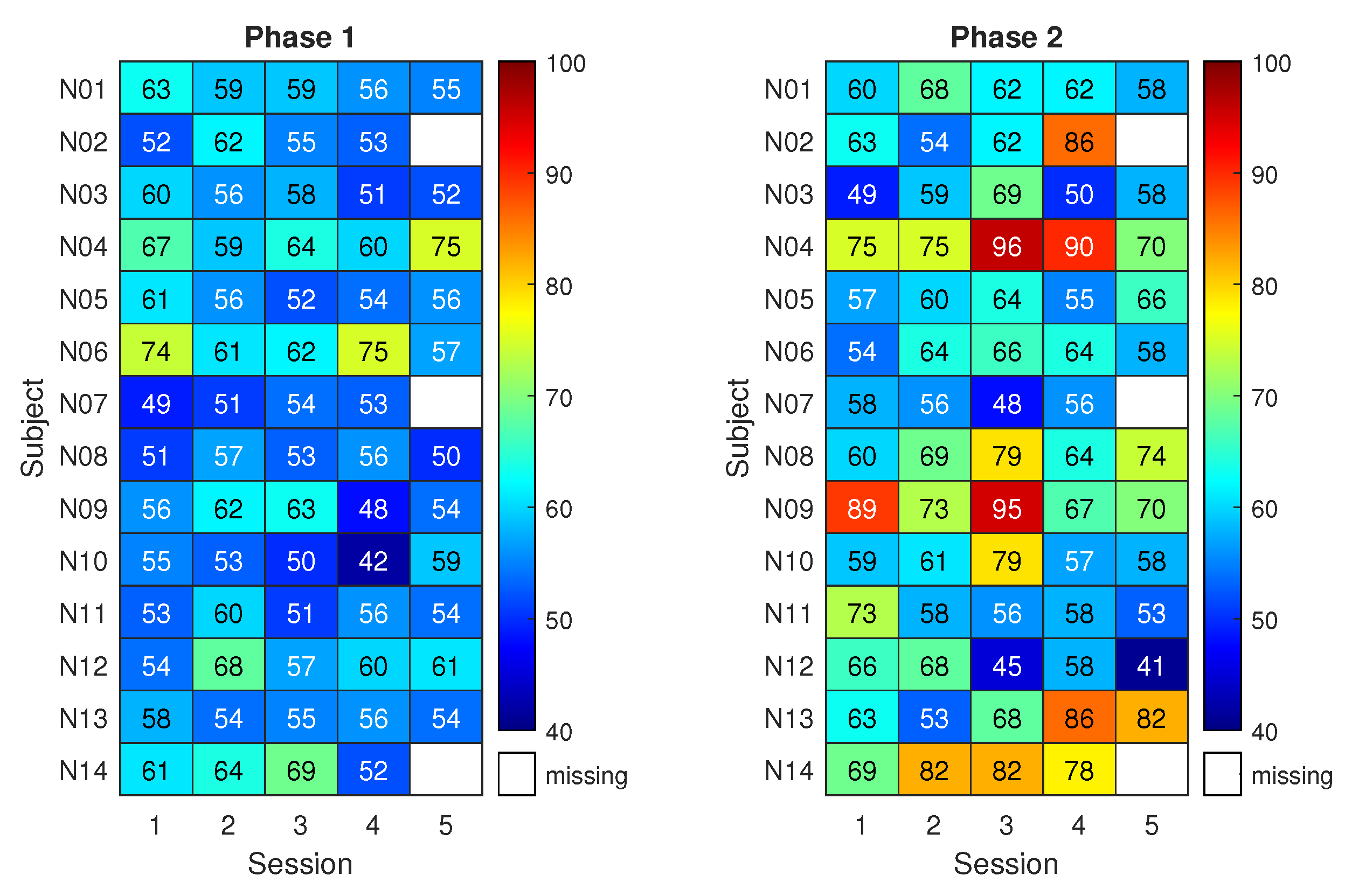

On the other hand, Figure 6 shows the classification results for the neurofeedback group. The matrix on the right refers to three runs with neurofeedback (phase 2 for the neurofeedback group).

The results of phase 1 for the neurofeedback group appear similar to those of the control group, with classification accuracies close to the chance level. Nonetheless, during phase 2, eight subjects out of 14 exceeded the 70% accuracy threshold at least once. The subjects reached the respective peak accuracy in different sessions. This led to a maximum average classification accuracy among subjects of 69% with 3% uncertainty.

Statistical testing suggested that the highest classification performance of the neurofeedback group in phase 2 does not differ significantly from the highest of the control group, though it is 7% higher on average. Instead, a statistically significant difference between the two groups was found when focusing on the third session of phase 2 (p < 0.05). Moreover, classification accuracy in phase 2 was significantly higher than that of phase 1 in the fourth session of the neurofeedback group (p < 0.005). Finally, when comparing all the classification accuracies (all subjects and all sessions) of the neurofeedback group with those of the control group, the improvement given by neurofeedback in phase 2 is statistically significant (p < 0.005).

3.3. Questionnaires

As mentioned in Section 2, the MIQ-3 was administered twice to each subject, i.e., before the first and at the end of the experimental sessions. On the scale from 1 to 7, the mean scores were above 5 already at the first endpoint, with the only exception of kinaesthetic imagery, whose mean score equaled 4 for both groups. This implies that subjects generally considered it easy, or at least not difficult, to see/feel the involved movements. The Wilcoxon signed-rank test did not reveal statistically significant variations in MIQ-3 paired scores within each group. The same applies to the Mann-Whitney U test when considering differences between the two groups before and after the experiments.

On the other hand, the SUS scores suggest that the system was considered acceptable by both groups (above 70). Specifically, the results are equal to 78 ± 10 and 75 ± 11 for the control and neurofeedback groups, respectively. In addition, the overall results of the UEQ-S equaled 1.60 ± 0.64 for the control group and 1.70 ± 0.80 for the neurofeedback group. No statistically significant differences between the groups were detected (p = 0.40 for SUS and p = 0.98 for UEQ-S).

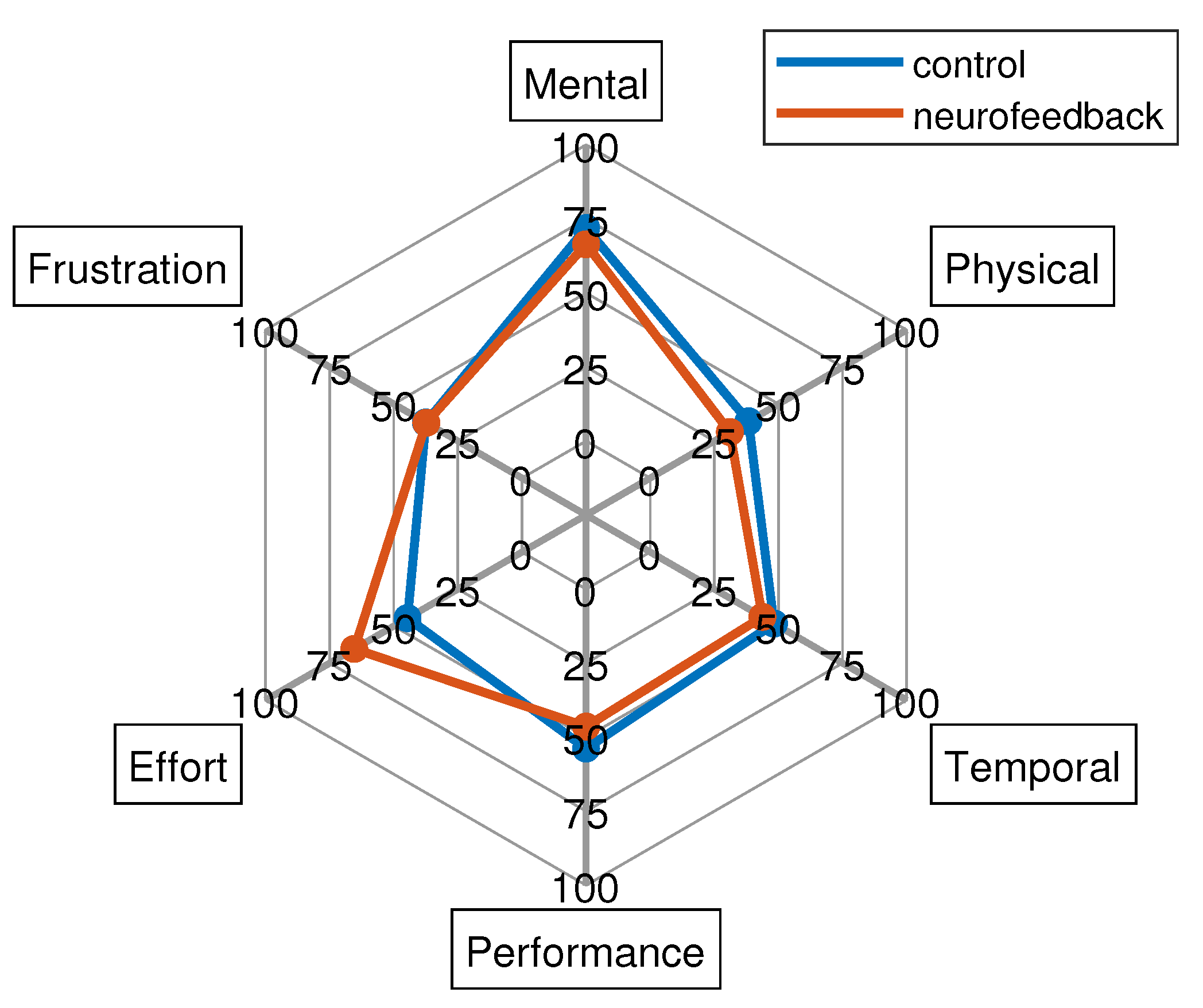

Finally, the NASA-TLX results are reported in Figure 7. This shows similar subscale results for both groups, with the exception of the effort. In particular, for the latter dimension, the Mann-Whitney U test found statistically significant differences between the two groups (p < 0.05), indicating that the neurofeedback group perceived more effort than the control group. This was anticipated due to the need to engage with neurofeedback. The mental demand was high (around 75 for both groups), while the frustration level, the performance, as well as the temporal and physical demand were low.

4. Discussion

Motor imagery-based BCIs present the possibility of novel rehabilitation paradigms, either substituting or supplementing current therapy protocols. However, several training sessions are typically required to successfully control a BCI based on motor imagery. Moreover, BCI illiteracy is a well-known problem in literature, which specifically prevents a widespread employment of such a system. In such a framework, this study investigated the use of neurofeedback to help a user to successfully control the system even with only a few sessions. The feedback was implemented in extended reality, and the aim was to develop a BCI suitable for daily-life and tele-rehabilitation. Such motivations led to the adoption of a wearable and portable EEG exploiting dry sensors, as well as employing a wearable and portable actuator for haptic feedback. Although only 8 dry sensors were employed, the use of multimodal feedback led to an increase in system performance. In comparison, the subjects of the control group showed no significant improvement across the sessions, with the only exception of subjects C07 and C08, who achieved good results even without any feedback.

Figure 7.

NASA-TLX results for both control and neurofeedback groups.


With the short interview administered during each experimental session, it was also possible to monitor the subjects’ mental and physical state during the sessions, as well as the type of imagined movement. In general, the most common imagined movements were squeezing a ball, moving the arm, tapping, grasping an object, dribbling, or playing the piano. Nonetheless, it is worth noting that six out of 13 subjects in the control group changed the type of movement imagined during the sessions and, among these, three subjects also switched between internal, external, and kinaesthetic imagery. Seven out of 14 subjects in the neurofeedback group changed the type of imagined movement during the sessions and, among these, four subjects also changed between internal, external, and kinaesthetic imagery. According to the results, one can suspect that low performance levels were also caused by changes in the imagined movement during the sessions, especially when feedback was not provided. Therefore, such an aspect should be kept under more rigorous control in future protocols.

Overall, the SUS and UEQ-S questionnaires showed that the system is user-friendly and that subjects of both groups had a positive experience. Contrary to expectations, the MIQ-3 did not show differences between groups and sessions, as the imagination scores reported by the participants were high both before and after the experiments. A possible explanation would be that such a questionnaire is not directly linked to left/right hand movements, which are instead common motor imagery tasks. Therefore, its scale may not be sensitive enough for the tasks of this work, although no other standard scale exists for this purpose. Finally, the NASA-TLX effort was significantly higher for the neurofeedback group. This result may be explained by the constant engagement demanded of these subjects, who received a response to their mental state during the online experiment.

With respect to the feedback, a different bias type could also be proposed. Notably, instead of providing the feedback only in case of correct classification (the positively biased feedback of the current proposal), an adaptive bias could maximise system performance and subject learning [49]. On the other hand, providing negative feedback could also increase the effort or the frustration for the subject. In association with the feedback, future developments could also consider an improvement of the classification algorithm, so as to enhance performance across sessions [50] and hence provide better feedback.

Finally, using dry sensors indeed increased the comfort for the participants. However, as a drawback, EEG signals were more strongly affected by artifacts. The main artifacts superimposed on the EEG signal were heartbeats (especially at O1 and O2), breathing, ocular artifacts, and sweat artifacts (especially at FP1 and FP2). Moreover, vibration-induced artifacts occasionally appeared when the feedback was provided with the haptic suit. Although ASR applied offline removed most artifacts, suit vibration can be a limitation when using dry sensors, and a different type of haptic feedback could be explored in the future. Given the possible improvements, its full wearability, and the rehabilitation benefits of motor imagery, the investigated system will be addressed to tele-rehabilitation purposes because of the perceived usability and the substantial improvement in classification accuracy revealed in the neurofeedback group with respect to the control group.

Acknowledgments

The Authors thank Emanuele Cirillo, Stefania Di Rienzo, and Bianca Sorvillo for their precious help in carrying out the experimental campaign. Special thanks also go to all the volunteers who took part in the experiments.

References

1. Zampolini, M.; Todeschini, E.; Hermens, H.; Ilsbroukx, S.; Macellari, V.; Magni, R.; Rogante, M.; Vollenbroek, M.; Giacomozzi, C.; et al. Tele-rehabilitation: present and future. Annali dell’Istituto superiore di sanita 2008, 44, 125–134.

2. Piron, L.; Tonin, P.; Trivello, E.; Battistin, L.; Dam, M. Motor tele-rehabilitation in post-stroke patients. Medical informatics and the Internet in medicine 2004, 29, 119–125.

3. Schröder, J.; Van Criekinge, T.; Embrechts, E.; Celis, X.; Van Schuppen, J.; Truijen, S.; Saeys, W. Combining the benefits of tele-rehabilitation and virtual reality-based balance training: a systematic review on feasibility and effectiveness. Disability and Rehabilitation: Assistive Technology 2019, 14, 2–11.

4. Coccia, A.; Amitrano, F.; Donisi, L.; Cesarelli, G.; Pagano, G.; Cesarelli, M.; D’Addio, G. Design and validation of an e-textile-based wearable system for remote health monitoring. Acta Imeko 2021, 10, 220–229.

5. Cipresso, P.; Serino, S.; Borghesi, F.; Tartarisco, G.; Riva, G.; Pioggia, G.; Gaggioli, A.; et al. Continuous measurement of stress levels in naturalistic settings using heart rate variability: An experience-sampling study driving a machine learning approach. ACTA IMEKO 2021, 10, 239–248.

6. Mbunge, E.; Muchemwa, B.; Batani, J.; et al. Sensors and healthcare 5.0: transformative shift in virtual care through emerging digital health technologies. Global Health Journal 2021, 5, 169–177.

7. Bulc, V.; Hart, B.; Hannah, M.; Hrovatin, B. Society 5.0 and a Human Centred Health Care. In Medicine-Based Informatics and Engineering; Springer, 2022; pp. 147–177.

8. Bacanoiu, M.V.; Danoiu, M. New Strategies to Improve the Quality of Life for Normal Aging versus Pathological Aging. Journal of Clinical Medicine 2022, 11, 4207.

9. Truijen, S.; Abdullahi, A.; Bijsterbosch, D.; van Zoest, E.; Conijn, M.; Wang, Y.; Struyf, N.; Saeys, W. Effect of home-based virtual reality training and telerehabilitation on balance in individuals with Parkinson disease, multiple sclerosis, and stroke: a systematic review and meta-analysis. Neurological Sciences 2022, pp. 1–12.

10. Belotti, N.; Bonfanti, S.; Locatelli, A.; Rota, L.; Ghidotti, A.; Vitali, A. A Tele-Rehabilitation Platform for Shoulder Motor Function Recovery Using Serious Games and an Azure Kinect Device. In dHealth 2022; IOS Press, 2022; pp. 145–152.

11. Mansour, S.; Ang, K.K.; Nair, K.P.; Phua, K.S.; Arvaneh, M. Efficacy of Brain–Computer Interface and the Impact of Its Design Characteristics on Poststroke Upper-limb Rehabilitation: A Systematic Review and Meta-analysis of Randomized Controlled Trials. Clinical EEG and neuroscience 2022, 53, 79–90.

12. Padfield, N.; Camilleri, K.; Camilleri, T.; Fabri, S.; Bugeja, M. A Comprehensive Review of Endogenous EEG-Based BCIs for Dynamic Device Control. Sensors 2022, 22, 5802.

13. Prasad, G.; Herman, P.; Coyle, D.; McDonough, S.; Crosbie, J. Applying a brain-computer interface to support motor imagery practice in people with stroke for upper limb recovery: a feasibility study. Journal of neuroengineering and rehabilitation 2010, 7, 1–17.

14. Wen, D.; Fan, Y.; Hsu, S.H.; Xu, J.; Zhou, Y.; Tao, J.; Lan, X.; Li, F. Combining brain–computer interface and virtual reality for rehabilitation in neurological diseases: A narrative review. Annals of physical and rehabilitation medicine 2021, 64, 101404.

15. Singh, A.; Hussain, A.A.; Lal, S.; Guesgen, H.W. A comprehensive review on critical issues and possible solutions of motor imagery based electroencephalography brain-computer interface. Sensors 2021, 21, 2173.

- Arpaia, P.; Callegaro, L.; Cultrera, A.; Esposito, A.; Ortolano, M. Metrological characterization of consumer-grade equipment for wearable brain–computer interfaces and extended reality. IEEE Transactions on Instrumentation and Measurement 2021, 71, 1–9. [CrossRef]

- Teplan, M.; et al. Fundamentals of EEG measurement. Measurement science review 2002, 2, 1–11.

- Ienca, M.; Haselager, P.; Emanuel, E.J. Brain leaks and consumer neurotechnology. Nature biotechnology 2018, 36, 805–810. [CrossRef]

- Affanni, A.; Aminosharieh Najafi, T.; Guerci, S. Development of an eeg headband for stress measurement on driving simulators. Sensors 2022, 22, 1785. [CrossRef]

- Arpaia, P.; Callegaro, L.; Cultrera, A.; Esposito, A.; Ortolano, M. Metrological characterization of a low-cost electroencephalograph for wearable neural interfaces in industry 4.0 applications. In Proceedings of the 2021 IEEE International Workshop on Metrology for Industry 4.0 & IoT (MetroInd4.0&IoT). IEEE, 2021, pp. 1–5.

- Jeong, J.H.; Choi, J.H.; Kim, K.T.; Lee, S.J.; Kim, D.J.; Kim, H.M. Multi-domain convolutional neural networks for lower-limb motor imagery using dry vs. wet electrodes. Sensors 2021, 21, 6672. [CrossRef]

- Wang, F.; Li, G.; Chen, J.; Duan, Y.; Zhang, D. Novel semi-dry electrodes for brain–computer interface applications. Journal of neural engineering 2016, 13, 046021. [CrossRef]

- Hinrichs, H.; Scholz, M.; Baum, A.K.; Kam, J.W.; Knight, R.T.; Heinze, H.J. Comparison between a wireless dry electrode EEG system with a conventional wired wet electrode EEG system for clinical applications. Scientific reports 2020, 10, 1–14. [CrossRef]

- Zhang, Y.; Zhang, X.; Sun, H.; Fan, Z.; Zhong, X. Portable brain-computer interface based on novel convolutional neural network. Computers in biology and medicine 2019, 107, 248–256. [CrossRef]

- Lin, B.S.; Pan, J.S.; Chu, T.Y.; Lin, B.S. Development of a wearable motor-imagery-based brain–computer interface. Journal of medical systems 2016, 40, 1–8. [CrossRef]

- Lo, C.C.; Chien, T.Y.; Chen, Y.C.; Tsai, S.H.; Fang, W.C.; Lin, B.S. A wearable channel selection-based brain-computer interface for motor imagery detection. Sensors 2016, 16, 213. [CrossRef]

- Lisi, G.; Hamaya, M.; Noda, T.; Morimoto, J. Dry-wireless EEG and asynchronous adaptive feature extraction towards a plug-and-play co-adaptive brain robot interface. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2016, pp. 959–966.

- Lisi, G.; Rivela, D.; Takai, A.; Morimoto, J. Markov switching model for quick detection of event related desynchronization in EEG. Frontiers in neuroscience 2018, 12, 24. [CrossRef]

- Cheng, N.; Phua, K.S.; Lai, H.S.; Tam, P.K.; Tang, K.Y.; Cheng, K.K.; Yeow, R.C.H.; Ang, K.K.; Guan, C.; Lim, J.H. Brain-computer interface-based soft robotic glove rehabilitation for stroke. IEEE Transactions on Biomedical Engineering 2020, 67, 3339–3351. [CrossRef]

- Casso, M.I.; Jeunet, C.; Roy, R.N. Heading for motor imagery brain-computer interfaces (MI-BCIs) usable out-of-the-lab: Impact of dry electrode setup on classification accuracy. In Proceedings of the 2021 10th International IEEE/EMBS Conference on Neural Engineering (NER). IEEE, 2021, pp. 690–693.

- Simon, C.; Ruddy, K.L. A wireless, wearable Brain-Computer Interface for neurorehabilitation at home; A feasibility study. In Proceedings of the 2022 10th International Winter Conference on Brain-Computer Interface (BCI). IEEE, 2022, pp. 1–6.

- Arpaia, P.; Coyle, D.; Donnarumma, F.; Esposito, A.; Natalizio, A.; Parvis, M. Visual and haptic feedback in detecting motor imagery within a wearable brain-computer interface. Measurement 2022, p. 112304. [CrossRef]

- Arpaia, P.; Coyle, D.; Donnarumma, F.; Esposito, A.; Natalizio, A.; Parvis, M.; Pesola, M.; Vallefuoco, E. Multimodal Feedback in Assisting a Wearable Brain-Computer Interface Based on Motor Imagery. In Proceedings of the 2022 IEEE International Conference on Metrology for Extended Reality, Artificial Intelligence and Neural Engineering (MetroXRAINE). IEEE, 2022, pp. 691–696.

- Ang, K.K.; Chin, Z.Y.; Wang, C.; Guan, C.; Zhang, H. Filter bank common spatial pattern algorithm on BCI competition IV datasets 2a and 2b. Frontiers in neuroscience 2012, 6, 39. [CrossRef]

- Mullen, T.R.; Kothe, C.A.; Chi, Y.M.; Ojeda, A.; Kerth, T.; Makeig, S.; Jung, T.P.; Cauwenberghs, G. Real-time neuroimaging and cognitive monitoring using wearable dry EEG. IEEE Transactions on Biomedical Engineering 2015, 62, 2553–2567. [CrossRef]

- Arpaia, P.; De Benedetto, E.; Esposito, A.; Natalizio, A.; Parvis, M.; Pesola, M. Comparing artifact removal techniques for daily-life electroencephalography with few channels. In Proceedings of the 2022 IEEE International Symposium on Medical Measurements and Applications (MeMeA). IEEE, 2022, pp. 1–6.

- Williams, S.E.; Cumming, J.; Ntoumanis, N.; Nordin-Bates, S.M.; Ramsey, R.; Hall, C. Further validation and development of the movement imagery questionnaire. Journal of sport and exercise psychology 2012, 34, 621–646. [CrossRef]

- Hall, C.R.; Pongrac, J. Movement imagery: questionnaire; University of Western Ontario Faculty of Physical Education, 1983.

- Hall, C.R.; Martin, K.A. Measuring movement imagery abilities: a revision of the movement imagery questionnaire. Journal of mental imagery 1997.

- Brooke, J.; et al. SUS-A quick and dirty usability scale. Usability evaluation in industry 1996, 189, 4–7.

- Bangor, A.; Kortum, P.; Miller, J. Determining what individual SUS scores mean: Adding an adjective rating scale. Journal of usability studies 2009, 4, 114–123.

- Bangor, A.; Kortum, P.T.; Miller, J.T. An empirical evaluation of the system usability scale. Intl. Journal of Human–Computer Interaction 2008, 24, 574–594. [CrossRef]

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Advances in psychology; Elsevier, 1988; Vol. 52, pp. 139–183.

- Hart, S.G. NASA-task load index (NASA-TLX); 20 years later. In Proceedings of the human factors and ergonomics society annual meeting. Sage Publications: Los Angeles, CA, 2006, Vol. 50, pp. 904–908.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Design and evaluation of a short version of the user experience questionnaire (UEQ-S). International Journal of Interactive Multimedia and Artificial Intelligence 2017, 4, 103–108. [CrossRef]

- Cho, H.; Ahn, M.; Ahn, S.; Kwon, M.; Jun, S.C. EEG datasets for motor imagery brain–computer interface. GigaScience 2017, 6, gix034. [CrossRef]

- Rosner, B. Fundamentals of biostatistics; Cengage learning, 2015.

- Combrisson, E.; Jerbi, K. Exceeding chance level by chance: The caveat of theoretical chance levels in brain signal classification and statistical assessment of decoding accuracy. Journal of neuroscience methods 2015, 250, 126–136. [CrossRef]

- Mladenović, J.; Frey, J.; Pramij, S.; Mattout, J.; Lotte, F. Towards identifying optimal biased feedback for various user states and traits in motor imagery BCI. IEEE Transactions on Biomedical Engineering 2021, 69, 1101–1110. [CrossRef]

- Tao, L.; Cao, T.; Wang, Q.; Liu, D.; Sun, J. Distribution Adaptation and Classification Framework Based on Multiple Kernel Learning for Motor Imagery BCI Illiteracy. Sensors 2022, 22, 6572. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).