1. Introduction

Large language models (LLMs), especially Generative Pre-trained Transformer (GPT) models [

4,

5], have shown remarkable performance in various natural language processing (NLP) tasks. Recently, Open AI developed ChatGPT, an interactive chatbot built upon InstructGPT [

6], which has captured the attention of researchers in the NLP community [

7,

8]. This chatbot is capable of integrating multiple NLP tasks and can generate detailed and comprehensive responses to human inquiries. Additionally, it can respond appropriately to follow-up questions and maintain sensitivity throughout several turns of conversation.

Previous research has demonstrated that ChatGPT can perform as well as or even better than other LLMs in machine translation task [

9]. However, it remains uncertain whether ChatGPT can be used as a metric to evaluate the quality of translations. If ChatGPT is suitable for this task, then, how to develop appropriate prompts that can make ChatGPT generate reliable evaluations? Concurrent to our work, Kocmi and Federmann [

1] present an encouraging finding that LLMs, e.g., ChatGPT, could outperform current best MT metrics at the system level quality assessment with zero-shot standard prompting, but such kind of prompts show unreliable performance at the segment level.

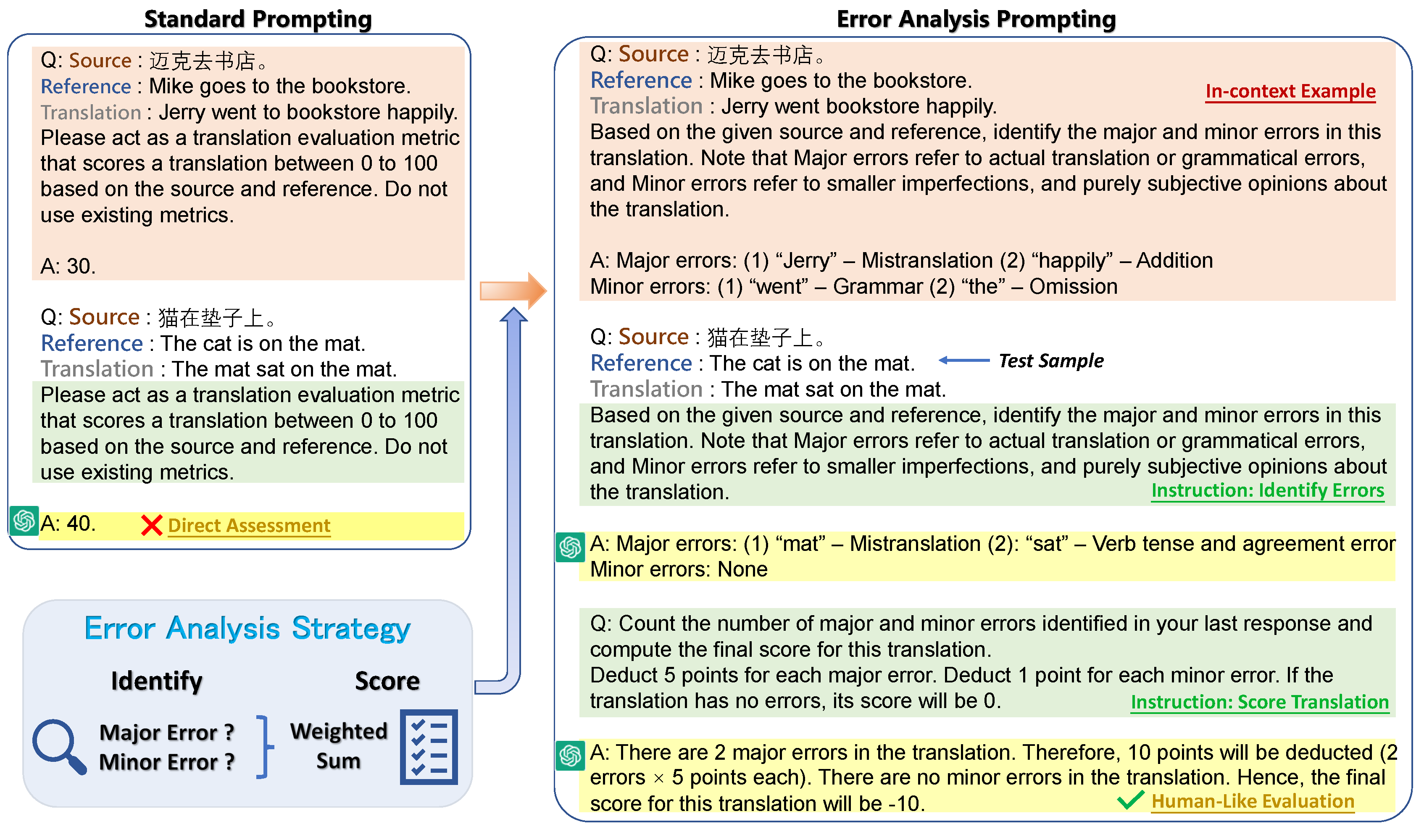

In this work, we take the further step by carefully investigating the current advanced few-shot prompting strategies upon ChatGPT for MT quality assessment, and propose a novel prompting strategy –

Error Analysis (EA) Prompting, combining the Chain-of-Thought (CoT, Wei et al. [

2]) and Error Analysis (EA, Lu et al. [

3]). We give an example of this prompt in

Figure 1. The idea is to prompt ChatGPT to generate a human-like evaluation like MQM [

10] by ❶

identifying major and minor errors, and ❷

scoring the translations according to the severity of these errors. In addition, we also explore the potential of ChatGPT compared with modern neural metrics like COMET [

11], BERTScore [

12] and BLEURT [

13].

Our experiments and analyses illustrate that:

Our proposed EA Prompting outperforms standard prompting [

1] at the segment level, achieving human-like evaluations at both the system level and segment level.

When designing prompts, itemized responses are better than lengthy and detailed explanations of errors. Moreover, splitting the instruction into two identifying errors and scoring translation can improve evaluation stability.

The boosted performance from EA prompting is observed in the zero-shot scenario on text-davinci-003 rather than in the few-shot scenario, which indicates that we need to adjust our settings when utilizing other GPT models.

Despite its good performance, we show that ChatGPT is NOT a stable evaluator and may score the same translation differently.

It is NOT advisable to combine multiple translations into a single query input, as ChatGPT has a preference for former translations.

The remainder of this report is designed as follows. We present the evaluation settings and comparative results in

Section 2. In

Section 3, we highlight several potential issues that researchers should be aware of when using ChatGPT as a translation evaluator. Conclusions are described in

Section 4.

2. ChatGPT As An Evaluation Metric

2.1. Experiment Setup

Dataset

We utilize the testset from the WMT20 Metric shared task in two language pairs: Zh-En and En-De. To ensure the reliability of our experiment, for each language pair, we divide the segments into four groups based on the number of tokens they contain (15-24, 25-34, 35-44, 45-54). We randomly sample 10 segments from each group and form a new dataset containing 40 segments. Details are shown in

Table 1.

Human Evaluation

Human evaluation of translated texts is widely considered to be the gold standard in evaluating metrics. We use a high-quality human evaluation dataset Multi-dimensional Quality Metrics (MQM, Freitag et al. [

10]) as human judgments. This dataset is annotated by human experts and has been widely adopted in recent translation evaluation [

14] and quality estimation tasks [

15] in WMT.

Baseline

We compare LLMs with several commonly used baseline metrics for MT evaluation.

BLEU [

17] is the most popular metric that compares the n-gram overlap of the translation with human reference, but it has been criticized for not capturing the full semantic meaning of the translation [

14].

BERTScore [

12] is a neural metric that relies on pre-trained models to compute the semantic similarity with the reference.

BLEURT [

13] and

COMET [

11] are supervised neural metrics that leverage human judgments to train. They have shown a high correlation with human judgments.

Large Language Models

We test the evaluation capability on ChatGPT using the default model of ChatGPT-plus, and compare it with text-davinci-003, a base model of ChatGPT.

2.2. ChatGPT as a metric attains SOTA performance at the system level

Table 2 presents the performance of LLMs compared with other baseline metrics. We report the best-performing setting, where LLMs with EA prompting. We can see that:

at the system level, ChatGPT achieves SOTA performance compared with existing evaluation metrics for both language pairs. However, text-davinci-003 obtains inferior results compared with other metrics. Our results are consistent with the findings of Kocmi and Federmann [

1], who tested the performance of large language models on

full test set of the WMT22 metric task.

ChatGPT and text-davinci-003 lag behind state-of-the-art metrics for En-De at the segment level. For Zh-En, while text-davinci-003 remains suboptimal, ChatGPT with EA prompting exhibits superior performance relative to all other metrics, with the exception of COMET.

2.3. Error analysis prompting with ChatGPT is better than standard prompting at the segment level

To improve the segment level evaluation capabilities of ChatGPT, we combine the idea of Chain-of-Thought [

2] and Error Analysis [

3]. Chain-of-Thought has been successfully applied in complex reasoning tasks, which encourages the LLM to break down the task into a series of reasoning steps, allowing it to better understand the context and formulate a more accurate response. Error analysis strategy [

3] aims to generate human-like evaluation by incorporating human evaluation framework, e.g. MQM [

10], into existing metrics to obtain better discriminating ability for errors, e.g., lexical choice [

18] or adequacy [

19] errors. Specifically, we instruct ChatGPT to identify major and minor errors in the translation, and then enable ChatGPT to score the translation based on the severity of errors.

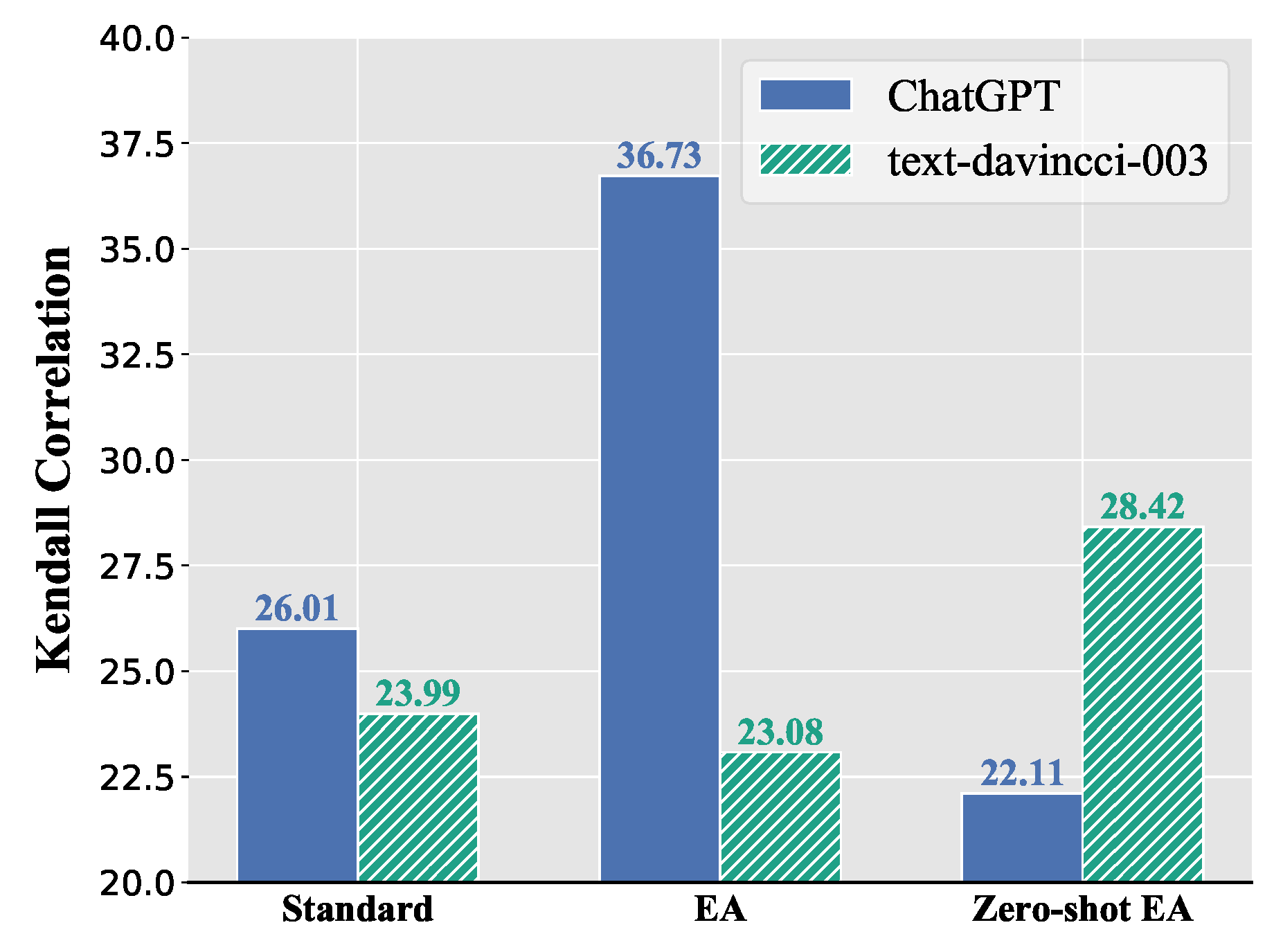

Figure 2 compares the segment level results between different prompting strategies. Prompting ChatGPT with error analysis can benefit translation evaluation between segments by improving Kendall correlation by a large margin (26.01 vs 36.73). However, simply replacing prompting instruction from scoring with zero-shot EA will even damage the performance, since identifying errors without samples will make ChatGPT become more unstable. This also highlights the importance of prompting with in-context examples.

Moreover, on text-davinci-003, the improvements from EA prompting are shown in the zero-shot scenario ("zero-shot EA"). The reason for this may be that while text-davinci-003 is capable of detecting errors when prompted with explicit instructions for error analysis, it may face challenges in fully comprehending the task of error analysis when presented with in-context examples. Compared with text-davinci-003, ChatGPT has been trained using reinforcement learning through human feedback and conversational tuning, which enables it to generalize to error analysis through in-context examples.

2.4. Error analysis prompting empowers ChatGPT to produce human-like evaluations

Given the crucial significance of the prompt design, we explore several versions of in-context prompt contexts and present an analysis in

Table 3. See

Appendix A for the prompt contexts used in our experiment. We find that:

(i) ChatGPT becomes more adept at identifying errors when instructed by error analysis.

When designing in-context examples, is it worth designing an instruction on error analysis or simply adopting standard scoring instruction? We find that error analysis instructions can make ChatGPT better understand the task of error identification in the best setting, since more errors are identified compared with standard instructions (28 vs 17 in total). As a result, We recommend using error analysis instructions in prompt contexts instead of standard instruction.

(ii) Itemized template response is better than detailed illustration.

As shown in the "Response" column, providing descriptions of errors in detail hinders ChatGPT’s ability to accurately identify errors. For example, when using error analysis with combined instruction, ChatGPT with itemized response can identify more errors than detailed response (28 vs 10 in total). It seems that lengthy paragraphs will make it difficult for ChatGPT to fully comprehend the details of each error, potentially leading to confusion regarding the intended task. Therefore, it is recommended to provide clear and concise descriptions of errors in a format that is easy for ChatGPT to process and comprehend.

(iii) Separating the scoring process from error identification may improve the stability of ChatGPT.

We suggest splitting the instruction of error analysis into two queries, one for identifying errors and the other for scoring the translation. Although this may not cause a significant performance gain, we observe that sometimes ChatGPT fails to deduct points for identified errors or presents an incorrect calculation of scores. Separating the scoring process may be helpful, as it allows ChatGPT to focus on one single procedure in each query, thus can provide more accurate judgments.

3. Case Study

In this section, we list several typical issues with the case study that should be aware of when using ChatGPT as a translation evaluator.

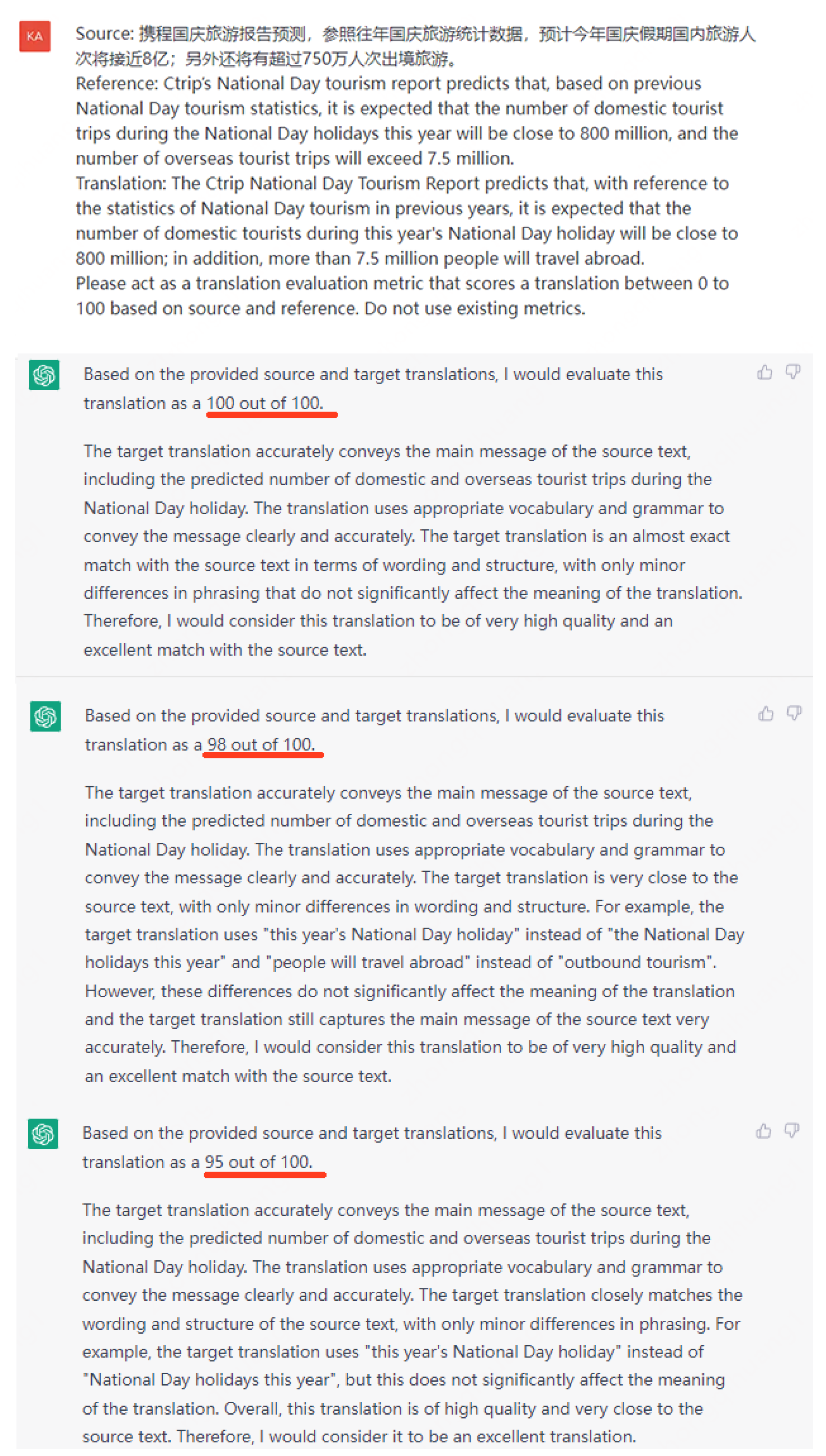

3.1. ChatGPT is unstable when conducting evaluation process

When assessing translations using ChatGPT, it is not uncommon to observe variations in the scores assigned to the same input. As shown in

Figure 3, we regenerate several responses with the same input and obtain 3 different scores (98, 95, 100) for the translation. The discrepancies in scores could be attributed to the inherent randomness of the model behind ChatGPT. Another possible reason is the lack of clearly stated evaluation criteria described in the prompt contexts. Therefore, we suggest using specific guidelines such as the template we propose to minimize the impact of these variations.

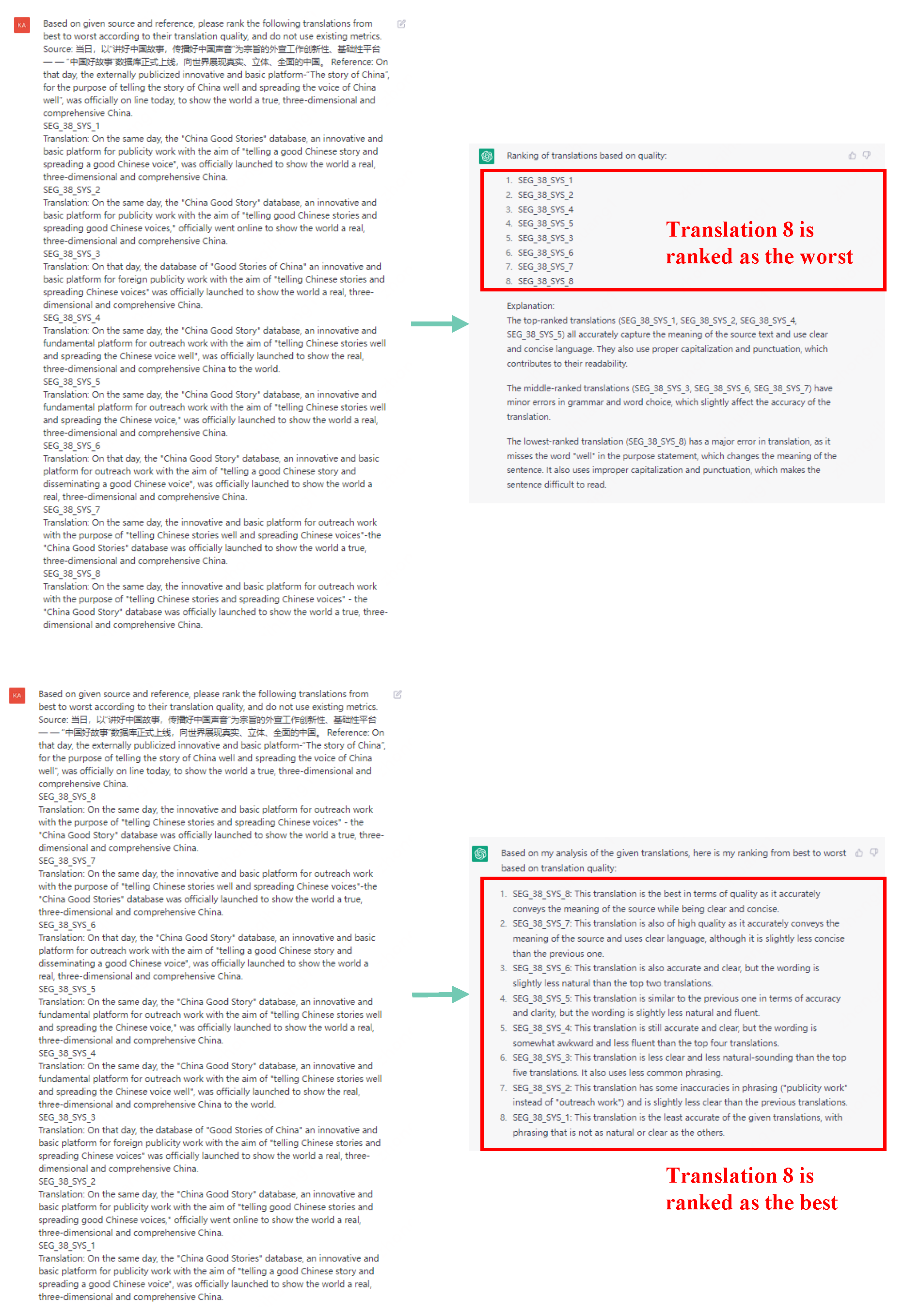

3.2. ChatGPT prefers former inputs when provided with multiple translations

An interesting phenomenon is that when multiple translations are presented together as a single input to ChatGPT for evaluation, it tends to believe that the translations provided earlier are of higher quality, while the quality of later translations are relatively poorer.

Figure 4 shows an example of the attack on ChatGPT. We provide 8 translations along with their corresponding source and reference sentences. First, we present the translations sequentially, and ask ChatGPT to rank them according to their translation quality. ChatGPT ranks the translations as (SYS1, SYS2, SYS4, SYS5, SYS3, SYS6, SYS7, SYS8), with SYS1 being the best translation and SYS8 being the worst. Then, we reverse the order of translations and obtain an entirely different sequence of ranks - (SYS8, SYS7, SYS6, SYS5, SYS4, SYS3, SYS2, SYS1), with SYS8 being the best translation and SYS1 being the worst.

The contradictory results may be attributed to the auto-regressive nature of the decoder model, which gives more attention to the latter input, potentially leading to greater identification of errors for the translation input later. Therefore, we recommend that researchers input one translation at a time instead of providing multiple translations.

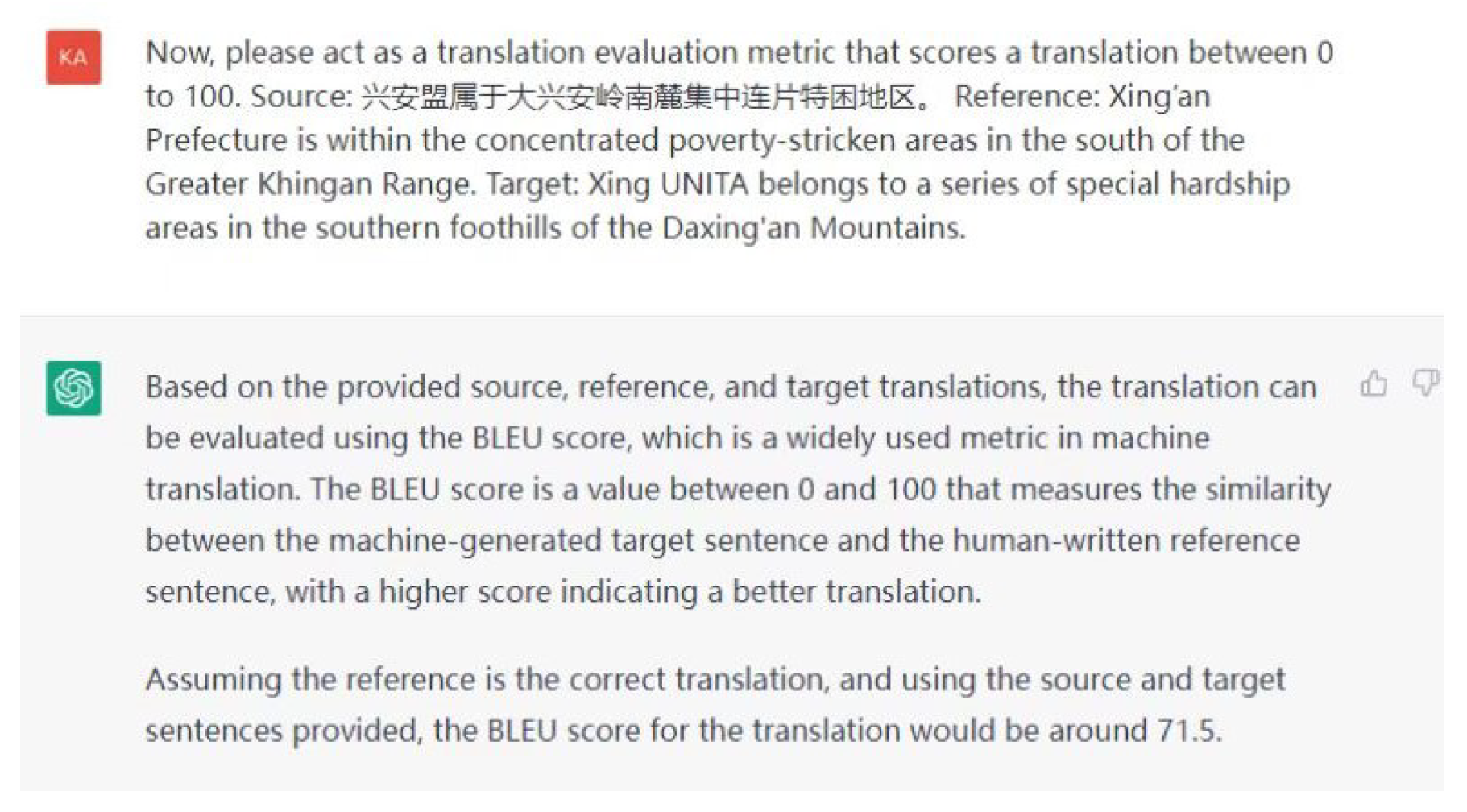

3.3. ChatGPT may directly adopt existing evaluation metrics

We observe that with standard prompting, sometimes ChatGPT directly adopts existing evaluation metrics, such as BLEU and METEOR. An example of this behavior is in

Figure 5.

However, as our objective is to examine ChatGPT’s inherent capacity for translation evaluation, rather than its ability to implement pre-existing evaluation procedures, we include an explicit instruction of "Do not use existing metrics" in standard prompting. This encourages ChatGPT to develop its own approach to evaluating translations, independent of existing metrics.

4. Conclusion

In this paper, we explore the potential of ChatGPT as a metric for evaluating translations. We design a novel in-context prompting strategy based on chain-of-thought and error analysis, and show that this strategy significantly improves ChatGPT’s evaluation performance. We compare our approach with other prompt designs to show the effectiveness of error analysis. We hope the experience can benefit NLP researchers in developing more reliable promoting strategies. In

Section 3, we also highlight several potential issues that researchers should be aware of when using ChatGPT as a translation evaluator.

In future work, we would like to experiment with our method on more test sets and top-performed systems [

20,

21,

22,

23,

24], to make our conclusion more convincing. Also, it is worth exploring the reference-free settings, i.e., quality estimation [

25,

26] evaluation performance, with our proposed error analysis prompting. Lastly, it will be interesting to automatically generate the samples in our few-shot error analysis prompting strategy.

Limitations

Since we do not have access to the ChatGPT API till done the majority of our work, all experiments in this paper were conducted using the interaction screen of ChatGPT. As a result, the test set used in this study is limited. We will conduct more experiments in future work to further validate and refine our current results and findings.

Appendix A. Prompt Contexts

Figure A1 compares the prompt contexts implemented in error analysis prompting with a detailed response and combined instruction discussed in

Section 2.4.

Figure A1.

A comparison between our proposed error analysis prompting and other prompt contexts.

Figure A1.

A comparison between our proposed error analysis prompting and other prompt contexts.

References

- Kocmi, T.; Federmann, C. Large Language Models Are State-of-the-Art Evaluators of Translation Quality. arXiv preprint arXiv:2302.14520 arXiv:2302.14520 2023.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Chi, E.; Le, Q.; Zhou, D. Chain of thought prompting elicits reasoning in large language models. arXiv preprint arXiv:2201.11903 arXiv:2201.11903 2022.

- Lu, Q.; Ding, L.; Xie, L.; Zhang, K.; Wong, D.F.; Tao, D. Toward Human-Like Evaluation for Natural Language Generation with Error Analysis. arXiv preprint 2022. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I.; others. Language models are unsupervised multitask learners. OpenAI blog 2019. [Google Scholar]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; others. Language models are few-shot learners. NeurIPS 2020. [Google Scholar]

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.L.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; others. Training language models to follow instructions with human feedback. arXiv preprint 2022. [Google Scholar]

- Qin, C.; Zhang, A.; Zhang, Z.; Chen, J.; Yasunaga, M.; Yang, D. Is ChatGPT a General-Purpose Natural Language Processing Task Solver? arXiv preprint 2023. [Google Scholar]

- Zhong, Q.; Ding, L.; Liu, J.; Du, B.; Tao, D. Can ChatGPT Understand Too? A Comparative Study on ChatGPT and Fine-tuned BERT. arXiv preprint 2023. [Google Scholar]

- Hendy, A.; Abdelrehim, M.; Sharaf, A.; Raunak, V.; Gabr, M.; others. How Good Are GPT Models at Machine Translation? A Comprehensive Evaluation. arXiv preprint 2023. [Google Scholar]

- Freitag, M.; Foster, G.; Grangier, D.; others. Experts, Errors, and Context: A Large-Scale Study of Human Evaluation for Machine Translation. TACL 2021. [Google Scholar]

- Rei, R.; Stewart, C.; Farinha, A.C.; Lavie, A. COMET: A Neural Framework for MT Evaluation. EMNLP, 2020.

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. ICLR, 2020.

- Sellam, T.; Das, D.; Parikh, A. BLEURT: Learning Robust Metrics for Text Generation. ACL, 2020.

- Freitag, M.; Rei, R.; Mathur, N.; Lo, C.k.; Stewart, C.; Avramidis, E.; Kocmi, T.; Foster, G.; Lavie, A.; Martins, A.F.T. Results of WMT22 Metrics Shared Task: Stop Using BLEU – Neural Metrics Are Better and More Robust. WMT, 2022.

- Zerva, C.; Blain, F.; Rei, R.; Lertvittayakumjorn, P.; C. De Souza, J.G.; Eger, S.; Kanojia, D.; Alves, D.; Orăsan, C.; Fomicheva, M.; Martins, A.F.T.; Specia, L. Findings of the WMT 2022 Shared Task on Quality Estimation. WMT, 2022.

- Kocmi, T.; Federmann, C.; Grundkiewicz, R.; Junczys-Dowmunt, M.; Matsushita, H.; Menezes, A. To Ship or Not to Ship: An Extensive Evaluation of Automatic Metrics for Machine Translation. WMT, 2021.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: a Method for Automatic Evaluation of Machine Translation. ACL, 2002.

- Ding, L.; Wang, L.; Liu, X.; Wong, D.F.; Tao, D.; Tu, Z. Understanding and Improving Lexical Choice in Non-Autoregressive Translation. ICLR, 2021.

- Popović, M. Relations between comprehensibility and adequacy errors in machine translation output. CoNLL, 2020.

- Barrault, L.; Bojar, O.; Costa-jussà, M.R.; Federmann, C.; Fishel, M.; others. Findings of the 2019 Conference on Machine Translation (WMT19). WMT, 2019.

- Anastasopoulos, A.; Bojar, O.; Bremerman, J.; Cattoni, R.; Elbayad, M.; Federico, M.; others. Findings of the IWSLT 2021 evaluation campaign. IWSLT, 2021.

- Ding, L.; Wu, D.; Tao, D. The USYD-JD Speech Translation System for IWSLT2021. IWSLT, 2021.

- Kocmi, T.; Bawden, R.; Bojar, O.; Dvorkovich, A.; Federmann, C.; Fishel, M.; Gowda, T.; others. Findings of the 2022 Conference on Machine Translation (WMT22). WMT, 2022.

- Zan, C.; Peng, K.; Ding, L.; Qiu, B.; others. Vega-MT: The JD Explore Academy Machine Translation System for WMT22. WMT, 2022.

- Specia, L.; Raj, D.; Turchi, M. Machine translation evaluation versus quality estimation. Machine translation 2010. [Google Scholar]

- Qiu, B.; Ding, L.; Wu, D.; Shang, L.; Zhan, Y.; Tao, D. Original or Translated? On the Use of Parallel Data for Translation Quality Estimation. arXiv preprint 2022. [Google Scholar]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).