Submitted: 11 April 2023

Posted: 11 April 2023


Abstract

Keywords:

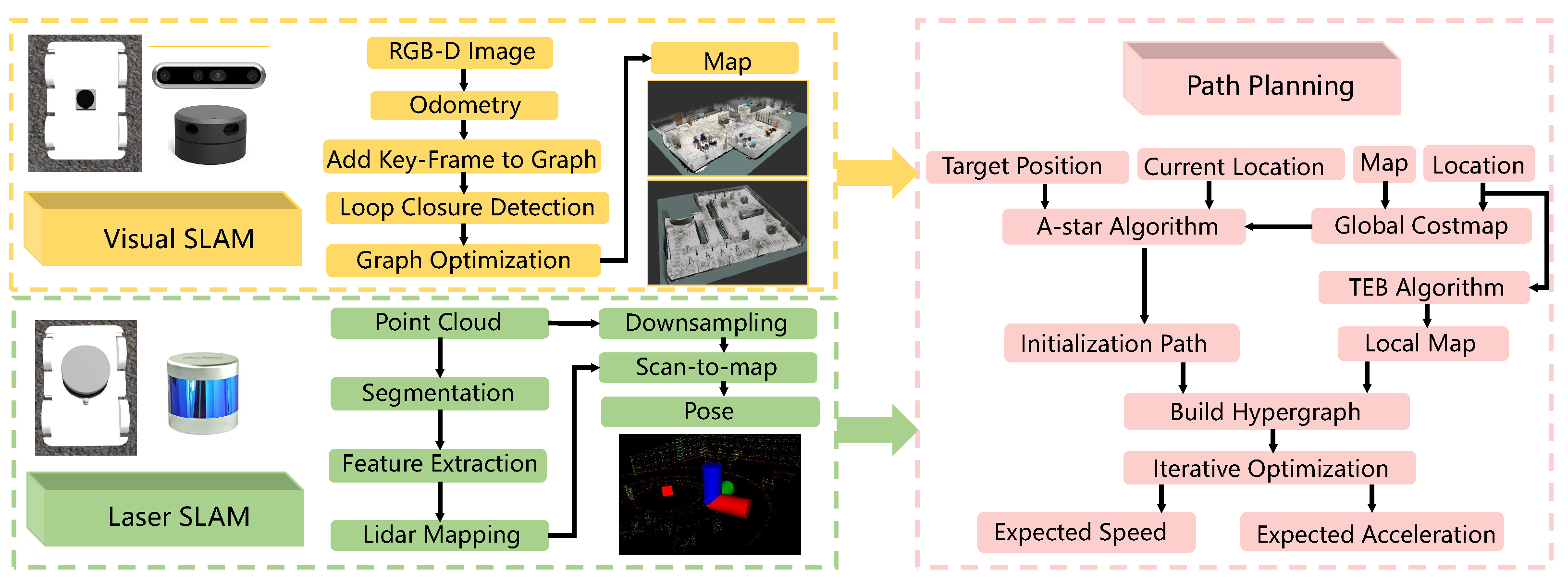

1. Introduction

2. Inspection Robot SLAM System

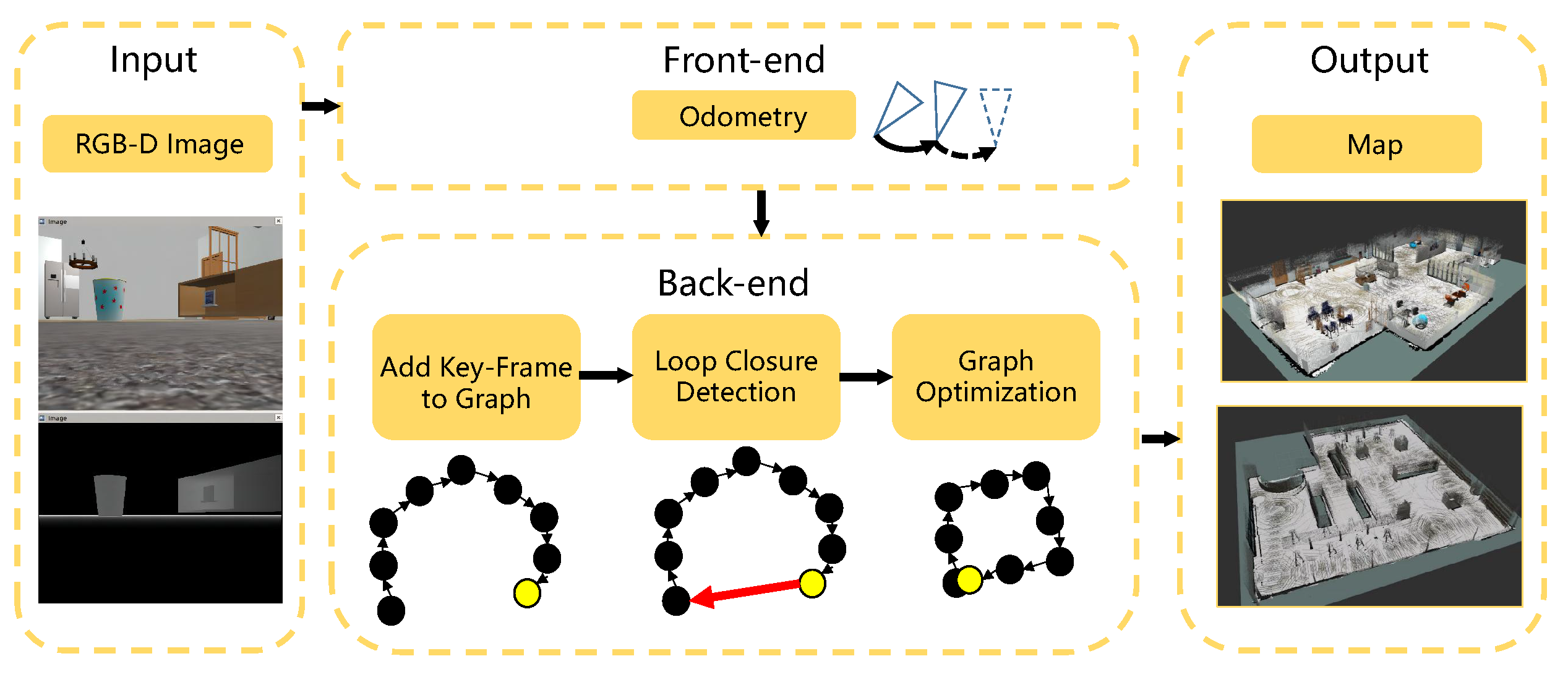

2.1. Visual-SLAM Algorithm Design and Implementation

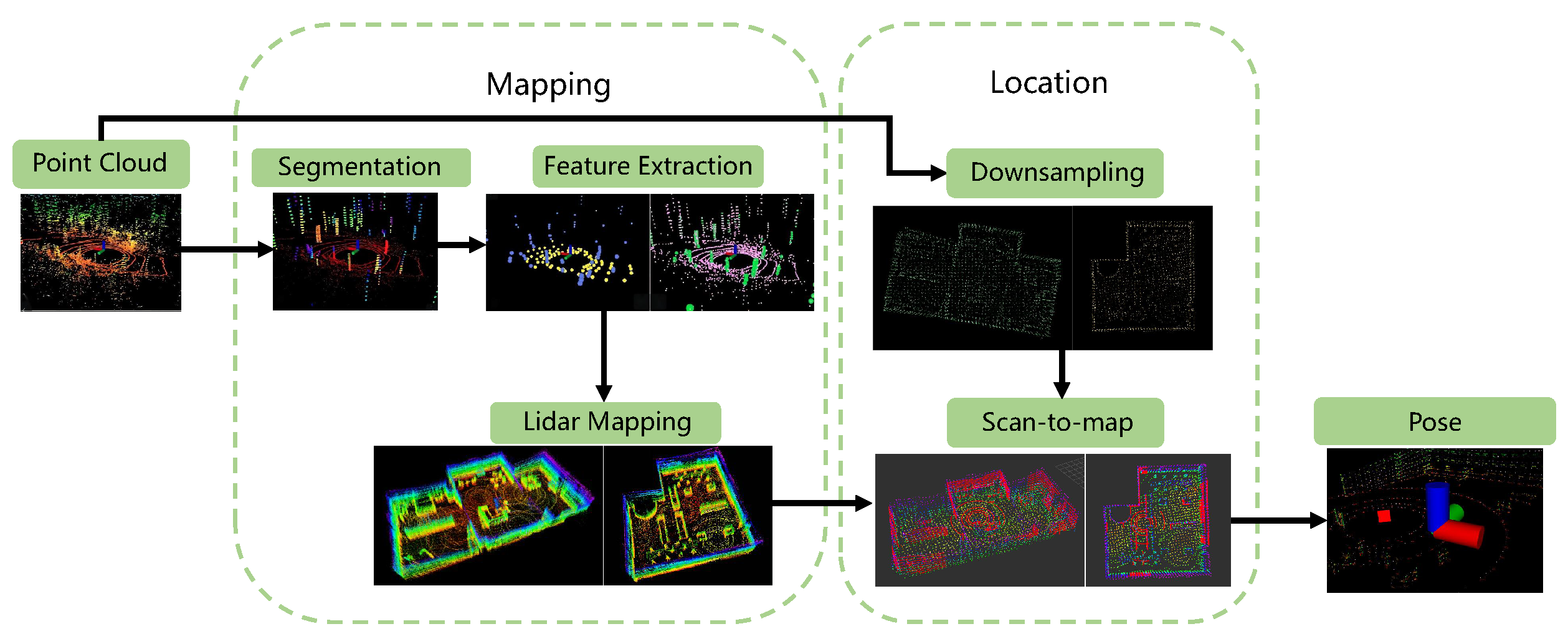

2.2. Multi-line LiDAR-based SLAM Algorithm Design and Implementation

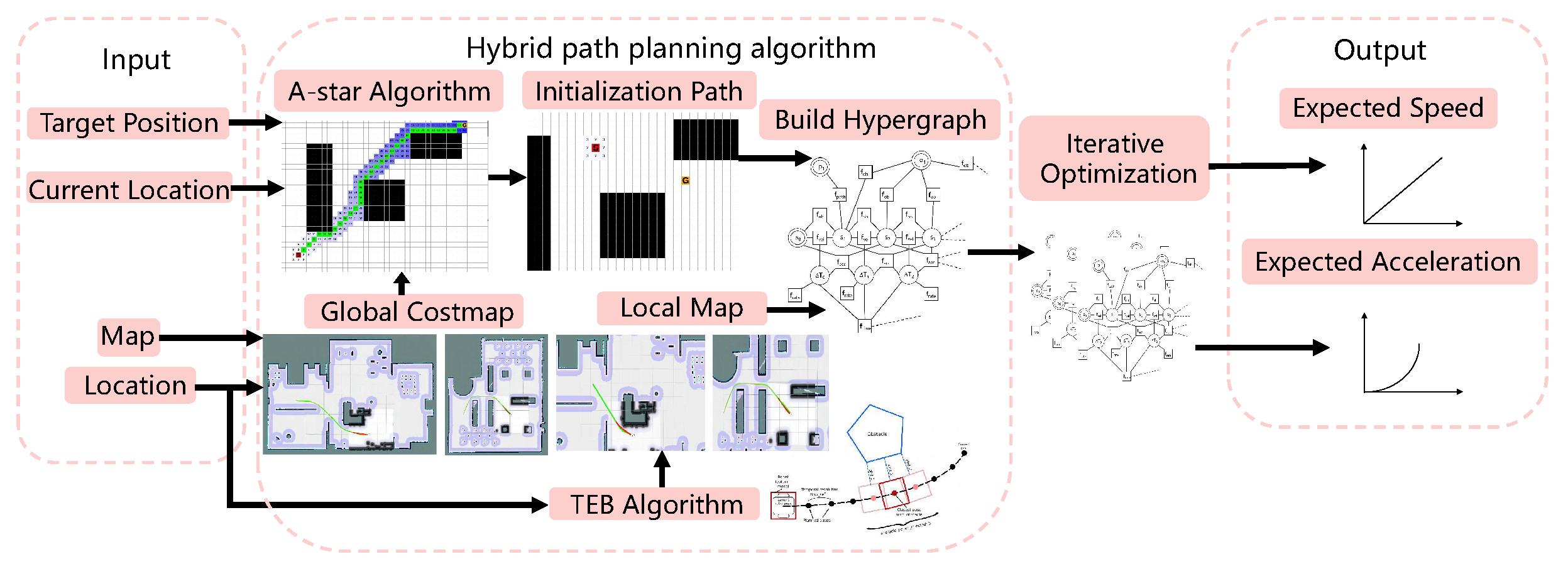

3. Inspection Robot Path Planning System

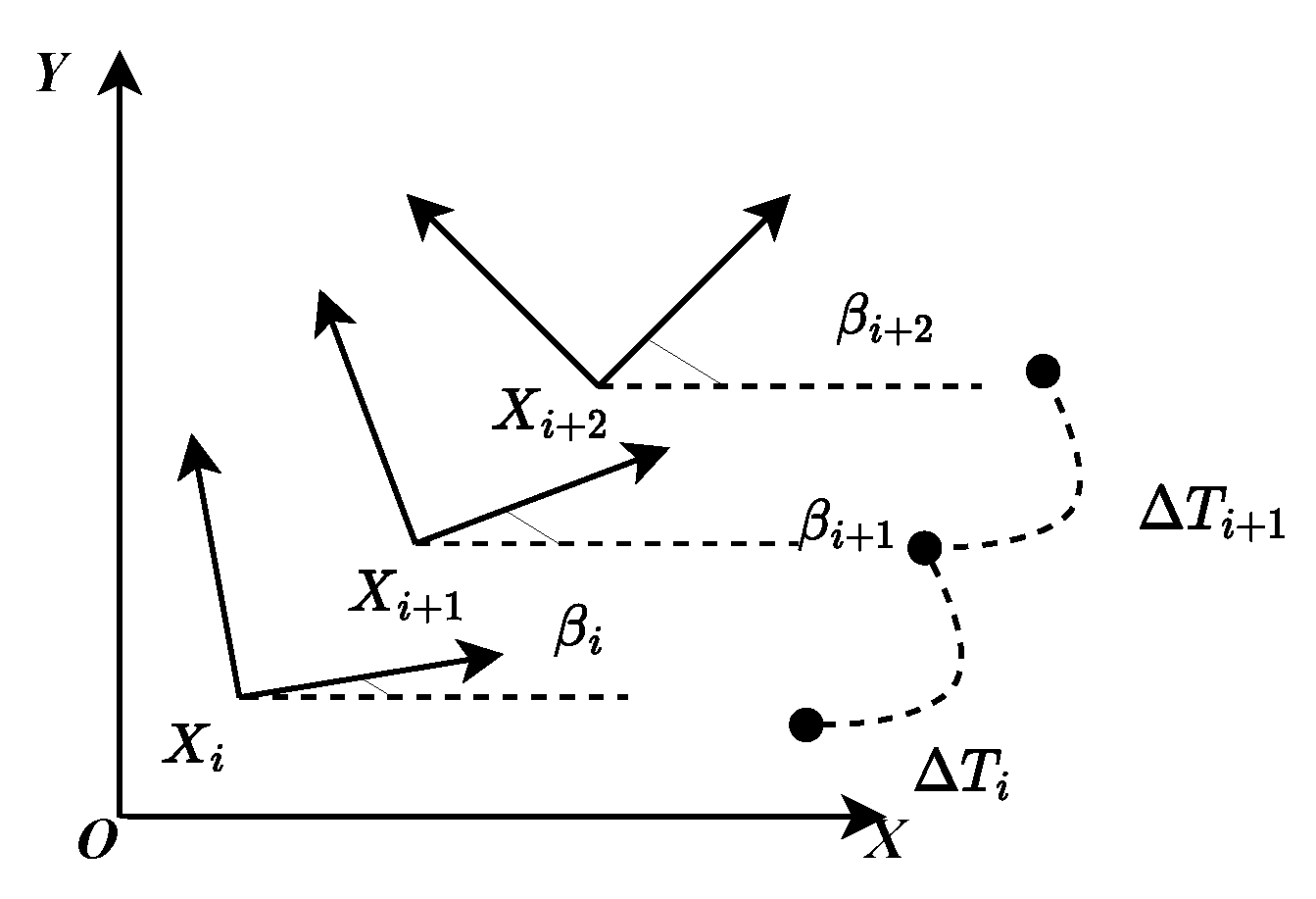

3.1. Motion Model

3.2. Path Planning

4. Experiment and Analysis

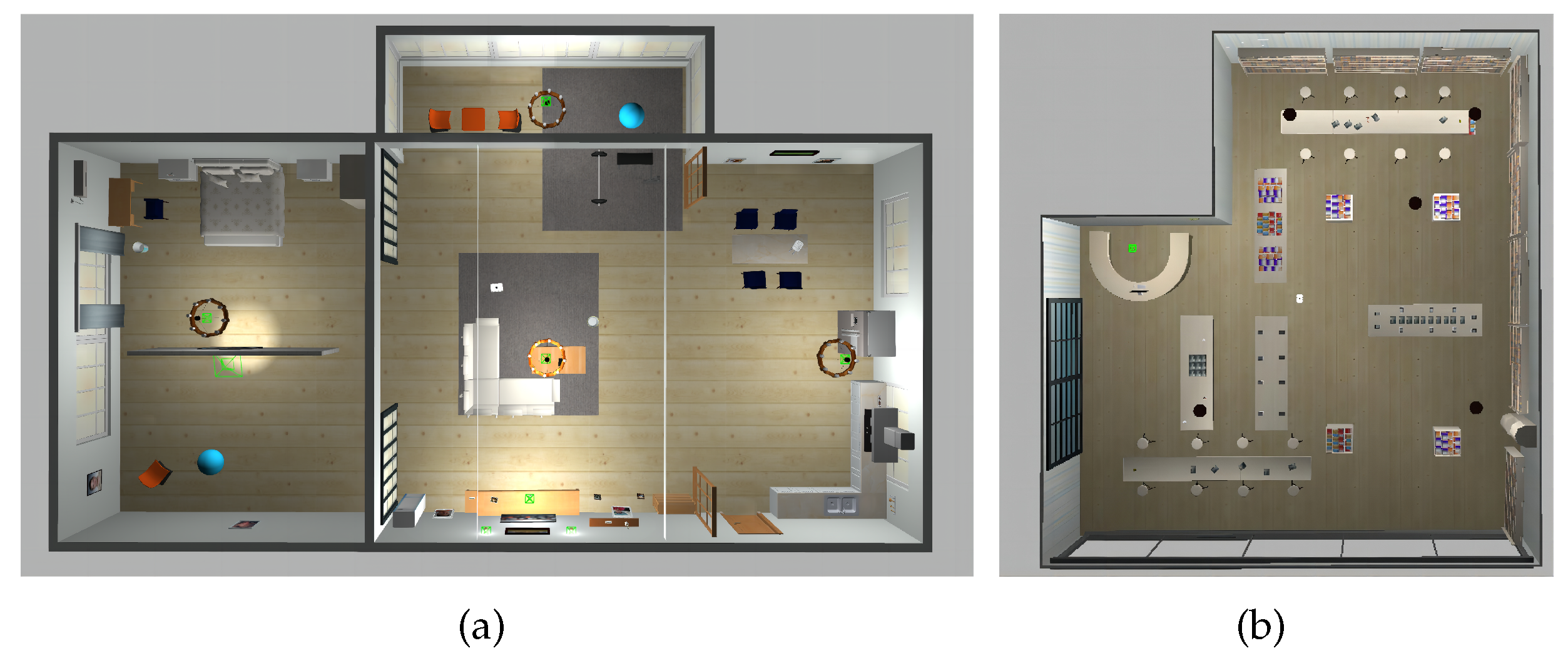

4.1. Experimental Settings

4.2. Performance Evaluation

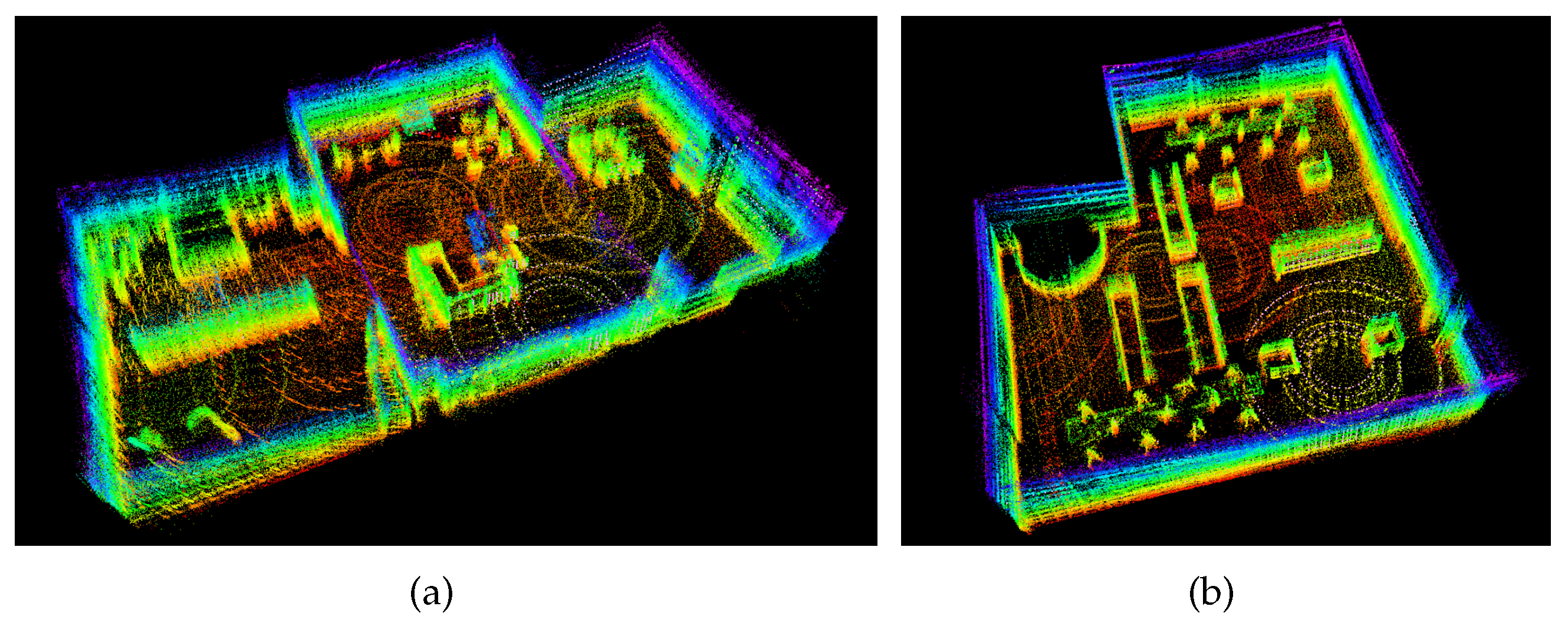

4.2.1. Visual-SLAM Algorithm Performance Evaluation

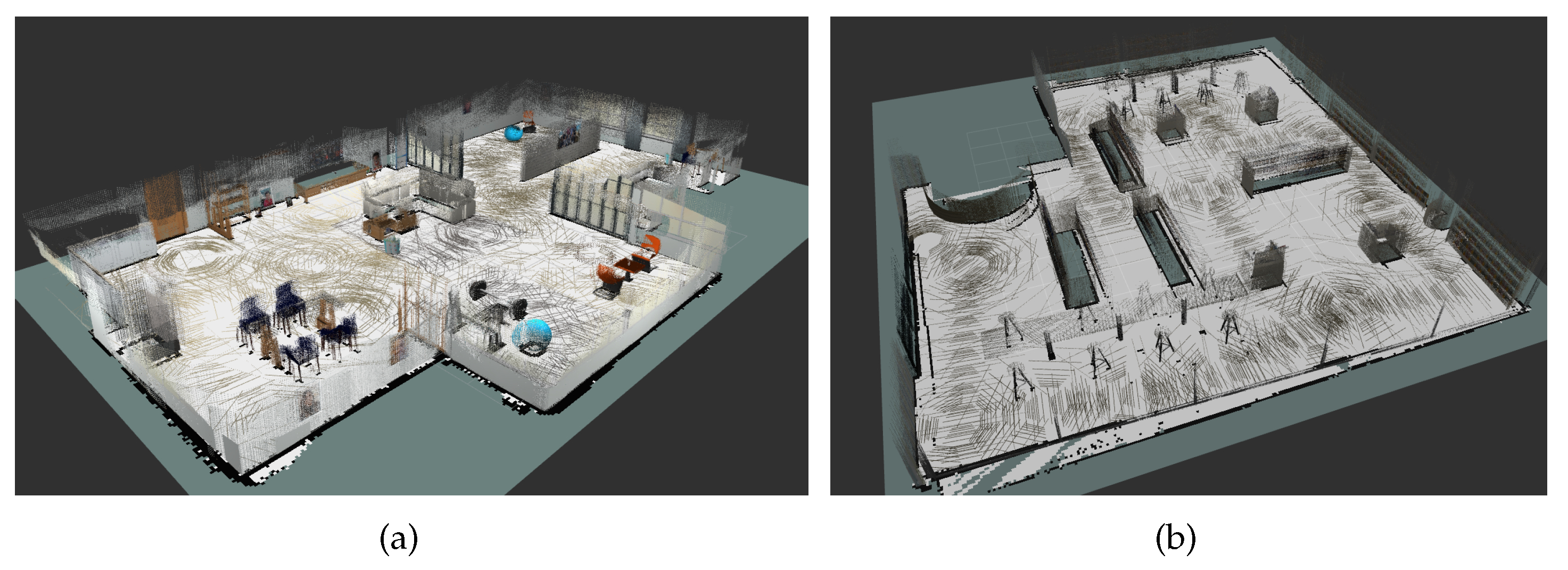

4.2.2. Multi-line LiDAR-based SLAM Algorithm Performance Evaluation

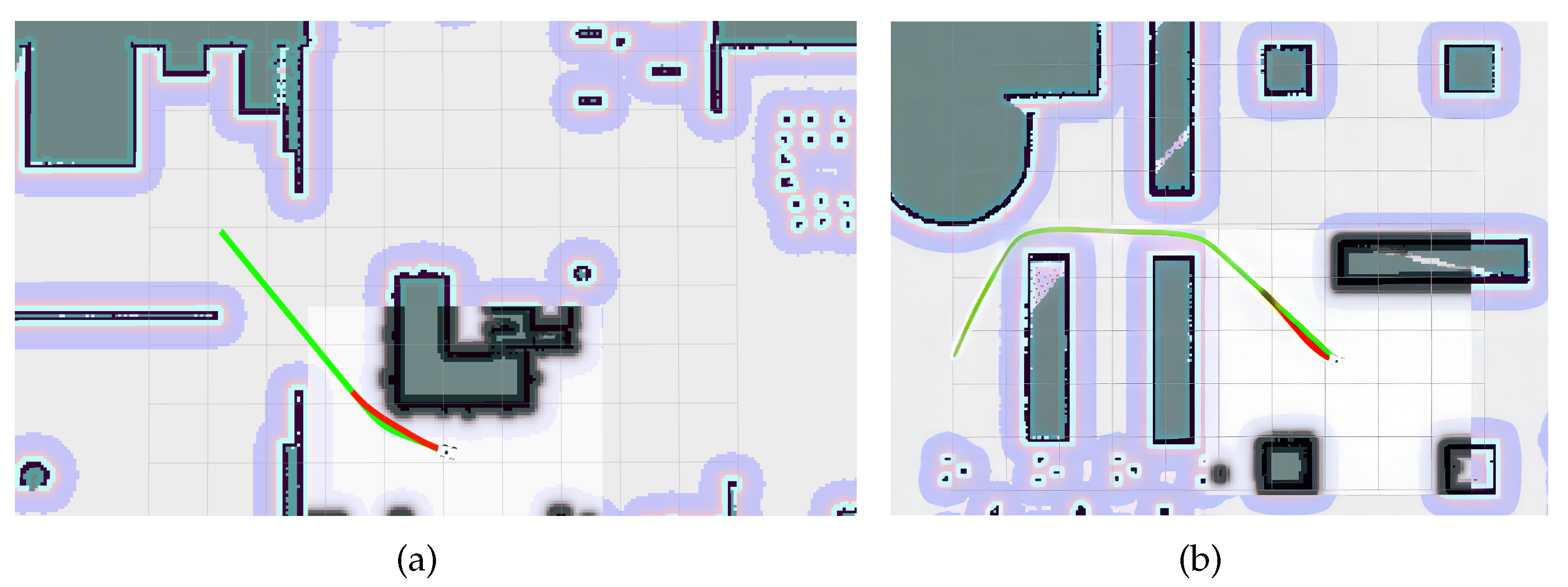

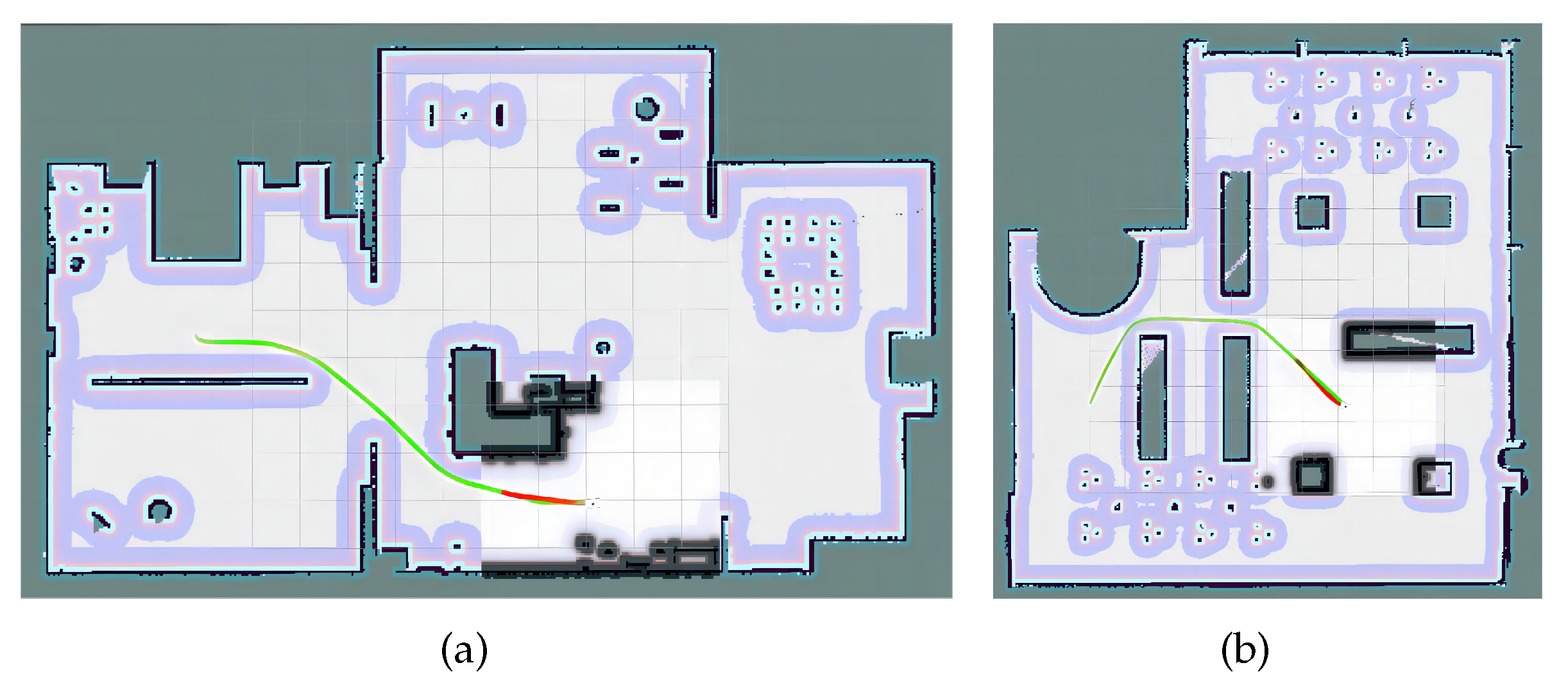

4.2.3. Path Planning Performance Evaluation

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).