Submitted: 12 April 2023

Posted: 12 April 2023


Abstract

Keywords:

1. Introduction

2. Related Works

3. The Development of Neural Network Technology for Cryptographic Data Protection

3.1. Structure of Neural Network Technology of Cryptographic Data Protection

- research and development of theoretical foundations of neuro-like cryptographic data protection;

- research and development of new algorithms and structures of neuro-like encryption and decryption of data focused on modern element base;

- modern element base with the ability to program the structure;

- means for automated design of software and hardware.

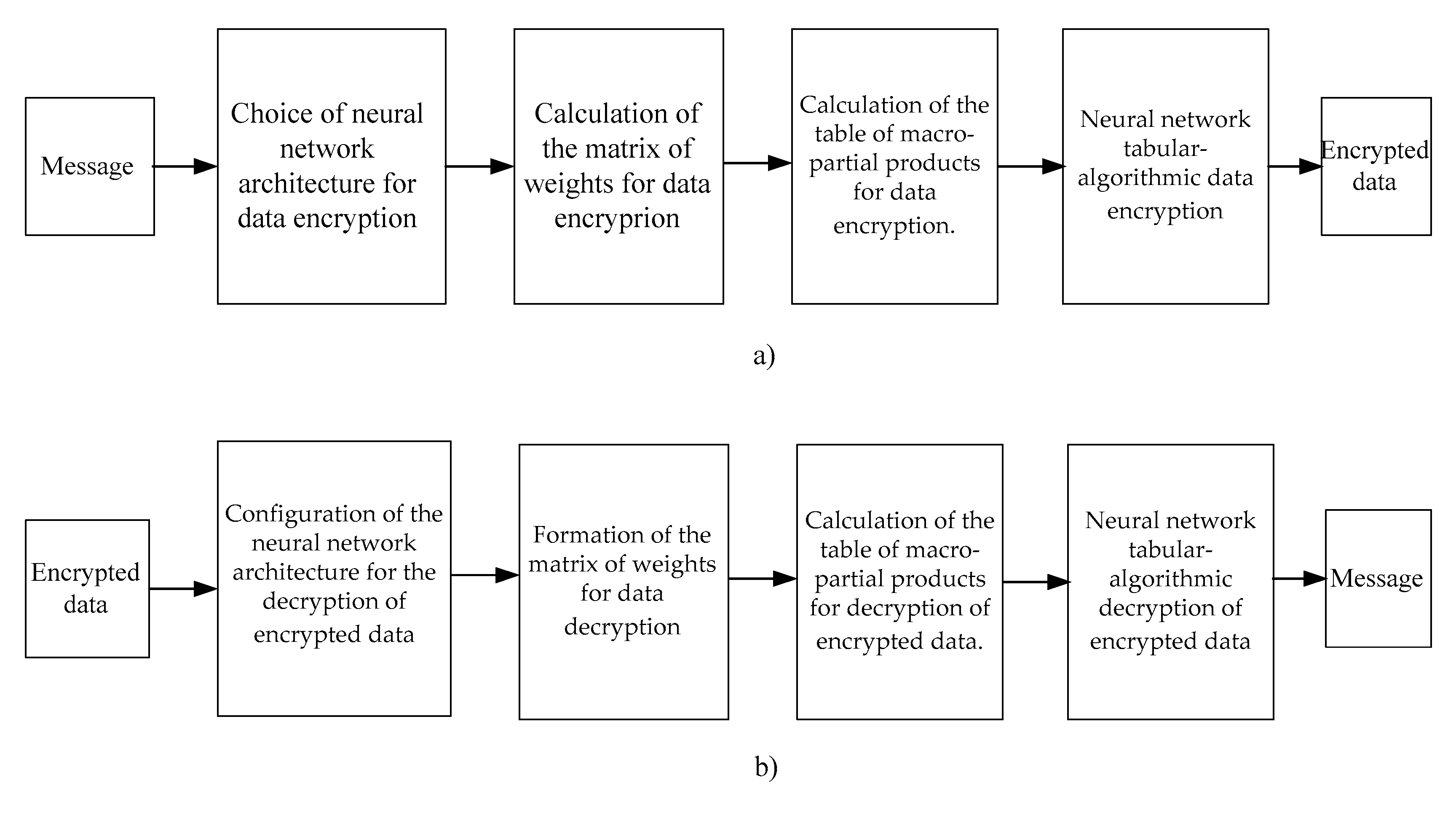

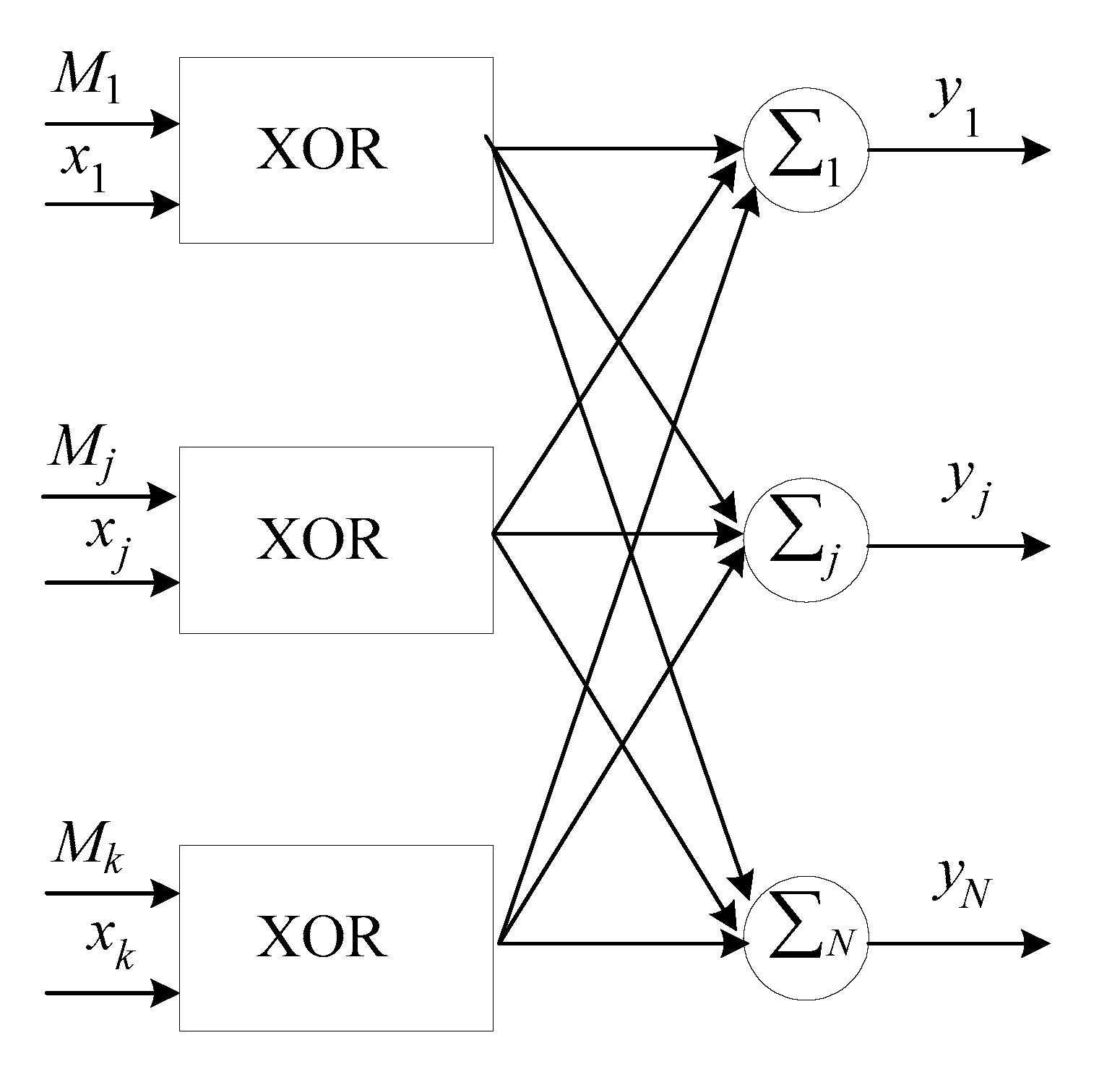

3.2. Main Stages of Neural Network Encryption

- defining the largest common order of the weights;

- calculation of the difference of orders for each weight coefficient;

- shifting each mantissa to the right by its difference of orders;

- calculation of macro-partial product for the case when ;

- determining the number of overflow bits q in the macro-partial product for the case when ;

- obtaining scalable mantissas by shifting them to the right by the number of overflow bits;

- adding to the largest common order of weight the number of overflow bits q, as per formula .
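The stages listed above amount to bringing all weights to one shared order, as in block floating-point arithmetic. The following is a minimal sketch under stated assumptions, not the authors' implementation: the function name `scale_weights`, the parameter `width` (mantissa width in bits) and the use of unsigned integer mantissas are hypothetical. The condition under which the macro-partial product is evaluated is not given in the text; the sketch assumes the worst case, in which all aligned mantissas are summed.

```python
def scale_weights(mantissas, orders, width):
    """Bring unsigned integer mantissas to one common order.

    Each weight is taken to be mantissa * 2**order (an assumption of this sketch).
    Returns (scaled_mantissas, common_order, q).
    """
    # Largest common order of the weights.
    common_order = max(orders)

    # Difference of orders for each weight coefficient.
    diffs = [common_order - e for e in orders]

    # Shift each mantissa to the right by its difference of orders.
    aligned = [m >> d for m, d in zip(mantissas, diffs)]

    # Macro-partial product, ASSUMED here to be the worst case:
    # the sum of all aligned mantissas.
    macro_partial = sum(aligned)

    # Number of overflow bits q beyond the available mantissa width.
    q = max(0, macro_partial.bit_length() - width)

    # Scale the mantissas by shifting right by q, and add q to the common order.
    scaled = [m >> q for m in aligned]
    return scaled, common_order + q, q


result = scale_weights([0b1100, 0b1010, 0b1111], [2, 0, 1], width=4)
print(result)  # ([6, 1, 3], 3, 1)
```

After scaling, the sum of any subset of the mantissas fits in `width` bits, at the cost of the low-order bits that were shifted out.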

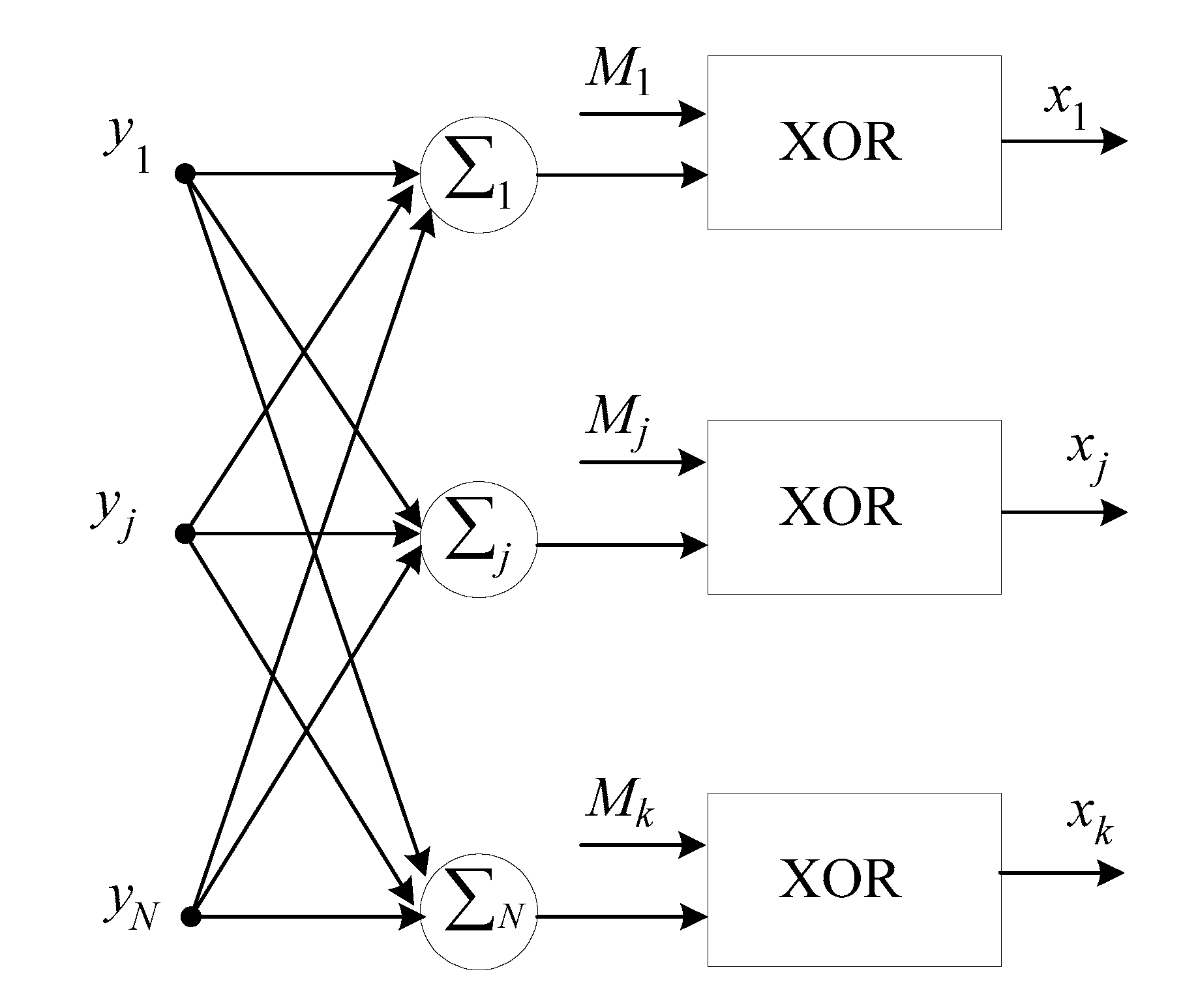

- defining the greatest order;

- calculating, for each item of encrypted data, the difference between the orders;

- shifting each mantissa to the right by its difference of orders, which yields the mantissas of the encrypted data reduced to the greatest common order.
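As a worked illustration of these three steps, consider two encrypted values with 4-bit fractional mantissas. The symbols $m_j$, $e_j$, $e_{\max}$, $\Delta_j$ and the sample values are assumptions introduced for this example, not notation taken from the text.

$$
\begin{aligned}
y_1 &= 0.1011_2 \cdot 2^{3}, \qquad y_2 = 0.1100_2 \cdot 2^{1},\\
e_{\max} &= \max(e_1, e_2) = 3,\\
\Delta_1 &= e_{\max} - e_1 = 0, \qquad \Delta_2 = e_{\max} - e_2 = 2,\\
m'_1 &= m_1 \cdot 2^{-\Delta_1} = 0.1011_2, \qquad m'_2 = m_2 \cdot 2^{-\Delta_2} = 0.0011_2 .
\end{aligned}
$$

Both values now share the order $e_{\max} = 3$. In this example the value of $y_2$ is preserved exactly, since $0.0011_2 \cdot 2^{3} = 0.1100_2 \cdot 2^{1} = 1.5$. In general, bits shifted beyond the mantissa width are lost.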

3.3. The Main Stages of Neural Network Cryptographic Data Decryption

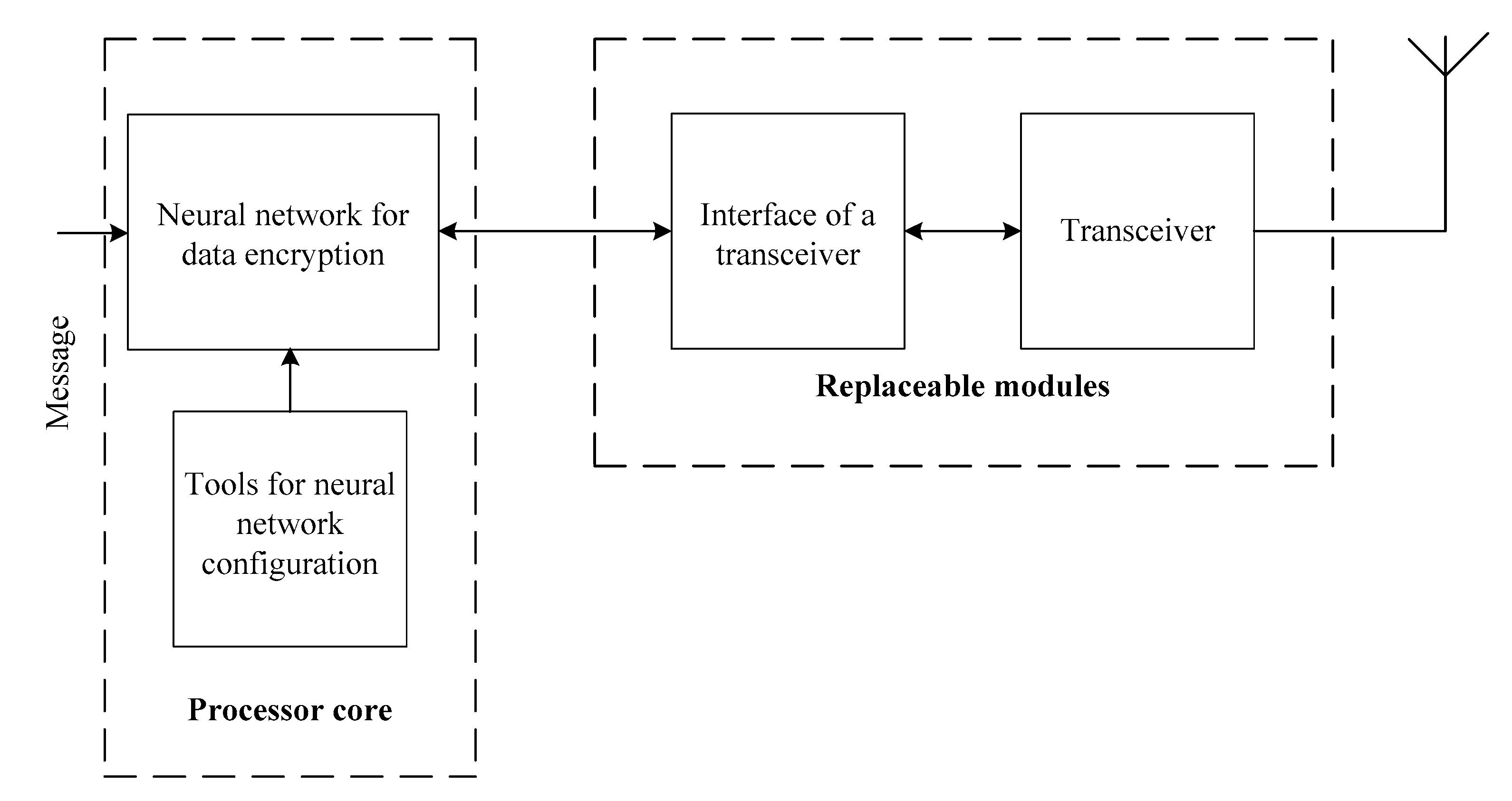

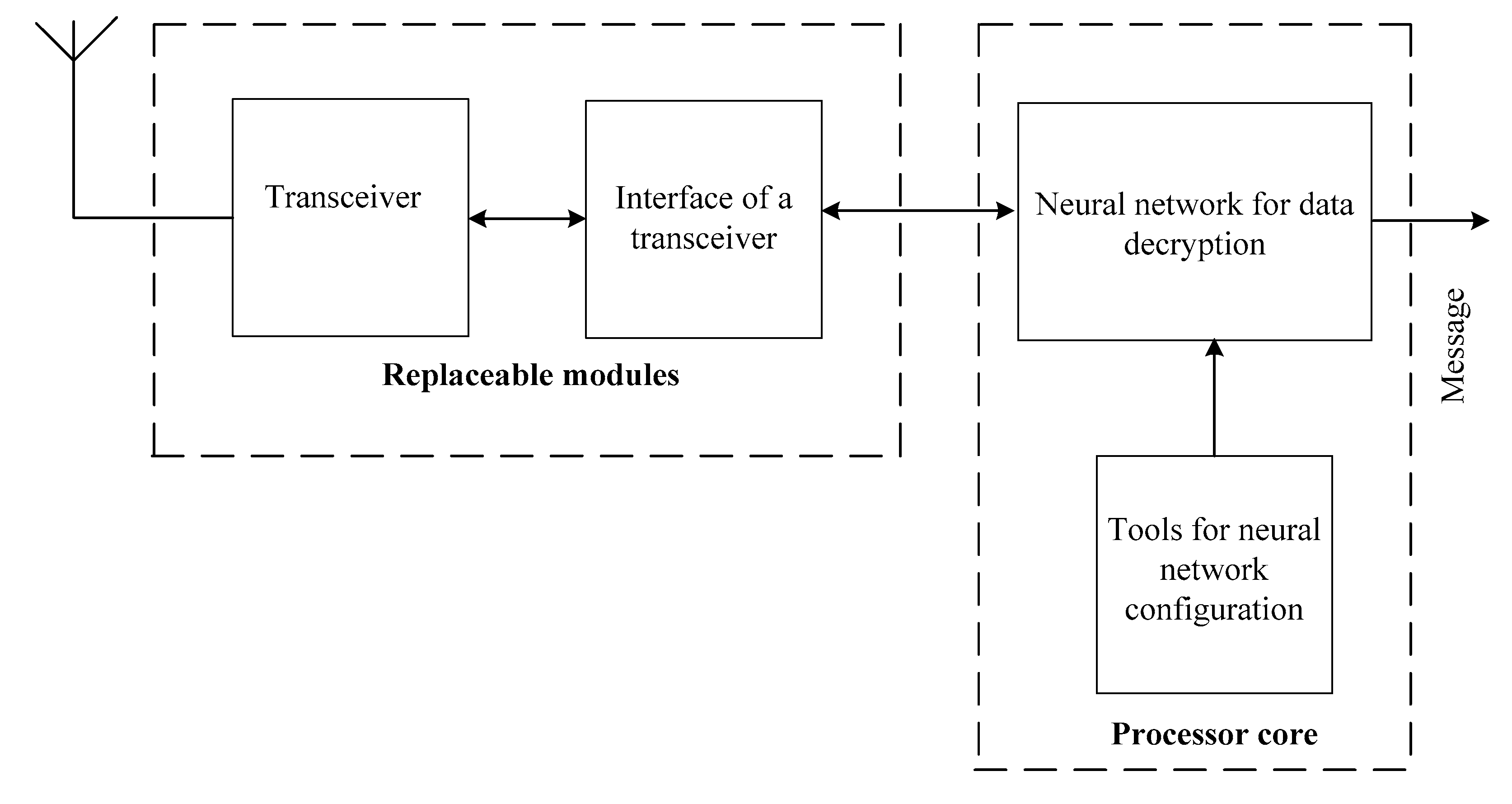

4. The Structure of the System for Neural Network Cryptographic Data Protection and Transferring in Real-Time Mode

- research and development of theoretical foundations of neural network cryptographic data encryption and decryption;

- development of new tabular-algorithmic algorithms and structures for neural network cryptographic data encryption and decryption;

- modern element base, development environment and computer-aided design tools.

- variable composition of the equipment, which provides for a processor core and replaceable modules through which the core adapts to the requirements of a particular application;

- modularity, which involves the development of system components in the form of functionally complete devices;

- pipeline and spatial parallelism in data encryption and decryption;

- openness of the software, which provides opportunities for development and improvement and maximises the use of standard drivers and software;

- specialisation and adaptation of hardware and software to the structure of tabular algorithms for encrypting and decrypting data;

- the programmability of hardware module architecture through the use of programmable logic integrated circuits.
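The principles above refer to tabular algorithms for encryption and decryption. One common way to make a neuron's scalar product table-driven is distributed arithmetic: the sums of weights for every combination of input bits are precomputed, and the product is then assembled from table lookups and shifts. The sketch below illustrates that general technique only. Whether it matches the authors' tabular-algorithmic method is an assumption, and the names `build_table` and `table_dot` are hypothetical.

```python
def build_table(weights):
    """Precompute the sum of weights for every combination of input bits.

    table[a] is the sum of weights[j] over all j whose bit is set in address a.
    """
    n = len(weights)
    table = [0] * (1 << n)
    for address in range(1, 1 << n):
        low = address & -address      # lowest set bit of the address
        j = low.bit_length() - 1      # index of the weight it selects
        table[address] = table[address ^ low] + weights[j]
    return table


def table_dot(table, inputs, bits):
    """Compute sum(w_j * x_j) for unsigned `bits`-bit inputs using lookups only."""
    acc = 0
    for i in range(bits):
        # Gather bit i of every input into one table address.
        address = 0
        for j, x in enumerate(inputs):
            address |= ((x >> i) & 1) << j
        # The looked-up partial sum carries the binary weight 2**i.
        acc += table[address] << i
    return acc


weights = [3, 5, 7]
inputs = [2, 1, 3]
table = build_table(weights)
print(table_dot(table, inputs, bits=2))  # 32
```

The table has 2^N entries for N inputs, so in hardware this approach trades memory for the removal of multipliers. Each bit slice is independent of the others, which is what makes pipelined or spatially parallel processing possible.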

5. Development of the Components of the Onboard System for Neural Network Cryptographic Data Encryption and Decryption

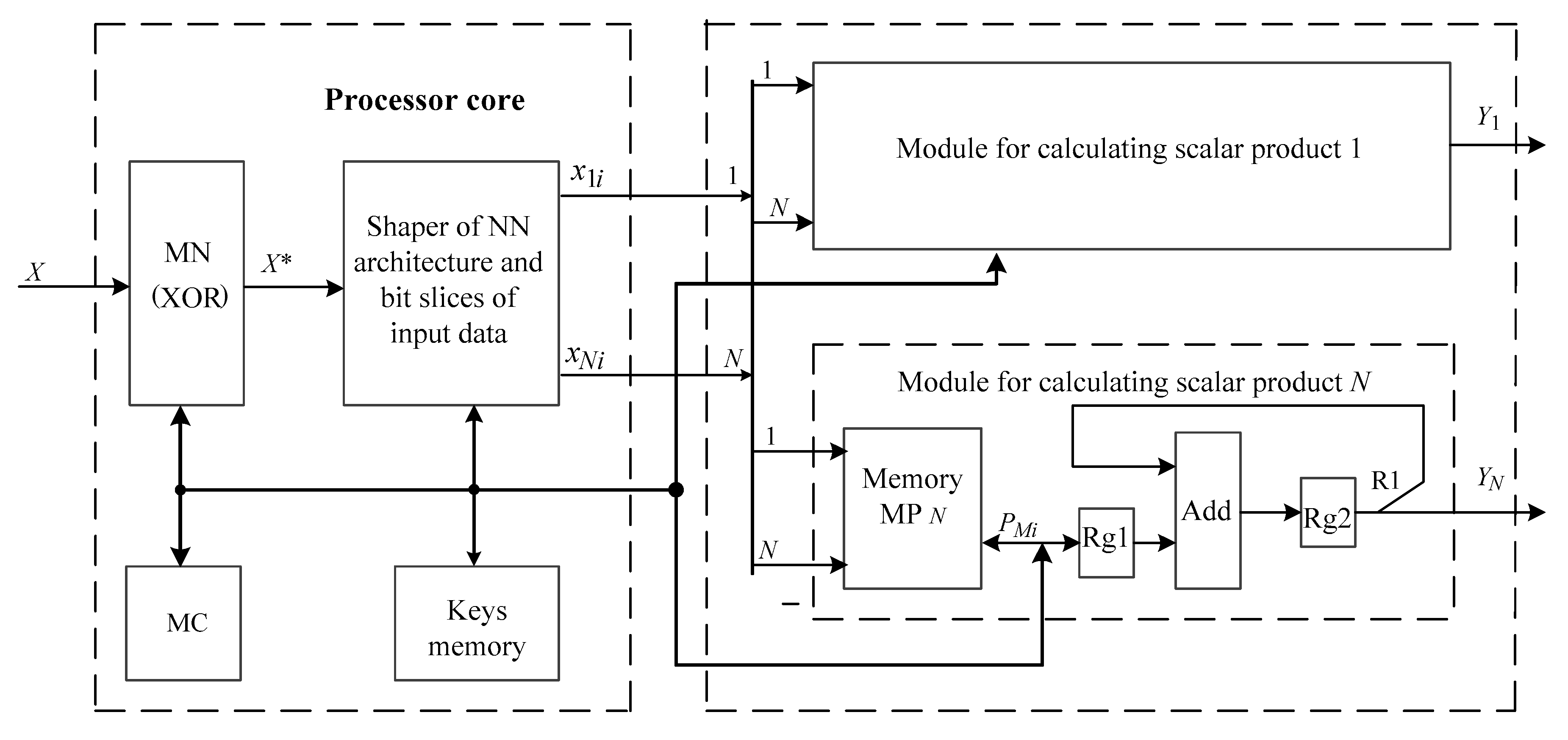

5.1. Development of the Structure of the Components for Neural Network Cryptographic Data Encryption and Decryption

- to develop an algorithm for the onboard system of neural network encryption-decryption of data and present it in the form of a specified flow graph;

- to design the structure of the onboard system for neural network data encryption-decryption with the maximum efficiency of equipment use, taking into account all the limitations and providing real-time data processing;

- to determine the main characteristics of neural elements and carry out their synthesis;

- to choose exchange methods, determine the necessary connections and develop algorithms for exchange between system components;

- to determine the order in time in which the neural network data encryption-decryption processes are executed and develop algorithms for their control.

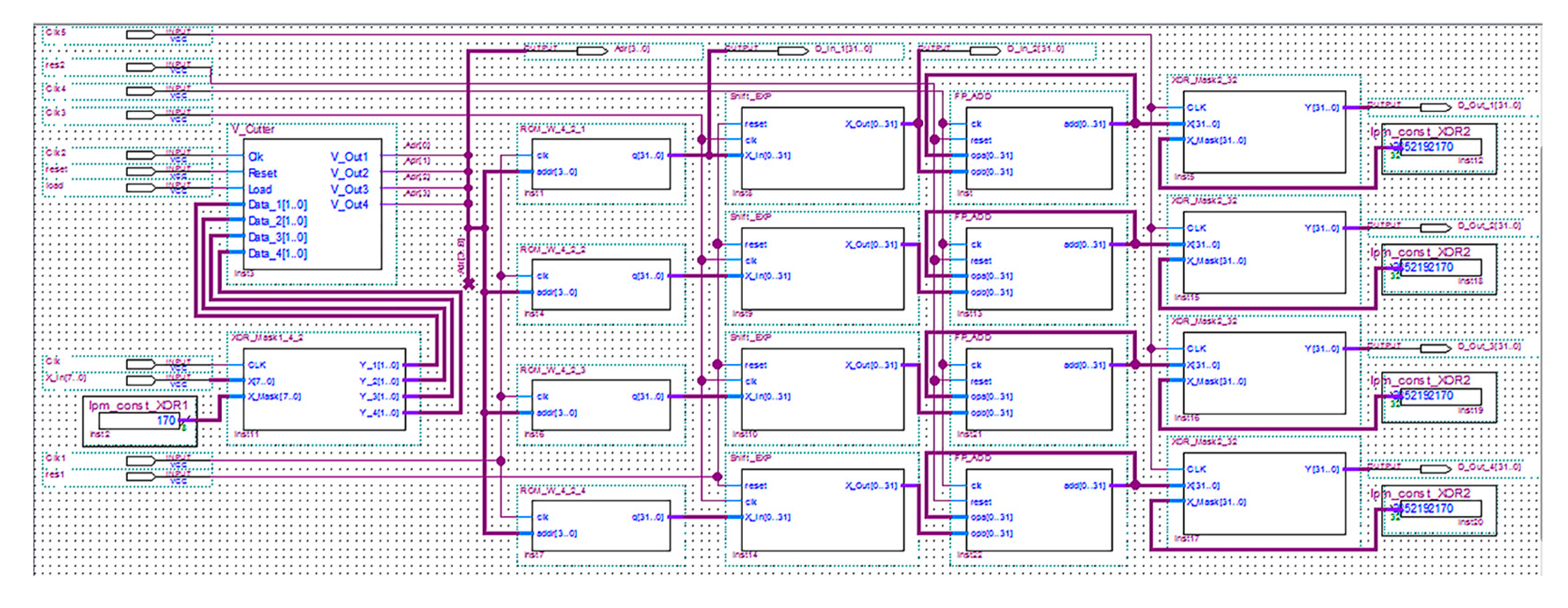

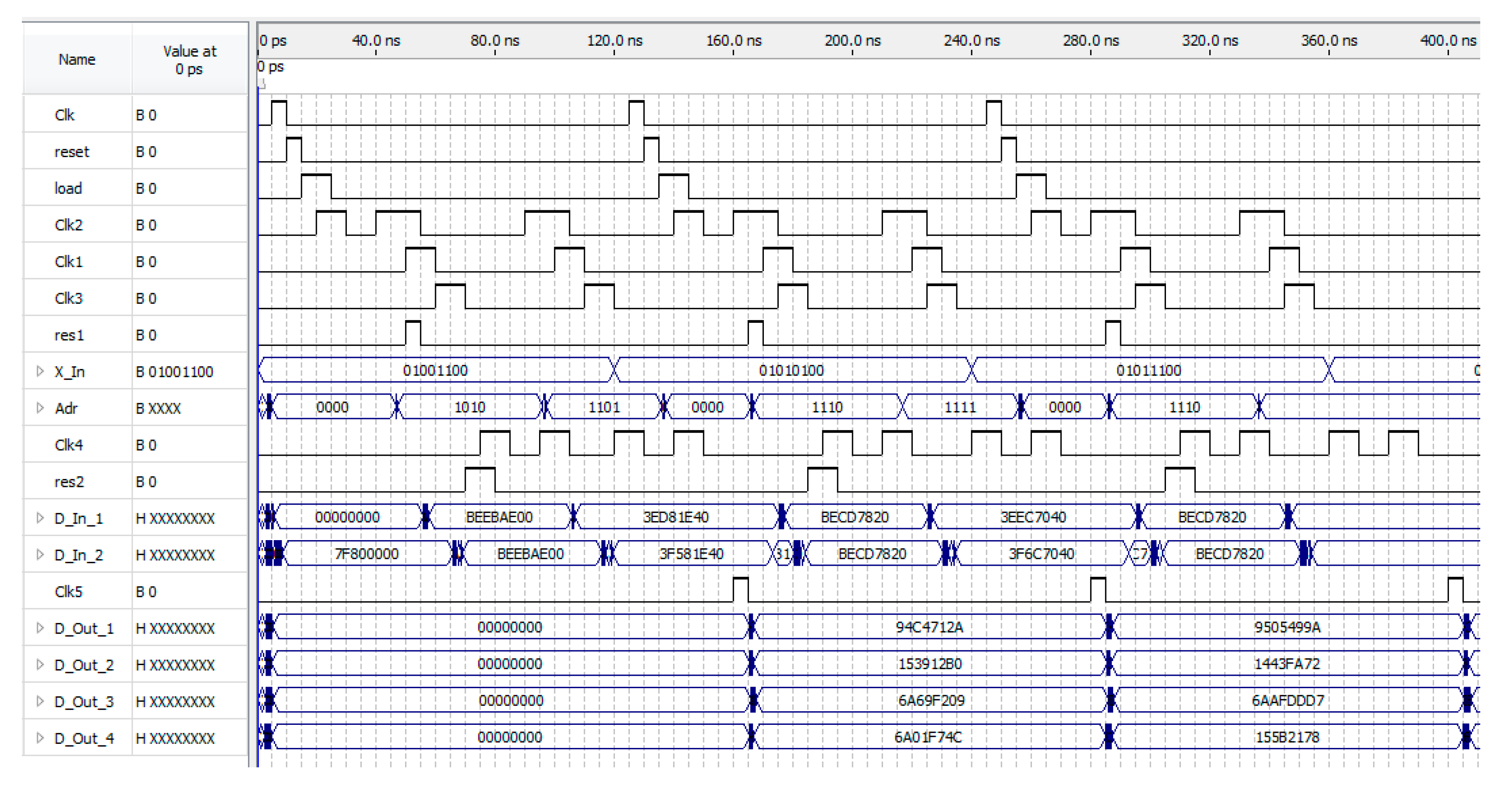

5.2. Implementation of the Specialized Hardware Components of Neural Network Cryptographic Data Encryption on FPGA

6. Conclusions

Author Contributions

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).