Submitted: 14 April 2023

Posted: 14 April 2023


Abstract

Keywords:

1. Introduction

2. Models and Methods

2.1. Quantile Regression
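Quantile regression at level τ replaces the squared-error loss with the check function of Koenker and Bassett (1978), ρ_τ(u) = u(τ − I(u < 0)). The following minimal sketch (function names are illustrative, not taken from the paper) shows that minimizing the summed check loss over a constant q recovers an empirical τ-th quantile. This is the same property that, with q replaced by a linear predictor, defines the regression quantile.

```python
def check_loss(u: float, tau: float) -> float:
    """Check function rho_tau(u) = u * (tau - I(u < 0))."""
    return u * (tau - (1.0 if u < 0 else 0.0))


def empirical_quantile_by_check_loss(ys: list, tau: float) -> float:
    """Return an observation q minimizing sum_i rho_tau(y_i - q).

    The objective is convex and piecewise linear in q with kinks at the
    observations, so a minimizer is attained at one of them; scanning them suffices.
    """
    return min(ys, key=lambda q: sum(check_loss(y - q, tau) for y in ys))


if __name__ == "__main__":
    data = list(range(1, 11))
    print(empirical_quantile_by_check_loss(data, 0.25))  # -> 3
```

Because the loss weights positive residuals by τ and negative residuals by 1 − τ, the minimizer leaves roughly a fraction τ of the observations below it.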

2.2. Bayesian Quantile Regression Based on Spike-and-Slab Lasso

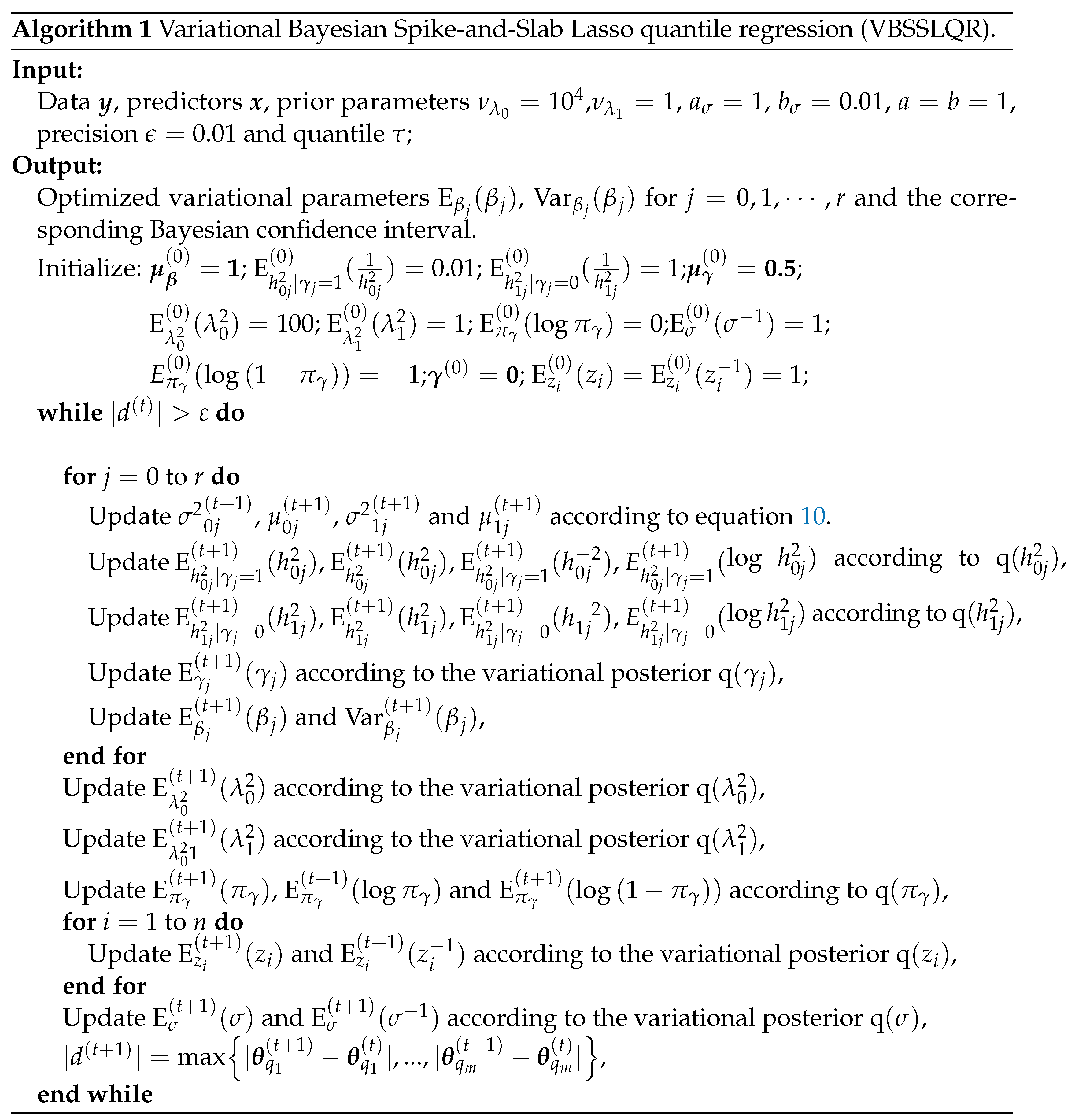
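The spike-and-slab Lasso prior of Ročková (2018) places on each coefficient a two-component mixture of Laplace densities: a "spike" with a large rate λ0 that concentrates mass near zero and a "slab" with a small rate λ1 that allows large effects. The sketch below evaluates this prior and the conditional probability that a coefficient belongs to the slab. The symbols θ (prior slab weight), λ0 and λ1 are assumed notation; the paper's exact formulas are in the figure above and are not reproduced here.

```python
import math


def laplace_density(beta: float, lam: float) -> float:
    """Laplace density psi(beta | lam) = (lam / 2) * exp(-lam * |beta|)."""
    return 0.5 * lam * math.exp(-lam * abs(beta))


def ssl_prior_density(beta: float, theta: float, lam0: float, lam1: float) -> float:
    """Spike-and-slab Lasso prior: theta * slab(lam1) + (1 - theta) * spike(lam0)."""
    return theta * laplace_density(beta, lam1) + (1 - theta) * laplace_density(beta, lam0)


def slab_probability(beta: float, theta: float, lam0: float, lam1: float) -> float:
    """P(beta came from the slab | beta), computed in log space to avoid underflow."""
    log_slab = math.log(theta) + math.log(0.5 * lam1) - lam1 * abs(beta)
    log_spike = math.log(1 - theta) + math.log(0.5 * lam0) - lam0 * abs(beta)
    return 1.0 / (1.0 + math.exp(log_spike - log_slab))


if __name__ == "__main__":
    # Hypothetical settings: spike rate lam0 much larger than slab rate lam1.
    for b in (0.0, 0.05, 0.5, 2.0):
        print(b, ssl_prior_density(b, 0.5, 20.0, 1.0), slab_probability(b, 0.5, 20.0, 1.0))
```

Small coefficients are attributed mostly to the spike and shrunk strongly, while large ones are attributed to the slab and shrunk only mildly; this adaptive shrinkage is what distinguishes the prior from a single Laplace (Bayesian Lasso) prior.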

2.3. Quantile Regression with Spike-and-Slab Lasso Penalty Based on Variational Bayesian Inference

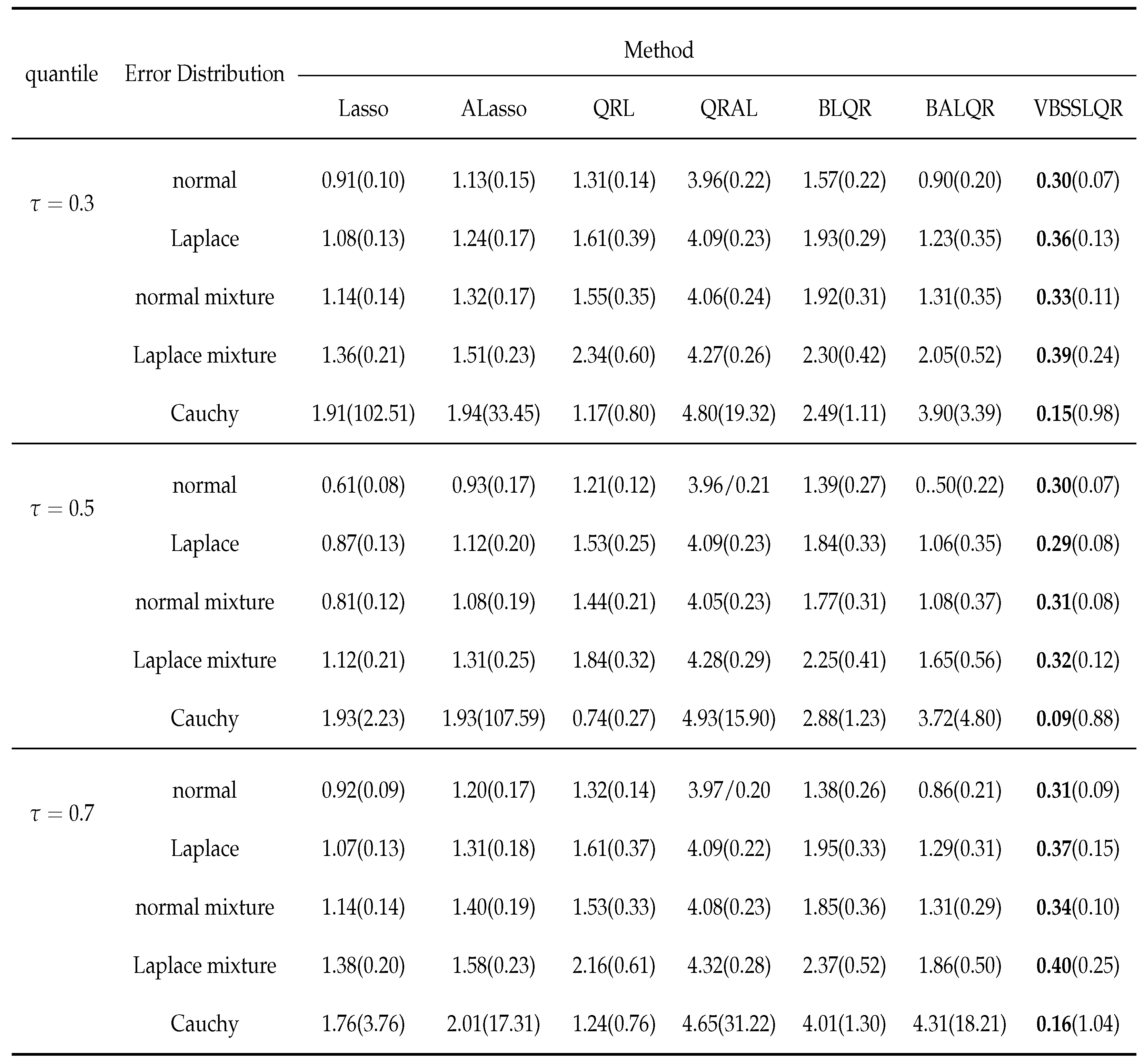

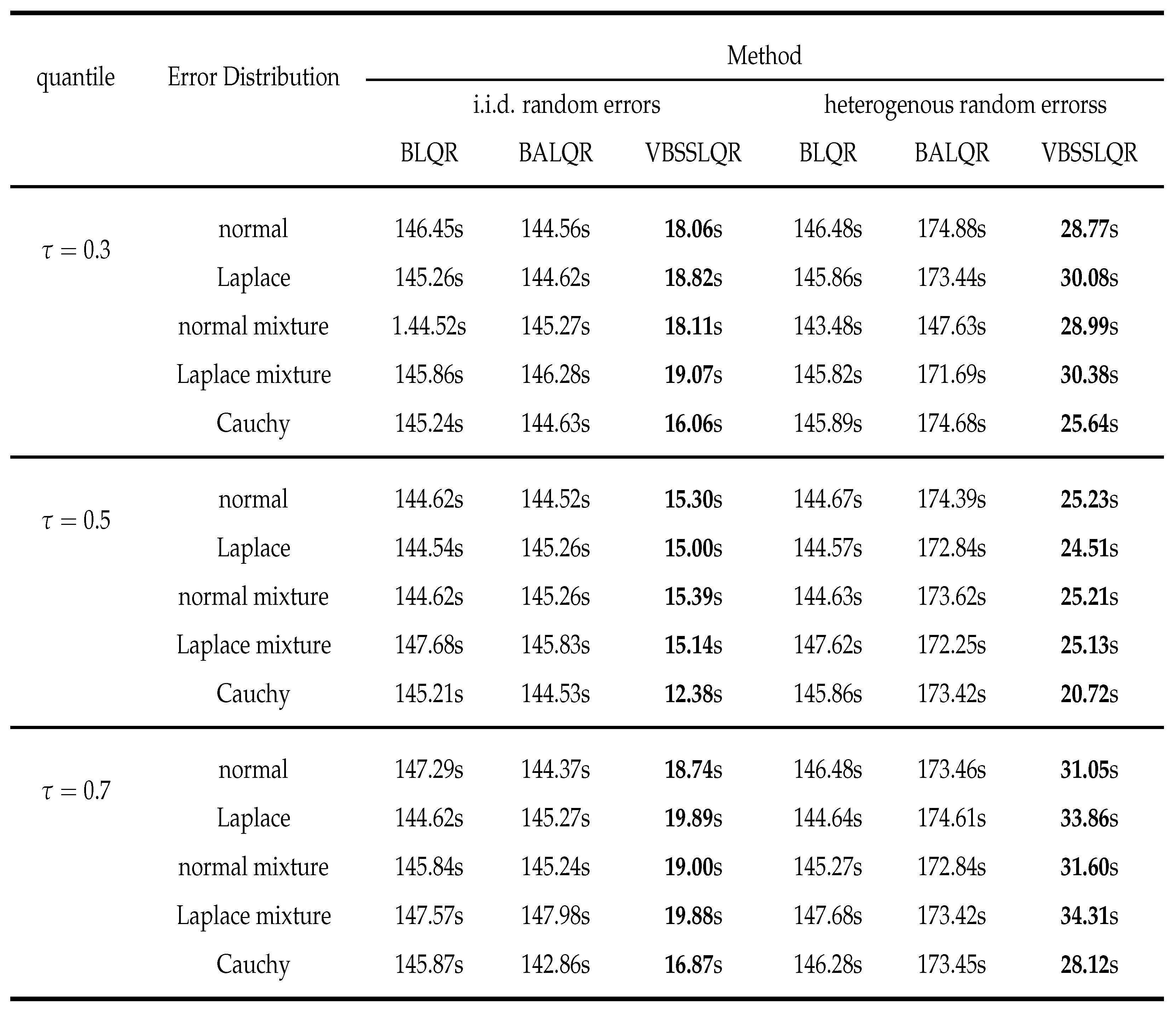
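A device commonly used to obtain conditionally Gaussian, and hence tractable, updates in Bayesian and variational quantile regression is the normal-exponential mixture representation of the asymmetric Laplace distribution (Kozumi and Kobayashi): ε = θ·v + ψ·√(σv)·z, with v exponential with mean σ, z standard normal, θ = (1 − 2τ)/(τ(1 − τ)) and ψ² = 2/(τ(1 − τ)). Whether the derivation in this section and in Appendix A uses exactly this parameterization is an assumption here. The sketch draws errors through the mixture and can be used to check that their τ-th quantile is zero.

```python
import math
import random


def ald_error_via_mixture(tau: float, sigma: float, rng: random.Random) -> float:
    """Draw one asymmetric-Laplace error via the normal-exponential mixture.

    eps = theta * v + psi * sqrt(sigma * v) * z, v ~ Exp(mean sigma), z ~ N(0, 1).
    """
    theta = (1 - 2 * tau) / (tau * (1 - tau))
    psi = math.sqrt(2 / (tau * (1 - tau)))
    v = rng.expovariate(1 / sigma)  # expovariate takes the rate, so the mean is sigma
    z = rng.gauss(0.0, 1.0)
    return theta * v + psi * math.sqrt(sigma * v) * z


if __name__ == "__main__":
    rng = random.Random(0)
    draws = [ald_error_via_mixture(0.25, 1.5, rng) for _ in range(100_000)]
    # The share of non-positive draws should be close to tau = 0.25.
    print(sum(d <= 0 for d in draws) / len(draws))
```

Conditional on the latent v, the response is Gaussian in β, which is what allows closed-form mean-field (variational) updates for the regression coefficients.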

3. Simulation Studies

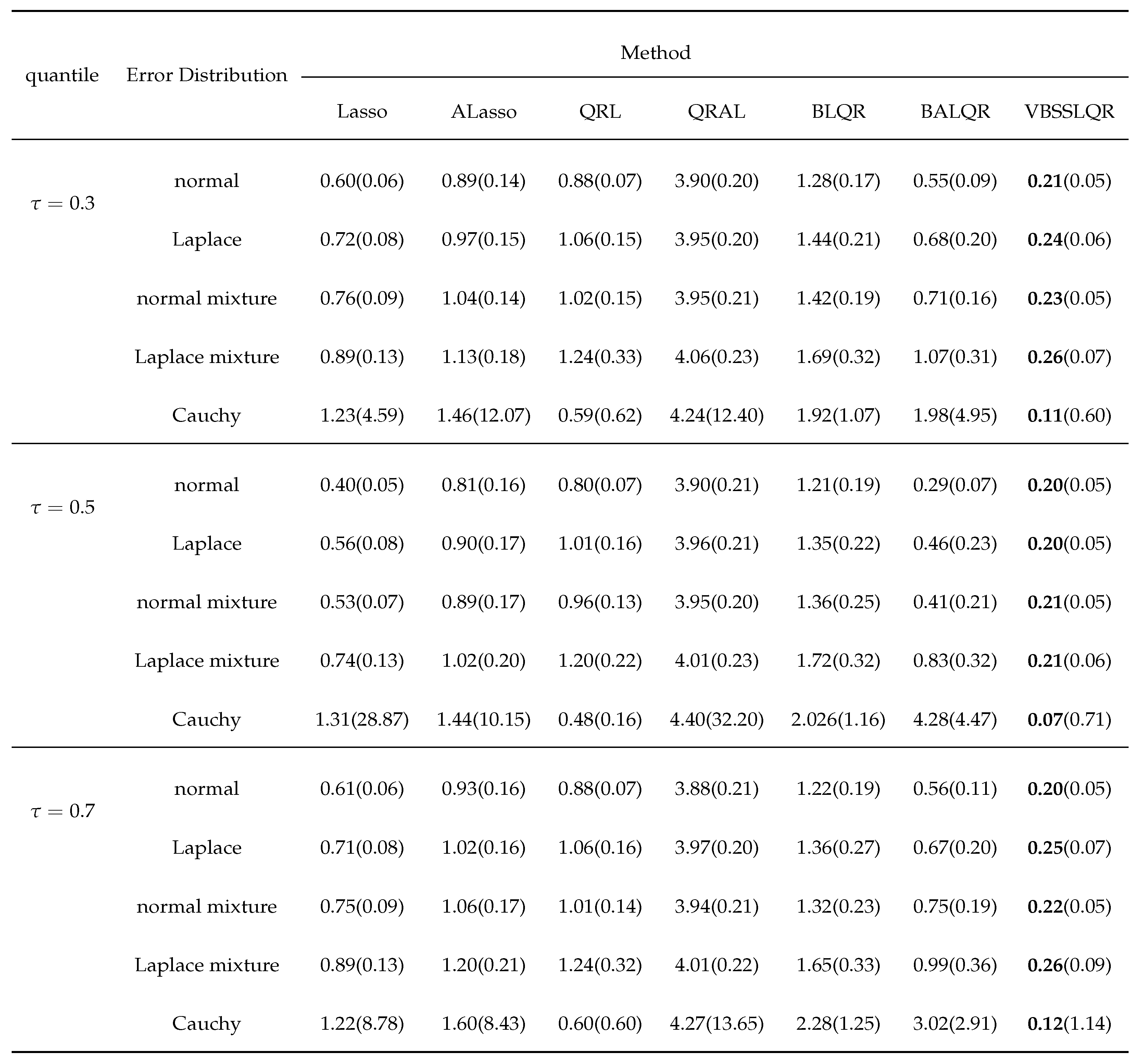

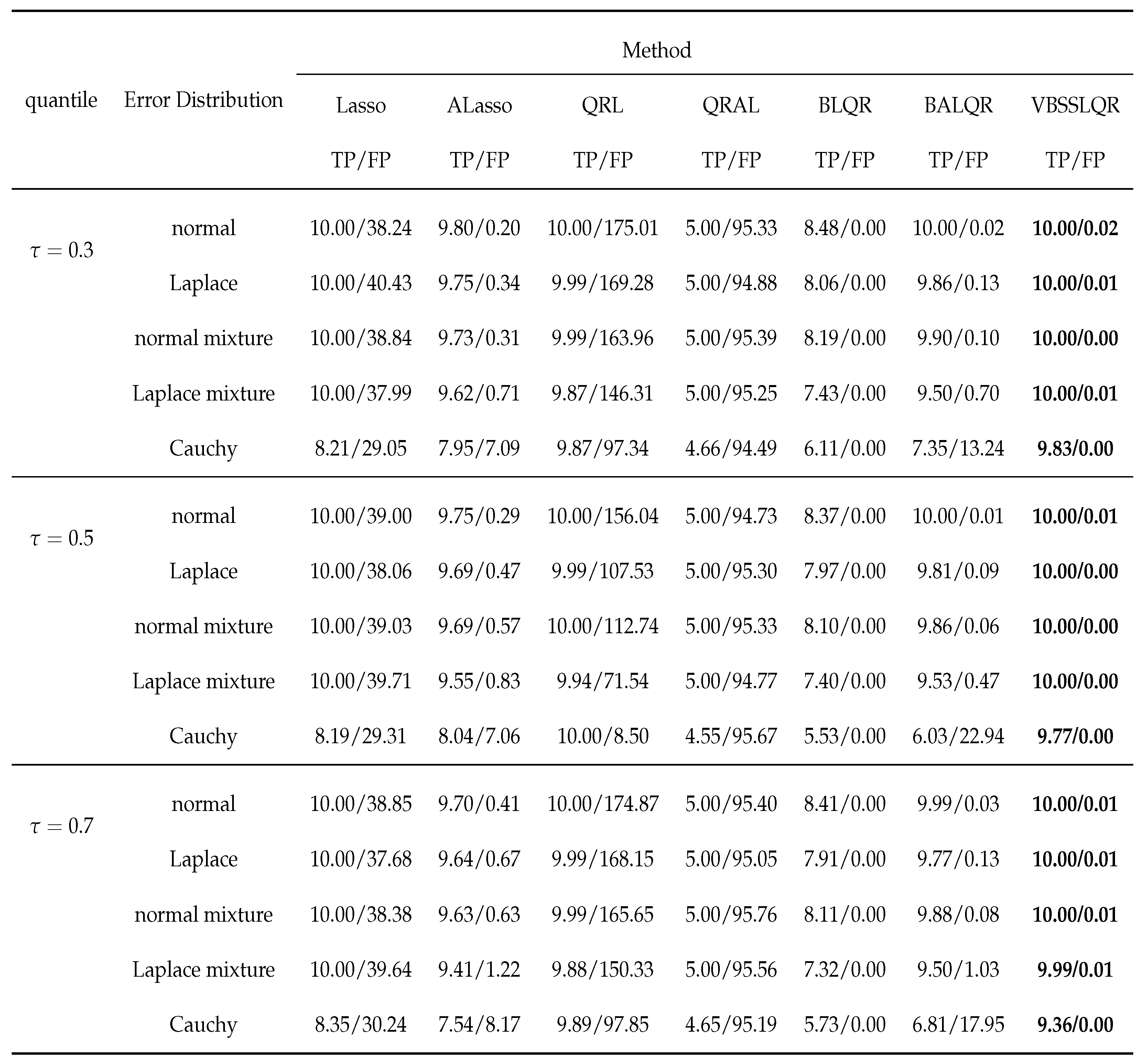

3.1. Independent and Identically Distributed Random Errors

- The error with being the quantile of , for ;

- The error with being the quantile of , where denotes the Laplace distribution with location parameter a and scale parameter b;

- The error with and respectively being the quantile of and ;

- The error with and respectively being the quantile of and ;

- The error with being the quantile of , where denotes the Cauchy distribution with location parameter a and scale parameter b;
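Each error design above appears to subtract the τ-th quantile of the base distribution from the draw, so that the τ-th quantile of the error is zero and the true regression quantile is the linear predictor itself. The sketch below illustrates this centering by inverse-CDF sampling for the Laplace and Cauchy distributions with location a and scale b. The reading of the garbled list items, and the parameter values in the example, are assumptions; the paper's exact distributions and parameters are not recoverable from this page.

```python
import math
import random


def laplace_quantile(p: float, a: float, b: float) -> float:
    """Quantile function of the Laplace distribution with location a and scale b."""
    if p < 0.5:
        return a + b * math.log(2 * p)
    return a - b * math.log(2 * (1 - p))


def cauchy_quantile(p: float, a: float, b: float) -> float:
    """Quantile function of the Cauchy distribution with location a and scale b."""
    return a + b * math.tan(math.pi * (p - 0.5))


def _open_unit(rng: random.Random) -> float:
    """Uniform draw on (0, 1); random() lies in [0, 1), so only 0 must be excluded."""
    u = rng.random()
    while u == 0.0:
        u = rng.random()
    return u


def centered_errors(quantile_fn, tau: float, n: int, rng: random.Random) -> list:
    """Draw e_i = F^{-1}(U_i) and return eps_i = e_i - q_tau, where q_tau = F^{-1}(tau)."""
    q_tau = quantile_fn(tau)
    return [quantile_fn(_open_unit(rng)) - q_tau for _ in range(n)]


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical parameters: standard Laplace and standard Cauchy, tau = 0.3.
    eps = centered_errors(lambda p: cauchy_quantile(p, 0.0, 1.0), 0.3, 10_000, rng)
    print(sum(e <= 0 for e in eps) / len(eps))
```

The Cauchy case has no finite mean, which is why such designs are used to check that quantile-based estimators remain stable under heavy tails.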

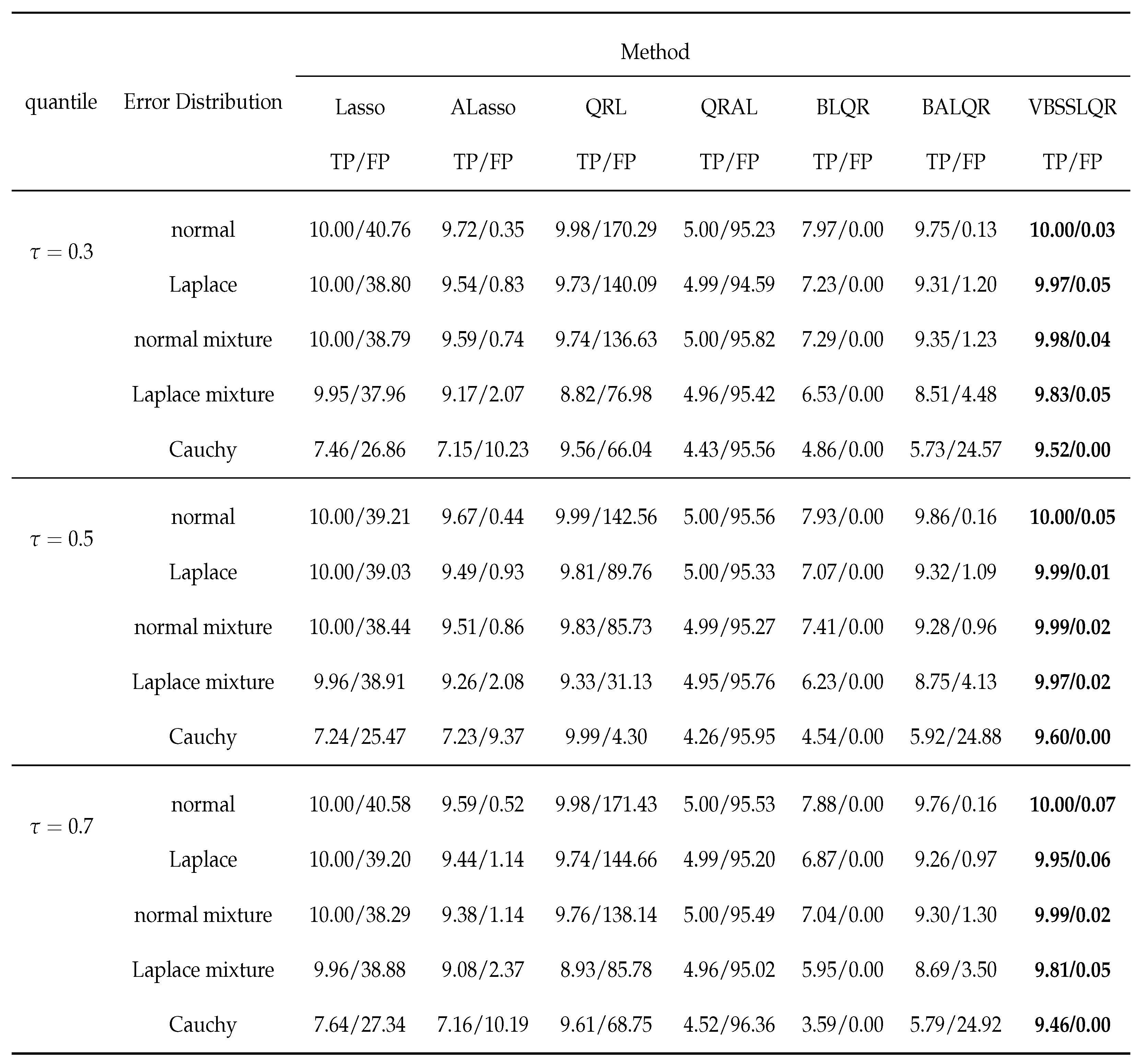

3.2. Heterogeneous Random Errors

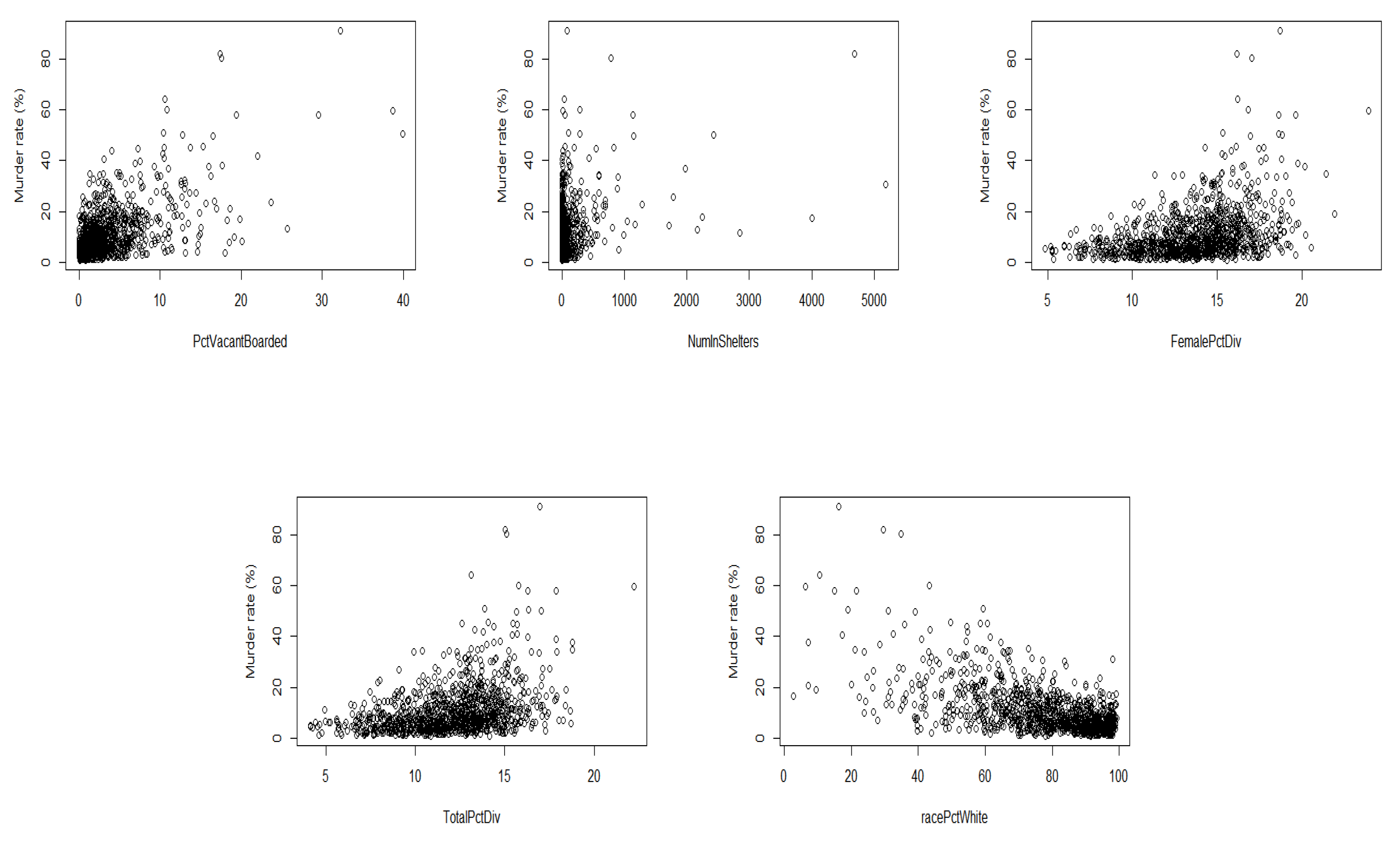

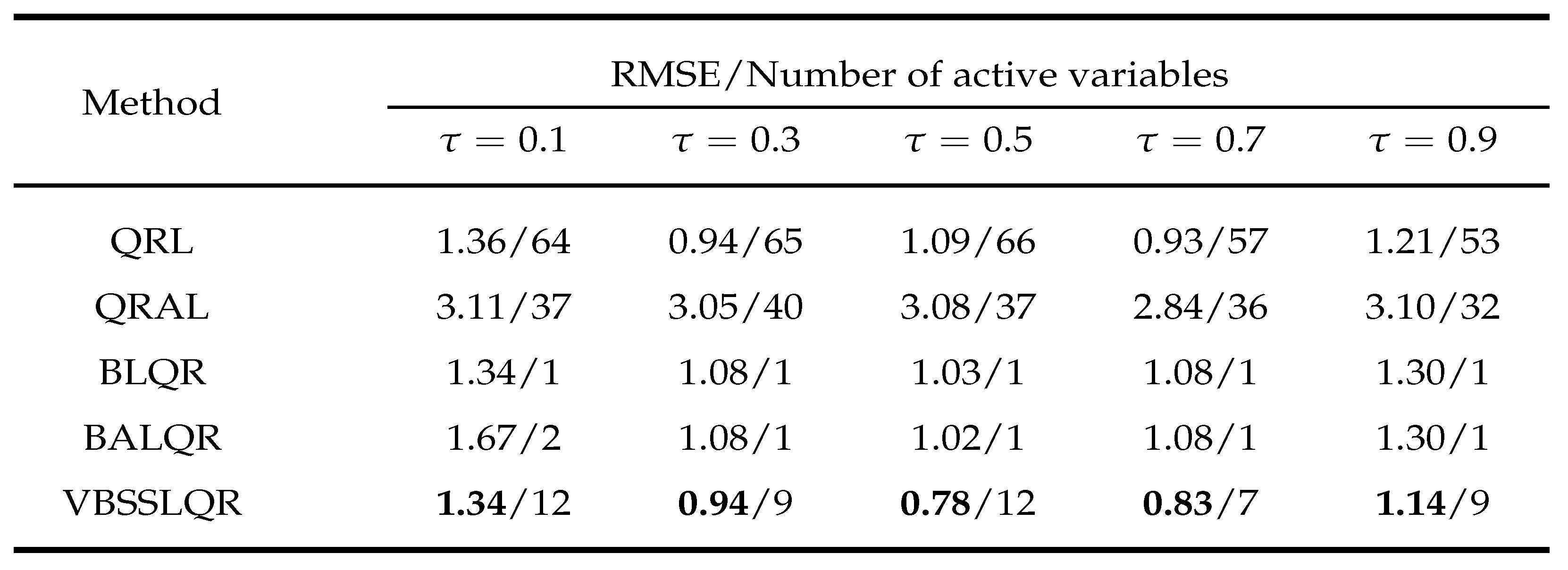

4. Examples

- Delete columns from the data set that contain missing data.

- Delete the observations whose response variable equals 0, because these cases are not of interest here.

- Transform : and letting as the new response variable.

- Convert some qualitative variables into quantitative variables.

- Standardize the covariates.
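The preprocessing steps listed above can be sketched on a list of records as follows. This is a minimal illustration, not the authors' code: missing values are assumed to be stored as None, the response transformation is passed in by the caller because its exact form is not recoverable here, qualitative variables are assumed to be coded as integer labels (the paper does not state its coding), and standardization uses the sample standard deviation.

```python
import statistics


def preprocess(rows, response, transform, categorical_cols=()):
    """Apply the five preprocessing steps to a list of dict records (hypothetical helper)."""
    columns = list(rows[0].keys())
    # Step 1: drop every column that has at least one missing value.
    complete = [c for c in columns if all(r[c] is not None for r in rows)]
    if response not in complete:
        raise ValueError("response column contains missing values")
    # Step 2: drop observations whose response equals 0.
    kept = [{c: r[c] for c in complete} for r in rows if r[response] != 0]
    # Step 3: replace the response by its transformed value.
    for r in kept:
        r[response] = transform(r[response])
    covariates = [c for c in complete if c != response]
    # Step 4 (assumed coding): map each qualitative level to an integer code.
    for c in covariates:
        if c in categorical_cols:
            codes = {lvl: i for i, lvl in enumerate(sorted({r[c] for r in kept}))}
            for r in kept:
                r[c] = float(codes[r[c]])
    # Step 5: standardize each covariate to sample mean 0 and standard deviation 1.
    for c in covariates:
        values = [r[c] for r in kept]
        mu, sd = statistics.mean(values), statistics.stdev(values)
        for r in kept:
            r[c] = (r[c] - mu) / sd if sd > 0 else 0.0
    return kept
```

For example, `preprocess(rows, "y", math.log, categorical_cols=("g",))` would drop any column containing None, remove rows with `y == 0`, log-transform `y` (the log here is only a placeholder transform), code `g` and standardize all covariates.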

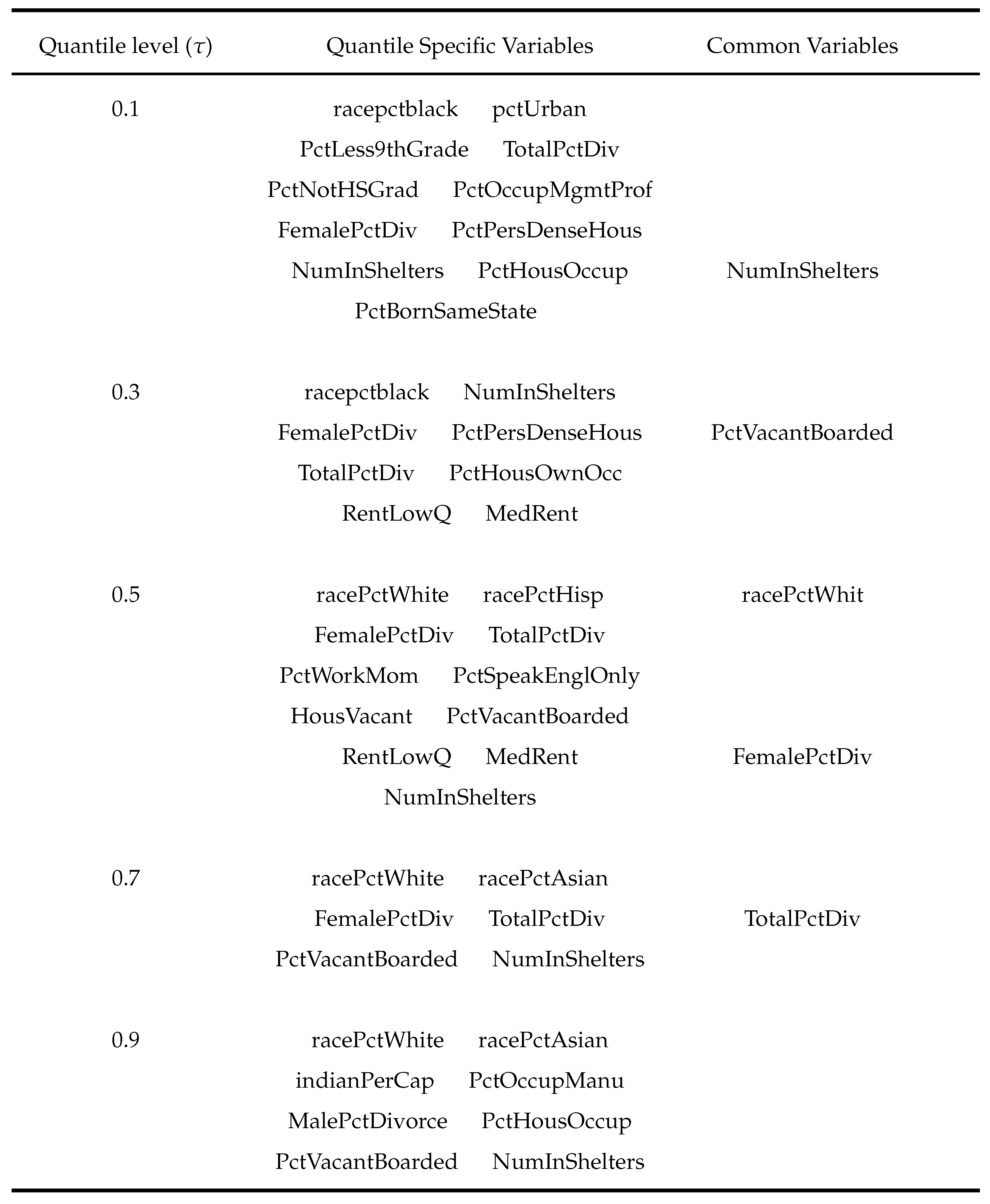

- PctVacantBoarded: percentage of vacant housing units that are boarded up to prevent vandalism.

- NumInShelters: number of people in homeless shelters in the community.

- FemalePctDiv: percentage of females who are divorced.

- TotalPctDiv: percentage of the population who are divorced.

- racePctWhite: percentage of the population who are white.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Deduction

Appendix B. Expectation

Appendix C. Efficiency comparison between Bayesian quantile regression


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).