1. Introduction

The causes of traffic accidents can be assigned to three categories: driver-related, vehicle-related, and environment-related critical causes [1]. The Stanford Center for Internet and Society claims that “ninety percent of motor vehicle crashes are caused at least in part by human error” [2]. Thus, the demand for autonomous vehicles, which aim to eliminate driver-related factors, has been the primary driver of ADAS development. Furthermore, vehicle-related factors are mainly related to the robustness of vehicle components. For example, if the data coming from the sensors is not accurate or reliable, it can corrupt everything else downstream in ADAS. Finally, environment-related factors also raise challenges for road safety. For example, in a dataset of traffic accidents collected in a Chinese city from 2014 to 2016, approximately 30.5% of accidents were related to harsh weather and illumination conditions [3]. For these reasons, automotive manufacturers are placing a very high priority on the development of safety systems. A reliable and safe ADAS can prevent accidents and reduce the risk of injury to vehicle occupants and vulnerable road users. To fulfil this requirement, the sensors must be highly robust and operate in real time while also being able to cope with adverse weather and lighting conditions. As a result, multi-sensor fusion solutions based on Camera, LiDAR and Radar are widely used in higher-level automated driving for a powerful interpretation of the vehicle’s surroundings [4,5,6].

According to road traffic accident severity analyses [3,7,8], late-night and adverse-weather accidents are more fatal than accidents attributed to other factors. Driving at night under low illumination and driving in rainfall proved to be the most important factors, leading to the highest number of accidents, fatalities and injuries. Several state-of-the-art studies have outlined the impact of these environmental factors on sensor performance [9,10,11]. For example, regarding illumination, LiDAR and Radar are active sensors that do not depend on sunlight for perception and measurement, as summarised in [9]. In contrast, the camera is a passive sensor affected by illumination, which brings up the problem of image saturation [12]. The camera is mainly responsible for traffic lane detection, which relies on the difference in grey values between the road surface and lane boundary points; the grayscale gradient therefore varies with the illumination intensity [13]. The study of [14] has demonstrated that artificial illumination is a factor in detection accuracy. Meanwhile, object detection used in ADAS is also sensitive to illumination [15]. Therefore, it is important to build a system with multiple sensors rather than depending on a single sensor.

Unlike illumination, which mainly influences the camera, rainfall is a frequent adverse condition whose negative effects must be taken into account for all vehicle sensors. According to [16], raindrops on the lens can cause noise in the captured image, resulting in poor object recognition performance. Although the wipers remove raindrops to preserve camera perception performance, the sight distance varies with the intensity of the rainfall, to the extent that the ADAS function may be suspended [17]. Furthermore, other studies on the influence of rainfall on the LiDAR used in ADAS report that all tested LiDAR sensors are sensitive to rain. As the rainfall intensity increases, the received laser power and the number of points in the point cloud decrease, which reduces object recognition performance because LiDAR perception depends on the received point cloud data [18,19]. This effect is mostly caused by water absorption in the near-infrared spectral band. Experimental evidence also indicates that rainfall reduces the relative intensity of the point cloud [10]. Although Radar is more environmentally tolerant than LiDAR, it is subject to radio attenuation due to rainfall [20]. Compared to normal conditions, simulation results show that the detection range drops to 45% under heavy rainfall of 150 mm/h [21]. A similar phenomenon is confirmed in the study of [22]. A humid environment can cause a water film to form on the covering radome, which can affect the propagation of electromagnetic waves at microwave frequencies and lead to considerable loss [23]. The second major cause of radar signal attenuation is the interaction of electromagnetic waves with rain in the propagation medium. Several studies have obtained quantitative data demonstrating that precipitation generally affects electromagnetic wave propagation at millimetre-wave frequencies [24,25]. Therefore, rainfall directly degrades the recognition capability of the perception system, which results in the ADAS function being downgraded or disabled.

No sensor is perfect in harsh environmental conditions. Several scientific studies have already reported experimental results and quantitative data for sensors in different environments. However, in most cases, these experiments are carried out under static or indoor conditions [10,11,19,26,27], which makes it difficult to comprehensively evaluate sensor performance based on these laboratory data alone. In the actual road traffic environment, vehicles equipped with sensors are driving dynamically, and ADAS is required to cope with various environmental factors at different speeds. To compensate for these limitations, in this study we design a series of dynamic test cases under different illumination and rainfall conditions. In addition, we replicate more day-to-day traffic scenarios, such as cutting in, following and overtaking, rather than a single longitudinal test. The study statistically evaluates sensor detection data collected on a proving ground for autonomous driving. Thus, a more comprehensive and realistic comparison of experimental data from different sensors in adverse environments can be made, and we discuss the main barriers to the development of ADAS.

The remainder of this paper is organised as follows: The proving ground and test facilities are introduced in Section 2. Section 3 presents the methodology for test case implementation. Section 4 presents the statistics from real sensor measurements and the evaluation of the main automotive sensors. Limitations of sensors for ADAS are discussed in Section 5. Finally, a conclusion is provided in Section 6.

2. Test Facilities

Our measurements were conducted at the DigiTrans test track. This proving ground is designed to replicate realistic driving conditions and provide a controlled environment for testing autonomous driving systems. The test track enables the simulation of various environmental conditions to test the detection performance of the sensors under different scenarios. Further details regarding the test track are provided in Section 2.1.

Section 2.2 introduces the three commonly used sensor types tested in our experiments. These sensors are widely used in the current automotive industry, and their detection performance under adverse weather conditions is of great interest. Section 2.3 introduces the ground truth system used to analyse the sensor detection error. This system allowed us to evaluate the accuracy and reliability of the sensors in detecting the surrounding environment.

2.1. Test Track

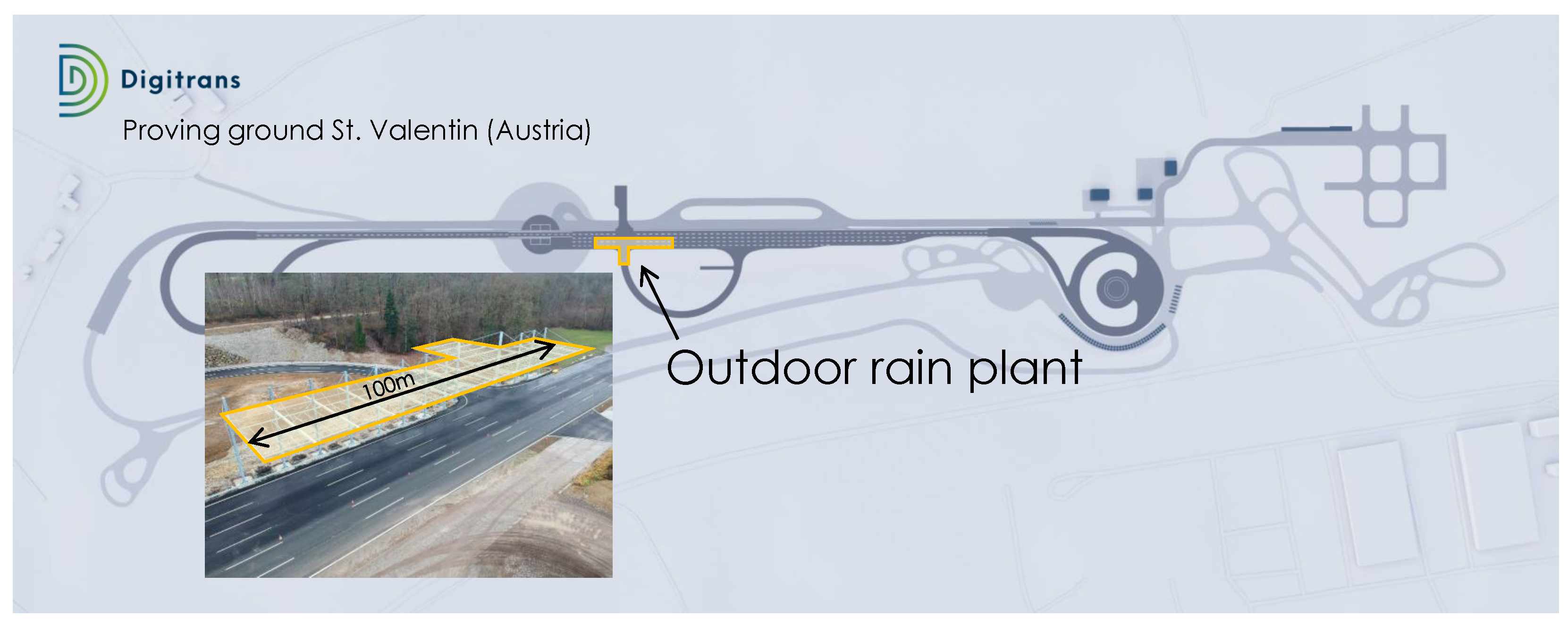

DigiTrans is a test environment located in Austria that collaborates with national and international partners to furnish expertise and testing infrastructure while supporting testing, validation, research, and implementation of automated applications within the realm of municipal services, logistics, and heavy goods transport. DigiTrans expanded the decades-old testing site in St. Valentin (see [28]) in multiple phases to meet the demands of testing automated and autonomous vehicles.

Highly digitalised infrastructure and 5G / C-ITS test site

various asphalt tracks for motorway, rural and urban roads

a roundabout with four junctions and several intersections

high-performance road markings.

Data preparation, data processing, analysis and evaluation

Central synchronous data storage of vehicle and environmental data (Position / Weather / Operational Design Domain (ODD) / Video)

Central control and planning of test scenarios (Positioning / Trajectories)

Central control and synchronous timing for all systems

Nationwide central WLAN infrastructure (4G / 5G) according to military standard

Area-wide accurate GPS positioning and creation of a digital twin as well as high-precision reference maps (UHDmaps®)

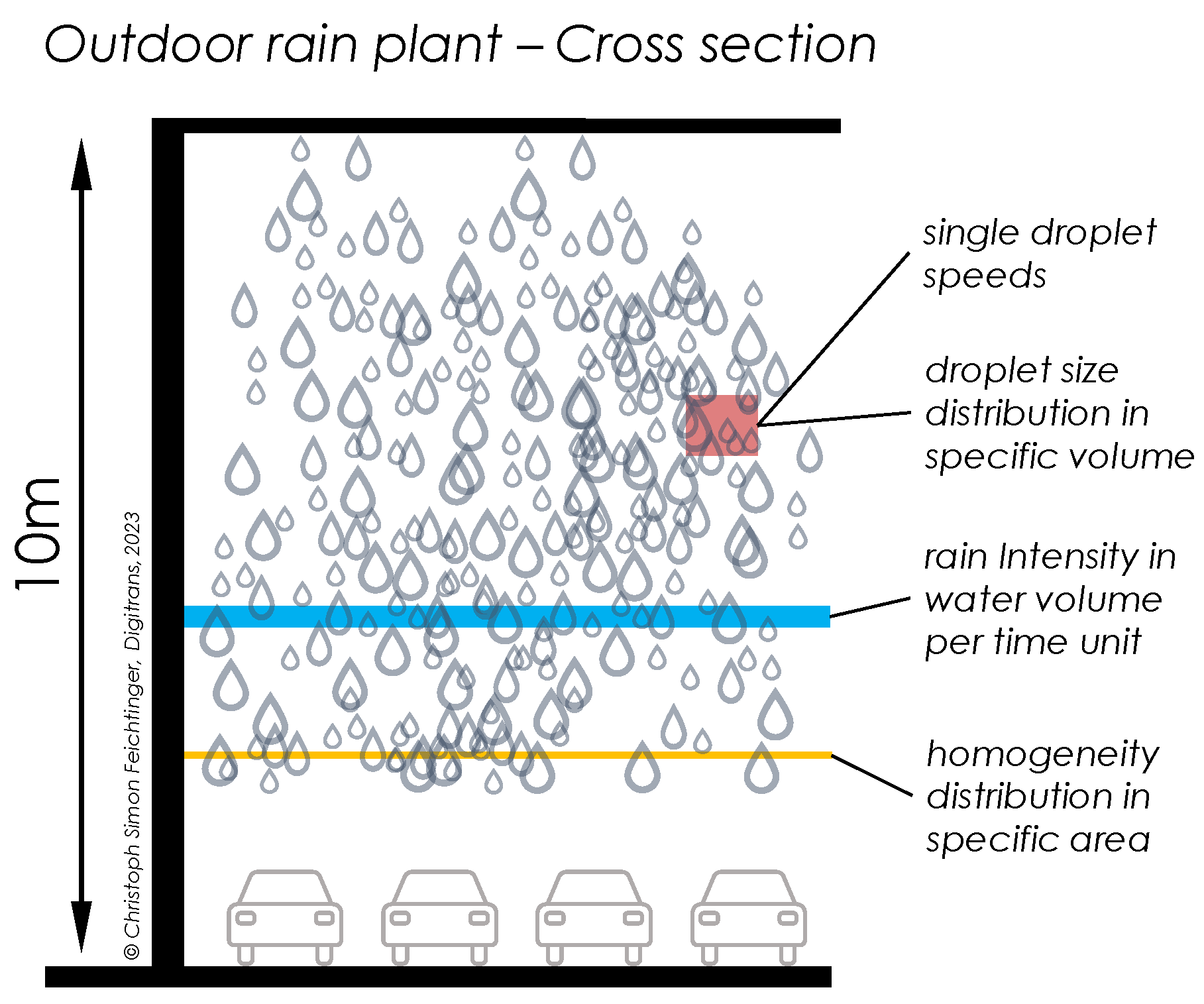

In our study, we focus on the influence of adverse weather conditions on automotive sensors. Testing these technologies in a suitable, realistic and reproducible test environment is necessary to ensure functional capability and to increase the road safety of ADAS and AD systems. To create this test environment, DigiTrans has built a unique outdoor rain plant (see Figure 1) to provide important insights into which natural precipitation conditions affect the performance of optical sensors in detail and how to replicate the characteristics of natural rain.

The outdoor rain plant covers a total length of 100 m with a lane width of 6 m. It is designed and built to replicate natural rain characteristics in a reproducible manner. Figure 2 shows a cross-section of the rain plant in the longitudinal driving direction.

The characteristics of rain are predominantly described by its intensity, homogeneity distribution, droplet size distribution, and droplet velocities. The rain intensity refers to the average amount of water per unit of time (e.g., mm/h). The homogeneity distribution provides information on the spatial distribution of rain within a specific wetted area, with the mean value of the homogeneity distribution being the intensity. Droplet sizes are measured as the mass distribution of different droplet sizes within a defined volume, with typical diameters ranging from 0.5 to 5 mm; the technical information can be found in [29]. It should be mentioned that natural raindrops do not have the classical teardrop shape [30]. The fourth characteristic is droplet speed, which varies according to droplet size and weight-to-drag ratio, as described in [31]. The present study conducted tests under two different rain intensities, namely 10 and 100 mm/h, corresponding to mid- and high-intensity rain based on internationally accepted definitions [32].

2.2. Tested Sensors

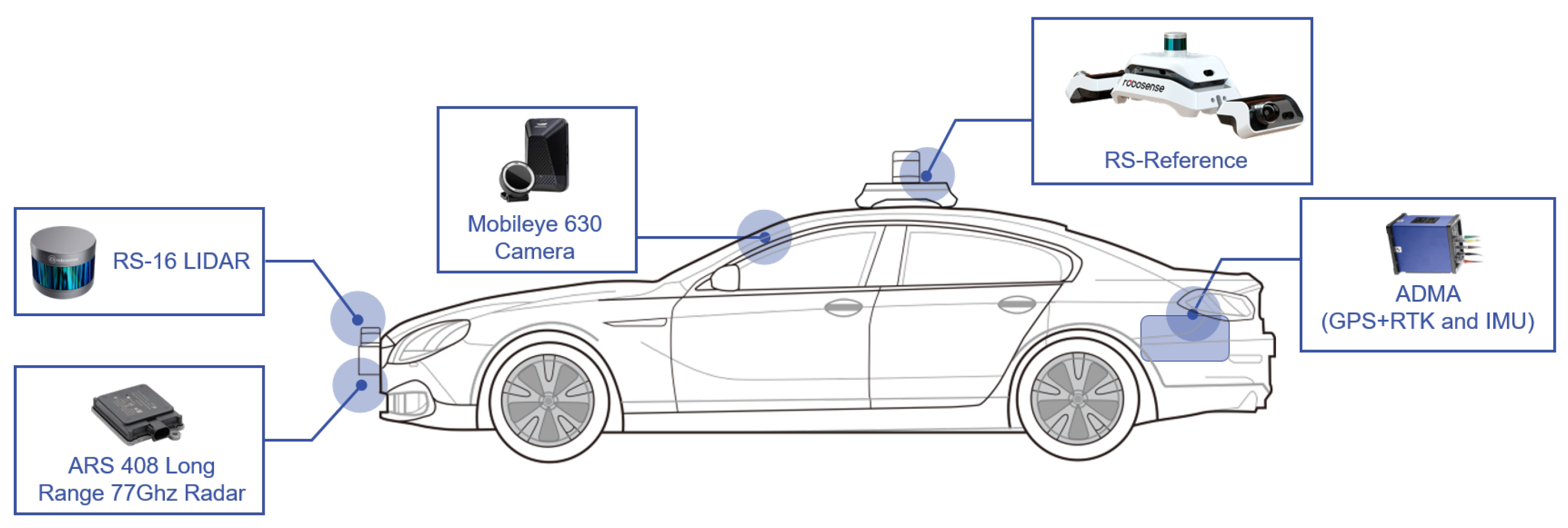

In our research, we primarily focus on the performance of three sensors (Camera, Radar, LiDAR) widely used in the automotive industry. Table 1 shows their specific parameters and performance. In the experiment, sensors and measurement systems were integrated into the measurement vehicle, referred to as the “ego car”, shown in Figure 3. The vehicle’s motion trajectory and dynamics data were recorded using GPS-RTK positioning (using a Novatel OEM-6-RT2 receiver) and the GENESYS Automotive Dynamic Motion Analyser (ADMA). The GPS-RTK system was also used to provide global time synchronization, ensuring that the sensor detection data transmitted on the bus were aligned, which simplified post-processing.

2.3. Ground Truth Definition

As shown in Figure 3, the RoboSense RS-Reference system is mounted on the roof of the vehicle and provides the ground truth data for the measurements. We quantify the detection errors of the sensors against this ground truth data. RS-Reference is a high-precision reference system designed to accurately evaluate the performance of LiDAR, Radar, and Camera systems. It provides a reliable standard for comparison and ensures the accuracy and consistency of test results. The RS-Reference system uses advanced algorithms and sensors to provide precise and accurate data for multiple targets. This allows for high-accuracy detection and tracking of objects, even in challenging environments and conditions. Because multiple vehicles are involved in our test scenarios, inertial measurement systems relying on GPS-RTK are unsuitable, as each such system can only be installed on one target vehicle. In our case, multiple object tracking is essential, which is why we opted for RS-Reference.

To validate the measurement accuracy of the RS-Reference system, we compared it with the ADMA. The latter enables highly accurate positioning with an accuracy of up to 1 cm. Therefore, ADMA is used as a benchmark to verify the accuracy of RS-Reference. The target and ego cars are equipped with ADMA testing equipment during the entire testing process. Additionally, the RS-Reference is installed on the ego car. Because the two systems use different reference frames, the reference points for ADMA and RS-Reference on the ego car are calibrated to the midpoint of the rear axle. On the target car, the ADMA coordinate system was transformed to the centre point of the bumper to serve as a reference, which is consistent with the measurement information provided by the RS-Reference. The resulting accuracy information is summarised in Table 2. RS-Reference does not perform as accurately as ADMA in terms of longitudinal displacement error. However, our test cases require multiple targets to be tracked. With limited testing equipment, RS-Reference can meet multi-target detection needs, which greatly simplifies subsequent post-processing. Therefore, the measurement information from RS-Reference can serve as ground truth to support evaluating other sensors’ performance.

3. Test Methodology

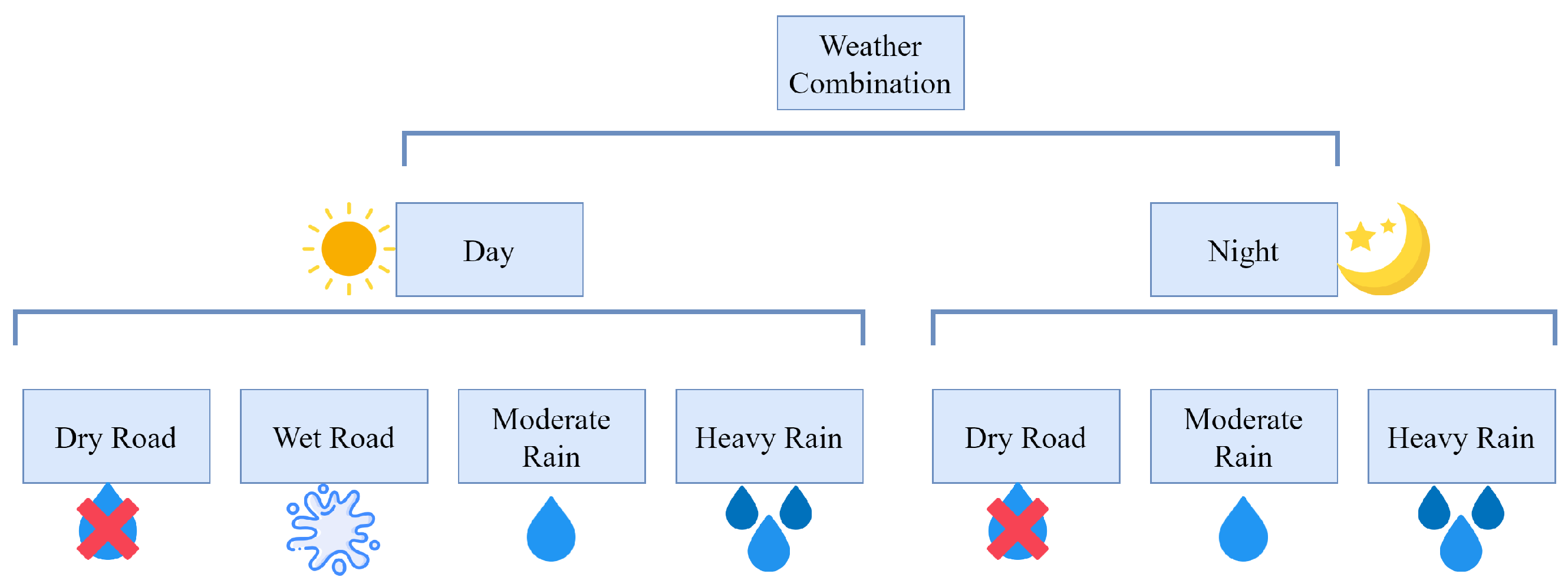

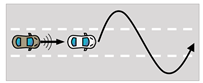

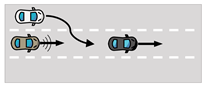

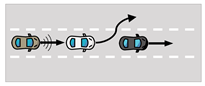

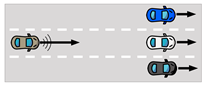

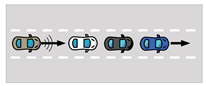

The methodology for test case implementation involved a total of seven different manoeuvres included in the overall manoeuvre matrix. These scenarios were carefully selected to replicate real-life driving situations. To cover a common repertoire of manoeuvres, each test scenario considers both low and high vehicle speeds. One main research question of this project is to assess the influence of different day and weather conditions on sensors such as LiDAR, Radar and Camera. Figure 4 shows the test matrix with all day and weather conditions. The daytime experiments were performed on dry and waterlogged roads as well as under moderate and heavy rain. All nighttime tests were conducted with good artificial lighting, on a dry road and under simulated moderate rain. All scenarios were performed on the dynamic driving track underneath the rain plant of the proving ground (see Section 2), thus ensuring consistent experimental conditions.

Finally, 276 test cases were performed. Each weather condition consists of seven manoeuvres with two variations in speed, and each variation was repeated three times to allow an additional statistical evaluation. To get as close as possible to automated driving behaviour, each vehicle was equipped with Adaptive Cruise Control (ACC), which was activated while driving. Hence, the distance to the front target and the acceleration or deceleration of the vehicle were controlled by ACC. Since the rain plant is only 100 m long, the main part of each manoeuvre had to be performed under the rain simulator. Table 3 illustrates the entire test matrix with pictograms and a short description.

4. Results and Evaluation

After post-processing, we collected 278 valid measurement cases, with each sensor contributing more than 80,000 detection samples, which provide the statistics for the evaluation of the main automotive sensors. In this section, we present the quantitative analysis of the detection performance of each sensor. Since the length of the rain simulator is only 80 m, the collected data is filtered based on GPS location information to ensure that all test results are produced within the coverage area of the rain simulator. For rainfall simulation, we split the measurements into moderate and heavy rain conditions with intensities of 25 mm/h and 100 mm/h, respectively. The artificial illumination condition is also considered in our tests. Additionally, we discuss the influence of detection distance on the results. However, due to the rain simulator’s limited length, the effect of environmental factors on sensor detection is not considered in that part of the results. As introduced in Section 3, the test scenarios are divided into two parts: daytime and nighttime. The daytime tests are further divided into dry and wet road conditions, as well as moderate and heavy rainfall conditions, which are simulated using the rain simulator. For the nighttime tests, the focus is only on dry road conditions and moderate rainfall, given the test conditions. This approach evaluates the systems’ performance under different weather and lighting conditions.
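The GPS-based filtering step described above can be sketched as follows. This is only an illustration, not the authors' implementation: it assumes positions have already been converted to a local metric frame (east/north in metres), and the function name `inside_rain_zone`, the zone endpoints and the half-width are hypothetical.

```python
import numpy as np

def inside_rain_zone(xy, start, end, half_width):
    """Boolean mask of positions inside a rectangle along the start->end axis.

    Assumption: xy, start and end are given in a local metric frame (metres),
    not as raw latitude/longitude.
    """
    xy = np.asarray(xy, dtype=float)
    start = np.asarray(start, dtype=float)
    end = np.asarray(end, dtype=float)
    axis = end - start
    length = np.linalg.norm(axis)
    unit = axis / length
    rel = xy - start
    along = rel @ unit                                    # distance along the lane
    across = rel[:, 0] * unit[1] - rel[:, 1] * unit[0]    # signed lateral offset
    return (along >= 0.0) & (along <= length) & (np.abs(across) <= half_width)

# Illustrative zone: 80 m long, 6 m wide lane (half-width 3 m).
positions = [(-5.0, 0.0), (10.0, 1.0), (50.0, 4.0), (90.0, 0.0)]
mask = inside_rain_zone(positions, start=(0.0, 0.0), end=(80.0, 0.0), half_width=3.0)
```

Only samples for which `mask` is true would then enter the statistics, so that every evaluated detection was recorded under the simulated rain.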

According to the Guide to the Expression of Uncertainty in Measurement [36], the detection error can be defined by Equation (1):

$$\varepsilon = x_{s} - x_{gt} \tag{1}$$

where $x_{s}$ can be regarded as the sensor measurement output and the ground truth is labelled $x_{gt}$. In addition, the measurement error is denoted as $\varepsilon$. After obtaining a series of detection errors for the corresponding sensors, we quantify the Interquartile Range (IQR) of the boxplot and the number of outliers to indicate the detection capability of the sensor.
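A minimal sketch of this evaluation is given below. It assumes the detection error is the sensor output minus the ground truth and that outliers follow the usual boxplot convention (more than 1.5 times the IQR beyond the quartiles); the paper does not state the whisker rule, and the sample values are made up for illustration.

```python
import numpy as np

def detection_error(measured, ground_truth):
    """Per-sample detection error: sensor output minus ground truth."""
    return np.asarray(measured, dtype=float) - np.asarray(ground_truth, dtype=float)

def iqr_and_outliers(errors, whisker=1.5):
    """Return (IQR, number of outliers).

    Assumption: a sample is an outlier if it lies more than whisker * IQR
    below the first quartile or above the third quartile.
    """
    errors = np.asarray(errors, dtype=float)
    q1, q3 = np.percentile(errors, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - whisker * iqr, q3 + whisker * iqr
    n_outliers = int(np.sum((errors < lower) | (errors > upper)))
    return iqr, n_outliers

# Made-up lateral distances in metres, for illustration only.
measured = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 5.0]
truth = [0.0] * 9
errors = detection_error(measured, truth)
iqr, n_out = iqr_and_outliers(errors)
```

A narrow IQR indicates that most detections cluster tightly around the ground truth, while the outlier count captures sporadic large deviations that the IQR alone would hide.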

In this study, our sole focus is on the performance of the different sensors in lateral distance detection. Autonomous vehicles rely on sensors to detect and respond to their surroundings, and lateral distance detection is essential in this process. Accurate lateral detection enables the vehicle to maintain a safe and stable driving path, which is critical to ensuring the safety of passengers and other road users. By continuously monitoring the vehicle’s position in relation to its lane and the surrounding vehicles, an autonomous vehicle can make real-time adjustments to its driving path and speed. This information is also used by the vehicle’s control systems to make decisions about lane changes, merging, and navigating curves and intersections. A typical example is Baidu Apollo, the world’s largest autonomous driving platform, which provides trajectory planning through its EM planner [37]. Therefore, the results for the other sensor outputs are presented in Appendix A, while the following sections focus solely on the performance of the sensors in lateral distance detection.

4.1. Camera

Cameras are currently widely utilised in the field of automotive safety. Hasirlioglu et al. [10] have demonstrated through a series of experiments that intense rainfall causes a loss of information between the camera sensor and the object, which cannot be fully retrieved in real time. Meanwhile, Borkar et al. [14] have shown that the presence of artificial lighting can be a distracting factor that makes lane detection very difficult. In addition, Koschmieder’s model, which describes visibility as inversely proportional to the extinction coefficient of air, has been widely used over the last century [38]. Following [39], this model can be conveniently written as Equation (2):

$$I(x, \lambda) = J(x, \lambda)\, e^{-\beta(\lambda)\, d(x)} + A \left(1 - e^{-\beta(\lambda)\, d(x)}\right) \tag{2}$$

where $x$ denotes the horizontal and vertical coordinates of the pixel, $\lambda$ denotes the wavelength of visible light, $\beta$ is the extinction coefficient of the atmosphere, and $d$ is the scene depth. Furthermore, $I$ and $J$ denote the scene radiance of the observed and clear images, respectively, both depending on $x$ and $\lambda$. The last term, $A$, indicates the lightness of the observed scene. This equation shows that once the illumination and the extinction coefficient alter the image observed by the camera sensor, the estimation of obstacles can become erroneous. These test results can be observed in Figure A5 and Figure A6, which illustrate the camera’s lateral detection results.
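To make the effect of the extinction coefficient concrete, the following sketch evaluates the commonly used form of Koschmieder's model, $I = J\,e^{-\beta d} + A\,(1 - e^{-\beta d})$, for a single wavelength. The radiance values, the depth and the extinction coefficients are made-up illustrative numbers, not measurements from this study.

```python
import numpy as np

def observed_radiance(J, depth, beta, A):
    """Observed radiance I = J * t + A * (1 - t), with t = exp(-beta * depth)."""
    t = np.exp(-beta * np.asarray(depth, dtype=float))
    return np.asarray(J, dtype=float) * t + A * (1.0 - t)

# Hypothetical normalised radiances of a vehicle and the road behind it.
J_vehicle, J_road = 0.8, 0.3
A = 0.6          # assumed lightness of the scene
depth = 50.0     # scene depth in metres

for beta in (0.0, 0.01, 0.05):  # extinction coefficient in 1/m
    I_vehicle = observed_radiance(J_vehicle, depth, beta, A)
    I_road = observed_radiance(J_road, depth, beta, A)
    print(f"beta={beta:.2f} 1/m  contrast={float(I_vehicle - I_road):.3f}")
```

In this form the contrast between two surfaces at the same depth shrinks by the factor $e^{-\beta d}$, which is one way to see why a larger extinction coefficient makes objects harder to separate from their background.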

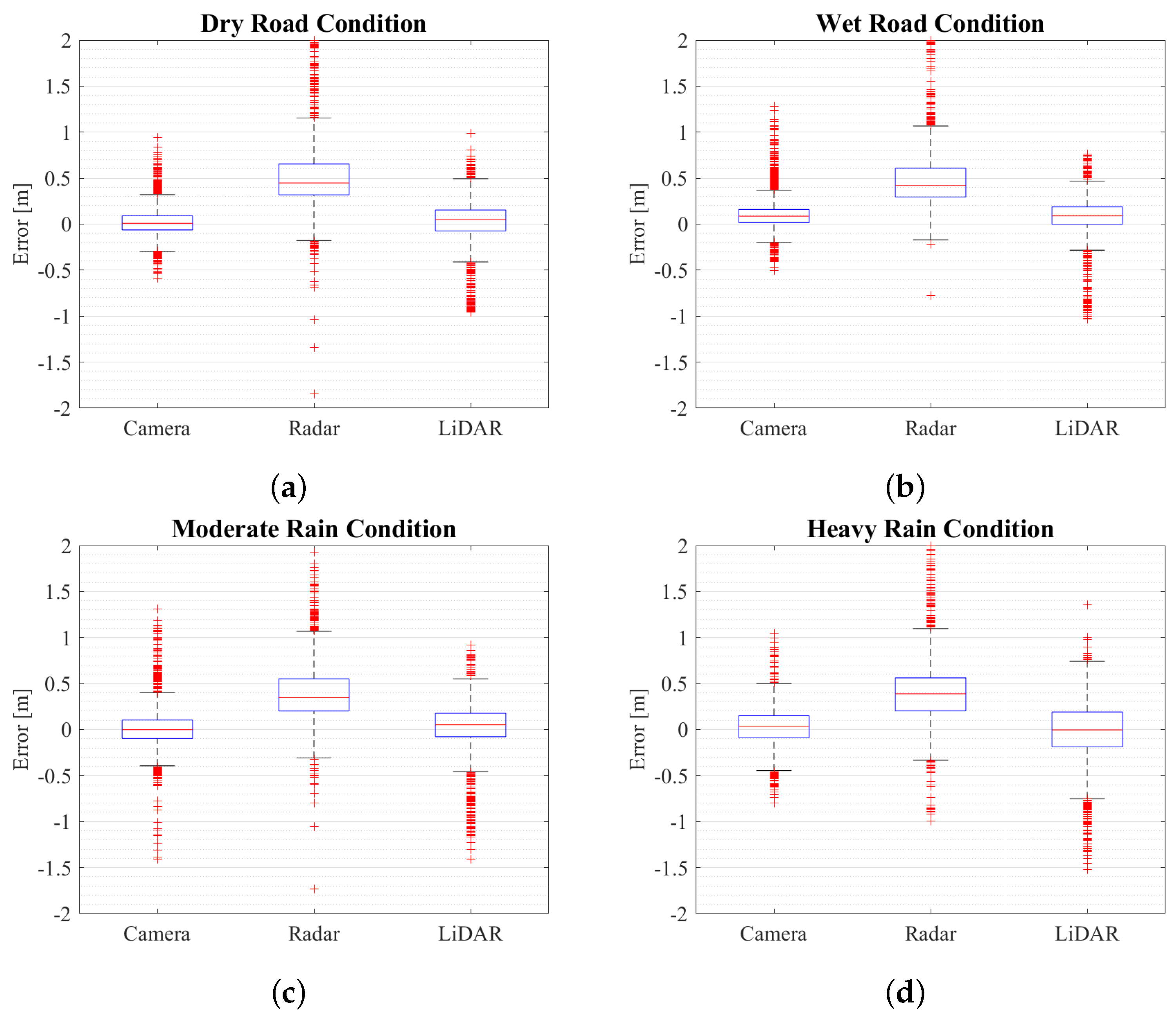

In general, having the wipers activated during rainfall helps to maintain a clear view for the camera, making the detection more stable. However, the camera’s detection performance is still affected by other environmental factors, such as the intensity of the rain and the level of ambient light. These factors can degrade the quality of the captured image, making it more difficult for the system to detect and track objects accurately. As shown in Table 4, the IQR increases as the rainfall increases. Specifically, the number of outliers increases by at least 23% compared to the dry road conditions. It is also evident that the camera is susceptible to illumination. In principle, the reflectivity of the car body material is higher under sufficient lighting, so the extinction coefficient decreases, and good contrast with the surrounding environment is conducive to recognition. Although artificial lighting provides the camera with enough light at night to capture clear images and perform detection, there is a significant increase in outliers in this scenario. For dry road conditions, the number of detected outliers is 55% higher at night than during the day. In the case of moderate rainfall, the number of outliers increased by 41.7%. That is, the high uncertainty of detection at night leads to a decrease in average accuracy, as seen in Table 7.

Comparing Figure A5 and Figure A6, the camera detection error with the smallest range of outliers occurs during the day on a dry road. In addition, nighttime conditions with moderate rainfall are a challenge for camera detection, where the range of outliers is significantly increased in Figure A6b. The camera is a passive sensor and, like most computer vision systems, relies on clearly visible features in its field of view to detect and track objects. On a waterlogged road, the water can cause reflections and glare that degrade the quality of the captured image and make it difficult for the system to process the information accurately. As a result, the average error is greatest in this condition, as illustrated in Table 7.

Finally,

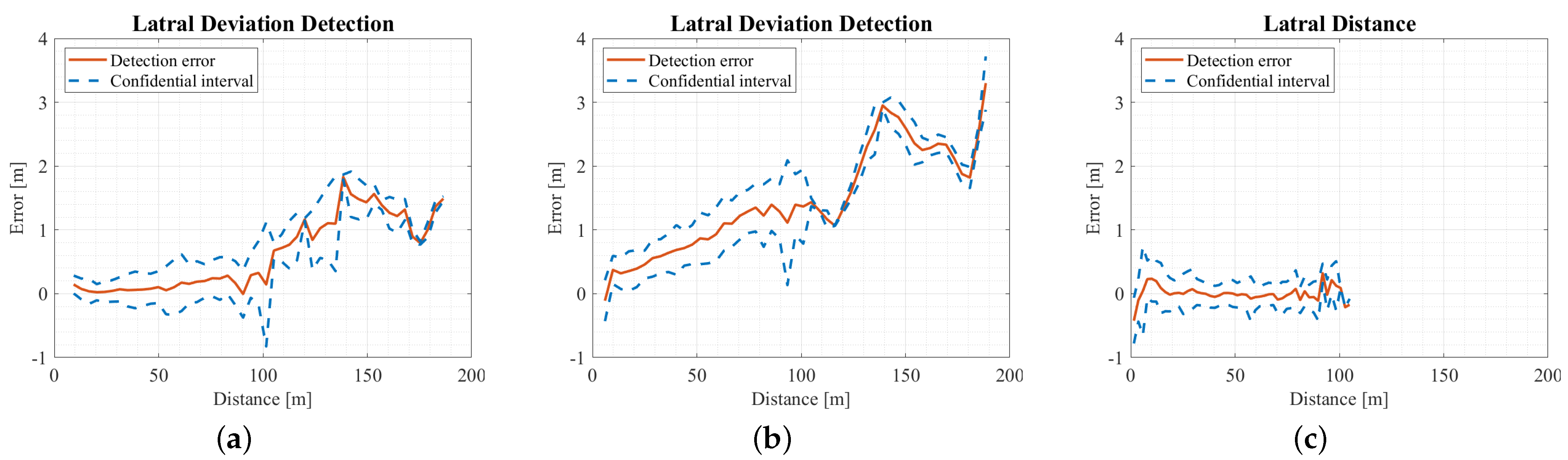

Figure 7 demonstrates the influence of distance on the detection results, and it can be clearly seen that the effective detection range of the camera is about 100m. The error is increasing for detection results beyond this range, and the confidence interval is also growing, which makes the detection results unreliable.

4.2. Radar

In the last decade, radar-based ADAS has been widely used by almost every car manufacturer in the world. However, in the millimetre-wave spectrum, adverse weather conditions such as rain, snow, fog and hail can have a significant impact on radar performance [21]. Moreover, the study of [10] has demonstrated that different rain intensities directly affect the capability of an obstacle to reflect an echo signal in the direction of a radar receiver, thus affecting the maximum detectable range, target detectability and tracking stability. Rain effects on mm-wave radar can therefore be classified as attenuation and backscatter. The mathematical models for the attenuation and backscattering effects of rain are given by Equations (3) and (4), respectively:

$$P_r = \frac{P_t\, G^2 \lambda^2 \sigma_t}{(4\pi)^3 r^4}\, V^4 \exp(-2\gamma r) \tag{3}$$

$$\frac{P_r}{P_b} = \frac{8\, \sigma_t}{\tau c\, \theta_{BW}^2\, \pi r^2 \sigma_i} \tag{4}$$

where $r$ is the distance between the Radar sensor and the target obstacle, $\lambda$ is the Radar wavelength, $P_t$ is the transmission power, $G$ denotes the antenna gain, and $\sigma_t$ denotes the Radar cross-section of the target. The rain attenuation coefficient $\gamma$ is determined by the rainfall rate, and the multipath coefficient is $V$. Equation (3) shows that the received signal power $P_r$ depends on the rain attenuation, the path loss and the multipath coefficient.
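The attenuation effect can be illustrated with a short sketch. It assumes the standard radar range equation extended by a multipath factor $V^4$ and a two-way rain attenuation term $\exp(-2\gamma r)$, with $\gamma$ expressed in 1/m; all numerical values below (power, gain, wavelength, cross-section, attenuation coefficient) are illustrative assumptions rather than parameters of the tested sensor.

```python
import math

def received_power(p_t, gain, wavelength, rcs, r, gamma=0.0, v=1.0):
    """Received power for a target at range r (metres).

    Assumed form: radar range equation multiplied by the multipath factor v**4
    and the two-way rain attenuation exp(-2 * gamma * r), gamma in 1/m.
    """
    free_space = p_t * gain**2 * wavelength**2 * rcs / ((4.0 * math.pi) ** 3 * r**4)
    return free_space * v**4 * math.exp(-2.0 * gamma * r)

# Illustrative parameters: 3.9 mm wavelength (roughly a 77 GHz radar).
params = dict(p_t=1.0, gain=100.0, wavelength=0.0039, rcs=10.0)

clear = received_power(r=100.0, **params)
rain = received_power(r=100.0, gamma=0.001, **params)   # hypothetical gamma
loss_db = 10.0 * math.log10(clear / rain)
print(f"two-way rain loss at 100 m: {loss_db:.2f} dB")
```

Because the attenuation term grows exponentially with range while the free-space term already falls with $r^4$, distant targets are the first to drop below the detection threshold in rain.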

| Environmental condition | IQR (m) | Number of outliers |
| --- | --- | --- |
| Day-Dry | 0.334 | 113 |
| Day-Wet Road | 0.311 | 74 |
| Day-Moderate Rain | 0.350 | 109 |
| Day-Heavy Rain | 0.359 | 135 |
| Night-Dry Road | 0.320 | 102 |
| Night-Moderate Rain | 0.328 | 196 |

The relationship between the power intensity $P_r$ of the target signal and that of the backscatter signal $P_b$ is characterised by Equation (4), where $\tau$ is the pulse duration, $\theta_{BW}$ denotes the antenna beamwidth, and $c$ is the speed of light. For reliable detection, it is essential to keep the ratio of these two quantities above a certain threshold. However, the rain backscatter coefficient $\sigma_i$ is highly variable as a function of the drop-size distribution. Therefore, according to Equation (4), rain causes the Radar to consume more energy and produces greater backscatter interference. Rainwater can also form a water film on the radar’s housing and thus affect detection, which can be observed in Table 5. Although the difference in IQR values between rainy and clear weather conditions is insignificant, the number of outliers increases during heavy rainfall. Overall, the radar is not sensitive to environmental factors. In particular, the illumination level does not affect the radar’s detection performance, and the IQR values at night remain consistent with those during the day. Figure A5 and Figure A6 demonstrate this behaviour: the ranges of the IQR and outliers are basically the same, but when the rainfall intensity is relatively high, the rain backscatter interference leads to more outliers in Figure 6b.

However, the average error of the Radar’s lateral detection results is larger than that of the camera and LiDAR, as shown in Table 7. This is because Radar emits and receives radio waves, which are less focused and have a wider beam width than the laser used by LiDAR. This results in a lower spatial resolution for Radar, posing a challenge for lateral detection. LiDAR uses a laser to construct a high-resolution 3D map of the surrounding environment, while the camera captures high-resolution images that can be processed using advanced algorithms to detect objects in the scene. This makes LiDAR and camera systems more suitable for lateral detection than radar systems. Finally, compared with the camera and LiDAR in Figure 7, Radar has the farthest detection distance of approximately 200 m, but the error increases as the distance increases.

4.3. LiDAR

In recent years, automotive LiDAR scanners have become essential sensors for the development of autonomous cars. A large number of algorithms have been developed around the 3D point cloud generated by LiDAR for object detection, tracking, environmental mapping or localisation. However, LiDAR’s performance is more susceptible to the effects of adverse weather. The studies of [19,40] tested the performance of various LiDARs in a well-controlled fog and rain facility and verified that as the rainfall intensity increases, the number of points received by the LiDAR decreases, which affects the tracking and recognition of objects. This process can be summarised by the LiDAR power model in Equation (5):

$$P_r(r) = E_p\, \frac{c\, \eta\, A}{2 r^2}\, \beta\, T(r) \tag{5}$$

This equation describes the power of a received laser return at a distance $r$, where $E_p$ is the total energy of a transmitted laser pulse, $c$ is the speed of light, $A$ represents the receiver’s optical aperture area, $\eta$ is the overall system efficiency, and $\beta$ denotes the reflectivity of the target’s surface, which is determined by the surface properties and the incident angle. The last term, $T(r)$, can be regarded as the transmission loss through the transmission medium, which is given by Equation (6):

$$T(r) = \exp\left(-2 \int_0^r \alpha(x)\, dx\right) \tag{6}$$

where $\alpha$ is the extinction coefficient of the transmission medium. Extinction arises because particles within the transmission medium scatter and absorb laser light.
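The LiDAR power model can be sketched numerically as below. The sketch assumes the form $P_r(r) = E_p\,\frac{c\,\eta\,A}{2r^2}\,\beta\,T(r)$ with a spatially constant extinction coefficient, so that $T(r) = \exp(-2\alpha r)$; the parameter values are illustrative assumptions and do not describe the tested 16-beam LiDAR.

```python
import math

C_LIGHT = 299_792_458.0  # speed of light in m/s

def transmission_loss(alpha, r):
    """T(r) = exp(-2 * alpha * r) for a constant extinction coefficient alpha (1/m)."""
    return math.exp(-2.0 * alpha * r)

def lidar_received_power(e_p, area, eta, beta, r, alpha=0.0):
    """Received power of a laser return from range r (metres).

    Assumed form: E_p * c * eta * A / (2 r^2) * beta * T(r).
    """
    return e_p * C_LIGHT * eta * area / (2.0 * r**2) * beta * transmission_loss(alpha, r)

# Hypothetical sensor and target parameters.
params = dict(e_p=1e-6, area=1e-3, eta=0.8, beta=0.3)

clear = lidar_received_power(r=30.0, **params)
rain = lidar_received_power(r=30.0, alpha=0.005, **params)  # hypothetical alpha
print(f"remaining power fraction at 30 m: {rain / clear:.3f}")
```

With a constant extinction coefficient, the fraction of power lost to the medium depends only on the product of $\alpha$ and the range, which is why the same rain intensity affects distant targets more strongly than near ones.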

From this short review of Equations (5) and (6), we can infer that rainfall increases the transmission loss $T(r)$ and hence decreases the received laser power $P_r$, which can cause the subsequent signal processing steps to fail. In fact, the performance of the LiDAR is degraded due to changes in the extinction coefficient $\alpha$ and the target reflectivity $\beta$. Most previous studies focused on the statistics of point cloud intensities, which decrease with rain intensity and distance. However, object recognition based on deep learning is robust and can largely resist the impact of environmental noise on the accuracy of the final result. This can be observed in our statistics shown in Figure A5 and Figure A6. Although the object list output by the LiDAR is less influenced by the environment, there are still performance differences. Table 6 shows that dry road conditions are indeed the most suitable for LiDAR detection and that the difference in IQR between daytime and nighttime is insignificant. Since the tests under the rain simulator are all close-range detections, the results on wet road surfaces are not much different from those on dry surfaces. However, once the rain test started, the difference was noticeable. Raindrops can scatter the laser beams, causing false or distorted readings. This reduces visibility and makes it more difficult for the system to detect objects and obstacles on the road. Therefore, as the amount of rain increases, LiDAR detection becomes more difficult, especially in heavy rain, where the IQR increased by 0.156 m compared to dry conditions. The number of outliers also increases significantly. Furthermore, Figure A5c,d also demonstrate this effect, with a larger range of outliers under rainy conditions. Meanwhile, the range of outliers covers the entire observed range of the boxplot in Figure A6b. That is, the influence of rain on LiDAR performance is evident.

According to Figure 7c, the detection range of the LiDAR can reach 100 m. However, the effective range of the 16-beam LiDAR for stable target tracking is about 30 m. Beyond this range, tracking becomes unstable and targets are intermittently lost. This is because the algorithms may use a threshold for the minimum signal strength or confidence level required to recognise an object, which limits the maximum range of the object recognition output. In addition, for the sake of robustness and accuracy, the algorithm may filter out points at long range because of the limited resolution and other sources of error, thereby reducing the computational requirements and potential errors associated with processing data at more distant ranges. In this way, a 16-beam LiDAR can provide higher resolution and accuracy over a shorter range, which is suitable for many applications, such as automated driving vehicles and robotics. However, the error is larger at close range, which is caused by the mounting position of the LiDAR. Since our tested LiDAR is installed at the front end of the vehicle, it is difficult to cover the whole object when the car is close to the target, which makes recognition more difficult and less accurate. This problem gradually diminishes as the target vehicle moves farther away from the ego car.

4.4. Influence of Environmental Factors

Discuss different performance of sensors under adverse weather.

Deriving the influence of environmental factors on detection

Exploring the possibility of reassigning sensor fusion weights based on experimental results

| Environmental condition | IQR [m] | Number of outliers |
|---|---|---|
| Day-Dry | 0.222 | 115 |
| Day-Wet Road | 0.189 | 114 |
| Day-Moderate Rain | 0.253 | 131 |
| Day-Heavy Rain | 0.378 | 288 |
| Night-Dry Road | 0.172 | 127 |
| Night-Moderate Rain | 0.248 | 227 |

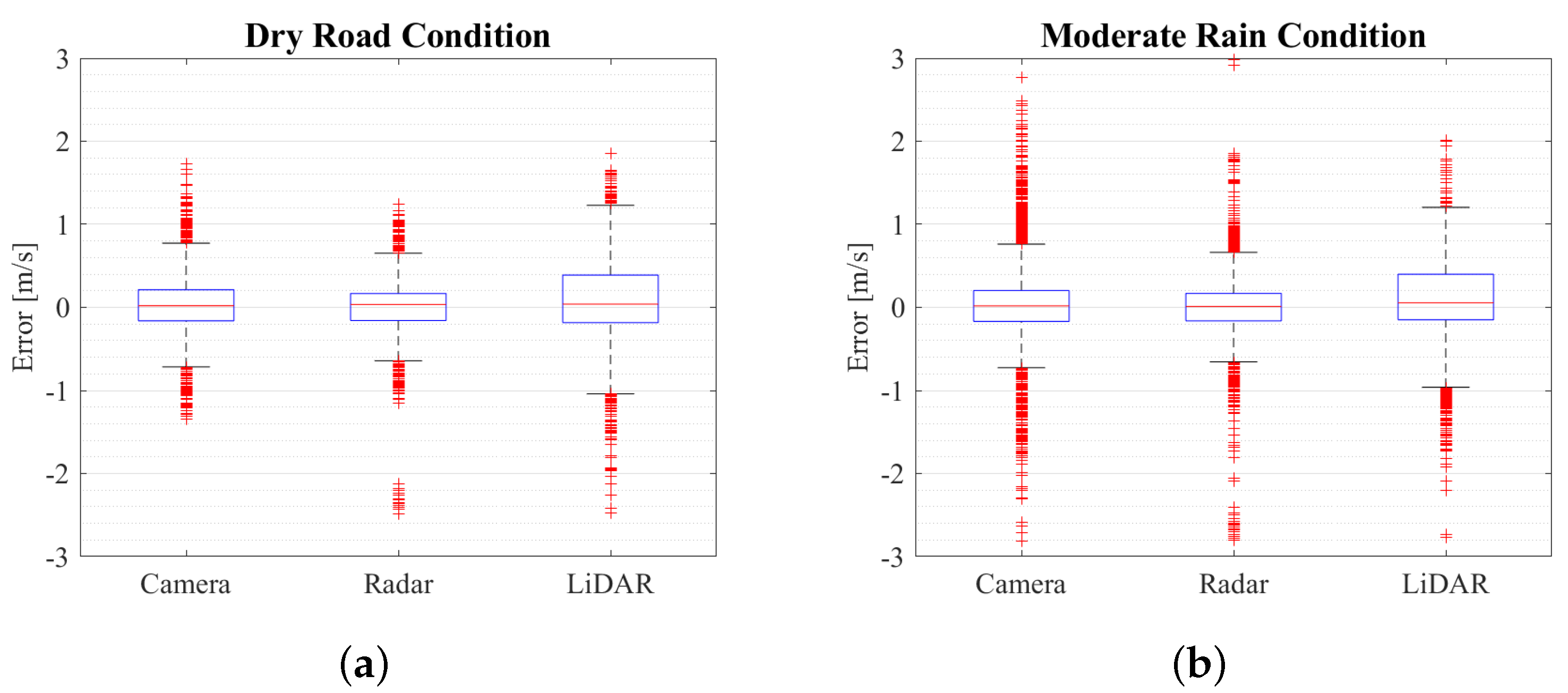

Figure 5.

Effect of environmental condition on sensors at daytime in rain simulator (near range) with artificial lighting (a) Lateral distance detection error under dry road condition (b) Lateral distance detection error under wet road condition (c) Lateral distance detection error under moderate intensity rain conditions (d) Lateral distance detection error under heavy intensity rain conditions.

Figure 6.

Effect of environmental condition on sensors at nighttime in rain simulator (near range) (a) Lateral distance detection error under dry road condition (b) Lateral distance detection error under moderate intensity rain conditions.

Figure 7.

Camera, Radar and LiDAR detection performance over the distance (a) Camera detection performance (b) Radar detection performance (c) LiDAR detection performance.

5. Discussion

In this section, we discuss the observations and limitations of ADAS sensors during the measurements under the rain simulator. In Table 7, we report the average detection error of the sensors for the different environmental conditions. The comparison of lateral distance errors reveals no significant difference between the Camera and LiDAR sensors, whose average detection errors are only 0.054 m and 0.042 m, respectively. The conclusion drawn in [40] is consistent with our findings: changes in the propagation medium of the laser due to rain and fog adversely affect detection. Radar lateral detection, however, is not as reliable as its longitudinal detection, as indicated by an average error of 0.479 m. This is due to the small amount of point cloud data from the radar, which makes it challenging to discern lateral deviations after clustering, as discussed in [10,20]. Furthermore, the error results from the different environments indicate that radar is the least affected by environmental factors. Although cameras are also less impacted by rainfall, it should be noted that the tests were performed with the wipers on. Additionally, our night tests demonstrated that detection performance is enhanced by the contrast improvement at night with sufficient artificial light. Finally, while LiDAR has the highest detection accuracy, it is sensitive to the amount of rain: its error differs by a factor of more than 4 between dry and heavy-rain conditions.
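The per-sensor averages quoted above can be reproduced from the values in Table 7. The following sketch assumes an unweighted mean over the six environmental conditions; the dictionary layout and the helper name `average_error` are illustrative.

```python
from statistics import mean

# Average lateral detection error in metres, copied from Table 7.
TABLE_7 = {
    "Day-Dry": {"camera": 0.019, "radar": 0.453, "lidar": 0.015},
    "Day-Wet Road": {"camera": 0.111, "radar": 0.439, "lidar": 0.021},
    "Day-Moderate Rain": {"camera": 0.010, "radar": 0.472, "lidar": 0.046},
    "Day-Heavy Rain": {"camera": 0.030, "radar": 0.507, "lidar": 0.073},
    "Night-Dry Road": {"camera": 0.064, "radar": 0.458, "lidar": 0.035},
    "Night-Moderate Rain": {"camera": 0.088, "radar": 0.545, "lidar": 0.063},
}


def average_error(table, sensor):
    """Unweighted mean of one sensor's error over all conditions."""
    return mean(row[sensor] for row in table.values())


averages = {s: average_error(TABLE_7, s) for s in ("camera", "radar", "lidar")}
for sensor, value in averages.items():
    print(f"{sensor}: {value:.3f} m")
```

Rounded to three decimals, the means agree with the 0.054 m (Camera), 0.479 m (Radar) and 0.042 m (LiDAR) stated in the text.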

To investigate the impact of distance on detection accuracy, we statistically aggregated all test cases in Figure 7. LiDAR showed extremely high accuracy: the mean error is merely 0.041 m, and the standard deviation is also small. However, the effective detection range of LiDAR is only about 30 m; beyond this range, target tracking is occasionally lost. Compared with the Radar's effective detection range of up to 200 m, this clearly limits its usage scenarios. On the other hand, the detection error of both Radar and Camera grows as the distance increases. The Camera's average lateral error is 0.617 m, whereas the Radar exhibits a surprisingly high lateral error of 1.456 m, indicating a potential deviation of one lane as distance increases. This presents a significant risk to the accuracy of estimated target vehicle trajectories. Finally, using uniform sampling, we calculated the detection error of each sensor under all conditions, as summarized in Table 8.

Table 7.

Average lateral detection accuracy comparison of sensors in the rain simulator (near range); unit: [m].

| Environmental condition | Camera | Radar | LiDAR |
|---|---|---|---|
| Day-Dry | 0.019 | 0.453 | 0.015 |
| Day-Wet Road | 0.111 | 0.439 | 0.021 |
| Day-Moderate Rain | 0.010 | 0.472 | 0.046 |
| Day-Heavy Rain | 0.030 | 0.507 | 0.073 |
| Night-Dry Road | 0.064 | 0.458 | 0.035 |
| Night-Moderate Rain | 0.088 | 0.545 | 0.063 |

Table 8.

Average lateral detection accuracy comparison of sensors over the full range.

| Parameters | Camera | Radar | LiDAR |
|---|---|---|---|
| Mean [m] | 0.617 | 1.456 | 0.041 |
| Standard Deviation [m] | 0.571 | 0.826 | 0.118 |

6. Conclusions

Through a series of experiments, we have shown the impact of unfavourable weather conditions on the detection performance of automotive sensors. Our analysis focused on lateral distance detection, and we quantitatively evaluated the experimental results. Our studies demonstrated that rainfall can significantly reduce the performance of automotive sensors, especially LiDAR and Camera. Based on the results presented in Table 7, it can be inferred that the LiDAR's detection accuracy diminishes by a factor of 4.8 as the rainfall intensity increases, yet it still exhibits relatively high precision. In contrast, the Camera's performance varies less in rainy weather, with a maximum reduction of 1.57 times. However, as the Camera is significantly affected by lighting conditions, its detection accuracy declines by 4.6 times in rainy nighttime conditions compared with clear weather conditions. Additionally, the detection error of the Radar fluctuated only slightly, but the Radar lacked lateral estimation accuracy. Under the same weather conditions, the Radar's detection accuracy is on average 16.5 and 14 times less precise than that of the Camera and LiDAR, respectively.
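The degradation factors for LiDAR and Camera quoted above appear to correspond to ratios of the average lateral errors in Table 7, taken against the Day-Dry baseline. That reading is our assumption, since the text does not spell out the calculation. A minimal sketch of the arithmetic, with the helper name `degradation` chosen for illustration:

```python
# Average lateral errors in metres, copied from Table 7.
camera = {"Day-Dry": 0.019, "Day-Heavy Rain": 0.030, "Night-Moderate Rain": 0.088}
lidar = {"Day-Dry": 0.015, "Day-Heavy Rain": 0.073}


def degradation(errors, condition, baseline="Day-Dry"):
    """Ratio of the error under `condition` to the error under `baseline`."""
    return errors[condition] / errors[baseline]


lidar_rain = degradation(lidar, "Day-Heavy Rain")
camera_rain = degradation(camera, "Day-Heavy Rain")
camera_night_rain = degradation(camera, "Night-Moderate Rain")

print(f"LiDAR, heavy rain vs dry:           {lidar_rain:.2f}x")
print(f"Camera, heavy rain vs dry:          {camera_rain:.2f}x")
print(f"Camera, night moderate rain vs dry: {camera_night_rain:.2f}x")
```

Under this assumption the three ratios come out close to the reported factors of 4.8, 1.57 and 4.6.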

Furthermore, we conducted a series of nighttime tests that illustrated the positive effect of high artificial illumination on camera detection. These experimental findings provide essential insights for automotive manufacturers to design and test their sensors under various weather and lighting conditions to ensure accurate and reliable detection. Additionally, drivers should be aware of the limitations of their vehicle's sensors and adjust their driving behaviour accordingly during adverse weather conditions. Overall, the detection performance of different automotive sensors under environmental conditions provides valuable data to support sensor fusion. For instance, while LiDAR has a maximum detection range of around 100 m, tracking loss occurs beyond 30 m. Thus, to address the limitations of individual sensors, multi-sensor fusion is a promising approach.
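To illustrate how such measurements could inform fusion, the sketch below derives lateral-position weights from the full-range statistics in Table 8. It is a common heuristic (inverse mean squared error weighting) and not the fusion scheme of this work. The names `fusion_weights` and `LIDAR_TRACKING_RANGE_M`, the approximation of the mean squared error as mean² + std², and the hard cut-off of LiDAR beyond 30 m are all assumptions made for illustration.

```python
# Full-range lateral error statistics in metres, copied from Table 8.
TABLE_8 = {
    "camera": {"mean": 0.617, "std": 0.571},
    "radar": {"mean": 1.456, "std": 0.826},
    "lidar": {"mean": 0.041, "std": 0.118},
}
LIDAR_TRACKING_RANGE_M = 30.0  # effective tracking range reported in the text


def fusion_weights(distance_m, stats=TABLE_8):
    """Normalized lateral-position weights from inverse mean squared error.

    Assumptions: mean squared error is approximated as mean**2 + std**2,
    and LiDAR is dropped beyond its effective tracking range.
    """
    inverse_mse = {}
    for sensor, s in stats.items():
        if sensor == "lidar" and distance_m > LIDAR_TRACKING_RANGE_M:
            inverse_mse[sensor] = 0.0
        else:
            inverse_mse[sensor] = 1.0 / (s["mean"] ** 2 + s["std"] ** 2)
    total = sum(inverse_mse.values())
    return {sensor: value / total for sensor, value in inverse_mse.items()}


print(fusion_weights(20.0))  # target within LiDAR tracking range
print(fusion_weights(80.0))  # target beyond LiDAR tracking range
```

Because the Table 8 statistics aggregate all distances, applying them at a single range is crude. Weights derived per distance bin or per weather condition (for example from Table 7) would be a natural refinement.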

As part of our future work, we aim to conduct a more in-depth analysis of the raw data obtained from automotive sensors and to introduce more rain and illumination conditions, for example, additional tests with varying rain and artificial light intensity. Raw data is a critical input to the perception algorithm, and it often has a significant impact on the final detection output. We particularly want to investigate the effects of rainfall on LiDAR point cloud data, as it can significantly impact detection accuracy. Additionally, we plan to explore the development of a sensor fusion algorithm based on the experimental results. By combining data from multiple sensors, sensor fusion can compensate for the limitations of individual sensors, providing a more comprehensive perception of the environment and enabling safer and more effective decision-making for autonomous driving systems. Therefore, our future work will focus on improving the accuracy and reliability of sensor data to enable more robust sensor fusion algorithms.

Author Contributions

Conceptualization, H.L., T.M., Z.M., N.B., O.F., Y.Z. and A.E.; methodology, H.L., Z.M., N.B. and A.E.; software, H.L., Z.M. and N.B.; validation, H.L., T.M., Z.M., N.B., O.F. and A.E.; formal analysis, H.L. and N.B.; investigation, H.L., T.M., Z.M., N.B., O.F. and A.E.; resources, H.L., T.M., Z.M., N.B., O.F. and A.E.; data curation, H.L., Z.M., N.B., O.F., Y.Z. and A.E.; writing—original draft preparation, H.L., T.M., Z.M., N.B., O.F., Y.Z., C.F. and A.E.; writing—review and editing, H.L., T.M., Z.M., N.B., O.F., Y.Z. and A.E.; visualization, H.L., T.M., Z.M., N.B., O.F. and A.E.; supervision, A.E.; project administration, A.E. All authors have read and agreed to the published version of the manuscript.

Funding

Open Access Funding by the Graz University of Technology. This activity is part of the research project InVADE (FFG nr. 889349) and has received funding from the program Mobility of the Future, operated by the Austrian research funding agency FFG. Mobility of the Future is a mission-oriented research and development program to help Austria create a transport system designed to meet future mobility and social challenges.

Appendix A

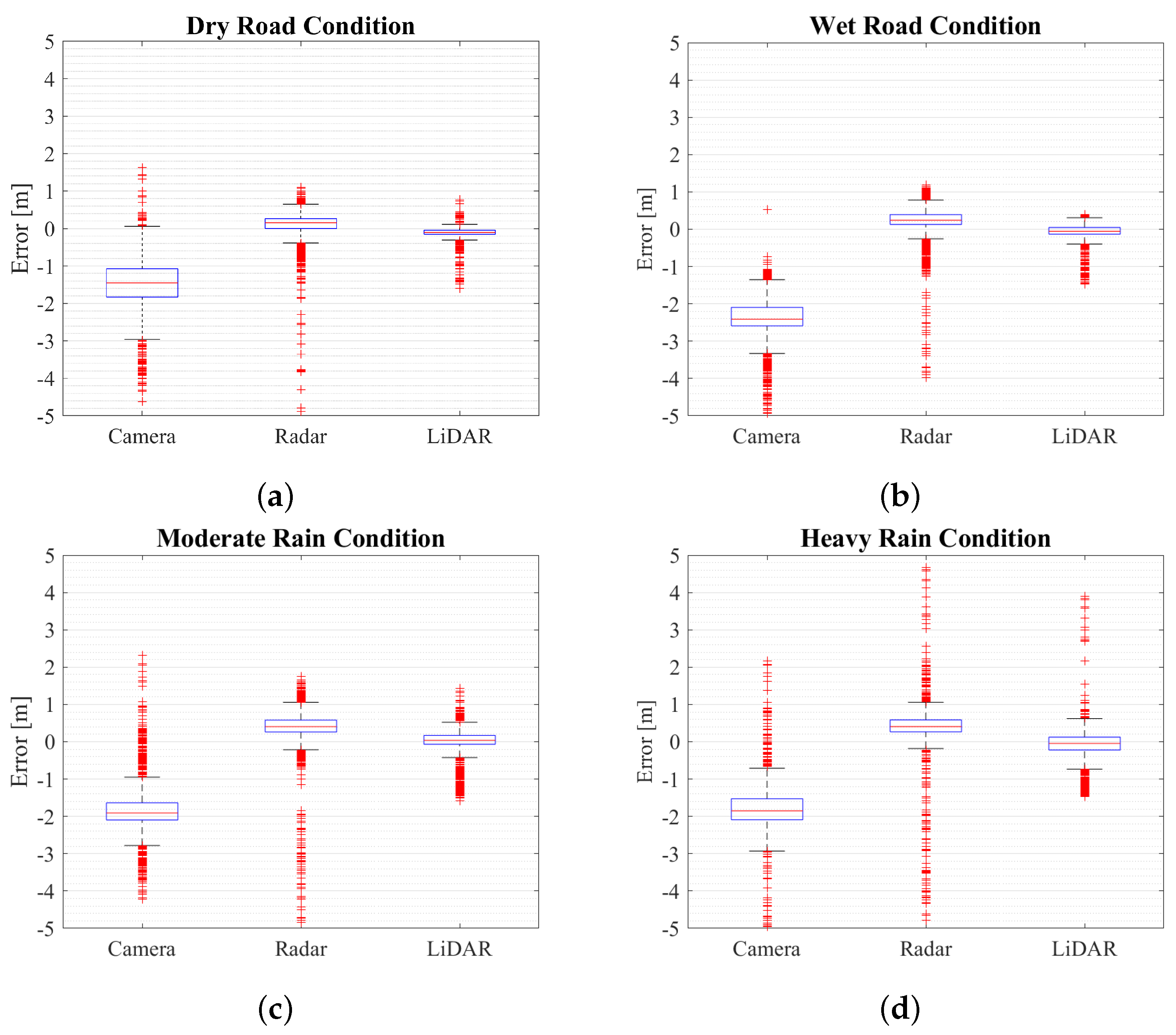

Figure A1.

Effect of environmental condition on sensors at daytime in rain simulator (near range) with artificial lighting (a) Longitudinal distance detection error under dry road condition (b) Longitudinal distance detection error under wet road condition (c) Longitudinal distance detection error under moderate intensity rain conditions (d) Longitudinal distance detection error under heavy intensity rain conditions.

Figure A2.

Effect of environmental condition on sensors at nighttime in rain simulator (near range) (a) Longitudinal distance detection error under dry road condition (b) Longitudinal distance detection error under moderate intensity rain conditions.

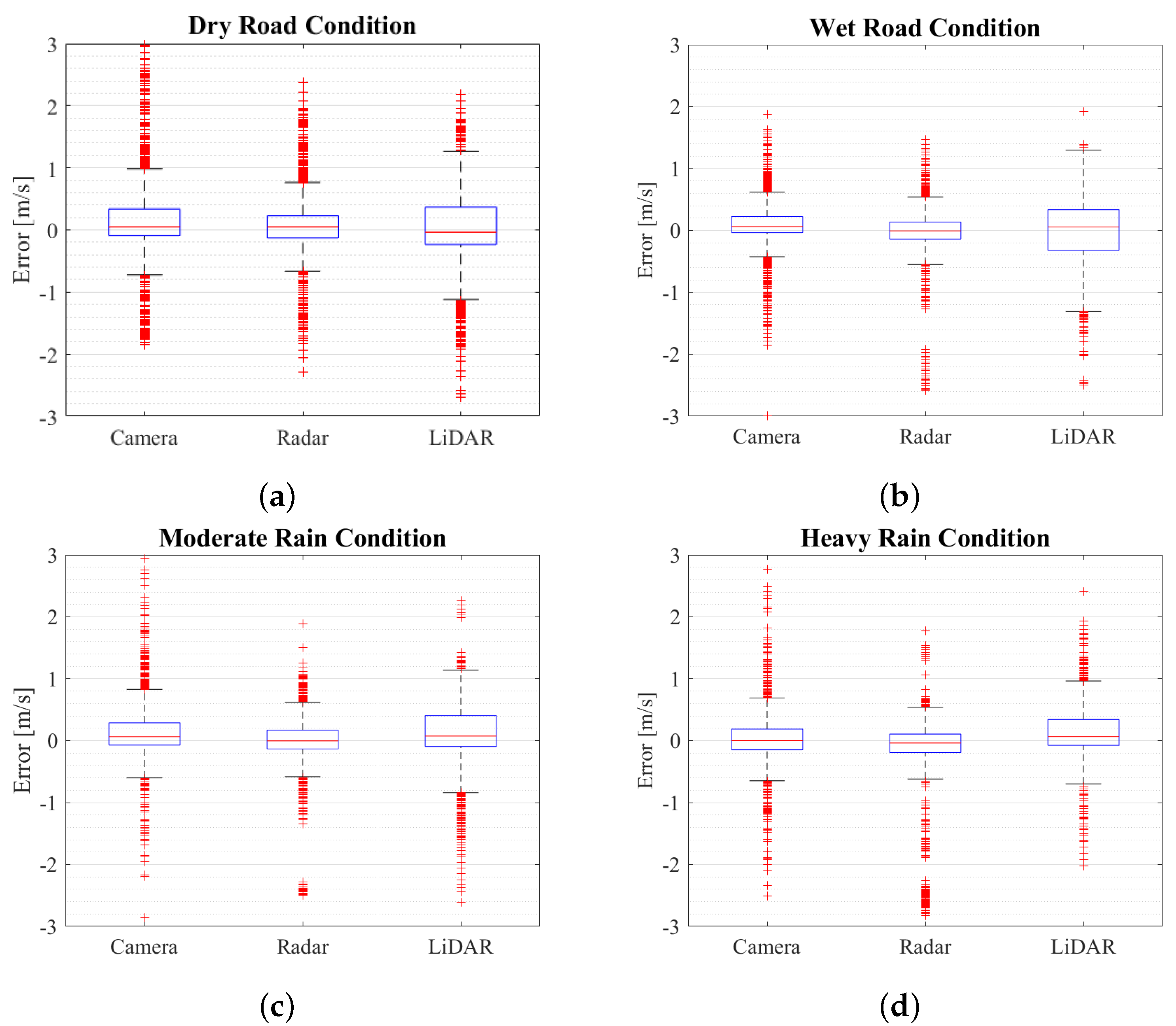

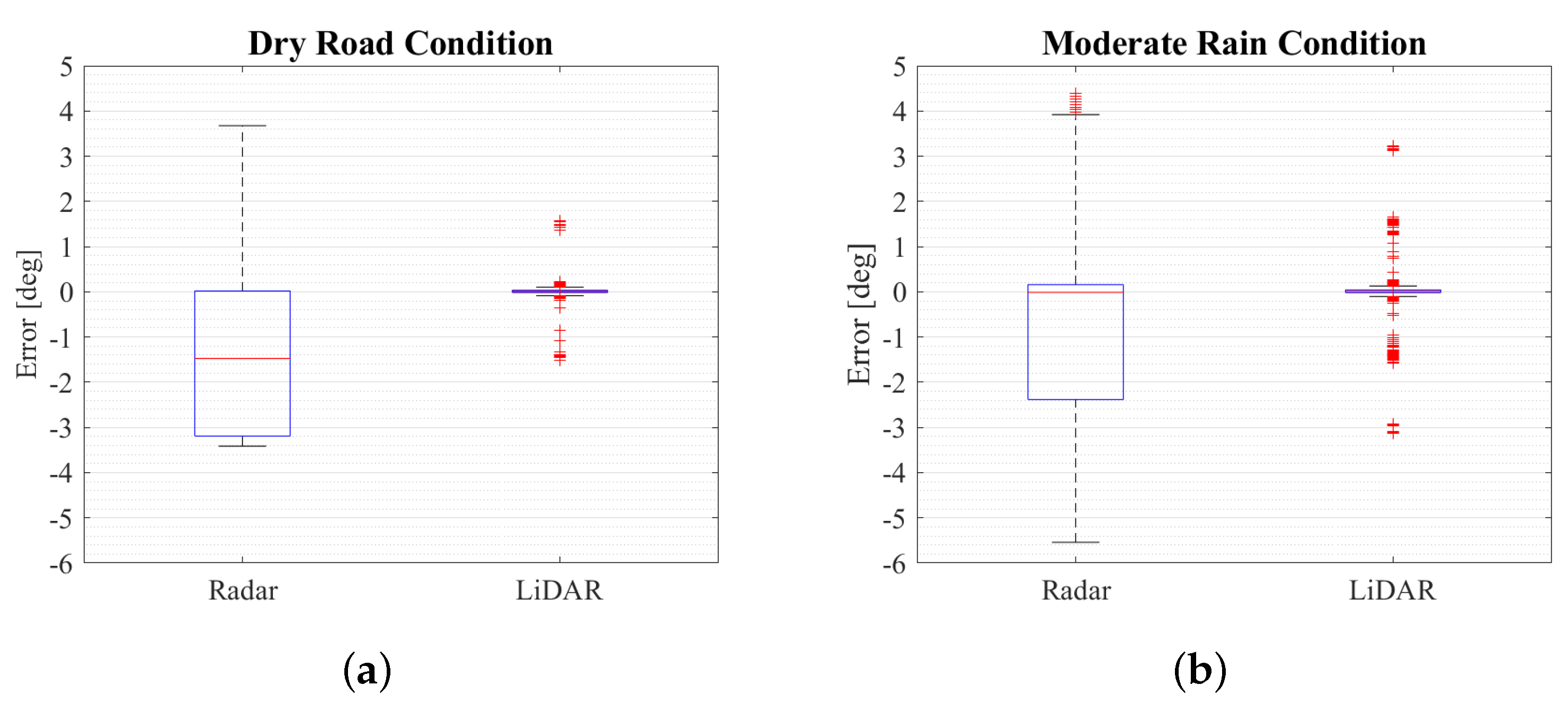

Figure A3.

Effect of environmental condition on sensors at daytime in rain simulator (near range) with artificial lighting (a) Velocity detection error under dry road condition (b) Velocity detection error under wet road condition (c) Velocity detection error under moderate intensity rain conditions (d) Velocity detection error under heavy intensity rain conditions.

Figure A4.

Effect of environmental condition on sensors at nighttime in rain simulator (near range) (a) Velocity detection error under dry road condition (b) Velocity detection error under moderate intensity rain conditions.

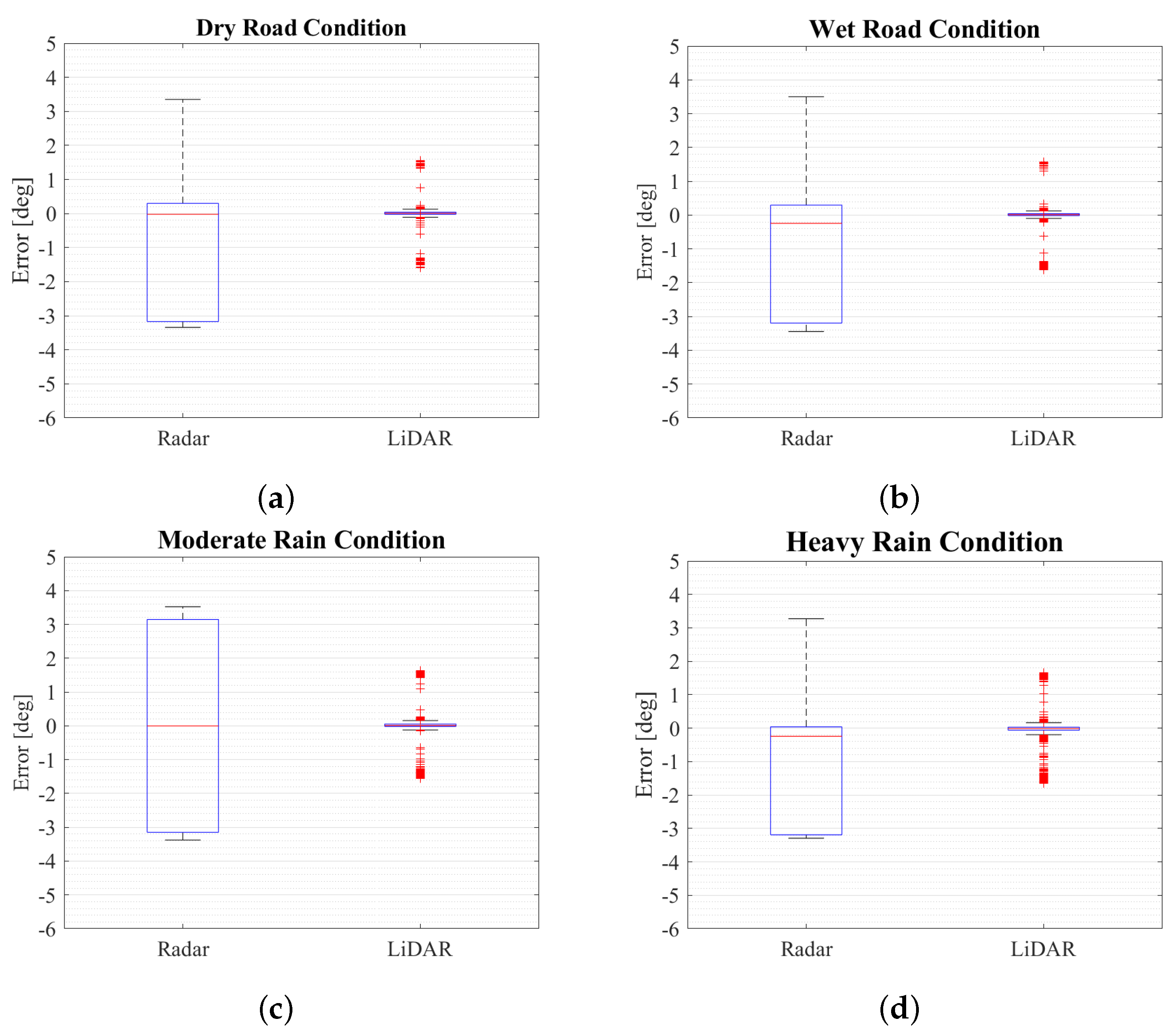

Figure A5.

Effect of environmental condition on sensors at daytime in rain simulator (near range) with artificial lighting (a) Heading angle detection error under dry road condition (b) Heading angle detection error under wet road condition (c) Heading angle detection error under moderate intensity rain conditions (d) Heading angle detection error under heavy intensity rain conditions.

Figure A6.

Effect of environmental condition on sensors at nighttime in rain simulator (near range) (a) Heading angle detection error under dry road condition (b) Heading angle detection error under moderate intensity rain conditions.

References

- Singh, S. Critical reasons for crashes investigated in the national motor vehicle crash causation survey. Technical report, 2015.

- Smith, B.W. Human error as a cause of vehicle crashes. http://cyberlaw.stanford.edu/blog/2013/12/human-error-cause-vehicle-crashes, 2013.

- Liu, J.; Li, J.; Wang, K.; Zhao, J.; Cong, H.; He, P. Exploring factors affecting the severity of night-time vehicle accidents under low illumination conditions. Advances in Mechanical Engineering 2019, 11, 1687814019840940. [CrossRef]

- Kawasaki, T.; Caveney, D.; Katoh, M.; Akaho, D.; Takashiro, Y.; Tomiita, K. Teammate Advanced Drive System Using Automated Driving Technology. SAE International Journal of Advances and Current Practices in Mobility 2021, 3, 2985–3000.

- Schrepfer, J.; Picron, V.; Mathes, J.; Barth, H. Automated driving and its sensors under test. ATZ worldwide 2018, 120, 28–35. [CrossRef]

- Marti, E.; De Miguel, M.A.; Garcia, F.; Perez, J. A review of sensor technologies for perception in automated driving. IEEE Intelligent Transportation Systems Magazine 2019, 11, 94–108. [CrossRef]

- Wang, D.; Liu, Q.; Ma, L.; Zhang, Y.; Cong, H. Road traffic accident severity analysis: A census-based study in China. Journal of safety research 2019, 70, 135–147. [CrossRef]

- Brázdil, R.; Chromá, K.; Zahradníček, P.; Dobrovolnỳ, P.; Dolák, L. Weather and traffic accidents in the Czech Republic, 1979–2020. Theoretical and Applied Climatology 2022, pp. 1–15.

- Yoneda, K.; Suganuma, N.; Yanase, R.; Aldibaja, M. Automated driving recognition technologies for adverse weather conditions. IATSS research 2019, 43, 253–262. [CrossRef]

- Hasirlioglu, S.; Kamann, A.; Doric, I.; Brandmeier, T. Test methodology for rain influence on automotive surround sensors. 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2016, pp. 2242–2247.

- Hasirlioglu, S.; Doric, I.; Kamann, A.; Riener, A. Reproducible fog simulation for testing automotive surround sensors. 2017 IEEE 85th Vehicular Technology Conference (VTC Spring). IEEE, 2017, pp. 1–7.

- Li, C.; Jia, Z.; Li, P.; Wen, H.; Lv, G.; Huang, X. Parallel detection of refractive index changes in a porous silicon microarray based on digital images. Sensors 2017, 17, 750. [CrossRef]

- Goldbeck, J.; Huertgen, B. Lane detection and tracking by video sensors. Proceedings 199 IEEE/IEEJ/JSAI International Conference on Intelligent Transportation Systems (Cat. No. 99TH8383). IEEE, 1999, pp. 74–79.

- Borkar, A.; Hayes, M.; Smith, M.T.; Pankanti, S. A layered approach to robust lane detection at night. 2009 IEEE Workshop on Computational Intelligence in Vehicles and Vehicular Systems. IEEE, 2009, pp. 51–57.

- Balisavira, V.; Pandey, V. Real-time object detection by road plane segmentation technique for ADAS. 2012 Eighth International Conference on Signal Image Technology and Internet Based Systems. IEEE, 2012, pp. 161–167.

- Roh, C.G.; Kim, J.; Im, I.J. Analysis of impact of rain conditions on ADAS. Sensors 2020, 20, 6720. [CrossRef]

- Hadi, M.; Sinha, P.; Easterling IV, J.R. Effect of environmental conditions on performance of image recognition-based lane departure warning system. Transportation research record 2007, 2000, 114–120.

- Bijelic, M.; Gruber, T.; Ritter, W. A benchmark for lidar sensors in fog: Is detection breaking down? 2018 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2018, pp. 760–767.

- Kutila, M.; Pyykönen, P.; Holzhüter, H.; Colomb, M.; Duthon, P. Automotive LiDAR performance verification in fog and rain. 2018 21st International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2018, pp. 1695–1701.

- Chaudhary, S.; Wuttisittikulkij, L.; Saadi, M.; Sharma, A.; Al Otaibi, S.; Nebhen, J.; Rodriguez, D.Z.; Kumar, S.; Sharma, V.; Phanomchoeng, G.; others. Coherent detection-based photonic radar for autonomous vehicles under diverse weather conditions. PLoS one 2021, 16, e0259438. [CrossRef]

- Zang, S.; Ding, M.; Smith, D.; Tyler, P.; Rakotoarivelo, T.; Kaafar, M.A. The impact of adverse weather conditions on autonomous vehicles: How rain, snow, fog, and hail affect the performance of a self-driving car. IEEE vehicular technology magazine 2019, 14, 103–111. [CrossRef]

- Arage Hassen, A. Indicators for the Signal Degradation and Optimization of Automotive Radar Sensors Under Adverse Weather Conditions. PhD thesis, Technische Universität, 2007.

- Blevis, B. Losses due to rain on radomes and antenna reflecting surfaces. Technical report, DEFENCE RESEARCH TELECOMMUNICATIONS ESTABLISHMENT OTTAWA (ONTARIO), 1964.

- Slavik, Z.; Mishra, K.V. Phenomenological modeling of millimeter-wave automotive radar. 2019 URSI Asia-Pacific Radio Science Conference (AP-RASC). IEEE, 2019, pp. 1–4.

- Gourova, R.; Krasnov, O.; Yarovoy, A. Analysis of rain clutter detections in commercial 77 GHz automotive radar. 2017 European Radar Conference (EURAD). IEEE, 2017, pp. 25–28.

- Bijelic, M.; Gruber, T.; Ritter, W. Benchmarking image sensors under adverse weather conditions for autonomous driving. 2018 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2018, pp. 1773–1779.

- Rosenberger, P.; Holder, M.; Huch, S.; Winner, H.; Fleck, T.; Zofka, M.R.; Zöllner, J.M.; D’hondt, T.; Wassermann, B. Benchmarking and functional decomposition of automotive lidar sensor models. 2019 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2019, pp. 632–639.

- Digitrans. Test track for autonomous driving in St. Valentin. https://www.digitrans.expert/en/test-track/, 2023.

- Kathiravelu, G.; Lucke, T.; Nichols, P. Rain Drop Measurement Techniques: A Review. Water 2016, 8, 29.

- Pruppacher, H.; Pitter, R. A semi-empirical determination of the shape of cloud and Rain Drops. Journal of the Atmospheric Sciences 1971, 28, 86–94.

- Foote, G.; Toit, P.D. Terminal velocity of raindrops aloft. Journal of Applied Meteorology 1969, 8, 249–253.

- American Meteorological Society (AMS). AMS Glossary of Meteorology. https://glossary.ametsoc.org/wiki/Rain, 2023.

- Nassi, D.; Ben-Netanel, R.; Elovici, Y.; Nassi, B. MobilBye: attacking ADAS with camera spoofing. arXiv preprint arXiv:1906.09765 2019.

- Continental. ARS 408 Long Range Radar Sensor 77 GHz. https://conti-engineering.com/components/ars-408/, 2020.

- RoboSense. RS-LiDAR-16 Powerful 16 laser-beam LiDAR. https://www.robosense.ai/en/rslidar/RS-LiDAR-16, 2021.

- Guide to the Expression of Uncertainty in Measurement; 1995.

- Fan, H.; Zhu, F.; Liu, C.; Zhang, L.; Zhuang, L.; Li, D.; Zhu, W.; Hu, J.; Li, H.; Kong, Q. Baidu apollo em motion planner. arXiv preprint arXiv:1807.08048 2018.

- Lee, Z.; Shang, S. Visibility: How applicable is the century-old Koschmieder model? Journal of the Atmospheric Sciences 2016, 73, 4573–4581.

- Ngo, D.; Lee, S.; Lee, G.D.; Kang, B. Single-image visibility restoration: A machine learning approach and its 4K-capable hardware accelerator. Sensors 2020, 20, 5795.

- Li, Y.; Duthon, P.; Colomb, M.; Ibanez-Guzman, J. What happens for a ToF LiDAR in fog? IEEE Transactions on Intelligent Transportation Systems 2020, 22, 6670–6681.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).