2.1. SGD-Type Algorithms

How can we see from

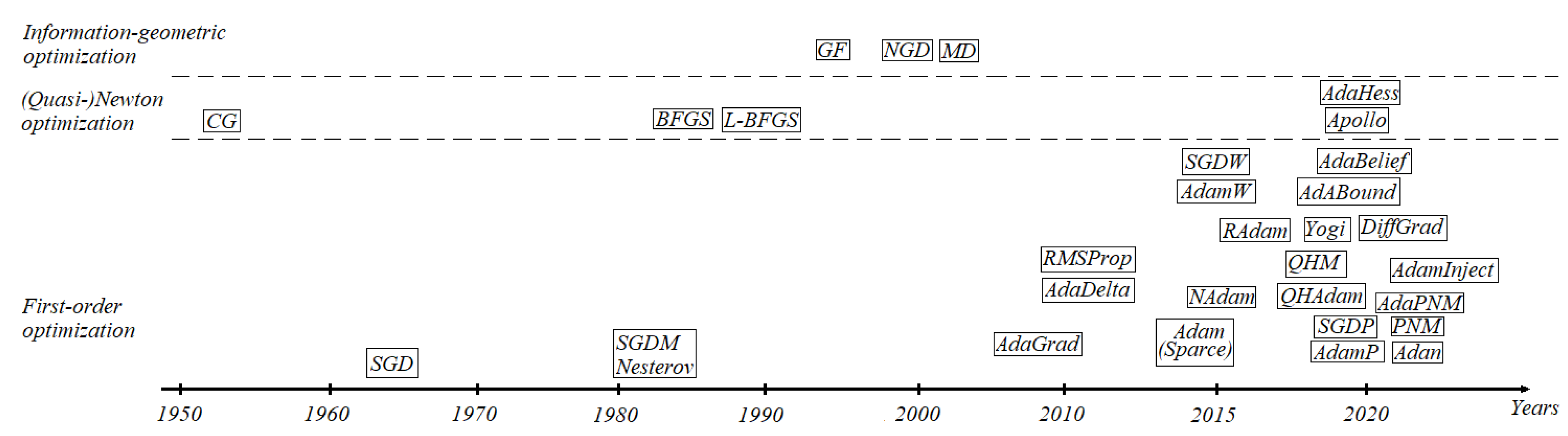

Figure 1, the earliest first order optimization algorithm is SGD [

4], which can be described by the iterative formula

where

denotes weight,

is a loss function with its gradient

and

is a learning rate. Later such approach was modified to SGDM with Nestreov condition [

5] presented by

where

is an initial point,

, where

is a damping parameter and

is a momentum. The algorithm of SGDM with Nesterov condition is constructed in [

22]. This optimization method is still used in approximation theory and machine learning. Various back propagation methods ([

23,

24,

25,

26]) are based on calculating of local partial derivatives, which rectify the value of weights of neural networks using (2). But such approach can be modified to others more effective versions, which converge to minimum faster. Therefore, because of relatively small rate of convergence of SGDM with Nesterov condition and its step-size updating, There was introduced Adaptive gradient method (AdaGrad) in [

6].
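SGD and SGDM can be illustrated with a minimal Python sketch for a single scalar weight. For a weight vector, the same rule is applied coordinate-wise. The function names, the toy loss $f(w) = (w-3)^2$, the zero initial buffer and the hyperparameter values are illustrative assumptions and are not taken from [4,5,22]. `sgdm_nesterov_step` uses the form $b_t = \mu b_{t-1} + (1-\tau) g_t$, $w_{t+1} = w_t - \alpha_t (g_t + \mu b_t)$.

```python
def sgd_step(w, g, lr):
    """Plain SGD: w_{t+1} = w_t - lr * g_t."""
    return w - lr * g


def sgdm_nesterov_step(w, b, g, lr, mu=0.9, tau=0.0):
    """SGDM with Nesterov condition.

    b: momentum buffer b_{t-1}; mu: momentum; tau: damping parameter.
    """
    b = mu * b + (1.0 - tau) * g      # b_t = mu * b_{t-1} + (1 - tau) * g_t
    w = w - lr * (g + mu * b)         # step along g_t + mu * b_t
    return w, b


def toy_grad(w):
    """Gradient of the hypothetical toy loss f(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)


def run_sgd(steps=200, lr=0.1, w=0.0):
    for _ in range(steps):
        w = sgd_step(w, toy_grad(w), lr)
    return w


def run_sgdm(steps=200, lr=0.05, w=0.0):
    b = 0.0                           # assumed initial buffer b_0 = 0
    for _ in range(steps):
        w, b = sgdm_nesterov_step(w, b, toy_grad(w), lr)
    return w
```

Both runs approach the minimizer $w^* = 3$. On this quadratic, SGD shrinks the error by a factor of $0.8$ per step. The momentum version shrinks it by about $0.9$ per step for the chosen `lr` and `mu`.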

AdaGrad differs from SGDM with Nesterov condition in its adaptive step-size. This allows the learning rate to be increased while time consumption is reduced. AdaGrad is described by the following formula

$$ w_{t+1} = w_t - \frac{\alpha}{\sqrt{G_t} + \epsilon}\, g_t, $$

where the sum of squared gradients is given by

$$ G_t = \sum_{i=1}^{t} g_i^2, $$

with squares, square roots and divisions taken coordinate-wise, and where $\epsilon > 0$ is a small constant that prevents division by zero. As noted above, AdaGrad updates the step-size $\alpha / (\sqrt{G_t} + \epsilon)$ using all previous gradients observed along the way. Its disadvantage, however, is the same as that of SGD: minimization relies on gradient directions and step-size regulation, which does not guarantee convergence to the neighborhood of the global minimum. Later, AdaGrad was equipped with gradient norm information, which improves the convergence rate. This modification is called AdaGrad-Norm [6] and is given as follows:

$$ b_t^2 = b_{t-1}^2 + \|g_t\|^2, \qquad w_{t+1} = w_t - \frac{\alpha}{b_t}\, g_t, \qquad b_0 > 0. $$
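The sketch below implements both variants on a hypothetical two-dimensional quadratic. AdaGrad keeps a per-coordinate accumulator $G_t$, while AdaGrad-Norm keeps a single scalar $b_t^2$ that accumulates $\|g_t\|^2$. The initial value $b_0^2 = 10^{-2}$, the learning rate and the loss are assumptions chosen for illustration.

```python
import math


def adagrad_step(w, G, g, lr=0.5, eps=1e-8):
    """AdaGrad: G_t = G_{t-1} + g_t^2 per coordinate, w -= lr * g / (sqrt(G) + eps)."""
    G = [Gi + gi * gi for Gi, gi in zip(G, g)]
    w = [wi - lr * gi / (math.sqrt(Gi) + eps) for wi, gi, Gi in zip(w, g, G)]
    return w, G


def adagrad_norm_step(w, b2, g, lr=0.5):
    """AdaGrad-Norm: one scalar b_t^2 = b_{t-1}^2 + ||g_t||^2 shared by all coordinates."""
    b2 = b2 + sum(gi * gi for gi in g)
    b = math.sqrt(b2)
    w = [wi - lr * gi / b for wi, gi in zip(w, g)]
    return w, b2


def toy_grad(w):
    """Gradient of the hypothetical loss f(w) = (w0 - 3)^2 + (w1 + 1)^2."""
    return [2.0 * (w[0] - 3.0), 2.0 * (w[1] + 1.0)]


def run(method, steps=500):
    w = [0.0, 0.0]
    if method == "adagrad":
        state = [0.0, 0.0]                 # G_0 = 0
        for _ in range(steps):
            w, state = adagrad_step(w, state, toy_grad(w))
    else:
        state = 1e-2                       # b_0^2 > 0 (assumed value)
        for _ in range(steps):
            w, state = adagrad_norm_step(w, state, toy_grad(w))
    return w
```

The only difference between the two is the shape of the accumulator. AdaGrad keeps one sum per coordinate. AdaGrad-Norm keeps a single scalar, so all coordinates share the same step-size.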

AdaGrad and AdaGrad-Norm adapt the step-size more accurately than SGD and SGDM with Nesterov condition. This allows the learning rate to be increased, which raises the probability of reaching the global minimum. However, accumulating previous gradients does not resolve the problem of convergence to the neighborhood of the global minimum. Like SGD, this approach descends along the negative directions of the gradients. This disadvantage led researchers to the idea of adapting the step-size using mean moments, which resulted in the root mean square propagation (RMSProp) optimization algorithm.

The RMSProp optimizer [7] partially uses the same technique as SGDM and limits the oscillations in the vertical direction, which allows the learning rate to be increased. This makes it possible to take fast, substantial steps in the horizontal direction and converge faster. The approach is described as

$$ v_t = \beta v_{t-1} + (1-\beta) g_t^2, \qquad w_{t+1} = w_t - \frac{\alpha}{\sqrt{v_t} + \epsilon}\, g_t, $$

where $v_t$ is a moment (the exponential moving average of squared gradients) and $\beta \in [0,1)$ is its decay rate. Like SGDM with Nesterov condition, RMSProp is still used in many neural networks. It introduced exponential moving averages of squared gradients, which were later modified in many ways that help avoid some local minima. Building on the techniques of AdaGrad and RMSProp, AdaDelta [8] was then introduced.
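A minimal scalar sketch of RMSProp follows. It uses $v_t = \beta v_{t-1} + (1-\beta) g_t^2$ and $w_{t+1} = w_t - \alpha g_t/(\sqrt{v_t}+\epsilon)$. The toy loss and the values of `lr`, `beta` and `eps` are assumptions.

```python
import math


def rmsprop_step(w, v, g, lr=0.01, beta=0.9, eps=1e-8):
    """RMSProp: v_t = beta*v_{t-1} + (1-beta)*g_t^2, then w -= lr*g_t/(sqrt(v_t)+eps)."""
    v = beta * v + (1.0 - beta) * g * g
    w = w - lr * g / (math.sqrt(v) + eps)
    return w, v


def run_rmsprop(steps=2000, w=0.0):
    v = 0.0
    for _ in range(steps):
        g = 2.0 * (w - 3.0)           # gradient of the toy loss f(w) = (w - 3)^2
        w, v = rmsprop_step(w, v, g)
    return w
```

With a constant learning rate, the iterate does not settle exactly at $w^* = 3$ but in a narrow band around it. Since $v_t \ge (1-\beta) g_t^2$, every step is at most $\alpha/\sqrt{1-\beta} \approx 0.03$ here.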

AdaDelta is a modification based on techniques from SGD, RMSProp and AdaGrad. It adapts the learning rate dynamically per dimension, requires no manual setting of a learning rate and adds minimal computation over gradient descent. It is also insensitive to hyperparameters and robust to exploding gradients, noise and the choice of architecture. Unlike AdaGrad, this method avoids an aggressive, monotonically decreasing learning rate.

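AdaDelta restricts the window of accumulated past gradients to a fixed size instead of accumulating all past squared gradients. The running average $E[g^2]_t$ depends only on the previous average and the current gradient. The initial accumulation variables $E[g^2]_0$ and $E[\Delta w^2]_0$ are equal to 0. The AdaDelta algorithm is described as follows. First, accumulate the gradient:

$$ E[g^2]_t = \rho\, E[g^2]_{t-1} + (1-\rho)\, g_t^2, $$

where $\rho$ is a decay rate parameter. Then compute the update, accumulate it and apply it:

$$ \Delta w_t = -\frac{\mathrm{RMS}[\Delta w]_{t-1}}{\mathrm{RMS}[g]_t}\, g_t, \qquad E[\Delta w^2]_t = \rho\, E[\Delta w^2]_{t-1} + (1-\rho)\, \Delta w_t^2, \qquad w_{t+1} = w_t + \Delta w_t, \tag{5} $$

where

$$ \mathrm{RMS}[y]_t = \sqrt{E[y^2]_t + \epsilon}. $$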
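The following scalar sketch performs one AdaDelta iteration: accumulate $E[g^2]_t$, compute $\Delta w_t = -(\mathrm{RMS}[\Delta w]_{t-1}/\mathrm{RMS}[g]_t)\, g_t$, accumulate $E[\Delta w^2]_t$ and apply the update. The values $\rho = 0.95$ and $\epsilon = 10^{-6}$, the toy loss $(w-3)^2$ and the helper names are illustrative assumptions.

```python
import math


def adadelta_step(w, Eg2, Edx2, g, rho=0.95, eps=1e-6):
    """One AdaDelta step; note that there is no learning rate."""
    Eg2 = rho * Eg2 + (1.0 - rho) * g * g                    # accumulate gradient
    dx = -math.sqrt(Edx2 + eps) / math.sqrt(Eg2 + eps) * g   # RMS[dw]_{t-1} / RMS[g]_t
    Edx2 = rho * Edx2 + (1.0 - rho) * dx * dx                # accumulate update
    return w + dx, Eg2, Edx2                                 # apply update


def trajectory(steps=200, w=0.0):
    Eg2, Edx2 = 0.0, 0.0                                     # E[g^2]_0 = E[dw^2]_0 = 0
    ws = [w]
    for _ in range(steps):
        g = 2.0 * (w - 3.0)                                  # toy loss f(w) = (w - 3)^2
        w, Eg2, Edx2 = adadelta_step(w, Eg2, Edx2, g)
        ws.append(w)
    return ws
```

Because $\mathrm{RMS}[\Delta w]_0 = \sqrt{\epsilon}$, the first steps are only a few thousandths in size. They grow as $E[\Delta w^2]_t$ accumulates, which is the price of not having a hand-tuned learning rate.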

Stochastic gradient descent was modified in other ways as well. Like AdaGrad, AdaDelta and RMSProp, these variants increase the test accuracy and speed up the algorithm. Such modifications can improve recognition, prediction, generation and decision making, which advances the theory of neural networks. However, in deep convolutional neural networks the adaptive modifications of (2) described above do not achieve higher accuracy than SGDM with Nesterov condition itself, as can be seen in [27]. There, only some of the modified algorithms presented in the next subsection give a higher recognition test accuracy. Therefore, one needs algorithms that take into account both gradient directions and weight values. The first very important modification of SGDM is the Nesterov Accelerated Gradient (NAG), presented in [28].

Nesterov's accelerated gradient descent is widely used in practice for training deep networks and other supervised learning models, because it often provides significant improvements over SGDM with Nesterov condition. Strictly speaking, "fast gradient" methods have provable improvements over gradient descent only in the deterministic case, where the gradients are exact. In the stochastic case, "fast gradients" partially mimic their exact-gradient counterparts, which gives some practical gain. The approach is described as

$$ v_{t+1} = \mu v_t - \alpha_t \nabla f(w_t + \mu v_t), \qquad w_{t+1} = w_t + v_{t+1}, $$

where $v_t$ is the velocity and $\mu$ is the momentum. The gradient is evaluated at the look-ahead point $w_t + \mu v_t$.
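A scalar sketch of NAG in the velocity form $v_{t+1} = \mu v_t - \alpha \nabla f(w_t + \mu v_t)$, $w_{t+1} = w_t + v_{t+1}$ follows. The only change with respect to classical momentum is that the gradient is evaluated at the look-ahead point. The toy loss, the zero initial velocity and the hyperparameters are assumptions.

```python
def nag_step(w, v, grad, lr=0.05, mu=0.9):
    """Nesterov accelerated gradient (velocity form)."""
    v = mu * v - lr * grad(w + mu * v)    # gradient at the look-ahead point w + mu*v
    return w + v, v


def toy_grad(w):
    """Gradient of the hypothetical toy loss f(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)


def run_nag(steps=300, w=0.0):
    v = 0.0                               # assumed zero initial velocity
    for _ in range(steps):
        w, v = nag_step(w, v, toy_grad)
    return w
```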

As mentioned before, an efficient technique for improving the resulting test accuracy is to penalize the Lebesgue ($L_2$) norm of the neuron weights. This approach is called $L_2$ regularization. It is well described and tested on SGDM with Nesterov condition in [29].

The $L_2$ regularization proposed in [30] acts similarly to the usual weight decay used in SGD. Indeed, both approaches drive the weights towards zero at the same rate. SGD with decoupled weight decay (SGDW) modifies the usual $L_2$-regularized SGD: it recovers the original weight decay by decoupling it from the optimization steps taken with respect to the loss function. For adaptive gradient algorithms the two approaches differ. With $L_2$ regularization, the gradients of both the loss function and the regularizer are adapted, whereas with decoupled weight decay only the gradients of the loss function are adapted. Under $L_2$ regularization, both types of gradients are normalized by their typical summed magnitudes. Therefore, weights with a large typical gradient magnitude are regularized by a smaller relative amount than other weights. The SGDW method is described by the following iterative formula

$$ m_t = \beta_1 m_{t-1} + \eta_t \alpha\, g_t, \qquad w_{t+1} = w_t - m_t - \eta_t \lambda\, w_t, $$

where $\lambda$ is a weight decay parameter ($L_2$ regularization factor), $\beta_1$ is the momentum, and $\eta_t = \mathrm{SetScheduleMultiplier}(t)$ is a schedule multiplier. Unfortunately, SGDW does not significantly improve on the usual SGD, especially in deep neural networks, autoencoders and graph neural networks. The architecture of these networks interferes with the $L_2$ regularization and makes minimization too difficult for SGDW. However, two techniques can improve the quality of minimization of the loss function: projection [31] and hyper-parameter methods [32].
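A scalar sketch of SGDW follows, using $m_t = \beta_1 m_{t-1} + \eta_t \alpha g_t$ and $w_{t+1} = w_t - m_t - \eta_t \lambda w_t$. The schedule multiplier is held constant at $\eta_t = 1$, which is an assumption. The helper names and default values are illustrative.

```python
def sgdw_step(w, m, g, lr=0.05, beta1=0.9, weight_decay=0.01, eta=1.0):
    """SGDW: momentum on the loss gradient only, weight decay applied separately.

    eta is the schedule multiplier eta_t, kept constant here for simplicity.
    """
    m = beta1 * m + eta * lr * g          # m_t = beta1*m_{t-1} + eta_t*alpha*g_t
    w = w - m - eta * weight_decay * w    # decoupled decay never enters m_t
    return w, m


def run_sgdw(steps, grad, w, **kwargs):
    m = 0.0
    for _ in range(steps):
        w, m = sgdw_step(w, m, grad(w), **kwargs)
    return w
```

With a zero loss gradient, the weight shrinks by exactly $(1-\eta_t\lambda)$ per step, independently of the momentum buffer. With the toy loss $(w-3)^2$ and the defaults above, the iterate settles at $3/1.01$ rather than $3$, because the decay term keeps pulling it towards zero.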

Before introducing the projection technique for SGD, it is necessary to recall batch normalization [33]. In practice, normalization techniques such as batch normalization play an important role in modern deep learning. They allow the weights to converge more rapidly with better generalization performance. The scale invariance of the weights induced by normalization gives SGD several advantages, such as automatic regularization of the effective step-size and a more stable training procedure. In practice, one can notice that including momentum in SGD-type optimizers considerably reduces the effective step-sizes for scale-invariant weights. This phenomenon has not yet been studied and causes unwanted side effects during minimization. This is a crucial issue. The vast majority of modern deep neural networks contain scale-invariant parameters and are trained with SGD- and Adam-type optimizers, which contain momentum. Therefore, SGD with projection (SGDP) was proposed, which removes the radial component, or the norm-increasing direction, at each optimizer step. Because of the scale invariance, this modification only alters the effective step-sizes without changing the effective update directions, thus preserving the original convergence properties of GD optimizers.

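SGDP can be presented by the following iterative formulas:

$$
\begin{aligned}
p_t &= \beta p_{t-1} + g_t, \\
q_t &= \begin{cases} \Pi_{w_t}(p_t), & \text{if } |\cos(w_t, g_t)| < \delta / \sqrt{\dim(w)}, \\ p_t, & \text{otherwise}, \end{cases} \\
w_{t+1} &= w_t - \alpha_t q_t,
\end{aligned}
\tag{8}
$$

where $\beta$ is the momentum and $\delta > 0$ is a threshold. The operator $\Pi_{w}(x) = x - (\hat w \cdot x)\,\hat w$, with $\hat w = w / \|w\|$, is the projection onto the tangent space of $w$ and removes the radial component of $x$.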
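The following minimal sketch performs one SGDP step on a weight vector using plain Python lists. It keeps only the core idea: a momentum buffer, a cosine test between $w$ and $g$, and removal of the radial component via $\Pi_w(x) = x - (\hat w\cdot x)\hat w$. The threshold $\delta = 0.1$ and the helper names are assumptions. Details of the original method, such as the Nesterov variant, channel-wise cosine tests and weight-decay handling, are omitted.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project_tangent(w, x):
    """Pi_w(x) = x - (w_hat . x) w_hat: remove the radial component of x."""
    norm_w = math.sqrt(_dot(w, w))
    w_hat = [wi / norm_w for wi in w]
    c = _dot(w_hat, x)
    return [xi - c * wh for xi, wh in zip(x, w_hat)]


def sgdp_step(w, p, g, lr=0.1, beta=0.9, delta=0.1, eps=1e-8):
    """One SGDP-style step; returns (new w, new buffer p_t, applied direction q_t)."""
    p = [beta * pi + gi for pi, gi in zip(p, g)]           # momentum buffer p_t
    cos = abs(_dot(w, g)) / (math.sqrt(_dot(w, w) * _dot(g, g)) + eps)
    if cos < delta / math.sqrt(len(w)):                     # w looks scale-invariant
        q = project_tangent(w, p)
    else:
        q = p
    w = [wi - lr * qi for wi, qi in zip(w, q)]
    return w, p, q
```

Consider `sgdp_step([1.0, 0.0], [0.5, 0.5], [0.0, 1.0])`. The gradient is orthogonal to $w$, as it is for a scale-invariant weight. The momentum $p = (0.45, 1.45)$ therefore loses its radial part, and the applied direction is $q = (0, 1.45)$.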

Compared with SGDW, this approach increases the rate of convergence. However, it is not enough to significantly improve the minimization of the loss function towards the global minimum, as the experiments on minimizing the Rastrigin function show (https://github.com/jettify/pytorch-optimizer). The problem of avoiding local minima was addressed by hyper-parameter methods, such as the quasi-hyperbolic momentum algorithm (QHM).

Momentum-based acceleration of SGD is widely used in deep learning. QHM, presented in [34], is an extremely simple alteration of momentum SGD that averages a plain SGD step with a momentum step. This approach introduces the immediate discount factor $\nu$, which encapsulates plain SGD ($\nu = 0$) and momentum ($\nu = 1$). QHM can be interpreted directly as a $\nu$-weighted average of the momentum update step and the plain SGD update step. Intuitively, the expressive power of QHM comes from decoupling the momentum buffer's discount factor ($\beta$) from the current gradient's contribution to the update rule ($1 - \nu\beta$). In contrast, momentum tightly couples the discount factor ($\beta$) and the current gradient's contribution ($1 - \beta$).

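Let us present QHM as the following iterative process:

$$ b_t = \beta b_{t-1} + (1-\beta) g_t, \qquad w_{t+1} = w_t - \alpha \left[ (1-\nu) g_t + \nu b_t \right], \tag{9} $$

where $b_t$ is the momentum buffer.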
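A scalar sketch of the QHM iteration follows, using $b_t = \beta b_{t-1} + (1-\beta) g_t$ and $w_{t+1} = w_t - \alpha[(1-\nu) g_t + \nu b_t]$. The toy loss, the helper names and the hyperparameters are assumptions.

```python
def qhm_step(w, b, g, lr=0.1, beta=0.9, nu=0.7):
    """QHM: a nu-weighted average of a plain SGD step and a momentum step."""
    b = beta * b + (1.0 - beta) * g                 # b_t = beta*b_{t-1} + (1-beta)*g_t
    w = w - lr * ((1.0 - nu) * g + nu * b)
    return w, b


def run_qhm(steps=500, w=0.0, **kwargs):
    b = 0.0
    for _ in range(steps):
        g = 2.0 * (w - 3.0)                         # toy loss f(w) = (w - 3)^2
        w, b = qhm_step(w, b, g, **kwargs)
    return w
```

Setting `nu=0.0` recovers plain SGD. Setting `nu=1.0` gives momentum SGD with an exponential-moving-average buffer.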

This method makes it possible to overcome local minima. Compared with the algorithms presented above, it has no apparent additional disadvantages. Like the others, however, QHM is not sufficient to achieve the highest accuracy in deep neural networks, because it still relies on gradient directions, adjusted only by its parameters and by gradient normalization.

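Before drawing conclusions, note that for convex smooth objective functions $f_t$ the optimization methods above have almost the same regret bound [35,36]

$$ R(T) = \sum_{t=1}^{T} \left[ f_t(w_t) - f_t(w^*) \right], \qquad w^* = \arg\min_{w} \sum_{t=1}^{T} f_t(w), \tag{10} $$

which measures the convergence rate and belongs to the class $O(\sqrt{T})$. When the objective functions are strongly convex, the regret bounds of the algorithms above can be improved to $O(\log T)$, as was done in [37]. Such algorithms are referred to as algorithms for strongly convex optimization.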

SGD-type algorithms are used in convolutional, recurrent, autoencoder and graph neural networks. Their major disadvantage is that they use too little information about the properties of the loss function to reach the global minimum. SGD is guided by the direction of the gradient, which is not enough to increase the test accuracy. In modern neural networks, the preferred approaches are Adam and its modifications (Adam-type algorithms). These contain exponential moving averages of the gradient and of the squared gradient, which significantly improves the quality of training. These methods improve the optimization by estimating moments, which gives more information about the global minimum. This improvement also increases the accuracy of image recognition, time series prediction and object classification.

2.2. Adam-Type Algorithms

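The adaptive moment estimation (Adam) algorithm [39] continues the development of gradient-based optimization and significantly increases the accuracy of neural networks. Because it takes into account both the directions of the gradients and the means of the moments, the minimization of the loss function more often converges to the neighborhood of the global minimum. The iterative formula is

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1) g_t, \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2) g_t^2, \\
\hat m_t &= \frac{m_t}{1-\beta_1^t}, \qquad \hat v_t = \frac{v_t}{1-\beta_2^t}, \\
w_{t+1} &= w_t - \alpha \frac{\hat m_t}{\sqrt{\hat v_t} + \epsilon}.
\end{aligned}
\tag{11}
$$

Here $m_t$ and $v_t$ are called moments. They are exponential moving averages of $g_t$ and $g_t^2$, respectively, and $\hat m_t$, $\hat v_t$ are their bias-corrected versions.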
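A scalar sketch of the Adam iteration with bias correction follows, where `t` is the 1-based step counter. The defaults $\beta_1 = 0.9$, $\beta_2 = 0.999$ and $\epsilon = 10^{-8}$ are the commonly used values. The setting `lr=0.1`, the toy loss $(w-3)^2$ and the helper names are assumptions for the example.

```python
import math


def adam_step(w, m, v, g, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam step; t is the 1-based iteration counter."""
    m = beta1 * m + (1.0 - beta1) * g              # first moment
    v = beta2 * v + (1.0 - beta2) * g * g          # second moment
    m_hat = m / (1.0 - beta1 ** t)                 # bias corrections
    v_hat = v / (1.0 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


def run_adam(steps, w=0.0):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = 2.0 * (w - 3.0)                        # toy loss f(w) = (w - 3)^2
        w, m, v = adam_step(w, m, v, g, t)
    return w
```

Because of the bias correction, the first step has magnitude almost exactly `lr` regardless of the scale of the gradient, since $\hat m_1 = g_1$ and $\hat v_1 = g_1^2$.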

Several modified versions of the adaptive moment estimation algorithm (11) were proposed. They differ in how they adapt the step-size and manipulate the exponential moving averages. Analogously to SGDW, a modification called Adam with decoupled weight decay (AdamW) was proposed in [40]. In the usual Adam with $L_2$ regularization, weights that tend to have large gradients of $f$ are regularized less than they would be with decoupled weight decay, because the gradient of the regularizer is scaled together with the gradient of $f$. AdamW, with the same exponential moving averages as (11), can be described by the following iteration formula:

$$ w_{t+1} = w_t - \eta_t \left( \alpha \frac{\hat m_t}{\sqrt{\hat v_t} + \epsilon} + \lambda w_t \right), $$

where $\eta_t$ is a schedule multiplier and $\lambda$ is an $L_2$ regularization factor. It was shown in [41] that this modification with decoupled weight decay yields substantially better generalization than the common implementation of Adam with $L_2$ regularization. Another way to modify Adam is projected Adam, called AdamP [42].
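To contrast decoupled weight decay with $L_2$ regularization inside Adam, the following scalar sketch implements both updates. The schedule multiplier is fixed to $\eta_t = 1$. The helper names and all values are illustrative assumptions.

```python
import math


def adamw_step(w, m, v, g, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8,
               weight_decay=0.1, eta=1.0):
    """AdamW: Adam moments of the loss gradient only, plus a decoupled decay term."""
    m = beta1 * m + (1.0 - beta1) * g
    v = beta2 * v + (1.0 - beta2) * g * g
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    w = w - eta * (lr * m_hat / (math.sqrt(v_hat) + eps) + weight_decay * w)
    return w, m, v


def adam_l2_step(w, m, v, g, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8,
                 weight_decay=0.1):
    """For comparison: Adam with L2 regularization (decay gradient is rescaled by v_hat)."""
    g = g + weight_decay * w
    m = beta1 * m + (1.0 - beta1) * g
    v = beta2 * v + (1.0 - beta2) * g * g
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    return w - lr * m_hat / (math.sqrt(v_hat) + eps), m, v
```

Take a zero loss gradient and $\lambda = 0.01$. One AdamW step shrinks $w = 1$ to $0.99$. One step of Adam with $L_2$ regularization moves it to about $0.9$, because the small regularization gradient is normalized by $\sqrt{\hat v_t}$ and turned into a step of size close to `lr`.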

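AdamP, like SGDP in (8), is based on projecting the sum of the gradient and momentum vectors onto the tangent space of the weights. This approach accelerates the effective step-sizes for scale-invariant weights. The technique and its applications are described in [43]. It has the following representation:

$$ p_t = \frac{\hat m_t}{\sqrt{\hat v_t} + \epsilon}, $$

with $\hat m_t$ and $\hat v_t$ computed as in (11). Then, according to the cosine condition, we obtain

$$ q_t = \begin{cases} \Pi_{w_t}(p_t), & \text{if } |\cos(w_t, g_t)| < \delta / \sqrt{\dim(w)}, \\ p_t, & \text{otherwise}, \end{cases} \qquad w_{t+1} = w_t - \alpha q_t, $$

where $\Pi_{w}(x) = x - (\hat w \cdot x)\,\hat w$ with $\hat w = w/\|w\|$ is the projection onto the tangent space of $w$, and $\delta > 0$ is a threshold.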
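The following minimal sketch performs one AdamP-style step on a weight vector. It combines Adam's bias-corrected direction $p_t = \hat m_t/(\sqrt{\hat v_t}+\epsilon)$ with the cosine test and the tangent-space projection. The threshold $\delta = 0.1$ and the helper names are assumptions. Refinements of the original method, such as the Nesterov variant, channel-wise tests and weight-decay handling, are omitted.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project_tangent(w, x):
    """Pi_w(x) = x - (w_hat . x) w_hat: remove the radial component of x."""
    norm_w = math.sqrt(_dot(w, w))
    w_hat = [wi / norm_w for wi in w]
    c = _dot(w_hat, x)
    return [xi - c * wh for xi, wh in zip(x, w_hat)]


def adamp_step(w, m, v, g, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, delta=0.1):
    """One simplified AdamP-style step; returns (w, m, v, applied direction q)."""
    m = [beta1 * mi + (1.0 - beta1) * gi for mi, gi in zip(m, g)]
    v = [beta2 * vi + (1.0 - beta2) * gi * gi for vi, gi in zip(v, g)]
    bc1, bc2 = 1.0 - beta1 ** t, 1.0 - beta2 ** t
    p = [(mi / bc1) / (math.sqrt(vi / bc2) + eps) for mi, vi in zip(m, v)]
    # a small |cos(w, g)| signals a (nearly) scale-invariant weight
    cos = abs(_dot(w, g)) / (math.sqrt(_dot(w, w) * _dot(g, g)) + eps)
    q = project_tangent(w, p) if cos < delta / math.sqrt(len(w)) else p
    w = [wi - lr * qi for wi, qi in zip(w, q)]
    return w, m, v, q
```

For instance, take $w = (1, 0)$ and a gradient $(0, 1)$ orthogonal to it. The returned direction `q` has a zero first (radial) component, so the step does not change $\|w\|$ to first order.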

According to the notes in [44], this implies that momentum-based optimizers induce excessive growth of the scale-invariant weight norms. This growth prematurely shrinks the effective optimization steps and leads to sub-optimal performance. Like SGDP in Section 2.1, the resulting AdamP successfully suppresses the weight norm growth and trains a model at an unobstructed speed. Another way to accelerate convergence is to apply quasi-hyperbolic momentum, as was done for QHM in (9).

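Analogously to QHM, QHAdam [45] was proposed, which replaces both of Adam's moment estimators with quasi-hyperbolic terms. This approach is described as

$$ w_{t+1} = w_t - \alpha \frac{(1-\nu_1) g_t + \nu_1 \hat m_t}{\sqrt{(1-\nu_2) g_t^2 + \nu_2 \hat v_t} + \epsilon}, $$

where $\hat m_t$ and $\hat v_t$ are computed as in (11), and $\nu_1$, $\nu_2$ are the immediate discount factors.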
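A scalar sketch of QHAdam follows, using $w_{t+1} = w_t - \alpha\,[(1-\nu_1) g_t + \nu_1 \hat m_t] / [\sqrt{(1-\nu_2) g_t^2 + \nu_2 \hat v_t} + \epsilon]$. The values of $\nu_1$ and $\nu_2$, the learning rate, the toy loss and the helper names are assumptions.

```python
import math


def qhadam_step(w, m, v, g, t, lr=0.1, beta1=0.9, beta2=0.999,
                nu1=0.7, nu2=1.0, eps=1e-8):
    """QHAdam: quasi-hyperbolic averages of the raw gradient and Adam's moments."""
    m = beta1 * m + (1.0 - beta1) * g
    v = beta2 * v + (1.0 - beta2) * g * g
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    num = (1.0 - nu1) * g + nu1 * m_hat
    den = math.sqrt((1.0 - nu2) * g * g + nu2 * v_hat) + eps
    return w - lr * num / den, m, v


def run_qhadam(steps, w=0.0, **kwargs):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = 2.0 * (w - 3.0)                           # toy loss f(w) = (w - 3)^2
        w, m, v = qhadam_step(w, m, v, g, t, **kwargs)
    return w
```

With `nu1=1.0` and `nu2=1.0`, the step coincides with Adam.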

As noted in the previous subsection, the Nesterov trick NAG accelerates the usual SGD [46]. The same modification can be applied to Adam, transforming it into NAdam [47]. This technique converges to the neighborhood of the global minimum more often and in fewer iterations than Adam and its previous modifications, and it is simpler than AdamW, AdamP and QHAdam. Nevertheless, for the Rastrigin and Rosenbrock functions, (11)-(13) do not converge to the neighborhood of the global minimum. As explained in Section 2.1, $L_2$ regularization, projection and quasi-hyperbolic parameter techniques influence the step-size while taking gradient directions into account. When moment estimation is included, such modifications can accelerate convergence, but not necessarily to the neighborhood of the global minimum. There are, however, two techniques that make the minimization process "smoother": Nesterov-accelerated (NAdam) and rectified (RAdam) adaptive moment estimation.

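Let us present the iterative formula of NAdam:

$$ w_{t+1} = w_t - \frac{\alpha}{\sqrt{\hat v_t} + \epsilon} \left( \beta_1 \hat m_t + \frac{(1-\beta_1)\, g_t}{1-\beta_1^t} \right), $$

where $\hat m_t$ and $\hat v_t$ are the bias-corrected moments of (11).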
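The following scalar sketch implements NAdam in the simplified form $w_{t+1} = w_t - \frac{\alpha}{\sqrt{\hat v_t}+\epsilon}\left(\beta_1 \hat m_t + \frac{(1-\beta_1) g_t}{1-\beta_1^t}\right)$, that is, without the momentum schedule of the original formulation. The toy loss, `lr` and the helper names are assumptions.

```python
import math


def nadam_step(w, m, v, g, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """NAdam in a simplified form (no momentum schedule); t is 1-based."""
    m = beta1 * m + (1.0 - beta1) * g
    v = beta2 * v + (1.0 - beta2) * g * g
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    # Nesterov look-ahead: mix the corrected momentum with the current gradient
    direction = beta1 * m_hat + (1.0 - beta1) * g / (1.0 - beta1 ** t)
    return w - lr * direction / (math.sqrt(v_hat) + eps), m, v


def run_nadam(steps, w=0.0):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = 2.0 * (w - 3.0)                       # toy loss f(w) = (w - 3)^2
        w, m, v = nadam_step(w, m, v, g, t)
    return w
```

Compared with Adam, the current gradient enters twice, through $\hat m_t$ and through the look-ahead term. As a result, the very first step here is about $1.9\,\alpha$ instead of $\alpha$.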

This method extends NAG by adding exponential moving averages. Compared with Adam and its previous modifications, NAdam converges more accurately in deep convolutional neural networks and also speeds up minimization by "smoothing" the descent. However, this technique is ineffective in physics-informed neural networks, because the smoothed descent trajectory produces larger deviations in the solution of partial differential equations. Rectified Adam (RAdam) resolved this disadvantage.

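RAdam, proposed in [48], differs from other optimization methods by introducing a term that rectifies the variance of the adaptive learning rate. This modification has proved able to achieve higher test accuracy. The method has the following iterative formula:

$$ m_t = \beta_1 m_{t-1} + (1-\beta_1) g_t, \qquad v_t = \beta_2 v_{t-1} + (1-\beta_2) g_t^2, \qquad \hat m_t = \frac{m_t}{1-\beta_1^t}, $$

$$ \rho_\infty = \frac{2}{1-\beta_2} - 1, \qquad \rho_t = \rho_\infty - \frac{2 t \beta_2^t}{1-\beta_2^t}. $$

If the variance is tractable ($\rho_t > 4$), the adaptive learning rate is computed as

$$ l_t = \sqrt{\frac{1-\beta_2^t}{v_t}}, $$

the variance rectification term is calculated as

$$ r_t = \sqrt{\frac{(\rho_t - 4)(\rho_t - 2)\,\rho_\infty}{(\rho_\infty - 4)(\rho_\infty - 2)\,\rho_t}}, $$

and the parameters are updated with adaptive momentum:

$$ w_{t+1} = w_t - \alpha\, r_t\, \hat m_t\, l_t. $$

If the variance is not tractable, the update is instead

$$ w_{t+1} = w_t - \alpha\, \hat m_t. $$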
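The following scalar sketch performs one RAdam step following the procedure described above. It computes $\rho_t$, uses the rectified adaptive step if $\rho_t > 4$, and otherwise falls back to an un-adapted momentum step. The hyperparameters, the toy loss and the helper names are assumptions.

```python
import math


def radam_step(w, m, v, g, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One RAdam step; t is the 1-based iteration counter. Also returns rho_t."""
    m = beta1 * m + (1.0 - beta1) * g
    v = beta2 * v + (1.0 - beta2) * g * g
    m_hat = m / (1.0 - beta1 ** t)
    rho_inf = 2.0 / (1.0 - beta2) - 1.0
    rho_t = rho_inf - 2.0 * t * beta2 ** t / (1.0 - beta2 ** t)
    if rho_t > 4.0:
        # variance is tractable: adaptive step with rectification
        l_t = math.sqrt(1.0 - beta2 ** t) / (math.sqrt(v) + eps)
        r_t = math.sqrt((rho_t - 4.0) * (rho_t - 2.0) * rho_inf
                        / ((rho_inf - 4.0) * (rho_inf - 2.0) * rho_t))
        w = w - lr * r_t * m_hat * l_t
    else:
        # variance is not tractable: un-adapted momentum step
        w = w - lr * m_hat
    return w, m, v, rho_t


def run_radam(steps, w=0.0):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = 2.0 * (w - 3.0)                       # toy loss f(w) = (w - 3)^2
        w, m, v, _ = radam_step(w, m, v, g, t)
    return w
```

With $\beta_2 = 0.999$, $\rho_t \le 4$ for $t \le 4$. The first four iterations are therefore plain momentum steps, and the rectified adaptive step starts at $t = 5$. This built-in warm-up is the practical effect of the rectification.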

This method outperforms NAdam and other algorithms, especially in deep neural networks [49] such as AlexNet [50], ResNet [51], InceptionNet [52], GoogleNet [53] and Res-Next [54]. However, RAdam adapts the learning rate in an overly complex way and, like its predecessors, cannot converge to the neighborhood of the global minimum of the Rastrigin function. Moreover, there are simpler ways to adjust the learning rate and make minimization faster. One of them is the difference gradient approach, called DiffGrad [55].

The difference gradient approach is based on moment estimation. Instead of complex manipulations of the learning rate and weights, it calculates only one additional coefficient, called the DiffGrad friction coefficient (DFC). The main distinction of DiffGrad is that it uses the change in short-term gradients and adjusts the learning rate dynamically as needed. In other words, DiffGrad follows the rule that the parameter update should be smaller in regions where the gradient changes little. The DFC is designed to control the learning rate using information about the short-term behavior of the gradient. The DFC is denoted by $\xi_t$ and defined as

$$ \xi_t = \mathrm{AbsSig}(\Delta g_t) = \frac{1}{1 + e^{-|\Delta g_t|}}, $$

where $\Delta g_t$ is the change between the previous and current gradients, given as

$$ \Delta g_t = g_{t-1} - g_t. $$

In the DiffGrad optimization method, the steps up to the computation of the bias-corrected first-order moment $\hat m_t$ and the bias-corrected second-order moment $\hat v_t$ are the same as in Adam [38]. DiffGrad updates $w_{t+1}$ using the following rule:

$$ w_{t+1} = w_t - \alpha\, \xi_t \frac{\hat m_t}{\sqrt{\hat v_t} + \epsilon}. $$
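The following scalar sketch implements DiffGrad as an Adam step multiplied by the friction coefficient $\xi_t = 1/(1+e^{-|g_{t-1}-g_t|})$. The initialization $g_0 = 0$, the learning rate, the toy loss and the helper names are assumptions.

```python
import math


def dfc(g_prev, g):
    """DiffGrad friction coefficient xi_t = 1 / (1 + exp(-|g_{t-1} - g_t|)), in [0.5, 1)."""
    return 1.0 / (1.0 + math.exp(-abs(g_prev - g)))


def diffgrad_step(w, m, v, g, g_prev, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam moments and bias corrections, with the step scaled by the DFC."""
    m = beta1 * m + (1.0 - beta1) * g
    v = beta2 * v + (1.0 - beta2) * g * g
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    w = w - lr * dfc(g_prev, g) * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


def run_diffgrad(steps, w=0.0):
    m = v = 0.0
    g_prev = 0.0                                  # assumed: no gradient before step 1
    for t in range(1, steps + 1):
        g = 2.0 * (w - 3.0)                       # toy loss f(w) = (w - 3)^2
        w, m, v = diffgrad_step(w, m, v, g, g_prev, t)
        g_prev = g
    return w
```

Since $\xi_t \in [0.5, 1)$, the step is halved when the gradient barely changes and approaches the full Adam step when the gradient changes quickly.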

DiffGrad generates a high learning rate when the gradient changes strongly (i.e., the optimization is far from the optimal solution) and a low learning rate when the gradient changes minimally (i.e., the optimization is near the optimal solution). This technique also makes it possible to avoid some local minima, as the minimization of the Rastrigin and Rosenbrock functions shows [56]. The approach suits deep convolutional neural networks, because its moment estimation analyzes both past and current gradients. DiffGrad also adjusts the learning rate very accurately, avoiding overshooting the global minimum and reducing oscillation around it. The algorithm was tested in [57] with the ResNet50 model on an image categorization task over the CIFAR10 and CIFAR100 datasets. According to the recognition results, DiffGrad works better than SGDM (2), AdaDelta (5) and Adam (11). However, this approach does not guarantee high accuracy in other neural networks, especially quantum, spiking, complex-valued and physics-informed neural networks. This disadvantage is explained by the absence of any analysis of the curvature of the loss function being minimized. For this reason, a progressive optimization algorithm called Yogi was introduced in [58].

Like Adam, the Yogi algorithm scales the gradients down by the square root of the exponential moving average of past squared gradients. Unlike Adam, it controls the increase in the effective learning rate, which leads to even better performance with similar theoretical convergence guarantees [59]. It solves the problem of convergence failure in simple convex optimization settings, which Adam-type algorithms such as AdamW, AdamP, QHAdam, NAdam and RAdam cannot handle. The difference between $v_t$ and $v_{t-1}$, and its magnitude, depend on $v_{t-1}$ and $g_t^2$. When $v_{t-1}$ is much larger than $g_t^2$, Yogi, like Adam, increases the effective learning rate, but in a more controlled way. The approach has the following description:

$$ m_t = \beta_1 m_{t-1} + (1-\beta_1) g_t, \qquad v_t = v_{t-1} - (1-\beta_2)\, \mathrm{sign}(v_{t-1} - g_t^2)\, g_t^2, \qquad w_{t+1} = w_t - \alpha \frac{m_t}{\sqrt{v_t} + \epsilon}. $$
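The following scalar sketch implements Yogi with the additive second-moment update $v_t = v_{t-1} - (1-\beta_2)\,\mathrm{sign}(v_{t-1} - g_t^2)\, g_t^2$, without bias correction. The value $\epsilon = 10^{-3}$, `lr`, the toy loss and the helper names are assumptions.

```python
import math


def yogi_second_moment(v, g, beta2=0.999):
    """Yogi: v_t = v_{t-1} - (1 - beta2) * sign(v_{t-1} - g_t^2) * g_t^2 (additive change)."""
    g2 = g * g
    sign = (v > g2) - (v < g2)                    # sign(v_{t-1} - g_t^2) in {-1, 0, 1}
    return v - (1.0 - beta2) * sign * g2


def yogi_step(w, m, v, g, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-3):
    m = beta1 * m + (1.0 - beta1) * g
    v = yogi_second_moment(v, g, beta2)
    w = w - lr * m / (math.sqrt(v) + eps)
    return w, m, v


def run_yogi(steps, w=0.0):
    m = v = 0.0
    for _ in range(steps):
        g = 2.0 * (w - 3.0)                       # toy loss f(w) = (w - 3)^2
        w, m, v = yogi_step(w, m, v, g)
    return w
```

For $v_{t-1} = 1$ and $g_t = 0.1$, Yogi lowers $v$ only to $0.99999$, whereas Adam's multiplicative update would give $0.99901$. This slower decrease keeps the growth of the effective learning rate under control.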

This method shows better results in deep convolutional neural networks than DiffGrad and the other Adam-type algorithms above. Later, the authors of [60,61] defined new optimization methods for deep learning, such as AdaBelief and AdaBound.

The main feature of AdaBelief is that it adapts the learning rate according to the "belief" in the current gradient direction. AdaBelief differs from Adam in the parameters $v_t$ and $s_t$, which are defined as exponential moving averages of $g_t^2$ and $(g_t - m_t)^2$, respectively. AdaBelief treats $m_t$ as the prediction of the gradient at the next time step. If the observed gradient deviates greatly from the prediction, the current observation is distrusted and a small step is taken. If the observed gradient is close to the prediction, it is trusted and a large step is taken. This allows high test accuracy in convolutional neural networks, as presented in [61] on ImageNet and CIFAR10.
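The description above can be sketched with the AdaBelief update as we understand it from [61]: $s_t = \beta_2 s_{t-1} + (1-\beta_2)(g_t - m_t)^2 + \epsilon$, bias corrections as in Adam, and $w_{t+1} = w_t - \alpha \hat m_t / (\sqrt{\hat s_t} + \epsilon)$. Treat this as a hedged sketch. The value `lr=0.01`, the toy loss and the helper names are assumptions.

```python
import math


def adabelief_step(w, m, s, g, t, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """One AdaBelief-style step; t is the 1-based iteration counter."""
    m = beta1 * m + (1.0 - beta1) * g
    # "belief": EMA of the squared deviation of g_t from its prediction m_t
    s = beta2 * s + (1.0 - beta2) * (g - m) ** 2 + eps
    m_hat = m / (1.0 - beta1 ** t)
    s_hat = s / (1.0 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(s_hat) + eps)
    return w, m, s


def run_adabelief(steps, w=0.0, lr=0.01):
    m = s = 0.0
    for t in range(1, steps + 1):
        g = 2.0 * (w - 3.0)                       # toy loss f(w) = (w - 3)^2
        w, m, s = adabelief_step(w, m, s, g, t, lr=lr)
    return w
```

The only change with respect to Adam is the quantity under the square root. When $g_t$ stays close to its prediction $m_t$, $s_t$ is small and the step is large.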

Note that this approach has a modified version with the fast gradient sign method (FGSM), presented in [62,63].

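The AdaBound method restricts the learning rate between lower and upper continuous functions, which are called clips. This technique reduces the risk of vanishing or exploding learning rates. The approach is defined as

$$ m_t = \beta_1 m_{t-1} + (1-\beta_1) g_t, \qquad v_t = \beta_2 v_{t-1} + (1-\beta_2) g_t^2, $$

$$ \eta_t = \frac{1}{\sqrt{t}}\, \mathrm{Clip}\!\left( \frac{\alpha}{\sqrt{v_t}},\, \eta_l(t),\, \eta_u(t) \right), \qquad w_{t+1} = w_t - \eta_t\, m_t, $$

where $\eta_l(t)$ and $\eta_u(t)$ are the lower and upper bound functions, which converge to the same constant as $t \to \infty$.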
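The following scalar sketch performs one AdaBound step, using $\eta_t = \mathrm{Clip}(\alpha/\sqrt{v_t}, \eta_l(t), \eta_u(t))/\sqrt{t}$ and $w_{t+1} = w_t - \eta_t m_t$. The bound functions $\eta_l(t) = 0.1 - 0.1/((1-\beta_2)t + 1)$ and $\eta_u(t) = 0.1 + 0.1/((1-\beta_2)t)$ are the example bounds we believe the AdaBound paper uses. Treat them as an assumption, together with the added `eps` (which avoids division by zero), the helper names and the other values.

```python
import math


def bounds(t, final_lr=0.1, gamma=1e-3):
    """Assumed bound functions eta_l(t), eta_u(t); both tend to final_lr (t >= 1)."""
    lower = final_lr * (1.0 - 1.0 / (gamma * t + 1.0))
    upper = final_lr * (1.0 + 1.0 / (gamma * t))
    return lower, upper


def adabound_step(w, m, v, g, t, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """One AdaBound step; t is the 1-based iteration counter. Also returns eta_t."""
    m = beta1 * m + (1.0 - beta1) * g
    v = beta2 * v + (1.0 - beta2) * g * g
    lower, upper = bounds(t, gamma=1.0 - beta2)
    # Clip(alpha / sqrt(v_t), eta_l(t), eta_u(t)) / sqrt(t)
    eta = min(max(lr / (math.sqrt(v) + eps), lower), upper) / math.sqrt(t)
    return w - eta * m, m, v, eta
```

As $t$ grows, both bounds approach $0.1$. AdaBound therefore gradually turns into SGD with momentum and a learning rate of $0.1/\sqrt{t}$.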

This approach is more complex than DiffGrad, Yogi and AdaBelief, but it can converge to the neighborhood of the global minimum. In experiments on minimizing the Rastrigin and Rosenbrock functions (https://github.com/jettify/pytorch-optimizer), AdaBound achieved the highest accuracy and converged to the neighborhood of the global minimum. However, this approach is too complex, and a much simpler method exists, called AdamInject [64]. It reduces time consumption while preserving the convergence rate.

AdamInject is one of the most recent first-order optimization algorithms. Unlike AdaBelief, it modifies one of Adam's exponential moving averages by injecting into it the difference between the previous parameters $w_{t-1}$ and the current parameters $w_t$. AdamInject has the following description.

Afterwards, the usual calculations are performed.

The algorithm was tested on the Rastrigin and Rosenbrock functions. After that, VGG16, ResNet, ResNext, SENet and DenseNet equipped with AdamInject were trained on CIFAR10 and showed the best results among known analogues.

The Adam-type optimization algorithms introduced above are used in deep convolutional neural networks such as Res-Net, Res-Next, InceptionNet and GoogleNet. Because of the advantages described above, which SGD-type algorithms lack, they are also applied in recurrent and spiking neural networks. However, in quantum, complex-valued and quaternion-valued neural networks, these approaches are less accurate than the usual SGDM with Nesterov condition. This problem led the researchers in [65] to extend the number of moments from 2 to 3. This approach is called positive-negative momentum (PNM).

2.3. Positive-Negative Momentum

The development of SGD- and Adam-type optimization algorithms cannot continue indefinitely. Other approaches and techniques are needed to extend the class of first-order methods. This issue led researchers to consider methods that use more than two exponential moving averages $m_t$ and $v_t$. The reason is that step-size regularization based on moment estimation, together with the modifications introduced in Section 2.1 and Section 2.2, has its limits. These limits can be seen in convolutional neural networks such as ResNet18, GoogLeNet and DenseNet. In these cases, an additional exponential moving average is needed to descend to the neighborhood of the global minimum.

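The paper [66] considers the conventional momentum method, also called Heavy Ball (HB), which is described in [67]. Later, the positive-negative momentum (PNM) optimization algorithm was proposed. The main feature of this approach is positive-negative averaging, which is an analogue of the exponential moving average in Adam. This averaging is described as follows:

$$ \bar m_t = (1+\beta_0)\, m_t^{+} - \beta_0\, m_t^{-}, $$

where $m_t^{+}$ and $m_t^{-}$ are momentum terms accumulated from the gradients of the iterations with the same parity as $t$ and with the opposite parity, respectively. The parameter $\beta_0 \ge 0$ sets the weight of the negative term.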

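Inspired by this simple idea, [66] proposed combining positive-negative averaging with the conventional momentum method. The positive-negative average is built from two momentum buffers, each updated on every second iteration:

$$ m_t = \beta_1^2 m_{t-2} + (1-\beta_1^2)\, g_t, $$

so that $m_t$ and $m_{t-1}$ always belong to different buffers. Stochastic gradient descent equipped with these momentum estimates, which adjust both the learning rate and the value of the gradient, is written as

$$ w_{t+1} = w_t - \frac{\alpha}{\sqrt{(1+\beta_0)^2 + \beta_0^2}} \left[ (1+\beta_0)\, m_t - \beta_0\, m_{t-1} \right]. $$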
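The following scalar sketch implements PNM following our reading of [66]. Two momentum buffers are updated on alternate iterations with factor $\beta_1^2$, combined as $(1+\beta_0)m_t - \beta_0 m_{t-1}$, and scaled by $1/\sqrt{(1+\beta_0)^2+\beta_0^2}$. The hyperparameters, the toy losses and the helper names are assumptions.

```python
import math


def pnm_step(w, bufs, g, t, lr=0.05, beta0=1.0, beta1=0.9):
    """One PNM step; bufs holds two momentum buffers updated on alternate steps."""
    bufs = list(bufs)
    cur = t % 2                                   # buffer playing the role of m_t
    bufs[cur] = beta1 ** 2 * bufs[cur] + (1.0 - beta1 ** 2) * g
    pn = (1.0 + beta0) * bufs[cur] - beta0 * bufs[1 - cur]   # positive-negative momentum
    scale = math.sqrt((1.0 + beta0) ** 2 + beta0 ** 2)       # normalizes the step size
    return w - lr / scale * pn, bufs


def run_pnm(steps, grad, w=0.0, **kwargs):
    bufs = [0.0, 0.0]
    for t in range(1, steps + 1):
        w, bufs = pnm_step(w, bufs, grad(w), t, **kwargs)
    return w
```

Under a constant gradient, both buffers converge to it and the positive-negative combination reduces to the gradient itself. Each step then has length $\alpha/\sqrt{(1+\beta_0)^2+\beta_0^2}$.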

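This approach is an analogue of SGD-type algorithms. It differs in the presence of the positive-negative average $(1+\beta_0) m_t - \beta_0 m_{t-1}$ and in the step-size normalization. An Adam-type analogue of PNM, called AdaPNM, was also proposed. It is described as

$$
\begin{aligned}
m_t &= \beta_1^2 m_{t-2} + (1-\beta_1^2)\, g_t, \\
\hat m_t &= \frac{(1+\beta_0)\, m_t - \beta_0\, m_{t-1}}{1-\beta_1^t}, \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2, \qquad v_{\max} = \max(v_t, v_{\max}), \qquad \hat v_t = \frac{v_{\max}}{1-\beta_2^t}, \\
w_{t+1} &= w_t - \frac{\alpha}{\sqrt{(1+\beta_0)^2 + \beta_0^2}} \, \frac{\hat m_t}{\sqrt{\hat v_t} + \epsilon}.
\end{aligned}
$$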
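The following scalar sketch implements AdaPNM following our reading of [66]. It combines the positive-negative momentum of PNM with an Adam-style denominator that uses the running maximum of $v_t$ (AMSGrad-style). All hyperparameter values and helper names are illustrative assumptions.

```python
import math


def adapnm_step(w, bufs, v, v_max, g, t, lr=0.05, beta0=1.0, beta1=0.9,
                beta2=0.999, eps=1e-8):
    """One AdaPNM step; t is the 1-based iteration counter."""
    bufs = list(bufs)
    cur = t % 2
    bufs[cur] = beta1 ** 2 * bufs[cur] + (1.0 - beta1 ** 2) * g
    m_hat = ((1.0 + beta0) * bufs[cur] - beta0 * bufs[1 - cur]) / (1.0 - beta1 ** t)
    v = beta2 * v + (1.0 - beta2) * g * g
    v_max = max(v, v_max)                         # AMSGrad-style running maximum
    v_hat = v_max / (1.0 - beta2 ** t)
    scale = math.sqrt((1.0 + beta0) ** 2 + beta0 ** 2)
    w = w - lr / scale * m_hat / (math.sqrt(v_hat) + eps)
    return w, bufs, v, v_max


def run_adapnm(steps, grad, w=0.0):
    bufs, v, v_max = [0.0, 0.0], 0.0, 0.0
    for t in range(1, steps + 1):
        w, bufs, v, v_max = adapnm_step(w, bufs, v, v_max, grad(w), t)
    return w
```

With a constant gradient $g_t = 1$, $\hat m_t \to 1$ and $\hat v_t = 1$. The step length therefore approaches $\alpha/\sqrt{(1+\beta_0)^2+\beta_0^2}$, the same as for PNM.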

The advantage of PNM and AdaPNM can be seen in [68], where deep neural networks such as ResNet, VGG, DenseNet and GoogleNet were tested on the CIFAR10 and CIFAR100 image datasets. These approaches give higher test accuracy than advanced Adam-type optimization algorithms such as Yogi and AdaBound. Equipping PNM and AdaPNM with the techniques used in the known analogues with two exponential moving averages could further improve the optimization process. Besides these algorithms, there is an extension of NAG and NAdam called the adaptive Nesterov momentum algorithm (Adan).

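The adaptive Nesterov momentum algorithm proposed in [69] is designed to accelerate the training of deep neural networks effectively. Adan first reformulates the vanilla Nesterov acceleration into a new Nesterov momentum estimation method. This method avoids the extra computation and memory overhead of computing the gradient at the extrapolation point. Adan then adopts the first- and second-order moments of the gradient used in adaptive gradient algorithms to accelerate convergence. This approach has the following form:

$$
\begin{aligned}
m_t &= (1-\beta_1)\, m_{t-1} + \beta_1 g_t, \\
v_t &= (1-\beta_2)\, v_{t-1} + \beta_2 (g_t - g_{t-1}), \\
n_t &= (1-\beta_3)\, n_{t-1} + \beta_3 \left[ g_t + (1-\beta_2)(g_t - g_{t-1}) \right]^2, \\
\eta_t &= \frac{\alpha}{\sqrt{n_t} + \epsilon}, \\
w_{t+1} &= (1 + \lambda \alpha)^{-1} \left[ w_t - \eta_t \left( m_t + (1-\beta_2)\, v_t \right) \right],
\end{aligned}
$$

where $\lambda$ is a weight decay parameter. Note that here $\beta_1, \beta_2, \beta_3$ weight the new terms rather than the previous averages.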
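The following scalar sketch performs one Adan step. Note the convention: $\beta_1, \beta_2, \beta_3$ weight the new terms, so the assumed defaults $0.02$, $0.08$ and $0.01$ correspond to the usual $0.98$, $0.92$ and $0.99$ of other optimizers. Using $g_0 = g_1$ at the first step (no gradient difference), zero initial averages, `lr`, the toy loss and the helper names are all assumptions.

```python
import math


def adan_step(w, state, g, lr=0.1, beta1=0.02, beta2=0.08, beta3=0.01,
              eps=1e-8, weight_decay=0.0):
    """One Adan step; state = (m, v, n, previous gradient or None)."""
    m, v, n, g_prev = state
    if g_prev is None:                    # assumed: no gradient difference at step 1
        g_prev = g
    diff = g - g_prev
    m = (1.0 - beta1) * m + beta1 * g
    v = (1.0 - beta2) * v + beta2 * diff
    n = (1.0 - beta3) * n + beta3 * (g + (1.0 - beta2) * diff) ** 2
    eta = lr / (math.sqrt(n) + eps)
    w = (w - eta * (m + (1.0 - beta2) * v)) / (1.0 + weight_decay * lr)
    return w, (m, v, n, g)


def run_adan(steps, w=0.0):
    state = (0.0, 0.0, 0.0, None)
    for _ in range(steps):
        g = 2.0 * (w - 3.0)               # toy loss f(w) = (w - 3)^2
        w, state = adan_step(w, state, g)
    return w
```

This sketch applies no bias correction, so the first steps are small (here the first step is $0.02$) and grow as the averages warm up.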

This method generalizes SGD, Adam and NAdam. The paper also shows its advantage over AdaBelief, which achieved the third-best test accuracy, after plain SGD. Despite all the modifications of Adam-type algorithms, plain SGD can give even better results, which motivates the search for other methods that improve on these modifications.

First-order optimization methods are suitable for image recognition, time series prediction and object classification. They consume little execution time and power, which keeps them relevant in modern neural networks. However, apart from PNM, AdaPNM and Adan, first-order optimization algorithms cannot significantly increase the accuracy of neural networks with complex architectures, such as graph, complex-valued and quantum neural networks. When solving differential equations, they cannot surpass the results of Adam, because physics-informed neural networks apply automatic differentiation on top of a multilayer perceptron. Therefore, second-order optimization algorithms are needed, which can significantly improve the minimization of the loss function.