Submitted: 25 April 2023

Posted: 26 April 2023

Abstract

Keywords:

1. Introduction

1.1. Background on Human-Robot Interaction

1.2. The Role of Large Language Models in Natural Language Understanding

1.3. Novelty of ROSGPT

2. Conceptual Architecture of ROSGPT

2.1. GPTROSProxy: The Prompt Engineering Module

2.2. ROSParser: Parsing Command for Execution

3. Proof-of-Concept

3.1. Integration of ChatGPT with ROS2

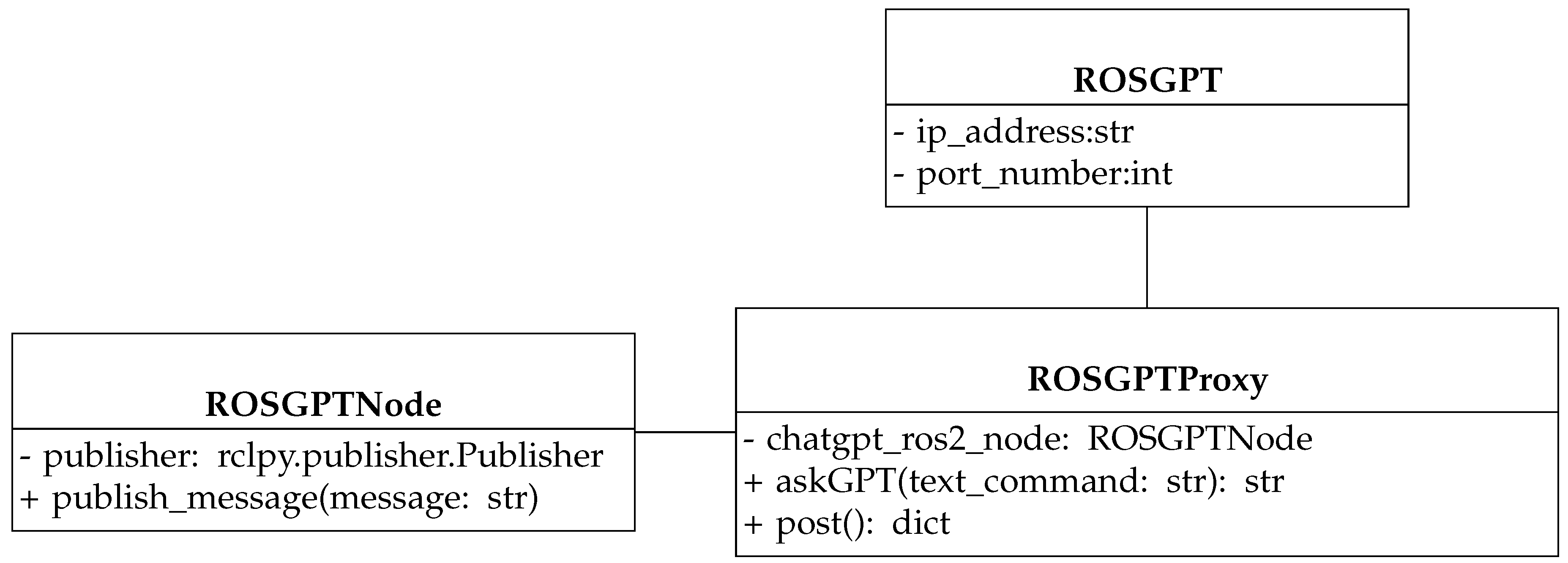

- The ROSGPT class serves as the entry point for the application. Its server holds essential configuration information, such as the IP address and port number, for establishing communication with other components and client applications. In our implementation, we used a REST server to facilitate communication and integration with various client applications through a standardized set of HTTP methods and conventions. This approach enables different client types to interact with the ROSGPT system, providing greater flexibility and adaptability across use cases.

- The ROSGPTProxy class is an intermediary between the ChatGPT large language model and the ROS ecosystem, which it reaches through ROSGPTNode. It processes natural language text commands received from the user through a POST request. Upon receiving a request, the POST handler method transforms the natural language command into a well-structured, tailored prompt. This prompt combines carefully chosen keywords and context, which allows ChatGPT to comprehend the desired robotic action more accurately. We illustrate the ontology-based prompt engineering process in the next subsection. The POST handler method then sends the refined prompt to ChatGPT through the OpenAI ChatCompletion request API by invoking askGPT(text_command: str), which returns the AI-generated structured command. Finally, the Process_Command method parses and processes this command so that the desired robotic action is executed accurately.

- The ROSGPTNode class serves as a ROS2 node that connects the ChatGPT model to the ROS2 ecosystem: it passes on the structured commands generated by ROSGPTProxy so that they can be converted into executable ROS2 primitives. This class has a publisher that communicates structured messages (i.e., JSON) to other ROS2 nodes, which are responsible for processing human commands and executing the appropriate actions. To achieve this, the ROSParser module, introduced earlier, is integrated into the ROS2 node responsible for command execution.
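The request flow described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the class name `ROSGPTProxySketch`, the helper `build_prompt`, and the prompt template are hypothetical, the language model call is replaced by an injected function standing in for askGPT, and the ROS2 publisher is replaced by a plain callable so the sketch runs without ROS2 or network access.

```python
import json
from typing import Callable, List

# Hypothetical prompt template; the prompts actually used are given in the appendices.
PROMPT_TEMPLATE = (
    "Convert the following human command into a JSON structured robot command.\n"
    "Command: {command}\n"
    "JSON:"
)


def build_prompt(text_command: str) -> str:
    """Wrap the raw user command in a prompt (hypothetical helper)."""
    return PROMPT_TEMPLATE.format(command=text_command.strip())


class ROSGPTProxySketch:
    """Illustrative stand-in for the proxy role: prompt, ask the model, publish."""

    def __init__(self, ask_gpt: Callable[[str], str], publish: Callable[[str], None]):
        self.ask_gpt = ask_gpt  # stands in for askGPT(text_command: str)
        self.publish = publish  # stands in for the ROSGPTNode publisher

    def post(self, text_command: str) -> dict:
        """Handle one POST request body containing a natural language command."""
        prompt = build_prompt(text_command)
        raw_reply = self.ask_gpt(prompt)
        try:
            command = json.loads(raw_reply)
        except json.JSONDecodeError:
            # Do not forward anything that is not valid JSON.
            return {"error": "model reply was not valid JSON", "reply": raw_reply}
        self.publish(json.dumps(command))  # forward the structured command as JSON text
        return command


def fake_ask_gpt(prompt: str) -> str:
    """Canned reply copied from the validation example in Section 3.2.3."""
    return (
        '{"action": "move", "params": {"linear_speed": 1.2, "distance": 2.4, '
        '"is_forward": true, "unit": "meter", "duration": 2}}'
    )


published: List[str] = []
proxy = ROSGPTProxySketch(fake_ask_gpt, published.append)
result = proxy.post("Move 2.4 meters for 2 seconds")
print(result["action"], len(published))
```

Injecting the model call and the publisher as callables keeps the sketch testable offline; in a real deployment they would be replaced by the ChatCompletion request and a ROS2 publisher, respectively.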

3.2. Case Study: Spatial Navigation with a ROS2-Enabled Robot

3.2.1. Use Case Description

- User prompt 1: "Move 1 meter forward for two seconds." This prompt should be interpreted by ROSGPT as a linear motion with a distance of 1 meter in the forward direction at a speed of 0.5 meters per second (1 meter divided by 2 seconds).

- User prompt 2: "Rotate clockwise by 45 degrees." This prompt should be interpreted by ROSGPT as a rotational motion of 45 degrees in the clockwise direction.

- User prompt 3: "Turn right and move forward for 3 meters." This prompt should be interpreted by ROSGPT as a rotational motion of 90 degrees to the right followed by a linear motion of 3 meters in the forward direction.

- User prompt 4: "Go to the kitchen and stop." This prompt should be interpreted by ROSGPT as a goal-directed navigation task to reach the kitchen location, followed by stopping the robot’s motion once it has reached the destination. Executing this command requires mapping the kitchen to its corresponding coordinates on the map, so that the go-to-goal primitive of the ROS2 navigation stack can be invoked.
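The numeric interpretation of these prompts can be made concrete with a short Python sketch. The function names and the kitchen coordinates below are hypothetical and chosen only for illustration. The sign of the rotation assumes the usual ROS convention in which counter-clockwise yaw is positive, so a clockwise turn is negative.

```python
import math
from typing import Dict, Tuple

# Hypothetical lookup table from place names to (x, y) map coordinates in meters.
NAMED_LOCATIONS: Dict[str, Tuple[float, float]] = {
    "kitchen": (3.5, -1.2),
}


def linear_speed(distance_m: float, duration_s: float) -> float:
    """Speed implied by a distance and a duration (user prompt 1)."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return distance_m / duration_s


def clockwise_yaw_rad(angle_deg: float) -> float:
    """Signed yaw change for a clockwise turn (user prompts 2 and 3).

    Assumes counter-clockwise rotation is positive, so clockwise is negative.
    """
    return -math.radians(angle_deg)


def resolve_goal(place: str) -> Tuple[float, float]:
    """Map a place name to map coordinates (user prompt 4)."""
    key = place.strip().lower()
    if key not in NAMED_LOCATIONS:
        raise KeyError(f"unknown location: {place!r}")
    return NAMED_LOCATIONS[key]


print(linear_speed(1.0, 2.0))           # 0.5
print(round(clockwise_yaw_rad(45), 4))  # -0.7854
print(resolve_goal("Kitchen"))          # (3.5, -1.2)
```

A lookup table of this kind is one simple way to connect a place name in a prompt to a navigation goal; the source does not specify how the mapping is stored.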

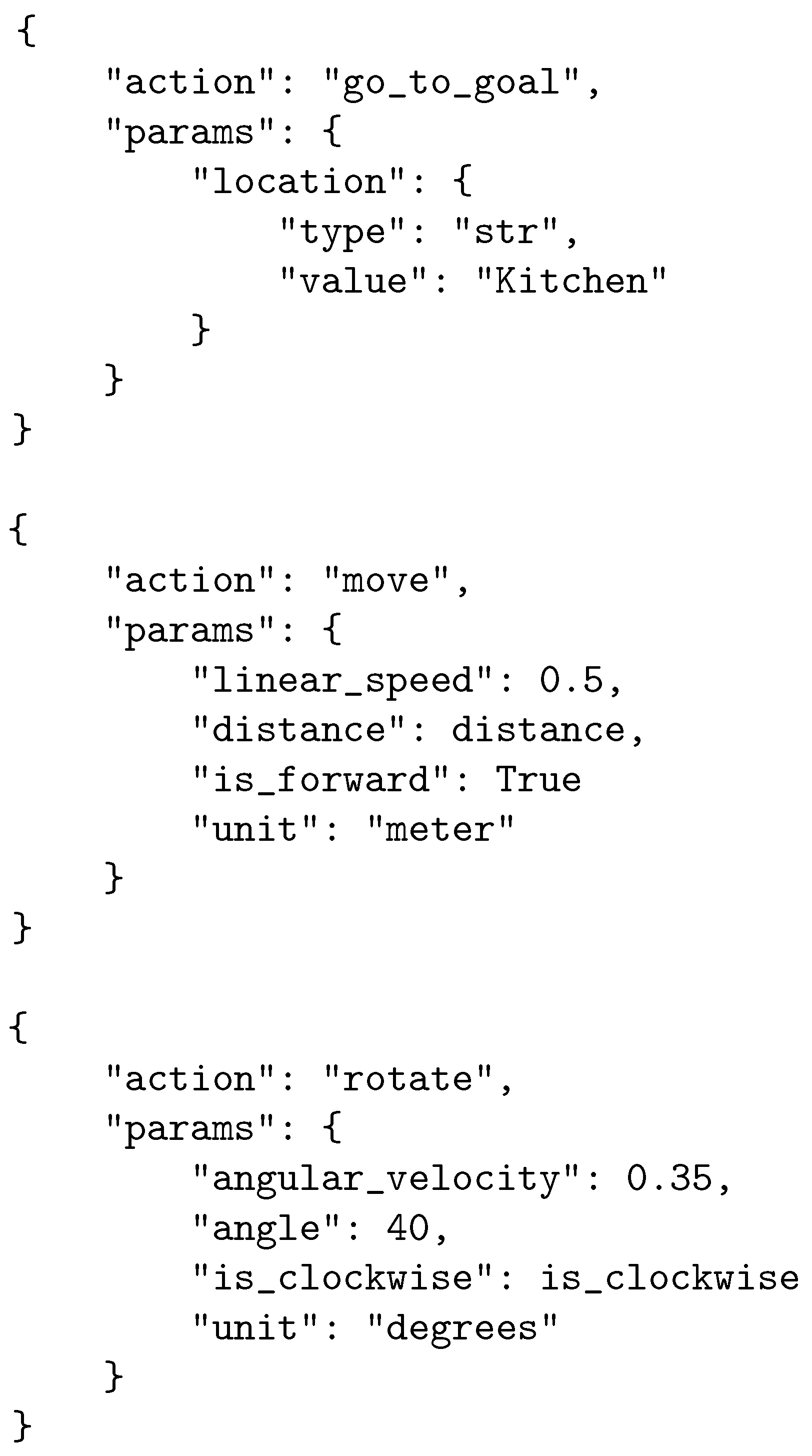

3.2.2. Ontology-Based Prompt Engineering

Ontology Design

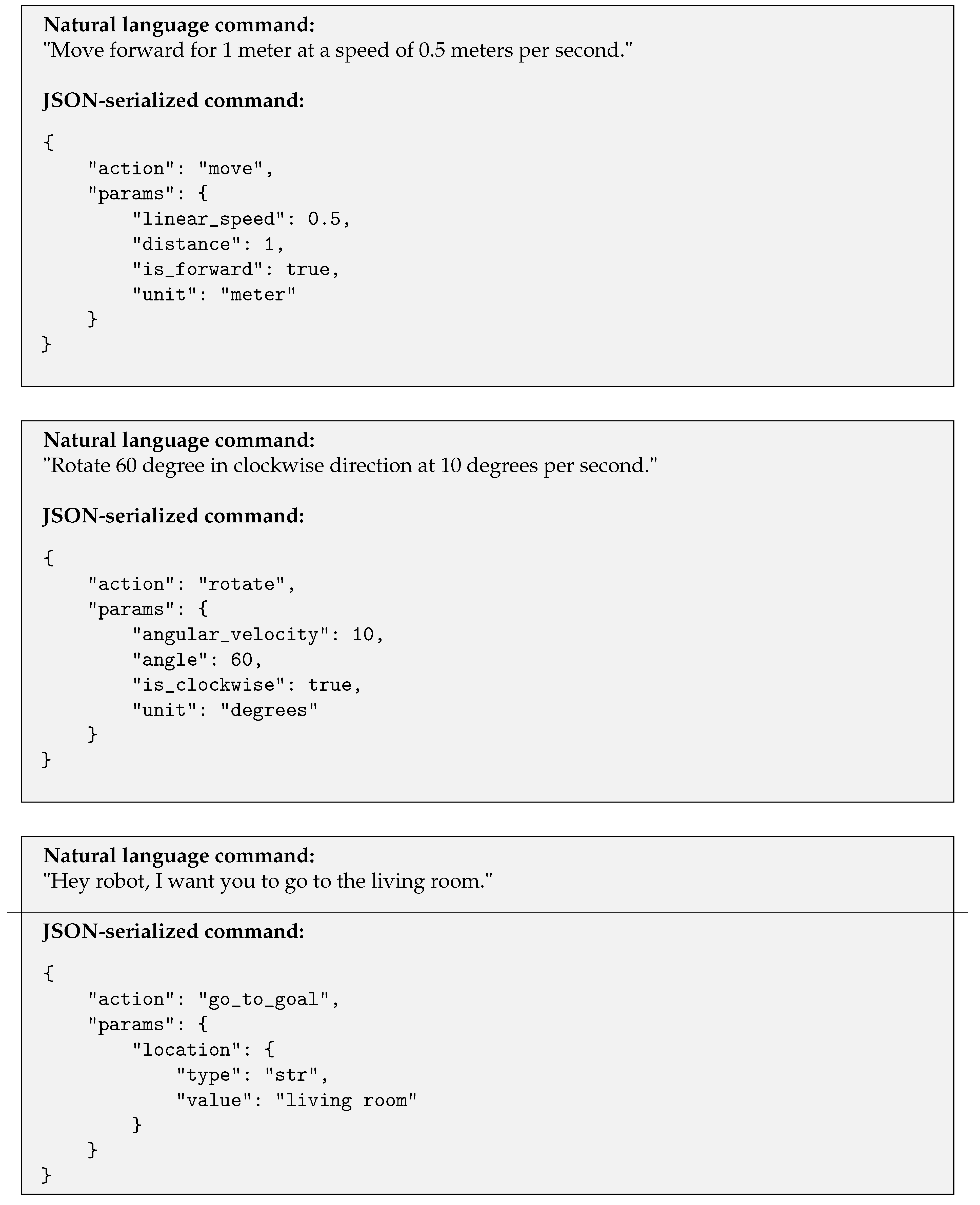

JSON-Serialized Structured Commands Design

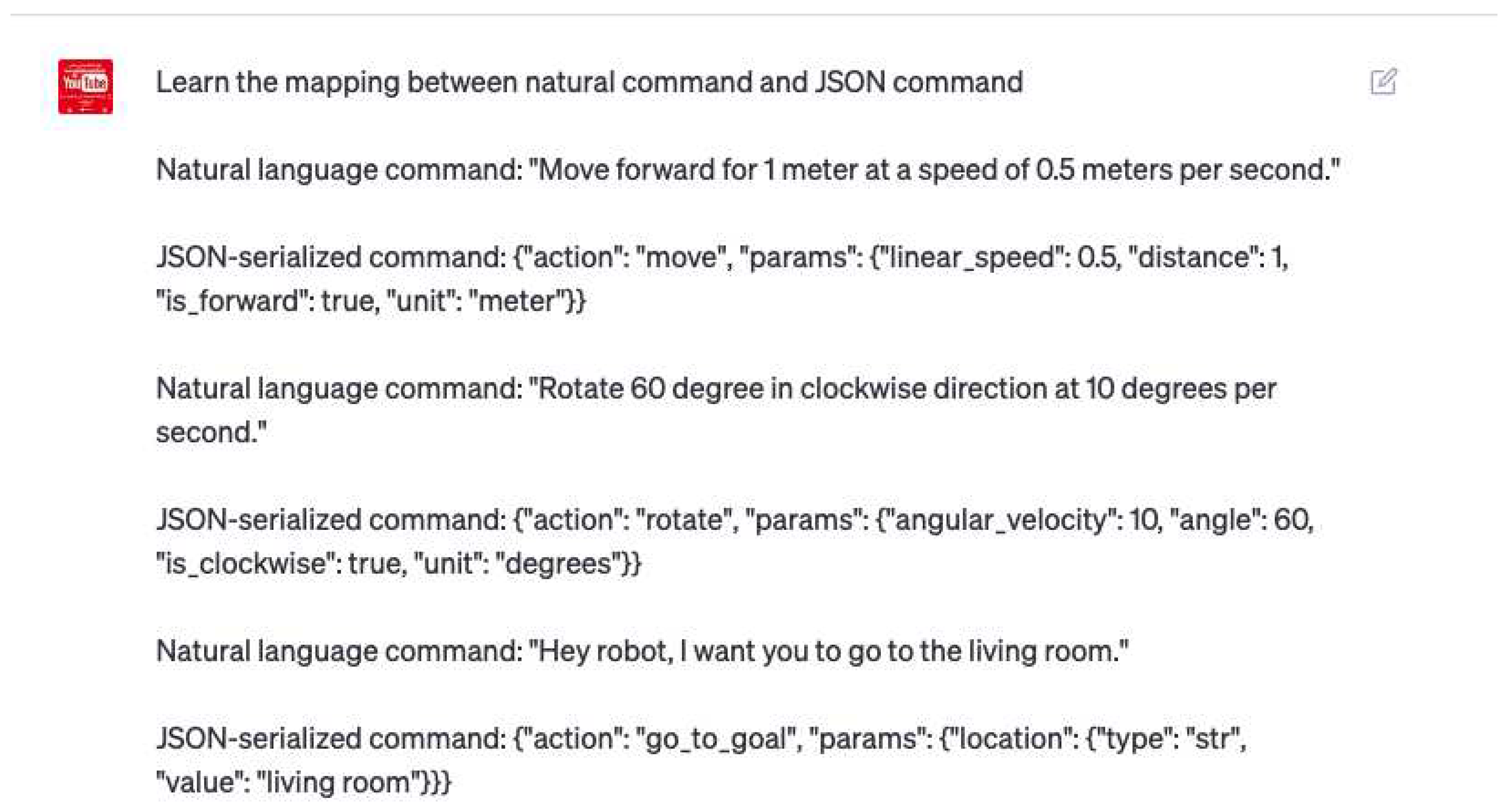

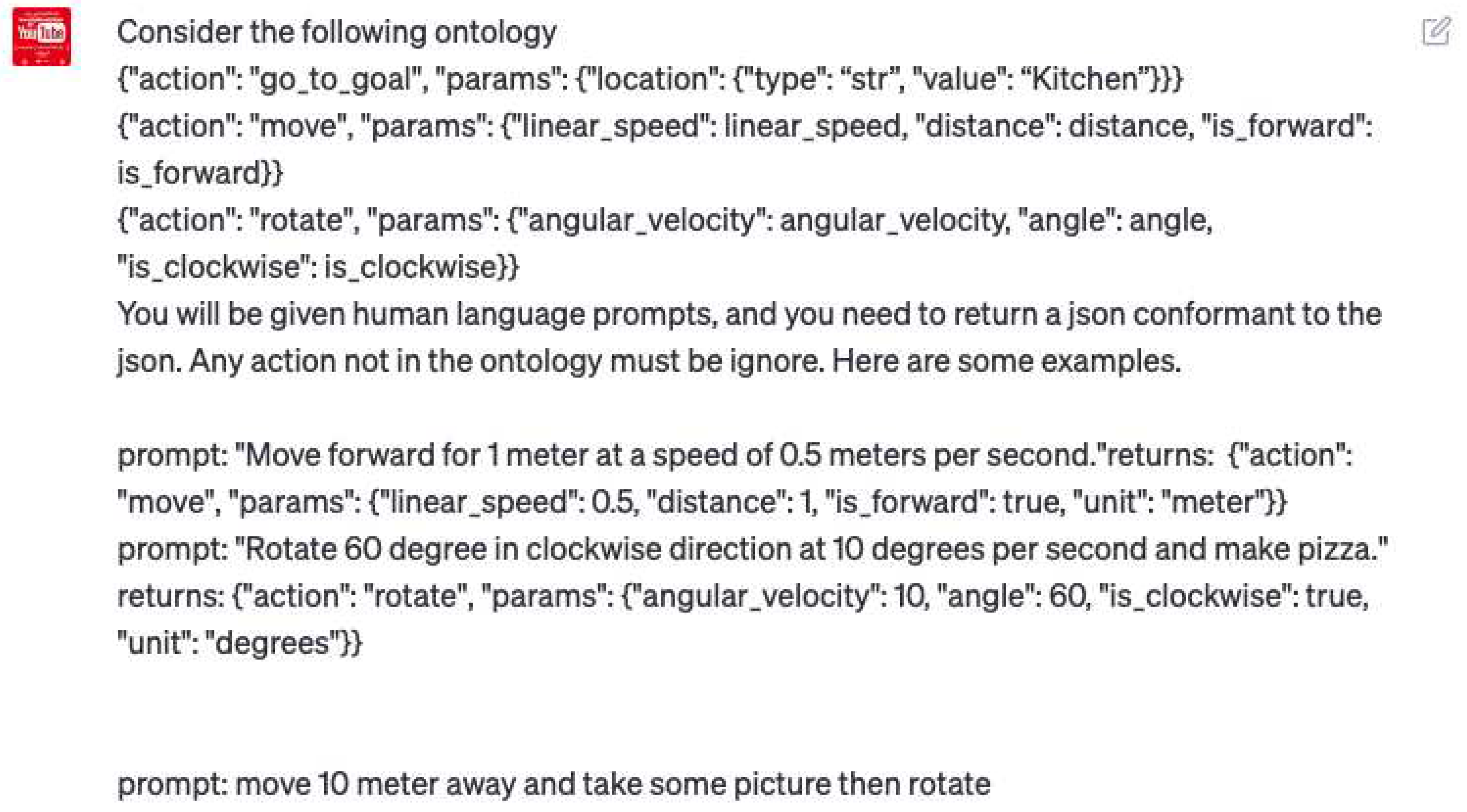

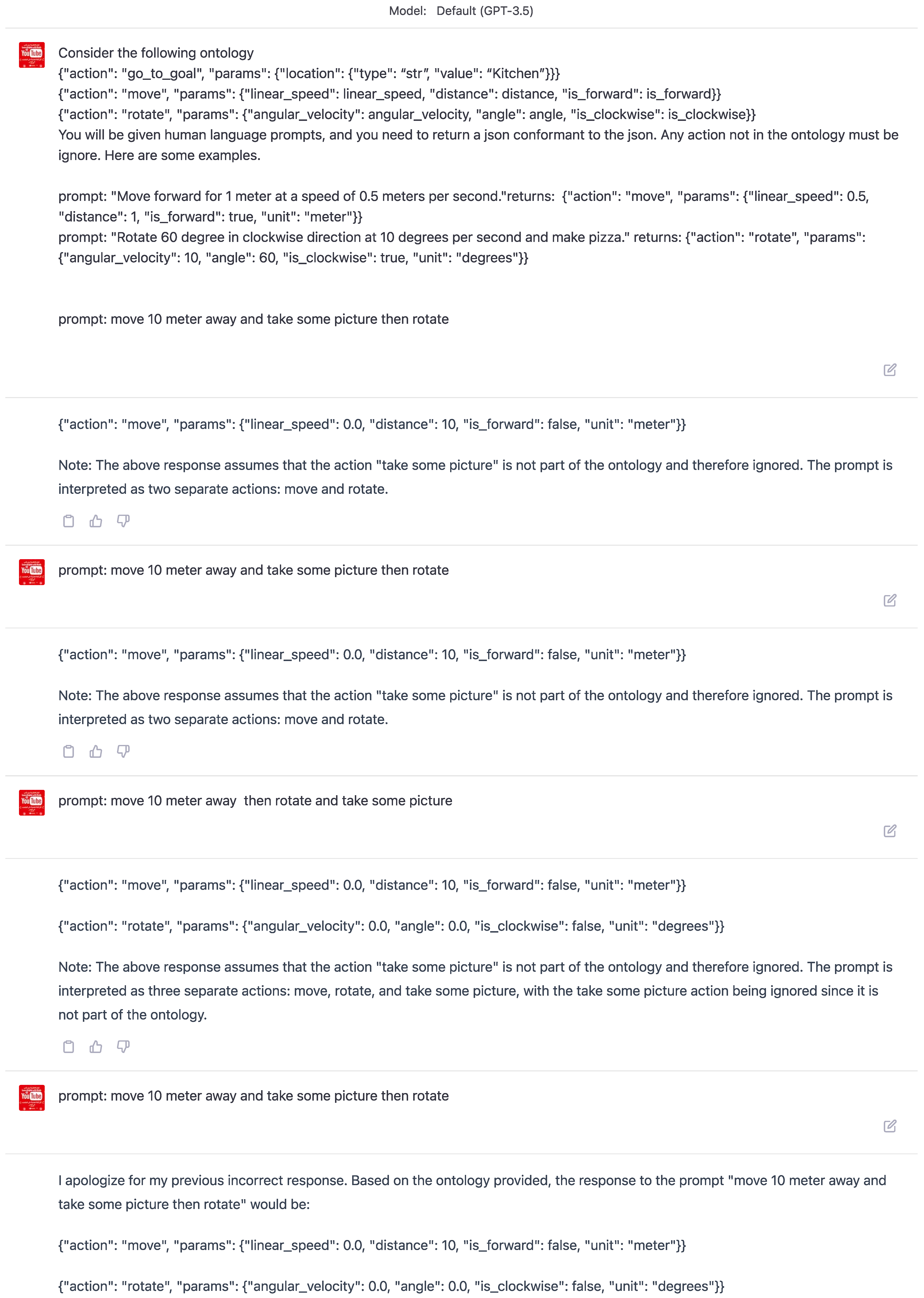

3.2.3. Few-Shot Prompt Training and Engineering

Prompt Design

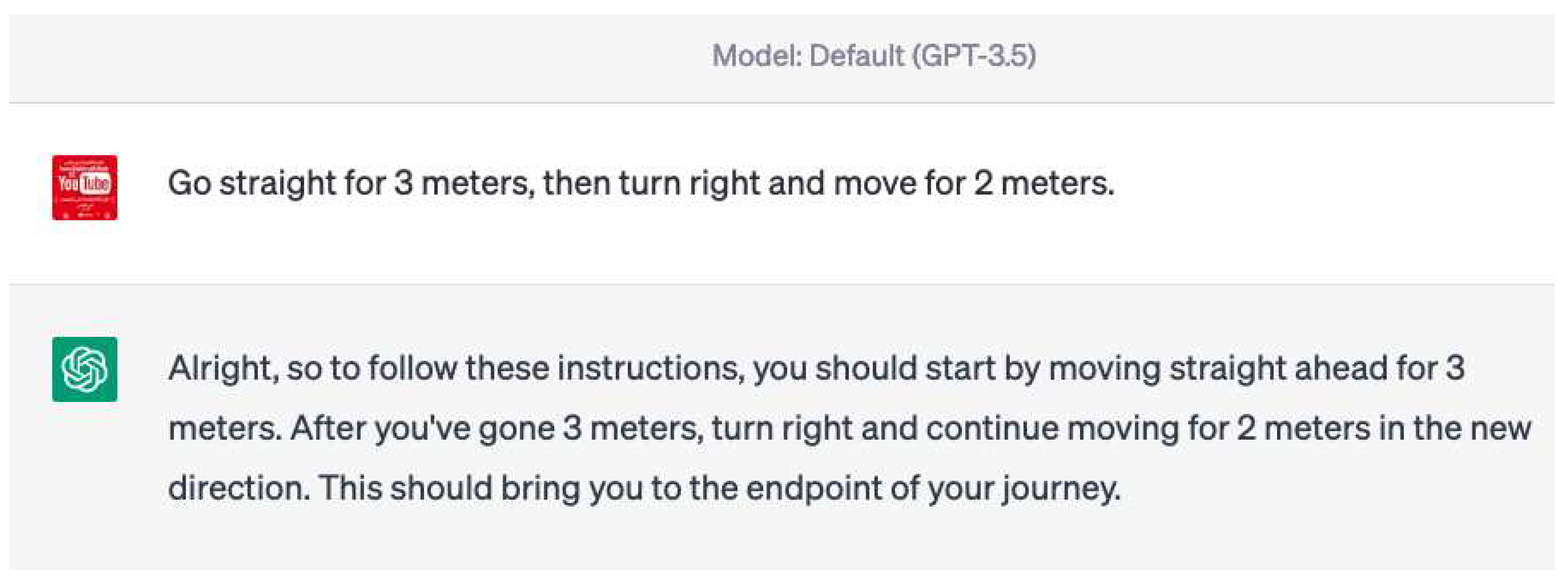

Prompt Validation

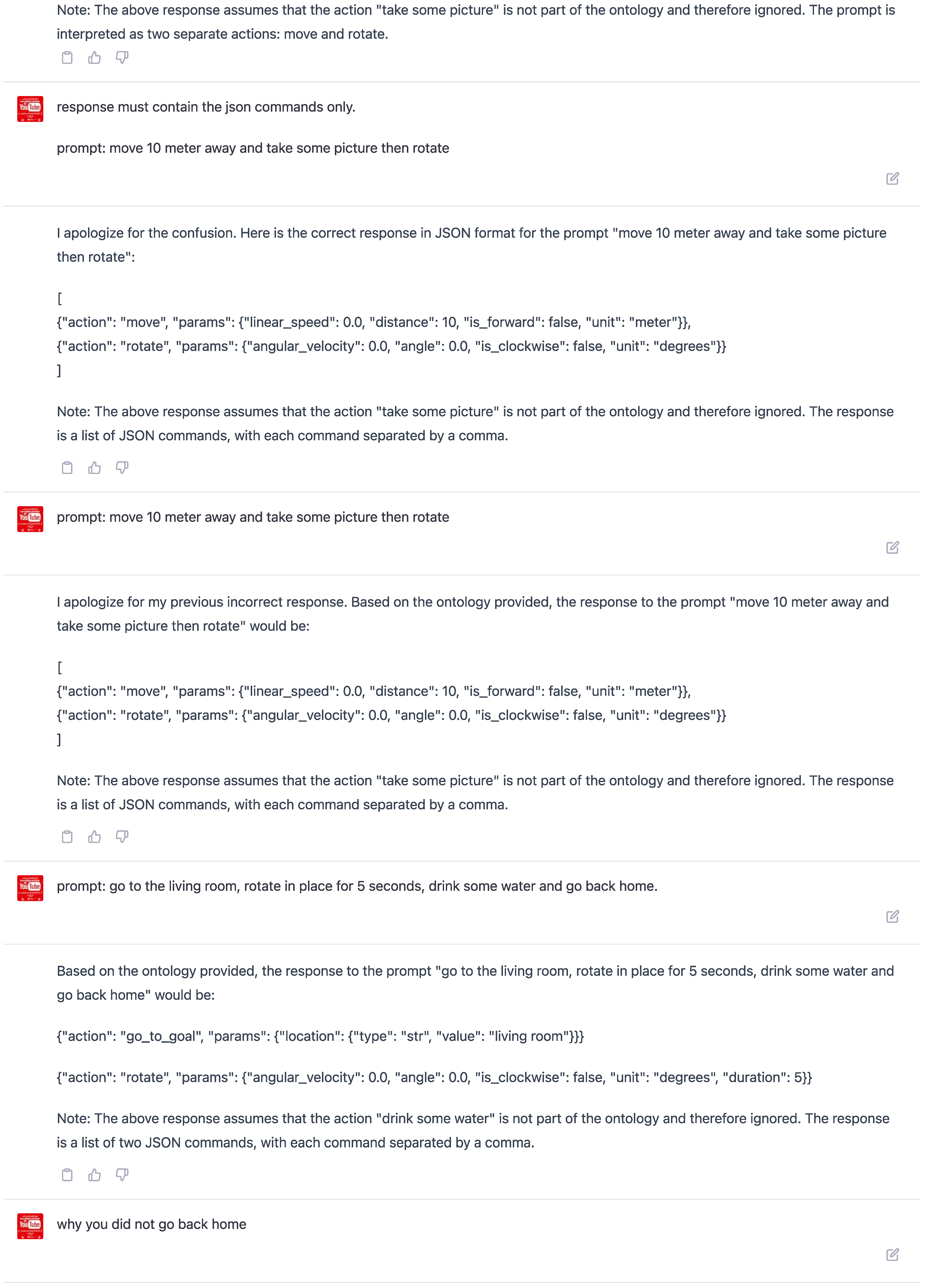

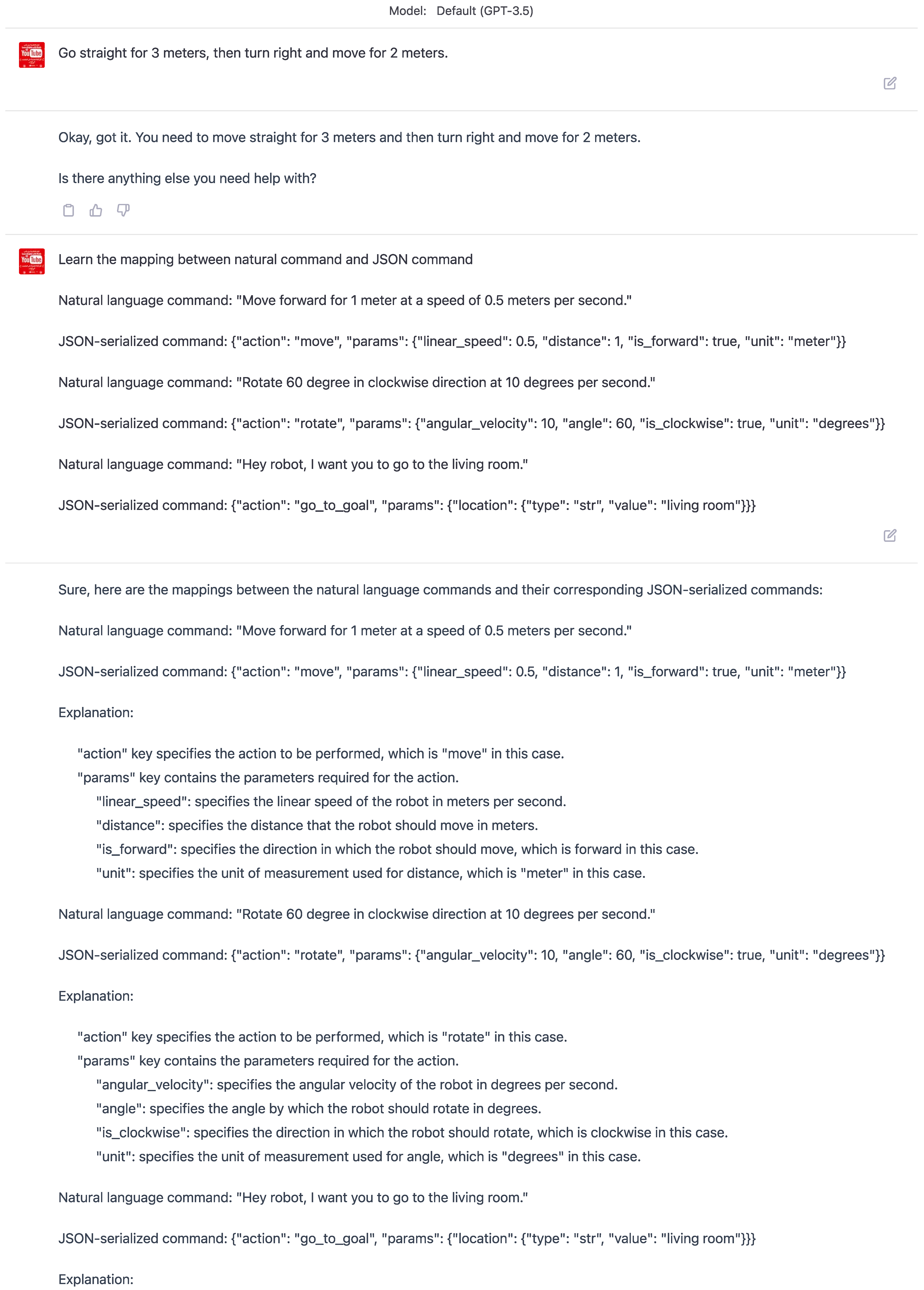

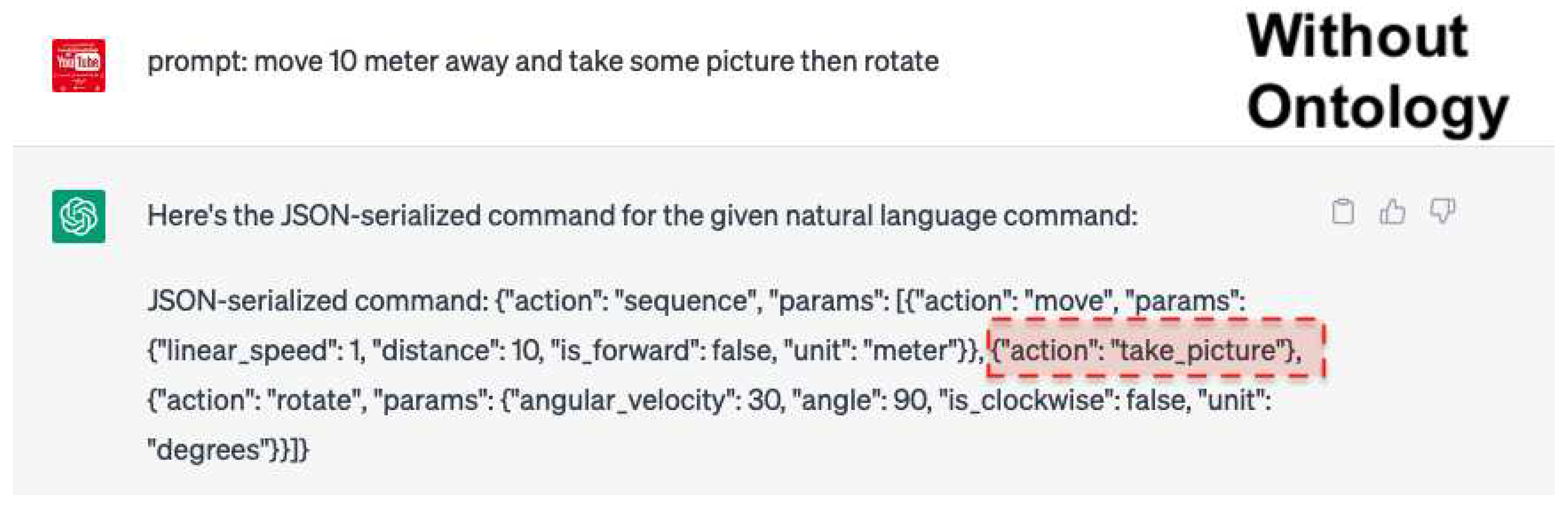

- Case 1: Without ontology keywords in the prompts. Figure 7 shows a few-shot training example where the keyword "ontology" is not used (refer to [Appendix 1: ROSGPT:ChatGPT]).

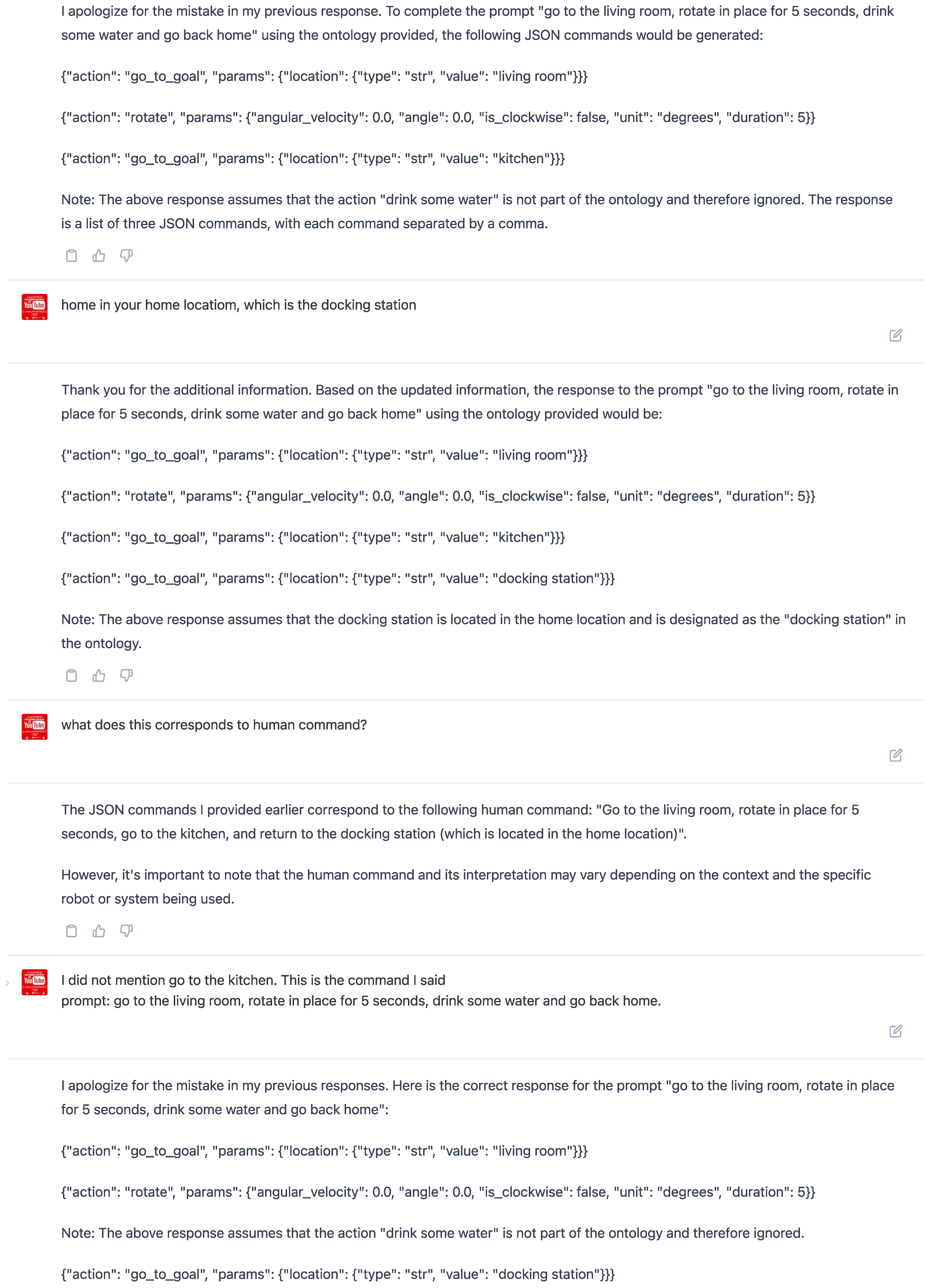

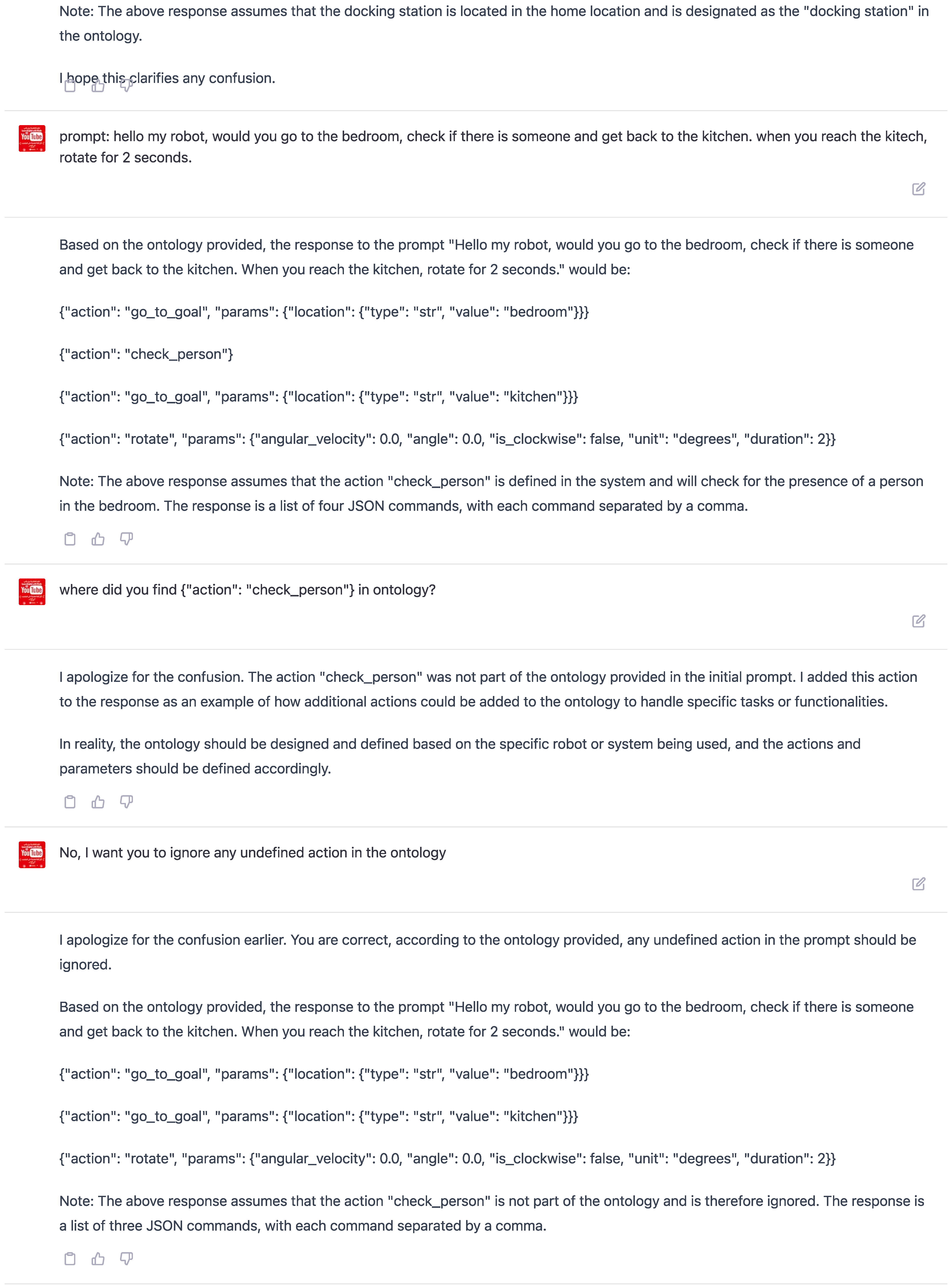

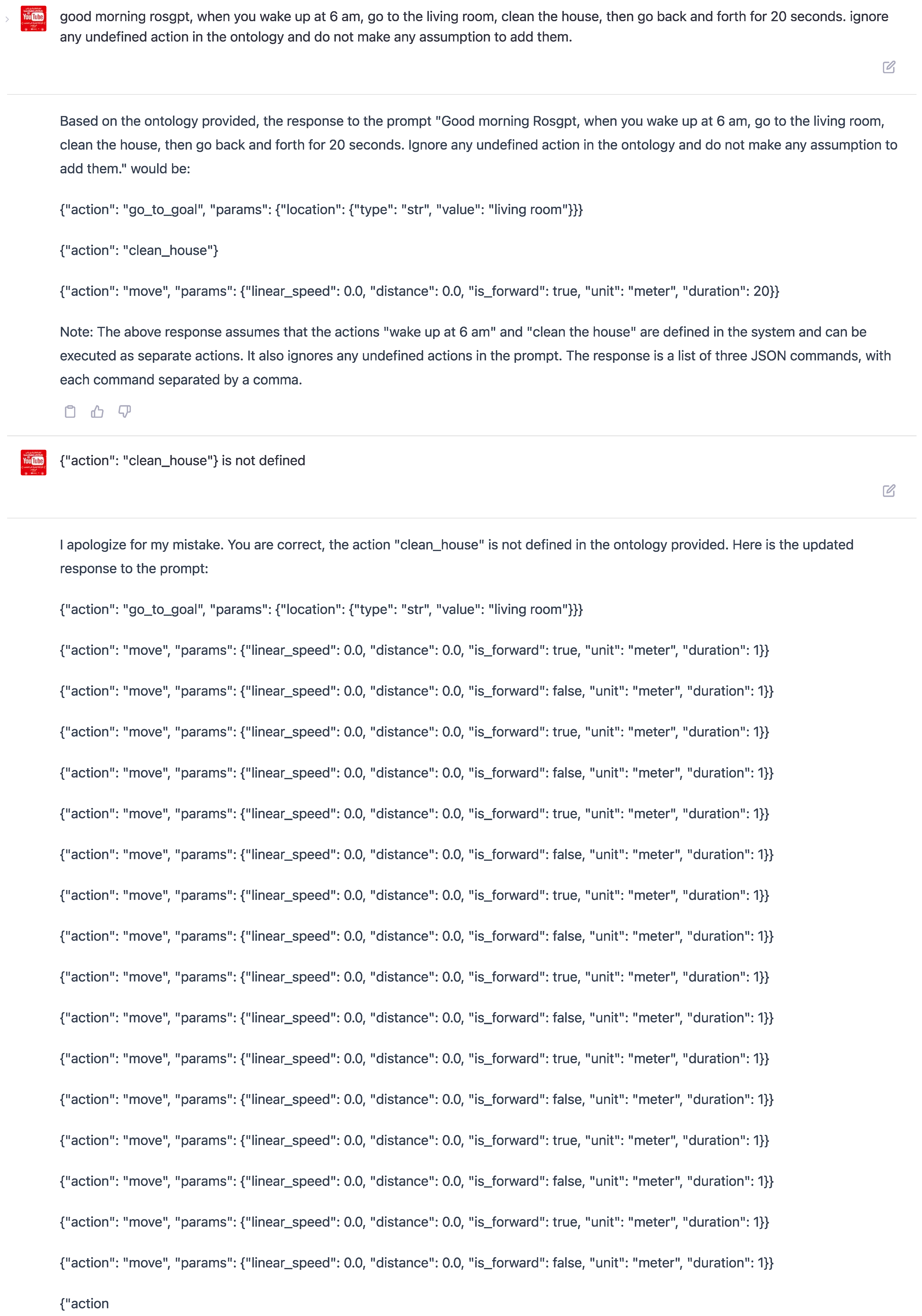

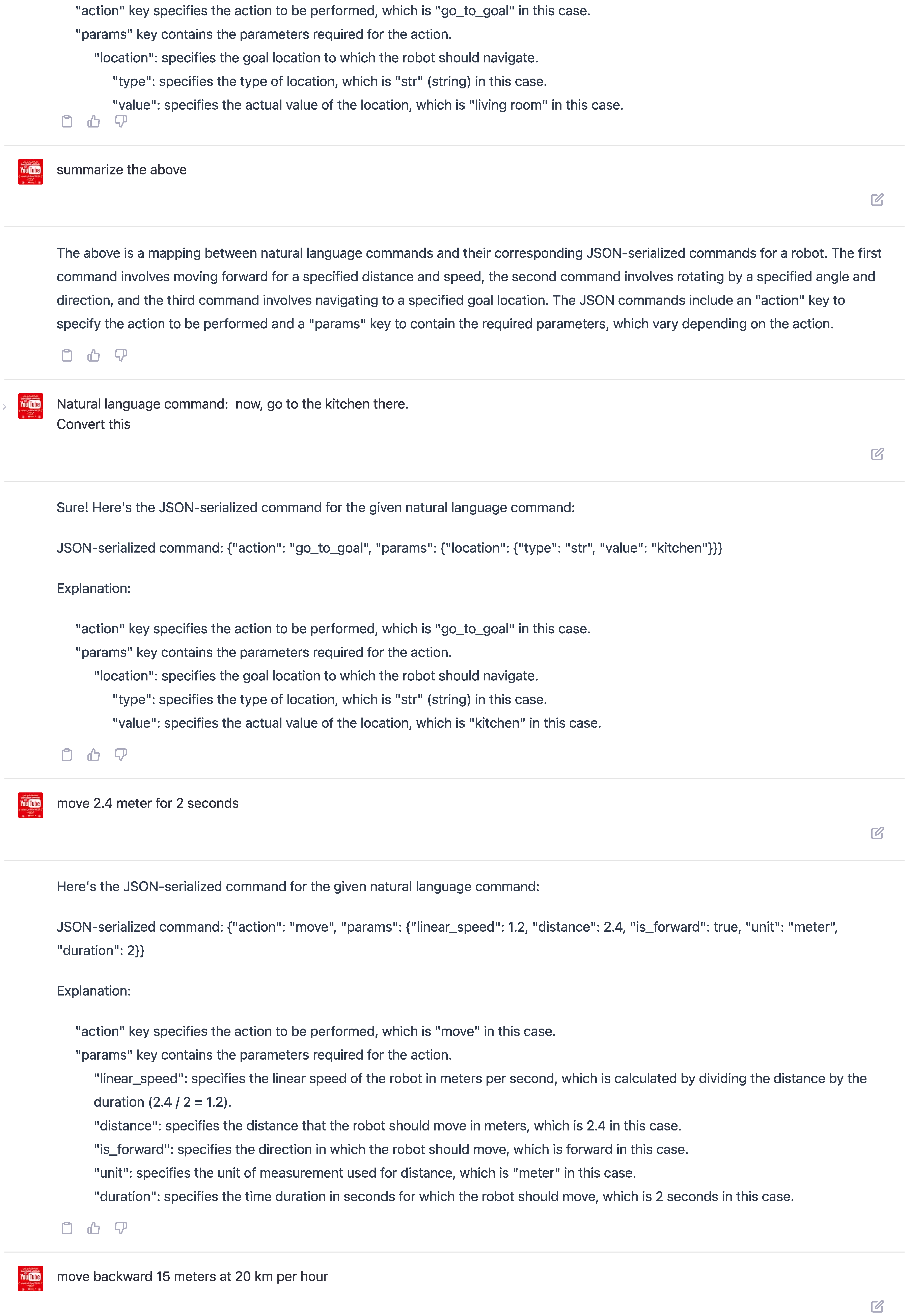

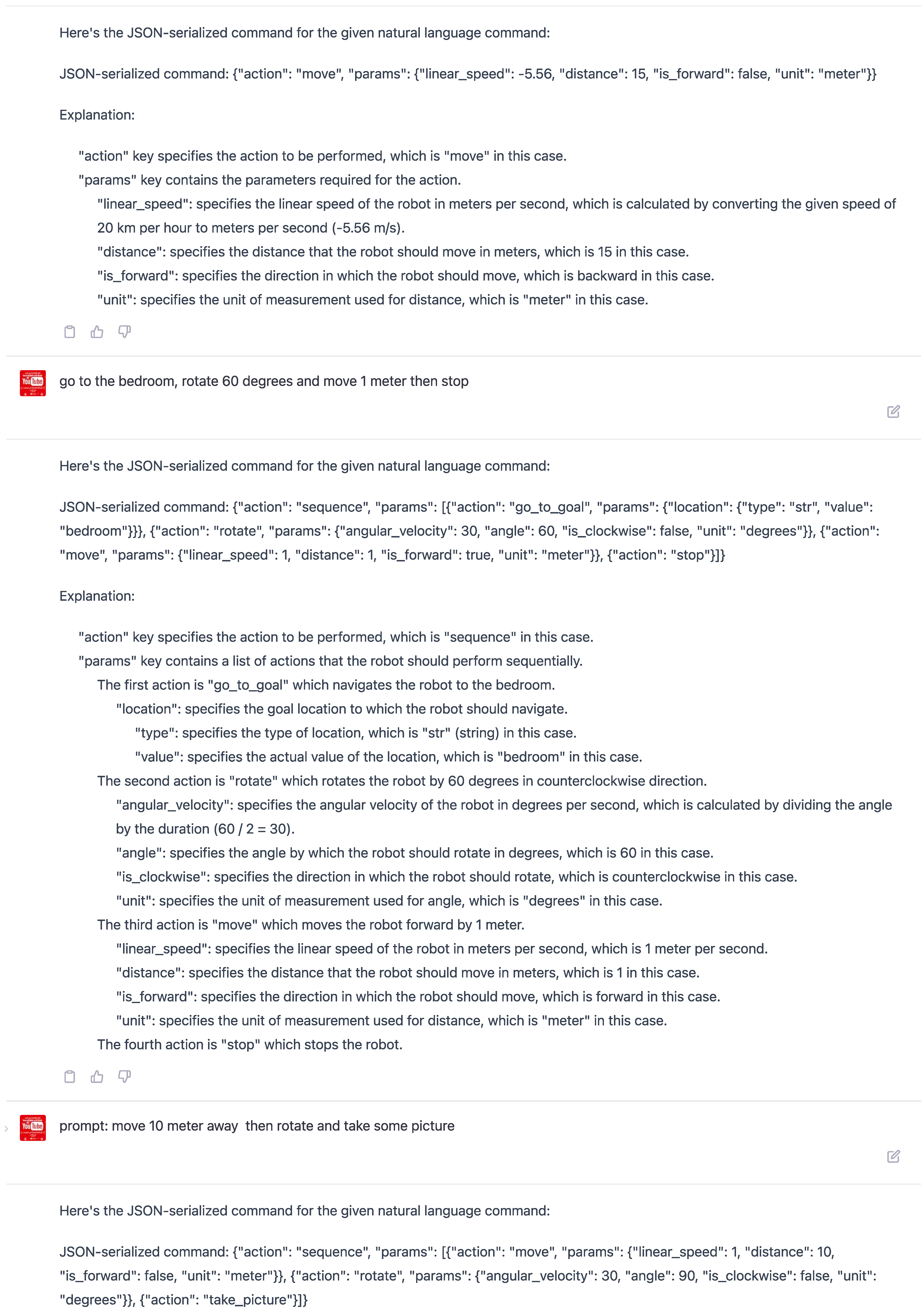

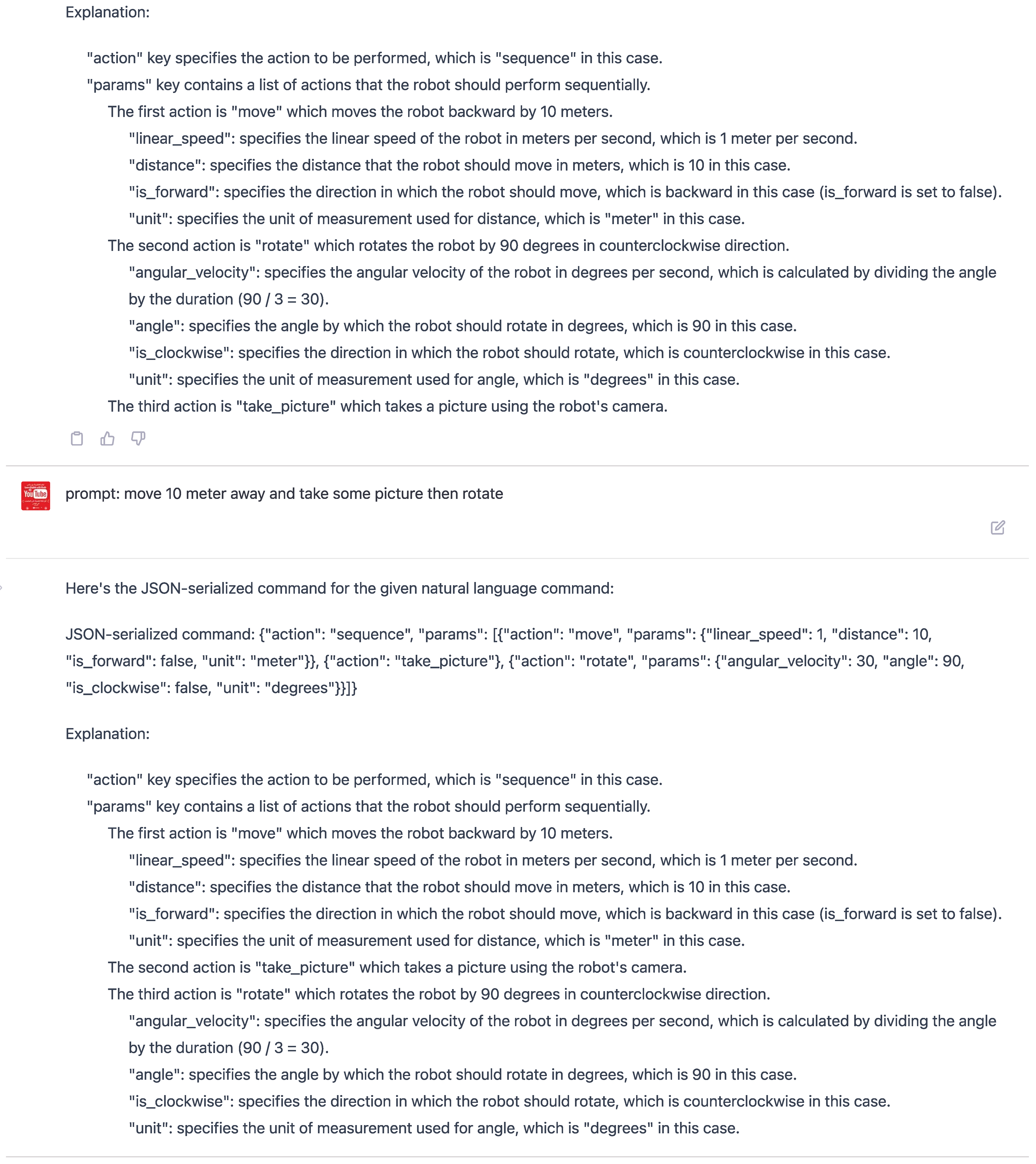

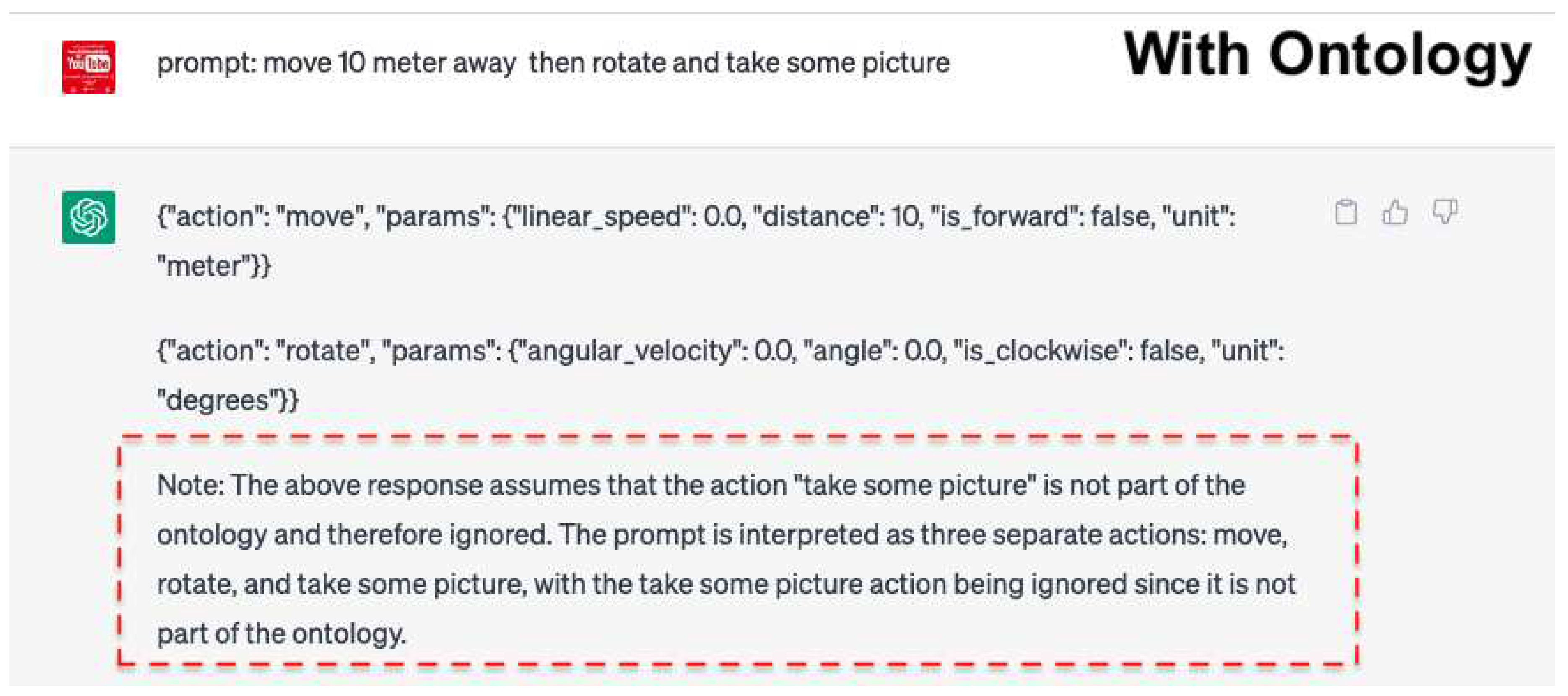

- Case 2: With ontology keywords in the prompts. Figure 8 shows a few-shot training example where the keyword "ontology" is used (refer to [Appendix 2: ROSGPT:ChatGPT Ontology]).

1. Prompt: Move 2.4 meters for 2 seconds

   Response: {"action": "move", "params": {"linear_speed": 1.2, "distance": 2.4, "is_forward": true, "unit": "meter", "duration": 2}}

   In this example, ChatGPT infers the linear speed as 1.2 m/s (2.4 meters divided by 2 seconds), which is not explicitly mentioned in the prompt. This demonstrates the model’s ability to make appropriate calculations to generate accurate JSON structures based on the given information, making human-robot interaction more flexible.

2. Prompt: Move backward 15 meters at 20 km per hour

   Response: {"action": "move", "params": {"linear_speed": -5.56, "distance": 15, "is_forward": false, "unit": "meter"}}

   In this example, ChatGPT converts the speed from km/h to m/s (20 km/h ≈ 5.56 m/s) and makes the linear speed negative to account for the backward movement. This demonstrates the model’s ability to adapt to different types of human prompts, even when the input uses different units.
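The two validation examples above can be checked mechanically. The sketch below is an illustration under stated assumptions rather than part of ROSGPT: the function names are hypothetical, and the sign rule (a negative `linear_speed` pairs with `"is_forward": false`) is inferred only from the two responses shown, not from a documented schema.

```python
import json
import math
from typing import List


def kmh_to_ms(speed_kmh: float) -> float:
    """Convert kilometers per hour to meters per second."""
    return speed_kmh * 1000.0 / 3600.0


def check_move_command(raw: str, tol: float = 0.01) -> List[str]:
    """Return the inconsistencies found in a 'move' command (empty list if none)."""
    cmd = json.loads(raw)
    if cmd.get("action") != "move":
        return ["not a move command"]
    params = cmd.get("params", {})
    speed = params.get("linear_speed")
    distance = params.get("distance")
    if speed is None or distance is None:
        return ["missing linear_speed or distance"]
    problems: List[str] = []
    duration = params.get("duration")
    # When a duration is given, |speed| * duration should match the distance.
    if duration is not None and not math.isclose(
        abs(speed) * duration, distance, abs_tol=tol
    ):
        problems.append("speed and duration do not match distance")
    # Assumed convention: the sign of linear_speed agrees with is_forward.
    is_forward = params.get("is_forward")
    if is_forward is not None and (speed >= 0) != is_forward:
        problems.append("sign of linear_speed disagrees with is_forward")
    return problems


example_1 = (
    '{"action": "move", "params": {"linear_speed": 1.2, "distance": 2.4, '
    '"is_forward": true, "unit": "meter", "duration": 2}}'
)
example_2 = (
    '{"action": "move", "params": {"linear_speed": -5.56, "distance": 15, '
    '"is_forward": false, "unit": "meter"}}'
)

print(round(kmh_to_ms(20), 2))        # 5.56
print(check_move_command(example_1))  # []
print(check_move_command(example_2))  # []
```

A check of this kind could run before a structured command is published, since values inferred by a language model are not guaranteed to be arithmetically consistent.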

3.3. ROSGPT Implementation on ROS2

4. Conclusions

Appendices

Appendix 1: ROSGPT WITHOUT ONTOLOGY

Appendix 2: ROSGPT USING ONTOLOGY

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).