Submitted: 23 April 2023

Posted: 24 April 2023


Abstract

Keywords:

MSC: 90C70; 90C30; 65K05

1. Introduction and Background Results

- (1)

- We examine the application of NL in determining the parameter t in the Dai-Liao CG method (5).

- (2)

- A theoretical analysis is accomplished to confirm the global convergence of the proposed method.

- (3)

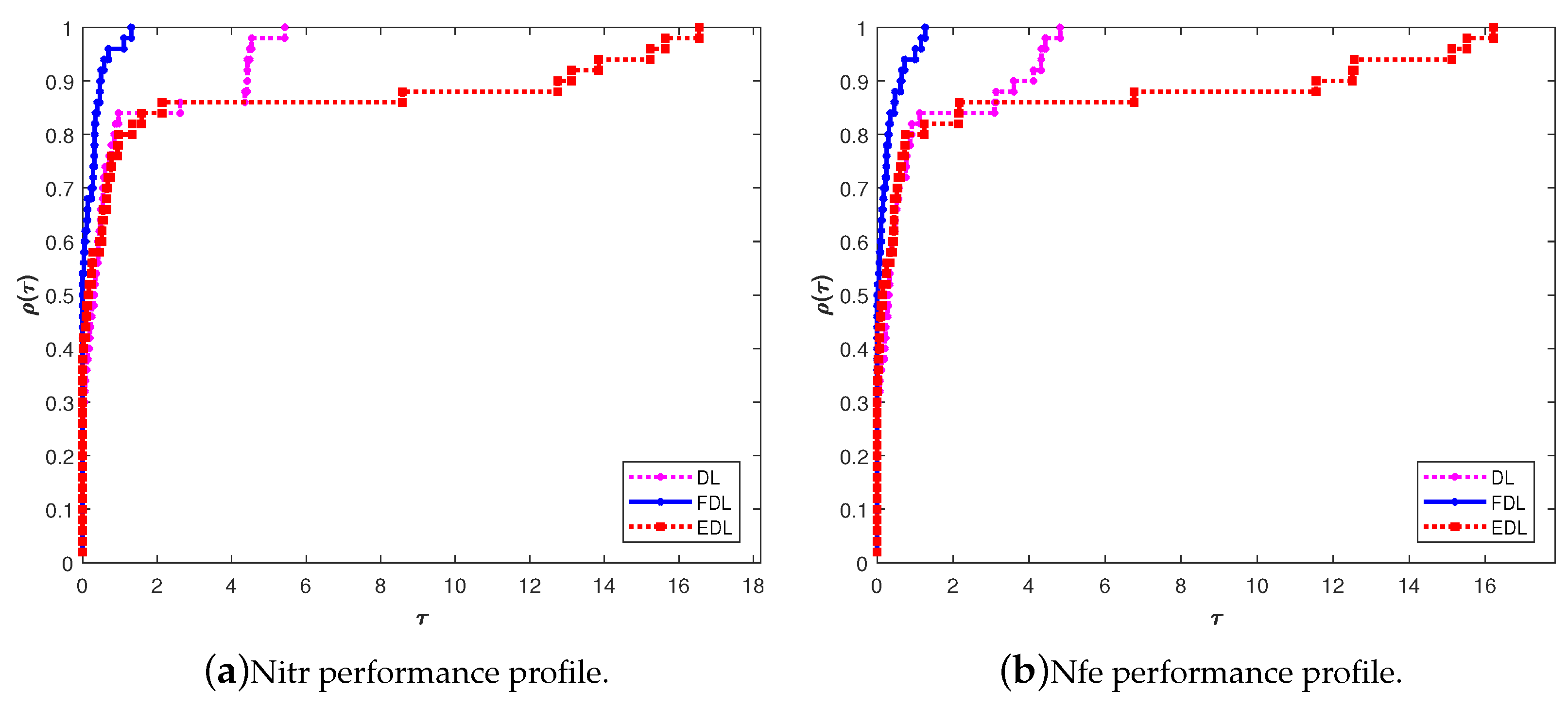

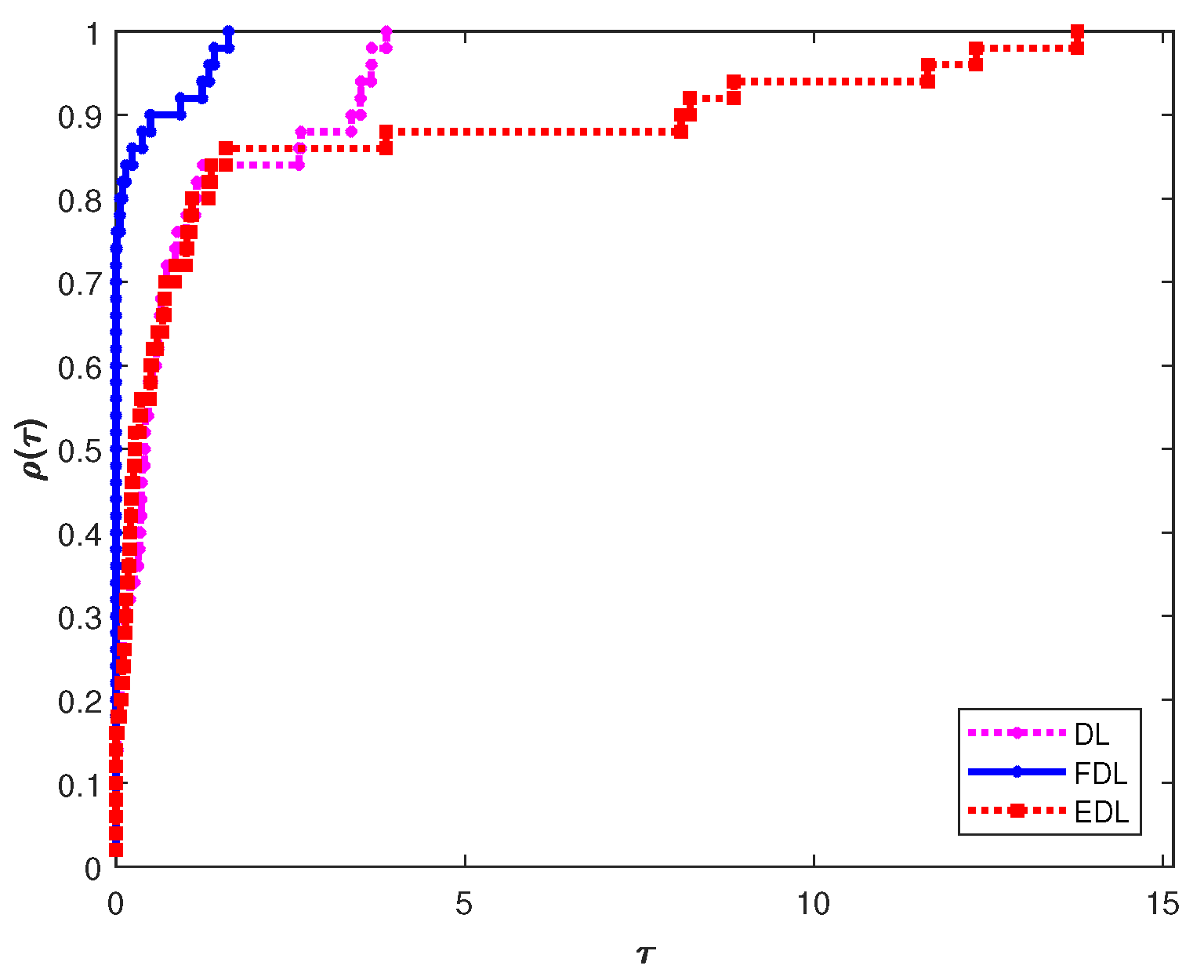

- A numerical comparison is given between the proposed FDL algorithm and other known DL algorithms.

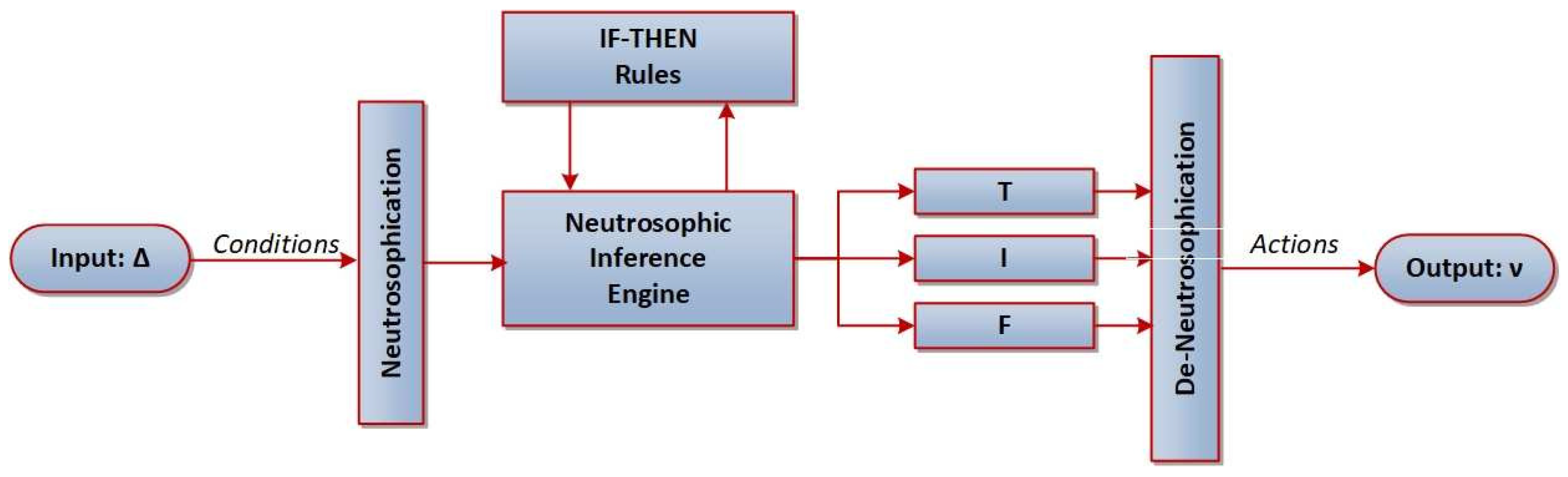
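The role of the parameter t can be seen in the standard Dai-Liao update of Dai and Liao (2001). In that update, β_k = g_{k+1}^T (y_k − t s_k) / (d_k^T y_k) and d_{k+1} = −g_{k+1} + β_k d_k, with s_k = x_{k+1} − x_k and y_k = g_{k+1} − g_k. The sketch below assumes that method (5) has this textbook form. The quadratic test function, starting point, step length and t = 0.1 are hypothetical values chosen only for illustration.

```python
import numpy as np

def dl_beta(g_new, g_old, s, d_old, t):
    """Dai-Liao parameter: g_{k+1}^T (y_k - t s_k) / (d_k^T y_k)."""
    y = g_new - g_old                      # y_k = g_{k+1} - g_k
    return (g_new @ (y - t * s)) / (d_old @ y)

def dl_direction(g_new, g_old, s, d_old, t):
    """Next search direction d_{k+1} = -g_{k+1} + beta_k * d_k."""
    return -g_new + dl_beta(g_new, g_old, s, d_old, t) * d_old

# Hypothetical quadratic f(x) = 0.5 x^T A x, whose gradient is A x
A = np.diag([1.0, 2.0])
x_k = np.array([1.0, 1.0])
g_k = A @ x_k
d_k = -g_k                                 # first direction: steepest descent
alpha = 0.5                                # hypothetical step length
x_new = x_k + alpha * d_k
s = x_new - x_k                            # s_k = x_{k+1} - x_k
g_new = A @ x_new

d_new = dl_direction(g_new, g_k, s, d_k, t=0.1)
print(d_new)                               # approximately [-0.45, 0.1]
```

Setting t = 0 reduces β_k to the Hestenes-Stiefel parameter g_{k+1}^T y_k / (d_k^T y_k). The choice of t therefore controls how far the DL direction departs from that classical rule.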

2. Fuzzy Neutrosophic Dai-Liao Conjugate Gradient Method

- (1)

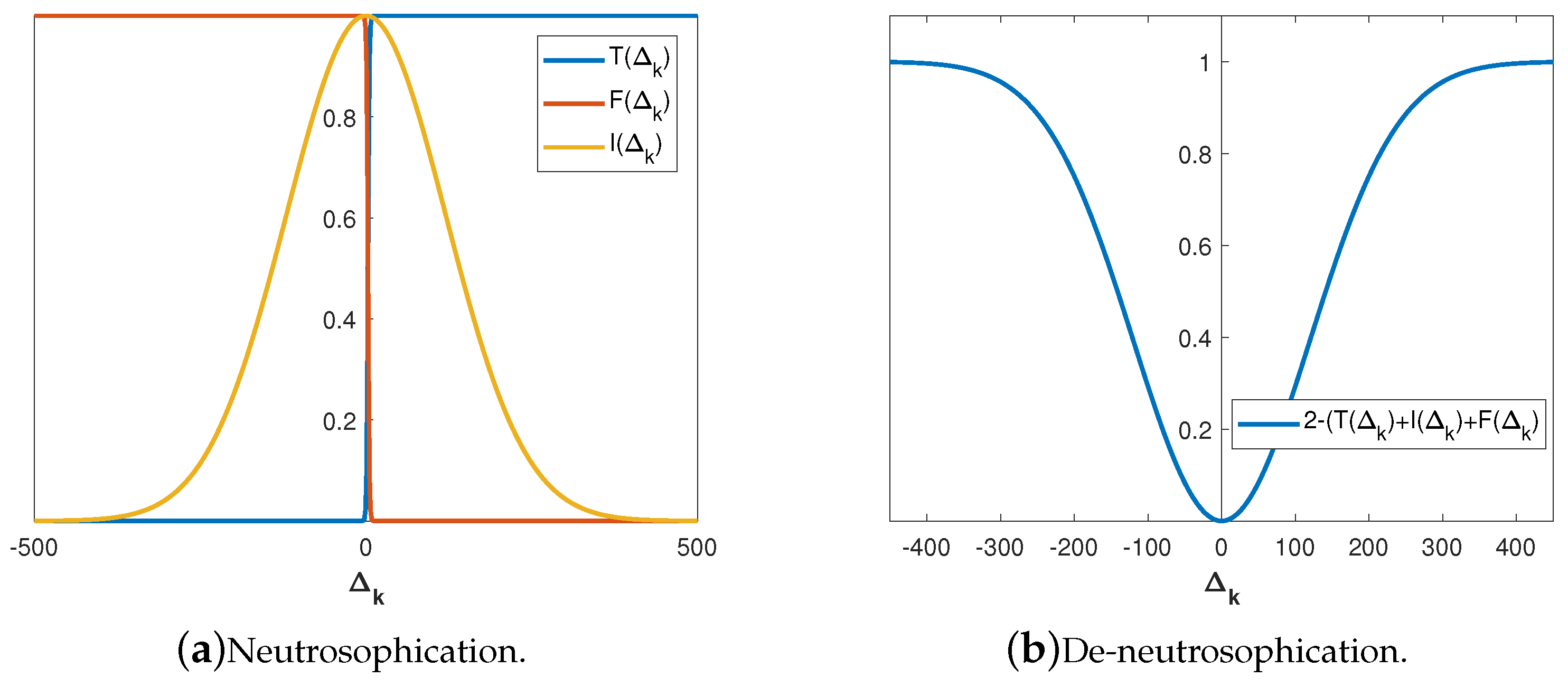

-

Neutrosophication maps the input into neutrosophic ordered triplets . The MFs are defined with the aim to improve the CG iterative rule exploiting numerical experience. The sigmoid function with the slope defined by at the crossover point is a proper choice for :A proper choice for is the following sigmoid function:The Gaussian function with the standard deviation and the mean defines the indeterminacy :

- (2) Neutrosophic inference between an input fuzzy set and an output fuzzy set is based on “IF–THEN” rules, in which two fuzzy sets correspond, respectively, to positive and negative errors. Combining the rules by their union, the output fuzzy sets are obtained through the fuzzy transformation ∘. For a fuzzy input vector, the membership degrees are then computed with the operators ⋀ and ⋁, which denote min and max, respectively. In this research, the centroid defuzzification method is used to generate a vector of crisp outputs.

- (3) De-neutrosophication is based on a transformation that produces a single crisp value, given by the score function (27).
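The three stages can be sketched as follows. This is a minimal sketch under two assumptions, since the exact formulas (24)–(27) are not reproduced in this text:

- The membership functions take their commonly used forms: a logistic sigmoid for truth, its mirror image for falsity, and a Gaussian for indeterminacy.
- De-neutrosophication uses the common single-valued neutrosophic score function s(T, I, F) = (2 + T − I − F)/3.

The defaults mirror the numbers listed for (24)–(26) in the parameter table. We read them as (slope, crossover) for the sigmoids and (standard deviation, mean) for the Gaussian, but that reading is hypothetical. All function names are illustrative. `centroid` shows generic centroid defuzzification over sampled points.

```python
import math

def _sigmoid(z):
    """Numerically stable logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def truth(x, slope=1.0, crossover=3.0):
    """Increasing sigmoid: truth-membership degree of the input x."""
    return _sigmoid(slope * (x - crossover))

def falsity(x, slope=1.0, crossover=3.0):
    """Mirrored (decreasing) sigmoid: falsity-membership degree of x."""
    return _sigmoid(-slope * (x - crossover))

def indeterminacy(x, sigma=120.0, mean=0.0):
    """Gaussian indeterminacy-membership degree of x."""
    return math.exp(-((x - mean) ** 2) / (2.0 * sigma ** 2))

def neutrosophicate(x):
    """Map a crisp input to a neutrosophic triplet (T, I, F)."""
    return truth(x), indeterminacy(x), falsity(x)

def centroid(points, memberships):
    """Centroid defuzzification: membership-weighted mean of sample points."""
    total = sum(memberships)
    if total == 0.0:
        raise ValueError("all membership degrees are zero")
    return sum(p * m for p, m in zip(points, memberships)) / total

def score(t, i, f):
    """Single-valued neutrosophic score (2 + T - I - F) / 3, lies in [0, 1]."""
    return (2.0 + t - i - f) / 3.0

# Score at the sigmoid crossover, where T = F = 0.5
print(score(*neutrosophicate(3.0)))
```

Using a numerically stable sigmoid avoids overflow in `math.exp` when the input (for example, a large error in function values) is far from the crossover point.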

Algorithm 1: The backtracking line search.

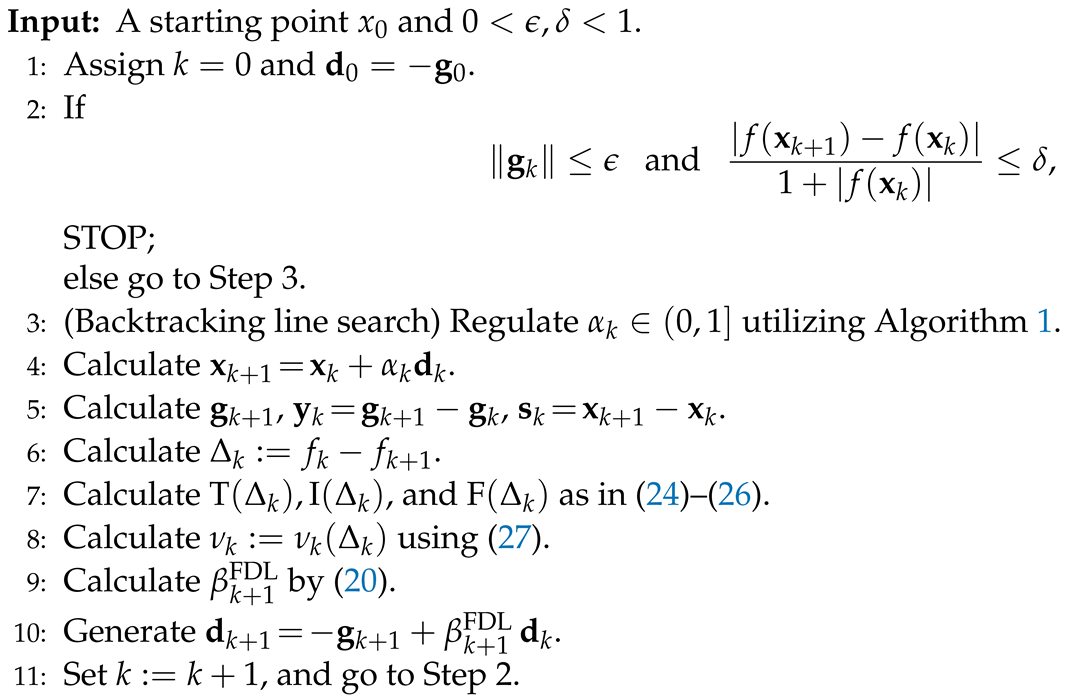

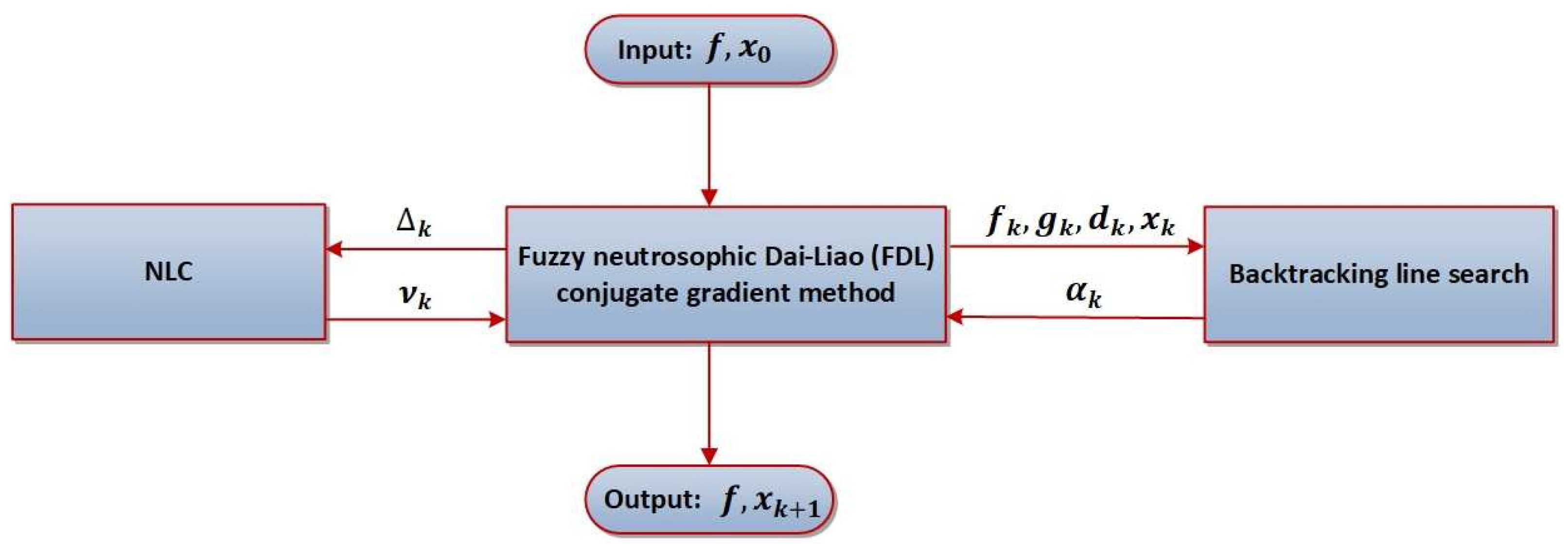
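Algorithm 1 appears here only as an image. The following minimal sketch assumes it follows the usual Armijo-type backtracking rule. Start from the step length α = 1 and multiply it by β ∈ (0, 1) until f(x + αd) ≤ f(x) + σα gᵀd, with σ ∈ (0, 0.5). The defaults σ = 10⁻⁴ and β = 0.8 are illustrative, not values taken from the paper. The step length is written α here to keep it distinct from the DL parameter t.

```python
import numpy as np

def backtracking(f, x, fx, g, d, sigma=1e-4, beta=0.8, max_shrinks=60):
    """Armijo backtracking: first alpha in 1, beta, beta^2, ... with
    f(x + alpha d) <= f(x) + sigma * alpha * g^T d."""
    slope = g @ d
    if slope >= 0:
        raise ValueError("d must be a descent direction (g^T d < 0)")
    alpha = 1.0
    for _ in range(max_shrinks):
        if f(x + alpha * d) <= fx + sigma * alpha * slope:
            return alpha
        alpha *= beta
    return alpha  # gave up after max_shrinks reductions; alpha is now tiny

# Usage on f(x) = x^T x from x = (1, 1) along steepest descent
f = lambda x: x @ x
x = np.array([1.0, 1.0])
g = 2.0 * x
alpha = backtracking(f, x, f(x), g, -g, beta=0.5)
print(alpha)  # 0.5
```

Passing f(x) in as `fx` avoids recomputing the current function value. Inside a CG loop, that value is already known from the previous iteration.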

Algorithm 2: Fuzzy neutrosophic Dai-Liao (FDL) conjugate gradient method.

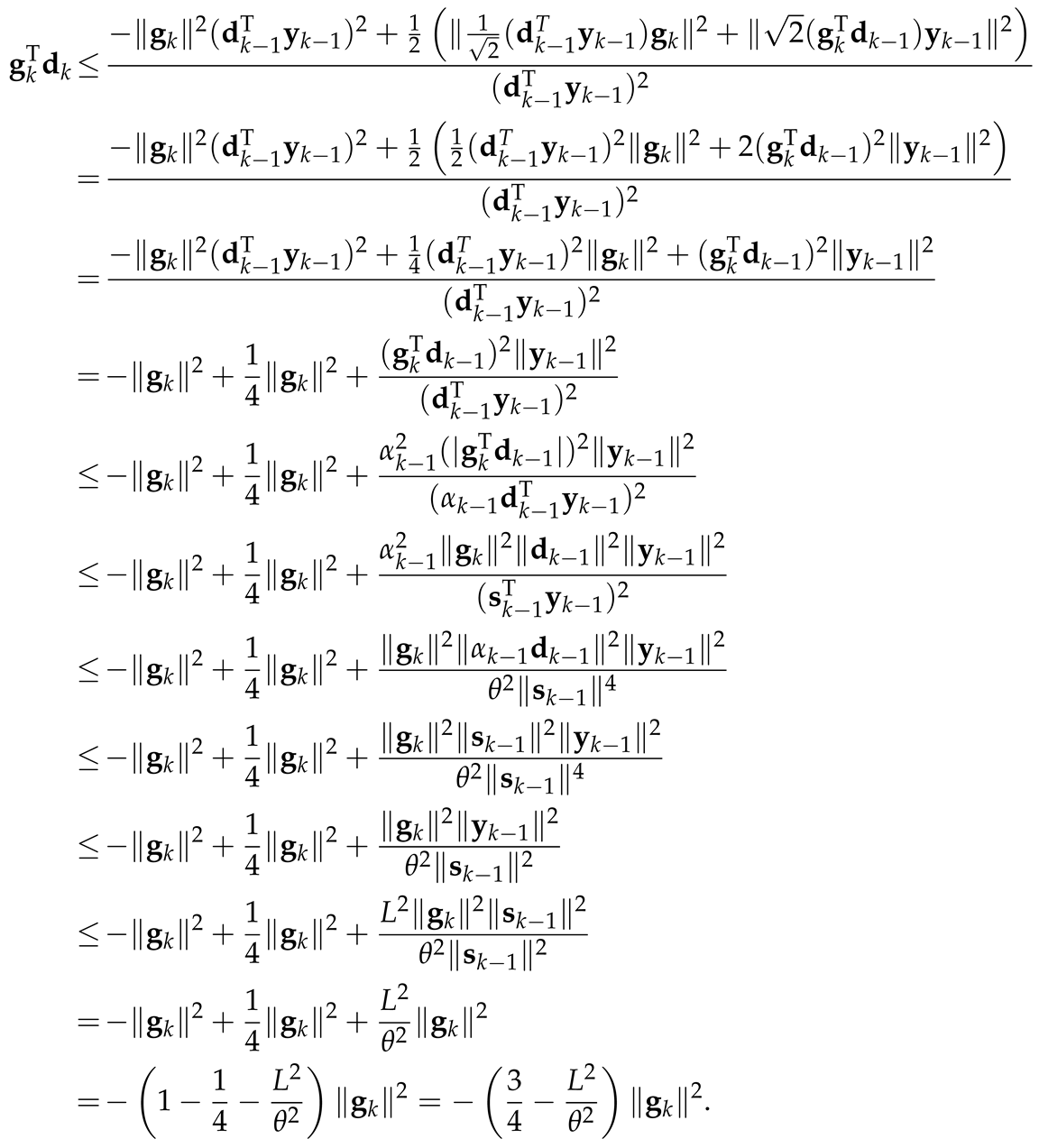

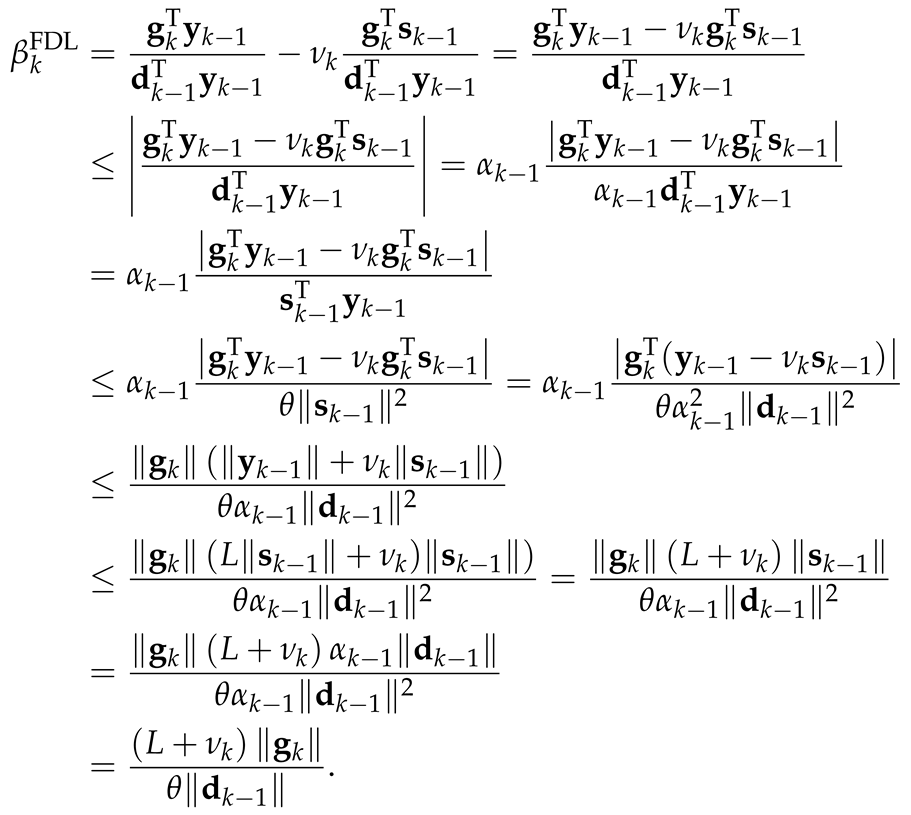
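Algorithm 2 is reproduced only as images, so the following is not a transcription of the FDL method. It is a minimal sketch, assuming the standard DL update and Armijo backtracking, of how a rule for choosing t can be plugged into a DL CG loop. The rule `neutrosophic_t` is a hypothetical placeholder, not the paper's rule. It scales a hypothetical base value `t_base` by a neutrosophic score of the decrease in f achieved in the last step. That score is built from standard sigmoid, Gaussian and score-function forms, with illustrative parameters. The steepest-descent restart when d is not a descent direction is also our own safeguard.

```python
import math
import numpy as np

def _sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def neutrosophic_t(decrease, t_base=1.0):
    """HYPOTHETICAL rule: t = t_base * score(T, I, F) of the decrease in f.
    Sigmoid (slope 1, crossover 3) and Gaussian (sigma 120, mean 0) are illustrative."""
    T = _sigmoid(decrease - 3.0)
    F = _sigmoid(-(decrease - 3.0))
    I = math.exp(-decrease ** 2 / (2.0 * 120.0 ** 2))
    return t_base * (2.0 + T - I - F) / 3.0

def armijo(f, x, fx, g, d, sigma=1e-4, beta=0.8, max_shrinks=60):
    """Armijo backtracking step length along the descent direction d."""
    alpha, slope = 1.0, g @ d
    for _ in range(max_shrinks):
        if f(x + alpha * d) <= fx + sigma * alpha * slope:
            break
        alpha *= beta
    return alpha

def dl_cg(f, grad, x0, choose_t, tol=1e-6, max_iter=5000):
    """Dai-Liao CG iteration with a pluggable rule choose_t(decrease) -> t."""
    x = np.asarray(x0, dtype=float)
    fx, g = f(x), grad(x)
    d = -g
    for _ in range(max_iter):
        if np.linalg.norm(g) <= tol:
            break
        alpha = armijo(f, x, fx, g, d)
        x_new = x + alpha * d
        f_new, g_new = f(x_new), grad(x_new)
        s, y = x_new - x, g_new - g
        t = choose_t(fx - f_new)              # t_k chosen from the achieved decrease
        denom = d @ y
        if abs(denom) > 1e-12:
            d_new = -g_new + (g_new @ (y - t * s) / denom) * d
        else:
            d_new = -g_new
        if g_new @ d_new >= 0:                # safeguard (our addition): restart
            d_new = -g_new
        x, fx, g, d = x_new, f_new, g_new, d_new
    return x, fx

# Usage on a simple separable quadratic with minimizer c
c = np.array([1.0, 2.0, 3.0])
x_star, f_star = dl_cg(lambda x: float(np.sum((x - c) ** 2)),
                       lambda x: 2.0 * (x - c),
                       np.zeros(3), neutrosophic_t)
print(x_star)  # close to [1. 2. 3.]
```

Keeping `choose_t` as a parameter makes it easy to compare a fixed t (as in DL) with an adaptive rule on the same problem. For example, `dl_cg(f, grad, x0, lambda dec: 0.1)` runs the plain DL iteration with t = 0.1.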

3. Convergence Examination

4. Numerical Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Dai, Y.-H.; Liao, L.-Z. New conjugacy conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 2001, 43, 87–101.

- Cheng, Y.; Mou, Q.; Pan, X.; Yao, S. A sufficient descent conjugate gradient method and its global convergence. Optim. Methods Softw. 2016, 31, 577–590.

- Livieris, I.E.; Pintelas, P. A descent Dai-Liao conjugate gradient method based on a modified secant equation and its global convergence. ISRN Comput. Math. 2012, 2012, 435495.

- Peyghami, M.R.; Ahmadzadeh, H.; Fazli, A. A new class of efficient and globally convergent conjugate gradient methods in the Dai-Liao family. Optim. Methods Softw. 2015, 30, 843–863.

- Yabe, H.; Takano, M. Global convergence properties of nonlinear conjugate gradient methods with modified secant condition. Comput. Optim. Appl. 2004, 28, 203–225.

- Yao, S.; Qin, B. A hybrid of DL and WYL nonlinear conjugate gradient methods. Abstr. Appl. Anal. 2014, 2014, 279891.

- Yao, S.; Lu, X.; Wei, Z. A conjugate gradient method with global convergence for large-scale unconstrained optimization problems. J. Appl. Math. 2013, 2013, 730454.

- Zheng, Y.; Zheng, B. Two new Dai-Liao-type conjugate gradient methods for unconstrained optimization problems. J. Optim. Theory Appl. 2017, 175, 502–509.

- Zhou, W.; Zhang, L. A nonlinear conjugate gradient method based on the MBFGS secant condition. Optim. Methods Softw. 2006, 21, 707–714.

- Babaie-Kafaki, S. A survey on the Dai-Liao family of nonlinear conjugate gradient methods. RAIRO-Oper. Res. 2023, 57, 43–58.

- Andrei, N. Open problems in nonlinear conjugate gradient algorithms for unconstrained optimization. Bull. Malays. Math. Sci. Soc. 2011, 34, 319–330.

- Hager, W.W.; Zhang, H. A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 2005, 16, 170–192.

- Hager, W.W.; Zhang, H. Algorithm 851: CG_DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans. Math. Softw. 2006, 32, 113–137.

- Dai, Y.-H.; Kou, C.-X. A nonlinear conjugate gradient algorithm with an optimal property and an improved Wolfe line search. SIAM J. Optim. 2013, 23, 296–320.

- Babaie-Kafaki, S.; Ghanbari, R. The Dai-Liao nonlinear conjugate gradient method with optimal parameter choices. Eur. J. Oper. Res. 2014, 234, 625–630.

- Andrei, N. A Dai-Liao conjugate gradient algorithm with clustering of eigenvalues. Numer. Algorithms 2018, 77, 1273–1282.

- Babaie-Kafaki, S. On the sufficient descent condition of the Hager-Zhang conjugate gradient methods. 4OR-Q. J. Oper. Res. 2014, 12, 285–292.

- Lotfi, M.; Hosseini, S.M. An efficient Dai–Liao type conjugate gradient method by reformulating the CG parameter in the search direction equation. J. Comput. Appl. Math. 2020, 371, 112708.

- Ivanov, B.; Stanimirović, P.S.; Shaini, B.I.; Ahmad, H.; Wang, M.-K. A Novel Value for the Parameter in the Dai-Liao-Type Conjugate Gradient Method. J. Funct. Spaces 2021, 2021, 6693401.

- Stanimirović, P.S.; Ivanov, B.; Stanujkić, D.; Katsikis, V.N.; Mourtas, S.D.; Kazakovtsev, L.A.; Edalatpanah, S.A. Improvement of Unconstrained Optimization Methods Based on Symmetry Involved in Neutrosophy. Symmetry 2023, 15, 250.

- Zadeh, L.A. Fuzzy sets. Inf. Control 1965, 8, 338–353.

- Atanassov, K.T. Intuitionistic fuzzy sets. Fuzzy Sets Syst. 1986, 20, 87–96.

- Smarandache, F. A Unifying Field in Logics, Neutrosophy: Neutrosophic Probability, Set and Logic; American Research Press: Rehoboth, NM, USA, 1999.

- Wang, H.; Smarandache, F.; Zhang, Y.Q.; Sunderraman, R. Single valued neutrosophic sets. Multispace Multistructure 2010, 4, 410–413.

- Smarandache, F. Special Issue "New types of Neutrosophic Set/Logic/Probability, Neutrosophic Over-/Under-/Off-Set, Neutrosophic Refined Set, and their Extension to Plithogenic Set/Logic/Probability, with Applications". Symmetry 2019. Available online: https://www.mdpi.com/journal/symmetry/special_issues/Neutrosophic_Set_Logic_Probability.

- Mishra, K.; Kandasamy, I.; Kandasamy, W.B.V.; Smarandache, F. A novel framework using neutrosophy for integrated speech and text sentiment analysis. Symmetry 2020, 12, 1715.

- Tu, A.; Ye, J.; Wang, B. Symmetry measures of simplified neutrosophic sets for multiple attribute decision-making problems. Symmetry 2018, 10, 144.

- Hestenes, M.R.; Stiefel, E.L. Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 1952, 49, 409–436.

- Andrei, N. An acceleration of gradient descent algorithm with backtracking for unconstrained optimization. Numer. Algorithms 2006, 42, 63–73.

- Stanimirović, P.S.; Miladinović, M.B. Accelerated gradient descent methods with line search. Numer. Algorithms 2010, 54, 503–520.

- Cheng, W. A two-term PRP-based descent method. Numer. Funct. Anal. Optim. 2007, 28, 1217–1230.

- Zoutendijk, G. Nonlinear programming, computational methods. In Integer and Nonlinear Programming; Abadie, J., Ed.; North-Holland: Amsterdam, The Netherlands, 1970; pp. 37–86.

- Andrei, N. An unconstrained optimization test functions collection. Adv. Model. Optim. 2008, 10, 147–161.

- Bongartz, I.; Conn, A.R.; Gould, N.; Toint, P.L. CUTE: Constrained and unconstrained testing environments. ACM Trans. Math. Softw. 1995, 21, 123–160.

- Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

| Set | Membership Function | Parameters | | Weight |
|---|---|---|---|---|
| Input | Sigmoid function (24) | 1 | 3 | 1 |
| | Sigmoid function (25) | 1 | 3 | 1 |
| | Gaussian function (26) | 120 | 0 | 1 |
| Output | Score function (27) | - | - | 1 |

| Test function | DL (Nitr/Nfe/Tcpu) | FDL (Nitr/Nfe/Tcpu) | EDL (Nitr/Nfe/Tcpu) |
|---|---|---|---|
| Extended Penalty | 1905/77578/32.438 | 1610/62534/24.266 | 2304/82602/39.344 |
| Perturbed Quadratic | 14555/606750/379.359 | 10800/440213/206.5 | 10012/408474/248.5 |
| Raydan 1 | 4337/114595/98.984 | 5497/122843/76.813 | 4194/109164/96.938 |
| Raydan 2 | 1427/2864/3.188 | 67/144/0.281 | 2572540/5145090/894.453 |
| Diagonal 1 | 5809/223750/245.578 | 5488/212491/227.781 | 4673/178295/219.109 |
| Diagonal 3 | 5247/196745/423.766 | 4531/168162/307.594 | 4596/171636/366.203 |
| Hager | 1742/31516/103.672 | 1242/22799/47.063 | 1940/33206/98.766 |
| Generalized Tridiagonal 1 | 2058/32313/49.5 | 2160/32033/27.5 | 2161/33285/44.703 |
| Extended Tridiagonal 1 | 310/2932/8.391 | 182/2501/6.297 | 308/4129/12.766 |
| Extended TET | 1140/9840/11.031 | 619/5808/5.484 | 749/6362/5.969 |
| Diagonal 5 | 1394/2798/6.938 | 60/130/0.609 | 3053907/6107824/3124.875 |
| Extended Himmelblau | 50/2431/1.016 | 51/2602/0.813 | 50/2413/0.938 |
| Perturbed quadratic diagonal | 1837/69156/18.453 | 1261/36785/13.875 | 2157/86977/34.797 |
| Quadratic QF1 | 13895/526995/187.313 | 21989/846402/376.156 | 10199/379554/122.844 |
| Extended quadratic penalty QP1 | 1080/17440/9.922 | 1524/23840/8.25 | 1157/18043/9.109 |
| Extended quadratic penalty QP2 | 218/9479/11.047 | 112/5513/4.953 | 218/9194/8.906 |
| Quadratic QF2 | 19211/847031/348.781 | 18861/816310/225.891 | 15555/689736/250.891 |
| Extended quadratic exponential EP1 | 1254/3443/3.172 | 56/404/0.516 | 21431/43829/7.531 |
| Extended Tridiagonal 2 | 22468/998473/549.484 | 3668/114169/87.438 | 10989/510713/93.609 |
| TRIDIA (CUTE) | 33278/1647913/967.234 | 40156/1977068/950.547 | 29133/1428866/675.422 |
| ARWHEAD (CUTE) | 1624/81625/44.875 | 1529/72379/31.594 | 1219/57140/28.672 |
| Almost Perturbed Quadratic | 14904/621925/259.797 | 19675/829784/357.359 | 13201/543372/188.047 |
| LIARWHD (CUTE) | 30/2705/1.281 | 30/2732/1.25 | 30/2739/1.438 |
| POWER (CUTE) | 532442/44419504/16742.672 | 580790/48609979/17435.609 | 629342/52431424/23630.781 |
| ENGVAL1 (CUTE) | 2489/33103/13.781 | 2400/32299/10.719 | 1975/27260/12.922 |
| INDEF (CUTE) | 21/1924/2.125 | 26/2238/2.5 | 30/2610/4.266 |
| Diagonal 6 | 1583/3197/4.531 | 74/185/0.359 | 7052401/14105032/5037.219 |
| DIXON3DQ (CUTE) | 320921/1775846/1083.281 | 229757/1368033/727.172 | 257451/1517252/1045.328 |
| COSINE (CUTE) | 20/1600/1.891 | 20/1697/1.891 | 20/1700/2 |
| BIGGSB1 (CUTE) | 249919/1400798/832.375 | 259475/1549293/810.766 | 236612/1389720/945.672 |
| Generalized Quartic | 866/11273/3.984 | 1099/8951/4.063 | 959/10662/3.125 |
| Diagonal 7 | 1453/4564/6.875 | 68/162/0.469 | 469477/940686/140.172 |
| Diagonal 8 | 1371/3962/5.359 | 67/199/0.422 | 594522/1193760/195.094 |
| Full Hessian FH3 | 2237/6202/7.125 | 52/513/0.688 | 767988/1537759/188.469 |
| Diagonal 9 | 3312/138545/225.719 | 5344/217150/224.906 | 4520/189307/260.453 |
| HIMMELH (CUTE) | 20/1690/4.797 | 20/1758/4.531 | 20/1760/4.891 |
| FLETCHCR (CUTE) | 303212/10189775/5073.688 | 300227/10011849/4704.125 | 289670/9702961/4411.453 |
| Extended BD1 (Block Diagonal) | 1597/16783/7.625 | 1227/15639/5.875 | 1200/12605/6.625 |
| Extended Maratos | 72/3366/1.188 | 50/2069/0.719 | 40/1975/0.75 |
| Extended Cliff | 234/2992/2.078 | 217/6000/4.891 | 950/13187/6.188 |
| Extended Hiebert | 70/7215/1.938 | 70/7220/1.828 | 70/7228/1.859 |
| NONDIA (CUTE) | 33/3066/1.375 | 30/2829/1.266 | 32/3031/1.625 |
| NONDQUAR (CUTE) | 58/4652/18.047 | 45/3666/17.219 | 86/4989/19.016 |
| DQDRTIC (CUTE) | 3456/87105/26.453 | 2327/59047/16.406 | 3637/92315/34.953 |
| Extended Freudenstein and Roth | 1376/46597/10.734 | 3390/111830/28.516 | 2018/66654/16.172 |
| Generalized Rosenbrock | 282948/8410218/4125.516 | 280440/8335396/4088.547 | 281792/8373946/4055.172 |
| Extended White and Holst | 76/5794/9.219 | 50/3171/7.281 | 59/4022/11.563 |
| Extended Beale | 118/6791/14.047 | 72/3118/5.906 | 181/4748/6.75 |
| EG2 (CUTE) | 507/29388/47.547 | 697/48512/119.875 | 811/39769/122.469 |
| EDENSCH (CUTE) | 1694/23160/89.453 | 2089/27821/83.266 | 1684/22731/116.844 |

Nitr: number of iterations; Nfe: number of function evaluations; Tcpu: CPU time.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).