Submitted: 23 April 2023

Posted: 24 April 2023


Abstract

Keywords:

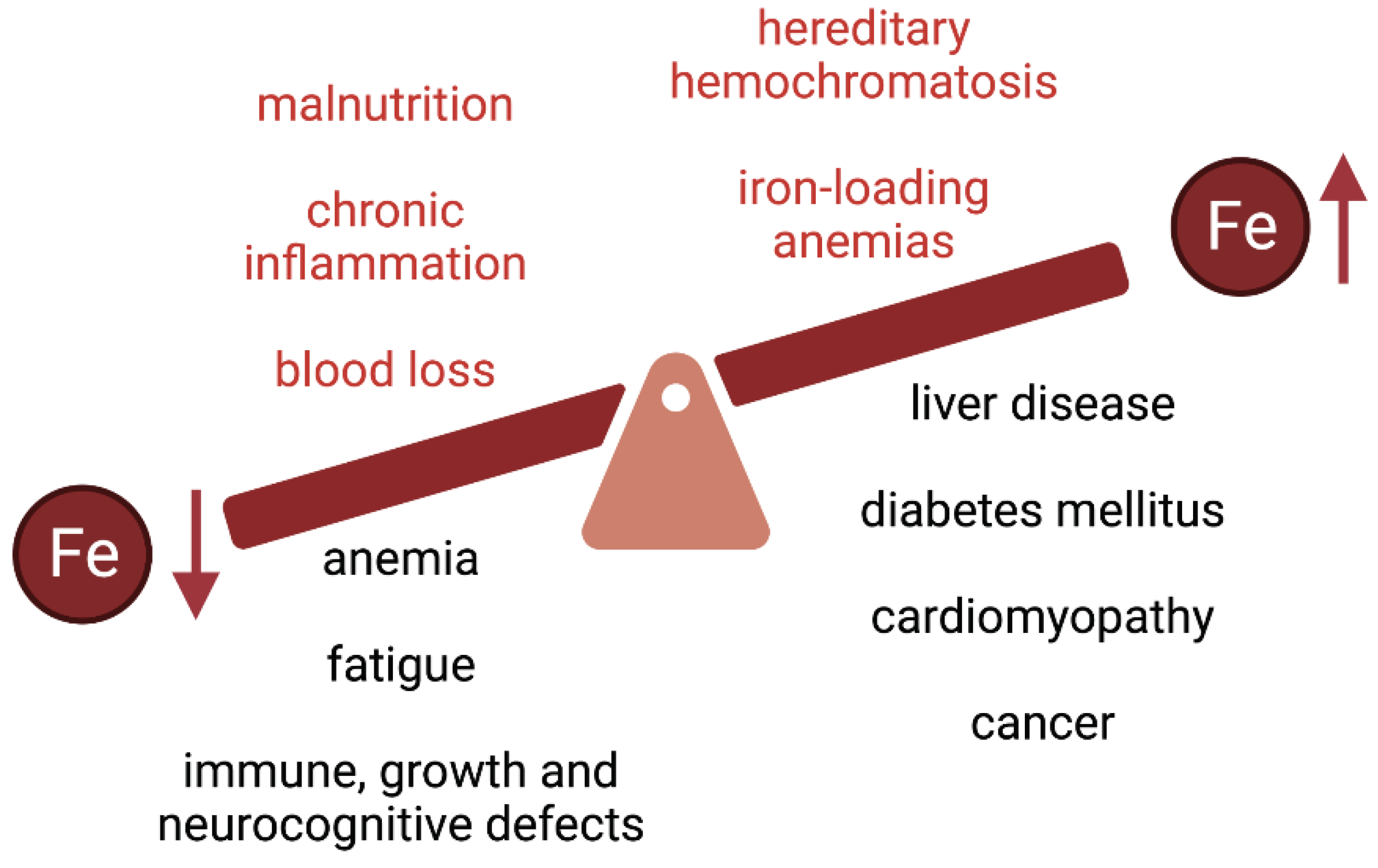

1. Nutritional Value of Iron

2. Iron Homeostasis

3. Iron Biomarkers: Applications and Limitations

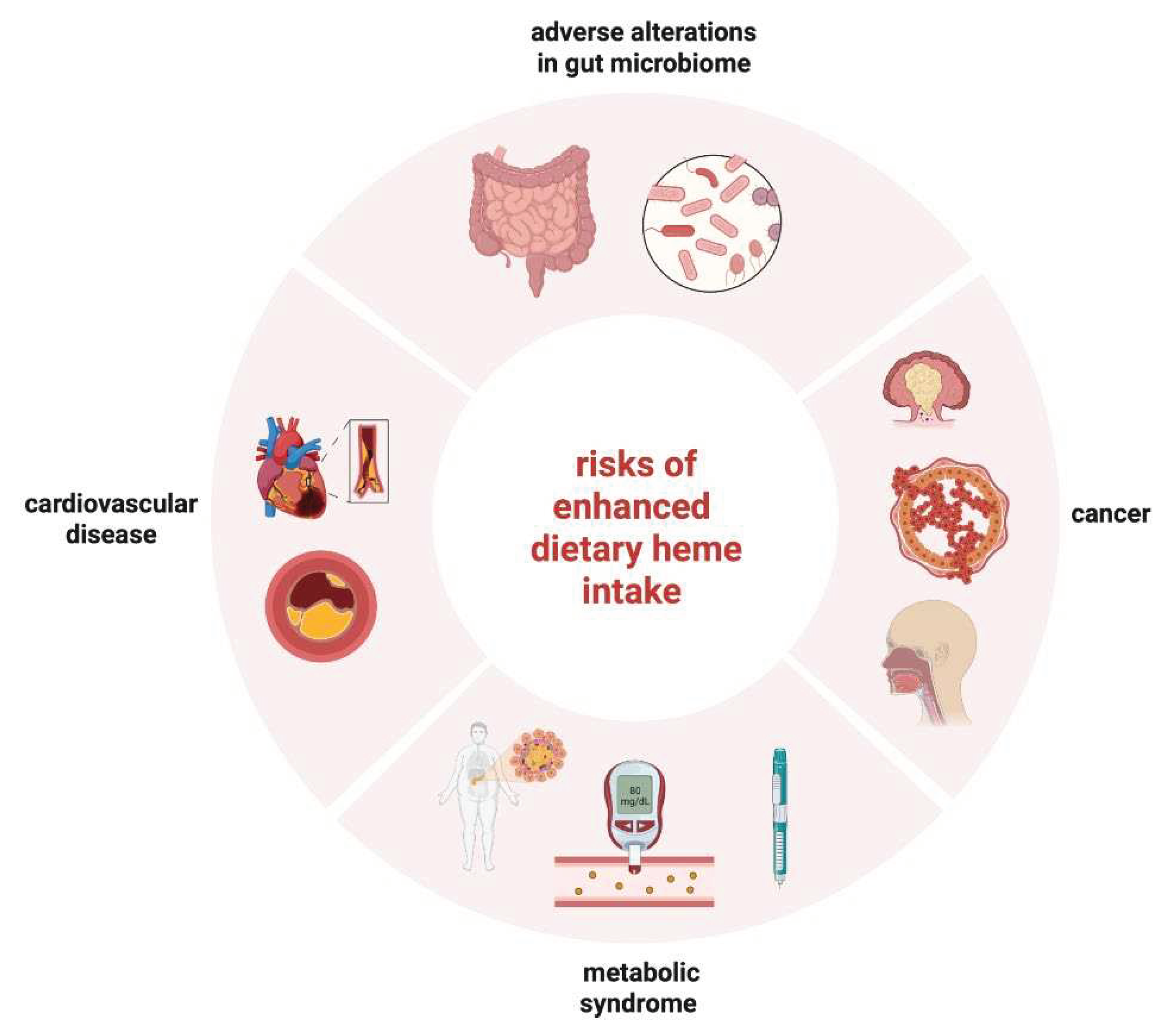

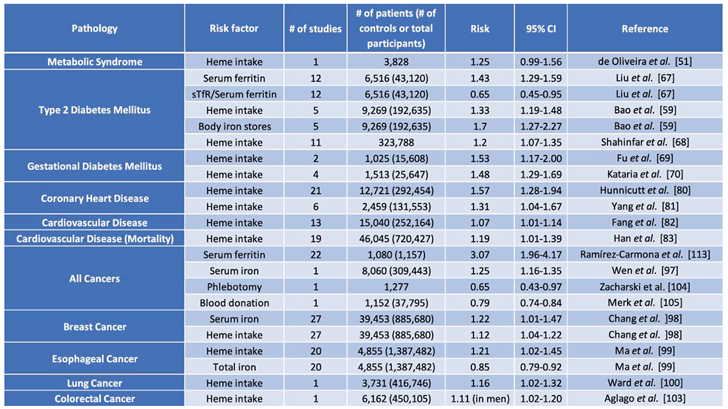

4. Dietary Iron Intake and the Risk for Disease

5. Iron and the Risk for Metabolic Syndrome

6. Iron and the Risk for Cardiovascular Disease

7. Iron and Cancer Risk

8. Iron and the Intestinal Microbiome

9. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Katsarou, A.; Pantopoulos, K. Basics and principles of cellular and systemic iron homeostasis. Mol Aspects Med 2020, 75, 100866. https://doi.org/10.1016/j.mam.2020.100866.

- Camaschella, C. Iron-deficiency anemia. N Engl J Med 2015, 372, 1832–1843. https://doi.org/10.1056/NEJMra1401038.

- Al-Naseem, A.; Sallam, A.; Choudhury, S.; Thachil, J. Iron deficiency without anaemia: A diagnosis that matters. Clin Med (Lond) 2021, 21, 107–113. https://doi.org/10.7861/clinmed.2020-0582.

- Pasricha, S.-R.; Tye-Din, J.; Muckenthaler, M.U.; Swinkels, D.W. Iron deficiency. Lancet 2021, 397, 233–248. https://doi.org/10.1016/S0140-6736(20)32594-0.

- De Benoist, B.; Cogswell, M.; Egli, I.; McLean, E. Worldwide Prevalence of Anaemia 1993–2005: WHO Global Database on Anaemia; World Health Organization: Geneva, Switzerland, 2008.

- Vos, T.; Abajobir, A.A.; Abate, K.H.; Abbafati, C.; Abbas, K.M.; Abd-Allah, F.; Abdulkader, R.S.; Abdulle, A.M.; Abebo, T.A.; Abera, S.F.; et al. Global, regional, and national incidence, prevalence, and years lived with disability for 328 diseases and injuries for 195 countries, 1990–2016: A systematic analysis for the Global Burden of Disease Study 2016. Lancet 2017, 390, 1211–1259. https://doi.org/10.1016/S0140-6736(17)32154-2.

- Trumbo, P.; Yates, A.A.; Schlicker, S.; Poos, M. Dietary reference intakes: Vitamin A, vitamin K, arsenic, boron, chromium, copper, iodine, iron, manganese, molybdenum, nickel, silicon, vanadium, and zinc. J Am Diet Assoc 2001, 101, 294–301. https://doi.org/10.1016/S0002-8223(01)00078-5.

- Pivina, L.; Semenova, Y.; Doşa, M.D.; Dauletyarova, M.; Bjørklund, G. Iron Deficiency, Cognitive Functions, and Neurobehavioral Disorders in Children. J Mol Neurosci 2019, 68, 1–10. https://doi.org/10.1007/s12031-019-01276-1.

- Pottie, K.; Greenaway, C.; Feightner, J.; Welch, V.; Swinkels, H.; Rashid, M.; Narasiah, L.; Kirmayer, L.J.; Ueffing, E.; MacDonald, N.E.; et al. Evidence-based clinical guidelines for immigrants and refugees. CMAJ 2011, 183, E824–E925. https://doi.org/10.1503/cmaj.090313.

- Khambalia, A.Z.; Aimone, A.M.; Zlotkin, S.H. Burden of anemia among indigenous populations. Nutr Rev 2011, 69, 693–719. https://doi.org/10.1111/j.1753-4887.2011.00437.x.

- Uauy, R.; Hertrampf, E.; Reddy, M. Iron fortification of foods: Overcoming technical and practical barriers. J Nutr 2002, 132, 849S–852S. https://doi.org/10.1093/jn/132.4.849S.

- Auerbach, M.; Adamson, J.W. How we diagnose and treat iron deficiency anemia. Am J Hematol 2016, 91, 31–38. https://doi.org/10.1002/ajh.24201.

- Weinberg, E.D. The hazards of iron loading. Metallomics 2010, 2, 732–740. https://doi.org/10.1039/c0mt00023j.

- Galaris, D.; Barbouti, A.; Pantopoulos, K. Iron homeostasis and oxidative stress: An intimate relationship. Biochim Biophys Acta Mol Cell Res 2019, 1866, 118535. https://doi.org/10.1016/j.bbamcr.2019.118535.

- Deugnier, Y.; Turlin, B. Pathology of hepatic iron overload. Semin Liver Dis 2011, 31, 260–271. https://doi.org/10.1055/s-0031-1286057.

- Kowdley, K.V. Iron, hemochromatosis, and hepatocellular carcinoma. Gastroenterology 2004, 127, S79–S86.

- Utzschneider, K.M.; Kowdley, K.V. Hereditary hemochromatosis and diabetes mellitus: Implications for clinical practice. Nat Rev Endocrinol 2010, 6, 26–33. https://doi.org/10.1038/nrendo.2009.241.

- Husar-Memmer, E.; Stadlmayr, A.; Datz, C.; Zwerina, J. HFE-related hemochromatosis: An update for the rheumatologist. Curr Rheumatol Rep 2014, 16, 393. https://doi.org/10.1007/s11926-013-0393-4.

- Jeney, V. Clinical Impact and Cellular Mechanisms of Iron Overload-Associated Bone Loss. Front Pharmacol 2017, 8, 77. https://doi.org/10.3389/fphar.2017.00077.

- Kremastinos, D.T.; Farmakis, D. Iron overload cardiomyopathy in clinical practice. Circulation 2011, 124, 2253–2263. https://doi.org/10.1161/CIRCULATIONAHA.111.050773.

- Pelusi, C.; Gasparini, D.I.; Bianchi, N.; Pasquali, R. Endocrine dysfunction in hereditary hemochromatosis. J Endocrinol Invest 2016, 39, 837–847. https://doi.org/10.1007/s40618-016-0451-7.

- De Sanctis, V.; Soliman, A.T.; Elsedfy, H.; Pepe, A.; Kattamis, C.; El Kholy, M.; Yassin, M. Diabetes and Glucose Metabolism in Thalassemia Major: An Update. Expert Rev Hematol 2016, 9, 401–408. https://doi.org/10.1586/17474086.2016.1136209.

- Chang, T.P.; Rangan, C. Iron poisoning: A literature-based review of epidemiology, diagnosis, and management. Pediatr Emerg Care 2011, 27, 978–985. https://doi.org/10.1097/PEC.0b013e3182302604.

- Nemeth, E.; Ganz, T. Hepcidin and Iron in Health and Disease. Annu Rev Med 2023, 74, 261–277. https://doi.org/10.1146/annurev-med-043021-032816.

- Aschemeyer, S.; Qiao, B.; Stefanova, D.; Valore, E.V.; Sek, A.C.; Ruwe, T.A.; Vieth, K.R.; Jung, G.; Casu, C.; Rivella, S.; et al. Structure-function analysis of ferroportin defines the binding site and an alternative mechanism of action of hepcidin. Blood 2018, 131, 899–910. https://doi.org/10.1182/blood-2017-05-786590.

- Billesbølle, C.B.; Azumaya, C.M.; Kretsch, R.C.; Powers, A.S.; Gonen, S.; Schneider, S.; Arvedson, T.; Dror, R.O.; Cheng, Y.; Manglik, A. Structure of hepcidin-bound ferroportin reveals iron homeostatic mechanisms. Nature 2020, 586, 807–811. https://doi.org/10.1038/s41586-020-2668-z.

- Nemeth, E.; Tuttle, M.S.; Powelson, J.; Vaughn, M.B.; Donovan, A.; Ward, D.M.; Ganz, T.; Kaplan, J. Hepcidin regulates cellular iron efflux by binding to ferroportin and inducing its internalization. Science 2004, 306, 2090–2093.

- Nemeth, E.; Rivera, S.; Gabayan, V.; Keller, C.; Taudorf, S.; Pedersen, B.K.; Ganz, T. IL-6 mediates hypoferremia of inflammation by inducing the synthesis of the iron regulatory hormone hepcidin. J Clin Invest 2004, 113, 1271–1276. https://doi.org/10.1172/jci20945.

- Babitt, J.L.; Huang, F.W.; Wrighting, D.M.; Xia, Y.; Sidis, Y.; Samad, T.A.; Campagna, J.A.; Chung, R.T.; Schneyer, A.L.; Woolf, C.J.; et al. Bone morphogenetic protein signaling by hemojuvelin regulates hepcidin expression. Nat Genet 2006, 38, 531–539. https://doi.org/10.1038/ng1777.

- Weiss, G.; Ganz, T.; Goodnough, L.T. Anemia of inflammation. Blood 2019, 133, 40–50. https://doi.org/10.1182/blood-2018-06-856500.

- Pantopoulos, K. Inherited Disorders of Iron Overload. Front Nutr 2018, 5, 103. https://doi.org/10.3389/fnut.2018.00103.

- Lynch, S.; Pfeiffer, C.M.; Georgieff, M.K.; Brittenham, G.; Fairweather-Tait, S.; Hurrell, R.F.; McArdle, H.J.; Raiten, D.J. Biomarkers of Nutrition for Development (BOND)-Iron Review. J Nutr 2018, 148, 1001S–1067S. https://doi.org/10.1093/jn/nxx036.

- Walters, G.O.; Miller, F.M.; Worwood, M. Serum ferritin concentration and iron stores in normal subjects. J Clin Pathol 1973, 26, 770–772. https://doi.org/10.1136/jcp.26.10.770.

- Lipschitz, D.A.; Cook, J.D.; Finch, C.A. A Clinical Evaluation of Serum Ferritin as an Index of Iron Stores. N Engl J Med 1974, 290, 1213–1216. https://doi.org/10.1056/nejm197405302902201.

- Marcus, D.M.; Zinberg, N. Measurement of Serum Ferritin by Radioimmunoassay: Results in Normal Individuals and Patients With Breast Cancer. J Natl Cancer Inst 1975, 55, 791–795. https://doi.org/10.1093/jnci/55.4.791.

- McKinnon, E.J.; Rossi, E.; Beilby, J.P.; Trinder, D.; Olynyk, J.K. Factors That Affect Serum Levels of Ferritin in Australian Adults and Implications for Follow-up. Clin Gastroenterol Hepatol 2014, 12, 101–108.e4. https://doi.org/10.1016/j.cgh.2013.07.019.

- Infusino, I.; Braga, F.; Dolci, A.; Panteghini, M. Soluble Transferrin Receptor (sTfR) and sTfR/log Ferritin Index for the Diagnosis of Iron-Deficiency Anemia: A Meta-Analysis. Am J Clin Pathol 2012, 138, 642–649. https://doi.org/10.1309/ajcp16ntxzlzfaib.

- Shin, D.H.; Kim, H.S.; Park, M.J.; Suh, I.B.; Shin, K.S. Utility of Access soluble transferrin receptor (sTfR) and sTfR/log ferritin index in diagnosing iron deficiency anemia. Ann Clin Lab Sci 2015, 45, 396–402.

- Gkouvatsos, K.; Papanikolaou, G.; Pantopoulos, K. Regulation of iron transport and the role of transferrin. Biochim Biophys Acta 2012, 1820, 188–202. https://doi.org/10.1016/j.bbagen.2011.10.013.

- Speeckaert, M.M.; Speeckaert, R.; Delanghe, J.R. Biological and clinical aspects of soluble transferrin receptor. Crit Rev Clin Lab Sci 2010, 47, 213–228. https://doi.org/10.3109/10408363.2010.550461.

- Skikne, B.S. Serum transferrin receptor. Am J Hematol 2008, 83, 872–875. https://doi.org/10.1002/ajh.21279.

- Berlin, T.; Meyer, A.; Rotman-Pikielny, P.; Natur, A.; Levy, Y. Soluble transferrin receptor as a diagnostic laboratory test for detection of iron deficiency anemia in acute illness of hospitalized patients. Isr Med Assoc J 2011, 13, 96–98.

- Girelli, D.; Nemeth, E.; Swinkels, D.W. Hepcidin in the diagnosis of iron disorders. Blood 2016, 127, 2809–2813. https://doi.org/10.1182/blood-2015-12-639112.

- Fathi, Z.H.; Mohammad, J.A.; Younus, Z.M.; Mahmood, S.M. Hepcidin as a Potential Biomarker for the Diagnosis of Anemia. Turk J Pharm Sci 2022, 19, 603–609. https://doi.org/10.4274/tjps.galenos.2021.29488.

- Kroot, J.J.; van Herwaarden, A.E.; Tjalsma, H.; Jansen, R.T.; Hendriks, J.C.; Swinkels, D.W. Second round robin for plasma hepcidin methods: First steps toward harmonization. Am J Hematol 2012, 87, 977–983.

- van der Vorm, L.N.; Hendriks, J.C.; Laarakkers, C.M.; Klaver, S.; Armitage, A.E.; Bamberg, A.; Geurts-Moespot, A.J.; Girelli, D.; Herkert, M.; Itkonen, O. Toward worldwide hepcidin assay harmonization: Identification of a commutable secondary reference material. Clin Chem 2016, 62, 993–1001.

- Carpenter, C.E.; Mahoney, A.W. Contributions of heme and nonheme iron to human nutrition. Crit Rev Food Sci Nutr 1992, 31, 333–367. https://doi.org/10.1080/10408399209527576.

- Hurrell, R.; Egli, I. Iron bioavailability and dietary reference values. Am J Clin Nutr 2010, 91, 1461S–1467S. https://doi.org/10.3945/ajcn.2010.28674F.

- Gulec, S.; Anderson, G.J.; Collins, J.F. Mechanistic and regulatory aspects of intestinal iron absorption. Am J Physiol Gastrointest Liver Physiol 2014, 307, G397–G409. https://doi.org/10.1152/ajpgi.00348.2013.

- Huang, Y.; Cao, D.; Chen, Z.; Chen, B.; Li, J.; Wang, R.; Guo, J.; Dong, Q.; Liu, C.; Wei, Q.; et al. Iron intake and multiple health outcomes: Umbrella review. Crit Rev Food Sci Nutr 2021, 1–18. https://doi.org/10.1080/10408398.2021.1982861.

- de Oliveira Otto, M.C.; Alonso, A.; Lee, D.-H.; Delclos, G.L.; Bertoni, A.G.; Jiang, R.; Lima, J.A.; Symanski, E.; Jacobs, D.R., Jr.; Nettleton, J.A. Dietary Intakes of Zinc and Heme Iron from Red Meat, but Not from Other Sources, Are Associated with Greater Risk of Metabolic Syndrome and Cardiovascular Disease. J Nutr 2012, 142, 526–533. https://doi.org/10.3945/jn.111.149781.

- Bozzini, C.; Girelli, D.; Olivieri, O.; Martinelli, N.; Bassi, A.; De Matteis, G.; Tenuti, I.; Lotto, V.; Friso, S.; Pizzolo, F.; et al. Prevalence of body iron excess in the metabolic syndrome. Diabetes Care 2005, 28, 2061–2063.

- Fernandez-Real, J.M.; Ricart-Engel, W.; Arroyo, E.; Balanca, R.; Casamitjana-Abella, R.; Cabrero, D.; Fernandez-Castaner, M.; Soler, J. Serum ferritin as a component of the insulin resistance syndrome. Diabetes Care 1998, 21, 62–68.

- Lee, D.H.; Liu, D.Y.; Jacobs, D.R., Jr.; Shin, H.R.; Song, K.; Lee, I.K.; Kim, B.; Hider, R.C. Common presence of non-transferrin-bound iron among patients with type 2 diabetes. Diabetes Care 2006, 29, 1090–1095. https://doi.org/10.2337/diacare.2951090.

- Mendler, M.H.; Turlin, B.; Moirand, R.; Jouanolle, A.M.; Sapey, T.; Guyader, D.; Le Gall, J.Y.; Brissot, P.; David, V.; Deugnier, Y. Insulin resistance-associated hepatic iron overload. Gastroenterology 1999, 117, 1155–1163.

- Sachinidis, A.; Doumas, M.; Imprialos, K.; Stavropoulos, K.; Katsimardou, A.; Athyros, V.G. Dysmetabolic Iron Overload in Metabolic Syndrome. Curr Pharm Des 2020, 26, 1019–1024. https://doi.org/10.2174/1381612826666200130090703.

- Crawford, D.H.G.; Ross, D.G.F.; Jaskowski, L.A.; Burke, L.J.; Britton, L.J.; Musgrave, N.; Briskey, D.; Rishi, G.; Bridle, K.R.; Subramaniam, V.N. Iron depletion attenuates steatosis in a mouse model of non-alcoholic fatty liver disease: Role of iron-dependent pathways. Biochim Biophys Acta Mol Basis Dis 2021, 1867, 166142. https://doi.org/10.1016/j.bbadis.2021.166142.

- Murali, A.R.; Gupta, A.; Brown, K. Systematic review and meta-analysis to determine the impact of iron depletion in dysmetabolic iron overload syndrome and non-alcoholic fatty liver disease. Hepatol Res 2018, 48, E30–E41. https://doi.org/10.1111/hepr.12921.

- Bao, W.; Rong, Y.; Rong, S.; Liu, L. Dietary iron intake, body iron stores, and the risk of type 2 diabetes: A systematic review and meta-analysis. BMC Med 2012, 10, 119. https://doi.org/10.1186/1741-7015-10-119.

- Dos Santos Vieira, D.A.; Hermes Sales, C.; Galvão Cesar, C.L.; Marchioni, D.M.; Fisberg, R.M. Influence of Haem, Non-Haem, and Total Iron Intake on Metabolic Syndrome and Its Components: A Population-Based Study. Nutrients 2018, 10, 314.

- Fillebeen, C.; Lam, N.H.; Chow, S.; Botta, A.; Sweeney, G.; Pantopoulos, K. Regulatory Connections between Iron and Glucose Metabolism. Int J Mol Sci 2020, 21, 7773. https://doi.org/10.3390/ijms21207773.

- Davis, R.J.; Corvera, S.; Czech, M.P. Insulin stimulates cellular iron uptake and causes the redistribution of intracellular transferrin receptors to the plasma membrane. J Biol Chem 1986, 261, 8708–8711. https://doi.org/10.1016/S0021-9258(19)84438-1.

- Ford, E.S.; Cogswell, M.E. Diabetes and serum ferritin concentration among U.S. adults. Diabetes Care 1999, 22, 1978–1983. https://doi.org/10.2337/diacare.22.12.1978.

- Oshaug, A.; Bugge, K.H.; Bjønnes, C.H.; Borch-Iohnsen, B.; Neslein, I.L. Associations between serum ferritin and cardiovascular risk factors in healthy young men. A cross sectional study. Eur J Clin Nutr 1995, 49, 430–438.

- Vaquero, M.P.; Martínez-Maqueda, D.; Gallego-Narbón, A.; Zapatera, B.; Pérez-Jiménez, J. Relationship between iron status markers and insulin resistance: An exploratory study in subjects with excess body weight. PeerJ 2020, 8, e9528. https://doi.org/10.7717/peerj.9528.

- Klisic, A.; Kavaric, N.; Kotur, J.; Ninic, A. Serum soluble transferrin receptor levels are independently associated with homeostasis model assessment of insulin resistance in adolescent girls. Arch Med Sci 2021. https://doi.org/10.5114/aoms/132757.

- Liu, J.; Li, Q.; Yang, Y.; Ma, L. Iron metabolism and type 2 diabetes mellitus: A meta-analysis and systematic review. J Diabetes Investig 2020, 11, 946–955. https://doi.org/10.1111/jdi.13216.

- Shahinfar, H.; Jayedi, A.; Shab-Bidar, S. Dietary iron intake and the risk of type 2 diabetes: A systematic review and dose–response meta-analysis of prospective cohort studies. Eur J Nutr 2022, 61, 2279–2296. https://doi.org/10.1007/s00394-022-02813-2.

- Fu, S.; Li, F.; Zhou, J.; Liu, Z. The Relationship Between Body Iron Status, Iron Intake And Gestational Diabetes: A Systematic Review and Meta-Analysis. Medicine (Baltimore) 2016, 95, e2383. https://doi.org/10.1097/MD.0000000000002383.

- Kataria, Y.; Wu, Y.; Horskjaer, P.H.; Mandrup-Poulsen, T.; Ellervik, C. Iron Status and Gestational Diabetes: A Meta-Analysis. Nutrients 2018, 10, 621. https://doi.org/10.3390/nu10050621.

- Jahng, J.W.S.; Alsaadi, R.M.; Palanivel, R.; Song, E.; Hipolito, V.E.B.; Sung, H.K.; Botelho, R.J.; Russell, R.C.; Sweeney, G. Iron overload inhibits late stage autophagic flux leading to insulin resistance. EMBO Rep 2019, 20, e47911. https://doi.org/10.15252/embr.201947911.

- Sung, H.K.; Song, E.; Jahng, J.W.S.; Pantopoulos, K.; Sweeney, G. Iron induces insulin resistance in cardiomyocytes via regulation of oxidative stress. Sci Rep 2019, 9, 4668. https://doi.org/10.1038/s41598-019-41111-6.

- Cui, R.; Choi, S.-E.; Kim, T.H.; Lee, H.J.; Lee, S.J.; Kang, Y.; Jeon, J.Y.; Kim, H.J.; Lee, K.-W. Iron overload by transferrin receptor protein 1 regulation plays an important role in palmitate-induced insulin resistance in human skeletal muscle cells. FASEB J 2019, 33, 1771–1786. https://doi.org/10.1096/fj.201800448R.

- Hansen, J.B.; Tonnesen, M.F.; Madsen, A.N.; Hagedorn, P.H.; Friberg, J.; Grunnet, L.G.; Heller, R.S.; Nielsen, A.Ø.; Størling, J.; Baeyens, L.; et al. Divalent Metal Transporter 1 Regulates Iron-Mediated ROS and Pancreatic β Cell Fate in Response to Cytokines. Cell Metab 2012, 16, 449–461. https://doi.org/10.1016/j.cmet.2012.09.001.

- Huang, J.; Jones, D.; Luo, B.; Sanderson, M.; Soto, J.; Abel, E.D.; Cooksey, R.C.; McClain, D.A. Iron Overload and Diabetes Risk: A Shift From Glucose to Fatty Acid Oxidation and Increased Hepatic Glucose Production in a Mouse Model of Hereditary Hemochromatosis. Diabetes 2011, 60, 80–87. https://doi.org/10.2337/db10-0593.

- Borel, M.J.; Beard, J.L.; Farrell, P.A. Hepatic glucose production and insulin sensitivity and responsiveness in iron-deficient anemic rats. Am J Physiol Endocrinol Metab 1993, 264, E380–E390. https://doi.org/10.1152/ajpendo.1993.264.3.E380.

- Farrell, P.A.; Beard, J.L.; Druckenmiller, M. Increased Insulin Sensitivity in Iron-Deficient Rats. J Nutr 1988, 118, 1104–1109. https://doi.org/10.1093/jn/118.9.1104.

- Ozdemir, A.; Sevinç, C.; Selamet, U.; Kamaci, B.; Atalay, S. Age- and body mass index-dependent relationship between correction of iron deficiency anemia and insulin resistance in non-diabetic premenopausal women. Ann Saudi Med 2007, 27, 356–361. https://doi.org/10.5144/0256-4947.2007.356.

- Sullivan, J. Iron and the sex difference in heart disease risk. Lancet 1981, 317, 1293–1294.

- Hunnicutt, J.; He, K.; Xun, P. Dietary iron intake and body iron stores are associated with risk of coronary heart disease in a meta-analysis of prospective cohort studies. J Nutr 2014, 144, 359–366. https://doi.org/10.3945/jn.113.185124.

- Yang, W.; Li, B.; Dong, X.; Zhang, X.Q.; Zeng, Y.; Zhou, J.L.; Tang, Y.H.; Xu, J.J. Is heme iron intake associated with risk of coronary heart disease? A meta-analysis of prospective studies. Eur J Nutr 2014, 53, 395–400. https://doi.org/10.1007/s00394-013-0535-5.

- Fang, X.; An, P.; Wang, H.; Wang, X.; Shen, X.; Li, X.; Min, J.; Liu, S.; Wang, F. Dietary intake of heme iron and risk of cardiovascular disease: A dose-response meta-analysis of prospective cohort studies. Nutr Metab Cardiovasc Dis 2015, 25, 24–35. https://doi.org/10.1016/j.numecd.2014.09.002.

- Han, M.; Guan, L.; Ren, Y.; Zhao, Y.; Liu, D.; Zhang, D.; Liu, L.; Liu, F.; Chen, X.; Cheng, C.; et al. Dietary iron intake and risk of death due to cardiovascular diseases: A systematic review and dose-response meta-analysis of prospective cohort studies. Asia Pac J Clin Nutr 2020, 29, 309–321. https://doi.org/10.6133/apjcn.202007_29(2).0014.

- Ahluwalia, N.; Genoux, A.; Ferrieres, J.; Perret, B.; Carayol, M.; Drouet, L.; Ruidavets, J.-B. Iron Status Is Associated with Carotid Atherosclerotic Plaques in Middle-Aged Adults. J Nutr 2010, 140, 812–816. https://doi.org/10.3945/jn.109.110353.

- Sawada, H.; Hao, H.; Naito, Y.; Oboshi, M.; Hirotani, S.; Mitsuno, M.; Miyamoto, Y.; Hirota, S.; Masuyama, T. Aortic Iron Overload with Oxidative Stress and Inflammation in Human and Murine Abdominal Aortic Aneurysm. Arterioscler Thromb Vasc Biol 2015, 35, 1507–1514. https://doi.org/10.1161/ATVBAHA.115.305586.

- Alnuwaysir, R.I.S.; Hoes, M.F.; van Veldhuisen, D.J.; van der Meer, P.; Grote Beverborg, N. Iron Deficiency in Heart Failure: Mechanisms and Pathophysiology. J Clin Med 2021, 11, 125. https://doi.org/10.3390/jcm11010125.

- Anand, I.S.; Gupta, P. Anemia and Iron Deficiency in Heart Failure: Current Concepts and Emerging Therapies. Circulation 2018, 138, 80–98. https://doi.org/10.1161/CIRCULATIONAHA.118.030099.

- Salah, H.M.; Savarese, G.; Rosano, G.M.C.; Ambrosy, A.P.; Mentz, R.J.; Fudim, M. Intravenous iron infusion in patients with heart failure: A systematic review and study-level meta-analysis. ESC Heart Fail 2023, 10, 1473–1480. https://doi.org/10.1002/ehf2.14310.

- Araujo, J.A.; Romano, E.L.; Brito, B.E.; Parthé, V.; Romano, M.; Bracho, M.; Montaño, R.F.; Cardier, J. Iron Overload Augments the Development of Atherosclerotic Lesions in Rabbits. Arterioscler Thromb Vasc Biol 1995, 15, 1172–1180. https://doi.org/10.1161/01.ATV.15.8.1172.


- Vinchi, F.; Porto, G.; Simmelbauer, A.; Altamura, S.; Passos, S.T.; Garbowski, M.; Silva, A.M.N.; Spaich, S.; Seide, S.E.; Sparla, R.; et al. Atherosclerosis is aggravated by iron overload and ameliorated by dietary and pharmacological iron restriction. Eur Heart J 2020, 41, 2681–2695. https://doi.org/10.1093/eurheartj/ehz112.

- Lakhal-Littleton, S.; Wolna, M.; Carr, C.A.; Miller, J.J.J.; Christian, H.C.; Ball, V.; Santos, A.; Diaz, R.; Biggs, D.; Stillion, R.; et al. Cardiac ferroportin regulates cellular iron homeostasis and is important for cardiac function. Proc Natl Acad Sci U S A 2015, 112, 3164–3169. https://doi.org/10.1073/pnas.1422373112.

- Bigorra Mir, M.; Charlebois, E.; Tsyplenkova, S.; Fillebeen, C.; Pantopoulos, K. Cardiac Hamp mRNA Is Predominantly Expressed in the Right Atrium and Does Not Respond to Iron. Int J Mol Sci 2023, 24, 5163.

- Zhabyeyev, P.; Oudit, G.Y. Unravelling the molecular basis for cardiac iron metabolism and deficiency in heart failure. Eur Heart J 2017, 38, 373–375. https://doi.org/10.1093/eurheartj/ehw386.

- Xu, W.; Barrientos, T.; Mao, L.; Rockman, H.A.; Sauve, A.A.; Andrews, N.C. Lethal Cardiomyopathy in Mice Lacking Transferrin Receptor in the Heart. Cell Rep 2015, 13, 533–545. https://doi.org/10.1016/j.celrep.2015.09.023.

- Lakhal-Littleton, S.; Wolna, M.; Chung, Y.J.; Christian, H.C.; Heather, L.C.; Brescia, M.; Ball, V.; Diaz, R.; Santos, A.; Biggs, D.; et al. An essential cell-autonomous role for hepcidin in cardiac iron homeostasis. eLife 2016, 5, e19804. https://doi.org/10.7554/eLife.19804.

- Wen, C.P.; Lee, J.H.; Tai, Y.P.; Wen, C.; Wu, S.B.; Tsai, M.K.; Hsieh, D.P.; Chiang, H.C.; Hsiung, C.A.; Hsu, C.Y.; et al. High serum iron is associated with increased cancer risk. Cancer Res 2014, 74, 6589–6597. https://doi.org/10.1158/0008-5472.CAN-14-0360.

- Chang, V.C.; Cotterchio, M.; Khoo, E. Iron intake, body iron status, and risk of breast cancer: A systematic review and meta-analysis. BMC Cancer 2019, 19, 543. https://doi.org/10.1186/s12885-019-5642-0.

- Ma, J.; Li, Q.; Fang, X.; Chen, L.; Qiang, Y.; Wang, J.; Wang, Q.; Min, J.; Zhang, S.; Wang, F. Increased total iron and zinc intake and lower heme iron intake reduce the risk of esophageal cancer: A dose-response meta-analysis. Nutr Res 2018, 59, 16–28. https://doi.org/10.1016/j.nutres.2018.07.007.

- Ward, H.A.; Whitman, J.; Muller, D.C.; Johansson, M.; Jakszyn, P.; Weiderpass, E.; Palli, D.; Fanidi, A.; Vermeulen, R.; Tjonneland, A.; et al. Haem iron intake and risk of lung cancer in the European Prospective Investigation into Cancer and Nutrition (EPIC) cohort. Eur J Clin Nutr 2019, 73, 1122–1132. https://doi.org/10.1038/s41430-018-0271-2.

- Cao, H.; Wang, C.; Chai, R.; Dong, Q.; Tu, S. Iron intake, serum iron indices and risk of colorectal adenomas: A meta-analysis of observational studies. Eur J Cancer Care (Engl) 2017, 26. https://doi.org/10.1111/ecc.12486.

- Qiao, L.; Feng, Y. Intakes of heme iron and zinc and colorectal cancer incidence: A meta-analysis of prospective studies. Cancer Causes Control 2013, 24, 1175–1183. https://doi.org/10.1007/s10552-013-0197-x.

- Aglago, E.K.; Cross, A.J.; Riboli, E.; Fedirko, V.; Hughes, D.J.; Fournier, A.; Jakszyn, P.; Freisling, H.; Gunter, M.J.; Dahm, C.C.; et al. Dietary intake of total, heme and non-heme iron and the risk of colorectal cancer in a European prospective cohort study. Br J Cancer 2023. https://doi.org/10.1038/s41416-023-02164-7.

- Zacharski, L.R.; Chow, B.K.; Howes, P.S.; Shamayeva, G.; Baron, J.A.; Dalman, R.L.; Malenka, D.J.; Ozaki, C.K.; Lavori, P.W. Decreased cancer risk after iron reduction in patients with peripheral arterial disease: Results from a randomized trial. J Natl Cancer Inst 2008, 100, 996–1002. https://doi.org/10.1093/jnci/djn209.

- Merk, K.; Mattsson, B.; Mattsson, A.; Holm, G.; Gullbring, B.; Bjorkholm, M. The Incidence of Cancer among Blood Donors. Int J Epidemiol 1990, 19, 505–509. https://doi.org/10.1093/ije/19.3.505.

- Gamage, S.M.K.; Lee, K.T.W.; Dissabandara, D.L.O.; Lam, A.K.-Y.; Gopalan, V. Dual role of heme iron in cancer; promotor of carcinogenesis and an inducer of tumour suppression. Exp Mol Pathol 2021, 120, 104642. https://doi.org/10.1016/j.yexmp.2021.104642.

- Torti, S.V.; Manz, D.H.; Paul, B.T.; Blanchette-Farra, N.; Torti, F.M. Iron and Cancer. Annu Rev Nutr 2018, 38, 97–125. https://doi.org/10.1146/annurev-nutr-082117-051732.

- Pinnix, Z.K.; Miller, L.D.; Wang, W.; D’Agostino, R.; Kute, T.; Willingham, M.C.; Hatcher, H.; Tesfay, L.; Sui, G.; Di, X.; et al. Ferroportin and Iron Regulation in Breast Cancer Progression and Prognosis. Sci Transl Med 2010, 2, 43ra56. https://doi.org/10.1126/scitranslmed.3001127.

- Basuli, D.; Tesfay, L.; Deng, Z.; Paul, B.; Yamamoto, Y.; Ning, G.; Xian, W.; McKeon, F.; Lynch, M.; Crum, C.P. Iron addiction: A novel therapeutic target in ovarian cancer. Oncogene 2017, 36, 4089–4099.

- Tesfay, L.; Clausen, K.A.; Kim, J.W.; Hegde, P.; Wang, X.; Miller, L.D.; Deng, Z.; Blanchette, N.; Arvedson, T.; Miranti, C.K.; et al. Hepcidin Regulation in Prostate and Its Disruption in Prostate Cancer. Cancer Res 2015, 75, 2254–2263. https://doi.org/10.1158/0008-5472.CAN-14-2465.

- Schonberg, D.L.; Miller, T.E.; Wu, Q.; Flavahan, W.A.; Das, N.K.; Hale, J.S.; Hubert, C.G.; Mack, S.C.; Jarrar, A.M.; Karl, R.T.; et al. Preferential Iron Trafficking Characterizes Glioblastoma Stem-like Cells. Cancer Cell 2015, 28, 441–455. https://doi.org/10.1016/j.ccell.2015.09.002.

- Jeong, S.M.; Hwang, S.; Seong, R.H. Transferrin receptor regulates pancreatic cancer growth by modulating mitochondrial respiration and ROS generation. Biochem Biophys Res Commun 2016, 471, 373–379. https://doi.org/10.1016/j.bbrc.2016.02.023.

- Ramírez-Carmona, W.; Díaz-Fabregat, B.; Yuri Yoshigae, A.; Musa de Aquino, A.; Scarano, W.R.; de Souza Castilho, A.C.; Avansini Marsicano, J.; Leal do Prado, R.; Pessan, J.P.; de Oliveira Mendes, L. Are Serum Ferritin Levels a Reliable Cancer Biomarker? A Systematic Review and Meta-Analysis. Nutr Cancer 2022, 74, 1917–1926. https://doi.org/10.1080/01635581.2021.1982996.

- Radulescu, S.; Brookes, M.J.; Salgueiro, P.; Ridgway, R.A.; McGhee, E.; Anderson, K.; Ford, S.J.; Stones, D.H.; Iqbal, T.H.; Tselepis, C.; et al. Luminal iron levels govern intestinal tumorigenesis after Apc loss in vivo. Cell Rep 2012, 2, 270–282. https://doi.org/10.1016/j.celrep.2012.07.003.

- Ijssennagger, N.; Rijnierse, A.; de Wit, N.; Jonker-Termont, D.; Dekker, J.; Muller, M.; van der Meer, R. Dietary haem stimulates epithelial cell turnover by downregulating feedback inhibitors of proliferation in murine colon. Gut 2012, 61, 1041–1049. https://doi.org/10.1136/gutjnl-2011-300239.

- Ijssennagger, N.; Rijnierse, A.; de Wit, N.J.; Boekschoten, M.V.; Dekker, J.; Schonewille, A.; Muller, M.; van der Meer, R. Dietary heme induces acute oxidative stress, but delayed cytotoxicity and compensatory hyperproliferation in mouse colon. Carcinogenesis 2013, 34, 1628–1635. https://doi.org/10.1093/carcin/bgt084.

- Constante, M.; Fragoso, G.; Calvé, A.; Samba-Mondonga, M.; Santos, M.M. Dietary Heme Induces Gut Dysbiosis, Aggravates Colitis, and Potentiates the Development of Adenomas in Mice. Front Microbiol 2017, 8, 1809. https://doi.org/10.3389/fmicb.2017.01809.

- Leung, C.; Rivera, L.; Furness, J.B.; Angus, P.W. The role of the gut microbiota in NAFLD. Nat Rev Gastroenterol Hepatol 2016, 13, 412–425. https://doi.org/10.1038/nrgastro.2016.85.

- Forslund, K.; Hildebrand, F.; Nielsen, T.; Falony, G.; Le Chatelier, E.; Sunagawa, S.; Prifti, E.; Vieira-Silva, S.; Gudmundsdottir, V.; Pedersen, H.K.; et al. Disentangling type 2 diabetes and metformin treatment signatures in the human gut microbiota. Nature 2015, 528, 262–266. https://doi.org/10.1038/nature15766.

- Belkaid, Y.; Harrison, O.J. Homeostatic Immunity and the Microbiota. Immunity 2017, 46, 562–576. https://doi.org/10.1016/j.immuni.2017.04.008.

- Osborne, N.J.; Gurrin, L.C.; Allen, K.J.; Constantine, C.C.; Delatycki, M.B.; McLaren, C.E.; Gertig, D.M.; Anderson, G.J.; Southey, M.C.; Olynyk, J.K.; et al. HFE C282Y homozygotes are at increased risk of breast and colorectal cancer. Hepatology 2010, 51, 1311–1318. https://doi.org/10.1002/hep.23448.

- Fargion, S.; Valenti, L.; Fracanzani, A.L. Role of iron in hepatocellular carcinoma. Clin Liver Dis (Hoboken) 2014, 3, 108–110. https://doi.org/10.1002/cld.350.

- Finianos, A.; Matar, C.F.; Taher, A. Hepatocellular Carcinoma in beta-Thalassemia Patients: Review of the Literature with Molecular Insight into Liver Carcinogenesis. Int J Mol Sci 2018, 19, 4070. https://doi.org/10.3390/ijms19124070.

- Allameh, A.; Hüttmann, N.; Charlebois, E.; Katsarou, A.; Gu, W.; Gkouvatsos, K.; Pasini, E.; Bhat, M.; Minic, Z.; Berezovski, M.; et al. Hemojuvelin deficiency promotes liver mitochondrial dysfunction and predisposes mice to hepatocellular carcinoma. Commun Biol 2022, 5, 153. https://doi.org/10.1038/s42003-022-03108-2.

- Celis, A.I.; Relman, D.A.; Huang, K.C. The impact of iron and heme availability on the healthy human gut microbiome in vivo and in vitro. Cell Chemical Biology 2023, 30, 110–126.e113. https://doi.org/10.1016/j.chembiol.2022.12.001. [CrossRef]

- Mayneris-Perxachs, J.; Cardellini, M.; Hoyles, L.; Latorre, J.; Davato, F.; Moreno-Navarrete, J.M.; Arnoriaga-Rodríguez, M.; Serino, M.; Abbott, J.; Barton, R.H.; et al. Iron status influences non-alcoholic fatty liver disease in obesity through the gut microbiome. Microbiome 2021, 9, 104. https://doi.org/10.1186/s40168-021-01052-7. [CrossRef]

- Jaeggi, T.; Kortman, G.A.; Moretti, D.; Chassard, C.; Holding, P.; Dostal, A.; Boekhorst, J.; Timmerman, H.M.; Swinkels, D.W.; Tjalsma, H.; et al. Iron fortification adversely affects the gut microbiome, increases pathogen abundance and induces intestinal inflammation in Kenyan infants. Gut 2015, 64, 731–742. https://doi.org/10.1136/gutjnl-2014-307720. [CrossRef]

- Gwamaka, M.; Kurtis, J.D.; Sorensen, B.E.; Holte, S.; Morrison, R.; Mutabingwa, T.K.; Fried, M.; Duffy, P.E. Iron deficiency protects against severe Plasmodium falciparum malaria and death in young children. Clin Infect Dis 2012, 54, 1137–1144. https://doi.org/10.1093/cid/cis010. [CrossRef]

- Lee, T.; Clavel, T.; Smirnov, K.; Schmidt, A.; Lagkouvardos, I.; Walker, A.; Lucio, M.; Michalke, B.; Schmitt-Kopplin, P.; Fedorak, R.; et al. Oral versus intravenous iron replacement therapy distinctly alters the gut microbiota and metabolome in patients with IBD. Gut 2017, 66, 863–871. https://doi.org/10.1136/gutjnl-2015-309940. [CrossRef]

- Nielsen, O.H.; Soendergaard, C.; Vikner, M.E.; Weiss, G. Rational Management of Iron-Deficiency Anaemia in Inflammatory Bowel Disease. Nutrients 2018, 10, 82. https://doi.org/10.3390/nu10010082. [CrossRef]

- Constante, M.; Fragoso, G.; Calvé, A.; Samba-Mondonga, M.; Santos, M.M. Dietary Heme Induces Gut Dysbiosis, Aggravates Colitis, and Potentiates the Development of Adenomas in Mice. Front Microbiol 2017, 8, 1809. https://doi.org/10.3389/fmicb.2017.01809. [CrossRef]

- Mahalhal, A.; Williams, J.M.; Johnson, S.; Ellaby, N.; Duckworth, C.A.; Burkitt, M.D.; Liu, X.; Hold, G.L.; Campbell, B.J.; Pritchard, D.M.; et al. Oral iron exacerbates colitis and influences the intestinal microbiome. PLoS ONE 2018, 13, e0202460. https://doi.org/10.1371/journal.pone.0202460. [CrossRef]

- Mahalhal, A.; Burkitt, M.D.; Duckworth, C.A.; Hold, G.L.; Campbell, B.J.; Pritchard, D.M.; Probert, C.S. Long-Term Iron Deficiency and Dietary Iron Excess Exacerbate Acute Dextran Sodium Sulphate-Induced Colitis and Are Associated with Significant Dysbiosis. Int J Mol Sci 2021, 22, 3646. https://doi.org/10.3390/ijms22073646. [CrossRef]

- Ijssennagger, N.; Belzer, C.; Hooiveld, G.J.; Dekker, J.; van Mil, S.W.; Müller, M.; Kleerebezem, M.; van der Meer, R. Gut microbiota facilitates dietary heme-induced epithelial hyperproliferation by opening the mucus barrier in colon. Proc Natl Acad Sci U S A 2015, 112, 10038–10043. https://doi.org/10.1073/pnas.1507645112. [CrossRef]

- Song, M.; Chan, A.T. Environmental Factors, Gut Microbiota, and Colorectal Cancer Prevention. Clin Gastroenterol Hepatol 2019, 17, 275–289. https://doi.org/10.1016/j.cgh.2018.07.012. [CrossRef]

- Zakrzewski, M.; Wilkins, S.J.; Helman, S.L.; Brilli, E.; Tarantino, G.; Anderson, G.J.; Frazer, D.M. Supplementation with Sucrosomial® iron leads to favourable changes in the intestinal microbiome when compared to ferrous sulfate in mice. Biometals 2021. https://doi.org/10.1007/s10534-021-00348-3. [CrossRef]

- Constante, M.; Fragoso, G.; Lupien-Meilleur, J.; Calvé, A.; Santos, M.M. Iron Supplements Modulate Colon Microbiota Composition and Potentiate the Protective Effects of Probiotics in Dextran Sodium Sulfate-induced Colitis. Inflamm Bowel Dis 2017, 23, 753–766. https://doi.org/10.1097/MIB.0000000000001089. [CrossRef]


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).