1. Introduction

Research has already revealed the complex information processing capabilities of mammalian retinas (

Gollisch and Meister 2010), which preprocess optical signals before sending them to higher-level visual cortices. Recent studies have also identified numerous subtypes of retinal neurons, each with unique roles that enable hosts to quickly and intelligently adapt to changing environments based on perceptions (

Franke et al. 2017;

Liu et al. 2021). Leveraging the working principles of the retina (

Land and McCann 1971) has led to the development of more intelligent perception-related algorithms and impressive achievements in image processing tasks, such as high-dynamic range image tone mapping (

Meylan and Susstrunk 2006;

Wang et al. 2013;

Zhang and Li 2016;

Park et al. 2017).

The work discussed in (

Zhang and Li 2016) is a prime example of a model inspired by the workings of various types of neurons, such as horizontal and bipolar neurons, for tone mapping tasks. The authors of (

Zhang and Li 2016) systematically explored different aspects of retinal circuitry from a signal processing perspective, inspiring the development of a corresponding computational model. By separating images into individual channels for algorithm modulation and aggregating the results, this work achieved superior results.

Traditional tone mapping in digital image processing often involves histogram equalization, which can cause images to lose color constancy, making objects appear in different or unnatural colors after restoration. This is particularly challenging for low-light image restoration tasks. While the work in (

Zhang and Li 2016) yielded impressive results, it relied on mathematical formulas and was too algorithmic-oriented. Additionally, some model parameters depended on domain knowledge, requiring author experience to select the most appropriate ones.

In our report, we draw inspiration from (

Zhang and Li 2016) to re-examine the working principles of the retina and design a network to address the low-light image restoration problem. Our network aligns with the optical signal processing flow of different neurons, providing a clear correspondence between the neural pathway in the retina and offering a transparent explanation for the design motivation. Furthermore, this simple model benefits from the end-to-end learning philosophy, eliminating the need for manual parameter optimization. Experiments demonstrate satisfying image restoration from a subjective perceptual perspective, and we plan to improve objective metrics in future work.

2. Background

Low-light image processing has become a crucial area of research in recent years, as it plays a significant role in various applications such as surveillance, autonomous vehicles, nighttime photography, and even astronomical imaging. Addressing the challenges posed by low-light conditions, such as reduced visibility, increased noise, and loss of details, is essential for enhancing the performance of computer vision tasks like classification, detection, and action recognition (

Chen et al. 2018;

Wang et al. 2020a;

Ma et al. 2021). Deep learning techniques, particularly convolutional neural networks (CNNs), have demonstrated remarkable success in addressing low-light image processing challenges (

Wang et al. 2021;

Li et al. 2019).

Numerous deep learning-based methods have been proposed for low-light image enhancement, which mainly focus on various computer vision tasks. For classification purposes, the authors in (

Wu et al. 2023) introduced a method that adapts to the varying illumination conditions by incorporating a spatial transformer network. Similarly, for object detection in low-light scenarios, a multi-scale feature fusion strategy was proposed in (

Wang et al. 2020b), which improves the detection performance by exploiting the rich contextual information. In the context of action recognition under low-light conditions, researchers in (

Wei et al. 2022) developed a two-stream fusion framework that effectively captures the spatial-temporal information. Researchers in

Hira et al. (

2021) proposed a sampling strategy that leverages temporal structure of video data to enhance low-light frames. Additionally, the authors in (

Ren et al. 2020) proposed a light-weight CNN for enhancing low-light images that significantly reduces the computational complexity without sacrificing the classification accuracy.

Low-light image denoising is another area of interest, with various CNN-based approaches being proposed. A deep joint denoising and enhancement network was introduced in (

Zhang and Wu 2016) to tackle the challenges of denoising and enhancing low-light images simultaneously. The authors in (

Zhang and Wu 2016) proposed a noise-aware unsupervised deep feature learning method to improve the denoising performance by learning the noise characteristics adaptively. In (

Tian et al. 2020), an attention mechanism was incorporated into the denoising network to selectively enhance the relevant features for better noise suppression.

Apart from denoising, low-light image dehazing has also been an active research topic. The work in (

Hassan et al. 2022) presented a multi-task learning framework that jointly addresses the dehazing and denoising problems, resulting in improved image quality. Another study (

Zhang et al. 2021) introduced a deep reinforcement learning-based approach for adaptive low-light image enhancement, which optimizes the enhancement parameters to achieve visually pleasing results.

To address the limitations of deep learning models, such as their large size and computational complexity, bio-inspired approaches have been gaining attention. These approaches draw inspiration from the functioning of the human visual system, particularly the retina, which is known for its efficiency and adaptability in processing visual information under different lighting conditions (

Gollisch and Meister 2010). However, the majority of the existing research focuses on complex models, limiting their applicability in resource-constrained environments.

In this paper, we present a bio-inspired, simple neural network for low-light image restoration that is inspired by the principles of various neurons in the retina. Our proposed network aims to achieve satisfactory results while maintaining a minimal architecture, making it suitable for deployment in various systems and scenarios.

Another area that has seen advancements is low-light image color correction. The work presented in (

Jing et al. 2020) proposes a deep learning-based method for unsupervised color correction in low-light conditions, where the model learns to map the color distribution of the input image to that of a target image. Similarly, (

Jiang et al. 2021) introduced a joint color and illumination enhancement framework using generative adversarial networks (GANs), achieving improved color fidelity and contrast in low-light images.

Furthermore, researchers have explored the potential of unsupervised and self-supervised learning methods for low-light image enhancement. In (

Chen et al. 2018), an unsupervised domain adaptation approach was proposed to transfer the knowledge learned from synthetic low-light data to real-world low-light images. The authors in (

Zheng and Gupta 2022) introduced a self-supervised learning framework for low-light image enhancement, leveraging the cycle-consistency loss to generate high-quality enhanced images.

In summary, while existing deep learning-based methods have achieved impressive results in various low-light image processing tasks, their computational complexity and large model sizes often limit their practical applicability. This paper aims to address these limitations by introducing a bio-inspired, simple neural network for low-light image restoration that draws upon the principles of various neurons in the retina. Our proposed network strikes a balance between performance and computational efficiency, making it suitable for deployment in a wide range of systems and scenarios.

3. Method

The computational model in this study takes into account the fact that cone photoreceptors are responsible for colors, while rod photoreceptors handle illuminance. Although low-light conditions may cause images to appear monochromatic, downstream cells such as horizontal cells (HCs) and amacrine cells (ACs) still aim to create a polychromatic visual perception for survival purposes (

Osorio and Vorobyev 2005;

Dresp-Langley and Langley 2009). The model from (

Zhang and Li 2016) proposes two stereotypical pathways for optical signal processing in the retina: a vertical path where signals are collected by photoreceptors, relayed by bipolar cells (BCs), and sent to ganglion cells (GCs), and lateral pathways where local feedbacks transmit information from horizontal cells back to photoreceptors and from amacrine cells to horizontal cells.

Furthermore, cone photoreceptors consist of three types (S-cones, M-cones, and L-cones), each sensitive to different wavelengths, roughly corresponding to the red, green, and blue (RGB) channels of a color image. The study in (

Zhang and Li 2016) suggests an overall split-then-combine computational flowchart.

Our model also considers the electrophysiological properties of neurons in the retina. Unlike most neurons in the cerebral cortex, some retinal neurons are so small that local graded potentials can propagate from upstream synapses to downstream somas. For instance, bipolar cells directly excite ganglion cells via local graded potentials from synapses between photoreceptors and bipolar cells. We assume that photoreceptors also have a direct impact on ganglion cells, and we propose a flow of optical signal processing as shown in Fig. 1.

According to (

Zhang and Li 2016), the information processed by HCs can be represented by Equation (

1):

However, the circuits before BCs also introduce a recursive modulation to channel data

2, which can cause numerical instability.

To avoid this issue, we simplify the equation to a more straightforward finite impulse response form as in equation :

This can be further simplified to a residual form in Equation (

4):

Next, along the optical signal processing neural circuitry, BCs exert a double-opponent effect on the signal modulated by HCs, which can be modeled as a convolution with a difference of Gaussian (DoG) kernel, as shown in Equation (

5)

Although neural networks generally lack the constraint to exert such an effect, we only require the initialization of the corresponding convolutional layer weights to comply with a DoG kernel instance. We choose specific values for this purpose and construct the filter accordingly using Equation (

6)

As mentioned earlier, bipolar cells relay signals via local potentials rather than action potentials, so we assume that the signals, although attenuated, still potentially exert influence on the ganglion cells. This assumption refines Equation (

5) to Equation (

7):

Based on the above computational model, we design the neural network architecture in the next section. This architecture aims to mimic the process of optical signal processing in the retina and provide a more accurate representation of how the retina processes visual information. The neural network will be designed to handle both the vertical and lateral pathways for optical signal processing, incorporating the various cell types involved in the process. By taking into account the electrophysiological properties of retinal neurons and the specific interactions between photoreceptors, bipolar cells, and ganglion cells, our model offers a more comprehensive and biologically plausible representation of visual processing in the retina. In conclusion, this computational model strives to accurately represent the complex processing that occurs in the retina, providing valuable insights for the development of improved artificial vision systems and a deeper understanding of the neural mechanisms underlying human vision. By considering the electrophysiological properties of retinal neurons and the interplay between different cell types, this model offers a more complete and biologically plausible representation of the retina’s optical signal processing pathways.

4. Experiment and Results

4.1. Dataset Utilized

In our experiment, we employ the open-sourced LOw-Light (LOL) image dataset (

Wei et al. 2018). This dataset comprises 500 pairs of low-light and normal-light images, split into 485 training pairs and 15 testing pairs. The images predominantly feature indoor scenes and have a resolution of 400x600. Given that this image size is comparable to those captured by applications like low-light surveillance, we process the images without down-sampling to represent real-world scenarios.

4.2. Neural Network Design and Configuration

The RGB channel values are processed independently. As a result, we utilize depthwise convolutions to maintain this separation (

Howard et al. 2017). The overall network structure aligns with equations

1,

4, and

7. It is worth noting that in Equation (

1), the

parameter for convolution

g relies on pixel values as the operation moves across the monochromatic input

. To reduce computation overhead, we forego determining

for each location, opting instead to let the network learn the optimal weights during training by specifying the configuration.

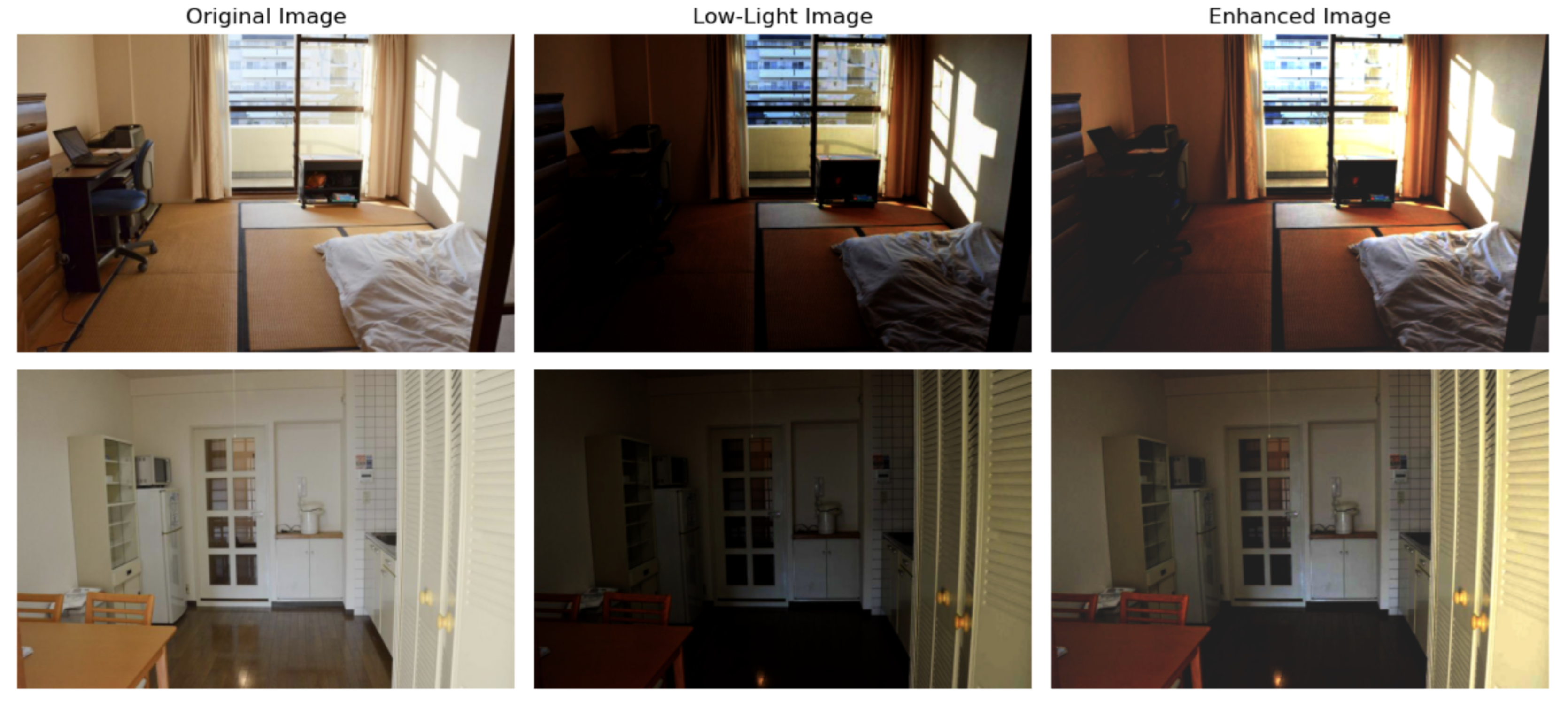

Using the above-described configuration, we train the network for 20 epochs with a batch size of 8 and a learning rate of 0.001. The network has only 108 learnable parameters, so the risk of overfitting is minimal, and we do not perform additional validation. Following training, the network is tested directly on the test set. Sample results are shown in

Figure 1, illustrating that our simple network can restore low-light images with a certain level of perceptual quality. Nevertheless the filters’ limited size may not effectively extract global illumination information, resulting in darker restored images compared to the ground-truth images.

To evaluate the restored images’ quality objectively, we compute the structural similarity index measure (SSIM) for all test samples. The average SSIM is 36.2%, lower than the 76.3% to 93.0% SSIM range reported by other models. Although previous research focused on more theory-oriented heavy models, our straightforward network ensures potential deployment across various systems and scenarios.

5. Conclusion

We have developed a simplistic neural network based on the functional principles of retina neurons and applied it to the LIIR problem. By conducting experiments on the benchmark LOL dataset for LIIR tasks, our results indicate a degree of restored image satisfactoriness from a subjective perception standpoint. While the SSIM’s objective assessment falls short compared to other methods, the importance of drawing inspiration from biological computation for constructing simple networks is evident, and future work can address the current limitations.

References

- Tim Gollisch and Markus Meister. 2010. Eye smarter than scientists believed: neural computations in circuits of the retina. Neuron 65: 150–164. [Google Scholar] [CrossRef]

- Katrin Franke, Philipp Berens, Timm Schubert, Matthias Bethge, Thomas Euler, and Tom Baden. 2017. Inhibition decorrelates visual feature representations in the inner retina. Nature 542: 439–444. [Google Scholar] [CrossRef]

- Belle Liu, Arthur Hong, Fred Rieke, and Michael B Manookin. 2021. Predictive encoding of motion begins in the primate retina. Nature neuroscience 24: 1280–1291. [Google Scholar] [CrossRef]

- Edwin H Land and John J McCann. 1971. Lightness and retinex theory. Josa 61: 1–11. [Google Scholar] [CrossRef]

- Laurence Meylan and Sabine Susstrunk. 2006. High dynamic range image rendering with a retinex-based adaptive filter. IEEE Transactions on image processing 15: 2820–2830. [Google Scholar] [CrossRef]

- Shuhang Wang, Jin Zheng, Hai-Miao Hu, and Bo Li. 2013. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE transactions on image processing 22: 3538–3548. [Google Scholar] [CrossRef]

- Xian-Shi Zhang and Yong-Jie Li. A retina inspired model for high dynamic range image rendering. In Advances in Brain Inspired Cognitive Systems: 8th International Conference, BICS 2016, Beijing, China, November 28-30, 2016, Proceedings 8, pages 68–79. Springer, 2016.

- Seonhee Park, Soohwan Yu, Byeongho Moon, Seungyong Ko, and Joonki Paik. 2017. Low-light image enhancement using variational optimization-based retinex model. IEEE Transactions on Consumer Electronics 63: 178–184. [Google Scholar] [CrossRef]

- Chen Chen, Qifeng Chen, Jia Xu, and Vladlen Koltun. Learning to see in the dark. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 3291–3300, 2018.

- Zhihao Wang, Jian Chen, and Steven CH Hoi. 2020. Deep learning for image super-resolution: A survey. IEEE transactions on pattern analysis and machine intelligence 43: 3365–3387. [Google Scholar]

- Teli Ma, Mingyuan Mao, Honghui Zheng, Peng Gao, Xiaodi Wang, Shumin Han, Errui Ding, Baochang Zhang, and David Doermann. 2021. Oriented object detection with transformer. arXiv arXiv:2106.03146. [Google Scholar]

- Shuyu Wang, Mingxin Zhao, Runjiang Dou, Shuangming Yu, Liyuan Liu, and Nanjian Wu. 2021. A compact high-quality image demosaicking neural network for edge-computing devices. Sensors 21: 3265. [Google Scholar] [CrossRef] [PubMed]

- Yang Li, Zhuang Miao, Rui Zhang, and Jiabao Wang. Denoisingnet: An efficient convolutional neural network for image denoising. In 2019 2nd International Conference on Artificial Intelligence and Big Data (ICAIBD), pages 409–413. IEEE, 2019.

- Yun Wu, Yucheng Shi, Huaiyan Shen, Yaya Tan, and Yu Wang. 2023. Light-tbfnet: Rgb-d salient detection based on a lightweight two-branch fusion strategy. Multimedia Tools and Applications, 1–31. [Google Scholar]

- Wanwei Wang, Wei Hong, Feng Wang, and Jinke Yu. 2020. Gan-knowledge distillation for one-stage object detection. IEEE Access 8: 60719–60727. [Google Scholar] [CrossRef]

- Dafeng Wei, Ye Tian, Liqing Wei, Hong Zhong, Siqian Chen, Shiliang Pu, and Hongtao Lu. 2022. Efficient dual attention slowfast networks for video action recognition. Computer Vision and Image Understanding 222: 103484. [Google Scholar] [CrossRef]

- Sanchit Hira, Ritwik Das, Abhinav Modi, and Daniil Pakhomov. Delta sampling r-bert for limited data and low-light action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 853–862, 2021.

- Xutong Ren, Wenhan Yang, Wen-Huang Cheng, and Jiaying Liu. 2020. Lr3m: Robust low-light enhancement via low-rank regularized retinex model. IEEE Transactions on Image Processing 29: 5862–5876. [Google Scholar] [CrossRef] [PubMed]

- Xin Zhang and Ruiyuan Wu. Fast depth image denoising and enhancement using a deep convolutional network. In 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 2499–2503. IEEE, 2016.

- Chunwei Tian, Yong Xu, Zuoyong Li, Wangmeng Zuo, Lunke Fei, and Hong Liu. 2020. Attention-guided cnn for image denoising. Neural Networks 124: 117–129. [Google Scholar]

- Haseeb Hassan, Pranshu Mishra, Muhammad Ahmad, Ali Kashif Bashir, Bingding Huang, and Bin Luo. 2022. Effects of haze and dehazing on deep learning-based vision models. Applied Intelligence, 1–19. [Google Scholar]

- Rongkai Zhang, Lanqing Guo, Siyu Huang, and Bihan Wen. Rellie: Deep reinforcement learning for customized low-light image enhancement. In Proceedings of the 29th ACM international conference on multimedia, pages 2429–2437, 2021.

- Yongcheng Jing, Xiao Liu, Yukang Ding, Xinchao Wang, Errui Ding, Mingli Song, and Shilei Wen. Dynamic instance normalization for arbitrary style transfer. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, pages 4369–4376, 2020.

- Yifan Jiang, Xinyu Gong, Ding Liu, Yu Cheng, Chen Fang, Xiaohui Shen, Jianchao Yang, Pan Zhou, and Zhangyang Wang. 2021. Enlightengan: Deep light enhancement without paired supervision. IEEE transactions on image processing 30: 2340–2349. [Google Scholar]

- Shen Zheng and Gaurav Gupta. Semantic-guided zero-shot learning for low-light image/video enhancement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 581–590, 2022.

- Daniel Osorio and Misha Vorobyev. 2005. Photoreceptor sectral sensitivities in terrestrial animals: adaptations for luminance and colour vision. Proceedings of the Royal Society B: Biological Sciences 272: 1745–1752. [Google Scholar] [CrossRef]

- Birgitta Dresp-Langley and Keith Langley. 2009. The biological significance of colour perception. Color perception: Physiology, processes and analysis, 89. [Google Scholar]

- Chen Wei, Wenjing Wang, Wenhan Yang, and Jiaying Liu. 2018. Deep retinex decomposition for low-light enhancement. arXiv arXiv:1808.04560. [Google Scholar]

- Andrew G Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, and Hartwig Adam. 2017. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv arXiv:1704.04861. [Google Scholar]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).