Submitted:

02 May 2023

Posted:

03 May 2023

Abstract

Keywords:

1. Introduction

- The paper discusses a wide variety of precision agriculture problems that can be addressed by using image data acquired from UAVs.

- The paper provides a technical discussion of the most recent papers using image data from UAVs to address agricultural problems.

- The paper evaluates the effectiveness of the various machine learning and deep learning techniques that use UAV image data to address agricultural problems.

- The paper points out some fruitful future research directions based on the work done to date.

2. Challenges in Agriculture

2.1. Plant Disease Detection and Diagnosis

2.2. Pest Detection and Control

2.3. Urban Vegetation Classification

2.4. Crop Yield Estimation

2.5. Over and Under Irrigation

2.6. Seed Quality and Germination

2.7. Soil Quality and Composition

2.8. Fertilizer Usage

2.9. Quality of the Crop Output

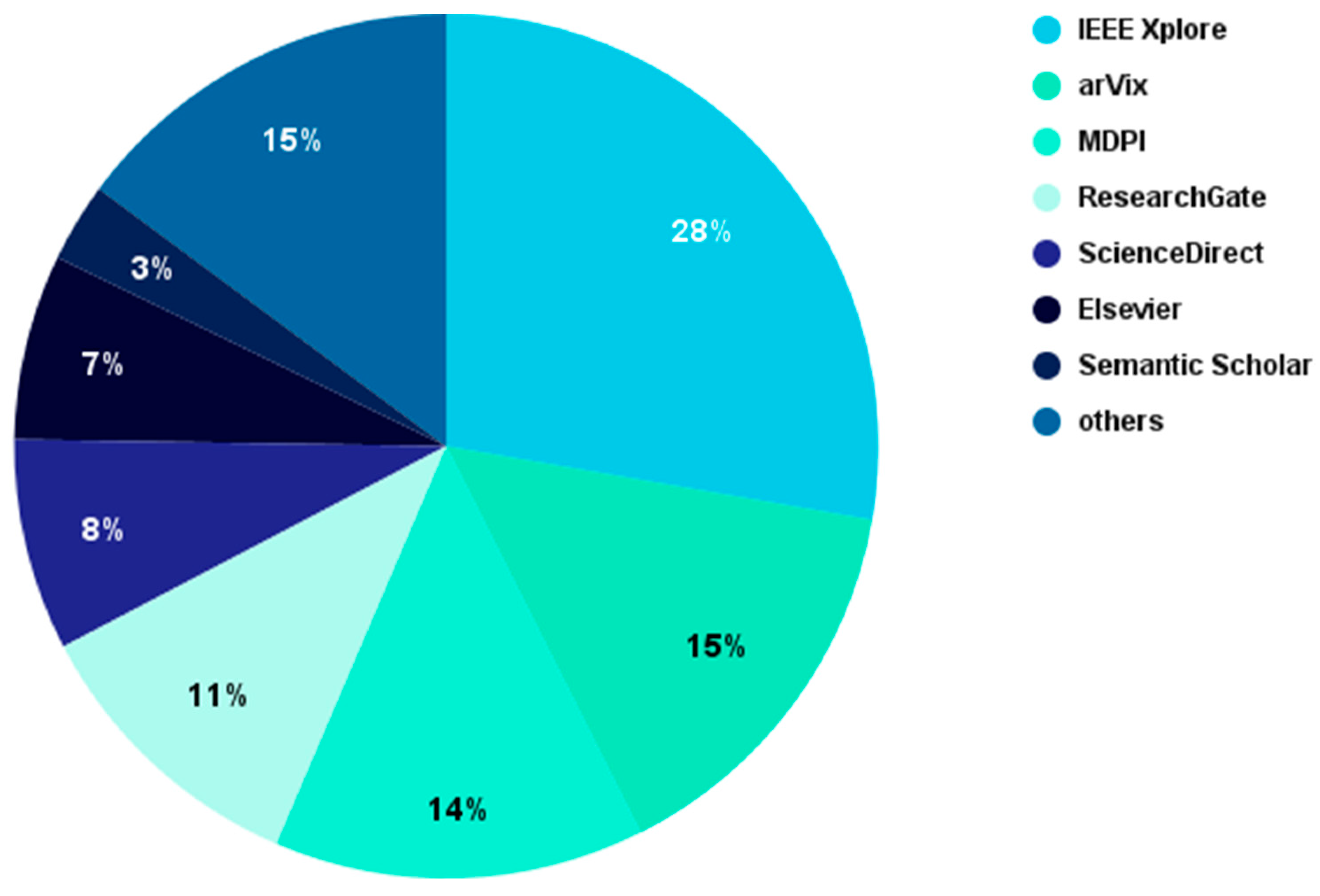

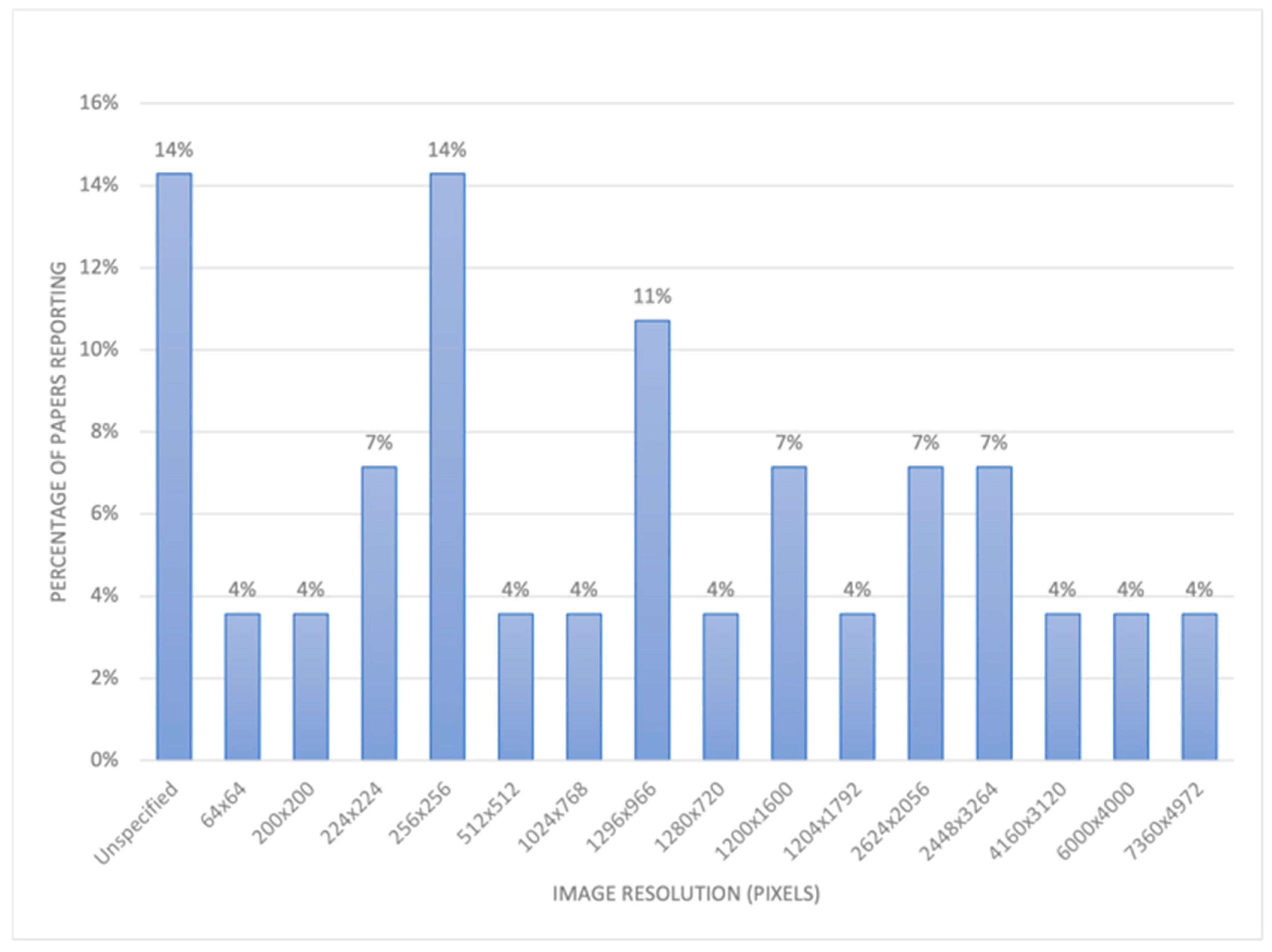

3. Survey Design

Inclusion criteria:

- The study must include a clear report on the performance of the models.

- The study must present an in-depth description of the model architecture.

- The study carries out detection/classification/segmentation tasks or a combination of these using UAV image datasets.

Exclusion criteria:

- The study is not indexed in a reputable database.

- The study does not propose any significant addition or change to previously existing deep learning or machine learning solutions in its domain.

- The study presents vague descriptions of the experimentation and classification results.

- The study proposes irrelevant or unsatisfactory results.

Research questions:

- What data sources and image datasets were used in the paper?

- What type of pre-processing, data cleaning and augmentation methods were utilized?

- What type of machine learning or deep learning architectures were used?

- What overall performance was achieved, and which metrics were used to report the performance?

- Which architectures and techniques performed best for a class of agricultural problems?

4. Background

4.1. Image Data from UAVs

4.2. Image Features Used in UAV Data

4.3. Vision Tasks Using UAV Data

4.4. Evaluation Metrics

- Accuracy, as shown in Eq. (2), is a measure of an algorithm's ability to make correct predictions. It is the ratio of the sum of True Positive (TP) and True Negative (TN) predictions to the algorithm's total number of predictions, including the false ones (FP and FN).

- Precision, as shown in Eq. (3), is a measure of an algorithm’s ability to make correct positive predictions. It is the ratio of True Positive (TP) predictions to the sum of True Positive (TP) and False Positive (FP) predictions.

- Recall, as shown in Eq. (4), measures an algorithm’s ability to identify positive samples. It is the ratio of True Positive (TP) predictions to the sum of True Positive (TP) and False Negative (FN) predictions.

- F1-score, as shown in Eq. (5), is the harmonic mean of precision and recall, so a high F1-score indicates that both precision and recall are high. It is calculated as twice the product of precision and recall divided by their sum.

- Area Under the Curve (AUC) is the area under the ROC curve, which plots an algorithm’s True Positive Rate (TPR), Eq. (6), against its False Positive Rate (FPR), Eq. (7). The TPR is the ratio of the positive samples an algorithm correctly classifies to the total number of actual positive samples. The FPR is the ratio of the algorithm’s false positive classifications to the total number of actual negative samples.

- Intersection over Union (IoU), as shown in Eq. (8), assesses how closely the bounding boxes that a detection algorithm draws around an object of interest in an image (e.g., a weed) match the ground truth boxes. IoU is the ratio of the area of intersection between a predicted bounding box and its associated ground truth box to the area of their union.

- Mean Average Precision (mAP), as shown in Eq. (9), is used to assess the quality of object detection models. It is the mean of a model’s Average Precision (AP) across its classes. The AP of a class is obtained by calculating the model’s precision and recall, drawing its precision-recall curve, and finding the area under that curve.

- Average residual, as shown in Eq. (10), is used to assess how erroneous a model is. It is the average difference between a model’s predictions and the ground truth values.

- Root Mean Square Error (RMSE), as shown in Eq. (11), is used to assess an algorithm’s ability to produce numeric predictions that are close to ground truth values. RMSE is the square root of the average squared difference between an algorithm’s predictions and their associated ground truth values.

- Mean Absolute Error (MAE), as shown in Eq. (12), is an error metric used to assess how far an algorithm’s numeric predictions are from the ground truth values. MAE is the average of the absolute differences between predictions and ground truth values.

- Frames Per Second (FPS) measures how many images a machine learning model can analyze and process per second.
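
The metric definitions in this list can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used by any of the surveyed papers: the function names, the `(x_min, y_min, x_max, y_max)` box format, and the convention of returning 0.0 when a denominator is zero are all assumptions made for the example. The regression helpers assume non-empty sequences of equal length.

```python
import math
from typing import Dict, Sequence, Tuple

# Assumed box format for this sketch: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def _ratio(numerator: float, denominator: float) -> float:
    """Divide, returning 0.0 for a zero denominator (an assumed convention)."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, recall (TPR), F1-score and FPR from confusion counts."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        # Harmonic mean of precision and recall.
        "f1": _ratio(2 * precision * recall, precision + recall),
        "fpr": _ratio(fp, fp + tn),
    }


def iou(pred: Box, truth: Box) -> float:
    """Intersection over Union of two axis-aligned bounding boxes."""
    # Overlap width and height are clamped at zero for disjoint boxes.
    width = max(0.0, min(pred[2], truth[2]) - max(pred[0], truth[0]))
    height = max(0.0, min(pred[3], truth[3]) - max(pred[1], truth[1]))
    intersection = width * height
    area_pred = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_truth = (truth[2] - truth[0]) * (truth[3] - truth[1])
    return _ratio(intersection, area_pred + area_truth - intersection)


def average_residual(pred: Sequence[float], truth: Sequence[float]) -> float:
    """Mean signed difference between predictions and ground truth."""
    return sum(p - t for p, t in zip(pred, truth)) / len(pred)


def mae(pred: Sequence[float], truth: Sequence[float]) -> float:
    """Mean of the absolute differences."""
    return sum(abs(p - t) for p, t in zip(pred, truth)) / len(pred)


def rmse(pred: Sequence[float], truth: Sequence[float]) -> float:
    """Square root of the mean squared difference."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(pred))
```

For instance, `classification_metrics(tp=8, tn=5, fp=2, fn=1)` gives an accuracy of 13/16 = 0.8125 and a precision of 8/10 = 0.8. mAP and AUC are left out of the sketch because they also require ranked confidence scores rather than raw counts.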

5. Survey Results

5.1. Traditional Machine Learning

5.1.1. Support Vector Machines (SVM)

5.1.2. K-Nearest Neighbors (KNN)

5.1.3. Decision Trees (DT) and Random Forests (RF)

5.2. Neural Networks and Deep Learning

5.2.1. Convolutional Neural Networks (CNN)

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| Crimaldi et al. [60] | Inception V3 | The identification time is 200 ms, which is good for real-time applications | Low accuracy | Accuracy of 78.1% |
| Milioto et al. [61] | CNN model fed with RGB+NIR camera images | High accuracy for early growth stage | Low accuracy for the later growth stage | Early growth stage: Accuracy 97.3%, Recall 98%. Later growth stage: Accuracy 89.2%, Recall 99% |
| Bah et al. [62] | AlexNet | Fewer images with high resolution from a drone | Overlapping of the leaves between crops and weeds | Best precision was for the Spinach dataset with 93% |
| Reddy et al. [63] | Customized CNN | The results had a high precision and recall | Large dataset | Precision of 99.5% for the leaf snap dataset. The Flavia, Swedish leaf, and UCI leaf datasets had a recall of 98%. |
| Sembiring et al. [64] | Customized CNN | Low training time compared to other models compared in the paper | Not the highest performing model compared in the paper | Accuracy of 97.15% |
| Geetharamani et al. [65] | Deep CNN | Can classify 38 distinct classes of healthy and diseased plants | Large dataset | Classification accuracy of 96.46% |
| R. et al. [66] | Residual learning CNN with attention mechanism | High accuracy with only 600k parameters, which is lower than the other papers compared in this paper | Large dataset | Overall accuracy of 98% |
| Nanni et al. [67] | Ensembles of CNNs based on different topologies (ResNet50, GoogleNet, ShuffleNet, MobileNetv2, and DenseNet201) | Using Adam helps in decreasing the learning rate of parameters whose gradient changes more frequently | IP102 is a large dataset | 95.52% on Deng and 73.46% on IP102 datasets |
| Bah et al. [77] | CRowNet | Able to detect rows in images of several types of crops | Not a single CNN model | Accuracy: 93.58%, IoU: 70% |
| Atila et al. [68] | EfficientNet | Reduces the calculations by the square of the kernel size | Did not have the lowest training time compared to the other models in the paper | Plant Village dataset: Accuracy 99.91%, Precision 98.42%. Original and augmented datasets: Accuracy 99.97%, Precision 99.39% |
| Prasad et al. [69] | EfficientDet | Scaling ability and FLOP reduction | Performs well for limited labelled datasets; however, the accuracy is still low | Identifier model average accuracy: 75.5% |
| Albattah et al. [70] | EfficientNetV2-B4 | Highly reliable results and low time complexity | Large dataset | Precision: 99.63%, Recall: 99.93%, Accuracy: 99.99%, F1: 99.78% |
| Mishra et al. [71] | Standard CNN | Can run on devices such as a Raspberry Pi, smartphones, and drones. Works in real time with no internet connection. | NCS recognition accuracy is not good and can be improved according to the authors | Accuracy: GPU 98.40%, NCS chip 88.56% |
| Bah et al. [72] | ResNet18 | Outperformed SVM and RF methods and uses unsupervised training dataset | Results of the ResNet18 are lower than SVM and RF in the spinach field | AUC: 91.7% on both supervised and unsupervised labelled data |
| Zheng et al. [73] | Multiple CNN models including: CNN-Joint Model, Xception model, and ResNet-50 model | Compares multiple models | Joint Model had trouble with LAI estimation, and the vision transformer had trouble with percent canopy cover estimation. | Xception model: 0.28, CNN-ConvLSTM: 0.32, ResNet-50: 0.41 |
| Yang et al. [74] | ShuffleNet V2 | The total number of parameters was 3.785 M, which makes it portable and easy to apply | Not the lowest number of parameters when compared to the models in the paper | Accuracy: MSI 82.4%, RGB 93.41%, TIRI 68.26% |
| Briechle et al. [75] | PointNet++ | Good score compared to the models mentioned in the paper | Not yet tested for practical use | Accuracy: 90.2% |
| Aiger et al. [76] | CNN | Large-scale, robust, and high accuracy | Low accuracy for 2D CNN | Accuracy: 96.3% |

5.2.2. U-Net Architecture

5.2.3. Other Segmentation Models

5.2.4. You Only Look Once (YOLO)

5.2.5. Single Shot Detector (SSD)

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| Veeranampalayam Sivakumar et al. [99] | SSD with a feature extraction module made of an Inception v2 network and 4 convolutional layers | Model is scale and translation invariant | Low optimal confidence threshold value of 0.1. Failure to detect weeds at the borders of images | Precision: 0.66, Recall: 0.68, F1 score: 0.67, Mean IoU: 0.84, Inference time: 0.21 s |
| Ridho and Irwan [100] | SSD with MobileNet as a base for the feature extraction module | Fast detection and image processing | Detection was not performed on a UAV. Model did not yield the best accuracy in the paper | Accuracy: 90% |

5.2.6. Region-Based Convolutional Neural Networks

5.2.7. Autoencoders

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| [105] | CNN-Autoencoder | Performed joint instance segmentation of crop plants and leaves using a two-step approach of detecting individual instances of plants and leaves followed by pixel-wise segmentation of the identified instances. | Low segmentation precision for smaller plants (outperformed by Mask R-CNN). | 0.94 for AP50 |
| [106] | FCN-Autoencoder | Performed joint stem detection and crop-weed segmentation using an autoencoder with two task-specific decoders, one for stem detection and the other for pixel-wise semantic segmentation. | Did not achieve the best mean recall across all tested datasets. False detections of stems in soil regions. | Achieved mAP scores of 85.4%, 66.9%, 42.9%, and 50.1% for the Bonn, Stuttgart, Ancona, and Eschikon datasets, respectively, for stem detection, and mAP scores of 69.7%, 58.9%, 52.9%, and 44.2% for the same datasets, respectively, for segmentation. |
| [107] | Autoencoder | Utilized two position-aware encoder-decoder subnets in their DNN architecture to perform segmentation of inter-row and intra-row ryegrass with higher segmentation accuracy. | Low pixel-wise semantic segmentation accuracy for early-stage wheat. | Mean accuracy and IoU scores of 96.22% and 64.21%, respectively. |

5.2.8. Transformers

5.2.9. Active Learning

5.2.10. Light Detection and Ranging Algorithm (LiDAR)

5.2.11. Semi-supervised Convolutional Neural Networks

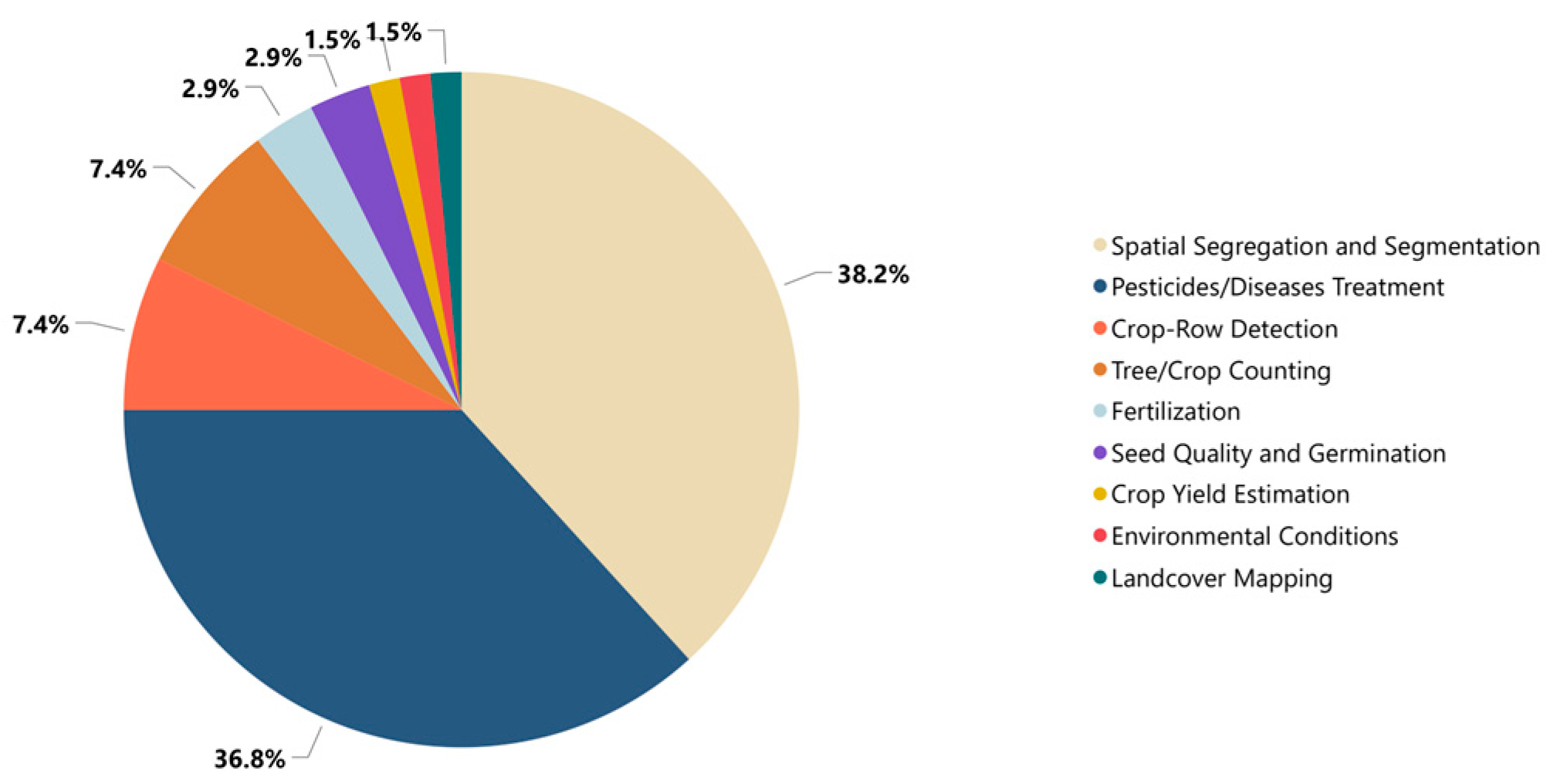

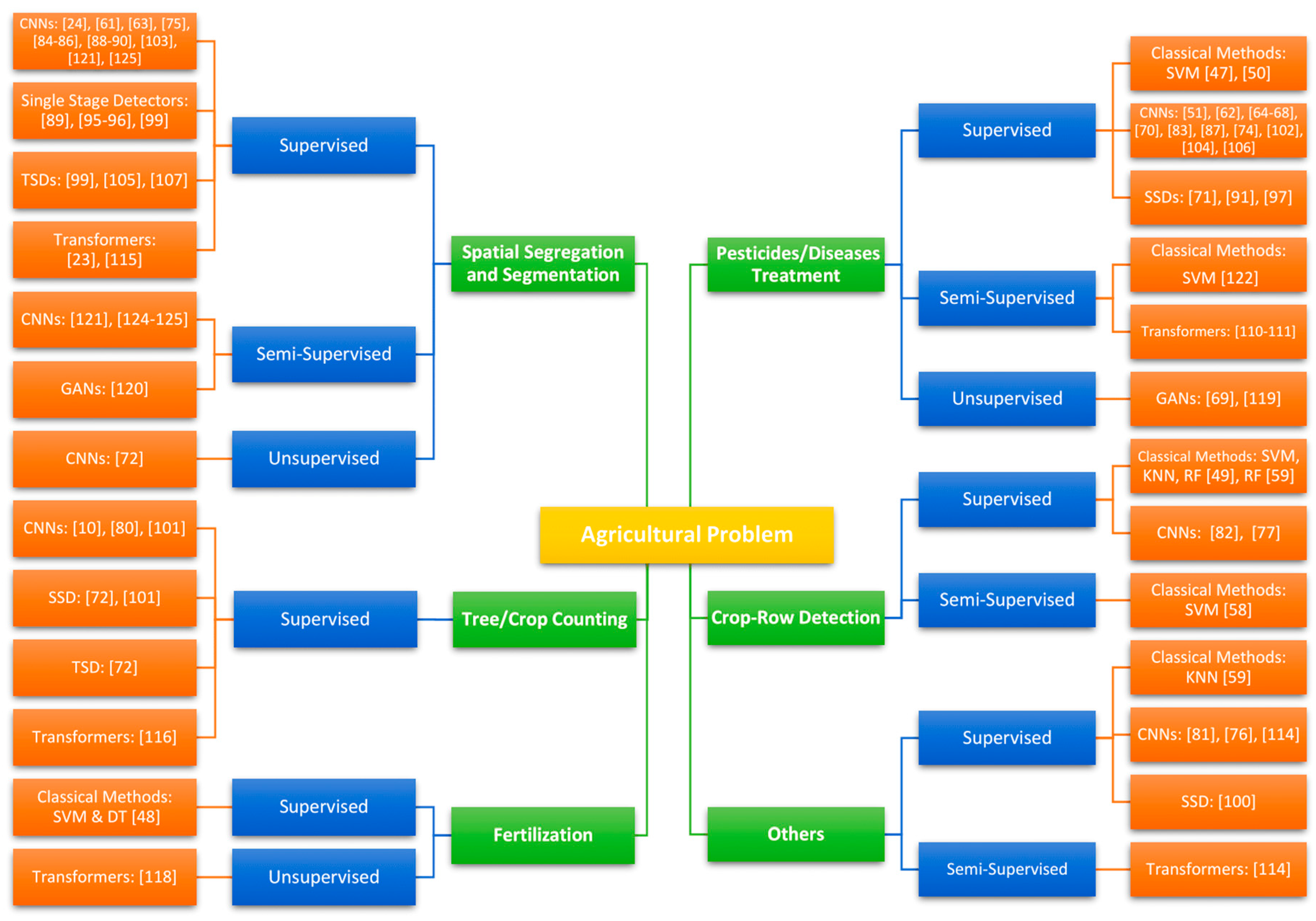

6. Discussion and Future Work

6.1. Machine Learning Techniques

6.2. Best Techniques for Agricultural Problems

6.3. Future Work

7. Conclusion

Conflicts of Interest

References

- Global Report on Food Crises. p. 277.

- Shaikh, T.A.; Rasool, T.; Lone, F.R. Towards leveraging the role of machine learning and artificial intelligence in precision agriculture and smart farming. Comput. Electron. Agric. 2022, 198. [Google Scholar] [CrossRef]

- Mylonas, D. Stavrakoudis, D. Katsantonis, and E. Korpetis, “Better farming practices to combat climate,” Academic Press, pp. 1–29, 2020. [CrossRef]

- Wolińska, “Metagenomic Achievements in Microbial Diversity Determination in Croplands: A Review,” in Microbial Diversity in the Genomic Era, S. Das and H. R. Dash, Eds., Academic Press, 2019, pp. 15–35. [CrossRef]

- Mohamed, Z.; Terano, R.; Sharifuddin, J.; Rezai, G. Determinants of Paddy Farmer's Unsustainability Farm Practices. Agric. Agric. Sci. Procedia 2016, 9, 191–196. [Google Scholar] [CrossRef]

- K. R. Krishna, Push button agriculture: Robotics, drones, satellite-guided soil and crop management. CRC Press, 2017.

- ISPA (International Society of Precision Agriculture). Precision Ag Definition. 2019. Available online: https://www.ispag.org/about/definition (accessed on 14 July 2021).

- Singh, P.; Pandey, P.C.; Petropoulos, G.P.; Pavlides, A.; Srivastava, P.K.; Koutsias, N.; Deng, K.A.K.; Bao, Y. Hyperspectral remote sensing in precision agriculture: Present status, challenges, and future trends. In Hyperspectral Remote Sensing; Elsevier: Amsterdam, The Netherlands, 2020; pp. 121–146. [Google Scholar]

- Cisternas, I.; Velásquez, I.; Caro, A.; Rodríguez, A. Systematic literature review of implementations of precision agriculture. Comput. Electron. Agric. 2020, 176, 105626. [Google Scholar] [CrossRef]

- Hosseiny, B.; Rastiveis, H.; Homayouni, S. An Automated Framework for Plant Detection Based on Deep Simulated Learning from Drone Imagery. Remote. Sens. 2020, 12, 3521. [Google Scholar] [CrossRef]

- Aburasain, R.Y.; Edirisinghe, E.A.; Albatay, A. Palm Tree Detection in Drone Images Using Deep Convolutional Neural Networks: Investigating the Effective Use of YOLO V3. Conference on Multimedia, Interaction, Design and Innovation; pp. 21–36.

- Lu, Y.; Young, S. A survey of public datasets for computer vision tasks in precision agriculture. Comput. Electron. Agric. 2020, 178, 105760. [Google Scholar] [CrossRef]

- Shafi, U.; Mumtaz, R.; García-Nieto, J.; Hassan, S.A.; Zaidi, S.A.R.; Iqbal, N. Precision Agriculture Techniques and Practices: From Considerations to Applications. Sensors 2019, 19, 3796. [Google Scholar] [CrossRef]

- Kamilaris, A.; Prenafeta-Boldú, F.X. A review of the use of convolutional neural networks in agriculture. J. Agric. Sci. 2018, 156, 312–322. [Google Scholar] [CrossRef]

- C. Ren, D.-K. Kim, and D. Jeong, “A Survey of Deep Learning in Agriculture: Techniques and Their Applications,” Journal of Information Processing Systems, vol. 16, no. 5, pp. 1015–1033, Oct. 2020. [CrossRef]

- V. Meshram, K. Patil, V. Meshram, D. Hanchate, and S. Ramkteke D., “Machine learning in agriculture domain: A state-of-art survey.” Available online: https://reader.elsevier.com/reader/sd/pii/S2667318521000106?token=634DCBF0EC91ABEE41DEF3AF831054DC77F10DB01949D5414FBFDEC54E71CE2F2127753BEBEF4695C760D0F00D992D14&originRegion=eu-west-1&originCreation=20220719054056 (accessed on 19 July 2022).

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Netw. 2020, 172, 107148. [Google Scholar] [CrossRef]

- Aslan, M.F.; Durdu, A.; Sabanci, K.; Ropelewska, E.; Gültekin, S.S. A Comprehensive Survey of the Recent Studies with UAV for Precision Agriculture in Open Fields and Greenhouses. Appl. Sci. 2022, 12, 1047. [Google Scholar] [CrossRef]

- Food and Agriculture Organization of the United Nations. Available online: http://www.fao.org/news/story/en/item/1187738/icode/ (accessed on 9 September 2020).

- Khakimov, I. Salakhutdinov, A. Omonlikov, and S. Utagnov, “Traditional and current-prospective methods of agricultural plant diseases detection: A review.” Available online: https://www.researchgate.net/publication/357714060_Traditional_and_current-prospective_methods_of_agricultural_plant_diseases_detection_A_review (accessed on 21 July 2022).

- Fang, Y.; Ramasamy, R.P. Current and Prospective Methods for Plant Disease Detection. Biosensors 2015, 5, 537–561. [Google Scholar] [CrossRef]

- “Ecological Understanding of Insects in Organic Farming Systems: How Insects Damage Plants,” eOrganic. Available online: https://eorganic.org/node/3151 (accessed on 21 July 2022).

- Reedha, R.; Dericquebourg, E.; Canals, R.; Hafiane, A. Transformer Neural Network for Weed and Crop Classification of High Resolution UAV Images. Remote. Sens. 2022, 14, 592. [Google Scholar] [CrossRef]

- Arun, R.A.; Umamaheswari, S.; Jain, A.V. Reduced U-Net Architecture for Classifying Crop and Weed using Pixel-wise Segmentation. 2020 IEEE International Conference for Innovation in Technology (INOCON), India; pp. 1–6.

- Sharma, A.; Kumar, V.; Shahzad, B.; Tanveer, M.; Sidhu, G.P.S.; Handa, N.; Kohli, S.K.; Yadav, P.; Bali, A.S.; Parihar, R.D.; et al. Worldwide pesticide usage and its impacts on ecosystem. SN Appl. Sci. 2019, 1, 1446. [Google Scholar] [CrossRef]

- Rai, M.; Ingle, A. Role of nanotechnology in agriculture with special reference to management of insect pests. Appl. Microbiol. Biotechnol. 2012, 94, 287–293. [Google Scholar] [CrossRef]

- Pest control efficiency in Agriculture - Futurcrop. Available online: https://futurcrop.com/en/blog/post/pest-control-efficiency-in-agriculture (accessed on 28 July 2022).

- Urban vegetation classification: Benefits of multitemporal RapidEye satellite data. Available online: https://reader.elsevier.com/reader/sd/pii/S0034425713001429?token=8C76FFADC2BF1E1A573FBD896D61E55BF50B5D27C5866C1CBB6B247EC2328C2170337BF8AF4B02F0D77FBB9520D68A30&originRegion=us-east-1&originCreation=20220722111257 (accessed on 22 July 2022).

- Remote sensing of urban environments: Special issue. Available online: https://reader.elsevier.com/reader/sd/pii/S0034425711002781?token=418370169508BB5AAFF98E8B53A3FB30990D7886B72D5D22A007F023EBF3BF79DCFCC674C589CA16CD7EBA979A63F179&originRegion=us-east-1&originCreation=20220722111729 (accessed on 22 July 2022).

- IOWAAGLITERACY, “Why do they do that? – Estimating Yields,” Iowa Agriculture Literacy, Sep. 18, 2019. Available online: https://iowaagliteracy.wordpress.com/2019/09/18/why-do-they-do-that-estimating-yields/ (accessed on 21 July 2022).

- Horie, T.; Yajima, M.; Nakagawa, H. Yield forecasting. Agric. Syst. 1992, 40, 211–236. [Google Scholar] [CrossRef]

- “Crop Yield,” Investopedia. Available online: https://www.investopedia.com/terms/c/crop-yield.asp (accessed on 21 July 2022).

- Altalak, M.; Uddin, M.A.; Alajmi, A.; Rizg, A. Smart Agriculture Applications Using Deep Learning Technologies: A Survey. Appl. Sci. 2022, 12, 5919. [Google Scholar] [CrossRef]

- R. K. Prange, “Pre-harvest, harvest and post-harvest strategies for organic production of fruits and vegetables.” Available online: https://www.researchgate.net/publication/284871289_Pre-harvest_harvest_and_post-harvest_strategies_for_organic_production_of_fruits_and_vegetables (accessed on 25 July 2022).

- Tomato Fruit Yields and Quality under Water Deficit and Salinity. Available online: https://www.researchgate.net/publication/279642090_Tomato_Fruit_Yields_and_Quality_under_Water_Deficit_and_Salinity (accessed on 30 July 2022).

- Atay, E.; Hucbourg, B.; Drevet, A.; Lauri, P.-E. INVESTIGATING EFFECTS OF OVER-IRRIGATION AND DEFICIT IRRIGATION ON YIELD AND FRUIT QUALITY IN PINK LADYTM ‘ROSY GLOW’ APPLE. Acta Sci. Pol. Hortorum Cultus 2017, 16, 45–51. [Google Scholar] [CrossRef]

- Li, X.; Ba, Y.; Zhang, M.; Nong, M.; Yang, C.; Zhang, S. Sugarcane Nitrogen Concentration and Irrigation Level Prediction Based on UAV Multispectral Imagery. Sensors 2022, 22, 2711. [Google Scholar] [CrossRef]

- Aden, S.T.; Bialas, J.P.; Champion, Z.; Levin, E.; McCarty, J.L. Low cost infrared and near infrared sensors for UAVs. ISPRS - Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2014, XL-1, 1–7. [CrossRef]

- Arah, I.K.; Amaglo, H.; Kumah, E.K.; Ofori, H. Preharvest and Postharvest Factors Affecting the Quality and Shelf Life of Harvested Tomatoes: A Mini Review. Int. J. Agron. 2015, 2015, 478041. [Google Scholar] [CrossRef]

- What methods can improve crop performance? | Royal Society. Available online: https://royalsociety.org/topics-policy/projects/gm-plants/what-methods-other-than-genetic-improvement-can-improve-crop-performance/ (accessed on 29 July 2022).

- Takamatsu, T.; Kitagawa, Y.; Akimoto, K.; Iwanami, R.; Endo, Y.; Takashima, K.; Okubo, K.; Umezawa, M.; Kuwata, T.; Sato, D.; et al. Over 1000 nm Near-Infrared Multispectral Imaging System for Laparoscopic In Vivo Imaging. Sensors 2021, 21, 2649. [Google Scholar] [CrossRef]

- Sellami, A.; Tabbone, S. Deep neural networks-based relevant latent representation learning for hyperspectral image classification. Pattern Recognit. 2021, 121, 108224. [Google Scholar] [CrossRef]

- Multispectral Image - an overview | ScienceDirect Topics. Available online: https://www.sciencedirect.com/topics/earth-and-planetary-sciences/multispectral-image (accessed on 13 December 2022).

- Turner, E.L.; Schafer, J.; Ford, E.; O'Malley-James, J.T.; Kaltenegger, L.; Kite, E.S.; Gaidos, E.; Onstott, T.C.; Lingam, M.; Loeb, A.; et al. Vegetation's Red Edge: A Possible Spectroscopic Biosignature of Extraterrestrial Plants. Astrobiology 2005, 5, 372–390. [Google Scholar] [CrossRef]

- “Land Management Information Center - Minnesota Land Ownership,” MN IT Services. Available online: https://www.mngeo.state.mn.us/chouse/airphoto/cir.html (accessed on 13 December 2022).

- GISGeography, “What is NDVI (Normalized Difference Vegetation Index)?,” GIS Geography, 09 May 2017. Available online: https://gisgeography.com/ndvi-normalized-difference-vegetation-index/ (accessed on 13 December 2022).

- Tendolkar, A.; Choraria, A.; Pai, M.M.M.; Girisha, S.; Dsouza, G.; Adithya, K. Modified crop health monitoring and pesticide spraying system using NDVI and Semantic Segmentation: An AGROCOPTER based approach. 2021 IEEE International Conference on Autonomous Systems (ICAS), Canada; pp. 1–5.

- Júnior, P.C.P.; Monteiro, A.; Ribeiro, R.D.L.; Sobieranski, A.C.; Von Wangenheim, A. Comparison of Supervised Classifiers and Image Features for Crop Rows Segmentation on Aerial Images. Appl. Artif. Intell. 2020, 34, 271–291. [Google Scholar] [CrossRef]

- Lottes, P.; Khanna, R.; Pfeifer, J.; Siegwart, R.; Stachniss, C. UAV-based crop and weed classification for smart farming. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3024–3031. [Google Scholar]

- V. Lakshmanan, M. Görner, and R. Gillard, Practical Machine Learning for Computer Vision. O’Reilly Media, Inc., 2021.

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Deep learning techniques to classify agricultural crops through UAV imagery: a review. Neural Comput. Appl. 2022, 34, 9511–9536. [Google Scholar] [CrossRef] [PubMed]

- Zaidi, S.S.A.; Ansari, M.S.; Aslam, A.; Kanwal, N.; Asghar, M.; Lee, B. A survey of modern deep learning based object detection models. Digit. Signal Process. 2022, 126. [Google Scholar] [CrossRef]

- Tian, D.; Han, Y.; Wang, B.; Guan, T.; Gu, H.; Wei, W. Review of object instance segmentation based on deep learning. J. Electron. Imaging 2021, 31, 041205. [Google Scholar] [CrossRef]

- Zhang, J.; Xie, T.; Yang, C.; Song, H.; Jiang, Z.; Zhou, G.; Zhang, D.; Feng, H.; Xie, J. Segmenting Purple Rapeseed Leaves in the Field from UAV RGB Imagery Using Deep Learning as an Auxiliary Means for Nitrogen Stress Detection. Remote. Sens. 2020, 12, 1403. [Google Scholar] [CrossRef]

- Liliane, T.N.; MutwCharlengas, M.S.C. Factors Affecting Yield of Crops. In Agronomy-Climate Change & Food Security; Amanullah, IntechOpen: London, UK, 2020; Volume 9, pp. 1–16. [Google Scholar] [CrossRef]

- Natividade, J.; Prado, J.; Marques, L. Low-cost multi-spectral vegetation classification using an Unmanned Aerial Vehicle. 2017 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Portugal; pp. 336–342.

- Pérez-Ortiz, M.; Peña, J.; Gutiérrez, P.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. A semi-supervised system for weed mapping in sunflower crops using unmanned aerial vehicles and a crop row detection method. Appl. Soft Comput. 2015, 37, 533–544. [Google Scholar] [CrossRef]

- Rodriguez-Garlito, E.C.; Paz-Gallardo, A. Efficiently Mapping Large Areas of Olive Trees Using Drones in Extremadura, Spain. 2021, 2, 148–156. [CrossRef]

- Rocha, B.M.; Vieira, G.d.S.; Fonseca, A.U.; Pedrini, H.; de Sousa, N.M.; Soares, F. Evaluation and Detection of Gaps in Curved Sugarcane Planting Lines in Aerial Images. 2020 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Canada; pp. 1–4.

- Crimaldi, M.; Cristiano, V.; De Vivo, A.; Isernia, M.; Ivanov, P.; Sarghini, F. Neural Network Algorithms for Real Time Plant Diseases Detection Using UAVs. International Mid-Term Conference of the Italian Association of Agricultural Engineering, Italy; pp. 827–835.

- Milioto, A.; Lottes, P.; Stachniss, C. Real-time blob-wise sugar beets vs weeds classification for monitoring fields using convolutional neural networks. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 41–48. [Google Scholar] [CrossRef]

- Bah, M.D.; Dericquebourg, E.; Hafiane, A.; Canals, R. Deep learning based classification system for identifying weeds using high-resolution UAV imagery. Science and Information Conference; Springer, 2018; pp. 176–187.

- Reddy, S.R.G.; Varma, G.P.S.; Davuluri, R.L. Optimized convolutional neural network model for plant species identification from leaf images using computer vision. Int. J. Speech Technol. 2021, 26, 23–50. [Google Scholar] [CrossRef]

- Sembiring, A.; Away, Y.; Arnia, F.; Muharar, R. Development of Concise Convolutional Neural Network for Tomato Plant Disease Classification Based on Leaf Images. J. Physics: Conf. Ser. 2021, 1845. [Google Scholar] [CrossRef]

- Geetharamani, G. and Arun Pandian J, “Identification of plant leaf diseases using a nine-layer deep convolutional neural network.” Available online: https://reader.elsevier.com/reader/sd/pii/S0045790619300023?token=2C657881C2D2EDBB85C23044E8BFCA69B649314432597FA9D45A48392FD1B10F49E30D04D95706CA328CD1A114A7E17E&originRegion=eu-west-1&originCreation=20220615171346 (accessed on 15 June 2022).

- R., K.; M., H.; Anand, S.; Mathikshara, P.; Johnson, A.; R., M. Attention embedded residual CNN for disease detection in tomato leaves. Appl. Soft Comput. 2019, 86, 105933. [Google Scholar] [CrossRef]

- Nanni, L.; Manfè, A.; Maguolo, G.; Lumini, A.; Brahnam, S. High performing ensemble of convolutional neural networks for insect pest image detection. Ecol. Informatics 2021, 67, 101515. [Google Scholar] [CrossRef]

- Atila, M. Uçar, K. Akyol, and E. Uçar, “Plant leaf disease classification using EfficientNet deep learning model.” Available online: https://reader.elsevier.com/reader/sd/pii/S1574954120301321?token=6A5F2FC8AE4A263722309CBE19B195E31EB4D614A298D3B3DAD91C75A971E4A88E7545F453E28200C3D2E11D49D8C189&originRegion=us-east-1&originCreation=20220616151758 (accessed on 16 June 2022).

- Prasad, N. Mehta, M. Horak, and W. D. Bae, “A two-step machine learning approach for crop disease detection: an application of GAN and UAV technology.” arXiv, Sep. 18, 2021. Accessed: Jun. 19, 2022. [Online]. 1106. Available online: http://arxiv.org/abs/2109.

- Albattah, W.; Javed, A.; Nawaz, M.; Masood, M.; Albahli, S. Artificial Intelligence-Based Drone System for Multiclass Plant Disease Detection Using an Improved Efficient Convolutional Neural Network. Front. Plant Sci. 2022, 13, 808380. [Google Scholar] [CrossRef]

- Mishra, S.; Sachan, R.; Rajpal, D. Deep Convolutional Neural Network based Detection System for Real-time Corn Plant Disease Recognition. Procedia Comput. Sci. 2020, 167, 2003–2010. [Google Scholar] [CrossRef]

- Bah, M.D.; Hafiane, A.; Canals, R. Deep Learning with Unsupervised Data Labeling for Weed Detection in Line Crops in UAV Images. Remote. Sens. 2018, 10, 1690. [Google Scholar] [CrossRef]

- Zheng, Y.; Sarigul, E.; Panicker, G.; Stott, D. Vineyard LAI and canopy coverage estimation with convolutional neural network models and drone pictures. Sensing for Agriculture and Food Quality and Safety XIV, United States; pp. 29–38.

- Yang, R.; Lu, X.; Huang, J.; Zhou, J.; Jiao, J.; Liu, Y.; Liu, F.; Su, B.; Gu, P. A Multi-Source Data Fusion Decision-Making Method for Disease and Pest Detection of Grape Foliage Based on ShuffleNet V2. Remote. Sens. 2021, 13, 5102. [Google Scholar] [CrossRef]

- Briechle, S.; Krzystek, P.; Vosselman, G. CLASSIFICATION OF TREE SPECIES AND STANDING DEAD TREES BY FUSING UAV-BASED LIDAR DATA AND MULTISPECTRAL IMAGERY IN THE 3D DEEP NEURAL NETWORK POINTNET++. ISPRS Ann. Photogramm. Remote. Sens. Spat. Inf. Sci. 2020, V-2-2020, 203–210. [CrossRef]

- Aiger, D.; Allen, B.; Golovinskiy, A. Large-Scale 3D Scene Classification with Multi-View Volumetric CNN. Computer Vision and Pattern Recognition. arXiv 2017, arXiv:1712.09216. Available online: https://arxiv.org/abs/1712.09216 (accessed on 25 December 2021). [Google Scholar]

- Bah, M.D.; Hafiane, A.; Canals, R. CRowNet: Deep Network for Crop Row Detection in UAV Images. IEEE Access 2019, 8, 5189–5200. [Google Scholar] [CrossRef]

- O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional Networks for Biomedical Image Segmentation,” arXiv.org, May 18, 2015. Available online: https://arxiv.org/abs/1505.04597.

- Kitano, B.T.; Mendes, C.C.T.; Geus, A.R.; Oliveira, H.C.; Souza, J.R. Corn Plant Counting Using Deep Learning and UAV Images. IEEE Geosci. Remote. Sens. Lett. 2019, 1–5. [Google Scholar] [CrossRef]

- Lin, Z.; Guo, W. Sorghum Panicle Detection and Counting Using Unmanned Aerial System Images and Deep Learning. Front. Plant Sci. 2020, 11. [Google Scholar] [CrossRef]

- L. El Hoummaidi, A. Larabi, and K. Alam, “Using unmanned aerial systems and deep learning for agriculture mapping in Dubai.” Available online: https://reader.elsevier.com/reader/sd/pii/S240584402102257X?token=08CBD9EDFD77D8950813E1351450D76E494A52920C53A724FFC4763B2D03C6A459F63D6C1C254EF04EBB26AA5515DACC&originRegion=eu-west-1&originCreation=20220601233300 (accessed on 2 June 2022).

- Doha, R.; Al Hasan, M.; Anwar, S.; Rajendran, V. Deep Learning based Crop Row Detection with Online Domain Adaptation. KDD '21: The 27th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Singapore.

- Zhang, T.; Yang, Z.; Xu, Z.; Li, J. Wheat Yellow Rust Severity Detection by Efficient DF-UNet and UAV Multispectral Imagery. IEEE Sensors J. 2022, 22, 9057–9068. [Google Scholar] [CrossRef]

- Tsuichihara, S.; Akita, S.; Ike, R.; Shigeta, M.; Takemura, H.; Natori, T.; Aikawa, N.; Shindo, K.; Ide, Y.; Tejima, S. Drone and GPS Sensors-Based Grassland Management Using Deep-Learning Image Segmentation. 2019 Third IEEE International Conference on Robotic Computing (IRC), Italy; pp. 608–611.

- Yang, M.-D.; Boubin, J.G.; Tsai, H.P.; Tseng, H.-H.; Hsu, Y.-C.; Stewart, C.C. Adaptive autonomous UAV scouting for rice lodging assessment using edge computing with deep learning EDANet. Comput. Electron. Agric. 2020, 179. [Google Scholar] [CrossRef]

- Weyler, J.; Magistri, F.; Seitz, P.; Behley, J.; Stachniss, C. In-Field Phenotyping Based on Crop Leaf and Plant Instance Segmentation. 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). LOCATION OF CONFERENCE, United StatesDATE OF CONFERENCE; pp. 2968–2977.

- Guo, Y.; Zhang, J.; Yin, C.; Hu, X.; Zou, Y.; Xue, Z.; Wang, W. Plant Disease Identification Based on Deep Learning Algorithm in Smart Farming. Discret. Dyn. Nat. Soc. 2020, 2020, 1–11.

- Torres-Sánchez, J.; Mesas-Carrascosa, F.J.; Jiménez-Brenes, F.M.; de Castro, A.I.; López-Granados, F. Early Detection of Broad-Leaved and Grass Weeds in Wide Row Crops Using Artificial Neural Networks and UAV Imagery. Agronomy 2021, 11, 749.

- Zhang, X.; Li, N.; Ge, L.; Xia, X.; Ding, N. A Unified Model for Real-Time Crop Recognition and Stem Localization Exploiting Cross-Task Feature Fusion. 2020 IEEE International Conference on Real-time Computing and Robotics (RCAR), Japan; pp. 327–332.

- Li, N.; Zhang, X.; Zhang, C.; Guo, H.; Sun, Z.; Wu, X. Real-Time Crop Recognition in Transplanted Fields With Prominent Weed Growth: A Visual-Attention-Based Approach. IEEE Access 2019, 7, 185310–185321.

- Chen, C.-J.; Huang, Y.-Y.; Li, Y.-S.; Chen, Y.-C.; Chang, C.-Y.; Huang, Y.-M. Identification of Fruit Tree Pests With Deep Learning on Embedded Drone to Achieve Accurate Pesticide Spraying. IEEE Access 2021, 9, 21986–21997.

- Z. Qin, W. Wang, K.-H. Dammer, L. Guo, and Z. Cao, “A Real-time Low-cost Artificial Intelligence System for Autonomous Spraying in Palm Plantations.” arXiv, Mar. 06, 2021. Accessed: Jun. 15, 2022. [Online]. Available online: http://arxiv.org/abs/2103.04132.

- Parico, A.I.B.; Ahamed, T. An Aerial Weed Detection System for Green Onion Crops Using the You Only Look Once (YOLOv3) Deep Learning Algorithm. Eng. Agric. Environ. Food 2020, 13, 42–48.

- C. Rui, G. Youwei, Z. Huafei, and J. Hongyu, “A Comprehensive Approach for UAV Small Object Detection with Simulation-based Transfer Learning and Adaptive Fusion.” arXiv, Sep. 04, 2021. Accessed: Jun. 15, 2022. [Online]. Available online: http://arxiv.org/abs/2109.01800.

- Parico, A.I.B.; Ahamed, T. Real Time Pear Fruit Detection and Counting Using YOLOv4 Models and Deep SORT. Sensors 2021, 21, 4803.

- Jintasuttisak, T.; Edirisinghe, E.; Elbattay, A. Deep neural network based date palm tree detection in drone imagery. Comput. Electron. Agric. 2021, 192, 106560.

- Y. Tian, G. Yang, Z. Wang, E. Li, and Z. Liang, “Detection of Apple Lesions in Orchards Based on Deep Learning Methods of CycleGAN and YOLOV3-Dense.” Available online: https://www.researchgate.net/publication/355887041_Detection_of_Apple_Lesions_in_Orchards_Based_on_Deep_Learning_Methods_of_CycleGAN_and_YOLOV3-Dense (accessed on 20 June 2022).

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016.

- Veeranampalayam Sivakumar, A.N.; Li, J.; Scott, S.; Psota, E.; Jhala, A.J.; Luck, J.D.; Shi, Y. Comparison of Object Detection and Patch-Based Classification Deep Learning Models on Mid- to Late-Season Weed Detection in UAV Imagery. Remote Sens. 2020, 12, 2136.

- Ridho, M.F.; Irwan. Strawberry Fruit Quality Assessment for Harvesting Robot using SSD Convolutional Neural Network. 2021 8th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Indonesia.

- Ammar, A.; Koubaa, A.; Benjdira, B. Deep-Learning-Based Automated Palm Tree Counting and Geolocation in Large Farms from Aerial Geotagged Images. Agronomy 2021, 11, 1458.

- W.-H. Su et al., “Automatic Evaluation of Wheat Resistance to Fusarium Head Blight Using Dual Mask-RCNN Deep Learning Frameworks in Computer Vision.” Available online: https://www.researchgate.net/publication/347793558_Automatic_Evaluation_of_Wheat_Resistance_to_Fusarium_Head_Blight_Using_Dual_Mask-RCNN_Deep_Learning_Frameworks_in_Computer_Vision (accessed on 20 June 2022).

- Yang, M.-D.; Tseng, H.H.; Hsu, Y.C.; Tseng, W.C. Real-time Crop Classification Using Edge Computing and Deep Learning. 2020 IEEE 17th Annual Consumer Communications & Networking Conference (CCNC), United States; pp. 1–4.

- Menshchikov, A.; Shadrin, D.; Prutyanov, V.; Lopatkin, D.; Sosnin, S.; Tsykunov, E.; Iakovlev, E.; Somov, A. Real-Time Detection of Hogweed: UAV Platform Empowered by Deep Learning. IEEE Trans. Comput. 2021, 70, 1175–1188.

- Weyler, J.; Quakernack, J.; Lottes, P.; Behley, J.; Stachniss, C. Joint Plant and Leaf Instance Segmentation on Field-Scale UAV Imagery. IEEE Robot. Autom. Lett. 2022, 7, 3787–3794.

- P. Lottes, J. Behley, N. Chebrolu, A. Milioto, and C. Stachniss, “Robust joint stem detection and crop-weed classification using image sequences for plant-specific treatment in precision farming.” Available online: https://www.researchgate.net/publication/335117645_Robust_joint_stem_detection_and_crop-weed_classification_using_image_sequences_for_plant-specific_treatment_in_precision_farming (accessed on 7 June 2022).

- Su, D.; Qiao, Y.; Kong, H.; Sukkarieh, S. Real time detection of inter-row ryegrass in wheat farms using deep learning. Biosyst. Eng. 2021, 204, 198–211.

- A. Vaswani et al., “Attention is All you Need,” in Advances in Neural Information Processing Systems, Curran Associates, Inc., 2017. Accessed: Jun. 18, 2022. [Online]. Available online: https://proceedings.neurips.cc/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html.

- A. Dosovitskiy et al., “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale.” arXiv, Jun. 03, 2021.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Lect. Notes Comput. Sci.; Volume 12346, pp. 213–229.

- Thai, H.-T.; Tran-Van, N.-Y.; Le, K.-H. Artificial Cognition for Early Leaf Disease Detection using Vision Transformers. 2021 International Conference on Advanced Technologies for Communications (ATC), Vietnam; pp. 33–38.

- J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “ImageNet: A Large-Scale Hierarchical Image Database,” p. 8.

- Cassava Leaf Disease Classification. Available online: https://kaggle.com/competitions/cassava-leaf-disease-classification (accessed on 18 June 2022).

- Karila, K.; Oliveira, R.A.; Ek, J.; Kaivosoja, J.; Koivumäki, N.; Korhonen, P.; Niemeläinen, O.; Nyholm, L.; Näsi, R.; Pölönen, I.; et al. Estimating Grass Sward Quality and Quantity Parameters Using Drone Remote Sensing with Deep Neural Networks. Remote Sens. 2022, 14, 2692.

- S. Dersch, A. Schottl, P. Krzystek, and M. Heurich, “Novel Single Tree Detection by Transformers Using UAV-Based Multispectral Imagery.” Available online: https://www.proquest.com/openview/228f8f292353d30b26ebcdd38372d40d/1?pq-origsite=gscholar&cbl=2037674 (accessed on 15 June 2022).

- Chen, G.; Shang, Y. Transformer for Tree Counting in Aerial Images. Remote Sens. 2022, 14, 476.

- Liu, W.; Salzmann, M.; Fua, P. Context-Aware Crowd Counting. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5094–5103.

- X. Zhang et al., “The self-supervised spectral-spatial attention-based transformer network for automated, accurate prediction of crop nitrogen status from UAV imagery,” arXiv:2111.06839, Feb. 2022.

- Coletta, L.F.; de Almeida, D.C.; Souza, J.R.; Manzione, R.L. Novelty detection in UAV images to identify emerging threats in eucalyptus crops. Comput. Electron. Agric. 2022, 196.

- Silva, C.A.; Hudak, A.T.; Vierling, L.A.; Loudermilk, E.L.; O’Brien, J.J.; Hiers, J.K.; Jack, S.B.; Gonzalez-Benecke, C.; Lee, H.; Falkowski, M.J.; et al. Imputation of Individual Longleaf Pine (Pinus palustris Mill.) Tree Attributes from Field and LiDAR Data. Can. J. Remote Sens. 2016, 42, 554–573.

- Bosilj, P.; Aptoula, E.; Duckett, T.; Cielniak, G. Transfer learning between crop types for semantic segmentation of crops versus weeds in precision agriculture. J. Field Robot. 2019, 37, 7–19.

- Khan, S.; Tufail, M.; Khan, M.T.; Khan, Z.A.; Iqbal, J.; Alam, M. A novel semi-supervised framework for UAV based crop/weed classification. PLOS ONE 2021, 16, e0251008.

- Weinstein, B.G.; Marconi, S.; Bohlman, S.; Zare, A.; White, E. Individual Tree-Crown Detection in RGB Imagery Using Semi-Supervised Deep Learning Neural Networks. Remote Sens. 2019, 11, 1309.

- M. Z. Alom, T. M. Taha, and V. K. Asari, “Recurrent Residual Convolutional Neural Network based on U-Net (R2U-Net) for Medical Image Segmentation,” p. 12.

- Fawakherji, M.; Potena, C.; Prevedello, I.; Pretto, A.; Bloisi, D.D.; Nardi, D. Data Augmentation Using GANs for Crop/Weed Segmentation in Precision Farming. 2020 IEEE Conference on Control Technology and Applications (CCTA), Canada; pp. 279–284.

- Fawakherji, M.; Potena, C.; Pretto, A.; Bloisi, D.D.; Nardi, D. Multi-Spectral Image Synthesis for Crop/Weed Segmentation in Precision Farming. Robot. Auton. Syst. 2021, 146, 103861.

- Mazzia, V.; Khaliq, A.; Salvetti, F.; Chiaberge, M. Real-Time Apple Detection System Using Embedded Systems With Hardware Accelerators: An Edge AI Application. IEEE Access 2020, 8, 9102–9114.

- Tay, Y.; Dehghani, M.; Bahri, D.; Metzler, D. Efficient Transformers: A Survey. ACM Comput. Surv. 2022, 55, 1–28.

- M. Poli et al., “Hyena Hierarchy: Towards Larger Convolutional Language Models.” arXiv, Mar. 05, 2023.

- Senecal, J.J.; Sheppard, J.W.; Shaw, J.A. Efficient Convolutional Neural Networks for Multi-Spectral Image Classification. 2019 International Joint Conference on Neural Networks (IJCNN), Hungary; pp. 1–8.

- Yang, Z.; Li, J.; Shi, X.; Xu, Z. Dual flow transformer network for multispectral image segmentation of wheat yellow rust. International Conference on Computer, Artificial Intelligence, and Control Engineering (CAICE 2022), China; pp. 119–125.

- Tao, C.; Meng, Y.; Li, J.; Yang, B.; Hu, F.; Li, Y.; Cui, C.; Zhang, W. MSNet: multispectral semantic segmentation network for remote sensing images. GIScience Remote Sens. 2022, 59, 1177–1198.

- M. López and J. Alberto, “The use of multispectral images and deep learning models for agriculture: the application on Agave,” Dec. 2022. Accessed: Mar. 12, 2023. [Online]. Available online: https://repositorio.tec.mx/handle/11285/650159.

- B. Victor, Z. He, and A. Nibali, “A systematic review of the use of Deep Learning in Satellite Imagery for Agriculture.” arXiv, Oct. 03, 2022.

- P. Sarigiannidis, “Peach Tree Disease Detection Dataset.” IEEE, Nov. 23, 2022. Accessed: Mar. 12, 2023. [Online]. Available online: https://ieee-dataport.org/documents/peach-tree-disease-detection-dataset.

| Stage | Challenges |
|---|---|
| Pre-Harvesting | Disease Detection and Diagnosis, Seed Quality, Fertiliser Application, Field Segmentation, Urban Vegetation Classification |
| Harvesting | Crop Detection and Classification, Pest Detection and Control, Crop Yield Estimation, Tree Counting, Maturity Level, Cropland Extent |
| Post-Harvesting | Fruit Grading, Quality-Retaining Processes, Storage Environmental Conditions, Chemical Usage Detection |

SVM

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| Tendolkar et al. [47] | SVM | Dual-step approach of pixel-wise NDVI calculation and semantic segmentation helps overcome NDVI issues. | Model was not compared to any other. | Precision: 85%, Recall: 81%, F1-score: 79% |
| Natividade et al. [56] | SVM | Pattern recognition system allows for classification of images taken by low-cost cameras. | Accuracy, precision, and recall values of the model vary widely between datasets. | Dataset #1 (1st configuration): [Accuracy: 78%, Precision: 93%, Recall: 86%, Accuracy: 72%]; Dataset #2: [Accuracy: 83%, Precision: 97%, Recall: 94%, Accuracy: 73%] |
| Pérez-Ortiz et al. [57] | SVM | Able to detect weeds outside and within crop rows. Does not require a large training dataset. | Segmentation process produces a salt-and-pepper noise effect on images. Training images are manually selected. Model inference is influenced by training image selection. | Mean Absolute Error (MAE): 12.68% |
| César Pereira et al. [48] | LSVM | Model can be trained quickly with a small training set. | Image dataset is small and simple, containing images of sugarcane cultures only. | Using RGB + EXG + Gabor filters: IoU: 0.788654, F1: 0.880129 |

KNN

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| César Pereira et al. [48] | KNN3, KNN11 | Simplest to implement among the algorithms in the paper. | Models did not achieve the best results in the paper. | KNN3 and KNN11 with RGB + EXG + Gabor filters: [IoU: 0.76, F1: 0.86] |
| Rodríguez-Garlito and Paz-Gallardo [58] | KNN | Uses an automatic window processing method that allows for the use of ML algorithms on large multispectral images. | Model did not achieve the best results in the paper. | Approximate values: AP: 0.955, Accuracy score: 0.918 |
| Rocha et al. [59] | KNN | Best performing classifier. | Model cannot perform sugarcane line detection and fault measurement on sugarcane fields of all growth stages. | Relative Error: 1.65% |

DT & RF

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| Natividade et al. [56] | DT | Pattern recognition system allows for classification of images taken by low-cost cameras. | Model did not outperform SVM on all chosen metrics. | Dataset #2: [Accuracy: 77%, Precision: 87%, Recall: 90%, Accuracy: 79%] |
| Lottes et al. [49] | RF | Model can detect plants relying only on their shape. | Low precision and recall for detecting weeds under the “other weeds” class. | Saltbush class recall: 95%; Chamomile class recall: 87%; Sugar beet class recall: 78%; Other weeds class recall: 45%. Overall model accuracy for predicted objects: 86% |

U-Net

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| Lin et al. [80] | U-Net | Can detect overlapping sorghum panicles. | Performance decreases with fewer training images (<500). | Accuracy: 95.5%, RMSE: 2.5% |
| Arun et al. [24] | Reduced U-Net | Reduces the total number of parameters and results in a lower error rate. | The comparison was made against models built for problems unrelated to agriculture, the domain this model targets. | Accuracy: 95.34%, Error rate: 7.45% |
| El Hoummaidi et al. [81] | U-Net | Works in real time and uses multispectral images. | Tree obstruction and physical characteristics cause errors, although a better dataset could reduce them. | Accuracy: 89.7%, Palm tree detection rate: 96.03%, Ghaf tree detection rate: 94.54% |
| Doha et al. [82] | U-Net | The method refines the U-Net results to reduce errors and performs frame interpolation of the input video stream. | Not enough results were given. | Variance: 0.0083 |
| Zhang et al. [83] | DF-U-Net | Reduces the computation load by more than half and achieved the highest accuracy among the models compared. | Early-stage rust disease is difficult to recognize. | F1: 94.13%, OA: 96.93%, Precision: 94.02% |
| Tsuichihara et al. [84] | U-Net | Reaches about 80% accuracy with only 48 training images. | Accuracy is low because the training set is small, a consequence of manually painting 6 colors on each image. | Accuracy: ~80% |

Other Segmentation Models

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| Yang et al. [85] | EDANet | Improved on prior work in rice identification and lodging detection by 2.51% and 8.26%, respectively. | Drone images taken from a greater height do not perform well, although the proposed method can still give reliable results. | Rice identification accuracy: 95.28%; Lodging accuracy: 86.17%. If lodging of less than 2.5% is neglected, the accuracy increases to 99.25%. |
| Weyler et al. [86] | ERFNet-based instance segmentation | Data was gathered from real agricultural fields. | Comparison was made on different datasets. | Crop leaf segmentation: average precision 48.7%, average recall 57.3%. Crop segmentation: average precision 60.4%, average recall 68% |
| Guo et al. [87] | Three-stage model with RPN, the Chan-Vese algorithm, and a transfer learning model | Outperformed the traditional ResNet-101, which had an accuracy of 42.5%, and is unsupervised. | The Chan-Vese algorithm runs for a long time. | Accuracy: 83.75% |
| Torres-Sánchez et al. [88] | MLP | Carried out in commercial fields rather than under controlled conditions. | The dataset was captured at noon to avoid shadows. | Overall accuracy on two classes of crops: 80.09% |
| Zhang et al. [89] | UniStemNet | Joint crop recognition and stem detection in real time. Fast: processes each image within 6 ms. | The model's scores were not always the best, and the differences were small. | Segmentation: F1 97.4%, IoU 94.5; Stem detection: SDR 97.8% |

YOLO

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| Chen et al. [91] | Tiny-YOLOv3 | Excellent results in terms of FPS and mAP. Also reduces pesticide use. | High false identification rate for adult T. papillosa. | mAP score of 95.33% |
| Qin et al. [92] | Ag-YOLO (v3-tiny) | Tested YOLOv3 with multiple backbones and achieved optimum results in terms of FPS and power consumption. | Uses NCS2, which supports only 16-bit floating-point values. | F1 score of 92.05% |
| Parico et al. [93] | YOLO-Weed (v3) | High speed and mAP score. | Limitations in detecting small objects. | mAP score of 93.81%, F1 score of 94% |
| Rui et al. [94] | YOLOv5 | Improves YOLOv5's ability to detect small objects. | Was not tested on agricultural applications. | +7.1% over the original model |
| Parico et al. [95] | YOLOv4 (multiple versions) | Shows that YOLOv4-CSP has the lowest FPS with the highest mAP. | Limitations in detecting small objects. | AP score of 98% |
| Jintasuttisak et al. [96] | YOLOv5m | Compares different YOLO versions and shows that YOLOv5 with medium depth outperforms the rest, even with overlapping trees. | YOLOv5x scored a higher detection average due to the increased number of layers. | mAP score of 92.34% |
| Tian et al. [97] | YOLOv3 (modified) | Tackles the lack of data by generating new images using CycleGAN. | The model is weak without the CycleGAN-generated images. | F1-score of 81.6% and IoU score of 91.7% |

R-CNN & FCN

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| Sivakumar et al. [99] | FRCNN | The optimal confidence threshold of the SSD model was found to be much lower than that of the Faster RCNN model. | Inference time of SSD is better than that of FRCNN, but it can be improved at a cost in performance. | 66% F1-score and 85% IoU |
| Ammar et al. [101] | FRCNN | Large advantage in terms of speed. | Very weak in detecting trees. Outperformed by EfficientDet-D5 and YOLOv3. | 87.13% and 49.41% IoU for palms and trees, respectively |
| Su et al. [102] | Mask RCNN | Superior in comparison to CNN. | Inference time was not taken into consideration. | 98.81% accuracy |
| Yang et al. [103] | FCN-AlexNet | Provides a good comparison between SegNet and AlexNet. | Outperformed by SegNet. | 88.48% recall rate |
| Menshchikov et al. [104] | FCNN | Proposed method is applicable in real-world scenarios, and RGB cameras are cheaper than multispectral cameras. | Complex algorithms compared to the multispectral approach. | ROC AUC in segmentation: 0.96 |
| Hosseiny et al. [10] | Faster Regional CNN (R-CNN) framework with a ResNet-101 backbone for object detection | Results are good for an unsupervised method. | Tested only on single-object detection; automatic crop row estimation can fail due to dense plant distribution. | Precision: 0.868, Recall: 0.849, F1: 0.855 |

Transformers

| Paper | Model/Architecture | Strengths | Comments | Best Results |
|---|---|---|---|---|
| Reedha et al. [23] | ViT | Classifying crop and weed images with ViTs yields the best prediction performance. | Only slightly outperforms existing CNN models. | F1-scores of 99.4% and 99.2% were obtained from ViT-B16 and ViT-B32, respectively |
| Karila et al. [114] | ViT | The ViT RGB models performed the best on several types of datasets. | VGG CNN models provided equally reliable results in most cases. | Multiple results shown on several types of datasets |
| Dersch et al. [115] | DETR | DETR clearly outperformed YOLOv4 in mixed and deciduous plots. | DETR was far worse than YOLOv4 at detecting smaller trees in multiple cases. | F1-scores of 86% and 71% in mixed and deciduous plots, respectively |
| Chen et al. [116] | DENT | The model outperformed most of the state-of-the-art methods. | CANNet achieved better results. | Mean Absolute Error (MAE) of 10.7 and Root Mean Squared Error (RMSE) of 13.7 |
| Coletta et al. [119] | Active Learning | The model can classify unknown data. | Did not test classification models other than their own. | Accuracy of 98% and recall of 97% |

| Problem | Type of learning | Paper | Model/Architecture | Dataset | Best Results |
|---|---|---|---|---|---|
| Spatial Segregation and Segmentation | Supervised | Jintasuttisak et al. [96] | YOLOv5 | Date palm tree images collected using a drone | mAP score of 92.34% |
| | Semi-Supervised | Fawakherji et al. [118] | cGANs | 5,400 RGB images of pears and strawberries, of which 20% were labelled | IoU score of 83.1% on mixed data including both original and synthesized images |
| | Unsupervised | Bah et al. [72] | ResNet18 | UAV images of spinach and bean fields | AUC: 91.7% |
| Pesticides/Diseases Treatment | Supervised | Zhang et al. [83] | DF-U-Net | Yangling UAV images | F1: 94.13%, Accuracy: 96.93%, Precision: 94.02% |
| | Semi-Supervised | Coletta et al. [119] | Active Learning: SVM | UAV images collected from eucalyptus plantations | Accuracy of 98% and recall of 97% |
| | Unsupervised | Khan et al. [122] | SGAN | UAV images collected from strawberry and pea fields | Accuracy: ~90% |
| Fertilization | Supervised | Natividade et al. [56] | SVM | UAV images of vineyards and forests | Accuracy: 83%, Precision: 97%, Recall: 94% |
| | Unsupervised | Zhang et al. [118] | SSVT | UAV images of a wheat field | 96.5% on 384x384-sized images |
| Crop-Row Detection | Supervised | César Pereira et al. [48] | SVM | Manually collected RGB images | 88.01% F1-score |
| | Semi-Supervised | Pérez-Ortiz et al. [57] | SVM | UAV images collected from a sunflower plot | MAE: 12.68% |
| Tree/Crop Counting | Supervised | Ammar et al. [101] | FRCNN | Tree counting | 87.13% IoU on palms and 49.41% on other trees |
| | Semi-Supervised | Chen et al. [116] | DENT | Yosemite Tree dataset | MAE of 10.7 |
| Others | Supervised | Aiger et al. [76] | CNN | UAV images of various types of landcover | 96.3% accuracy |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).