1. Introduction

Small unmanned aerial vehicles of various types pose a serious threat to infrastructure, hardware, and people [1]. At the same time, accurately detecting drone targets in low-resolution, visually blurred infrared images is a challenging task. There are two main problems:

1) The influence of the target itself: because of the long imaging distance, infrared targets are generally small, occupying only a few to several tens of pixels in the image. In addition, infrared targets usually have a low signal-to-clutter ratio (SCR) and are easily submerged in strong noise and cluttered backgrounds. The target's radiation intensity is also weak, and it lacks distinctive morphological features, which makes target detection in infrared images difficult.

2) The contradiction between the target and the detection algorithm: compared with targets in visible-light images, targets in infrared images pose more challenging problems, such as the lack of shape and texture features, which weakens or even eliminates the high-frequency amplitude of small targets after filtering and convolution. Moreover, although building shallow networks can improve performance in deep learning algorithms, the contradiction between high-level semantic features and high resolution still cannot be resolved.

Overall, because target sizes vary greatly and targets occupy an extremely low percentage of pixels in infrared images, each image contains too many negative samples, so most of the available information is lost during algorithm operation [2]. In addition, most negative samples are easily classified, which makes it difficult for the algorithm to optimize in the expected direction. Therefore, networks designed for ordinary objects are poorly suited to detecting small infrared targets.

To detect small infrared targets, researchers have proposed many traditional methods over the past few decades. Traditional methods perform SIRST (Single-frame InfraRed Small Target) detection by measuring the non-coherence between the target and the background. Typical examples include filter-based methods [3,4,5], which can only suppress uniform, smooth background noise and therefore suffer from high false alarm rates and unstable performance against complex backgrounds. Methods based on the human visual system (HVS) [6,7,8,9] use the ratio of gray values between each pixel position and its neighbouring region as an enhancement factor, which effectively enhances real targets but cannot effectively suppress background noise. Methods based on low-rank representation [10,11,12] can adapt to infrared images with low SCR; however, in complex backgrounds they still produce high false alarm rates for small, shape-varying targets. Most traditional methods rely heavily on hand-crafted features. They are computationally simple and require no training or learning, but designing hand-crafted features and tuning hyperparameters demand expert knowledge and considerable engineering effort.
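To make the HVS idea concrete, the following NumPy sketch implements a simplified local contrast measure in the spirit of [6]. It is an illustration under assumptions rather than the exact formulation of any cited method: each pixel's enhancement factor is L²/m_i minimized over the eight surrounding cells, where L is the maximum gray value of a 3×3 cell centred on the pixel and m_i is the mean of the i-th neighbouring cell. Edge padding, the `eps` guard, and the helper name `local_contrast_map` are hypothetical choices for the example.

```python
import numpy as np

def local_contrast_map(img: np.ndarray, cell: int = 3) -> np.ndarray:
    """LCM-style contrast map: for each pixel, min over 8 neighbour cells of L**2 / m_i.

    L is the maximum gray value of the cell centred on the pixel and m_i is the
    mean gray value of the i-th surrounding cell of the same size (cell should be odd).
    """
    img = img.astype(np.float64)
    h, w = img.shape
    r = cell // 2
    pad = r + cell                      # room for the centre cell plus one ring of cells
    padded = np.pad(img, pad, mode="edge")
    offsets = [(dy, dx) for dy in (-cell, 0, cell) for dx in (-cell, 0, cell)
               if (dy, dx) != (0, 0)]
    eps = 1e-6                          # guards against division by zero in black regions
    out = np.zeros_like(img)
    for y in range(h):
        for x in range(w):
            cy, cx = y + pad, x + pad
            peak = padded[cy - r:cy + r + 1, cx - r:cx + r + 1].max()
            means = [padded[cy + dy - r:cy + dy + r + 1, cx + dx - r:cx + dx + r + 1].mean()
                     for dy, dx in offsets]
            # min_i L^2 / m_i equals L^2 / max_i m_i for positive means
            out[y, x] = peak * peak / (max(means) + eps)
    return out

# A dim 15x15 background (gray value 10) with a single bright target pixel (100)
img = np.full((15, 15), 10.0)
img[7, 7] = 100.0
contrast = local_contrast_map(img)
print(contrast[7, 7], contrast[0, 0])  # target enhanced about 100x relative to background
```

Because the factor is driven by the central maximum, an isolated bright clutter pixel is enhanced just as strongly as a real target, which matches the weak background suppression noted above.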

With the development of convolutional neural networks (CNNs), more data-driven methods are being applied to infrared small target detection [13,14,15,16]. Data-driven methods suit more complex real scenarios and are less affected by changes in target size, shape, and background. These methods require large amounts of data to realize their strong model-fitting ability, and they have achieved better detection performance than traditional methods. Among data-driven methods, convolutional segmentation networks can simultaneously produce pixel-level classification and localization output [17]. The first segmentation-based SIRST detection method, ACM, was proposed in [18]; it designed a semantic segmentation network using an asymmetric contextual module. On this basis, Dai et al. [19] further introduced expanded local contrast to improve their model. By combining traditional methods with deep learning and using bottom-up local attention modulation modules to embed subtle low-level details into higher layers, they achieved excellent detection performance. In [20], a balance between missed detection (MD) and false alarms (FA) was achieved with a conditional GAN (cGAN) in which two generators model MD and FA as separate subtasks; a discriminator for image classification then distinguishes the outputs of the two generators from the ground-truth images. Zhang et al. [21] use attention mechanisms to guide a pyramid context network in detecting targets: first, the feature map is partitioned to compute local correlations; second, global contextual attention is used to compute correlations between semantics; finally, decoded maps at different scales are fused to improve detection performance. Cheng et al. [22] use visible-light imagery to detect drones. They first make the backbone network lightweight and use a multi-scale fusion method to improve the use of shallow features; to address missed drones in multi-scale detection, they develop a novel non-maximum suppression method, ultimately achieving real-time detection. However, these methods still have several shortcomings. First, small target features are still lost in the deep layers of the network, and the contradiction between high-level semantic features and high resolution remains unresolved. Second, the encoding maps generated by each downsampling layer are not fully exploited. Overall, these methods overlook the characteristics of drones. These problems make the detection algorithms less robust to scene changes (such as cluttered backgrounds and targets with different SCRs, shapes, and sizes).

To solve these problems, we propose a data-driven progressive feature fusion detection method (PFFNet) for infrared unmanned aerial vehicle (UAV) target detection. First, global features are extracted from the input infrared image. Then, the encoding maps output by the downsampling layers are passed to the FSM and PFM modules, so that deep features containing high-level semantic information and shallow features containing rich image contour and position information can be fully fused, improving the utilization of the encoding maps output by the downsampling layers. In addition, the output feature maps are fused across scales to enhance the response amplitude of infrared UAV targets in the deep network and to address the loss of small-target features in the deep layers of the network. High-level and shallow semantic information are superimposed and output through concatenation along the channel dimension, and the confidence map is obtained through threshold segmentation to produce the final detection result. Finally, to verify the effectiveness of PFFNet, we conduct extensive ablation studies on FSM and PFM, followed by comparative experiments with existing methods on the SIRST Aug and IRSTD 1k datasets. The experimental results show that each module of PFFNet improves the detection of infrared UAV targets, and that our algorithm offers stronger robustness, better detection performance, and faster detection.

2. Methods

Given an input image I, we aim to classify each pixel with an end-to-end convolutional neural network to determine whether it belongs to a drone target, and finally output a segmentation result of the same size as I. The PFFNet detection algorithm consists of two parts: the global feature extractor and the progressive feature fusion network. The global feature extractor extracts the basic features of the input infrared image I by considering the entire image; obtaining these basic features effectively reduces the redundant information in the image.

The progressive fusion network is divided into two modules: the Neck and the Head. The Neck includes the Pooling Pyramid Fusion Module (PFM) and the Feature Selection Module (FSM). The former enhances the feature response amplitude of the infrared drone target in the deep network. The latter acts as a bridge for information interaction between high and low layers, increasing the utilization of the encoding maps output by the downsampling layers. The Head progressively fuses feature maps of different scales and generates a segmentation mask.

As shown in Figure 1, the backbone encodes the input image I into different dimensions and resolutions to generate encoding maps $b_a$ ($a = 2, 3, 4$). The FSM obtains the low-level spatial position information of the target's salient features from $b_a$ ($a = 2, 3$), locating the high-frequency response area to reduce the influence of redundant signals on the target position information, and outputs the feature maps $f_a$ ($a = 2, 3$). $b_4$ is used as the input of the PFM, which outputs the decoded map $p$. The PFM consists of four different pooling structures arranged in parallel to form a pyramid network; it enhances the high-frequency response amplitude of deep target features and passes them to the FSM after upsampling. The FSM and PFM extract local features of targets, and the progressive fusion method is used to compute the stage output feature maps $y_a$ ($a = 1, 2, 3$). After being processed by the Ghost Module [23], each $y_a$ is upsampled by a factor of two and added element-wise. Sharing the same weights across all convolution blocks greatly simplifies small target detection, and using element-wise addition reduces the parameters of the algorithm as well as the network inference time.
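The parameter-free fusion step can be sketched as follows. This NumPy illustration assumes nearest-neighbour upsampling and stage outputs that already share the same channel count after the Ghost Module; `upsample2x` and `progressive_fuse` are hypothetical names, and the sketch is not the authors' implementation.

```python
from typing import Sequence

import numpy as np

def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def progressive_fuse(stage_maps: Sequence[np.ndarray]) -> np.ndarray:
    """Fuse stage outputs ordered from deepest (smallest) to shallowest (largest).

    The running result is doubled in size and added element-wise to the next
    map, so the fusion itself adds no learnable parameters.
    """
    fused = stage_maps[0]
    for skip in stage_maps[1:]:
        fused = upsample2x(fused) + skip
    return fused

# Three hypothetical stage outputs with 4 channels at 8x8, 16x16 and 32x32
y3, y2, y1 = np.ones((4, 8, 8)), np.ones((4, 16, 16)), np.ones((4, 32, 32))
fused = progressive_fuse([y3, y2, y1])
print(fused.shape)  # (4, 32, 32)
```

Element-wise addition keeps the channel count fixed, which is why it is cheaper than concatenation followed by a convolution.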

Then, the fused output is upsampled and concatenated along the channel dimension through convolution. We propose a multi-scale fusion strategy to progressively fuse feature maps of different sizes. The confidence map O is then obtained by performing threshold segmentation on the fused feature map. The backbone mainly expands the receptive field and extracts deep semantic features, upsampling restores the size of the feature maps, progressive multi-scale feature fusion is achieved through upsampling and downsampling, and the FSM and PFM modules preserve the feature representation of small targets in the network.

To achieve good contextual modeling ability, the simplest approach is to repeatedly stack layers to increase network depth. The more layers a network has, the richer its semantic information and the larger its receptive field [24,25,26,27]. However, infrared small targets vary greatly in size and occupy a very low proportion of pixels. If the network depth is increased blindly, the features of a drone target may disappear after multiple downsampling operations. Therefore, special modules are needed to extract high-level features while preserving the representation of small targets with a very small pixel ratio in the deep network.
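A short worked example shows why blind depth increases are risky. Assuming each downsampling stage halves the spatial resolution (stride 2, a common backbone design that is not stated here for PFFNet), a square target's side length in feature-map cells is its pixel size divided by 2^k after k stages. The 4×4-pixel target and 256×256 frame below are illustrative values, and `footprint_after_downsampling` is a hypothetical helper.

```python
def footprint_after_downsampling(target_px: float, num_downsamples: int, stride: int = 2) -> float:
    """Side length, in feature-map cells, of a square target after repeated strided downsampling."""
    return target_px / stride ** num_downsamples

# A 4x4-pixel drone in a 256x256 frame, followed through successive stride-2 stages
for k in range(5):
    side = footprint_after_downsampling(4, k)
    print(f"after {k} downsamplings: map {256 // 2 ** k}x{256 // 2 ** k}, target {side:g} cells wide")
```

After three stages the target covers only half a cell of a 32×32 map, so its response is mixed with background in every cell it touches.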

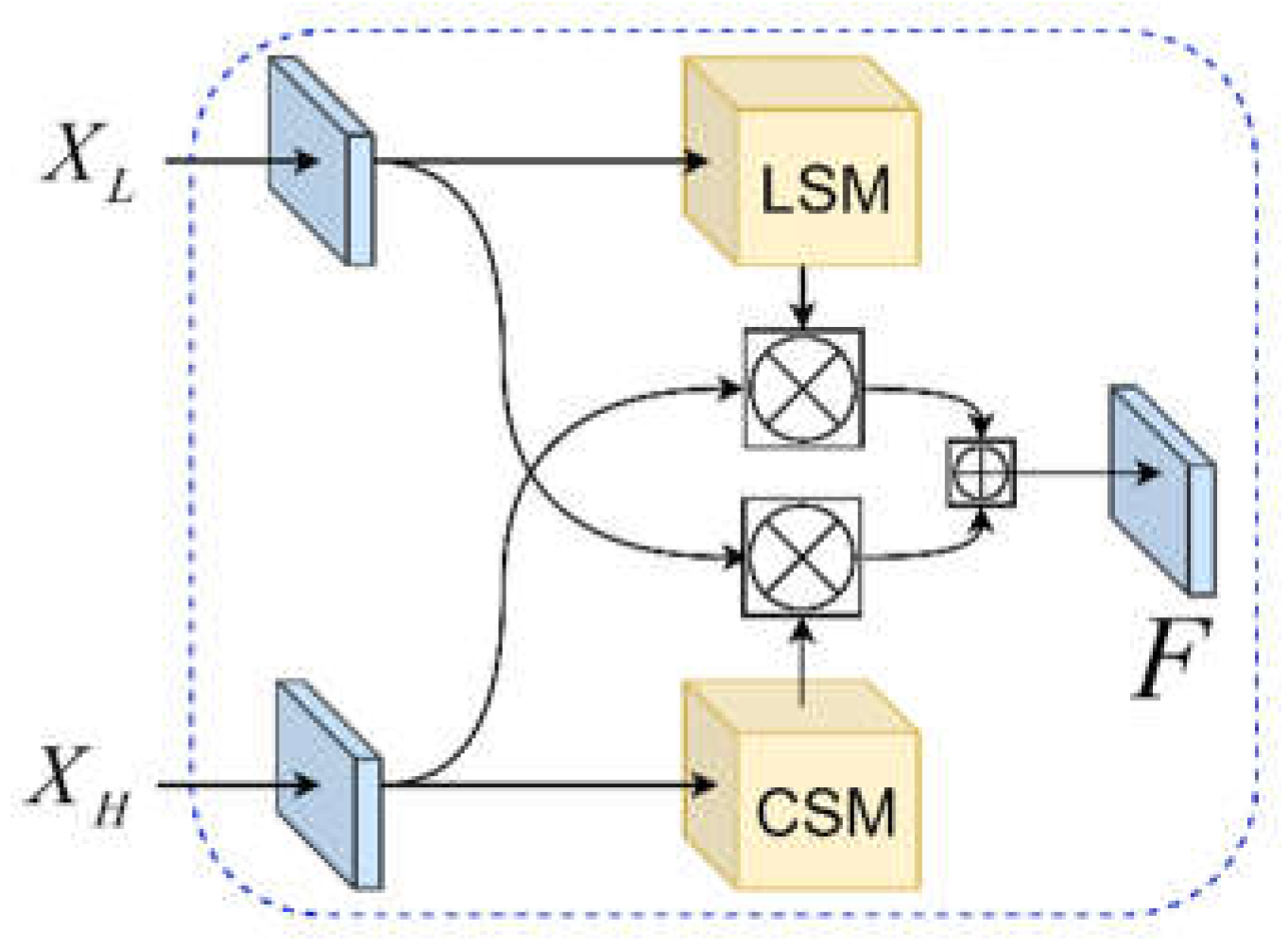

2.1. Feature Selection Module

The Feature Selection Module consists of two parts: the LSM (Location Selection Model) and the CSM (Channel Selection Model). Because drone targets occupy a small proportion of the image, they are easily lost, or the response amplitude of the target area weakens, during downsampling and upsampling. We found through experiments that high-level semantic features contain rich target contour features, whereas low-level semantic features contain accurate target location information. The FSM uses the semantic information of each dimension to achieve information interaction between different encoding maps, which effectively increases the utilization of the encoding maps output by the downsampling and upsampling layers. In addition, locating and enhancing areas of high-frequency response amplitude helps ensure the effectiveness of multi-scale feature fusion.

Figure 2 shows how the feature representation of small targets is retained in deep networks without losing the spatial detail encoding of target positions. The CSM enhances information interaction between high and low levels, and the LSM obtains the target position. Together they form the Feature Selection Module, which fully integrates deep features carrying high-level semantic information with shallow features carrying rich image contour and position information, thereby improving the utilization of the encoding output maps. The output of the feature selection module can be represented as:

where $X_H$ is the deep feature that includes high-level semantic information, $X_L$ is the shallow feature that contains rich image contour and position information, ⊗ and ⊕ denote element-wise multiplication and addition, respectively, and C and L represent the CSM and LSM modules, respectively.

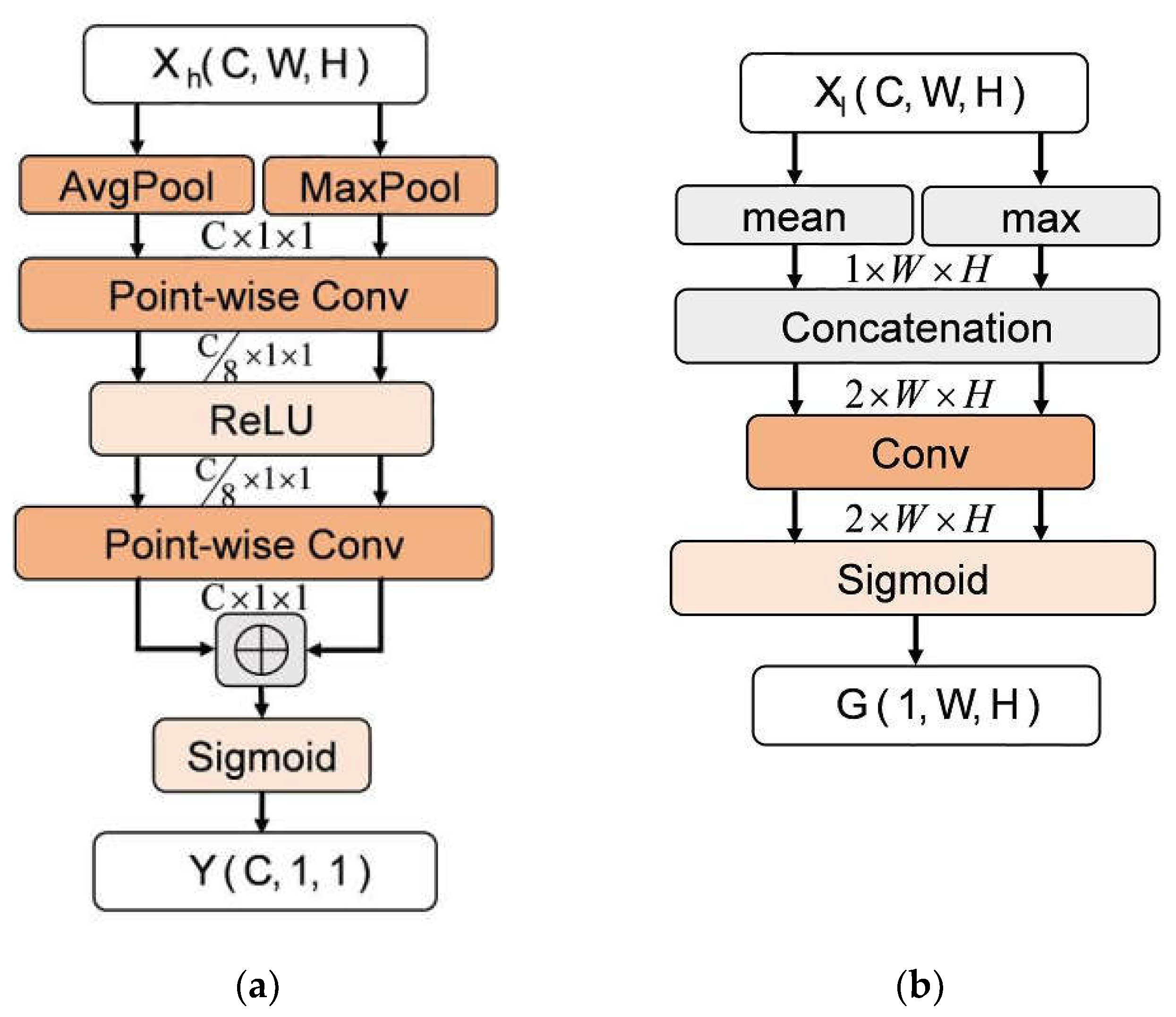

2.2. Channel Selection Model

To prevent the response of the drone target area from being lost or weakened during upsampling, we use the CSM to enhance the response amplitude of the target area. As shown in Figure 3(a), the channel features at each spatial position are aggregated individually. The subtle details of deep drone targets are highlighted by directionally enhancing the weights of the high-frequency response channels of small targets. This module first performs average pooling and max pooling on the input feature map X to generate different 3D tensors $x_i$, coupling the global information of X within its channels. Then, 1×1 convolutions are used to evaluate the importance of each channel and compute the corresponding weight. The aggregated output can be represented as:

When $i = 1$, $x_1$ is the feature vector obtained by average pooling; when $i = 2$, $x_2$ is the feature vector obtained by max pooling. The two point-wise convolutions use 1×1 kernels with different output dimensions: the first reduces the channel dimension by a ratio r, and the second restores it. δ represents the sigmoid function and σ represents the rectified linear unit. Inspired by [28], this paper takes r = 8 as the downsampling ratio for channel reduction.
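The CSM uses the same ingredients as the widely used CBAM channel attention (average and max pooling, a pair of 1×1 convolutions with reduction ratio r, ReLU, and a sigmoid). The NumPy sketch below follows that CBAM pattern as an assumption, since the CSM equation is not reproduced here: both pooled descriptors pass through the same weights, their outputs are summed before the sigmoid, and the resulting per-channel weights rescale X. The weights `w1` and `w2` are random placeholders and the function names are hypothetical.

```python
import numpy as np

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(z, 0.0)

def channel_selection(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """CBAM-style channel attention on a (C, H, W) feature map.

    w1 has shape (C // r, C): the 1x1 convolution that reduces channels by r.
    w2 has shape (C, C // r): the 1x1 convolution that restores C channels.
    """
    x1 = x.mean(axis=(1, 2))   # average-pooled channel descriptor, shape (C,)
    x2 = x.max(axis=(1, 2))    # max-pooled channel descriptor, shape (C,)
    logits = w2 @ relu(w1 @ x1) + w2 @ relu(w1 @ x2)
    weights = sigmoid(logits)  # one weight in (0, 1) per channel
    return x * weights[:, None, None]

rng = np.random.default_rng(0)
C, r = 32, 8                   # r = 8 as in the text; C is an arbitrary example
x = rng.standard_normal((C, 16, 16))
w1 = 0.1 * rng.standard_normal((C // r, C))   # hypothetical random weights
w2 = 0.1 * rng.standard_normal((C, C // r))
y = channel_selection(x, w1, w2)
print(y.shape)  # (32, 16, 16)
```

With r = 8, the two 1×1 convolutions hold 2C²/8 weights, a quarter of what a single full C×C point-wise convolution would need.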

2.3. Location Selection Model

Infrared small targets occupy extremely few pixels in infrared images, so interference signals are easily introduced during feature extraction. The LSM is used to quickly locate local regions with visual saliency. As shown in Figure 3(b), this module computes the maximum and mean values of the input feature map X and concatenates them along the channel dimension. It then performs a convolution on the concatenated feature map. Here, a 7×7 convolution further expands the receptive field of the convolution kernel, capturing areas with higher local response amplitudes from the lower-level network, and the accurate position of the drone target in the entire feature map is computed. The high response amplitude area can be calculated using the following formula:

When $i = 1$, M(*) takes the mean of the feature map X; when $i = 2$, M(*) takes its maximum value. ℂ represents concatenation along the channel dimension. The final output size of the feature map of this module is (1, W, H).
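Similarly, the LSM matches the structure of CBAM spatial attention. The sketch below is an assumption-labelled illustration: it stacks the channel-wise mean (M with i = 1) and maximum (i = 2), applies one 7×7 filter with zero padding so the output keeps the input's spatial size, and squashes the result with a sigmoid. The sigmoid, the uniform kernel, and the helper name `location_selection` are assumptions for the example.

```python
import numpy as np

def location_selection(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """CBAM-style spatial attention: (C, H, W) features -> (1, H, W) saliency map.

    kernel has shape (2, k, k): one k x k filter over the stacked mean and max maps.
    """
    stacked = np.stack([x.mean(axis=0), x.max(axis=0)])   # channel-wise mean and max, (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(stacked, ((0, 0), (pad, pad), (pad, pad)))  # zero "same" padding
    _, h, w = x.shape
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)  # cross-correlation
    return (1.0 / (1.0 + np.exp(-out)))[None]  # sigmoid, then restore the channel axis

x = np.zeros((4, 16, 16))
x[:, 5, 9] = 10.0                              # one salient location
kernel = np.full((2, 7, 7), 1.0 / 98)          # hypothetical uniform 7x7 filter
saliency = location_selection(x, kernel)
print(saliency.shape)  # (1, 16, 16)
```

Pixels whose 7×7 window covers the bright location score above 0.5, while distant background stays at sigmoid(0) = 0.5, illustrating how the larger kernel spreads a local response over its neighbourhood.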

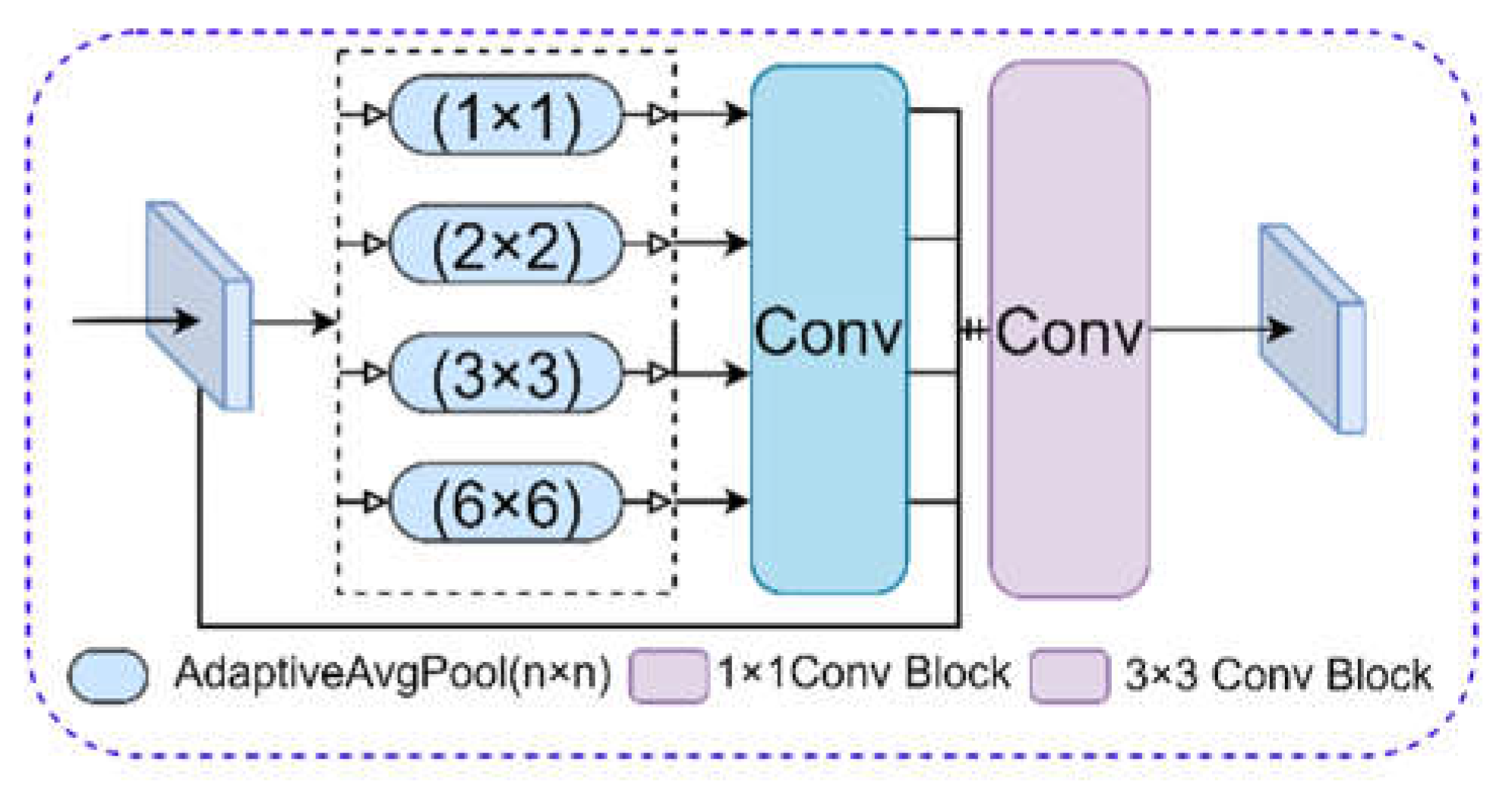

2.4. Pooling Pyramid Fusion Module

Deeper neural networks can obtain more detailed semantic information about the target, but this approach is not suitable for smaller targets: as the number of downsampling operations increases, the features of drone targets (such as propellers and arms) weaken or even disappear. To solve this problem, this paper proposes a Pooling Pyramid Fusion Module (PFM) for infrared small target detection, which processes the encoding map of the deepest downsampling layer. Because the targets are small, spatial dimension compression can be achieved through global adaptive pooling layers with different output sizes, and the corresponding mean values can be extracted to enhance the feature representation of small targets in deep networks. As shown in Figure 4, the input feature map is fed in parallel into the pyramid pooling module, generating four pooled maps of sizes 1×1, 2×2, 3×3, and 6×6. Then, 1×1 convolutions reduce the feature dimension to C/4. The four feature maps of different sizes are upsampled by bilinear interpolation and concatenated with the input feature map along the channel dimension. Finally, a 3×3 convolution outputs the feature map, forming a contextual pyramid from five feature maps with the same spatial size but different scales.
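The shape bookkeeping of the PFM can be checked with a small NumPy sketch. Several simplifications are assumed: adaptive average pooling uses PyTorch-style bin edges, the 1×1 reductions to C/4 are random matrices, nearest-neighbour resizing stands in for the bilinear interpolation described above, and the final 3×3 convolution is omitted, so the function returns the 2C-channel concatenation that would feed it. All helper names are hypothetical.

```python
import numpy as np

def adaptive_avg_pool(x: np.ndarray, out: int) -> np.ndarray:
    """Average-pool a (C, H, W) map to (C, out, out) with PyTorch-style bin edges."""
    c, h, w = x.shape
    y = np.empty((c, out, out))
    for i in range(out):
        h0, h1 = i * h // out, -(-(i + 1) * h // out)        # floor start, ceil end
        for j in range(out):
            w0, w1 = j * w // out, -(-(j + 1) * w // out)
            y[:, i, j] = x[:, h0:h1, w0:w1].mean(axis=(1, 2))
    return y

def resize_nearest(x: np.ndarray, h: int, w: int) -> np.ndarray:
    """Nearest-neighbour resize of a (C, h0, w0) map to (C, h, w)."""
    rows = np.arange(h) * x.shape[1] // h
    cols = np.arange(w) * x.shape[2] // w
    return x[:, rows][:, :, cols]

def pooling_pyramid(x: np.ndarray, reducers, bins=(1, 2, 3, 6)) -> np.ndarray:
    """Concatenate x with one pooled, channel-reduced, resized branch per bin size."""
    _, h, w = x.shape
    branches = [x]
    for b, w_red in zip(bins, reducers):
        pooled = adaptive_avg_pool(x, b)                    # (C, b, b)
        reduced = np.einsum("oc,chw->ohw", w_red, pooled)   # 1x1 conv, C -> C/4
        branches.append(resize_nearest(reduced, h, w))      # back to (C/4, H, W)
    return np.concatenate(branches, axis=0)                 # (C + 4 * C/4, H, W) = (2C, H, W)

rng = np.random.default_rng(0)
C, H, W = 16, 12, 12
x = rng.standard_normal((C, H, W))
reducers = [rng.standard_normal((C // 4, C)) for _ in range(4)]  # hypothetical weights
y = pooling_pyramid(x, reducers)
print(y.shape)  # (32, 12, 12)
```

Reducing each branch to C/4 is what keeps the concatenated tensor at exactly 2C channels regardless of how many pooling scales are used.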

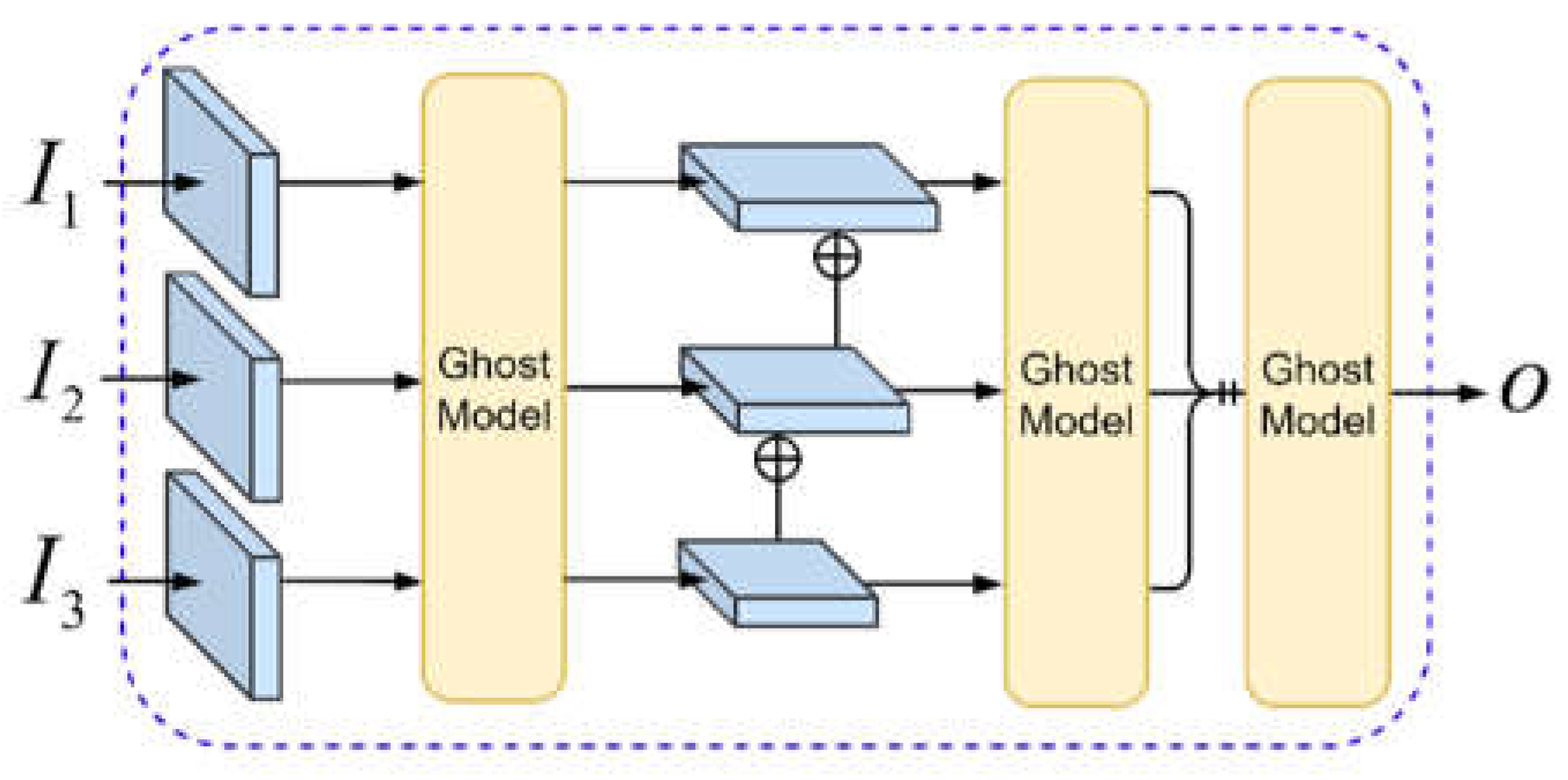

2.5. Segmentation Head

After multiple downsampling and convolution operations, the feature response of targets in the deepest layer of the convolutional network weakens. To address this problem, we propose a progressive feature fusion structure that is better suited to drones. As shown in Figure 5, this segmentation head fuses feature maps of different sizes and stacks information between high and low layers to enhance the high-frequency response amplitude of the target. The inputs I of different sizes are processed by the Ghost Module, which generates the same number of encoding maps, with their texture information, through simple linear operations. This reduces the number of convolution parameters and improves training and inference efficiency.
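The saving from the Ghost Module can be checked with parameter arithmetic. Following the GhostNet formulation, a primary k×k convolution produces c_out/s intrinsic maps, and cheap depthwise d×d operations generate the remaining maps. The values s = 2 and d = 3, the 64-channel example, and the neglect of biases are assumptions made for illustration; the helper names are hypothetical.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of an ordinary k x k convolution (bias ignored)."""
    return c_in * c_out * k * k

def ghost_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Weights of a Ghost module producing c_out maps (bias ignored, c_out divisible by s)."""
    intrinsic = c_out // s                 # maps from the primary convolution
    primary = c_in * intrinsic * k * k
    cheap = intrinsic * (s - 1) * d * d    # depthwise d x d "linear" operations
    return primary + cheap

print(conv_params(64, 64, 3))   # 36864
print(ghost_params(64, 64, 3))  # 18720
```

With these settings the Ghost Module needs roughly half the weights of an ordinary 3×3 convolution, and the saving grows approximately in proportion to s.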

PFFNet uses SoftIoULoss and CELoss to train the entire network, optimizing a weighted loss between the predicted and ground-truth segmentation maps. They can be expressed by the following formula:

where T and P represent the pixel values of the ground-truth target and the output prediction, respectively. Based on the initial loss values during training, α = 3 and β = 1 were set to balance each individual loss against the total loss so that the algorithm is optimized in the expected direction. To ensure numerical stability, this paper sets smooth = 1. Different weight balances may affect performance indicators [29].
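Because the loss formula is not reproduced here, the sketch below shows one common way to build such a weighted loss; every detail is an assumption rather than the paper's definition. SoftIoU is taken as 1 − (ΣT·P + smooth)/(ΣT + ΣP − ΣT·P + smooth) over the whole map, CELoss as mean pixel-wise binary cross-entropy, and α is assumed to weight the SoftIoU term and β the cross-entropy term, using α = 3, β = 1, and smooth = 1 from the text. The function names are hypothetical.

```python
import numpy as np

def soft_iou_loss(pred: np.ndarray, target: np.ndarray, smooth: float = 1.0) -> float:
    """1 - soft IoU between predicted probabilities P and binary labels T."""
    inter = float(np.sum(pred * target))
    union = float(np.sum(pred) + np.sum(target)) - inter
    return 1.0 - (inter + smooth) / (union + smooth)

def bce_loss(pred: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Mean pixel-wise binary cross-entropy."""
    p = np.clip(pred, eps, 1.0 - eps)   # keep log() finite
    return float(-np.mean(target * np.log(p) + (1 - target) * np.log(1 - p)))

def total_loss(pred, target, alpha=3.0, beta=1.0, smooth=1.0) -> float:
    """Assumed weighting: alpha scales the SoftIoU term, beta the cross-entropy term."""
    return alpha * soft_iou_loss(pred, target, smooth) + beta * bce_loss(pred, target)

target = np.zeros((4, 4))
target[1:3, 1:3] = 1.0                      # a 2x2 target in a 4x4 mask
print(total_loss(target, target))           # close to 0 for a perfect prediction
print(soft_iou_loss(1.0 - target, target))  # 1 - 1/17 when the prediction is inverted
```

The `smooth` term keeps the IoU ratio defined when both the prediction and the label are empty, which is common for small-target masks.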

3. Experiments

This section introduces the implementation details and evaluation metrics of the algorithm and compares it with other methods on two different datasets. To verify the effectiveness of the data-driven model PFFNet, both comparative and ablation experiments were conducted.

3.1. Datasets

The performance of data-driven methods is strongly affected by the quality, quantity, and diversity of the data. Infrared image datasets contain fewer images than visible-light datasets, and most methods are trained and evaluated on private datasets. Wang et al. [20] established an open infrared small target dataset containing 10k images. However, many targets in that dataset are too large and the annotations are inaccurate, which affects training effectiveness. Dai et al. [18] released a dataset with high-quality semantic segmentation masks, but the dataset is small, which can easily lead to unstable training, overfitting, and convergence problems. To verify the reliability and robustness of the algorithm, experiments were conducted on two publicly available datasets with different image sizes: SIRST Aug [21] and IRSTD 1k [30]. SIRST Aug [21] includes 8525 training images and 545 test images of size 256×256, which is sufficient for training data-driven models. The images in IRSTD 1k [30] are 512×512 and include different types of small targets such as drones, organisms, ships, and vehicles. This dataset also covers many different scenes, including seawater, fields, mountains, cities, and clouds, with cluttered backgrounds and severe noise, making it well suited to verifying small target detection methods.

3.2. Experimental Preparation and Evaluation Method

We conduct ablation experiments and multi-algorithm comparison experiments with PFFNet on the open SIRST Aug and IRSTD 1k datasets.

We use classic semantic segmentation evaluation metrics: the F1-score, the receiver operating characteristic (ROC) curve, and Intersection over Union (IoU). The F1-score measures the trade-off between precision and recall, meaning that the network must detect targets while producing as few false alarms as possible. The ROC curve is a qualitative indicator that reflects the relationship between the target detection rate ($P_d$) and the false alarm rate ($P_f$). Precision, recall, $P_d$, and $P_f$ are defined as follows:

where TP is the number of target pixels correctly predicted as target, FP is the number of background pixels incorrectly predicted as target, FN is the number of target pixels incorrectly classified as background, and N is the total number of pixels in the image.
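The pixel counts above translate into metrics as in the following NumPy sketch. It assumes the standard pixel-level definitions (precision = TP/(TP+FP), recall = TP/(TP+FN), F1 as their harmonic mean, IoU = TP/(TP+FP+FN)), takes $P_d$ as the pixel-level recall and $P_f$ as FP/N, and binarizes the confidence map with a threshold of 0.5; these choices and the name `pixel_metrics` are assumptions, not the paper's exact formulas.

```python
import numpy as np

def pixel_metrics(confidence: np.ndarray, label: np.ndarray, threshold: float = 0.5) -> dict:
    """Pixel-level precision, recall, Pd, Pf, F1 and IoU for one image."""
    pred = confidence > threshold            # threshold segmentation of the confidence map
    gt = label.astype(bool)
    tp = int(np.sum(pred & gt))              # target pixels predicted as target
    fp = int(np.sum(pred & ~gt))             # background pixels predicted as target
    fn = int(np.sum(~pred & gt))             # target pixels predicted as background
    n = gt.size                              # total number of pixels N
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "pd": recall,
            "pf": fp / n, "f1": f1, "iou": iou}

label = np.zeros((4, 4))
label[1:3, 1:3] = 1                          # 4 target pixels
confidence = np.full((4, 4), 0.1)
confidence[1:3, 1:4] = 0.9                   # 6 predicted pixels: TP = 4, FP = 2, FN = 0
print(pixel_metrics(confidence, label))
```

Sweeping `threshold` and recording (`pf`, `pd`) pairs is one way to trace the ROC curve used in the comparisons.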

F1-score and IoU can be defined as:

PFFNet is implemented in PyTorch. The optimizer is stochastic gradient descent (SGD) with momentum and weight decay set to 0.9 and 0.0001, respectively. The initial learning rate is 0.05, and a poly decay strategy is used. On SIRST Aug, the batch size is 32 and the model is trained for 30 epochs; on IRSTD 1k, the batch size is 8 and the model is trained for 150 epochs. For hardware, we use a Tesla P100 GPU for training and an RTX 3060 GPU for inference.
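The poly decay strategy is commonly written as lr = base_lr × (1 − iter/max_iter)^power. The sketch below assumes power = 0.9 (a frequent default that the text does not state), per-iteration updates, and that the last partial batch of each epoch is kept; only the initial rate of 0.05, the batch size, the epoch count, and the SIRST Aug training-set size come from this paper, and `poly_lr` is a hypothetical helper. Recent PyTorch versions provide a comparable schedule as `torch.optim.lr_scheduler.PolynomialLR`.

```python
def poly_lr(base_lr: float, it: int, max_it: int, power: float = 0.9) -> float:
    """Polynomial ("poly") learning-rate decay from base_lr to 0 over max_it steps."""
    return base_lr * (1.0 - it / max_it) ** power

# SIRST Aug: 8525 training images, batch size 32, 30 epochs
iters_per_epoch = -(-8525 // 32)   # ceil division: 267, assuming the last partial batch is kept
max_it = 30 * iters_per_epoch
print(poly_lr(0.05, 0, max_it))            # 0.05
print(poly_lr(0.05, max_it // 2, max_it))  # about 0.0268
```

The rate falls smoothly to zero at the final iteration, so no separate stopping rule for the learning rate is needed.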

3.3. Comparative Experiments

We compared PFFNet with four classic methods of different types. The results in [18,21] show that data-driven methods are superior to model-driven methods; therefore, only data-driven methods are compared in our experiments. Among data-driven schemes, we select AGPCNet, ACM, MDFA, and ALC for comparison with PFFNet-R (PFFNet with a ResNet-18 [31] backbone) and PFFNet-S (PFFNet with a Swin Transformer v1 [32] backbone). In Table 1, the best and second-best values in each column are marked in bold and underlined, respectively. PFFNet-R achieves the best results on both datasets, and it performs better on SIRST Aug than on IRSTD 1k. This is because IRSTD 1k contains more challenging situations for small infrared target detection, including shape-changing targets, low contrast, and low-SCR backgrounds with clutter and noise. Nevertheless, because the FSM and PFM modules effectively aggregate high- and low-level features as well as cross-layer features, PFFNet still achieves the best results. In addition, to evaluate detection speed, we computed the average running time of each method on 1,000 infrared images of size 256×256. PFFNet is 6 ms slower than ACM, but it achieves better detection results and is fast enough for real-time detection of drone targets.
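Average per-image running times like these can be measured with a small harness. The sketch below is generic: `infer` stands for any callable that processes one image (a hypothetical placeholder, here `np.sum` on dummy 256×256 arrays), a few warm-up runs are excluded, and for GPU models the device must also be synchronized before reading the clock (for example with `torch.cuda.synchronize()` in PyTorch), which this framework-free version omits.

```python
import time
from typing import Callable, Sequence

import numpy as np

def average_latency_ms(infer: Callable, images: Sequence, warmup: int = 10) -> float:
    """Mean wall-clock time per image in milliseconds, excluding warm-up runs."""
    for img in images[:warmup]:
        infer(img)                  # warm-up: caches, lazy initialisation, etc.
    start = time.perf_counter()
    for img in images:
        infer(img)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / len(images)

# 1,000 dummy 256x256 frames (one shared array) and a stand-in "model"
images = [np.zeros((256, 256), dtype=np.float32)] * 1000
ms = average_latency_ms(np.sum, images)
print(f"{ms:.3f} ms per image")
```

Timing the whole loop and dividing by the image count, rather than timing each call, reduces the overhead of the clock itself.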

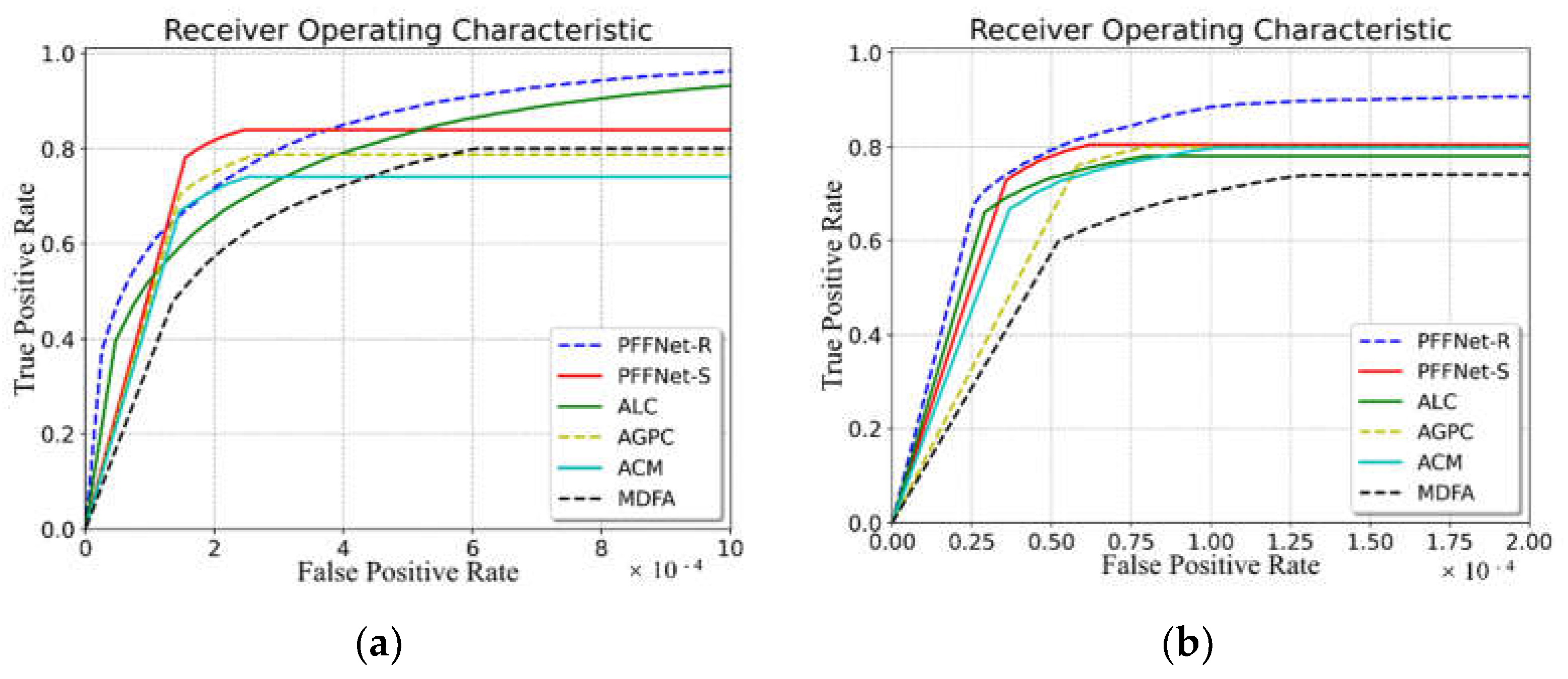

Furthermore, to compare the AUC visually, the ROC curves of these methods on the two datasets are shown in Figure 6. These results indicate that PFFNet effectively suppresses the background. The method learns highly discriminative semantic features from diverse training data to achieve robust detection results, and it segments targets more accurately than the other state-of-the-art methods.

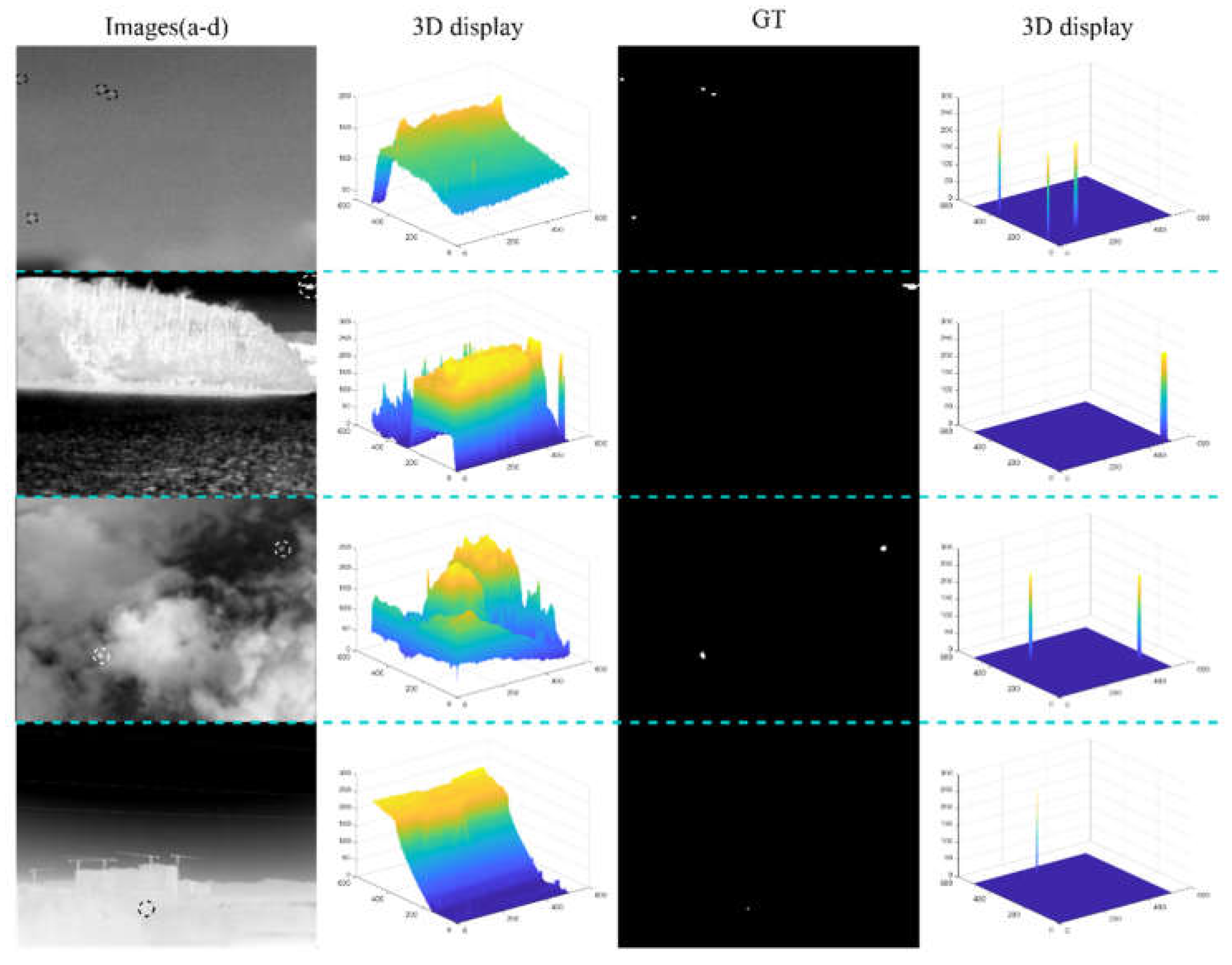

The experimental results are further analyzed in Figure 7, which shows the target masks and their 3D displays in four representative scenes. Images a and d contain multiple drone targets but have low local contrast between target and background. Images b and c have higher local contrast, but their backgrounds are relatively complex.

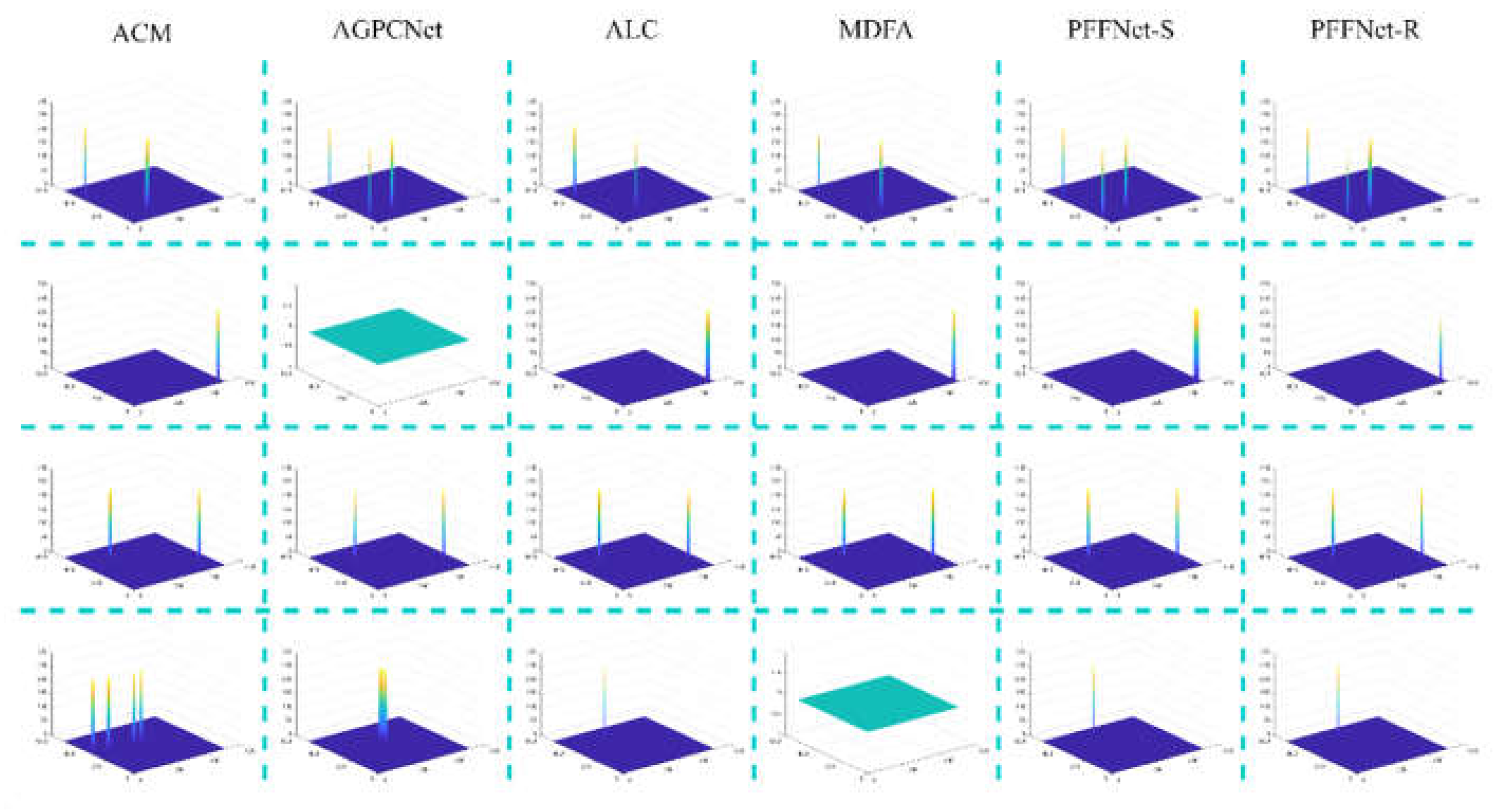

We perform target detection on these four scenes, and the output 3D displays of six different algorithms are shown in Figure 8. Figure 7a,d contain a lot of noise similar to the target. ACM and MDFA cannot make correct predictions, whereas PFFNet clearly distinguishes the target from the background. Our model also performs well on the multiple targets in Figure 7a, where ALC cannot distinguish all targets. The overall performance of ALC is good, but it may produce some false alarms under high noise levels or low SCR. PFFNet-S performs similarly to PFFNet-R; the former captures the target contour information and the latter locates the target's specific position. These results show that PFFNet achieves the best detection results across the various scenes.

3.4. Ablation Experiments

To verify the detection performance and speed of PFFNet, we conducted ablation experiments with different settings on the SIRST Aug dataset. The effectiveness of the FSM, the PFM, and the segmentation head was verified by controlling whether each was added to a Swin Transformer-v1 [32] backbone. To prevent overfitting caused by excessive parameters, the last stage of Swin Transformer-v1 was removed, leaving only three downsampling stages, and pre-trained weights were used. Since FSM and PFM build on the Head, the backbone plus head was selected as the base network for the ablation experiments. In addition, ResNet-18 [31] was also used as a backbone to verify the portability of PFFNet. As shown in Table 2, under the same base network, adding FSM, PFM, and the Head improved performance on the dataset. Moreover, PFFNet still achieved good results with different feature extraction networks.

Within the FSM, separate experiments were conducted to verify the effectiveness of the CSM and LSM, as shown in Table 3. Swin Transformer-v1 and the head were used as the base network, and the CSM and LSM modules were added separately. Both yield clear performance improvements, especially the LSM: by enhancing the response weights of visually salient local regions, it obtains the position information of the target and improves the overall performance of the network.

Dimensionality reduction can reduce redundant information and greatly accelerate network training, but it may also discard useful information. This paper tests different dimensionality reduction ratios to find the best setting for segmenting small infrared targets. In PFFNet, dimensionality reduction is performed twice, once in the FSM and once in the PFM, with reduction ratios denoted $(r_f, r_p)$. Following [28], we set $r_f = 8$. As shown in Table 4, the best result was obtained with $(r_f = 8, r_p = 4)$. Although performance varies with different parameter settings, the overall change is not significant.

Table 5 shows the inference time of each module for the two feature extractors, averaged over 1000 infrared images of size 256×256; PFFNet-S has the shortest inference time. The total inference time differs between the two variants because of their different backbones, but the difference for each submodule is very small. Table 5 shows that PFFNet has good real-time performance. In general, the experiments show that PFFNet is a lightweight network that can quickly and accurately detect infrared drone targets.

4. Conclusions

This paper proposes a fast detection method for infrared small targets, the Progressive Feature Fusion Network (PFFNet). To address the loss of target-area response values during downsampling of drone targets, the FSM is proposed. It fully fuses deep features carrying high-level semantic information with shallow features carrying rich image contour and location information, achieving information exchange between downsampling layers. Then, the PFM is proposed to integrate deep features and enhance the high-frequency response amplitude from a multi-scale perspective, addressing the weakened representation of small-target features in deep networks. Meanwhile, a lightweight segmentation head suited to infrared small targets is designed to progressively fuse low-level and high-level semantics, which also improves the utilization of features from the downsampling layers. Finally, module comparisons on two datasets of different complexity demonstrate the effectiveness of each module. The practicality verification and inference time statistics on the SIRST Aug dataset confirm that PFFNet performs well for fast detection of infrared small targets. In addition, extensive comparison experiments with data-driven algorithms demonstrate, in terms of numerical evaluation, the ability of PFFNet to handle complex scene detection tasks, with better detection performance and shorter inference time.

However, some problems of the algorithm still need further research, such as network overfitting and the use of more efficient contextual information. In future work, we will continue to explore attention mechanisms and fusion structures for infrared drone target detection.

Author Contributions

Conceptualization, C.Z. and Z.H.; methodology, Z.H.; software, Z.H.; validation, Z.H., K.Q. and M.Y.; formal analysis, M.Y.; investigation, H.F.; resources, C.Z.; data curation, C.Z.; writing—original draft preparation, Z.H.; writing—review and editing, C.Z.; visualization, Z.H.; supervision, C.Z.; project administration, C.Z.; funding acquisition, C.Z. and M.Y.

Funding

This research was funded by Liaoning Provincial Department of Education, grant number LJKMZ20220605.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. Original data can be obtained by contacting the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kapoulas, I.K.; Hatziefremidis, A.; Baldoukas, A.K.; Valamontes, E.S.; Statharas, J.C. Small Fixed-Wing UAV Radar Cross-Section Signature Investigation and Detection and Classification of Distance Estimation Using Realistic Parameters of a Commercial Anti-Drone System. Drones 2023, 7, 39. [Google Scholar] [CrossRef]

- Wang, C.; Meng, L.; Gao, Q.; Wang, J.; Wang, T.; Liu, X.; Du, F.; Wang, L.; Wang, E. A Lightweight Uav Swarm Detection Method Integrated Attention Mechanism. Drones 2022, 7, 13. [Google Scholar] [CrossRef]

- Bai, X.; Zhou, F. Analysis of new top-hat transformation and the application for infrared dim small target detection. Pattern Recognition. 2010, 43, 2145–2156. [Google Scholar] [CrossRef]

- Chang B, Meng L, Haber E, Ruthotto L, Begert D, Holtham E. Reversible Architectures for Arbitrarily Deep Residual Neural Networks. Published online November 18, 2017. [CrossRef]

- Rivest JF, Fortin R. Detection of dim targets in digital infrared imagery by morphological image processing. OE. 1996;35(7):1886-1893. [CrossRef]

- Chen CLP, Li H, Wei Y, Xia T, Tang YY. A Local Contrast Method for Small Infrared Target Detection. IEEE Transactions on Geoscience and Remote Sensing. 2014;52(1):574-581. [CrossRef]

- Wei Y, You X, Li H. Multiscale patch-based contrast measure for small infrared target detection. Pattern Recognition. 2016;58:216-226. [CrossRef]

- Han J, Ma Y, Zhou B, Fan F, Liang K, Fang Y. A Robust Infrared Small Target Detection Algorithm Based on Human Visual System. IEEE Geoscience and Remote Sensing Letters. 2014;11(12):2168-2172. [CrossRef]

- Han J, Moradi S, Faramarzi I, Liu C, Zhang H, Zhao Q. A Local Contrast Method for Infrared Small-Target Detection Utilizing a Tri-Layer Window. IEEE Geoscience and Remote Sensing Letters. 2020;17(10):1822-1826. [CrossRef]

- Zhang L, Peng Z. Infrared Small Target Detection Based on Partial Sum of the Tensor Nuclear Norm. Remote Sensing. 2019;11(4):382. [CrossRef]

- Zhu H, Liu S, Deng L, Li Y, Xiao F. Infrared Small Target Detection via Low-Rank Tensor Completion With Top-Hat Regularization. IEEE Transactions on Geoscience and Remote Sensing. 2020;58(2):1004-1016. [CrossRef]

- Dai Y, Wu Y, Song Y, Guo J. Non-negative infrared patch-image model: Robust target-background separation via partial sum minimization of singular values. Infrared Physics & Technology. 2017;81:182-194. [CrossRef]

- Fu, J.; Liu, J.; Tian, H.; et al. Dual Attention Network for Scene Segmentation. Published online April 21, 2019.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Wang, C.; Shi, Z.; Meng, L.; Wang, J.; Wang, T.; Gao, Q.; Wang, E. Anti-Occlusion UAV Tracking Algorithm with a Low-Altitude Complex Background by Integrating Attention Mechanism. Drones 2022, 6, 149.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Asymmetric Contextual Modulation for Infrared Small Target Detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2021; pp. 950–959.

- Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Attentional Local Contrast Networks for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9813–9824.

- Wang, H.; Zhou, L.; Wang, L. Miss Detection vs. False Alarm: Adversarial Learning for Small Object Segmentation in Infrared Images. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2019; pp. 8509–8518.

- Zhang, T.; Li, L.; Cao, S.; Pu, T.; Peng, Z. Attention-Guided Pyramid Context Networks for Detecting Infrared Small Target Under Complex Background. 2023; pp. 1–13.

- Cheng, Q.; Wang, H.; Zhu, B.; Shi, Y.; Xie, B. A Real-Time UAV Target Detection Algorithm Based on Edge Computing. Drones 2023, 7, 95.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features from Cheap Operations. arXiv March 13, 2020. arXiv:1911.11907.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2018; pp. 122–138.

- Xiong, Y.; Liu, H.; Gupta, S.; Akin, B.; Bender, G.; Wang, Y.; Kindermans, P.J.; Tan, M.; Singh, V.; Chen, B. MobileDets: Searching for Object Detection Architectures for Mobile Accelerators. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 3824–3833.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv July 18, 2018. arXiv:1807.06521.

- Chen, Y.; Li, L.; Liu, X.; Su, X. A Multi-Task Framework for Infrared Small Target Detection and Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–9.

- Zhang, M.; Zhang, R.; Yang, Y.; Bai, H.; Zhang, J.; Guo, J. ISNet: Shape Matters for Infrared Small Target Detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, June 2022; pp. 867–876.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv December 10, 2015. arXiv:1512.03385.

- Liu, Z.; Lin, Y.; Cao, Y.; et al. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. arXiv August 17, 2021. arXiv:2103.14030 (accessed on February 20, 2023).


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).