Submitted: 02 May 2023

Posted: 03 May 2023


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Experimental Data

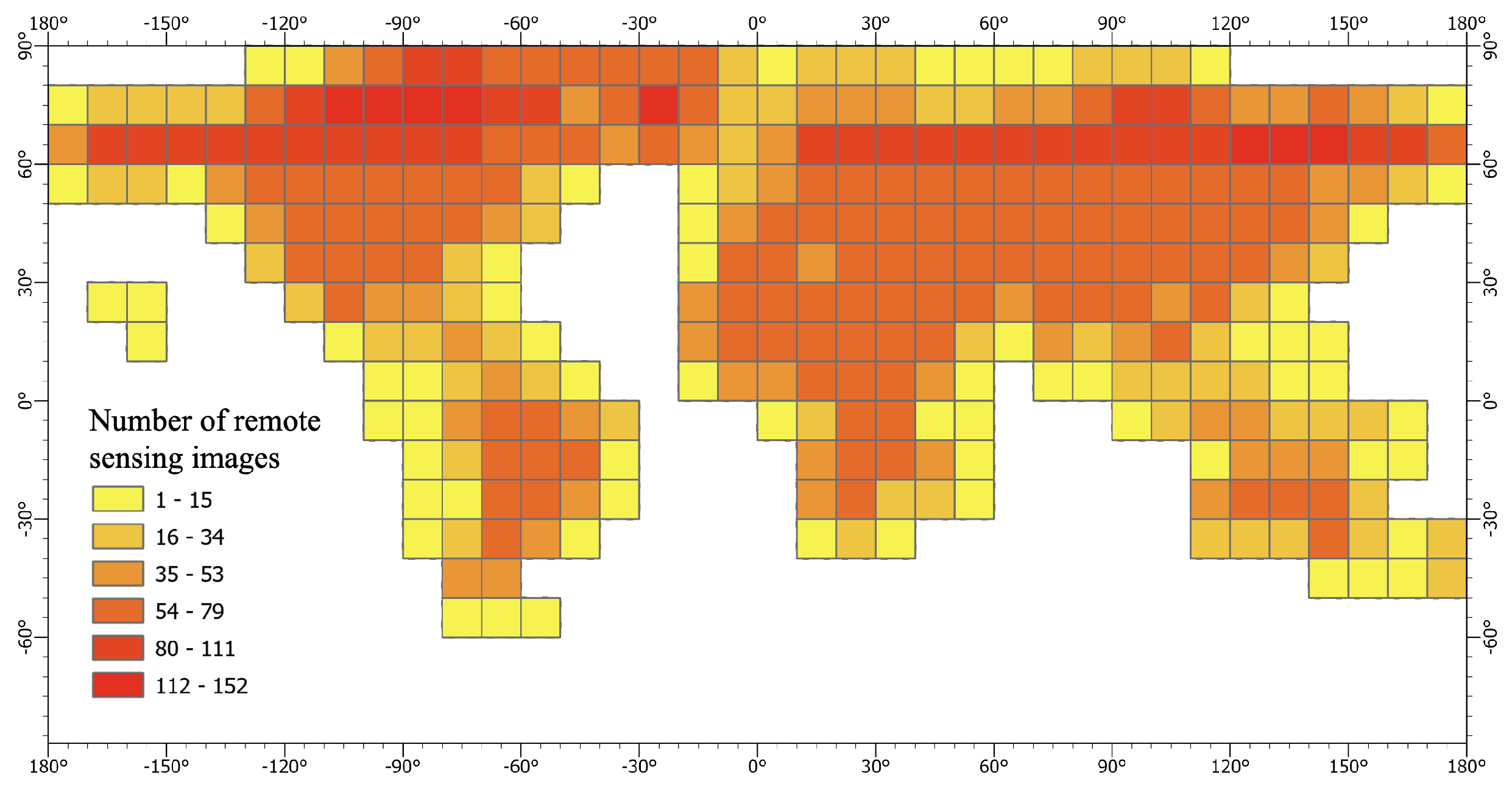

2.1.1. Landsat 8

2.1.2. GDEM v3

2.1.3. GLC products

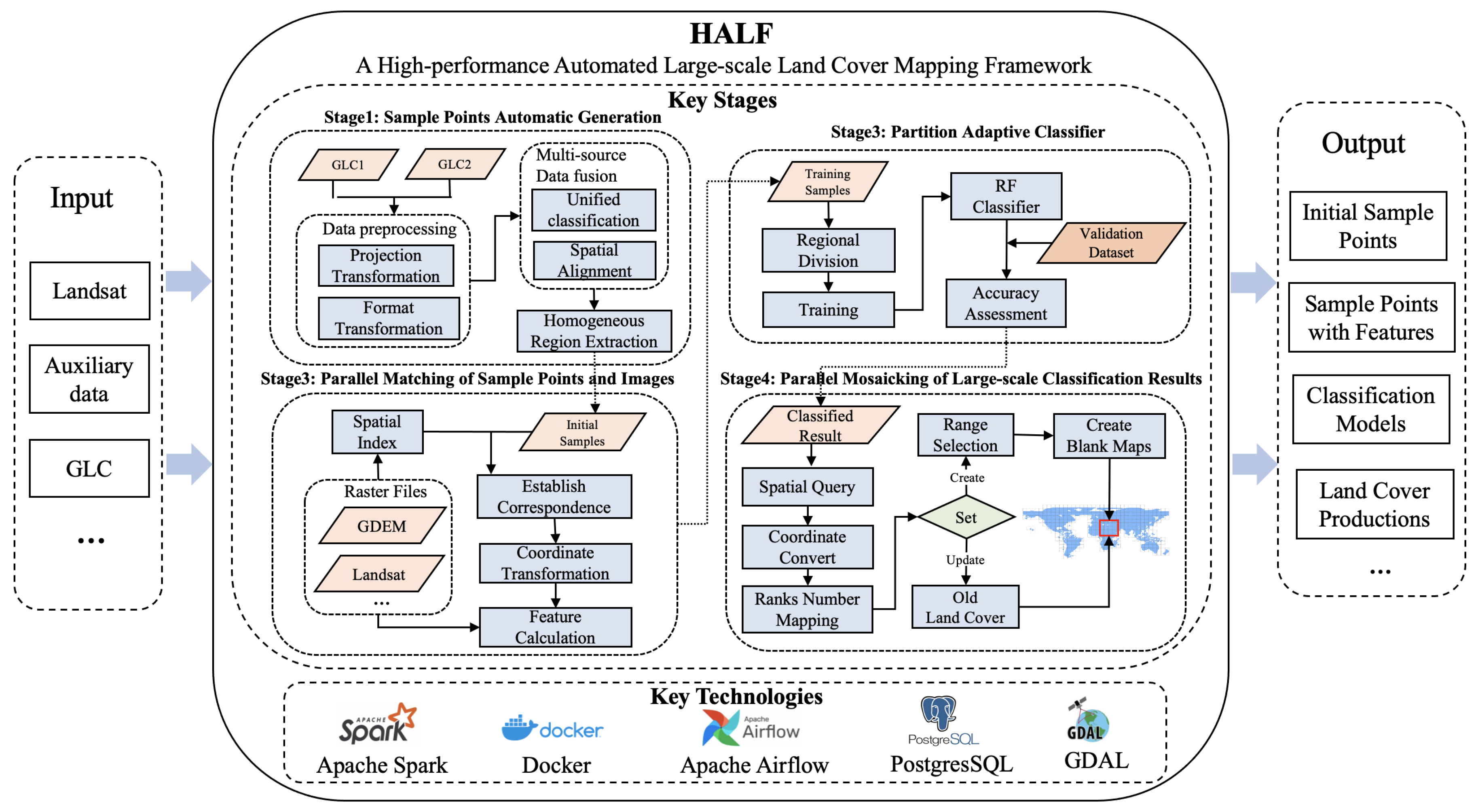

2.2. Framework Design

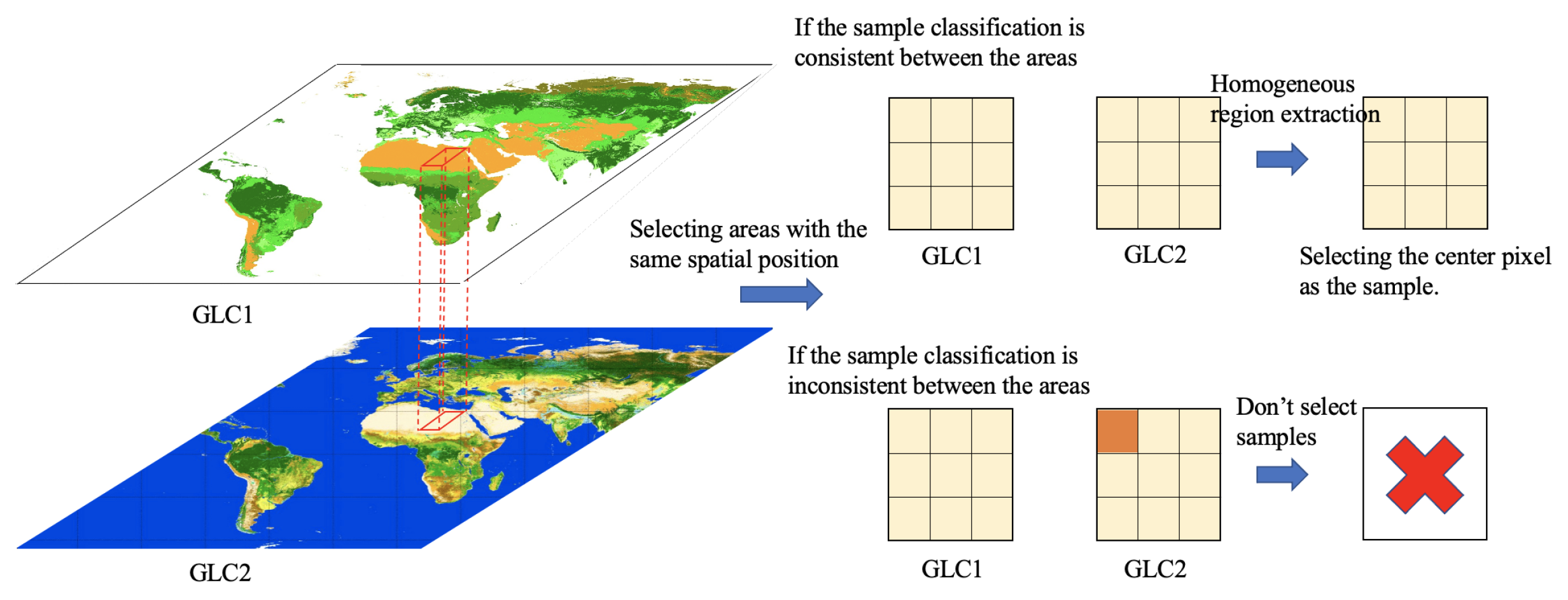

2.2.1. Stage 1: Automatic Generation of Sample Points

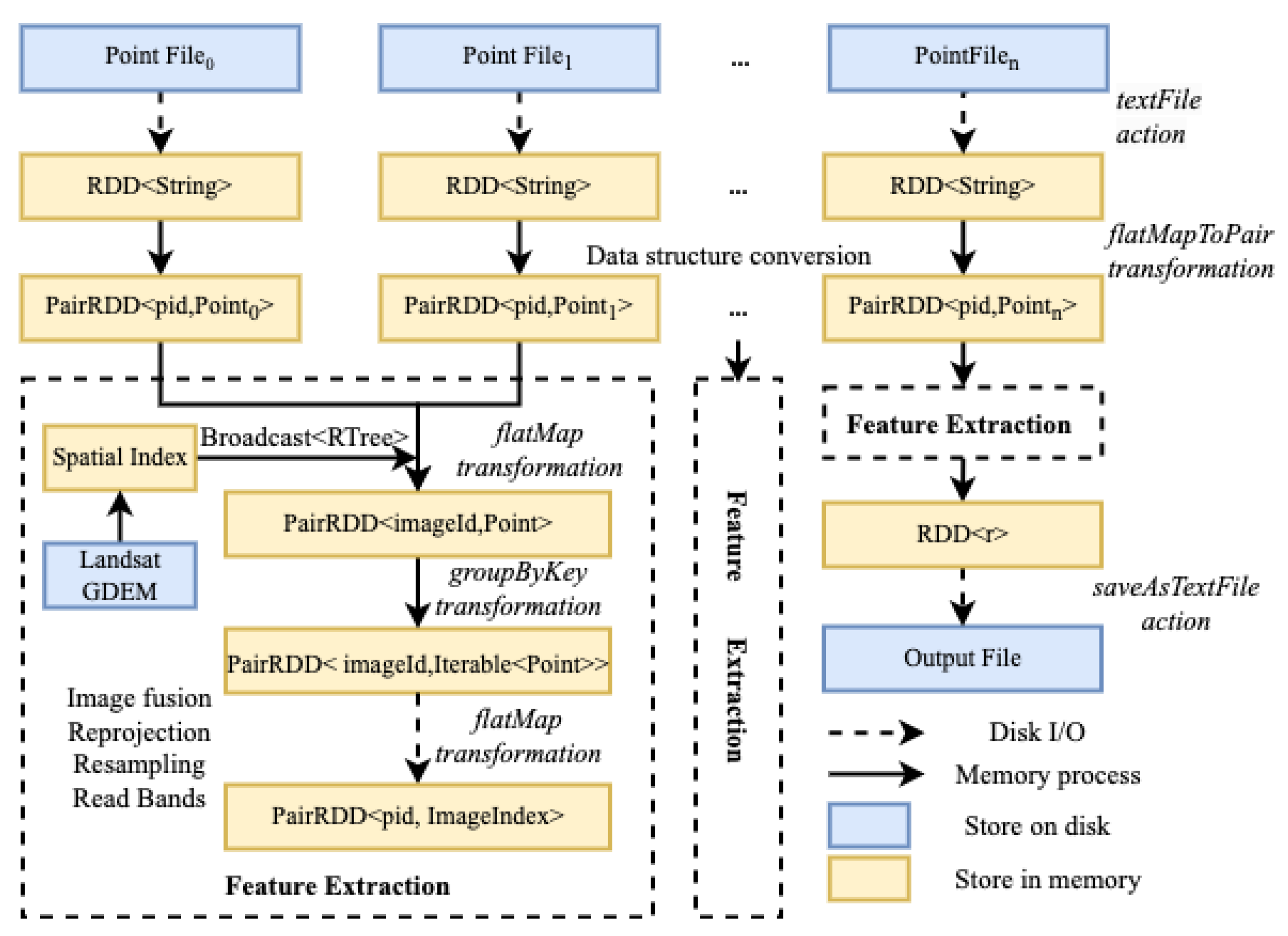

2.2.2. Stage 2: Parallel Matching of Sample Points and Images

- Read all the center points to be matched into memory. The longitude and latitude of each point form a unique identifier pid, its spatial and attribute information form a spatial point object, and the two are combined into a tuple Tuple<pid, Point>;

- Read the Landsat image metadata, build an STRtree spatial index from the boundary coordinates of each image, and send the index to every node as a Spark Broadcast variable. A Broadcast variable keeps one read-only copy on each node, so the index does not have to be transferred over the network again each time a task queries it;

- Distribute the computing tasks by rid, the identifier of the image covering each point. Because images are allocated unevenly to computing nodes, task distribution can suffer from data skew; to address this, a custom partitioning strategy is developed and the groupByKey operator is used to spread the data as evenly as possible across all computing nodes;

- For each image, operations are performed in parallel using GDAL: image fusion, reprojection, and resampling are applied, and the row and column numbers of each sample point on the remote sensing image are computed from its longitude and latitude. Sample points that are covered by clouds for most of the year or have poor quality are discarded. Feature indicators are calculated for the points that meet the quality criteria, and each data point is output together with its corresponding match.
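The matching steps above can be sketched in plain Python so that the logic runs without a cluster. This is an illustration under stated assumptions, not the authors' implementation: a uniform-grid bounding-box index stands in for the STRtree, the generator `match_points` plays the role of a flatMap that emits (rid, point) pairs, and `balanced_partitions` is one possible custom partitioning strategy (a greedy largest-group-first heuristic), not necessarily the one used in HALF. In PySpark the index would typically be shared with `sc.broadcast(index)` and the rid-to-partition mapping applied with `rdd.partitionBy(num_partitions, lambda rid: assignment[rid])`. The helper names, the `ImageMeta` fields and the pid format are hypothetical. Because Landsat scenes are typically distributed in a UTM projection, point coordinates would first be reprojected (for example with GDAL's `osr.CoordinateTransformation`) before `to_pixel` inverts the image geotransform.

```python
import heapq
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageMeta:
    rid: str             # hypothetical image identifier (the grouping key)
    bbox: tuple          # footprint (min_lon, min_lat, max_lon, max_lat)
    geotransform: tuple  # GDAL-style (x0, dx, 0, y0, 0, dy); north-up assumed


class GridIndex:
    """Uniform-grid bounding-box index: a simplified stand-in for an STRtree."""

    def __init__(self, images, cell=1.0):
        self.cell = cell
        self.cells = defaultdict(list)
        for img in images:
            x0, y0, x1, y1 = img.bbox
            for cx in range(math.floor(x0 / cell), math.floor(x1 / cell) + 1):
                for cy in range(math.floor(y0 / cell), math.floor(y1 / cell) + 1):
                    self.cells[(cx, cy)].append(img)

    def query(self, lon, lat):
        """Return the images whose footprint contains the point."""
        key = (math.floor(lon / self.cell), math.floor(lat / self.cell))
        return [img for img in self.cells.get(key, [])
                if img.bbox[0] <= lon <= img.bbox[2] and img.bbox[1] <= lat <= img.bbox[3]]


def match_points(points, index):
    """flatMap-style step: emit (rid, (pid, lon, lat)) for every covering image."""
    for lon, lat in points:
        pid = f"{lon:.6f},{lat:.6f}"  # unique id built from the coordinates
        for img in index.query(lon, lat):
            yield img.rid, (pid, lon, lat)


def balanced_partitions(pairs, num_partitions):
    """Group records by rid, then place the largest groups first on the
    least-loaded partition (greedy LPT heuristic) to limit data skew."""
    groups = defaultdict(list)
    for rid, record in pairs:
        groups[rid].append(record)
    heap = [(0, p) for p in range(num_partitions)]  # (current load, partition)
    assignment = {}
    for rid in sorted(groups, key=lambda r: len(groups[r]), reverse=True):
        load, p = heapq.heappop(heap)
        assignment[rid] = p
        heapq.heappush(heap, (load + len(groups[rid]), p))
    return groups, assignment


def to_pixel(geotransform, x, y):
    """Invert a north-up GDAL geotransform; x, y must be in the raster's CRS."""
    x0, dx, _, y0, _, dy = geotransform
    return math.floor((y - y0) / dy), math.floor((x - x0) / dx)  # (row, col)
```

Assigning the largest rid groups first keeps any single partition from receiving several of the most point-dense images, which is the kind of skew the text describes.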

2.2.3. Stage 3: Partition Adaptive Classifier

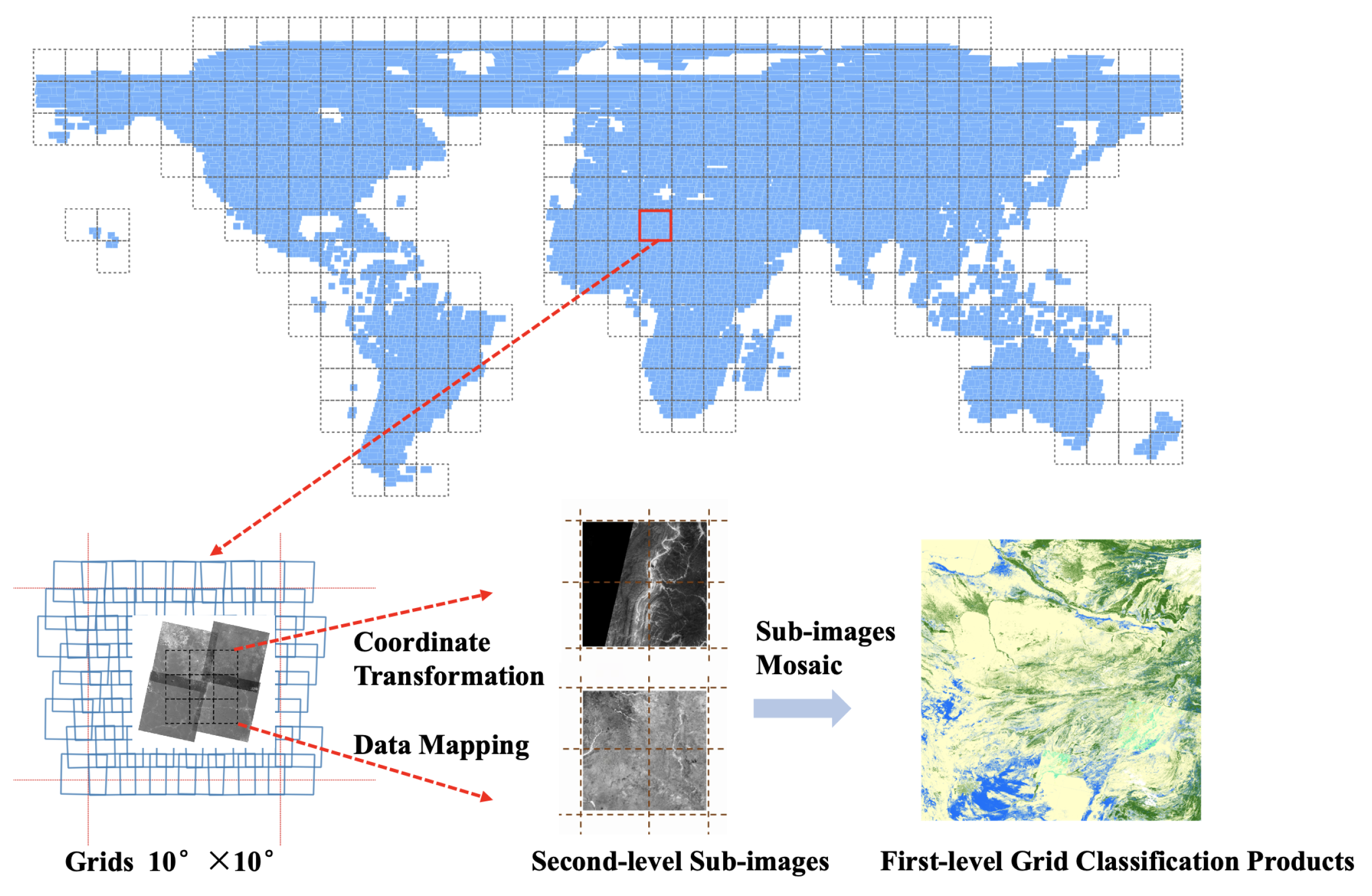

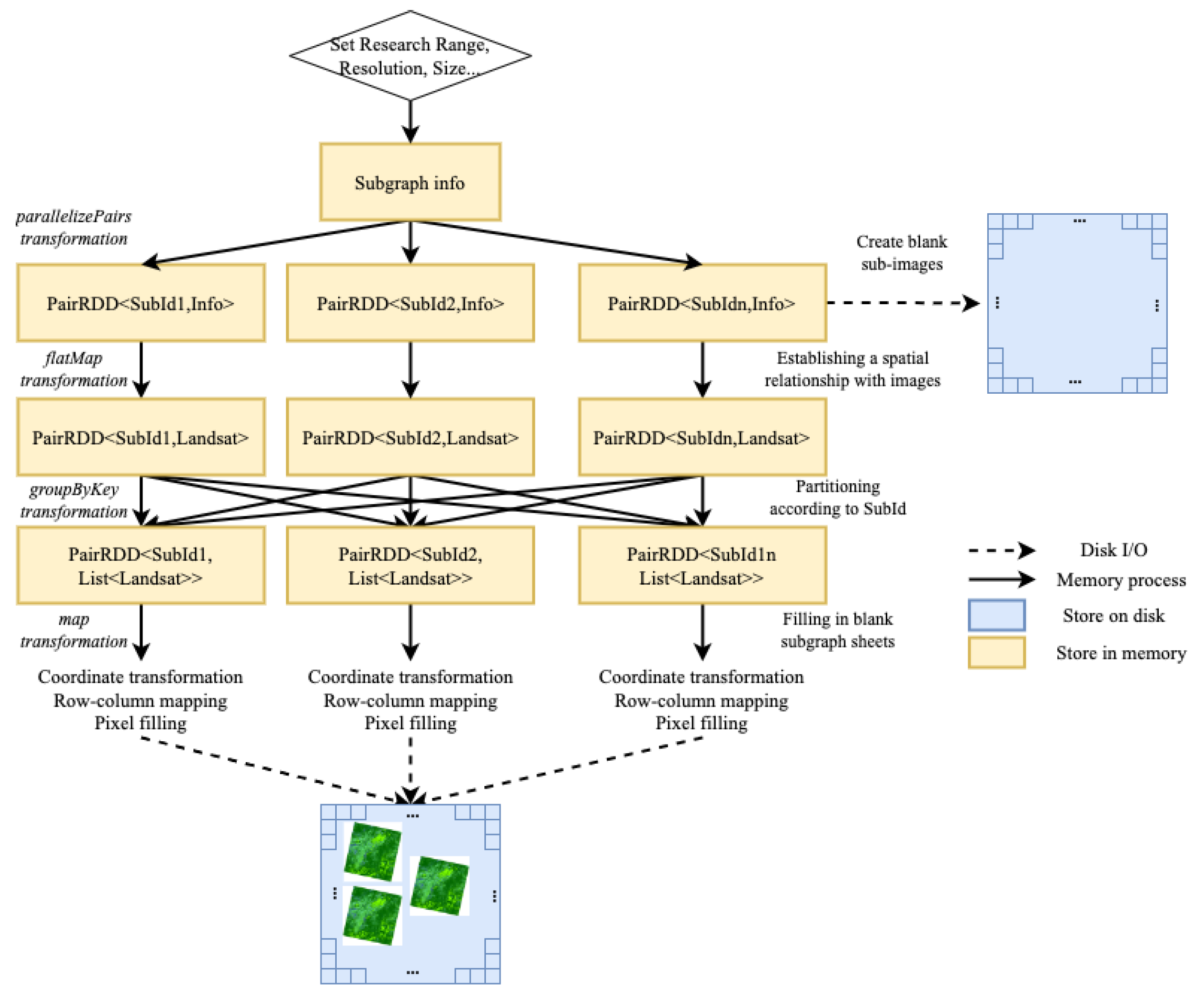

2.2.4. Stage 4: Parallel Mosaicking of Large-scale Classification Results

- Set the spatial extent, resolution, number of bands, and other parameters for the application scenario, then create blank map sheets. For example, a 10°×10° latitude-longitude cell covers a geographic extent of approximately 1100 km × 1100 km near the equator. If the sub-image resolution is set to 100 m and each sub-image is 256 × 256 pixels, this spatial range contains approximately 44 × 44 sub-images. The sub-image metadata, including spatial location and description, is stored in binary format, and a file is created only if it does not already exist;

- Read the image information and use the flatMap operator to match the spatial position of each sub-image against the metadata of the remote sensing images, establishing the spatial correspondence between them. In this step the input is PairRDD<SubId, RasterInfo> and the output is PairRDD<SubId, image>, which describes the correspondence between sub-images and images;

- Use the unique identifier SubId of each sub-image as the key and call the groupByKey operator to obtain PairRDD<SubId, Iterable<image>>, then distribute the tasks. Each task unit fills one sub-image;

- In each task computing unit, perform coordinate transformation and row/column calculation on the image classification results and fill the corresponding pixels of the sub-image. By default, the result from the most recent remote sensing image covering the sub-image is written.
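The map-sheet arithmetic and the flatMap/groupByKey steps above can be sketched in plain Python. This is an illustrative simplification, not the HALF code: sub-images are laid out on a regular grid in degrees from the sheet's upper-left corner, `sub_ids_for` is the kind of function one would pass to flatMap, a dictionary grouping stands in for groupByKey, and the default "latest image wins" rule is applied per sub-image rather than per pixel. The function names, the dict-based image records and the constant of 111.32 km per degree (an equatorial approximation) are assumptions. With that constant a 10° side is about 1,113 km, and 1,113 km / (100 m × 256 px) ≈ 43.5, which rounds up to the 44 sub-images per side quoted above; using exactly 1,100 km would give about 43.

```python
import math
from collections import defaultdict

KM_PER_DEGREE = 111.32  # approximate length of one degree at the equator


def tiles_per_side(extent_deg=10.0, res_m=100.0, tile_px=256):
    """Number of sub-images needed along one side of a square map sheet."""
    side_m = extent_deg * KM_PER_DEGREE * 1000
    return math.ceil(side_m / (res_m * tile_px))


def sub_ids_for(bbox, origin, tile_deg, n):
    """flatMap step: yield the SubId (row, col) of every sub-image a footprint overlaps."""
    min_lon, min_lat, max_lon, max_lat = bbox
    lon0, lat0 = origin  # upper-left corner of the map sheet
    c0 = max(0, math.floor((min_lon - lon0) / tile_deg))
    c1 = min(n - 1, math.floor((max_lon - lon0) / tile_deg))
    r0 = max(0, math.floor((lat0 - max_lat) / tile_deg))
    r1 = min(n - 1, math.floor((lat0 - min_lat) / tile_deg))
    for r in range(r0, r1 + 1):
        for c in range(c0, c1 + 1):
            yield (r, c)


def choose_sources(images, origin, tile_deg, n):
    """Group images by SubId (groupByKey), then keep the latest acquisition."""
    by_sub = defaultdict(list)
    for img in images:
        for sub_id in sub_ids_for(img["bbox"], origin, tile_deg, n):
            by_sub[sub_id].append(img)
    # ISO-8601 date strings compare correctly as plain strings
    return {sub_id: max(imgs, key=lambda i: i["date"])["id"]
            for sub_id, imgs in by_sub.items()}
```

For example, `choose_sources(images, (-70.0, -40.0), 10 / 44, 44)` would assign images to the 44 × 44 sub-images of a sheet whose upper-left corner is at 70°W, 40°S.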

2.3. HALF Workflow

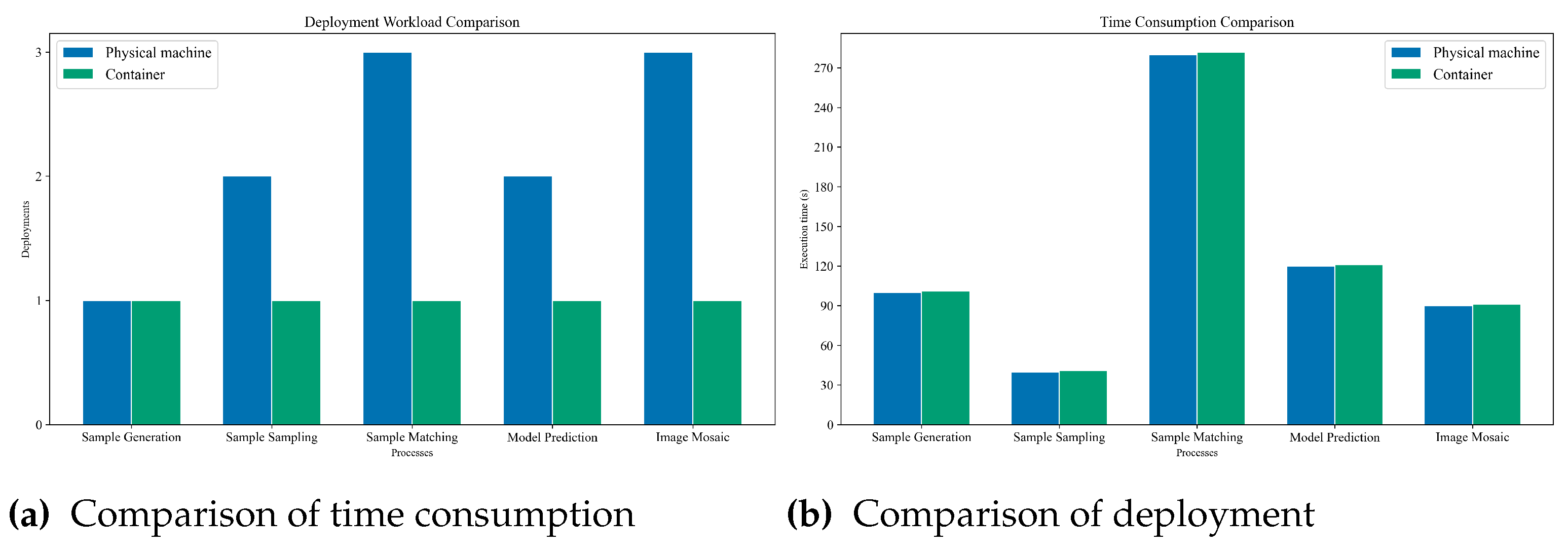

2.4. Experimental Environment

| Images | Description | Base images | Models |

|---|---|---|---|

| landuse-py | Used to run models written in Python | python:3.8.3 | Sample Generation, Classifier Training, Image Classification Prediction |

| gdal-spark | Used to run models written in Java Spark | osgeo/gdal:ubuntu-full-3.6.3 | Sample-Image Matching, Image Mosaicking |

| pg12-citus-postgis | Used to store metadata and run spatial queries | postgres:12.14-alpine3.17 | Building Spatial Index, Spatial Querying |

| end-point | Used to launch the back-end project and provide network services | java:openjdk-8 | Back-end Project |

3. Results

3.1. Model Performance Testing

3.2. Automatically Generated Sample Points

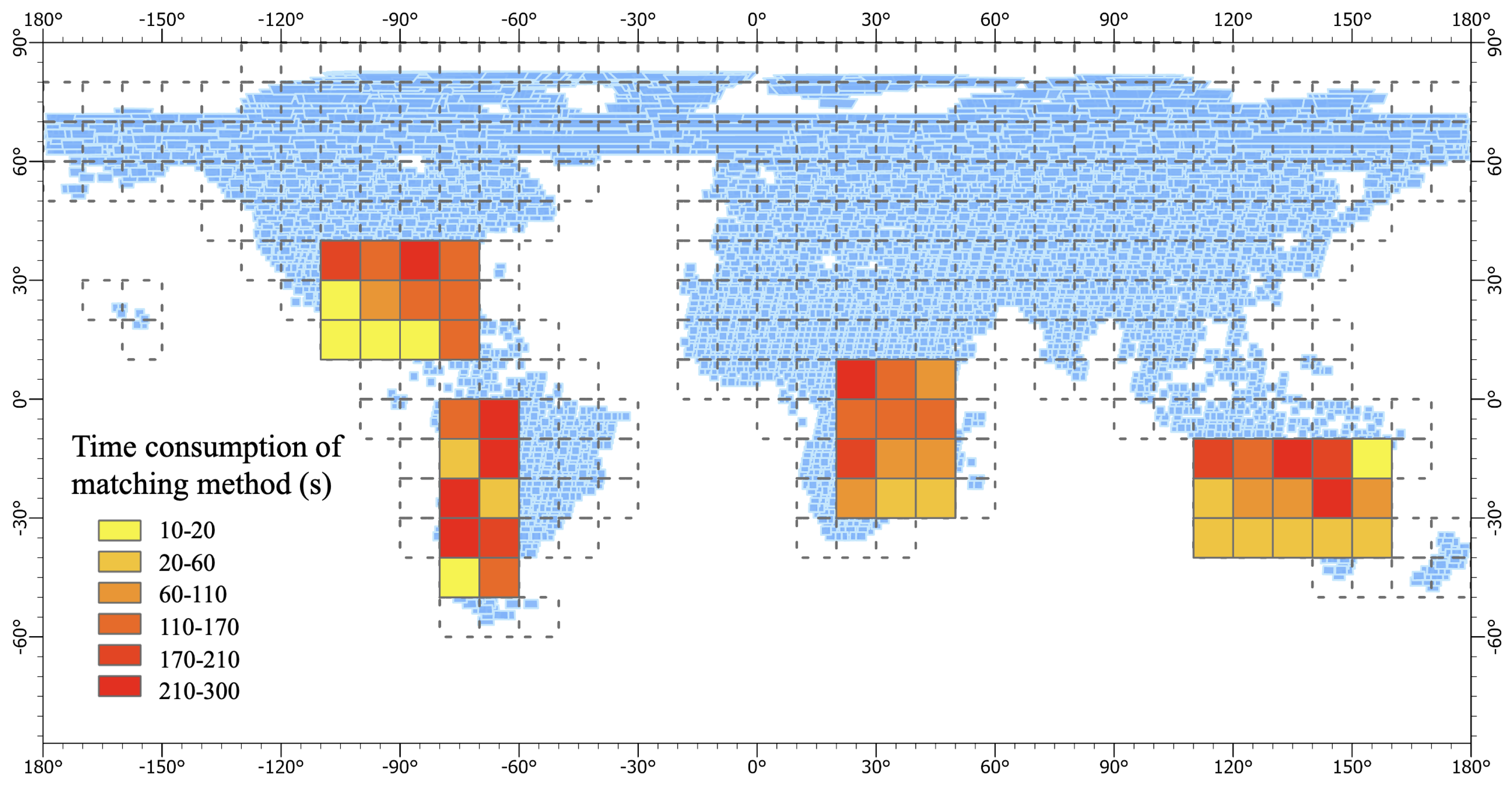

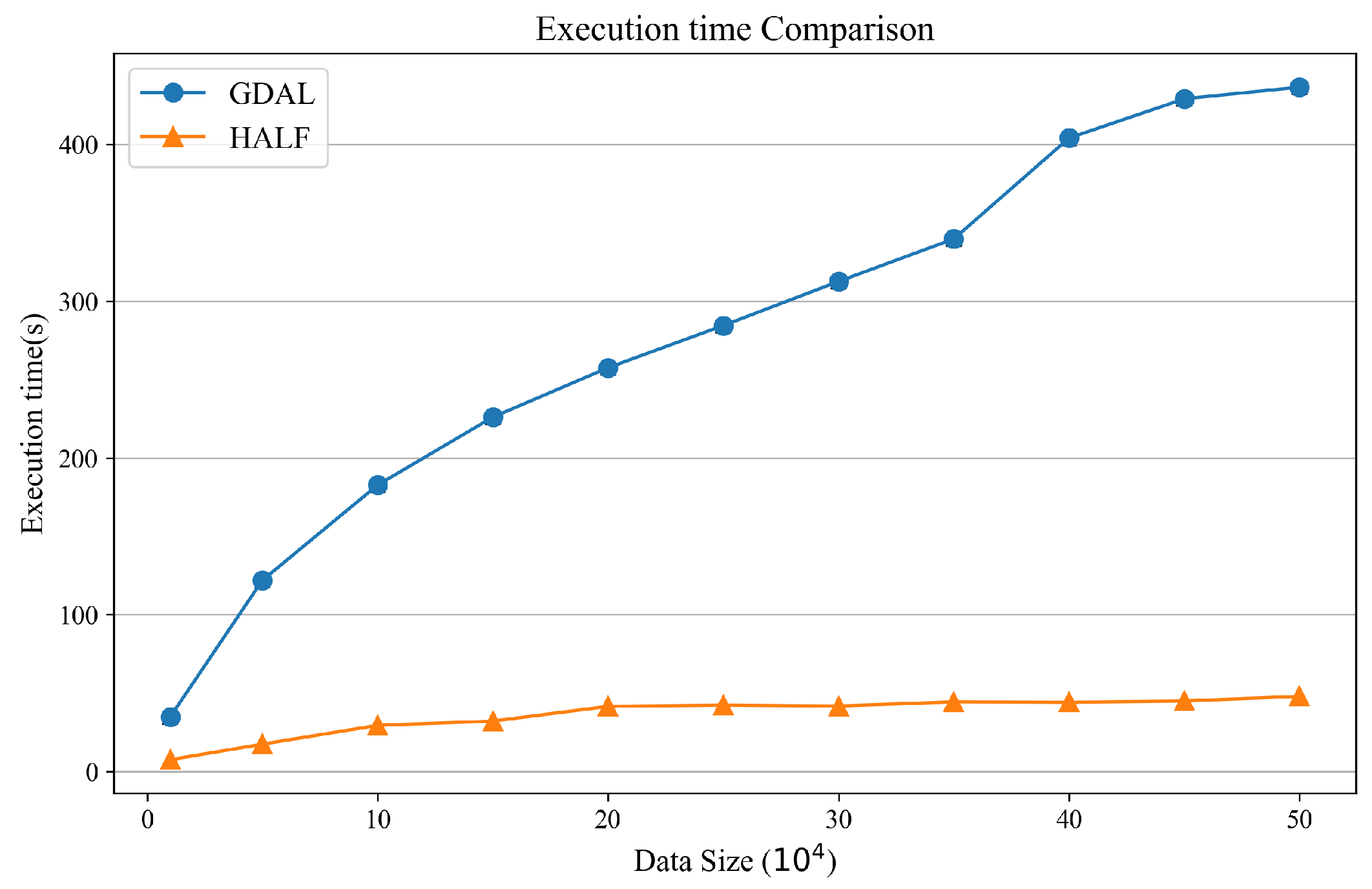

3.3. Performance of Sample Point and Image Matching

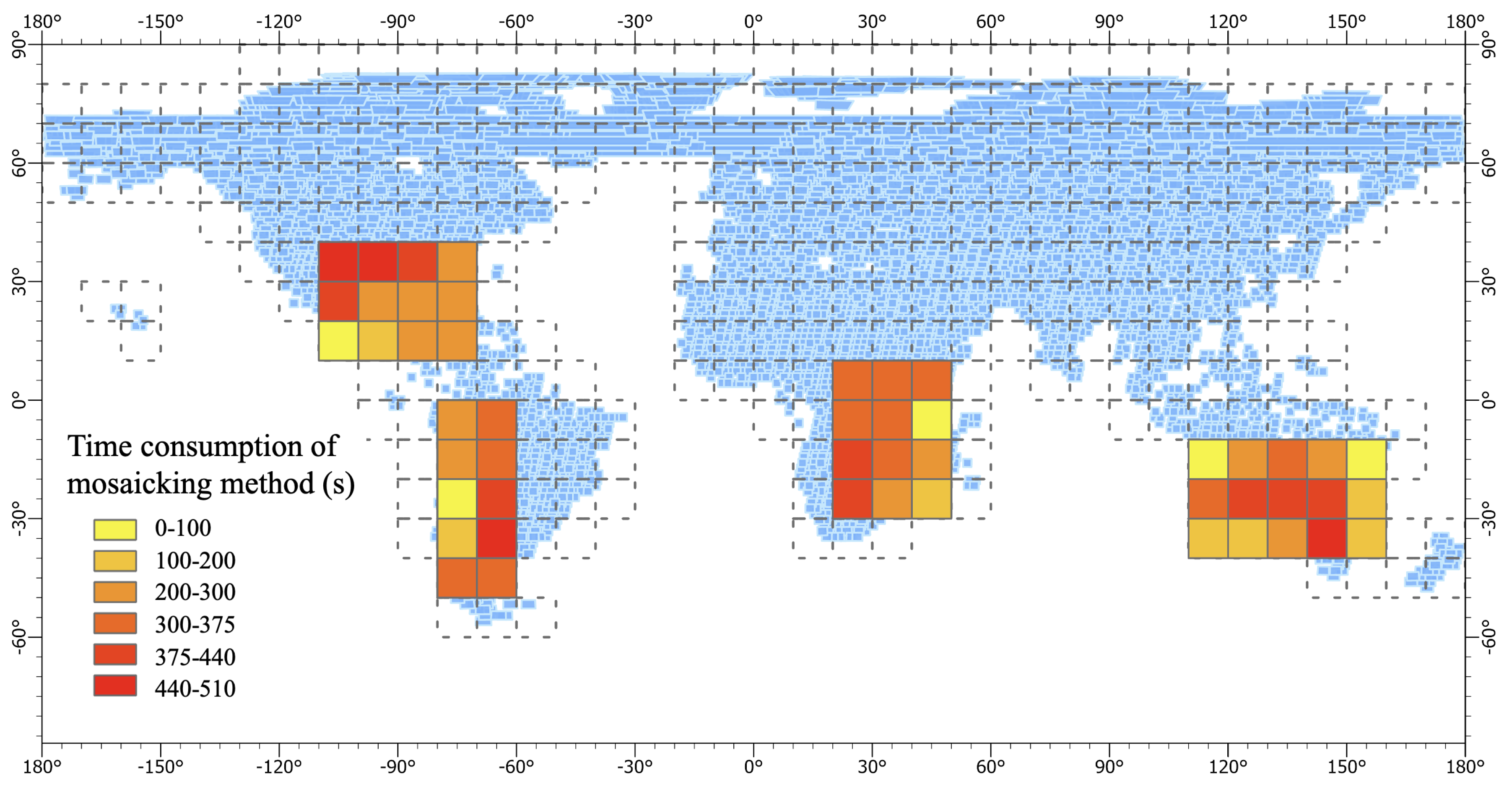

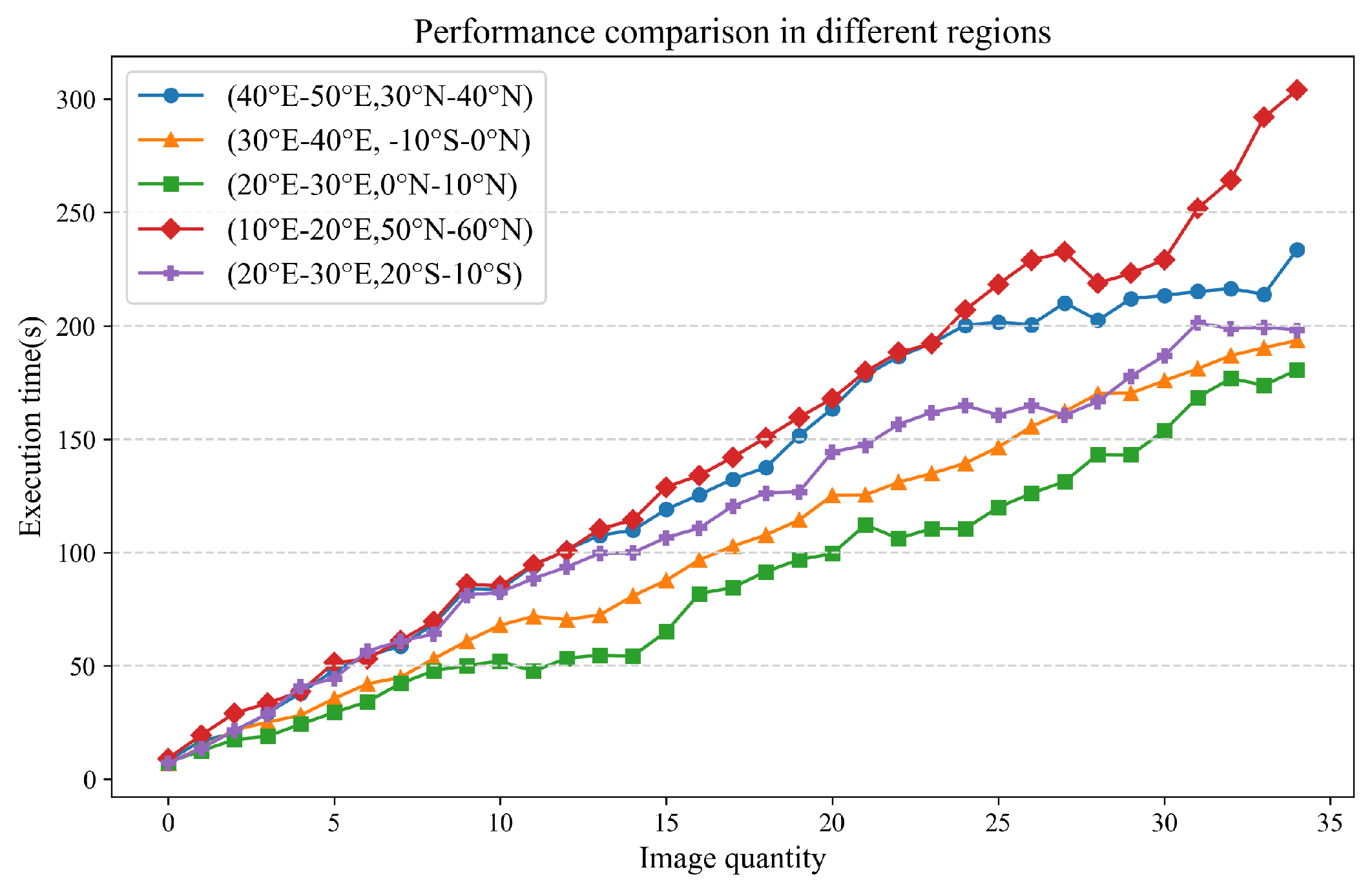

3.4. Performance of Large-scale Classification Result Mosaicking

| Region | Spatial Extent | Number of Images | Number of Samples | Mosaic Time (s) | Match Time (s) |

|---|---|---|---|---|---|

| Region1 | (50°S-40°S, 70°W-60°W) | 43 | 9276097 | 361 | 166.12 |

| Region2 | (40°S-30°S, 70°W-60°W) | 64 | 7557768 | 481 | 204.07 |

| Region3 | (40°S-30°S, 140°E-150°E) | 59 | 6770182 | 468 | 31.20 |

| Region4 | (30°S-20°S, 20°E-30°E) | 64 | 3663073 | 413 | 99.72 |

| Region5 | (30°S-20°S, 140°E-150°E) | 64 | 5716990 | 408 | 233.32 |

| Region6 | (30°S-20°S, 130°E-140°E) | 63 | 6657017 | 417 | 109.19 |

| Region7 | (20°S-10°S, 110°E-120°E) | 7 | 6698112 | 16 | 175.16 |

| Region8 | (20°S-10°S, 20°E-30°E) | 63 | 1675984 | 401 | 182.95 |

| Region9 | (20°S-10°S, 30°E-40°E) | 55 | 4159308 | 324 | 80.81 |

| Region10 | (10°S-0°N, 40°E-50°E) | 13 | 11522471 | 72 | 169.84 |

| Region11 | (30°N-40°N, 80°W-70°W) | 31 | 16008303 | 222 | 143.02 |

| Region12 | (0°N-10°N, 30°E-40°E) | 62 | 1022583 | 359 | 119.86 |

| Region13 | (10°N-20°N, 80°W-70°W) | 35 | 9698301 | 250 | 134.51 |

| Region14 | (20°N-30°N, 90°W-80°W) | 39 | 12549213 | 253 | 146.13 |

| Region15 | (20°N-30°N, 80°W-70°W) | 34 | 11688087 | 224 | 153.62 |

| Region16 | (30°N-40°N, 90°W-80°W) | 63 | 12793479 | 439 | 259.34 |

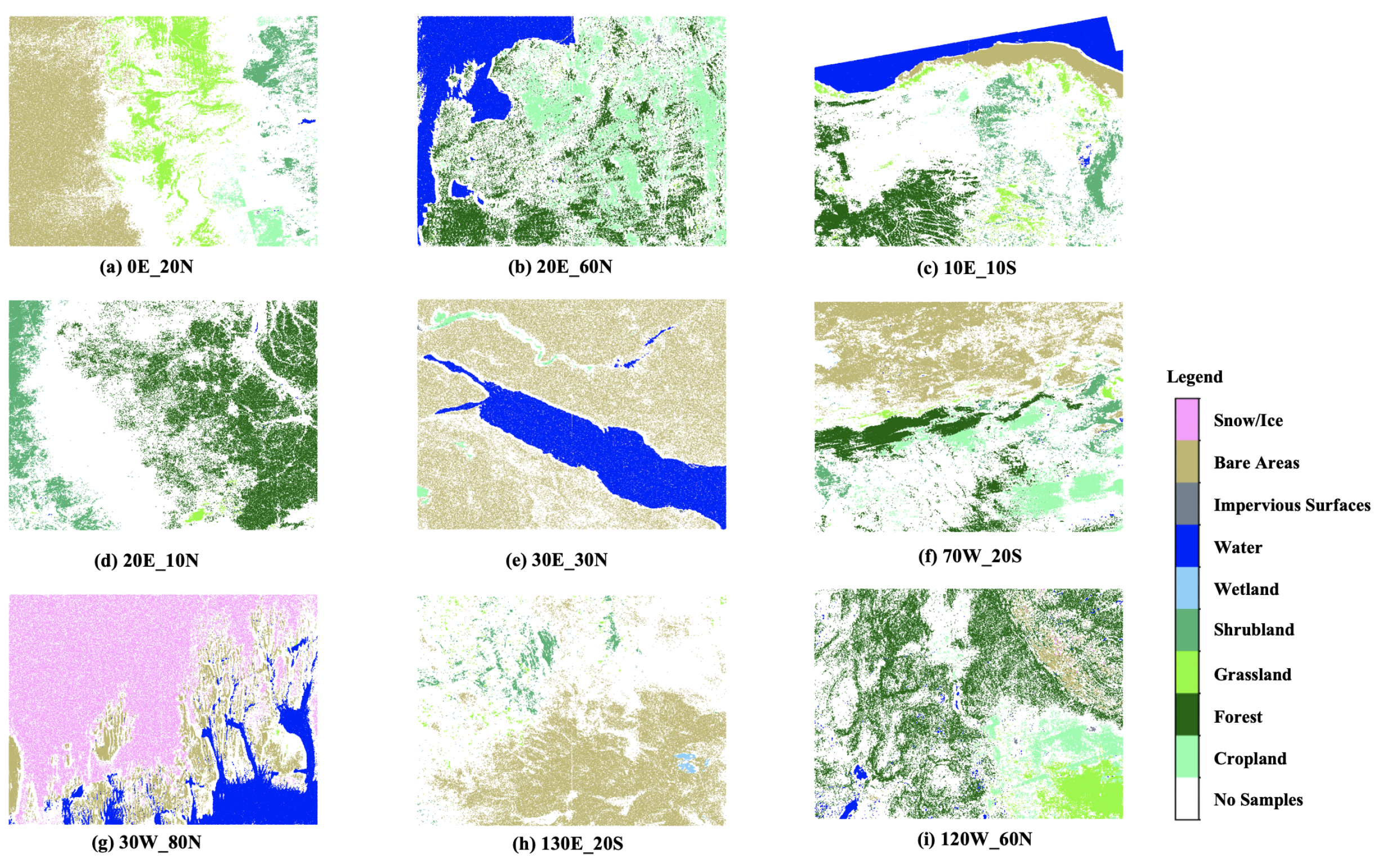

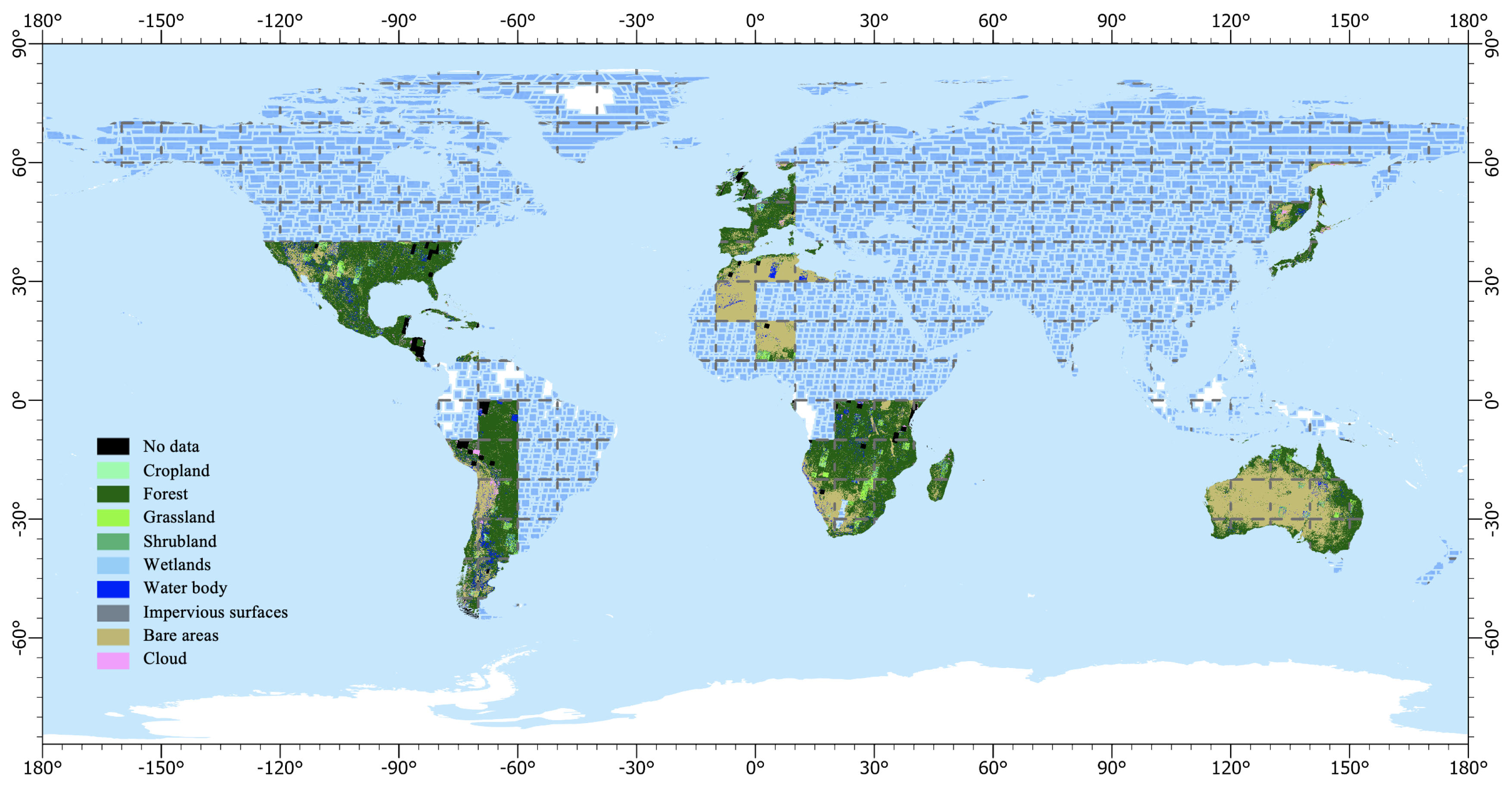

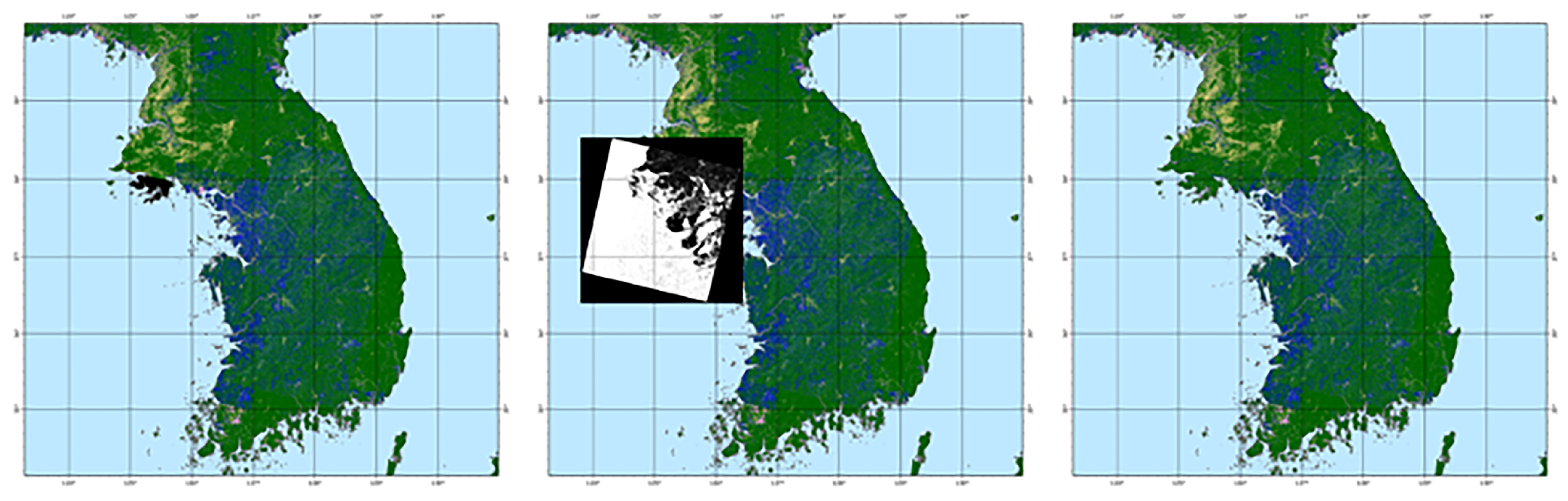

3.5. Result of Mapping

4. Discussion

5. Conclusions


| USGS | CORINE | FROM_GLC | GlobalLand30 | GLC_FCS |

|---|---|---|---|---|

| 1972 | 1985 | 2013 | 2014 | 2021 |

| Forest | Forest and semi-natural areas | Forest | Forest | Forest |

| Agricultural | Agricultural areas | Crop | Cultivated land | Cropland |

| Shrub | Shrubland | Shrubland | ||

| Range | Grass | Grassland | Grassland | |

| Wetlands | Wetlands | Wetland | Wetland | Wetlands |

| Urban or built-up | Artificial surfaces | Impervious | Artificial surfaces | Impervious surfaces |

| Barren | Bareland | Bareland and tundra | Bare areas | |

| Water | Water bodies | Water | Water bodies | Water body |

| Perennial snow and ice | Snow/Ice | Permanent snow/ice | Permanent ice and snow | |

| Tundra | Tundra | |||

| Cloud |

| Column Name | Data Type | Length |

|---|---|---|

| ARTIFACT_ID | varchar | 50 |

| NAME | varchar | 50 |

| DESCRIPTION | varchar | 255 |

| USAGES | varchar | 100 |

| MAIN_CLASS | varchar | 100 |

| CREATE_DATE | datetime | / |

| VERSION_ID | int | / |

| KEYWORDS | varchar | 150 |

| INPUT | longtext | / |

| OUTPUT | longtext | / |

| PARAMETERS | longtext | / |

| MODEL_PATH | varchar | 255 |

| MODIFY_DATE | date | / |

| TEST_CASE | longtext | / |
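The column table above appears to describe the metadata record kept for each registered model (INPUT, OUTPUT, PARAMETERS, MODEL_PATH, TEST_CASE). As a minimal sketch, assuming the record is built in Python before being written to the database, it could be mirrored as a dataclass that rejects values too long for their varchar columns. The class name, the lower-cased field names and the idea of validating lengths on construction are illustrative assumptions, not part of the described system.

```python
from dataclasses import dataclass
from datetime import date, datetime

# varchar length limits taken from the column table
VARCHAR_LIMITS = {
    "artifact_id": 50, "name": 50, "description": 255, "usages": 100,
    "main_class": 100, "keywords": 150, "model_path": 255,
}


@dataclass
class ModelArtifact:
    """One metadata row; field names are the lower-cased column names."""
    artifact_id: str
    name: str
    description: str
    usages: str
    main_class: str
    create_date: datetime
    version_id: int
    keywords: str
    input: str        # longtext, e.g. a serialized description of model inputs
    output: str       # longtext
    parameters: str   # longtext
    model_path: str
    modify_date: date
    test_case: str    # longtext

    def __post_init__(self):
        # Reject values that the varchar columns could not store.
        for column, limit in VARCHAR_LIMITS.items():
            if len(getattr(self, column)) > limit:
                raise ValueError(f"{column.upper()} exceeds varchar({limit})")
```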

| Feature | Data Source | Characteristic |

|---|---|---|

| Spatial Characteristics | Landsat | Longitude and Latitude |

| Temporal Characteristics | Image Acquisition Time | |

| Spectral Characteristics | Band 1, Band 2, ..., Band 7 | |

| RS Index | NDVI, NDWI, EVI, NBR | |

| Topographic Feature | DEM | DEM, Slope, Aspect |

| Land Cover Type | GLC | First-level Type |
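The RS Index row lists NDVI, NDWI, EVI and NBR without formulas. The sketch below computes them per pixel from their standard definitions, assuming Landsat 8 OLI surface reflectance scaled to [0, 1] and the OLI band assignment B2 blue, B3 green, B4 red, B5 NIR, B7 SWIR 2. Which NDWI variant the authors use is not stated; the green/NIR form of McFeeters is assumed here, and EVI uses the common coefficients G = 2.5, C1 = 6, C2 = 7.5, L = 1. The function names are hypothetical.

```python
def _normalized_difference(a, b):
    """(a - b) / (a + b), returning 0.0 when the denominator is zero."""
    total = a + b
    return 0.0 if total == 0 else (a - b) / total


def landsat8_indices(bands):
    """Per-pixel spectral indices from Landsat 8 OLI surface reflectance.

    `bands` maps OLI band names to reflectance values:
    B2 blue, B3 green, B4 red, B5 NIR, B7 SWIR 2.
    """
    blue, green, red = bands["B2"], bands["B3"], bands["B4"]
    nir, swir2 = bands["B5"], bands["B7"]
    evi_denominator = nir + 6 * red - 7.5 * blue + 1
    return {
        "NDVI": _normalized_difference(nir, red),
        "NDWI": _normalized_difference(green, nir),  # McFeeters form (assumed)
        "EVI": 0.0 if evi_denominator == 0 else 2.5 * (nir - red) / evi_denominator,
        "NBR": _normalized_difference(nir, swir2),
    }
```

Applied to whole rasters, the same expressions would normally be evaluated with array arithmetic (and masked division) rather than per pixel in a Python loop.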

| No. | Stage | GDAL, 100000 (s) | HALF, 100000 (s) | GDAL, 200000 (s) | HALF, 200000 (s) | GDAL, 300000 (s) | HALF, 300000 (s) |

|---|---|---|---|---|---|---|---|

| 1 | Build Spatial Index | 0.8 | 0.8 | 0.8 | 0.8 | 0.8 | 0.8 |

| 2 | Read data and create spatial relationships | 3.7 | 2.4 | 10.5 | 3.6 | 26.6 | 4.4 |

| 3 | Distribute tasks | / | 3.9 | / | 7.0 | / | 8.4 |

| 4 | Compute feature values | 179 | 23.0 | 247 | 28.2 | 283.6 | 30.7 |

| 5 | Total | 183.5 | 30.1 | 258.3 | 39.7 | 311 | 44.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).