Submitted: 03 May 2023

Posted: 06 May 2023


Abstract

Keywords:

1. Introduction

2. Related Works and Proposed Methods

2.1. Related Works

2.1.1. Point Cloud Analysis

2.1.2. Point Cloud Local Geometry Exploration

2.1.3. Shared MLP Improvement for Point Cloud Analysis

2.2. Proposed Methods

2.2.1. Detail Activation Module

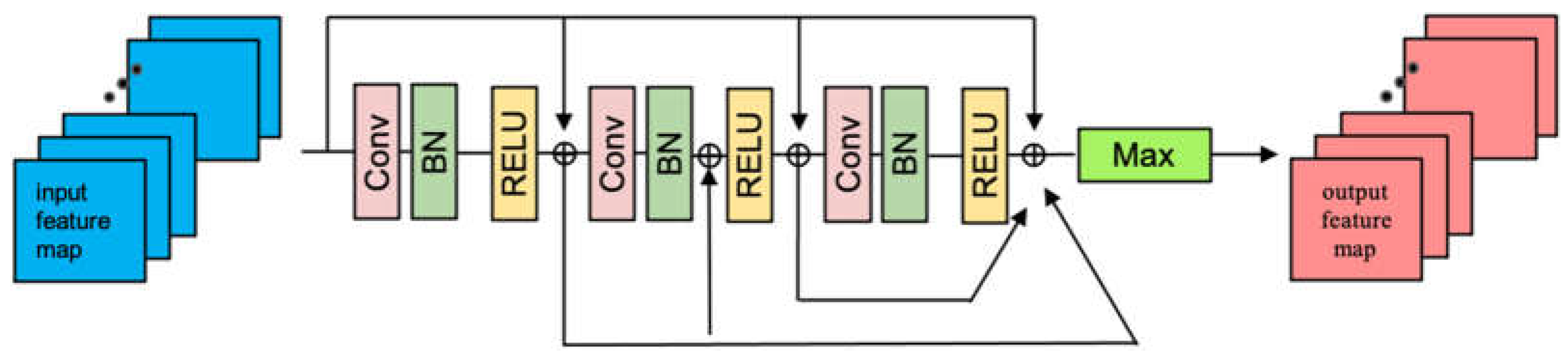

2.2.2. ResDMLP Module

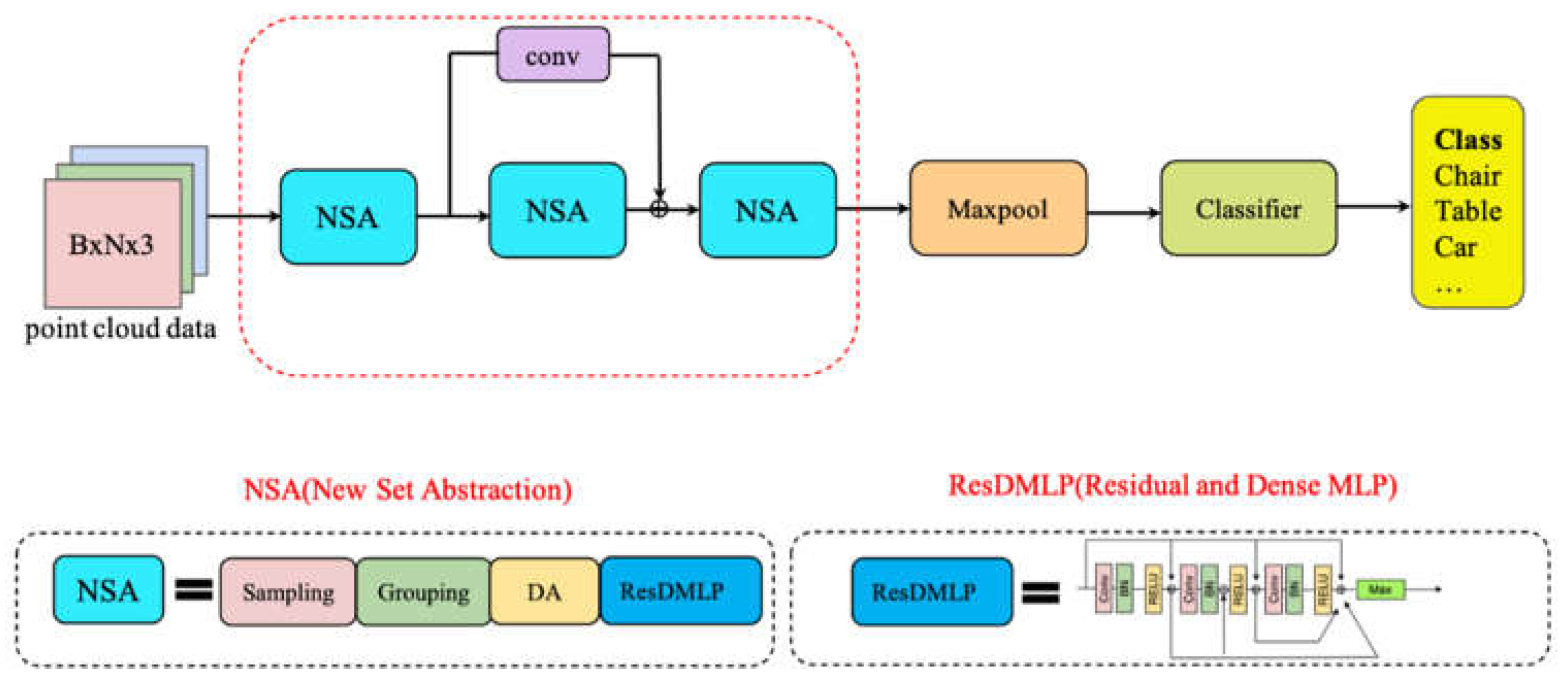

2.2.3. Framework of Point-MDA

3. Results

3.1. Experiments

3.1.1. Experimental Data

3.1.2. Implementation Details

3.2. Classification Results

3.2.1. Shape Classification on ModelNet40

3.3. Ablation Studies

3.3.1. Detail Scales Activated in Different Network Layers

4. Discussion

5. Conclusions and Future Research Directions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Shao, Y.; Fan, Z.; Zhu, B.; Zhou, M.; Chen, Z.; Lu, J. A Novel Pallet Detection Method for Automated Guided Vehicles Based on Point Cloud Data. Sensors 2022, 22 (20), 8019. [CrossRef]

2. Wan, R.; Zhao, T.; Zhao, W. PTA-Det: Point Transformer Associating Point Cloud and Image for 3D Object Detection. Sensors 2023, 23 (6), 3229. [CrossRef]

3. Barranquero, M.; Olmedo, A.; Gómez, J.; Tayebi, A.; Hellín, C. J.; Saez de Adana, F. Automatic 3D Building Reconstruction from OpenStreetMap and LiDAR Using Convolutional Neural Networks. Sensors 2023, 23 (5), 2444. [CrossRef]

4. Comesaña-Cebral, L.; Martínez-Sánchez, J.; Lorenzo, H.; Arias, P. Individual Tree Segmentation Method Based on Mobile Backpack LiDAR Point Clouds. Sensors 2021, 21 (18), 6007. [CrossRef]

5. Bhandari, V.; Phillips, T. G.; McAree, P. R. Real-Time 6-DOF Pose Estimation of Known Geometries in Point Cloud Data. Sensors 2023, 23 (6), 3085. [CrossRef]

6. Zhang, F.; Zhang, J.; Xu, Z.; Tang, J.; Jiang, P.; Zhong, R. Extracting Traffic Signage by Combining Point Clouds and Images. Sensors 2023, 23 (4), 2262. [CrossRef]

7. Influence of Colour and Feature Geometry on Multi-modal 3D Point Clouds Data Registration. https://ieeexplore.ieee.org/abstract/document/7035827/ (accessed 2023-04-02).

8. Qi, C. R.; Su, H.; Mo, K.; Guibas, L. J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation; 2017; pp 652–660.

9. Qi, C. R.; Yi, L.; Su, H.; Guibas, L. J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Advances in Neural Information Processing Systems; Curran Associates, Inc., 2017; Vol. 30.

10. Li, Y.; Bu, R.; Sun, M.; Wu, W.; Di, X.; Chen, B. PointCNN: Convolution On X-Transformed Points. Advances in Neural Information Processing Systems 2018, 31.

11. Liu, Y.; Fan, B.; Xiang, S.; Pan, C. Relation-Shape Convolutional Neural Network for Point Cloud Analysis; 2019; pp 8895–8904.

12. Wu, W.; Qi, Z.; Fuxin, L. PointConv: Deep Convolutional Networks on 3D Point Clouds; 2019; pp 9621–9630.

13. Li, G.; Mueller, M.; Qian, G.; Delgadillo Perez, I. C.; Abualshour, A.; Thabet, A. K.; Ghanem, B. DeepGCNs: Making GCNs Go as Deep as CNNs. IEEE Transactions on Pattern Analysis and Machine Intelligence 2021, 1–1. [CrossRef]

14. Zheng, Y.; Gao, C.; Chen, L.; Jin, D.; Li, Y. DGCN: Diversified Recommendation with Graph Convolutional Networks. In Proceedings of the Web Conference 2021; WWW ’21; Association for Computing Machinery: New York, NY, USA, 2021; pp 401–412. [CrossRef]

15. Yu, X.; Tang, L.; Rao, Y.; Huang, T.; Zhou, J.; Lu, J. Point-BERT: Pre-Training 3D Point Cloud Transformers With Masked Point Modeling; 2022; pp 19313–19322.

16. Zhao, H.; Jiang, L.; Jia, J.; Torr, P. H. S.; Koltun, V. Point Transformer; 2021; pp 16259–16268.

17. Zheng, S.; Pan, J.; Lu, C.; Gupta, G. PointNorm: Dual Normalization Is All You Need for Point Cloud Analysis. arXiv October 2, 2022. [CrossRef]

18. Ma, X.; Qin, C.; You, H.; Ran, H.; Fu, Y. Rethinking Network Design and Local Geometry in Point Cloud: A Simple Residual MLP Framework. arXiv November 29, 2022. [CrossRef]

19. Qian, G.; Li, Y.; Peng, H.; Mai, J.; Hammoud, H.; Elhoseiny, M.; Ghanem, B. PointNeXt: Revisiting PointNet++ with Improved Training and Scaling Strategies. Advances in Neural Information Processing Systems 2022, 35, 23192–23204.

20. Yan, X.; Zhan, H.; Zheng, C.; Gao, J.; Zhang, R.; Cui, S.; Li, Z. Let Images Give You More: Point Cloud Cross-Modal Training for Shape Analysis. arXiv October 9, 2022. [CrossRef]

21. Xue, L.; Gao, M.; Xing, C.; Martín-Martín, R.; Wu, J.; Xiong, C.; Xu, R.; Niebles, J. C.; Savarese, S. ULIP: Learning a Unified Representation of Language, Images, and Point Clouds for 3D Understanding. arXiv March 30, 2023. [CrossRef]

22. Yan, S.; Yang, Z.; Li, H.; Guan, L.; Kang, H.; Hua, G.; Huang, Q. IAE: Implicit Autoencoder for Point Cloud Self-Supervised Representation Learning. arXiv November 22, 2022. [CrossRef]

23. Esteves, C.; Xu, Y.; Allen-Blanchette, C.; Daniilidis, K. Equivariant Multi-View Networks; 2019; pp 1568–1577.

24. Wei, X.; Yu, R.; Sun, J. View-GCN: View-Based Graph Convolutional Network for 3D Shape Analysis; 2020; pp 1850–1859.

25. Alakwaa, W.; Nassef, M.; Badr, A. Lung Cancer Detection and Classification with 3D Convolutional Neural Network (3D-CNN). ijacsa 2017, 8 (8). [CrossRef]

26. Tancik, M.; Srinivasan, P.; Mildenhall, B.; Fridovich-Keil, S.; Raghavan, N.; Singhal, U.; Ramamoorthi, R.; Barron, J.; Ng, R. Fourier Features Let Networks Learn High Frequency Functions in Low Dimensional Domains. In Advances in Neural Information Processing Systems; Curran Associates, Inc., 2020; Vol. 33, pp 7537–7547.

27. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition; 2016; pp 770–778.

28. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K. Q. Densely Connected Convolutional Networks; 2017; pp 4700–4708.

29. Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A Deep Representation for Volumetric Shapes; 2015; pp 1912–1920.

30. Uy, M. A.; Pham, Q.-H.; Hua, B.-S.; Nguyen, T.; Yeung, S.-K. Revisiting Point Cloud Classification: A New Benchmark Dataset and Classification Model on Real-World Data; 2019; pp 1588–1597.

31. Woo, S.; Lee, D.; Lee, J.; Hwang, S.; Kim, W.; Lee, S. CKConv: Learning Feature Voxelization for Point Cloud Analysis. arXiv July 27, 2021. [CrossRef]

32. Li, J.; Chen, B. M.; Lee, G. H. SO-Net: Self-Organizing Network for Point Cloud Analysis; 2018; pp 9397–9406.

33. Zhao, H.; Jiang, L.; Fu, C.-W.; Jia, J. PointWeb: Enhancing Local Neighborhood Features for Point Cloud Processing; 2019; pp 5565–5573.

34. Phan, A. V.; Nguyen, M. L.; Nguyen, Y. L. H.; Bui, L. T. DGCNN: A Convolutional Neural Network over Large-Scale Labeled Graphs. Neural Networks 2018, 108, 533–543. [CrossRef]

35. Zhou, H.; Feng, Y.; Fang, M.; Wei, M.; Qin, J.; Lu, T. Adaptive Graph Convolution for Point Cloud Analysis; 2021; pp 4965–4974.

36. Ran, H.; Liu, J.; Wang, C. Surface Representation for Point Clouds; 2022; pp 18942–18952.

37. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks; 2018; pp 4510–4520.

38. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks; 2018; pp 7132–7141.

39. Xiangli, Y.; Xu, L.; Pan, X.; Zhao, N.; Rao, A.; Theobalt, C.; Dai, B.; Lin, D. BungeeNeRF: Progressive Neural Radiance Field for Extreme Multi-Scale Scene Rendering. In Computer Vision – ECCV 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G. M., Hassner, T., Eds.; Lecture Notes in Computer Science; Springer Nature Switzerland: Cham, 2022; pp 106–122. [CrossRef]

40. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; Desmaison, A.; Kopf, A.; Yang, E.; DeVito, Z.; Raison, M.; Tejani, A.; Chilamkurthy, S.; Steiner, B.; Fang, L.; Bai, J.; Chintala, S. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems; Curran Associates, Inc., 2019; Vol. 32.

41. Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv January 4, 2019. [CrossRef]

42. Loshchilov, I.; Hutter, F. SGDR: Stochastic Gradient Descent with Warm Restarts. arXiv May 3, 2017. [CrossRef]

43. Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S. E.; Bronstein, M. M.; Solomon, J. M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph. 2019, 38 (5), 146:1-146:12. [CrossRef]

44. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision; 2016; pp 2818–2826.

45. Yan, X.; Zheng, C.; Li, Z.; Wang, S.; Cui, S. PointASNL: Robust Point Clouds Processing Using Nonlocal Neural Networks With Adaptive Sampling; 2020; pp 5589–5598.

46. Muzahid, A. A. M.; Wan, W.; Sohel, F.; Wu, L.; Hou, L. CurveNet: Curvature-Based Multitask Learning Deep Networks for 3D Object Recognition. IEEE/CAA Journal of Automatica Sinica 2021, 8 (6), 1177–1187. [CrossRef]

47. Pang, Y.; Wang, W.; Tay, F. E. H.; Liu, W.; Tian, Y.; Yuan, L. Masked Autoencoders for Point Cloud Self-Supervised Learning. In Computer Vision – ECCV 2022; Springer, Cham, 2022; pp 604–621. [CrossRef]

48. Wang, Z.; Yu, X.; Rao, Y.; Zhou, J.; Lu, J. P2P: Tuning Pre-Trained Image Models for Point Cloud Analysis with Point-to-Pixel Prompting. arXiv October 12, 2022. [CrossRef]

49. Zhang, R.; Wang, L.; Wang, Y.; Gao, P.; Li, H.; Shi, J. Parameter Is Not All You Need: Starting from Non-Parametric Networks for 3D Point Cloud Analysis. arXiv March 14, 2023. [CrossRef]

50. Liu, Y.; Tian, B.; Lv, Y.; Li, L.; Wang, F.-Y. Point Cloud Classification Using Content-Based Transformer via Clustering in Feature Space. IEEE/CAA Journal of Automatica Sinica 2023, 1–9. [CrossRef]

51. Wu, C.; Zheng, J.; Pfrommer, J.; Beyerer, J. Attention-Based Point Cloud Edge Sampling. arXiv March 26, 2023. [CrossRef]

52. Xu, Y.; Fan, T.; Xu, M.; Zeng, L.; Qiao, Y. SpiderCNN: Deep Learning on Point Sets with Parameterized Convolutional Filters; 2018; pp 87–102.

53. Qiu, S.; Anwar, S.; Barnes, N. Dense-Resolution Network for Point Cloud Classification and Segmentation; 2021; pp 3813–3822.

54. Cheng, S.; Chen, X.; He, X.; Liu, Z.; Bai, X. PRA-Net: Point Relation-Aware Network for 3D Point Cloud Analysis. IEEE Transactions on Image Processing 2021, 30, 4436–4448. [CrossRef]

55. Song, S.; Lichtenberg, S. P.; Xiao, J. SUN RGB-D: A RGB-D Scene Understanding Benchmark Suite; 2015; pp 567–576.

56. Yi, L.; Kim, V. G.; Ceylan, D.; Shen, I.-C.; Yan, M.; Su, H.; Lu, C.; Huang, Q.; Sheffer, A.; Guibas, L. A Scalable Active Framework for Region Annotation in 3D Shape Collections. ACM Trans. Graph. 2016, 35 (6), 210:1-210:12. [CrossRef]

57. Armeni, I.; Sener, O.; Zamir, A. R.; Jiang, H.; Brilakis, I.; Fischer, M.; Savarese, S. 3D Semantic Parsing of Large-Scale Indoor Spaces; 2016; pp 1534–1543.

| Method | Publication | ModelNet40 OA (%) | ModelNet40 mAcc (%) | Extra Training Data | Deep |
|---|---|---|---|---|---|
| PointNet [8] | CVPR 2017 | 89.2 | 86.0 | × | × |
| PointNet++ [9] | NeurIPS 2017 | 90.7 | 88.4 | × | × |
| PointCNN [10] | NeurIPS 2018 | 92.5 | 88.1 | × | × |
| RS-CNN [11] | CVPR 2019 | 92.9 | - | × | × |
| PointConv [12] | CVPR 2019 | 92.5 | - | × | × |
| DeepGCN [13] | PAMI 2019 | 93.6 | 90.9 | × | √ |
| PointASNL [45] | CVPR 2020 | 93.2 | - | × | × |
| CurveNet [46] | ICCV 2021 | 93.8 | - | × | × |
| Point-BERT [15] | CVPR 2022 | 93.8 | - | √ | × |
| PointNorm [17] | CVPR 2022 | 93.7 | 91.3 | × | √ |
| PointMLP [18] | ICLR 2022 | 93.7 | 90.9 | × | √ |
| PointNeXt [19] | NeurIPS 2022 | 94.4 | 91.1 | × | √ |
| RepSurf-U [36] | CVPR 2022 | 94.4 | 91.4 | × | × |
| Point-MAE [47] | CVPR 2022 | 94.0 | - | √ | × |
| P2P [48] | CVPR 2022 | 94.0 | 91.6 | √ | √ |
| Point-PN [49] | CVPR 2023 | 93.8 | - | × | × |
| PointConT [50] | IEEE 2023 | 93.5 | - | × | × |
| APES [51] | CVPR 2023 | 93.5 | - | × | × |
| Point-MDA | 2023 | 94.0 | 91.9 | × | × |

| Method | Publication | ScanObjectNN OA (%) | ScanObjectNN mAcc (%) | Extra Training Data | Deep |
|---|---|---|---|---|---|
| PointNet [8] | CVPR 2017 | 68.2 | 63.4 | × | × |
| PointNet++ [9] | NeurIPS 2017 | 77.9 | 75.4 | × | × |
| PointCNN [10] | NeurIPS 2018 | 78.5 | 75.1 | × | × |
| DGCNN [34] | ELSEVIER 2018 | 78.1 | 73.6 | × | × |
| SpiderCNN [52] | ECCV 2018 | 73.7 | 69.8 | × | × |
| DRNet [53] | WACV 2021 | 80.3 | 78.0 | × | × |
| PRA-Net [54] | IEEE 2021 | 82.1 | 79.1 | × | × |
| Point-BERT [15] | CVPR 2022 | 83.1 | - | √ | × |
| PointNorm [17] | CVPR 2022 | 86.8 | 85.6 | × | √ |
| PointMLP [18] | ICLR 2022 | 85.4 | 83.9 | × | √ |
| PointNeXt [19] | NeurIPS 2022 | 88.2 | 86.8 | × | √ |
| RepSurf-U [36] | CVPR 2022 | 84.6 | 81.9 | × | × |
| Point-MAE [47] | CVPR 2022 | 85.2 | - | √ | × |
| P2P [48] | CVPR 2022 | 89.3 | 88.5 | √ | × |
| Point-PN [49] | CVPR 2023 | 87.1 | - | × | × |
| PointConT [50] | IEEE 2023 | 88.0 | 86.0 | × | × |
| Point-MDA | 2023 | 85.8 | 83.6 | × | × |

| Dataset | Activation Frequency Level | OA (%) | mAcc (%) |
|---|---|---|---|
| ModelNet40 | L1=1, L2=5, L3=9 | 93.7 | 91.4 |
| | L1=2, L2=6, L3=10 | 94.0 | 91.9 |
| | L1=3, L2=7, L3=11 | 93.9 | 91.7 |
| | L1=4, L2=8, L3=12 | 93.7 | 91.3 |
| ScanObjectNN | L1=1, L2=5, L3=9 | 85.4 | 82.9 |
| | L1=2, L2=6, L3=10 | 85.7 | 83.2 |
| | L1=3, L2=7, L3=11 | 85.8 | 83.6 |
| | L1=4, L2=8, L3=12 | 85.4 | 83.3 |
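The surviving text does not define the "Activation Frequency Level" parameter. Because the paper cites Fourier feature mappings [26] and BungeeNeRF [39], one plausible reading (an assumption, not confirmed by the source) is that each level L sets the number of octaves in a sinusoidal encoding of point coordinates, gamma(p) = [sin(2^k·π·p), cos(2^k·π·p)] for k = 0, 1, ..., L − 1. The sketch below illustrates only that generic encoding; the function name `fourier_features` and the per-layer dictionary are hypothetical, and this is not the authors' Detail Activation module.

```python
import math


def fourier_features(p, num_levels):
    """Sinusoidal encoding of a coordinate tuple p at num_levels octaves.

    ASSUMPTION: generic Fourier-feature / NeRF-style mapping with
    frequencies 2**k * pi for k = 0 .. num_levels - 1. This illustrates
    the technique only; it is not the Point-MDA implementation.
    """
    feats = []
    for k in range(num_levels):
        freq = (2.0 ** k) * math.pi  # each octave doubles the frequency
        for x in p:
            # one sine and one cosine per coordinate at this frequency
            feats.append(math.sin(freq * x))
            feats.append(math.cos(freq * x))
    return feats


# Hypothetical per-layer levels, mirroring the best ModelNet40 row above
layer_levels = {"L1": 2, "L2": 6, "L3": 10}
point = (0.1, -0.25, 0.4)  # (x, y, z), assumed normalized to [-1, 1]

for layer, level in layer_levels.items():
    print(layer, level, len(fourier_features(point, level)))
```

Under this assumption, each additional level adds six channels for an (x, y, z) point (one sine and one cosine per coordinate), so the three layer settings yield 12, 36 and 60 encoded channels, with higher levels contributing progressively higher-frequency, finer-scale components.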

| Dataset | DA | ResDMLP | Reuse | OA (%) | mAcc (%) |
|---|---|---|---|---|---|
| ModelNet40 | √ | √ | √ | 94.0 | 91.9 |
| | √ | × | √ | 93.7 | 91.4 |
| | × | √ | × | 93.7 | 91.2 |
| | × | √ | √ | 93.6 | 91.2 |
| ScanObjectNN | √ | √ | √ | 85.8 | 83.6 |
| | √ | × | √ | 84.7 | 82.8 |
| | × | √ | × | 85.3 | 83.2 |
| | × | √ | √ | 84.8 | 82.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).