1. Introduction and Notation

Simulation is widely recognized as an effective technique to produce forecasts, evaluate risk (see, e.g., [

1]), animate and illustrate the performance of a system over time (see, e.g., [

2]). When there is uncertainty in a component of a simulation model, it is said to be a random component, and it is modeled using a probability distribution and/or a stochastic process that is generated during the simulation run, to produce a stochastic simulation. Random component typically depends on the value of certain parameters, and we will denote by

a particular value for the vector of parameters of the random components of a stochastic simulation, and

will denote the random vector that corresponds to the parameter values when there is uncertainty on the value of these parameters.

In general, the output of a stochastic (dynamic) simulation can be regarded as a stochastic process

, where

is a random vector (of arbitrary dimension

d) representing the state of the simulation at time

. The term transient simulation applies to a dynamic simulation that has a well-defined termination time, so that the output of a transient simulation can be viewed as a stochastic process

, where

T is a stopping time (may be deterministic), see, e.g., [

3] for a definition of stopping time. Note that this notation includes the case of a discrete-time output

, if we assume that

, where

denotes the integer part of

s.

A performance variable

W in transient simulation is a real-valued random variable (r.v.) that depends on the simulation output up to time

T, i.e.,

, and the expectation of a performance variable

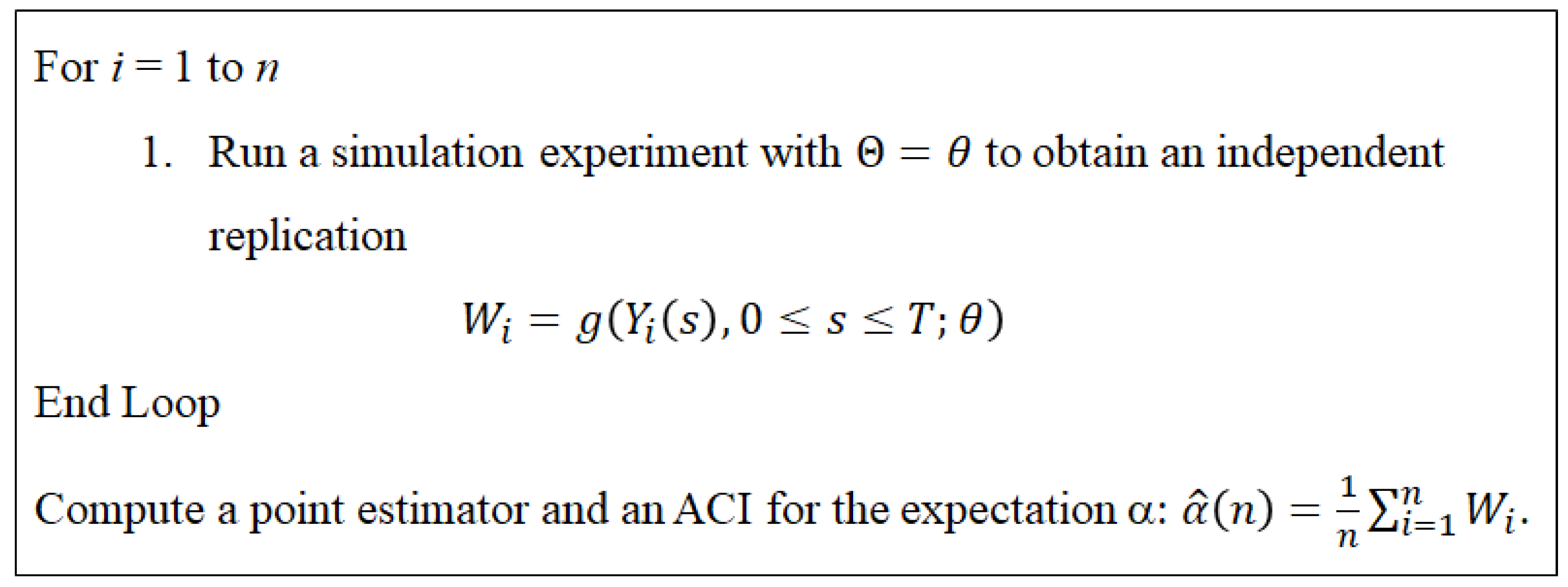

W is a performance measure that we usually estimate through experimentation with the simulation model. When there is no uncertainty in the parameters of the random components, the standard methodology that is used to estimate a performance measure in transient simulation is the method of independent replications, that consists on running the simulation model to produce

n replications

that can be regarded as independent and identically distributed (i.i.d.) random variables (see

Figure 1) .

Under the method of independent replications, a point estimator for the expectation

is the average

. If

, it follows from the classical Law of Large Numbers (LLN), that

is consistent, i.e., it satisfies

, as

(where ⇒ denotes weak convergence of random variables), see, e.g., [

3] for a proof. Consistency guarantees that the estimator approaches the parameter as the number of replications

n increases, and the accuracy of the simulation-based estimator

is typically assessed by an asymptotic confidence interval (ACI) for the parameter. The expression for an ACI for a parameter of a stochastic simulation is usually obtained through a Central Limit Theorem (CLT) for the estimator (see, for example, chapter 3 of [

4]). For the case of the expectation

in the algorithm of

Figure 1, if

, the classical CLT implies that

as

, where

and

denotes a r.v. distributed as normal with mean 0 and variance 1. Then, if

, it follows from (

1) and Slutsky’s Theorem (see the Appendix) that

as

, where

denotes the sample standard deviation, i.e.,

. This CLT implies that

for

, where

denotes the (

)-quantile of a N(0,1), which is sufficient to establish a

ACI for

with halfwidth

A halfwidth in the form of (

2) is the typical measure used in simulation software (e.g., Simio, see [

2]) to assess the accuracy of

for the estimation of expectation

.

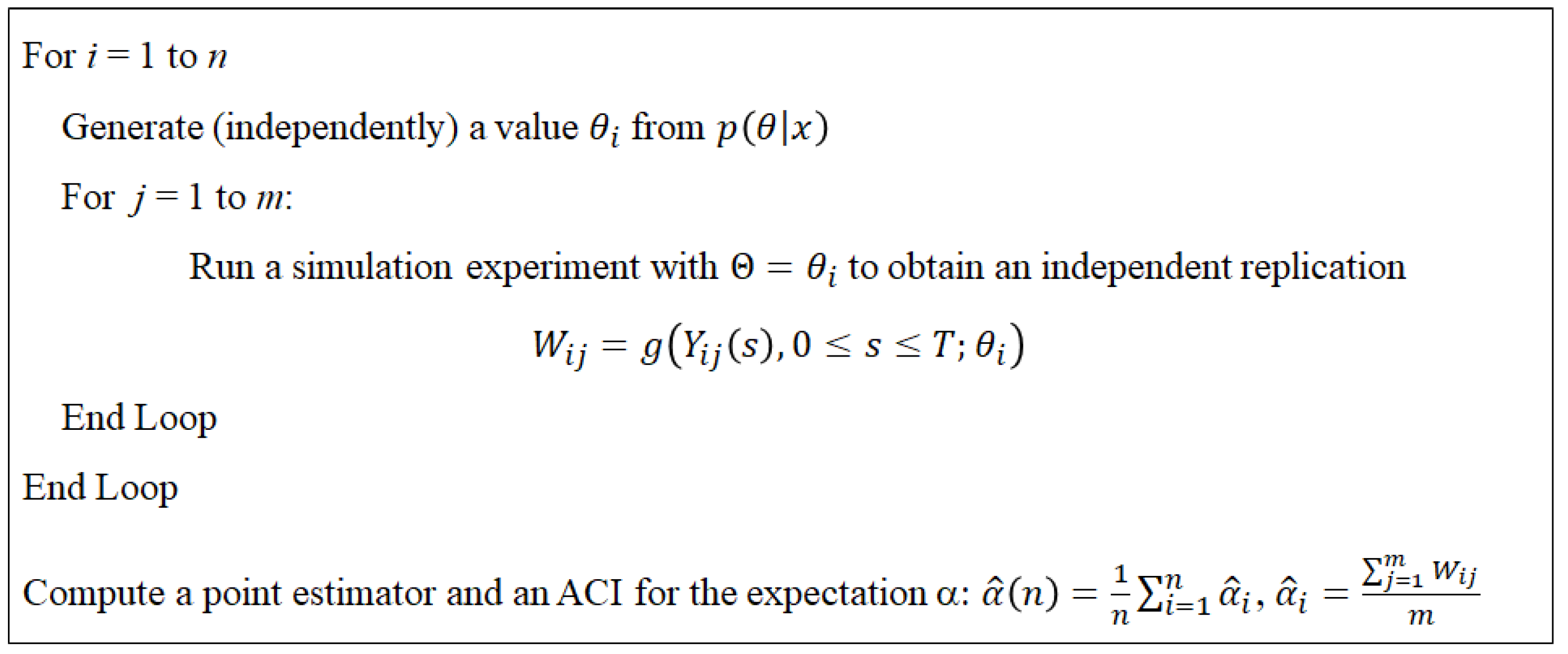

In contrast to the estimation of (output) performance measures, parameters of (input) random components of a simulation model are usually estimated from real-data observations (

x) and, while most applications covered in the relevant literature assume that no uncertainty exists in the value of these parameters, the uncertainty can be significant when little data is available. In these cases, Bayesian statistics can be used to incorporate this uncertainty in the output analysis of simulation experiments via the use of a posterior distribution

. A methodology currently proposed for the analysis of simulation experiments under parameter uncertainty, is a two-level nested simulation algorithm (see, e.g., [

6,

7,

8]. In the outer level, we simulate (

n) observations for the parameters from a posterior distribution

, while in the inner level we simulate (

m) observations for the performance variable with the parameters fixed at the value (

) generated in the outer level (see

Figure 2). In this paper, we focus on the output analysis of two-level simulation experiments, for the case where the observations at the inner level are independent, showing how the variance of a simulated observation can be decomposed into parametric and stochastic variance components. Afterwards, we derive a CLT for both the estimator of the point forecast and the estimators of the variance components. Our CLTs allow us to compute an ACI for each estimator. Our results are validated through experiments with a forecast model for sporadic demand reported in [

10]. This paper is an extended version of results initially reported in [

11] and the missing proofs in [

11] are provided.

Following this introduction, we present the proposed methodology for the construction of an ACI for the point forecast and the variance components in a two-level simulation experiment. Afterwards, we present an illustrative example that has an analytical solution for the parameters of interest in this paper. This example is used in the next section to illustrate the application and validity of our proposed methodologies for the construction of an ACI. Finally, in the last section, we present conclusions and directions for future research.

3. An Example with Analytical Solution

The following model (reported in [

10]) has been proposed to forecast sporadic demand by incorporating data on times between arrivals and customer demand; where uncertainty on the model parameters is incorporated using a Bayesian approach. For this model, we will show analytical expressions for the performance measures defined in

Section 2. These expressions are used in the following section to illustrate the validity of the ACIs proposed in the previous section.

Customer arrivals for a particular item in a shop follow a Poisson process, yet there is uncertainty in the arrival rate

, so that given

, interarrival times between customers are i.i.d. with exponential density:

where

. Every client can order

j units of this item with probability

,

,

. Let

and

, then

is the parameter vector, and

is the parameter space, where

.

Total demand during a period of length

T is

where

is the number of customer arrivals during the interval

,

, and

are the individual demands (conditionally independent relative to

). The information about

consists of i.i.d. observations

,

of past customers, where

is the interarrival time between customer

i and customer (

), and

is the number of units ordered by client

i. By taking Jeffrey’s non-informative prior as the prior density for

, we obtain the posterior density (see [

10] for details)

, where

,

,

,

as

where

, and

, for

. Using this notation, we can show that (see [

1] for details)

where

,

,

,

,

,

, and

are defined in (

10).

4. Empirical Results

To validate the ACIs proposed in (4), we conducted some experiments with the Bayesian model of the previous section to illustrate the estimation of , and . We considered the values , , , , , , , , . With this data, the point forecast is , and the variance components are , . The empirical results that we report below illustrate a typical behavior that we should experiment for any other feasible data set.

In all the experiments reported in this Section we considered 1000 independent replications of the algorithm of

Figure 2 for different number of observations in the outer level (

n) and in the inner level (

m); in each replication we computed the point estimators for

,

, and

, and the corresponding halfwidths of 90% ACI’s according to equations (

6). Since we know the value of the parameters we are estimating, we were able to report (for

n and

m given), the empirical coverage (i.e., the fraction of independent replications that the corresponding ACI covered the true parameter value), the average and standard deviation of halfwidths, and the squared root of the empirical mean squared error defined by

where

denotes the value obtained in the

i-th replication for the estimation of a parameter

(

in our experiments).

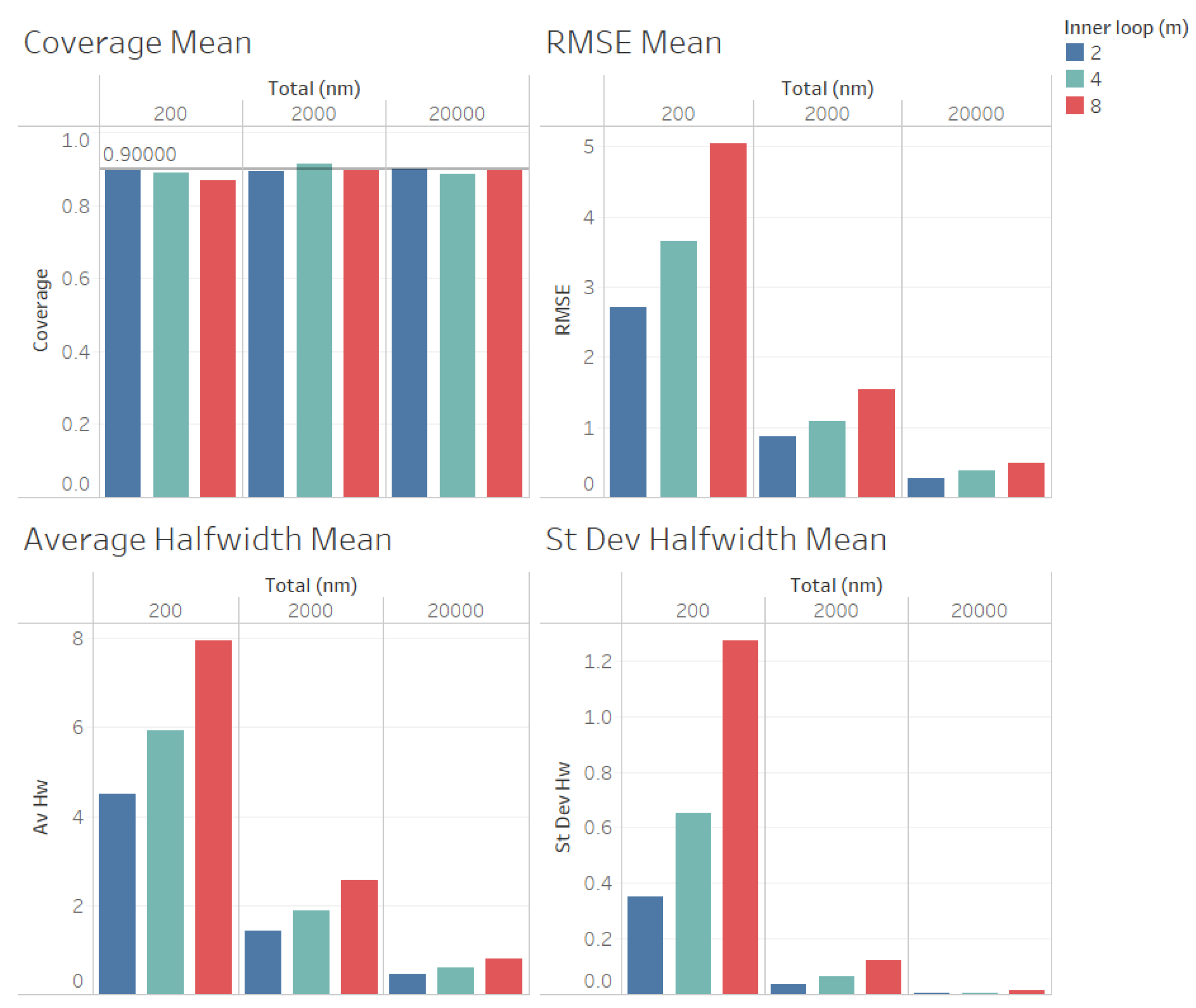

In a first set of experiments we considered

, and

for each value of

, to compare the effect of increasing the number of observations in the inner level for a given value value of

. The results of this set of experiments are summarized in

Figure 3,

Figure 4 and

Figure 5. Note that we are not considering

in this set of experiments to be able to construct an ACI for the stochastic variance

.

In

Figure 3 we illustrate the performance measures for the quality of the estimation procedure that we obtained for the estimation of the point forecast

. As we observe from

Figure 3, the coverages are acceptable (very close to the nominal value of 0.9, even for

). These results validate the ACI defined in (

6) for the point forecast

. We also observe from

Figure 3 that the RMSE, average halfwidth and standard deviation of halwidths improve (decrease) as the number of observations in the outer level (

n) increases, as suggested by Corollary 1. Note also from

Figure 3 that a smaller value of

m provides smaller RMSE, average halfwidths and standard deviations of halwidths, validating our theoretical results.

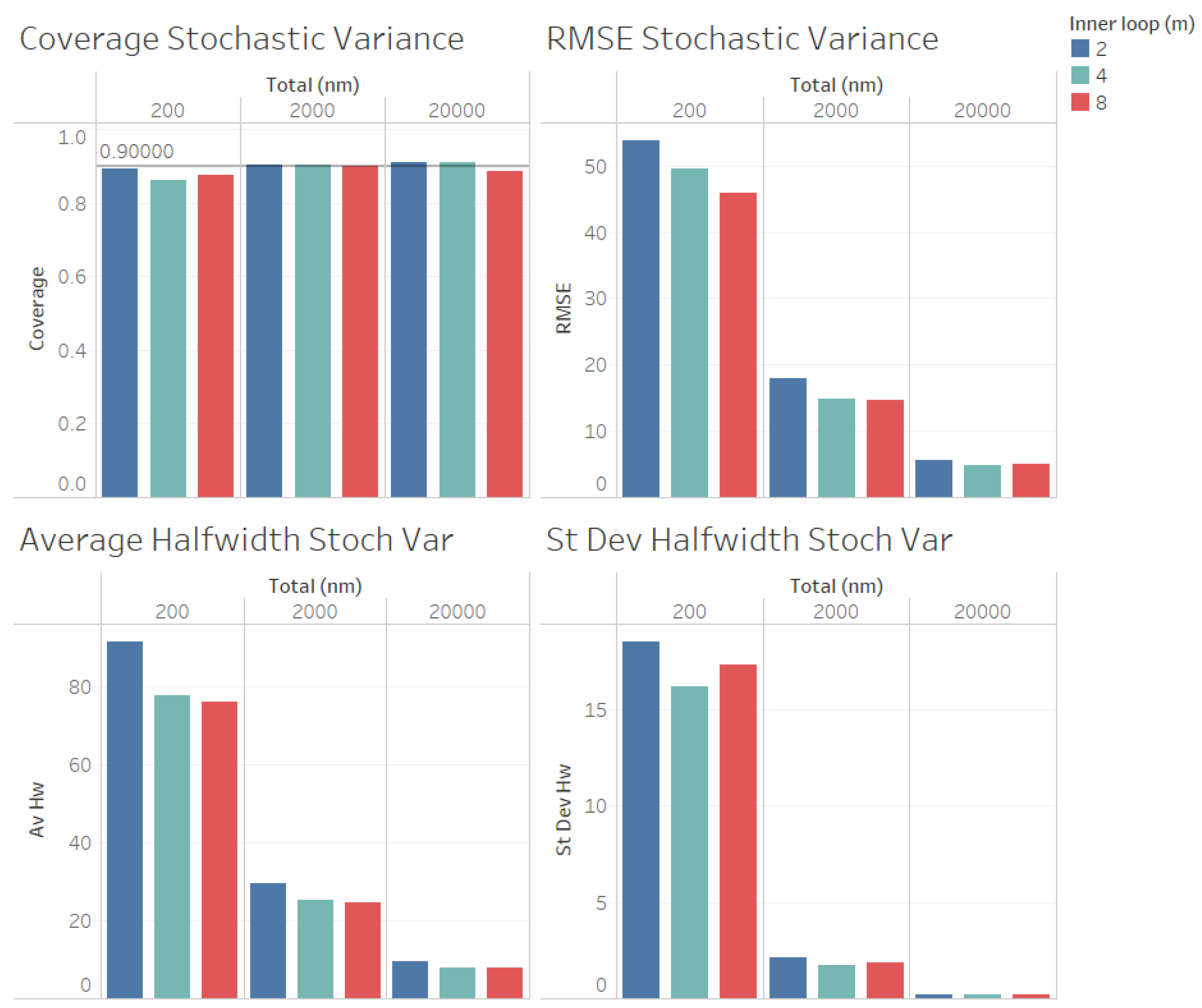

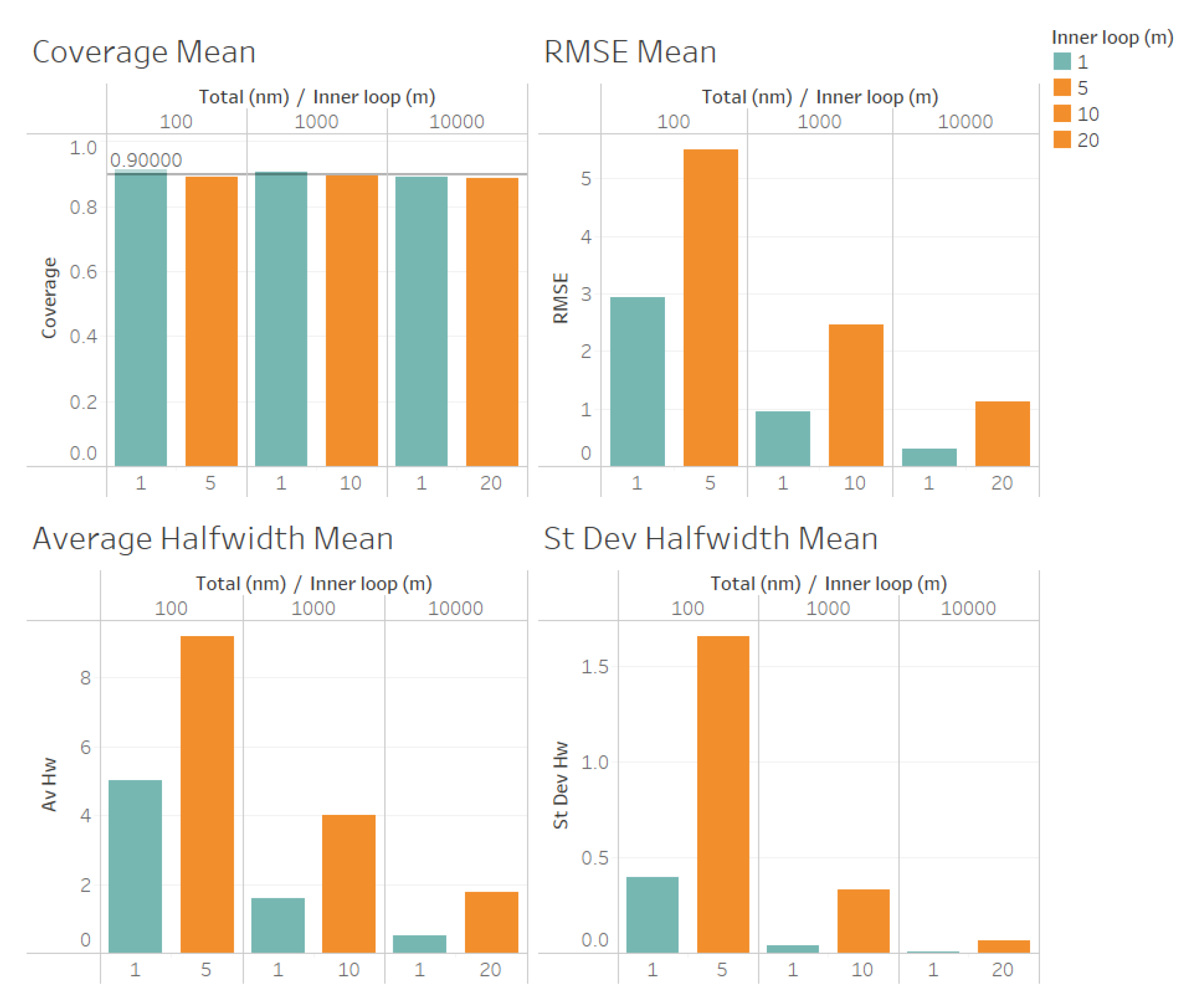

In

Figure 4 we illustrate the performance measures for the quality of the estimation procedure that we obtained for the estimation of the stochastic variance

. As we observe from

Figure 4, the coverages are acceptable (very close to the nominal value of 0.9, even for

). These results validate the ACI defined in (

6) for the stochastic variance

. We also observe from

Figure 3 that the RMSE, average halfwidth and standard deviation of halwidths improve (decrease) as the number of observations in the outer level (

n) increases, as suggested by Corollary 2. However, contrary to what we observe for the estimation of

, a larger value of

m provides smaller RMSE, average halfwidths and standard deviations of halwidths, suggesting that, for a fixed value of

, the quality of the estimation for the stochastic variance

improves as the number of the observations in the inner loop (

m) increases.

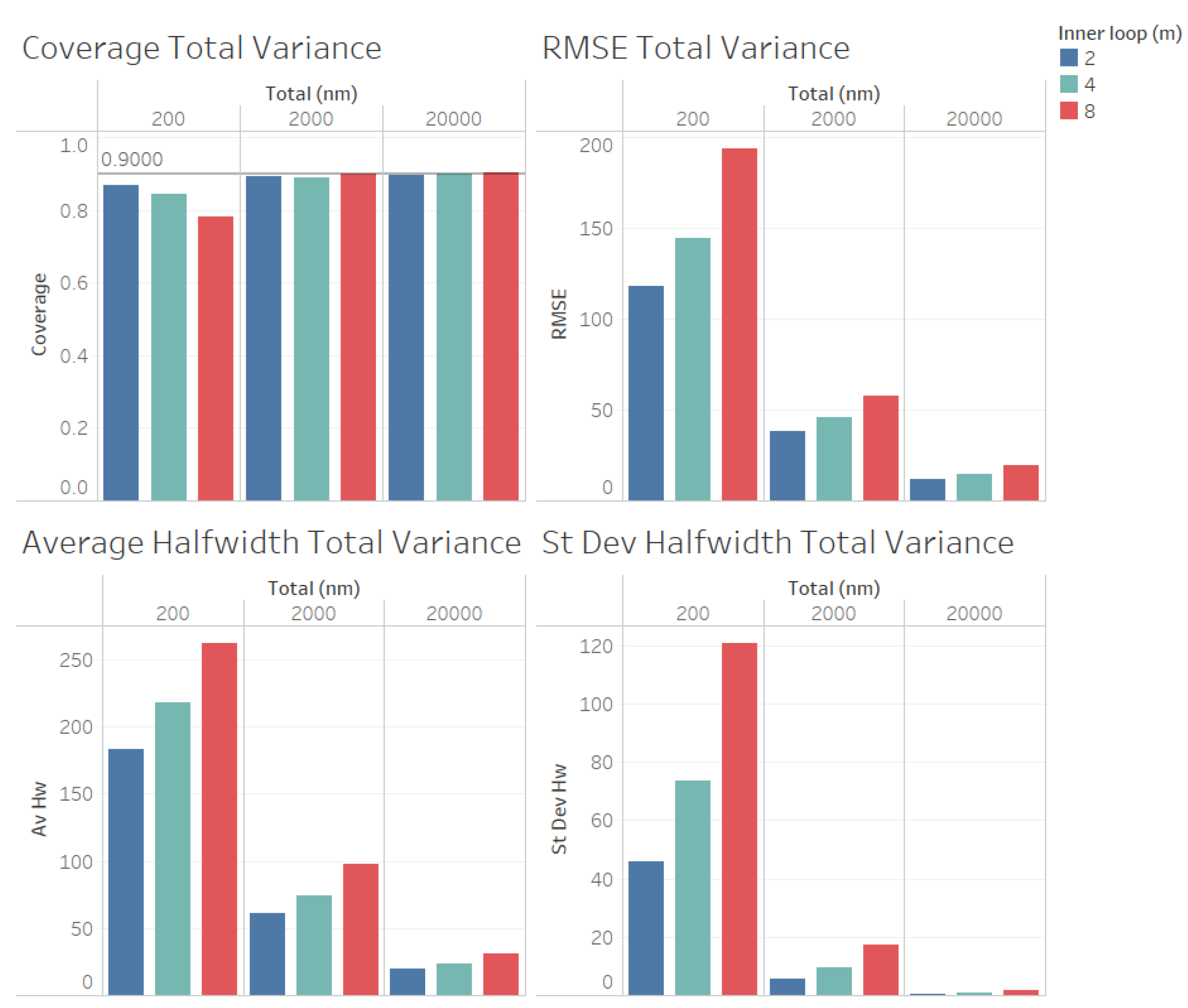

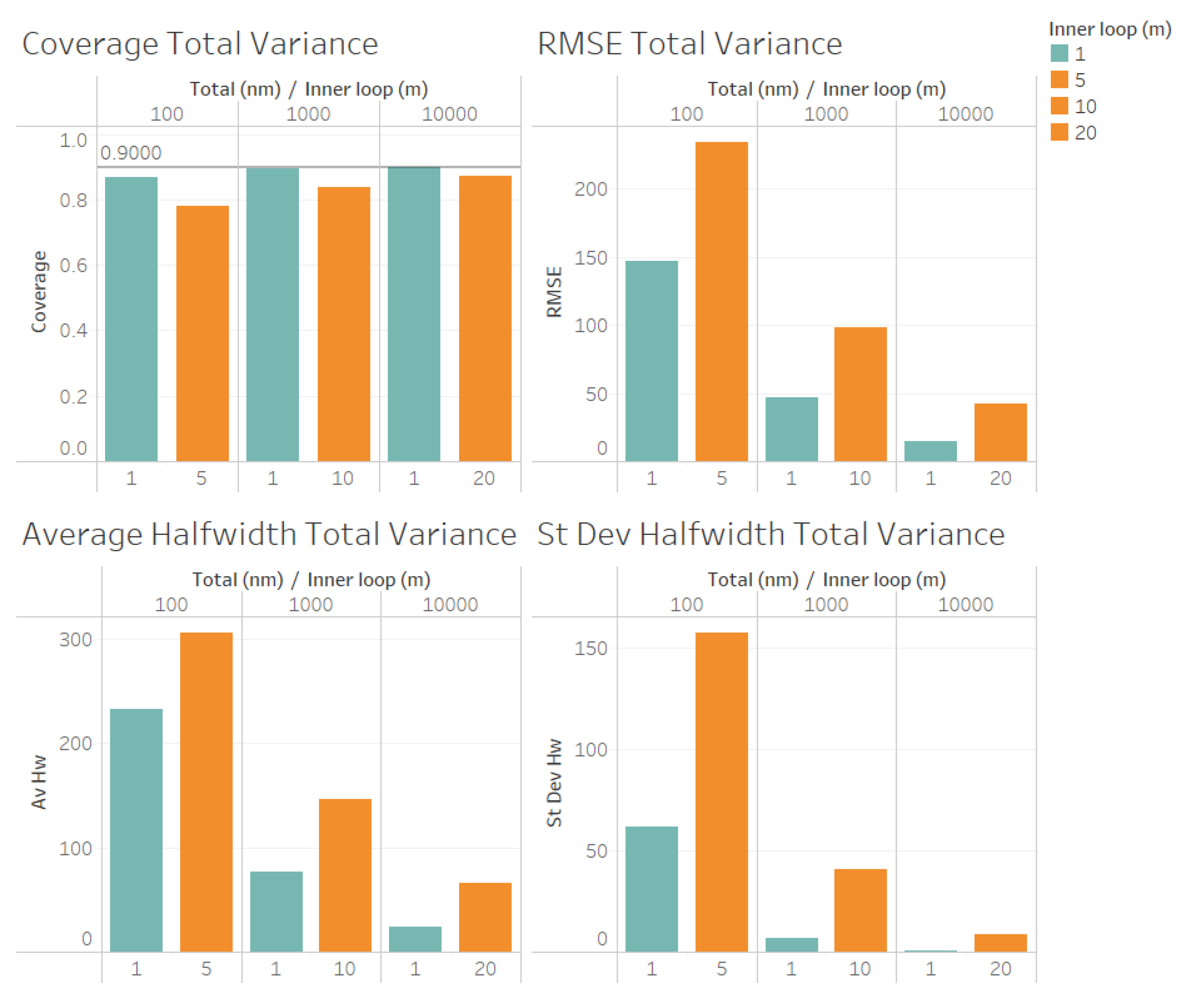

For the estimation of the total variance

(illustrated n

Figure 5) we obtained similar results for the quality of the estimation as for the estimation of the point forecast

, except that a larger values of

n is required to obtain reliable coverages. As we observe from

Figure 3, the coverages are acceptable (very close to the nominal value of 0.9, for

and 10000). These results validate the ACI defined in (

6) for the total variance

. We also observe from

Figure 3 that the RMSE, average halfwidth and standard deviation of halwidths improve (decrease) as the number of observations in the outer level (

n) increases, as suggested by Corollary 3. Note also from

Figure 5 that a smaller value of

m provides smaller RMSE, average halfwidths and standard deviations of halwidths, validating our theoretical results.

Figure 4.

Performance of the estimation of stochastic variance for fixed comparing different values of m.

Figure 4.

Performance of the estimation of stochastic variance for fixed comparing different values of m.

Figure 5.

Performance of the estimation of total variance for fixed comparing different values of m.

Figure 5.

Performance of the estimation of total variance for fixed comparing different values of m.

In a second set of experiments we considered

, with

and

for each value of

, to compare the quality of the estimation procedures using the value of

m that we suggest as optimal for the estimation of point forecast

with the value of

m suggested in [

6] as an adequate choice for

m in the case of biased estimators in the inner level of the algorithm of

Figure 2. The results of this set of experiments are summarized in

Figure 6 and

Figure 7. Note that we are not considering the estimation of the stochastic variance

in this set of experiments because

is required to construct an ACI for the stochastic variance

. Note also that we considered

,

, and

, and we are using the same color for

in

Figure 6 and

Figure 7.

In

Figure 6 we illustrate the performance measures for the quality of the estimation procedure that we obtained for the estimation of the point forecast

in our second set of experiments . As we observe from

Figure 6, the coverages are acceptable (very close to the nominal value of 0.9, even for

). These results validate the ACI defined in (

6) for the point forecast

, and the ACI suggested by Proposition 3. We also observe from

Figure 6 that the RMSE, average halfwidth and standard deviation of halwidths are worse for

, confirming our finding that, for the same number of replications

,

produces better point estimators for

than

confirming the result of Proposition 3.

Finally, in

Figure 7 we show the results of our second set of experiments for the estimation of the total variance

. We found similar resulta as for the case of the estimation of the point forecast

, coverages are very good (even for

n = 100), and all performance measure for the ACI (RMSE, average and standard deviation of halfwidths) are worse for

, suggesting that, for the same number of replications

,

produces better point estimators for

than

.

5. Conclusions

In this paper, we propose methodologies to calculate point estimators (and their corresponding halfwidths), for both the point forecast and the variance components in two-level nested stochastic simulation experiments, for the case where the observations at both levels of the algorithm are independent. These methods can be applied to the construction of Bayesian forecasts based on experiments using a simulation model under parameter uncertainty.

Both our theoretical and our experimental results confirm that the proposed point estimators and their corresponding halfwidths are asymptotically valid, i.e., the point estimators converge to the corresponding parameter values and the halfwidths converge to the nominal coverage as the number of replications (n) of the outer level increases.

Furthermore, given a fixed number of total observations (), we show that the choice of only one replication in the inner level () provides more accurate estimators for both the point forecast (), and the variance of the point forecast (). However, is required for the estimation of .

Directions for future research on this topic includes experimentation with other point estimators, such as, quasi Monte Carlo or Simpson integration, with the objective of finding more accurate point estimators for the parameters considered in this paper.