1. Introduction

Williams and Beer [1] proposed the partial information decomposition (PID) framework as a way to characterize, or analyze, the information that a set of random variables (often called sources) has about another variable (referred to as the target). PID is a useful tool for gathering insights and analyzing the way information is stored, modified, and transmitted within complex systems [2,3]. It has found applications in areas such as cryptography [4] and neuroscience [5,6], with many other potential use cases, such as in understanding how information flows in gene regulatory networks [7], neural coding [8], financial markets [9], and network design [10].

Consider the simplest case: a three-variable joint distribution $p(y_1, y_2, t)$ describing three random variables: two sources, $Y_1$ and $Y_2$, and a target $T$. Notice that, despite what the names sources and target might suggest, there is no directionality (causal or otherwise) assumption. The goal of PID is to decompose the information that $Y = (Y_1, Y_2)$ has about $T$ into the sum of 4 non-negative quantities: the information that is present in both $Y_1$ and $Y_2$, known as redundant information $R$; the information that only $Y_1$ (respectively $Y_2$) has about $T$, known as unique information $U_1$ (respectively $U_2$); the synergistic information $S$ that is present in the pair $(Y_1, Y_2)$ but not in $Y_1$ or $Y_2$ alone. That is, in this case with two sources, the goal is to write

$$I(T; Y) = R + U_1 + U_2 + S, \qquad (1)$$

where $I(T; Y)$ is the mutual information between $T$ and $Y$ [11]. Because unique information and redundancy satisfy the relationship $U_i = I(T; Y_i) - R$ (for $i \in \{1, 2\}$), it turns out that defining how to compute one of these quantities ($R$, $U_i$, or $S$) is enough to fully determine the others [1,12]. As the number of variables grows, the number of terms appearing in the PID of $I(T; Y)$ grows super exponentially [13]. Williams and Beer [1] suggested a set of axioms that a measure of redundancy should satisfy, and proposed a measure of their own. Those axioms became known as the Williams-Beer axioms; the measure they proposed has subsequently been criticized for not capturing informational content, but only information size [14].
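The claim that one quantity determines the others amounts to simple bookkeeping, which can be sketched as follows. This is a minimal illustration rather than code from this work: the function name `pid_from_redundancy` and its argument names are hypothetical, and the redundancy value is assumed to come from whichever measure one adopts. Nothing in the sketch enforces non-negativity of the resulting terms; that depends on the chosen redundancy measure.

```python
def pid_from_redundancy(i_t_y1, i_t_y2, i_t_y, redundancy):
    """Complete a bivariate PID (all values in bits) from a redundancy value R.

    Uses U_i = I(T;Y_i) - R and I(T;Y) = R + U_1 + U_2 + S.
    """
    unique_1 = i_t_y1 - redundancy
    unique_2 = i_t_y2 - redundancy
    synergy = i_t_y - redundancy - unique_1 - unique_2
    return {"R": redundancy, "U1": unique_1, "U2": unique_2, "S": synergy}


# XOR of two independent fair bits: I(T;Y1) = I(T;Y2) = 0 and I(T;Y) = 1 bit,
# so any redundancy measure bounded by the marginal informations gives R = 0.
xor_pid = pid_from_redundancy(0.0, 0.0, 1.0, redundancy=0.0)
```

For the XOR example all of the information ends up in the synergy term, as expected.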

Spawned by that initial work, other measures and axioms for information decomposition have been introduced; see, for example, the work by Bertschinger et al. [15], Griffith and Koch [16], and James et al. [17]. Apart from the Williams-Beer axioms, there is no consensus about which axioms a measure should satisfy, or about whether a given measure captures the information that it should capture. The debate about which axioms a measure of redundant information should satisfy is still ongoing, and there is no general agreement on what constitutes an appropriate PID [17,18,19,20,21].

Recently, Kolchinsky [12] suggested a new general approach to define measures of redundant information, also known as intersection information (II), the designation that we adopt hereinafter. At the core of that approach is the choice of an order relation between information sources (random variables), which allows comparing two sources in terms of how informative they are with respect to the target variable. Every order relation that satisfies a set of axioms introduced by Kolchinsky [12] yields a valid II measure.

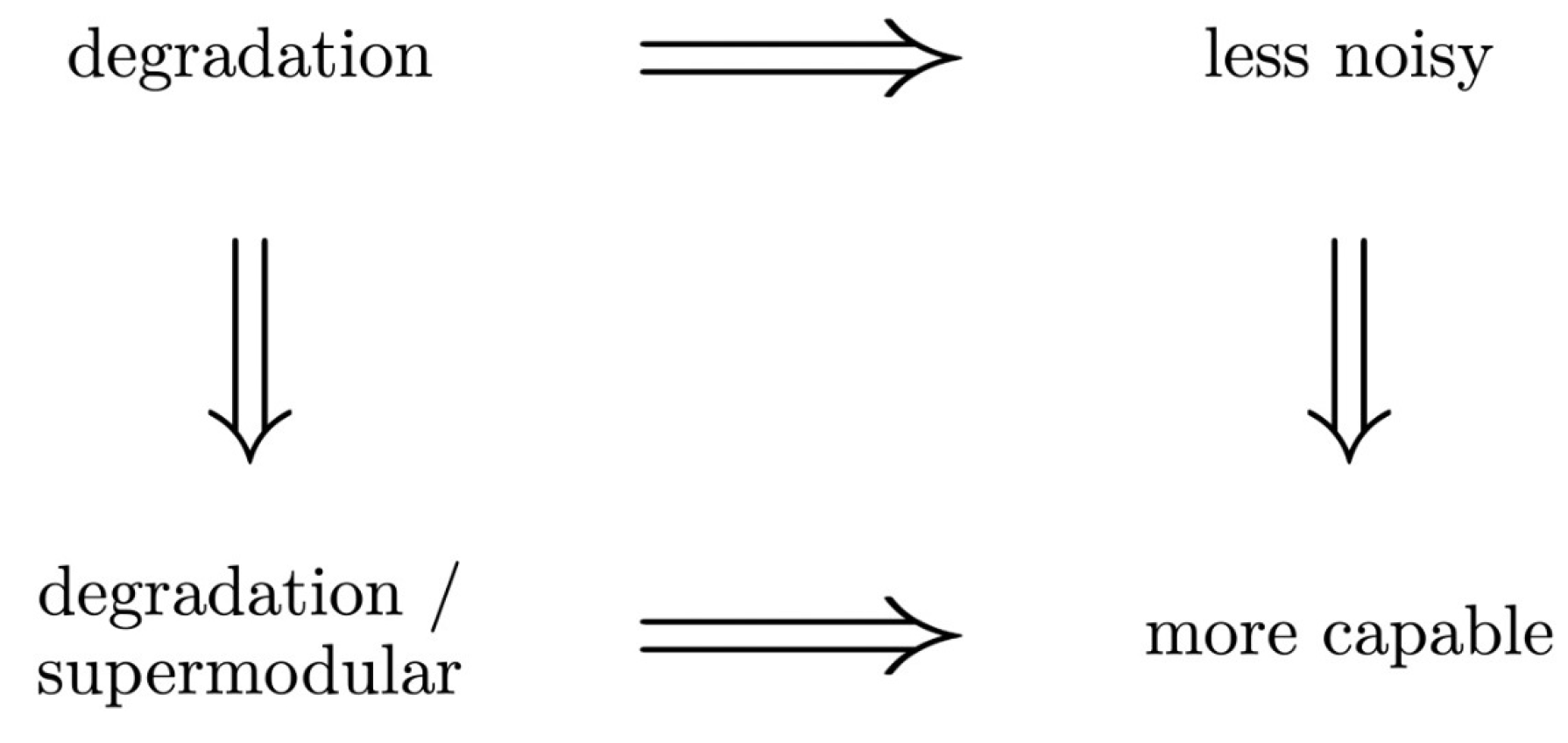

In this work, we take previously studied partial orders between communication channels, which correspond to partial orders between the corresponding output variables in terms of information content with respect to the input. Following Kolchinsky’s approach, we show that those orders lead to the definition of new II measures. The rest of the paper is organized as follows. In Section 2 and Section 3, we review Kolchinsky’s definition of an II measure and the degradation order. In Section 4, we describe some partial orders between channels, based on the work by Körner and Marton [22], derive the resulting II measures, and study some of their properties. Section 5 presents and comments on the optimization problems involved in the computation of the proposed measures. In Section 6, we explore the relationships between the new II measures and previous PID approaches, and we apply the proposed II measures to some well-known PID problems. Section 7 concludes the paper by pointing out some suggestions for future work.

3. Channels and the Degradation/Blackwell Order

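Given two discrete random variables $X \in \mathcal{X}$ and $Z \in \mathcal{Z}$, the corresponding conditional distribution $p(z|x)$ corresponds, in an information-theoretical perspective, to a discrete memoryless channel with a channel matrix $K$, i.e., such that $K[x, z] = p(z|x)$ [11]. This matrix is row-stochastic: $K[x, z] \ge 0$, for any $x \in \mathcal{X}$ and $z \in \mathcal{Z}$, and $\sum_{z \in \mathcal{Z}} K[x, z] = 1$.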
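As a concrete illustration of the channel perspective, the sketch below builds a channel matrix from a joint distribution and checks that it is row-stochastic. It is a minimal example under stated assumptions: the helper names `channel_from_joint` and `is_row_stochastic` and the numerical values of the joint distribution are hypothetical and are not taken from this work.

```python
def channel_from_joint(joint):
    """Given joint[x][z] = p(x, z), return K with K[x][z] = p(z | x)."""
    channel = []
    for row in joint:
        p_x = sum(row)
        if p_x <= 0:
            raise ValueError("every input symbol needs positive probability")
        channel.append([p_xz / p_x for p_xz in row])
    return channel


def is_row_stochastic(matrix, tol=1e-12):
    """Check non-negative entries and unit row sums, up to a tolerance."""
    return all(
        all(entry >= -tol for entry in row) and abs(sum(row) - 1.0) <= tol
        for row in matrix
    )


# Hypothetical joint distribution of two binary variables.
joint = [[0.4, 0.1],
         [0.1, 0.4]]
K = channel_from_joint(joint)
```

Here each row of `K` is the conditional distribution of the output given one input symbol, so the joint above corresponds to a binary symmetric channel with crossover probability 0.2.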

The comparison of different channels (equivalently, different stochastic matrices) is an object of study with many applications in different fields [23]. That study addresses order relations between channels and their properties. One such order, named degradation order (or Blackwell order) and defined next, was used by Kolchinsky to obtain a particular II measure [12].

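Consider the distribution $p(y_1, \dots, y_n, t)$ and the channels $K^{(i)}$ between $T$ and each $Y_i$, that is, $K^{(i)}$ is a $|\mathcal{T}| \times |\mathcal{Y}_i|$ row-stochastic matrix with the conditional distribution $p(y_i|t)$.

Definition 1.

We say that channel $K^{(i)}$ is a degradation of channel $K^{(j)}$, and write $K^{(i)} \preceq_d K^{(j)}$ or $Y_i \preceq_d Y_j$, if there exists a channel $K^U$ from $Y_j$ to $Y_i$, i.e., a $|\mathcal{Y}_j| \times |\mathcal{Y}_i|$ row-stochastic matrix, such that $K^{(i)} = K^{(j)} K^U$.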

Intuitively, consider 2 agents, one with access to $Y_i$ and the other with access to $Y_j$. The agent with access to $Y_j$ has at least as much information about $T$ as the one with access to $Y_i$, because it has access to channel $K^U$, which allows sampling from $Y_i$, conditionally on $Y_j$ [20]. Blackwell [24] showed that this is equivalent to saying that, for whatever decision game where the goal is to predict $T$ and for whatever utility function, the agent with access to $Y_i$ cannot do better, on average, than the agent with access to $Y_j$.
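The degradation relation can be illustrated numerically. The sketch below composes a channel with a garbling channel, which by construction produces a degradation, and then compares the mutual information of both channels with the target. It is only an illustration under stated assumptions: the matrices `K_j` and `K_U` are hypothetical, and the code does not decide whether a garbling channel exists for two arbitrary channels (that would require solving a linear feasibility problem).

```python
from math import log2


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def mutual_information(p_t, channel):
    """I(T;Y) in bits for input distribution p_t and channel[t][y] = p(y | t)."""
    n_out = len(channel[0])
    p_y = [sum(p_t[t] * channel[t][y] for t in range(len(p_t)))
           for y in range(n_out)]
    total = 0.0
    for t, pt in enumerate(p_t):
        for y in range(n_out):
            joint = pt * channel[t][y]
            if joint > 0:
                total += joint * log2(channel[t][y] / p_y[y])
    return total


K_j = [[0.9, 0.1], [0.2, 0.8]]   # hypothetical channel from T to Y_j
K_U = [[0.7, 0.3], [0.3, 0.7]]   # hypothetical garbling channel from Y_j to Y_i
K_i = matmul(K_j, K_U)           # K_i is a degradation of K_j by construction

p_t = [0.5, 0.5]
info_j = mutual_information(p_t, K_j)
info_i = mutual_information(p_t, K_i)
```

By the data processing inequality, `info_i` cannot exceed `info_j` for any choice of `p_t`, which is the mutual-information face of the statement that the agent observing $Y_i$ cannot outperform the agent observing $Y_j$.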

Based on the degradation/Blackwell order, Kolchinsky [12] introduced the degradation II measure, by plugging the “$\preceq_d$” order in (2):

$$I_\cap^d(Y_1, \dots, Y_n \to T) := \sup_Q I(Q; T) \quad \text{s.t.} \quad \forall i,\; Q \preceq_d Y_i. \qquad (3)$$

As noted by Kolchinsky [12], this II measure has the following operational interpretation. Suppose $n = 2$ and consider agents 1 and 2, with access to variables $Y_1$ and $Y_2$, respectively. Then $I_\cap^d(Y_1, Y_2 \to T)$ is the maximum information that agent 1 (resp. 2) can have w.r.t. $T$ without being able to do better than agent 2 (resp. 1) on any decision problem that involves guessing $T$. That is, the degradation II measure quantifies the existence of a dominating strategy for any guessing game.

5. Optimization Problems

We now focus on some observations about the optimization problems of the introduced II measures. All problems seek to maximize $I(Q; T)$ (under different constraints) as a function of the conditional distribution $p(q|t)$, equivalently with respect to the channel from $T$ to $Q$, which we will denote as $K^Q$. For fixed $p(t)$, as is the case in PID, $I(Q; T)$ is a convex function of $K^Q$ [11, Theorem 2.7.4]. As we will see, the admissible region of all problems is a compact set and, since $I(Q; T)$ is a continuous function of the parameters of $K^Q$, the supremum will be achieved, thus we replace sup with max.

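As noted by Kolchinsky [12], the computation of (3) involves only linear constraints, and since the objective function is convex, its maximum is attained at one of the vertices of the admissible region. The computation of the other measures is not as simple. To solve (4), we may use one of the necessary and sufficient conditions presented by Makur and Polyanskiy [25, Theorem 1]. For instance, let $V$ and $W$ be two channels with input $T$, and $\Delta_T$ be the probability simplex of the target $T$. Then, $V \preceq_{ln} W$ if and only if, for any pair of distributions $p(t), q(t) \in \Delta_T$, the inequality

$$\chi^2\big(p(t) W \,\|\, q(t) W\big) \ge \chi^2\big(p(t) V \,\|\, q(t) V\big) \qquad (10)$$

holds, where $\chi^2(\cdot \,\|\, \cdot)$ denotes the $\chi^2$-distance between two vectors. Notice that $p(t) W$ is the distribution of the output of channel $W$ for input distribution $p(t)$; thus, intuitively, the condition in (10) means that the two output distributions of the less noisy channel are more different from each other than those of the other channel. Hence, computing $I_\cap^{ln}(Y_1, \dots, Y_n \to T)$ can be formulated as solving the problem

$$\max_{K^Q} I(Q; T) \quad \text{s.t.} \quad K^Q \text{ is row-stochastic}, \quad \forall p(t), q(t) \in \Delta_T,\ \forall i:\ \chi^2\big(p(t) K^{(i)} \,\|\, q(t) K^{(i)}\big) \ge \chi^2\big(p(t) K^Q \,\|\, q(t) K^Q\big).$$

Although the restriction set is convex since the $\chi^2$-divergence is an $f$-divergence, with $f$ convex [26], the problem is intractable because we have an infinite (uncountable) number of restrictions. One may construct a set $\mathcal{S}$ by taking an arbitrary number of samples $S$ of $p(t) \in \Delta_T$ to define the problem

$$\max_{K^Q} I(Q; T) \quad \text{s.t.} \quad K^Q \text{ is row-stochastic}, \quad \forall p(t), q(t) \in \mathcal{S},\ \forall i:\ \chi^2\big(p(t) K^{(i)} \,\|\, q(t) K^{(i)}\big) \ge \chi^2\big(p(t) K^Q \,\|\, q(t) K^Q\big).$$

Because it keeps only a subset of the restrictions, the above problem yields an upper bound on $I_\cap^{ln}(Y_1, \dots, Y_n \to T)$. To compute $I_\cap^{mc}(Y_1, \dots, Y_n \to T)$, we define the problem

$$\max_{K^Q} I(Q; T) \quad \text{s.t.} \quad K^Q \text{ is row-stochastic}, \quad \forall p(t) \in \Delta_T,\ \forall i:\ I(Y_i; T) \ge I(Q; T),$$

where the mutual informations in the restrictions are evaluated under each input distribution $p(t) \in \Delta_T$. This also leads to a convex restriction set, because $I(Q; T)$ is a convex function of $K^Q$. We discretize the problem in the same manner to obtain a tractable version

$$\max_{K^Q} I(Q; T) \quad \text{s.t.} \quad K^Q \text{ is row-stochastic}, \quad \forall p(t) \in \mathcal{S},\ \forall i:\ I(Y_i; T) \ge I(Q; T),$$

which also yields an upper bound on $I_\cap^{mc}(Y_1, \dots, Y_n \to T)$. The final introduced measure, $I_\cap^{ds}(Y_1, \dots, Y_n \to T)$, is given by

$$\max_{K^Q} I(Q; T) \quad \text{s.t.} \quad K^Q \text{ is row-stochastic}, \quad \forall i:\ K^Q \preceq_{ds} K^{(i)}.$$

The proponents of the $\preceq_{ds}$ partial order have not yet found a condition to check if $K^{(1)} \preceq_{ds} K^{(2)}$ (private communication with one of the authors) and neither have we.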
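The sampled restrictions described above can be sketched as a simple violation search. The code below is a minimal illustration and not the implementation used in this work: it draws input distributions at random and reports whether any sample contradicts the $\chi^2$ condition (for the less noisy order) or the mutual information condition (for the more capable order). The function names, the sampling scheme, the tolerance, and the two example channels are all assumptions made for the illustration. Finding no violation among the samples is only a necessary check, which mirrors the fact that the discretized problems give upper bounds.

```python
import random
from math import log2


def push(p, channel):
    """Output distribution p K of a channel K[t][y] = p(y | t)."""
    return [sum(p[t] * channel[t][y] for t in range(len(p)))
            for y in range(len(channel[0]))]


def chi2(u, v):
    """Chi-squared distance sum_k (u_k - v_k)^2 / v_k, skipping zero-mass outputs."""
    return sum((a - b) ** 2 / b for a, b in zip(u, v) if b > 0)


def mutual_information(p, channel):
    """I(T;Y) in bits for input distribution p and channel K[t][y]."""
    p_y = push(p, channel)
    return sum(p[t] * k * log2(k / p_y[y])
               for t, row in enumerate(channel)
               for y, k in enumerate(row) if p[t] > 0 and k > 0)


def sample_simplex(dim, rng):
    """Draw an interior point of the probability simplex (normalized exponentials)."""
    weights = [rng.expovariate(1.0) + 1e-12 for _ in range(dim)]
    total = sum(weights)
    return [w / total for w in weights]


def violates_less_noisy(V, W, n_samples=2000, seed=0, tol=1e-12):
    """True if some sampled pair (p, q) contradicts 'W is less noisy than V'."""
    rng = random.Random(seed)
    for _ in range(n_samples):
        p, q = sample_simplex(len(V), rng), sample_simplex(len(V), rng)
        if chi2(push(p, W), push(q, W)) + tol < chi2(push(p, V), push(q, V)):
            return True
    return False


def violates_more_capable(V, W, n_samples=2000, seed=0, tol=1e-12):
    """True if some sampled p contradicts 'W is more capable than V'."""
    rng = random.Random(seed)
    for _ in range(n_samples):
        p = sample_simplex(len(V), rng)
        if mutual_information(p, W) + tol < mutual_information(p, V):
            return True
    return False


# Two binary symmetric channels; the noisier one (V) is a degradation of W,
# so no sampled violation of either order should be found in that direction.
W = [[0.9, 0.1], [0.1, 0.9]]
V = [[0.7, 0.3], [0.3, 0.7]]
```

In an actual solver, the sampled distributions would be turned into explicit restrictions on $K^Q$; the sketch only checks the conditions for two fixed channels.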

6. Relation with Existing PID Measures

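Griffith et al. [31] introduced a measure of II as

$$I_\cap^{\triangleleft}(Y_1, \dots, Y_n \to T) := \max_Q I(Q; T) \quad \text{s.t.} \quad \forall i,\; Q \triangleleft Y_i, \qquad (15)$$

with the order relation $\triangleleft$ defined by $A \triangleleft B$ if $A = f(B)$ for some deterministic function $f$. That is, $I_\cap^{\triangleleft}$ quantifies redundancy as the presence of deterministic relations between input and target. If $Q$ is a solution of (15), then there exist functions $f_1, \dots, f_n$, such that $Q = f_i(Y_i)$, $i = 1, \dots, n$, which implies that, for all $i$, $T - Y_i - Q$ is a Markov chain. Therefore, $Q$ is an admissible point of the optimization problem that defines $I_\cap^d(Y_1, \dots, Y_n \to T)$, thus we have that $I_\cap^{\triangleleft}(Y_1, \dots, Y_n \to T) \le I_\cap^d(Y_1, \dots, Y_n \to T)$.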

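Barrett [32] introduced the so-called minimum mutual information (MMI) measure of bivariate redundancy as

$$I_\cap^{MMI}(Y_1, Y_2 \to T) := \min\{I(T; Y_1),\, I(T; Y_2)\}.$$

It turns out that, if $(Y_1, Y_2, T)$ are jointly Gaussian, then most of the introduced PIDs in the literature are equivalent to this measure [32]. As noted by Kolchinsky [12], it may be generalized to more than two sources,

$$I_\cap^{MMI}(Y_1, \dots, Y_n \to T) := \sup_Q I(Q; T) \quad \text{s.t.} \quad \forall i,\; I(Q; T) \le I(Y_i; T),$$

which allows us to trivially conclude that, for any set of variables $Y_1, \dots, Y_n, T$,

$$I_\cap^{mc}(Y_1, \dots, Y_n \to T) \le I_\cap^{MMI}(Y_1, \dots, Y_n \to T).$$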
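Since the MMI measure reduces to the smallest marginal mutual information, it is straightforward to compute from a joint distribution. The sketch below is a minimal illustration, not the code used in this work; the function names and the dictionary-based representation of the joint distribution are assumptions. It is applied to the AND gate with independent, equiprobable binary inputs.

```python
from math import log2


def mutual_information(joint):
    """I(A;B) in bits from a dict {(a, b): probability}."""
    p_a, p_b = {}, {}
    for (a, b), p in joint.items():
        p_a[a] = p_a.get(a, 0.0) + p
        p_b[b] = p_b.get(b, 0.0) + p
    return sum(p * log2(p / (p_a[a] * p_b[b]))
               for (a, b), p in joint.items() if p > 0)


def mmi_redundancy(joint_tys):
    """min_i I(T;Y_i) from a dict {(t, y_1, ..., y_n): probability}."""
    n_sources = len(next(iter(joint_tys))) - 1
    values = []
    for i in range(1, n_sources + 1):
        pair = {}
        for outcome, p in joint_tys.items():
            key = (outcome[0], outcome[i])
            pair[key] = pair.get(key, 0.0) + p  # marginalize to (T, Y_i)
        values.append(mutual_information(pair))
    return min(values)


# AND gate: T = Y1 AND Y2 with independent, equiprobable binary inputs.
and_gate = {(y1 & y2, y1, y2): 0.25 for y1 in (0, 1) for y2 in (0, 1)}
r_mmi = mmi_redundancy(and_gate)
```

For the AND gate both sources are equally informative about the target, so the minimum coincides with either marginal mutual information (approximately 0.311 bits).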

One of the appeals of measures of II, as defined by Kolchinsky [12], is that it is the underlying partial order that determines what is intersection (or redundant) information. For example, take the degradation II measure, in the $n = 2$ case. Its solution, $Q$, satisfies $T \perp Q \mid Y_1$ and $T \perp Q \mid Y_2$, that is, if either $Y_1$ or $Y_2$ is known, $Q$ has no additional information about $T$. Such is not necessarily the case for the less noisy or the more capable II measures, that is, the solution $Q$ may have additional information about $T$ even when a source is known. However, the three proposed measures satisfy the following property: the solution $Q$ of the optimization problem that defines each of them satisfies

$$\forall i \in \{1, \dots, n\},\ \forall t:\quad I(Y_i; T = t) \ge I(Q; T = t),$$

where $I(\,\cdot\,; T = t)$ refers to the so-called specific information [1,33]. That is, independently of the outcome of $T$, $Q$ has no more specific information about $T = t$ than any source variable $Y_i$. This can be seen by noting that any of the introduced orders implies the more capable order. Such is not the case, for example, for $I_\cap^{MMI}$, which is arguably one of the reasons why it has been criticized for depending only on the amount of information, and not on its content [12]. As mentioned, there is not much consensus as to what properties a measure of II should satisfy. The three proposed measures for partial information decomposition do not satisfy the so-called Blackwell property [15,34]:

Definition 7. An intersection information measure $I_\cap(Y_1, Y_2 \to T)$ is said to satisfy the Blackwell property if the equivalence $Y_1 \preceq_d Y_2 \iff I_\cap(Y_1, Y_2 \to T) = I(T; Y_1)$ holds.

This definition is equivalent to demanding that $Y_1 \preceq_d Y_2$, if and only if $Y_1$ has no unique information about $T$. Although the $(\Rightarrow)$ implication holds for the three proposed measures, the reverse implication does not, as shown by specific examples presented by Körner and Marton [22], which we will mention below. If one defines the “more capable property” by replacing the degradation order with the more capable order in the original definition of the Blackwell property, then it is clear that measure $k$ satisfies the $k$ property, with $k$ referring to any of the three introduced intersection information measures.

Also often studied in PID is the identity property (IP) [14]. Let the target $T$ be a copy of the source variables, that is, $T = (Y_1, Y_2)$. An II measure $I_\cap$ is said to satisfy the IP if

$$I_\cap\big(Y_1, Y_2 \to (Y_1, Y_2)\big) = I(Y_1; Y_2).$$

Criticism was levied against this proposal for being too restrictive [17,35]. A less strict property was introduced by Ince [21], under the name independent identity property (IIP). If the target $T$ is a copy of the input, an II measure is said to satisfy the IIP if

$$I(Y_1; Y_2) = 0 \implies I_\cap\big(Y_1, Y_2 \to (Y_1, Y_2)\big) = 0.$$

Note that the IIP is implied by the IP, but the reverse does not hold. It turns out that all the introduced measures, just like the degradation II measure, satisfy the IIP, but not the IP, as we will show. This can be seen from (8), (9), and the fact that $I_\cap^{mc}\big(Y_1, Y_2 \to (Y_1, Y_2)\big)$ equals 0 if $I(Y_1; Y_2) = 0$, as we argue now. Consider the distribution where $T$ is a copy of $(Y_1, Y_2)$, presented in Table 1.

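We assume that each of the 4 events has non-zero probability. In this case, with the rows indexed by the outcomes $T = (0,0), (0,1), (1,0), (1,1)$, channels $K^{(1)}$ and $K^{(2)}$ are given by

$$K^{(1)} = \begin{bmatrix} 1 & 0 \\ 1 & 0 \\ 0 & 1 \\ 0 & 1 \end{bmatrix}, \qquad K^{(2)} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ 1 & 0 \\ 0 & 1 \end{bmatrix}.$$

Note that for any distribution $p(t) = [p(0,0),\, p(0,1),\, p(1,0),\, p(1,1)]$, if $p(1,0) = p(1,1) = 0$, then $I(T; Y_1) = 0$, which implies that, for any of such distributions, the solution $Q$ of (12) must satisfy $I(Q; T) = 0$, thus the first and second rows of $K^Q$ must be the same. The same goes for any distribution $p(t)$ with $p(0,0) = p(0,1) = 0$, so the third and fourth rows of $K^Q$ must also coincide. On the other hand, if $p(0,1) = p(1,1) = 0$ or $p(0,0) = p(1,0) = 0$, then $I(T; Y_2) = 0$, implying that $I(Q; T) = 0$ for such distributions. Hence, $K^Q$ must be a channel with identical rows (that is, a channel that satisfies $Q \perp T$), yielding $I_\cap^{mc}\big(Y_1, Y_2 \to (Y_1, Y_2)\big) = 0$.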

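Now recall the Gács-Körner common information [36] defined as

$$C(Y_1 \wedge Y_2) := \sup_Q H(Q) \quad \text{s.t.} \quad Q \triangleleft Y_1,\; Q \triangleleft Y_2.$$

We will use a similar argument and slightly change the notation to show the following result.

Theorem 2. Let $T = (X, Y)$ be a copy of the source variables. Then $I_\cap^{ln}(X, Y \to T) = I_\cap^{ds}(X, Y \to T) = I_\cap^{mc}(X, Y \to T) = C(X \wedge Y)$.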

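Proof. As shown by Kolchinsky [12], $I_\cap^d(X, Y \to T) = C(X \wedge Y)$. Thus, (8) implies that $I_\cap^{mc}(X, Y \to T) \ge C(X \wedge Y)$. The proof will be complete by showing that $I_\cap^{mc}(X, Y \to T) \le C(X \wedge Y)$. Construct the bipartite graph with vertex set $\mathcal{X} \cup \mathcal{Y}$ and edges $(x, y)$ if $p(x, y) > 0$. Consider the set of maximally connected components $MCC = \{CC_1, \dots, CC_l\}$, for some $l \ge 1$, where each $CC_i$ refers to a maximal set of connected edges. Let $CC_i$, $i \le l$, be an arbitrary set in $MCC$. Suppose the edges $(x_1, y_1)$ and $(x_1, y_2)$, with $y_1 \ne y_2$, are in $CC_i$. This means that the channels $K^X$ and $K^Y$ (from $T$ to $X$ and from $T$ to $Y$, respectively) have rows corresponding to the outcomes $T = (x_1, y_1)$ and $T = (x_1, y_2)$ of the form

$$K^X = \begin{bmatrix} \vdots \\ 0 \cdots 0\ 1\ 0 \cdots 0 \\ 0 \cdots 0\ 1\ 0 \cdots 0 \\ \vdots \end{bmatrix}, \qquad K^Y = \begin{bmatrix} \vdots \\ 0 \cdots 0\ 1\ 0\ 0 \cdots 0 \\ 0 \cdots 0\ 0\ 1\ 0 \cdots 0 \\ \vdots \end{bmatrix},$$

that is, the two rows of $K^X$ coincide (both select $x_1$), whereas the two rows of $K^Y$ differ (they select $y_1$ and $y_2$). Choosing $p(t) = [0, \dots, 0, a, 1 - a, 0, \dots, 0]$, that is, $p(T = (x_1, y_1)) = a$ and $p(T = (x_1, y_2)) = 1 - a$, we have that, $\forall a \in [0, 1]$, $I(X; T) = 0$, which implies that the solution $Q$ must be such that, $\forall a \in [0, 1]$, $I(Q; T) = 0$ (from the definition of the more capable order), which in turn implies that the rows of $K^Q$ corresponding to these outcomes must be the same, so that they yield $I(Q; T) = 0$ under this set of distributions. We may choose the values of those rows to be the same as those rows from $K^X$, that is, a row that is composed of zeros except for one of the positions whenever $T = (x_1, y_1)$ or $T = (x_1, y_2)$. On the other hand, if the edges $(x_1, y_1)$ and $(x_2, y_1)$, with $x_1 \ne x_2$, are also in $CC_i$, the same argument leads to the conclusion that the rows of $K^Q$ corresponding to the outcomes $T = (x_1, y_1)$, $T = (x_1, y_2)$, and $T = (x_2, y_1)$ must be the same. Applying this argument to every edge in $CC_i$, we conclude that the rows of $K^Q$ corresponding to outcomes $(x, y) \in CC_i$ must all be the same. Using this argument for every set $CC_1, \dots, CC_l$ implies that if two edges are in the same CC, the corresponding rows of $K^Q$ must be the same. These corresponding rows of $K^Q$ may vary between different CCs, but for the same CC, they must be the same.

We are left with the choice of appropriate rows of $K^Q$ for each corresponding $CC_i$. Since $I(Q; T)$ is maximized by a deterministic relation between $Q$ and $T$, and as suggested before, we choose a row that is composed of zeros except for one of the positions, for each $CC_i$, so that $Q$ is a deterministic function of $T$. This admissible point $Q$ implies that $Q = f_1(X)$ and $Q = f_2(Y)$, since $X$ and $Y$ are also functions of $T$, under the channel perspective. For this choice of rows, we have

$$I_\cap^{mc}(X, Y \to T) = I(Q; T) \le H(Q) \le \sup_{Q':\, Q' \triangleleft X,\ Q' \triangleleft Y} H(Q') = C(X \wedge Y),$$

where we have used the fact that $I(Q; T) \le H(Q)$ to conclude that $I_\cap^{mc}(X, Y \to T) \le C(X \wedge Y)$. Hence, since the measures based on the other two orders lie between $I_\cap^d$ and $I_\cap^{mc}$, all three proposed measures equal $C(X \wedge Y)$ if $T$ is a copy of the input. □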
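The graph construction used in the proof also gives a direct way to compute the Gács-Körner common information: it is the entropy of the label of the connected component that contains the observed pair. The sketch below is a minimal illustration of that construction, not code from this work; the function name `gacs_korner` and the dictionary representation of $p(x, y)$ are assumptions, and the components are found with a small union-find structure.

```python
from math import log2


def gacs_korner(joint_xy):
    """C(X ^ Y) in bits from a dict {(x, y): probability}."""
    parent = {}

    def find(node):
        # Follow parent links to the component representative (path halving).
        while parent[node] != node:
            parent[node] = parent[parent[node]]
            node = parent[node]
        return node

    def union(a, b):
        parent[find(a)] = find(b)

    # Vertices are tagged so that equal symbols of X and Y stay distinct.
    support = [(x, y) for (x, y), p in joint_xy.items() if p > 0]
    for x, y in support:
        parent.setdefault(("x", x), ("x", x))
        parent.setdefault(("y", y), ("y", y))
    for x, y in support:
        union(("x", x), ("y", y))  # one edge per pair with p(x, y) > 0

    # Probability mass of each connected component.
    mass = {}
    for x, y in support:
        root = find(("x", x))
        mass[root] = mass.get(root, 0.0) + joint_xy[(x, y)]
    return -sum(p * log2(p) for p in mass.values() if p > 0)


# Independent fair bits: the support graph is connected, so nothing is common.
independent = {(x, y): 0.25 for x in (0, 1) for y in (0, 1)}
# Perfectly correlated fair bits: two components, one bit in common.
correlated = {(0, 0): 0.5, (1, 1): 0.5}
```

With full support the graph has a single component and the common information vanishes, which is the situation behind the independent identity property discussed above.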

Bertschinger et al. [15] suggested what later became known as the (*) assumption, which states that, in the bivariate source case, any sensible measure of unique information should only depend on $K^{(1)}$, $K^{(2)}$, and $p(t)$. It is not clear that this assumption should hold for every PID. It is trivial to see that all the introduced II measures satisfy the (*) assumption.

We conclude with some applications of the proposed measures to well-known (bivariate) PID problems, with results shown in Table 2. Due to channel design in these problems, the computation of the proposed measures is fairly trivial. We assume the input variables are binary (taking values in $\{0, 1\}$), independent, and equiprobable.

We note that in these fairly simple toy distributions, all the introduced measures yield the same value. This is not surprising when the distribution $p(t, y_1, y_2)$ yields $K^{(1)} = K^{(2)}$, which implies that $I_\cap^k(Y_1, Y_2 \to T) = I(T; Y_1) = I(T; Y_2)$, where $k$ refers to any of the introduced partial orders, as is the case in the $T = Y_1\ \mathrm{AND}\ Y_2$ and $T = Y_1 + Y_2$ examples. Less trivial examples lead to different values over the introduced measures. We present distributions that show that our three introduced measures lead to novel information decompositions by comparing them to the following existing measures: $I_\cap^{\triangleleft}$ from Griffith et al. [31], $I_\cap^{MMI}$ from Barrett [32], $I_\cap^{WB}$ from Williams and Beer [1], $I_\cap^{GH}$ from Griffith and Ho [37], $I_\cap^{Ince}$ from Ince [21], $I_\cap^{FL}$ from Finn and Lizier [38], $I_\cap^{BROJA}$ from Bertschinger et al. [15], $I_\cap^{Harder}$ from Harder et al. [14] and $I_\cap^{dep}$ from [17]. We use the dit package [39] to compute them as well as the code provided in [12]. Consider counterexample 1 by [

22] with

, given by

These channels satisfy

(it is easy to numerically confirm this using (

10), whenever

T only takes two values) but

. This is an example that satisfies, for whatever distribution

,

. It is interesting to note that even though there is no degradation order between the two channels, we have that $I_\cap^d(Y_1, Y_2 \to T) > 0$ because there is a nontrivial channel $K^Q$ that satisfies $K^Q \preceq_d K^{(1)}$ and $K^Q \preceq_d K^{(2)}$

. We present various PID under different measures, after choosing

(which yields

) and assuming

.

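Table 3. Different decompositions of $p(t, y_1, y_2)$.

| $I_\cap^{\triangleleft}$ | $I_\cap^{d}$ | $I_\cap^{ln}$ | $I_\cap^{ds}$ | $I_\cap^{mc}$ | $I_\cap^{MMI}$ | $I_\cap^{WB}$ | $I_\cap^{GH}$ | $I_\cap^{Ince}$ | $I_\cap^{FL}$ | $I_\cap^{BROJA}$ | $I_\cap^{Harder}$ | $I_\cap^{dep}$ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.002 | 0.004 | * | 0.004 | 0.004 | 0.004 | 0.002 | 0.003 | 0.047 | 0.003 | 0.004 | 0 |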

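We write $I_\cap^{ds} = *$ because we do not yet have a way to find the ‘largest’ $Q$, such that $Q \preceq_{ds} Y_1$ and $Q \preceq_{ds} Y_2$. See counterexample 2 by Körner and Marton [22] for an example of channels that are comparable under the more capable order but not under the less noisy order, leading to different values of the proposed II measures. An example of channels that are comparable under the $\preceq_{ds}$ order but not under the degradation order is presented by Américo et al. [30, page 10], given by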

There is no stochastic matrix

, such that

, but

because

. Using (

10) one may check that there is no

less noisy relation between the two channels. We present the decomposition of

for the choice of

(which yields

) in

Table 4.

We write because we conjecture, after some numerical experiments, that the ‘largest’ channel that is less noisy than both and is a channel that satisfies .