The use of discriminant analysis algorithm as a classification algorithm was first proposed [

10]. In earlier years, researchers used artificial neural networks and multiple adaptive regression splines to establish a two-stage hybrid model for loan data, and compared it with linear discriminant analysis and logistic regression. They found that the classification performance of the two-stage hybrid model was superior to other classifiers [

11]. Wen-Hwa Chen et. al used SVM and BP neural networks to rate issuers in the financial market. For high-dimensional and imbalanced datasets, using multiple SVM classifiers one-on-one is more effective and accurate than using BP neural networks [

12]. With the continuous activity of SVM as a classifier research method, researchers have applied SVM classification algorithms to classify credit data on Australian and German credit datasets, and used genetic algorithms to optimize SVM parameters. After the experiment, comparative analysis was also conducted with the classification results of other classifiers, such as C4.5. The results show that SVM classifiers based on genetic algorithm optimization parameters have good classification performance [

13]. In addition to SVM, neural network is also an important classification method for credit scoring of financial institutions in commercial banks. Some scholars have studied neural network systems including probabilistic neural network and multi-layer feedforward neural network and compared them with traditional technologies such as discriminant analysis and logical regression, and conducted experiments on personal loan dataset of Bank of Egypt. The results show that, Neural network models have better performance compared to other classification techniques [

14]. Researchers gradually discovered that the variance of Bagging is smaller than that of other simple classifiers or predictors. Therefore, researchers gradually used Bagging for credit data classification, using performance metrics such as AUC and ROC to represent its classification accuracy. Through experiments, it can be seen that Bagging's classification performance is relatively superior to the performance of other simple classification predictors [

15]. After that, the researchers further analyzed the unbalanced data set of credit data. By under-sampling the adverse observation samples, they applied classification methods such as logical regression, linear and quadratic discriminant analysis, neural network, least squares support vector machine and so on to analyze and compare the balance data set after under-sampling, and used AUC as the evaluation indicator. The results show that, random forest classifier performs well on a large class of unbalanced data sets [

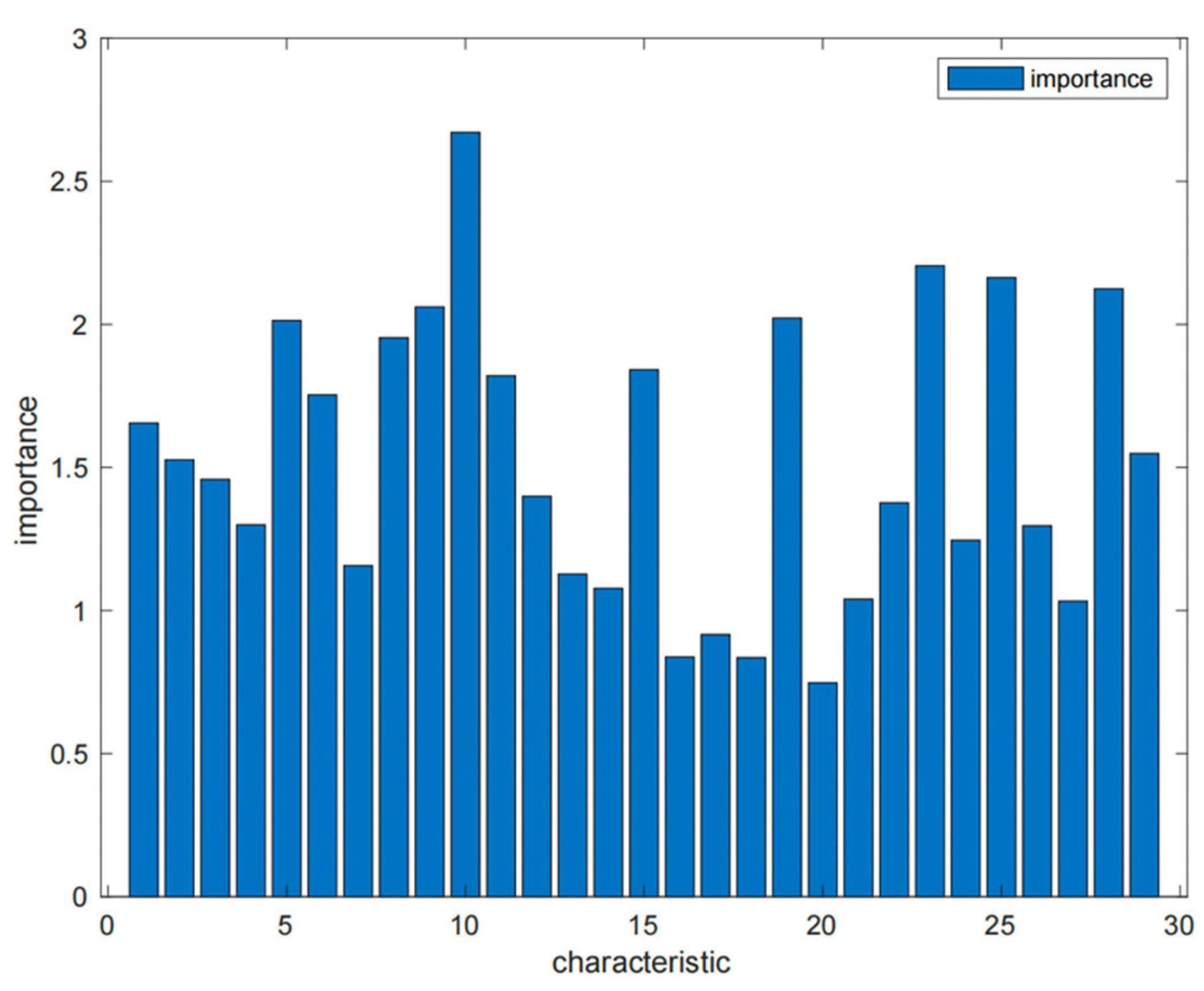

16]. In the past few years, it has been found that feature selection methods such as principal component analysis, genetic algorithm and information gain ratio are used to preprocess the data, and then random forest, SVM, Adaboost, Bagging and other classifiers are used to classify the data to get two categories of "good and bad". Then classification accuracy and AUC are used as evaluation indicators to evaluate the classification effect of the model. Finally, the use of principal component analysis as a feature engineering method, followed by the application of ANN Adaboost as a classification method, is the best model combination with the highest classification accuracy [

17]. In recent years, people's attention to imbalanced credit datasets has also increased. Some researchers use a DBN composed of three stages: partitioning data, training basic classifiers, and finally integrating data. After resampling, SVM is used to classify the data. DBN technology has been widely used in fields such as computer vision and acoustic modeling, but it is rarely applied to imbalanced datasets in the field of credit risk. Therefore, this study fills the gap in this field and proposes new methods to address imbalanced datasets [

18]. In recent years, with the development of P2P platform, user information has been continuously filled and improved, and the characteristics and dimensions of data sets have also gradually increased. Feature engineering is an important method to solve high-dimensional feature data sets. Some scholars have proposed a strategy of combining soft computing methods with expert knowledge, which combines subjective and objective methods to avoid subjective errors caused by personal emotions, but also takes into account human factors. Then use machine learning algorithms for classification, and use AUC as a performance evaluation indicator [

19]. Some researchers use SMOTE oversampling method to process unbalanced credit data sets, and then use C4.5, random forest, SVM, naive Bayesian, KNN and back-propagation neural network to classify the data, and then use accuracy, AUC, precision, recall, average absolute error to evaluate the classification effect of the classifier. Finally, it was found that SVM performs relatively well in classification [

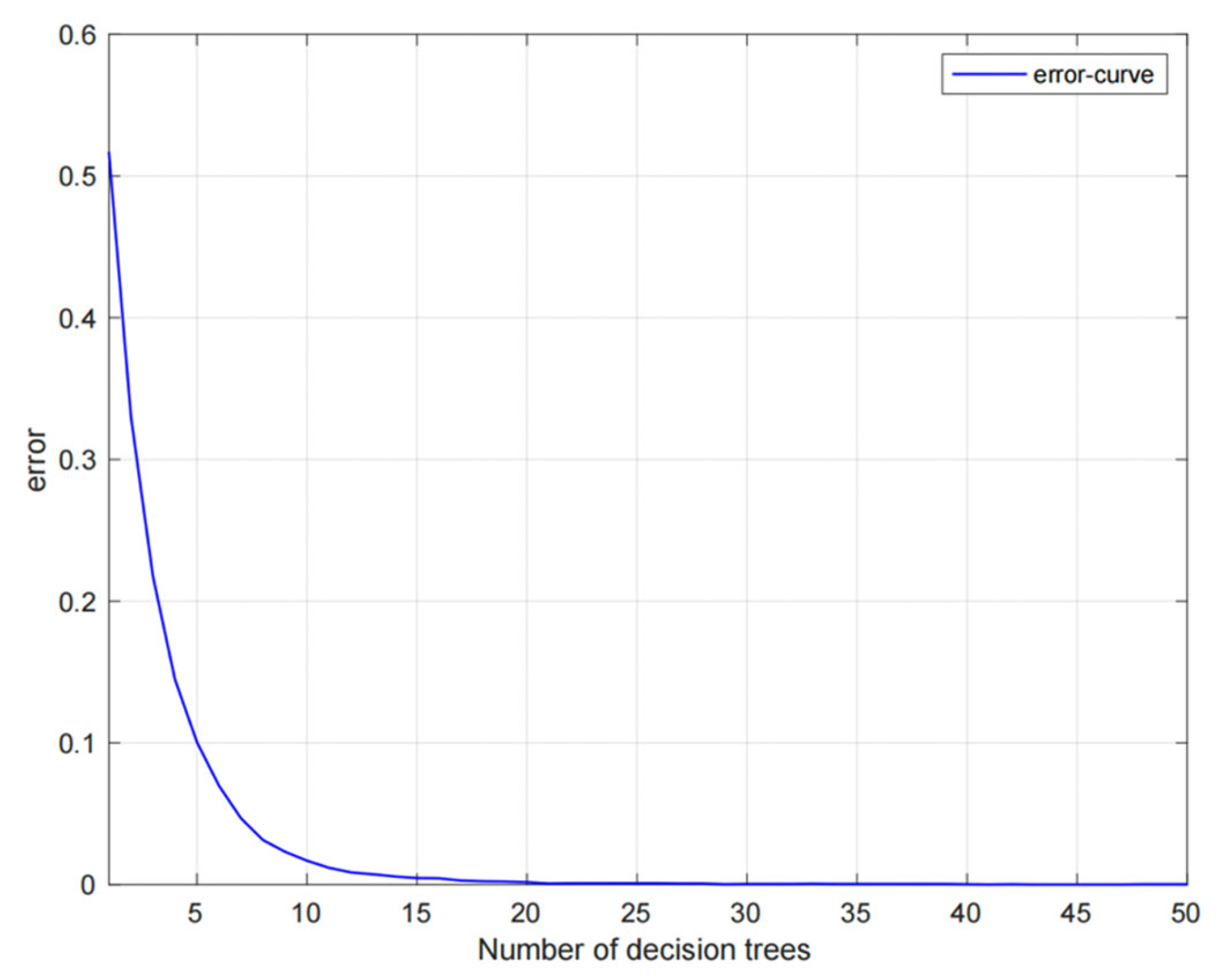

20]. In recent years, more and more researchers have studied the integration algorithm. As a kind of integration algorithm, random forest has attracted extensive attention. People have achieved significant results in applying it in the field of medicine. A researcher used six open metagenomic datasets of colorectal cancer with significant geographical differences, applied random forest to the dataset, and then compared with the AUC results of LASSO and SVM, found that random forest has the best classification performance in disease prediction, and the increase in the number of decision trees can improve the prediction performance of random forest model [

21]. In real life, when using the random forest algorithm, many unbalanced data sets will be encountered. To avoid the training complexity and overfitting problems caused by incorrect sampling methods, researchers first use the data center interpolation DCI to prevent the impact of category imbalance, and then use the improved sparrow search algorithm ISSA to optimize the random forest, and twelve common methods to deal with unbalanced data are compared on 20 real data sets. Finally, it is found that the optimized random forest method has the best classification performance [

22]. In order to effectively reduce the credit risk of banks, Sujuan Xu et al. used support vector machines to evaluate the credit of enterprises, screened 12 indicators and trained 10 sub support vector machines. Then, they used Bagging's method to improve generalization performance. In order to avoid not considering the output importance of sub support vector machine classifiers, they also introduced fuzzy sets to make up for the shortcomings. By conducting experiments on the dataset samples of science and technology innovation listed companies in the Shanghai and Shenzhen stock markets, and comparing the integration results of support vector machines with the experimental results of a single support vector machine, it was found that the classification accuracy of the integrated support vector machine was higher than that of a single support vector machine [

23]. Pawel Ziemba et al. first used filters, wrappers and embedded methods for credit data to preprocess data through saliency attributes, symmetric uncertainty, fast filters based on correlation and feature selection based on correlation, and then applied random forest, decision tree C4.5, naive Bayes classifier, k nearest neighbor Compared with logistic regression, these classification methods were tested on a huge dataset containing 91759 user information and 272 features, and AUC and Gini coefficient were used to evaluate the classification effect. The experimental results show that in most cases, the accuracy of random forest classifier is higher than other classifiers, and the effect is better [

24]. Siddhant Bagga et al. [

25] studied the credit risk of credit fraud. For highly unbalanced databases, they used logical regression, naive Bayes, random forest, k-nearest neighbor, multi-layer perceptron, Adaboost and Quadrant Discriminant Analysis to compare. The results showed that the effect of random forest classifier was more prominent. In order to help financial institutions reduce credit risk, Iain Brown et al. divided credit users into two categories to select credit portfolios and the most suitable credit scoring techniques. They conducted experiments on two real data sets obtained by major financial institutions in Benelux area, and applied and compared ten classifiers, namely, logistic regression, linear and quadratic discriminant analysis, neural network, least squares support vector machine, C4.5 decision tree, KNN, random forest, and gradient boosting, random forest and Gradient boosting have better classification effects on unbalanced data sets [

26]. The researchers used three real data sets obtained from different financial institutions to carry out experiments. They used batch learners such as logistic regression, decision tree, naive Bayes, random forest, and stream learners such as Hoeffding Tree, Hoeffding Adaptive Tree, Leveraging Bagging, and Adaptive Random Forest to conduct comparative experiments. The researchers used KS and PSI to evaluate the effect of the model. The experimental results showed that:, Stream learners have relatively more accurate classification performance [

27]. Marcos Roberto Machado et al. used two unsupervised algorithms, K-means and DBSACN, and supervised learning algorithms such as Adaboost, GB, decision tree, random forest, SVM and artificial neural network model to improve the accuracy of credit scoring prediction for users of financial institutions. The experiment was conducted on financial datasets provided by North American commercial banks, and researchers also combined supervised and unsupervised algorithms for the experiment. By comparing the unsupervised model with the supervised model, as well as the single model and the mixed model, the mixed model combining k-means and random forest has the best prediction effect on the MSE evaluation index [

28]. Indu Singh et al. used neural network, KNN, support vector machine and random forest as benchmark classifiers, and then used Bagging, boosting, stacking and other methods to aggregate the results of different benchmark classifiers to form a more robust integrated classifier. They used this classifier to classify credit users at multiple levels, and used PSO to optimize the clustering method. By conducting experiments on real public datasets obtained from the UCI machine learning library, the performance of the proposed methods was compared. The experimental results showed that using a clustering method based on multi-level classification PSO optimization can significantly improve classification accuracy [

29]. For credit card fraud, researchers proposed a machine learning method using random forest and support vector machine. First, random forest algorithm was used to select features to improve the accuracy of the model. Then, support vector machine was used to classify the data set generated by European transaction cardholders, and the accuracy of the model was evaluated through Accuracy, Recall and AUC. The experimental results show that the support vector machine classifier based on random forest can greatly improve the classification accuracy, up to 91% [

30]. Yueling Wang et al. used several classic machine learning methods, such as KNN, decision tree, random forest, naive Bayes, and logical regression, to classify loan applicants on a commercial bank loan information dataset, and used AUC, accuracy rate, and Recall as evaluation indicators for comparative analysis. The results show that using random forest to build a credit scoring model can more accurately predict the default of loan users [

31].
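Most of the studies above compare classifiers by AUC, and [27] also uses the KS statistic and the PSI. As an illustration only (not the code of any cited study), the sketch below computes all three in plain Python from predicted default scores. The function names `auc`, `ks_statistic`, and `psi` are hypothetical; AUC is computed as the Mann-Whitney probability that a positive (label 1) outscores a negative (label 0), KS as the largest gap between the two classes' empirical score distributions, and PSI from binned proportions of a reference ("expected") and a new ("actual") population.

```python
import math
from typing import Sequence


def _split(labels: Sequence[int], scores: Sequence[float]):
    """Separate scores of positives (label 1) and negatives (label 0)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    return pos, neg


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC: fraction of (positive, negative) pairs ranked correctly, ties count 1/2."""
    pos, neg = _split(labels, scores)
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def ks_statistic(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Largest absolute gap between the classes' empirical score CDFs."""
    pos, neg = _split(labels, scores)
    best = 0.0
    # The supremum of the gap between two step CDFs is reached at an observed score.
    for t in sorted(set(scores)):
        cdf_pos = sum(1 for s in pos if s <= t) / len(pos)
        cdf_neg = sum(1 for s in neg if s <= t) / len(neg)
        best = max(best, abs(cdf_pos - cdf_neg))
    return best


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population stability index over aligned bins: sum((a - e) * ln(a / e)).

    `expected` and `actual` are per-bin proportions; eps guards against empty bins.
    """
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total
```

For example, with labels `[0, 0, 1, 1]` and scores `[0.1, 0.4, 0.35, 0.8]`, three of the four positive-negative pairs are ranked correctly, so `auc` returns 0.75 and `ks_statistic` returns 0.5. The pairwise loop is quadratic in the number of samples, which is fine for illustration; a rank-based formulation scales better on large credit datasets.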

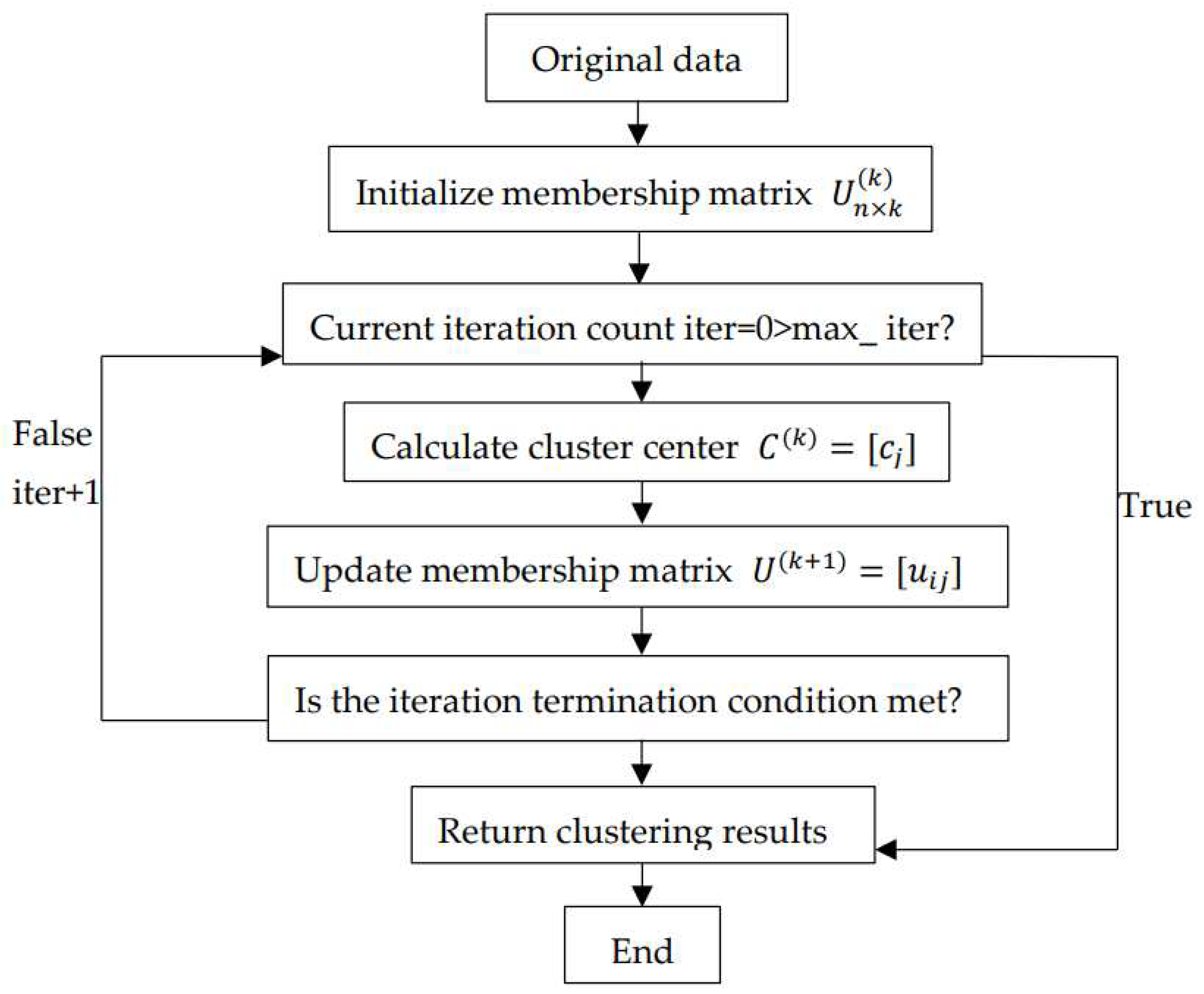

As the loan business of commercial banks and other financial institutions has grown, real user datasets have often turned out to be highly imbalanced. Researchers have studied this problem in depth, as summarized below. Zhao Zhao Xu et al. applied SMOTE in the medical field, where datasets are often highly imbalanced. They proposed KNSMOTE, a cluster-based oversampling algorithm that first clusters the data with k-means, then balances the datasets with SMOTE, and finally applies three ensemble algorithms: AdaBoost, Bagging, and random forest. Experiments on 13 datasets showed that combining KNSMOTE with random forest gave the most effective classification, with accuracy as high as 99.84% [32].

Lu Wang applied ensemble algorithms to an imbalanced credit risk dataset of listed companies, combining SMOTE oversampling, particle swarm optimization, and fuzzy clustering with the ensemble to balance the data. Experiments on 251 samples of companies listed on the Shanghai and Shenzhen Stock Exchanges from 2007 to 2016, evaluated by G-measure and F-measure, showed that the proposed improved SMOTE and fuzzy C-means (FCM) techniques can effectively predict corporate credit risk [33].

Dina Elreedy et al. analyzed SMOTE both theoretically and experimentally. To understand its behavior better, they ran experiments on artificial data generated from multivariate Gaussian distributions and on real datasets, classified the data with an RBF-kernel support vector machine and a KNN classifier after applying SMOTE, and compared the results with those obtained on the original data. The experiments, which were not limited to small-scale data, showed that SMOTE achieves better accuracy on large-scale datasets [34].

In the past, researchers mainly dealt with binary imbalanced data, but in the era of big data, growing data volumes have also brought more classes, giving rise to multi-class imbalanced data. One study used random forest and naive Bayes to classify five real multi-class datasets of different sizes, and the experiments showed that SMOTE balances multi-class datasets very effectively [35].
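SMOTE, used by several of the studies above, creates synthetic minority samples by interpolating between a minority sample and one of its k nearest minority neighbours. The following is a minimal pure-Python sketch under simplifying assumptions: features are numeric, distance is Euclidean, and the base sample is drawn at random rather than cycling through every minority sample as in the original formulation. The function `smote` and its parameters are illustrative, not the API of any particular library.

```python
import math
import random
from typing import List, Sequence


def smote(minority: Sequence[Sequence[float]], n_new: int, k: int = 5,
          seed: int = 0) -> List[List[float]]:
    """Return n_new synthetic minority samples (SMOTE-style interpolation).

    Each synthetic point is x + gap * (neighbour - x), where x is a randomly
    chosen minority sample, neighbour is one of its k nearest minority
    neighbours (Euclidean distance), and gap is drawn uniformly from [0, 1).
    """
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)  # cannot have more neighbours than other points
    synthetic: List[List[float]] = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        # Indices of all other minority samples, nearest first.
        others = sorted((j for j in range(len(minority)) if j != i),
                        key=lambda j: math.dist(x, minority[j]))
        neighbour = minority[rng.choice(others[:k])]
        gap = rng.random()
        # The new point lies on the segment between x and its neighbour.
        synthetic.append([xi + gap * (ni - xi) for xi, ni in zip(x, neighbour)])
    return synthetic
```

To balance a binary dataset, one would call `smote(minority_rows, n_majority - n_minority)` and add the returned rows, labelled as the minority class, to the training split only, so that the test split still reflects the real class distribution.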

Besides handling imbalanced datasets, optimizing the model is also an important task. For high-dimensional nonlinear problems, for example, choosing the SVM kernel function and optimizing its parameters are indispensable steps. Wencheng Huang et al. applied SVMs to classification in a railway dangerous goods transportation system. They optimized the SVM with a genetic algorithm, grid search, and particle swarm optimization, and used ROC curves and AUC to compare the strengths and weaknesses of the three methods. The three methods did not differ significantly in running time for this system, but the GA-optimized SVM was the most accurate [36].

Zhou Tao et al. used support vector machines to classify 1,252 cancer cases treated from 2013 to 2014 at a Grade A tertiary hospital in Yinchuan. To further improve classification performance, they optimized the SVM parameters with genetic algorithms and particle swarm optimization, and also combined PSO with principal component analysis (PCA), and GA with PCA, for feature selection. GA-based parameter optimization and feature selection performed significantly better than parameter optimization alone and than feature selection using PCA alone [37].

To calculate turbine heat rate more accurately, scholars modeled it with support vector machines, adopting the least-squares support vector machine (LS-SVM) instead of the traditional SVM to reduce computational complexity. Experiments on data from the last 17 days of May 2011 confirmed that the LS-SVM, with its optimal parameter combination determined by GSA, significantly reduces debugging time [38].

Marcelo N. Kapp et al. proposed a new method for optimizing SVM parameters that dynamically selects the optimal SVM model. Tests on 14 synthetic and real datasets showed that the dynamically optimized SVM model is very effective in fully dynamic environments [39].

Genetic algorithms have also played an important role in medicine. Ahmed Gailan Qasem et al. used genetic algorithms to optimize BP neural networks, SVM, CART, and KNN, combined them with ensemble methods such as Bagging, Boosting, and Stacking, and handled imbalanced datasets with SMOTE. Evaluating the models on real datasets with ROC curves, AUC, and other metrics confirmed that GA-based parameter optimization further improves classification accuracy [40].

Cheng Lung Huang et al. assessed credit default risk by dividing applicants into two classes, accepted and rejected. On two real datasets from the UCI Machine Learning Repository, they compared a standard SVM, a GA-optimized SVM, and a grid-search-optimized SVM with other classifiers such as BP neural networks and decision trees. SVMs performed well on this binary classification problem, and optimizing their parameters with a genetic algorithm further improved classification accuracy [41].

GA-SVM is also a commonly used machine learning tool for diagnosing business crises. Liang Hsuan Chen et al. applied GA-SVM to a real Taiwanese dataset containing financial and intellectual capital features and reached an accuracy of up to 95% [42].
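Several of the studies above tune SVM hyperparameters with a genetic algorithm. The sketch below shows the idea for an RBF-kernel SVM, searching over log2 C and log2 gamma. To keep it runnable without an SVM library, the fitness function `toy_cv_accuracy` is a HYPOTHETICAL smooth surface standing in for k-fold cross-validated accuracy; the search ranges in `BOUNDS`, the operators (binary tournament selection, blend crossover, Gaussian mutation, elitism), and all parameter values are assumptions rather than the settings of any cited study.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Genome = Tuple[float, float]  # (log2 C, log2 gamma)

# Assumed search ranges for log2 C and log2 gamma.
BOUNDS = ((-5.0, 15.0), (-15.0, 3.0))


def toy_cv_accuracy(log2_c: float, log2_gamma: float) -> float:
    """HYPOTHETICAL fitness standing in for cross-validated RBF-SVM accuracy.

    A smooth bump that peaks at log2 C = 3, log2 gamma = -5 (made-up values).
    """
    return 0.9 * math.exp(-((log2_c - 3) ** 2 + (log2_gamma + 5) ** 2) / 50)


def tournament(scored: Sequence[Tuple[Genome, float]], rng: random.Random) -> Genome:
    """Binary tournament: the fitter of two randomly drawn individuals wins."""
    a, b = rng.sample(list(scored), 2)
    return a[0] if a[1] >= b[1] else b[0]


def ga_search(fitness: Callable[[float, float], float], pop_size: int = 20,
              generations: int = 40, mutation_sd: float = 1.0,
              seed: int = 0) -> Tuple[Genome, float]:
    """Real-coded GA; returns the best (genome, fitness) pair it evaluated."""
    rng = random.Random(seed)
    pop: List[Genome] = [
        (rng.uniform(*BOUNDS[0]), rng.uniform(*BOUNDS[1])) for _ in range(pop_size)
    ]
    best: Tuple[Genome, float] = (pop[0], float("-inf"))
    for _ in range(generations):
        scored = [(g, fitness(*g)) for g in pop]
        gen_best = max(scored, key=lambda t: t[1])
        if gen_best[1] > best[1]:
            best = gen_best
        children: List[Genome] = [gen_best[0]]  # elitism: keep the best unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(scored, rng), tournament(scored, rng)
            w = rng.random()  # blend (arithmetic) crossover weight
            # Crossover, then Gaussian mutation, then clipping to the bounds.
            child = tuple(
                min(hi, max(lo, w * a + (1 - w) * b + rng.gauss(0.0, mutation_sd)))
                for a, b, (lo, hi) in zip(p1, p2, BOUNDS)
            )
            children.append(child)
        pop = children
    return best
```

In a real GA-SVM, `fitness` would train and cross-validate an SVM with `C = 2 ** log2_c` and `gamma = 2 ** log2_gamma`, which is far more expensive than the toy surface, so the population size and number of generations bound the total cost (here 20 × 40 = 800 fitness evaluations).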

These studies show that a classifier's performance varies from one credit dataset to another: no single classifier is clearly the best, and the choice must be based on the specific problem. For the credit dataset of the People's Bank of China, this article therefore uses classic classifiers such as GA-SVM, grid-search-optimized SVM (SVM Grid), BP neural networks, and decision trees to classify the data.
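The SVM Grid baseline tunes the SVM penalty C and the RBF kernel parameter gamma by exhaustive search. A minimal sketch, assuming the coarse exponential grid recommended in the LIBSVM practical guide (C = 2^-5, 2^-3, ..., 2^15 and gamma = 2^-15, 2^-13, ..., 2^3); `toy_cv_accuracy` is a HYPOTHETICAL stand-in for cross-validated SVM accuracy so that the block runs without an SVM library, and `grid_search` is an illustrative helper, not a library function.

```python
import itertools
import math
from typing import Callable, Iterable, Optional, Tuple

# log2-scaled coarse grid (assumed, following the LIBSVM practical guide)
C_GRID = range(-5, 16, 2)       # log2 C in {-5, -3, ..., 15}
GAMMA_GRID = range(-15, 4, 2)   # log2 gamma in {-15, -13, ..., 3}


def toy_cv_accuracy(log2_c: float, log2_gamma: float) -> float:
    """HYPOTHETICAL stand-in for k-fold cross-validated RBF-SVM accuracy."""
    return 0.9 * math.exp(-((log2_c - 3) ** 2 + (log2_gamma + 5) ** 2) / 50)


def grid_search(fitness: Callable[[float, float], float],
                c_grid: Iterable[float],
                gamma_grid: Iterable[float]) -> Tuple[Tuple[float, float], float]:
    """Evaluate every (log2 C, log2 gamma) pair; return the best pair and its score."""
    best: Optional[Tuple[Tuple[float, float], float]] = None
    for log2_c, log2_gamma in itertools.product(c_grid, gamma_grid):
        score = fitness(log2_c, log2_gamma)
        if best is None or score > best[1]:  # strict '>' keeps the first maximum
            best = ((log2_c, log2_gamma), score)
    if best is None:
        raise ValueError("empty grid")
    return best


(best_log2_c, best_log2_gamma), best_score = grid_search(
    toy_cv_accuracy, C_GRID, GAMMA_GRID)
print(f"C = 2^{best_log2_c}, gamma = 2^{best_log2_gamma}, score = {best_score:.3f}")
```

Grid search evaluates every combination (11 × 10 = 110 here) and is easy to parallelize, but its cost grows multiplicatively with each added hyperparameter and it can only return grid points; in practice a finer grid is often searched around the best coarse point.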