Submitted: 22 May 2023

Posted: 23 May 2023


Abstract

Keywords:

1. Introduction

1.1. Federated learning

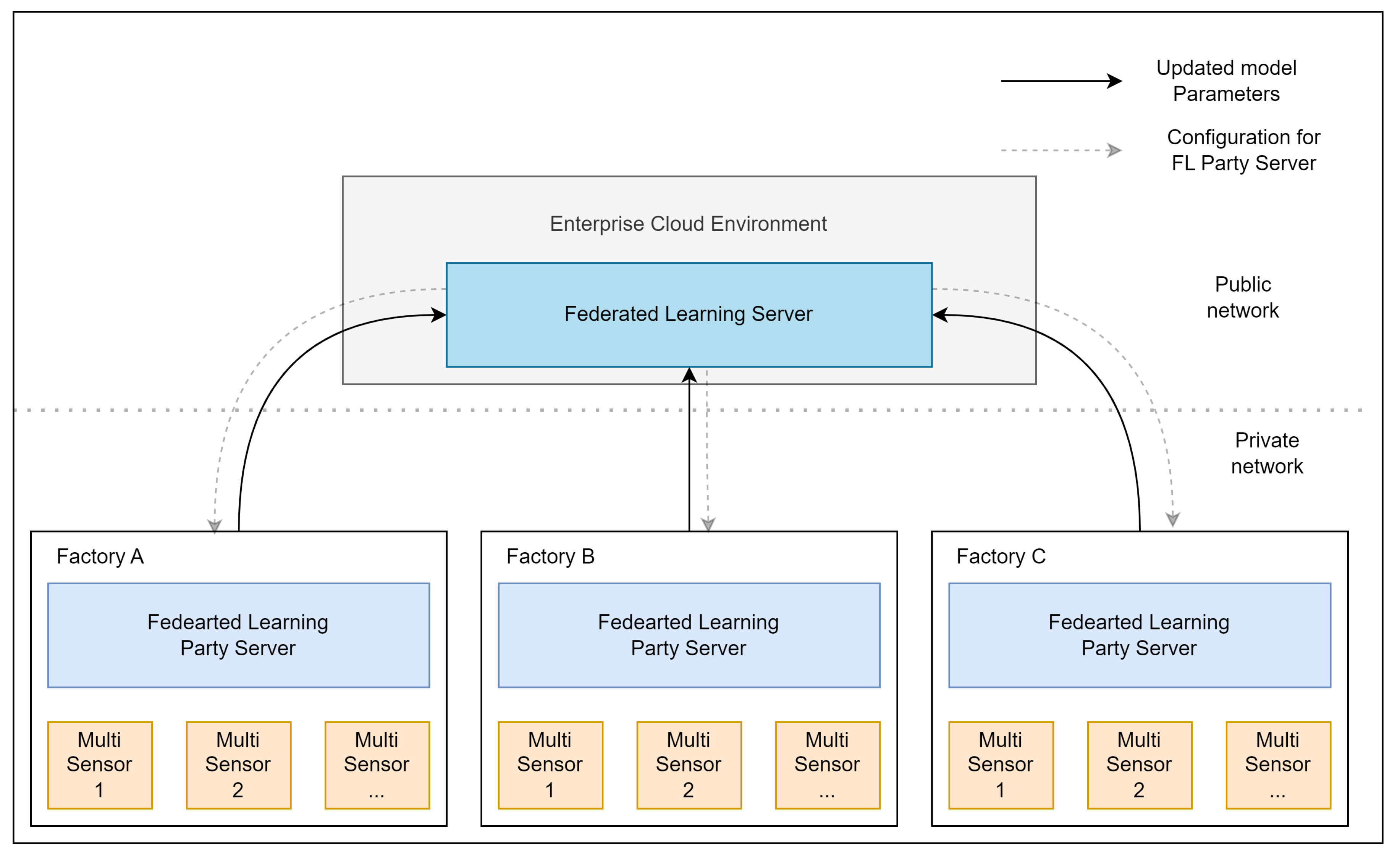

- A central server sends the initial version of an ML model to the participating clients. An example ML model is a neural network whose architecture defines the number of nodes, the activation functions, the number of hidden layers, etc.

- Each client fits the ML model to its own local data for a predefined number of passes (i.e., epochs). In this step, fitting means that the ML model recalculates its parameters to minimize the difference between its predicted outputs and the true labels, using a loss function (e.g., cross-entropy) and an optimization algorithm (e.g., stochastic gradient descent).

- Each client sends back only the updated model parameters (not the actual data), and the central server keeps track of each client’s response while waiting for the necessary quorum.

- Once the quorum is reached, the central server aggregates the updated parameters from the clients using a fusion algorithm and uses these aggregated parameters to improve the ML model.
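The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the platform's implementation: the toy linear model, the mean-squared-error loss with full-batch gradient descent (used here in place of cross-entropy and stochastic gradient descent for brevity), the sample-weighted average as the fusion algorithm, and all names (`ClientUpdate`, `local_fit`, `aggregate`, `run_round`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Params = List[float]                 # [w, b] of the toy model y = w * x + b (assumption)
Dataset = List[Tuple[float, float]]  # (x, y) pairs held locally by one client


@dataclass
class ClientUpdate:
    client_id: str
    params: Params      # updated parameters only; raw data never leaves the client
    num_samples: int    # used as the aggregation weight


def local_fit(global_params: Params, data: Dataset, epochs: int, lr: float) -> Params:
    """Step 2: refine the received parameters on local data for `epochs` passes."""
    w, b = global_params
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error, computed from the current w and b.
        grad_w = sum(2 * ((w * x + b) - y) * x for x, y in data) / n
        grad_b = sum(2 * ((w * x + b) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def aggregate(updates: List[ClientUpdate], quorum: int) -> Optional[Params]:
    """Step 4: sample-weighted average of client parameters once the quorum is met."""
    if len(updates) < quorum:
        return None  # step 3: keep waiting for more client responses
    total = sum(u.num_samples for u in updates)
    dim = len(updates[0].params)
    return [sum(u.params[i] * u.num_samples for u in updates) / total
            for i in range(dim)]


def run_round(global_params: Params, client_data: Dict[str, Dataset],
              quorum: int, epochs: int = 5, lr: float = 0.05) -> Params:
    """One round: broadcast (step 1), local fitting, collection, aggregation."""
    updates = [ClientUpdate(cid, local_fit(global_params, d, epochs, lr), len(d))
               for cid, d in client_data.items()]
    fused = aggregate(updates, quorum)
    # If the quorum is not reached, the global model is left unchanged.
    return global_params if fused is None else fused
```

Calling `run_round` repeatedly with the returned parameters corresponds to successive training rounds. Weighting by `num_samples` is one common design choice; other fusion algorithms could be substituted in `aggregate` without changing the rest of the loop.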

1.2. Predictive Maintenance

2. Materials and methods

2.1. Materials

2.2. Methods

| Index | Architecture | Description |
|---|---|---|
| 1 | [39] | Industrial Internet Reference Architecture (IIRA) |
| 2 | [36] | A Multi-Agent Approach for optimizing Federated Learning in Distributed Industrial IoT |
| 3 | [38] | Industrial Federated Learning (IFL) system |
| 4 | [40] | Architecture for federated analysis and learning in the healthcare domain |
| 5 | [41] | Scalable production system for Federated Learning in the domain of mobile devices |

- Data security: on-premises data storage

- Required communication protocols: LoRa network, public 5G network, and WiFi connectivity

- Sampling requirements: vibration sampling rate of at least 200 Hz

- Feedback signal: maximum feedback velocity

- Services: remote and VPN access to the multi-sensor platform (MSP)

- Storage capacity: storage for at least 6 months of data

- Battery capacity: sufficient for at least 1 week of MSP operation
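To make the sampling and storage requirements concrete, the following back-of-the-envelope sketch estimates the highest resolvable vibration frequency and the raw storage volume. Only the 200 Hz rate and the 6-month horizon come from the list above; the channel count (3 axes), the sample width (2 bytes), the 30-day month, and the function names are illustrative assumptions, and compression or metadata overhead is ignored.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def nyquist_frequency_hz(sampling_rate_hz: float) -> float:
    """Highest signal frequency that can be resolved at the given sampling rate."""
    return sampling_rate_hz / 2.0


def raw_storage_bytes(sampling_rate_hz: int, channels: int,
                      bytes_per_sample: int, days: int) -> int:
    """Uncompressed storage needed for continuous sampling over `days` days."""
    return sampling_rate_hz * channels * bytes_per_sample * SECONDS_PER_DAY * days


if __name__ == "__main__":
    rate_hz = 200          # minimum vibration sampling rate from the requirements
    days = 6 * 30          # 6 months, assuming 30-day months
    channels = 3           # assumption: tri-axial accelerometer
    bytes_per_sample = 2   # assumption: 16-bit samples

    print("Nyquist frequency [Hz]:", nyquist_frequency_hz(rate_hz))
    total = raw_storage_bytes(rate_hz, channels, bytes_per_sample, days)
    print("Raw storage [GB]:", total / 1e9)
```

Under these assumptions, the minimum sampling rate resolves vibration components up to 100 Hz, and the vibration channel alone needs under 20 GB for six months of continuous recording; higher rates or additional sensors scale the figure linearly.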

3. Architecture Setup

3.1. Multi sensor platform

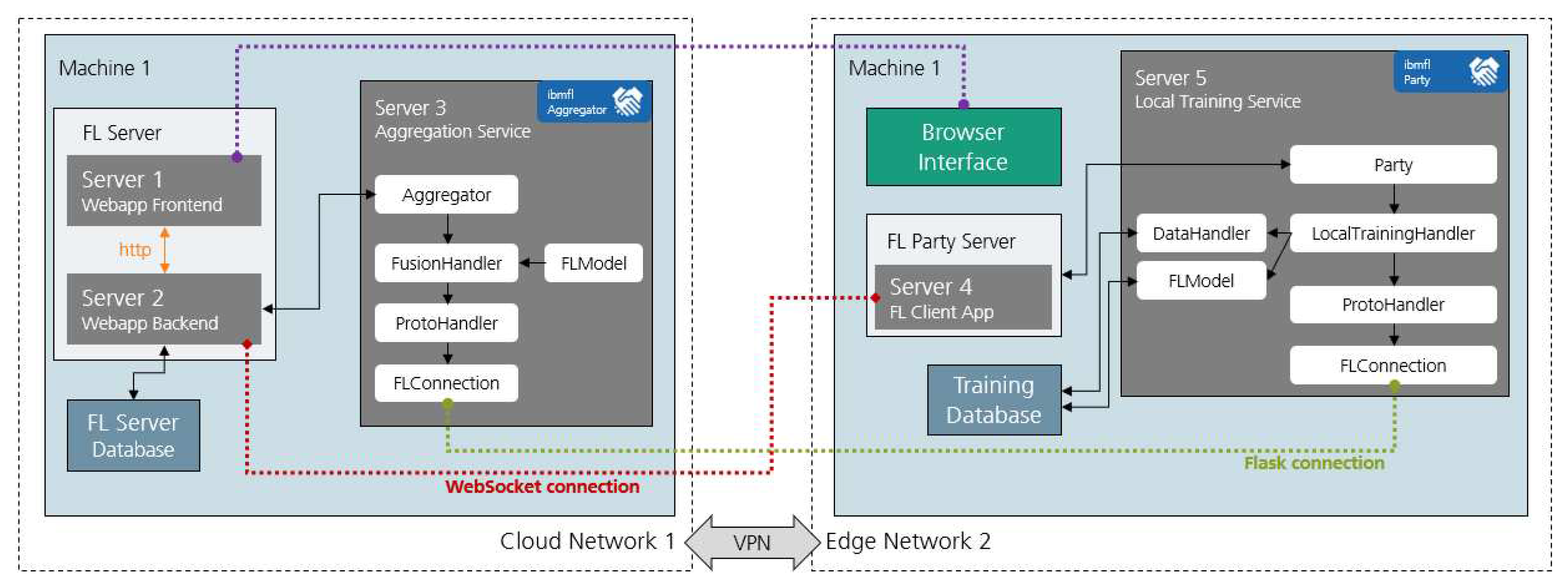

3.2. Federated Learning Platform

4. Conclusions

4.1. Adoption advantages

Cutting-edge maintenance strategy

Data privacy and governance

Federated Learning Operations

4.2. Implementation challenges

Data heterogeneity

Network congestion

Hardware acceleration

Hardware energy consumption

References

- Duan, L., Da Xu, L. Data Analytics in Industry 4.0: A Survey. Inf Syst Front (2021). [CrossRef]

- Krauß, J., Dorißen, J., Mende, H., Frye, M., Schmitt, R.H. (2019). Machine Learning and Artificial Intelligence in Production: Application Areas and Publicly Available Data Sets. In: Wulfsberg, J.P., Hintze, W., Behrens, BA. (eds) Production at the leading edge of technology. Springer Vieweg, Berlin, Heidelberg. [CrossRef]

- Cardoso, D.; Ferreira, L. Application of Predictive Maintenance Concepts Using Artificial Intelligence Tools. Appl. Sci. 2021, 11, 18. [Google Scholar] [CrossRef]

- Tam, A. S. B., Chan, W. M., & Price, J. W. H. (2006). Optimal maintenance intervals for a multi-component system. Production Planning and Control, 17(8), 769-779.

- Errandonea, I., Beltrán, S., & Arrizabalaga, S. (2020). Digital Twin for maintenance: A literature review. Computers in Industry, 123, 103316. [CrossRef]

- Budach, L., Feuerpfeil, M., Ihde, N., Nathansen, A., Noack, N., Patzlaff, H.,... & Naumann, F. (2022). The Effects of Data Quality on Machine Learning Performance.

- J. Chen and X. Ran, "Deep Learning With Edge Computing: A Review," in Proceedings of the IEEE, vol. 107, no. 8, pp. 1655-1674, Aug. 2019. [CrossRef]

- S. S. Schmitt, P. Mohanram, R. Padovani, N. König, S. Jung and R. H. Schmitt, "Meeting the Requirements of Industrial Production with a Versatile Multi-Sensor Platform Based on 5G Communication," 2020 IEEE 31st Annual International Symposium on Personal, Indoor and Mobile Radio Communications, 2020, pp. 1-5. [CrossRef]

- Deutsche IHK: Digitalisierungsumfrage 2021. Zeit für den digitalen Aufbruch. Die IHK-Umfrage zur Digitalisierung. Deutscher Industrie- und Handelskammertag e. V., 2021.

- Sotto, L., Treacy, B., & McLellan, M. (2010). Privacy and data security risks in cloud computing. World Communications Regulation Report, 5(2), 38.

- Ludwig, H., & Baracaldo, N. (2022). Introduction to Federated Learning. In H. Ludwig & N. Baracaldo (Eds.), Federated Learning: A Comprehensive Overview of Methods and Applications (pp. 1–23). [CrossRef]

- Manzini, R., Regattieri, A., Pham, H., & Ferrari, E. (2010). Maintenance for industrial systems (pp. 409-432). London: Springer.

- Brandner, M.; Fritz, T.: Predictive Maintenance. Basics, Strategies, Models. DLG Expert report 5/2019, 2019.

- Konečný, J., McMahan, H. B., Ramage, D., & Richtárik, P. (2016). Federated optimization: Distributed machine learning for on-device intelligence. arXiv preprint arXiv:1610.02527.

- Qiang Yang, Yang Liu, Tianjian Chen, and Yongxin Tong. 2019. Federated Machine Learning: Concept and Applications. ACM Trans. Intell. Syst. Technol. 10, 2, Article 12 (March 2019), 19 pages. [CrossRef]

- Popescu, T.D., Aiordachioaie, D. & Culea-Florescu, A. Basic tools for vibration analysis with applications to predictive maintenance of rotating machines: an overview. Int J Adv Manuf Technol 118, 2883–2899 (2022). [CrossRef]

- Tianfield, H. (2012). Security issues in cloud computing. 2012 IEEE International Conference on Systems, Man, and Cybernetics (SMC), 1082-1089.

- Becker, S., Styp-Rekowski, K., Stoll, O. V. L., & Kao, O. (2022). Federated Learning for Autoencoder-based Condition Monitoring in the Industrial Internet of Things. arXiv preprint arXiv:2211.07619.

- Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation) [2016] OJ L 119/1.

- Ludwig, H., Baracaldo, N., Thomas, G., Zhou, Y., Anwar, A., Rajamoni, S., … Others. (2020). IBM Federated Learning: an Enterprise Framework White Paper V0.1. arXiv preprint arXiv:2007.10987.

- Mohanram, P.; Passarella, A.; Zattoni, E.; Padovani, R.; König, N.; Schmitt, R.H. 5G-Based Multi-Sensor Platform for Monitoring of Workpieces and Machines: Prototype Hardware Design and Firmware. Electronics 2022, 11, 1619. [Google Scholar] [CrossRef]

- Pham, H., & Wang, H. Imperfect maintenance. European journal of operational research 1996, 94(3), 425–438.

- A. A. Kumar S., K. Ovsthus and L. M. Kristensen., "An Industrial Perspective on Wireless Sensor Networks — A Survey of Requirements, Protocols, and Challenges," in IEEE Communications Surveys & Tutorials, vol. 16, no. 3, pp. 1391-1412, Third Quarter 2014. [CrossRef]

- Attaran, M. The impact of 5G on the evolution of intelligent automation and industry digitization. J Ambient Intell Human Comput (2021). [CrossRef] [PubMed]

- Lee, J., Singh, J., & Azamfar, M. (2019). Industrial artificial intelligence. arXiv preprint arXiv:1908.02150.

- Pham, Q. V., Dev, K., Maddikunta, P. K. R., Gadekallu, T. R., & Huynh-The, T. (2021). Fusion of federated learning and industrial internet of things: a survey. arXiv preprint arXiv:2101.00798.

- A. Husakovic, E. Pfann, and M. Huemer, “Robust machine learning based acoustic classification of a material transport process,” in 2018 14th Symposium on Neural Networks and Applications (NEUREL). IEEE, 2018, pp. 1–4.

- A. Ferdowsi, U. Challita, and W. Saad, “Deep learning for reliable mobile edge analytics in intelligent transportation systems: An overview,” IEEE Vehicular Technology Magazine, vol. 14, no. 1, pp. 62–70, 2019.

- Y. Cui, K. Cao and T. Wei, "Reinforcement Learning-Based Device Scheduling for Renewable Energy-Powered Federated Learning," in IEEE Transactions on Industrial Informatics, 2022. [CrossRef]

- R. C. Geyer, T. Klein, and M. Nabi, “Differentially private federated learning: A client level perspective,” arXiv preprint arXiv:1712.07557, 2017.

- J. Konečný, H. B. McMahan, D. Ramage, and P. Richtárik, “Federated optimization: Distributed machine learning for on-device intelligence,” arXiv preprint arXiv:1610.02527, 2016.

- M. G. Sarwar Murshed, Christopher Murphy, Daqing Hou, Nazar Khan, Ganesh Ananthanarayanan, and Faraz Hussain. 2021. Machine Learning at the Network Edge: A Survey. ACM Comput. Surv. 54, 8, Article 170 (November 2022), 37 pages. [CrossRef]

- S. S. Saha, S. S. Sandha and M. Srivastava, "Machine Learning for Microcontroller-Class Hardware: A Review," in IEEE Sensors Journal, vol. 22, no. 22, pp. 21362-21390, 15 Nov. 2022. [CrossRef]

- A. Burrello, M. Scherer, M. Zanghieri, F. Conti and L. Benini, "A Microcontroller is All You Need: Enabling Transformer Execution on Low-Power IoT Endnodes," 2021 IEEE International Conference on Omni-Layer Intelligent Systems (COINS), Barcelona, Spain, 2021, pp. 1-6. [CrossRef]

- M. Brandalero et al., "AITIA: Embedded AI Techniques for Embedded Industrial Applications," 2020 International Conference on Omni-layer Intelligent Systems (COINS), Barcelona, Spain, 2020, pp. 5. 5. [CrossRef]

- Zhang, W., Yang, D., Wu, W., Peng, H., Zhang, N., Zhang, H., & Shen, X. Optimizing federated learning in distributed industrial IoT: A multi-agent approach. IEEE Journal on Selected Areas in Communications 2021, 39, 3688–3703.

- Jane Webster and Richard Watson. 2002. Analyzing the Past to Prepare for the Future: Writing a Literature Review. MIS Q. 26, 2 (2002), xiii–xxiii.

- Hiessl, T., Schall, D., Kemnitz, J., & Schulte, S. (2020, July). Industrial federated learning–requirements and system design. In Highlights in Practical Applications of Agents, Multi-Agent Systems, and Trust-worthiness. The PAAMS Collection: International Workshops of PAAMS 2020, L’Aquila, Italy, October 7–9, 2020, Proceedings (pp. 42-53). Cham: Springer International Publishing.

- S.-W. Lin, B. Miller, J. Durand, G. Bleakley, A. Chigani, R. Martin, B. Murphy, M. Crawford, The industrial internet of things volume G1: reference architecture, Industrial Internet Consortium 10 (2017) 10–46.

- Antunes, R. S., André da Costa, C., Küderle, A., Yari, I. A., & Eskofier, B. (2022). Federated learning for healthcare: Systematic review and architecture proposal. ACM Transactions on Intelligent Systems and Technology (TIST), 13(4), 1-23.

- Bonawitz, K., Eichner, H., Grieskamp, W., Huba, D., Ingerman, A., Ivanov, V.,... & Roselander, J. (2019). Towards federated learning at scale: System design. Proceedings of machine learning and systems, 1, 374-388.

- Roda, I., & Macchi, M. (2021). Maintenance concepts evolution: a comparative review towards Advanced Maintenance conceptualization. Computers in Industry, 133, 103531. [CrossRef]

- Stenström, C., Norrbin, P., Parida, A., & Kumar, U. (2016). Preventive and corrective maintenance – cost comparison and cost–benefit analysis. Structure and Infrastructure Engineering, 12(5), 603–617. [CrossRef]

- P. Henriquez, J. B. Alonso, M. A. Ferrer and C. M. Travieso, "Review of Automatic Fault Diagnosis Systems Using Audio and Vibration Signals," in IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 44, no. 5, pp. 642-652, May 2014. [CrossRef]

- Wang, Y., Deng, C., Wu, J., Wang, Y., & Xiong, Y. (2014). A corrective maintenance scheme for engineering equipment. Engineering Failure Analysis, 36, 269–283. [CrossRef]

- de Faria, H., Costa, J. G. S., & Olivas, J. L. M. (2015). A review of monitoring methods for predictive maintenance of electric power transformers based on dissolved gas analysis. Renewable and Sustainable Energy Reviews, 46, 201–209. [CrossRef]

- Sateesh Babu, G., Zhao, P., & Li, X. L. (2016). Deep convolutional neural network based regression approach for estimation of remaining useful life. In Database Systems for Advanced Applications: 21st International Conference, DASFAA 2016, Dallas, TX, USA, April 16-19, 2016, Proceedings, Part I 21 (pp. 214-228). Springer International Publishing.

- H. M. Hashemian and W. C. Bean, "State-of-the-Art Predictive Maintenance Techniques*," in IEEE Transactions on Instrumentation and Measurement, vol. 60, no. 10, pp. 3480-3492, Oct. 2011. [CrossRef]

- Oh, H., & Lee, Y. (2019, June). Exploring image reconstruction attack in deep learning computation offloading. In The 3rd International Workshop on Deep Learning for Mobile Systems and Applications (pp. 19-24).

- R. Shokri, M. Stronati, C. Song and V. Shmatikov, "Membership Inference Attacks Against Machine Learning Models," 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 2017, pp. 3-18. [CrossRef]

- Mireshghallah, F., Taram, M., Vepakomma, P., Singh, A., Raskar, R., & Esmaeilzadeh, H. (2020). Privacy in Deep Learning: A Survey. ArXiv [Cs.LG]. Retrieved from http://arxiv.org/abs/2004. 1225.

- Brauneck, A., Schmalhorst, L., Kazemi Majdabadi, M.M. et al. Federated machine learning in data-protection-compliant research. Nat Mach Intell 5, 2–4 (2023). [CrossRef]

- Q. Cheng and G. Long, "Federated Learning Operations (FLOps): Challenges, Lifecycle and Approaches," 2022 International Conference on Technologies and Applications of Artificial Intelligence (TAAI), Tainan, Taiwan, 2022, pp. 12-17. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).