Submitted:

24 May 2023

Posted:

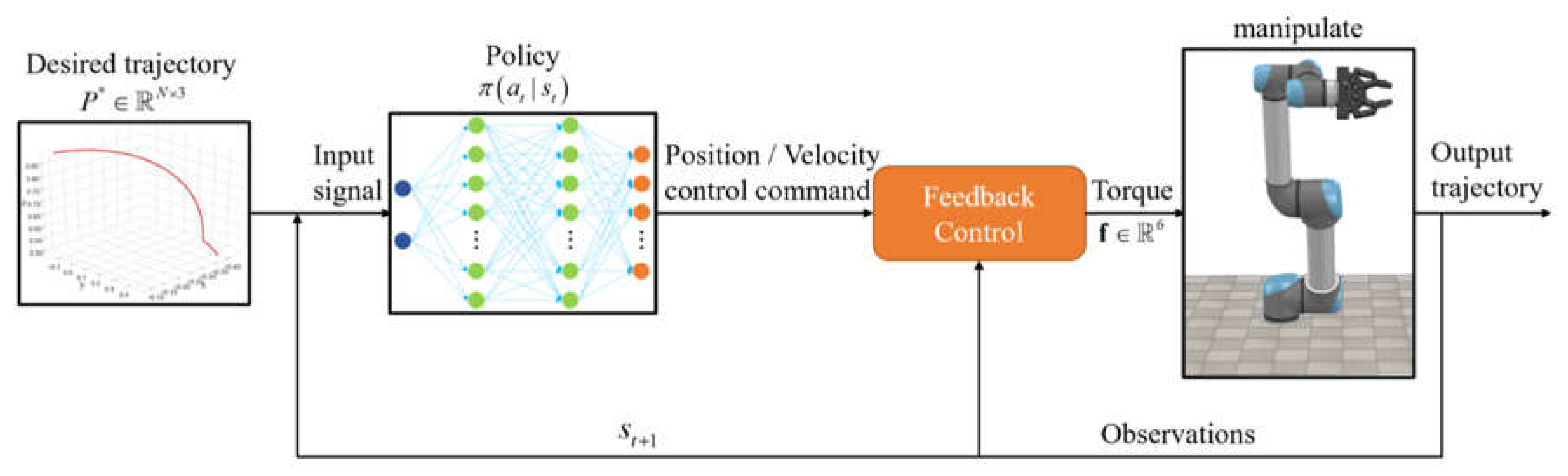

26 May 2023


Abstract

Keywords:

1. Introduction

2. Problem Statement

3. Method

3.1. Deep Q Network

3.2. Soft Actor Critic

4. Experiments and Results

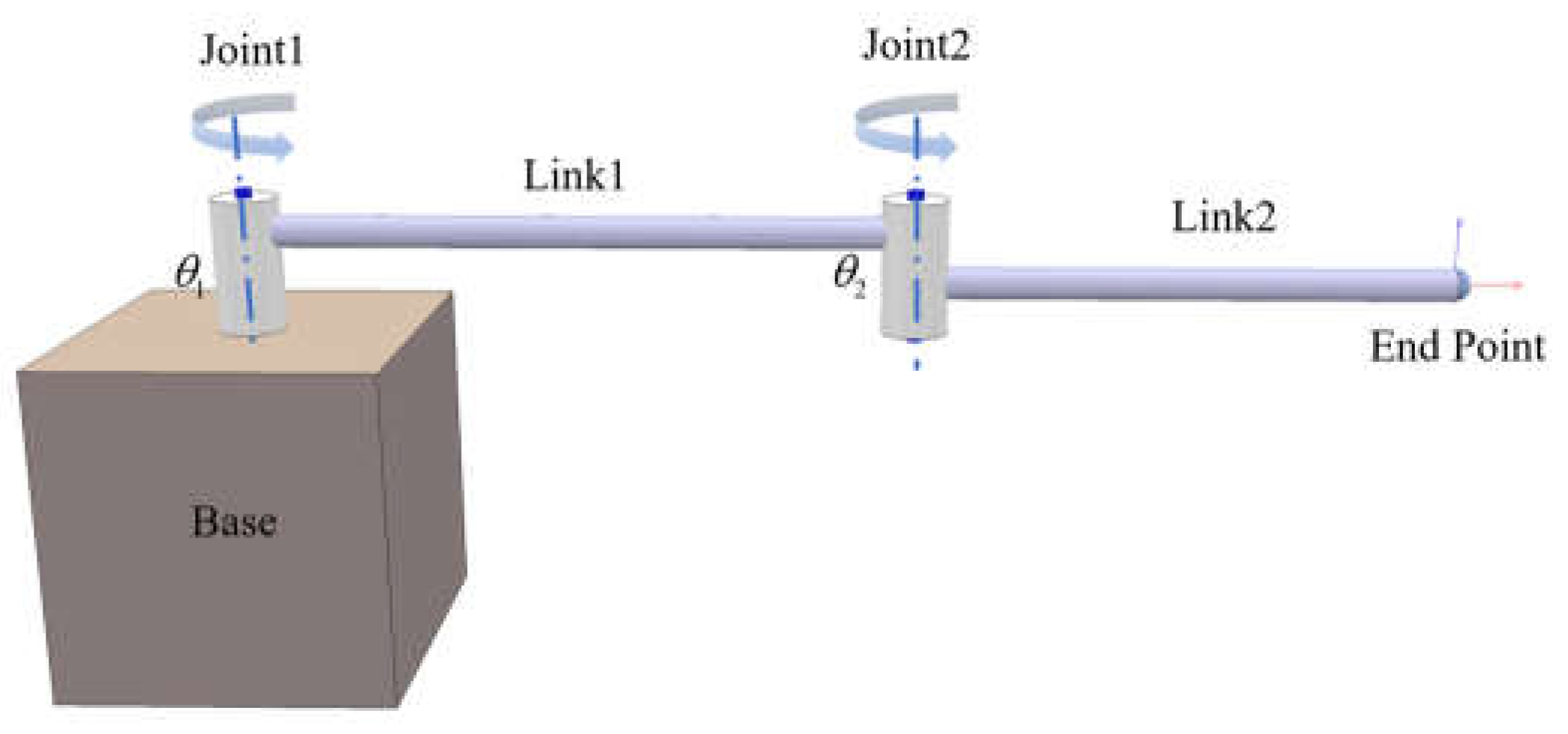

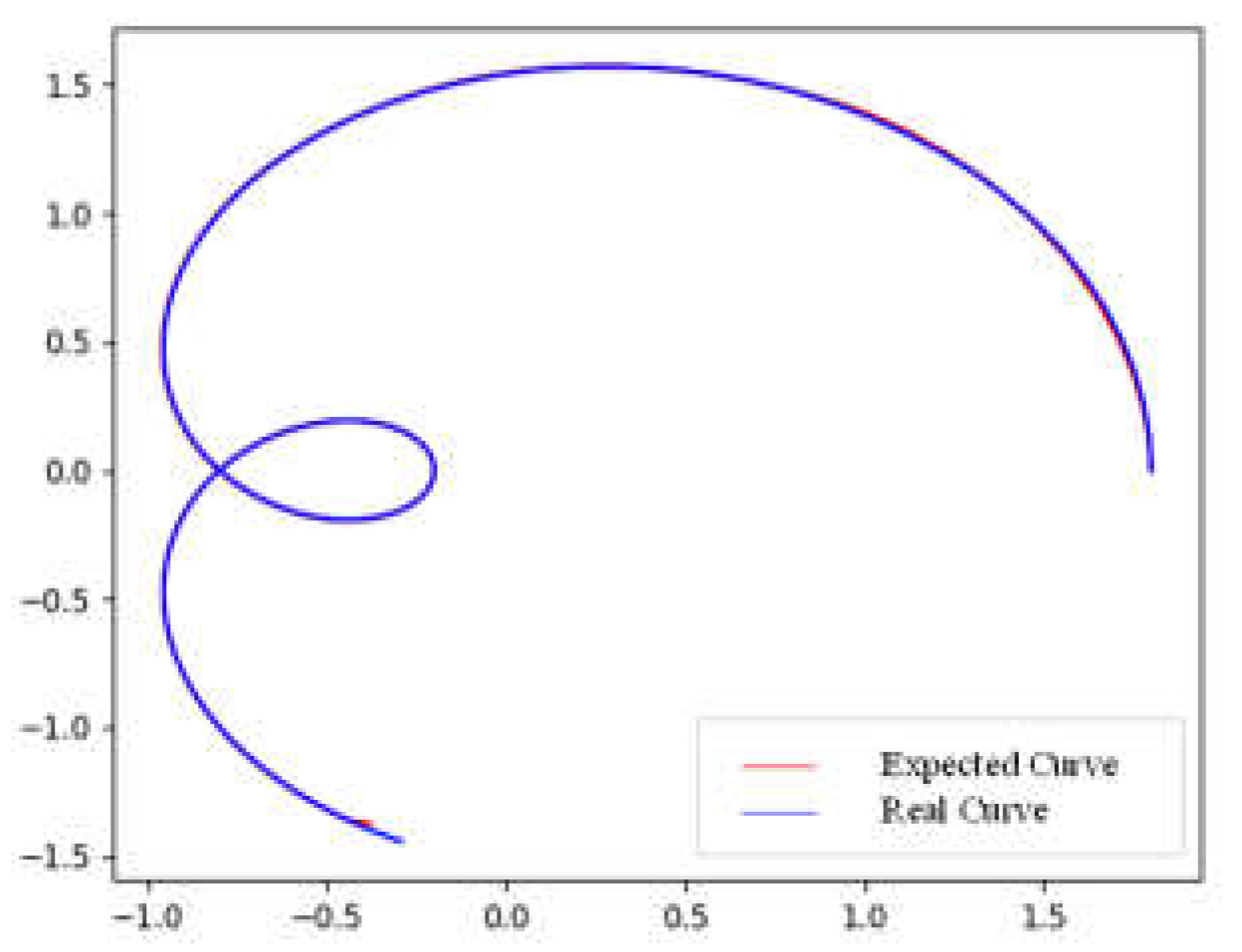

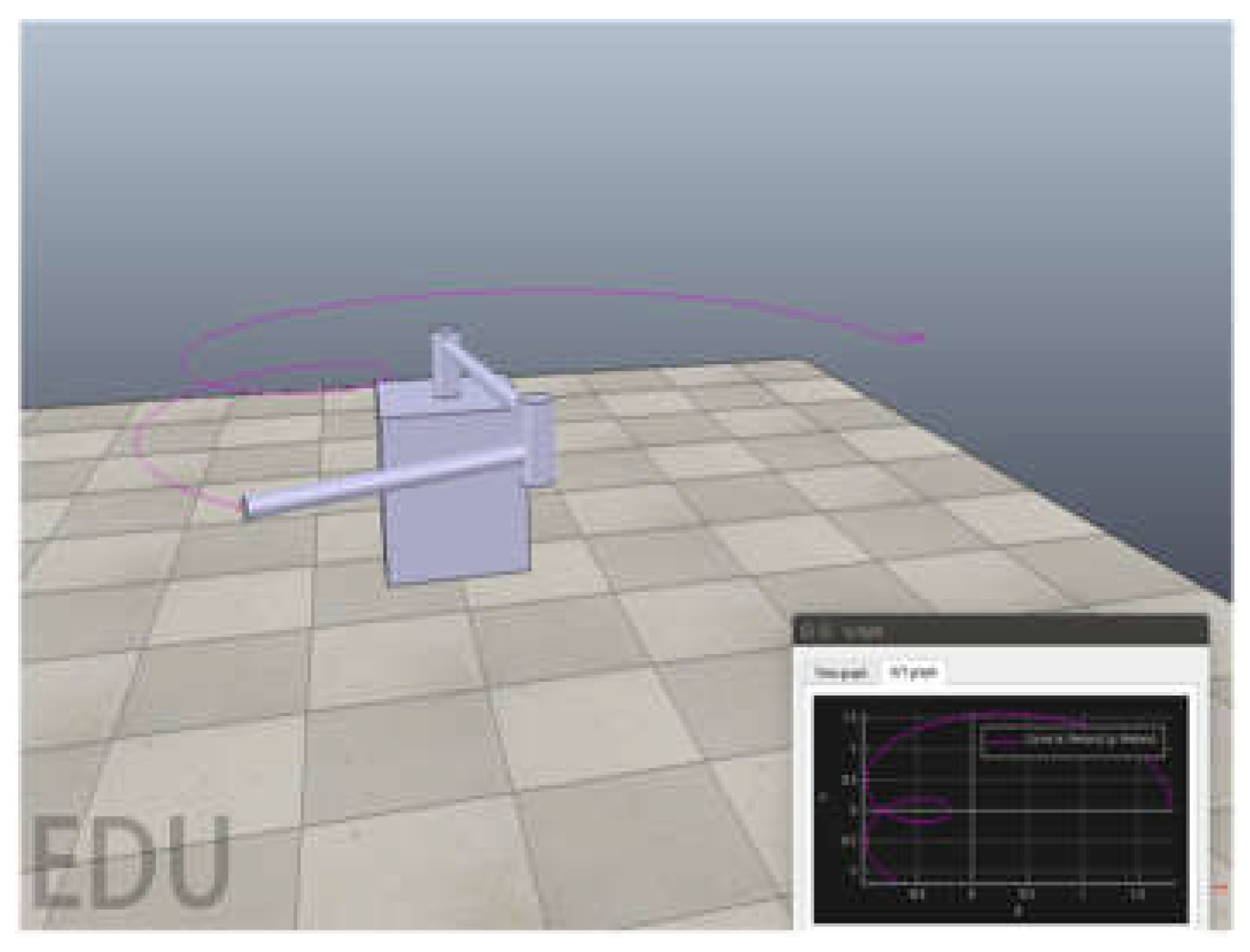

4.1. Planar two-link manipulator

4.2. UR5 manipulator

- The high-level control of the manipulator uses one of two modes: position control or velocity control. In position control, the controlled quantity is the joint angle, and the action is the joint-angle increment, limited to [-0.05, 0.05] rad at each step. In velocity control, the controlled quantity is the joint angular velocity, and the action is the joint-angular-velocity increment, limited to [-0.8, 0.8] rad/s at each step. In both modes, the low-level control of the manipulator uses a conventional PID torque controller.

- Adding noise to the observations. We set up two groups of control experiments: one without and one with random observation noise. In the noisy group, each observation is perturbed by 0.005·N(0,1), where N(0,1) is the standard normal distribution. In other words, the noise is zero-mean Gaussian with a standard deviation of 0.005.
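The two control modes described in the first item above can be sketched as follows. This is a minimal sketch under stated assumptions. Only the increment bounds come from the text. The names `apply_action` and `JointPID`, the clipping of raw policy outputs to the stated ranges, and the PID gains are hypothetical. The policy action updates either a joint-angle or a joint-velocity reference, and a textbook per-joint PID law computes the torque that tracks that reference.

```python
import numpy as np

# Per-step increment bounds stated in the text.
POS_DELTA_LIMIT = 0.05  # rad, position-control mode
VEL_DELTA_LIMIT = 0.8   # rad/s, velocity-control mode


def apply_action(action, q_ref, qd_ref, mode):
    """Turn a policy action into updated joint references (hypothetical helper).

    Assumption: the action is an unscaled increment that is clipped to the stated range.
    """
    action = np.asarray(action, dtype=float)
    if mode == "position":
        q_ref = q_ref + np.clip(action, -POS_DELTA_LIMIT, POS_DELTA_LIMIT)
    elif mode == "velocity":
        qd_ref = qd_ref + np.clip(action, -VEL_DELTA_LIMIT, VEL_DELTA_LIMIT)
    else:
        raise ValueError(f"unknown control mode: {mode}")
    return q_ref, qd_ref


class JointPID:
    """Textbook per-joint PID torque law; the gains are hypothetical placeholders."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = None
        self.prev_error = None

    def torque(self, target, measured):
        error = np.asarray(target, dtype=float) - np.asarray(measured, dtype=float)
        if self.integral is None:
            # First call: start the integral at zero and give a zero derivative term.
            self.integral = np.zeros_like(error)
            self.prev_error = error
        self.integral += error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

In position mode, the PID would compare `q_ref` with the measured joint angles. In velocity mode, it would compare `qd_ref` with the measured joint velocities.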

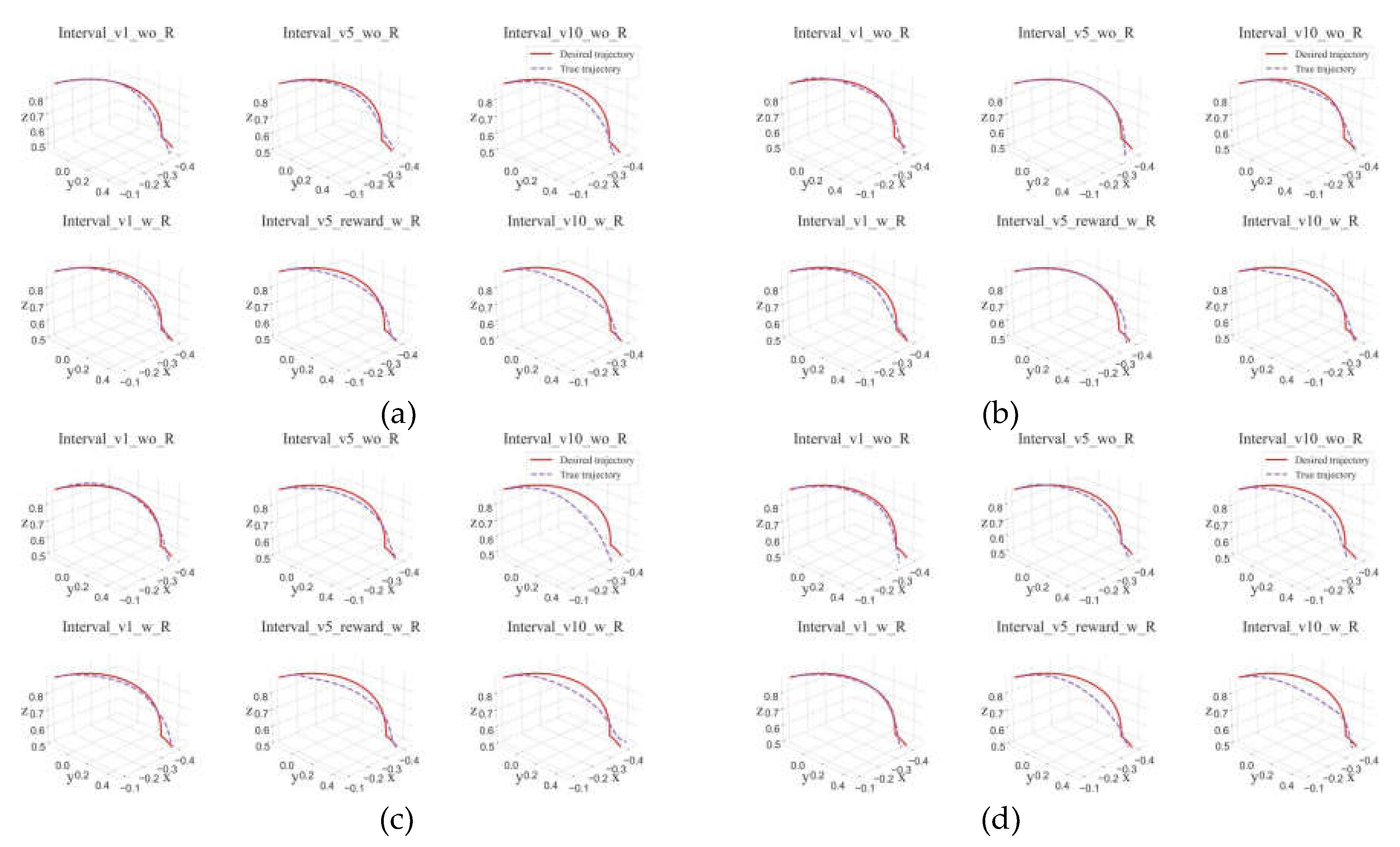

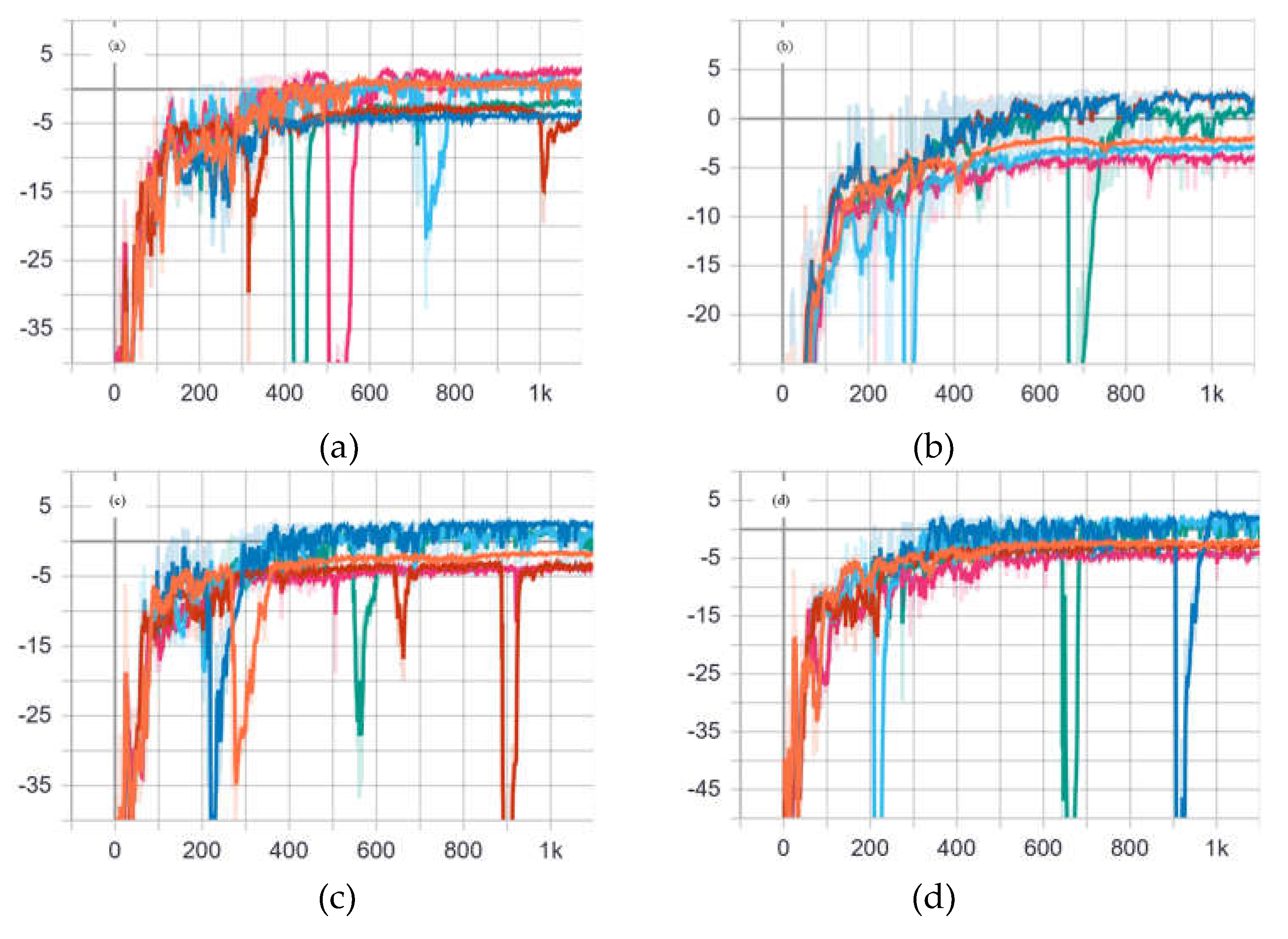
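The observation-noise setting described above amounts to adding scaled standard-normal samples to each observation. The sketch below assumes the noise is drawn independently for every observation component at every step, which the text does not state explicitly. `noisy_observation` is a hypothetical helper name.

```python
import numpy as np

NOISE_SCALE = 0.005  # multiplies N(0, 1) samples, as stated in the text


def noisy_observation(obs, rng, scale=NOISE_SCALE):
    """Return obs + scale * N(0, 1), drawn independently per component (assumption)."""
    obs = np.asarray(obs, dtype=float)
    return obs + scale * rng.standard_normal(obs.shape)


# Usage: the noise-free group passes observations through unchanged,
# while the noisy group calls noisy_observation at every environment step.
rng = np.random.default_rng(0)
clean = np.zeros(100_000)
noisy = noisy_observation(clean, rng)
print(round(float(noisy.std()), 4))  # expected to be close to 0.005
```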

- Setting the time interval n. The manipulator does not receive a target at every time step. Instead, it is given the target path points at times N·n, where N = 1, 2, 3, .... We study how this interval affects the tracking results. In our experiments, the interval n was set to 1, 5, and 10.

- Terminal reward. In a further control experiment, an additional reward of +5 is given during training whenever the distance between the end-point of the robotic arm and the target point is within 0.05 m (i.e., the termination condition is met). This experiment studies the effect of the bonus on the tracking results.
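The interval setting in the item on the time interval n can be illustrated by sub-sampling the target path. The sketch uses a hypothetical helper, `subsample_path`, and assumes the following:

- The path is stored as a list of points indexed by time step.
- The final goal point is always kept as a target.

The paper does not say how the last point is handled when the path length is not a multiple of n.

```python
def subsample_path(path, n):
    """Return the target points at time indices N*n, N = 1, 2, 3, ...

    Assumption: the last path point is appended if it is not already included.
    """
    if n < 1:
        raise ValueError("interval n must be a positive integer")
    if not path:
        return []
    indices = list(range(n, len(path), n))
    if not indices or indices[-1] != len(path) - 1:
        indices.append(len(path) - 1)
    return [path[i] for i in indices]


# Usage with an 11-point path (time indices 0..10).
path = list(range(11))
print(subsample_path(path, 5))   # [5, 10]
print(subsample_path(path, 10))  # [10]
```

With n = 1, the manipulator sees every path point after the start. With n = 10, it sees only the goal in this short example. This is consistent with the trade-off between path tracking and end-point approach discussed in the results.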

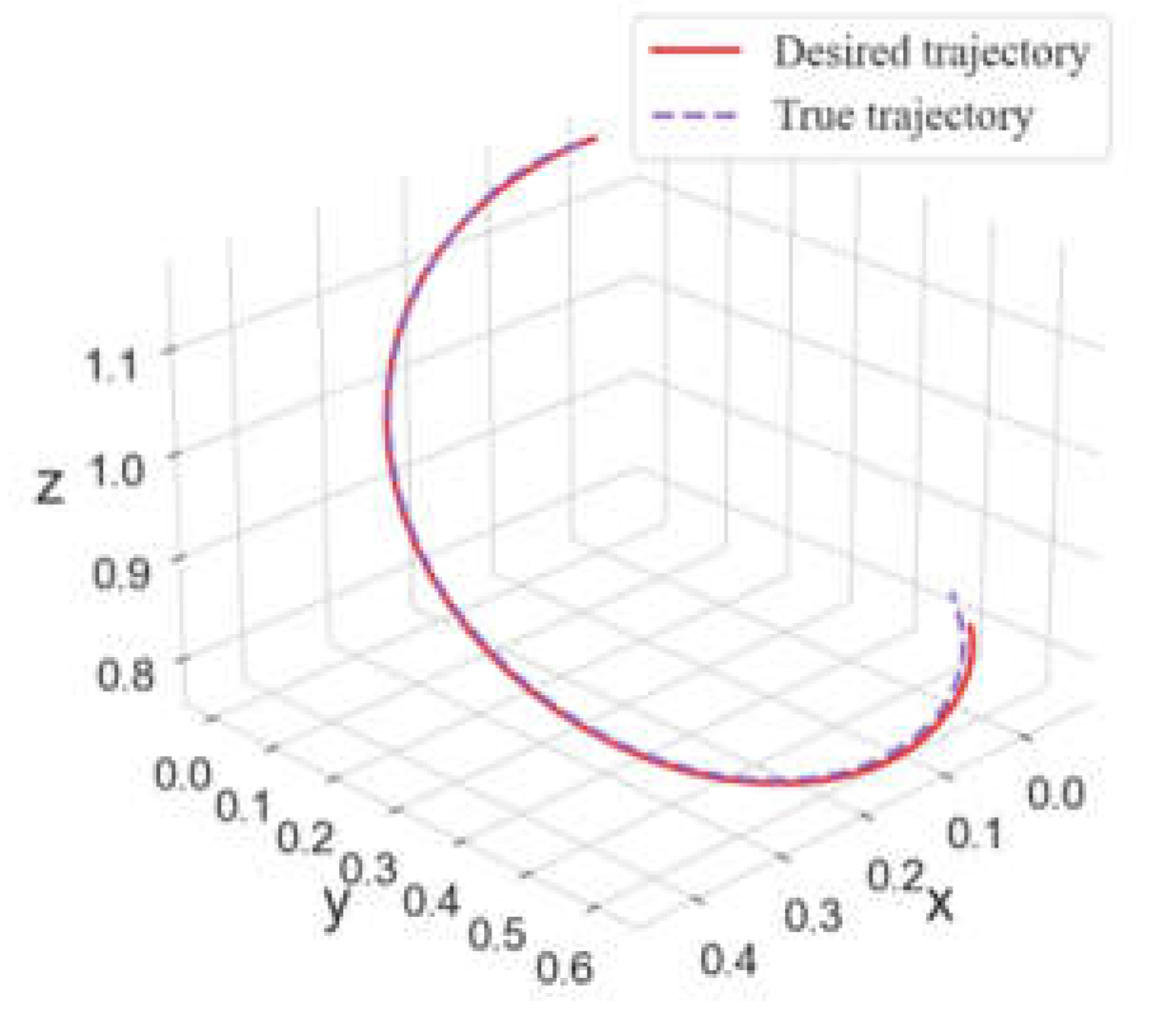
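The terminal-reward setting can be sketched as a reward function with an optional bonus. Only the 0.05 m threshold and the +5 bonus come from the text. The base reward (negative Euclidean distance between the end-point and the target) and the name `step_reward` are assumptions for illustration, because the paper's reward function is not given in this section.

```python
import numpy as np

GOAL_TOLERANCE = 0.05  # m, termination threshold stated in the text
TERMINAL_BONUS = 5.0   # extra reward stated in the text


def step_reward(ee_pos, target_pos, use_terminal_reward=True):
    """Return (reward, done) for one step.

    Assumption: the base reward is the negative end-point-to-target distance.
    """
    dist = float(np.linalg.norm(np.asarray(ee_pos, dtype=float) - np.asarray(target_pos, dtype=float)))
    reward = -dist  # hypothetical shaping term, not taken from the paper
    done = dist < GOAL_TOLERANCE
    if done and use_terminal_reward:
        reward += TERMINAL_BONUS
    return reward, done
```

The control group calls `step_reward(..., use_terminal_reward=False)`. Both groups terminate under the same 0.05 m condition, so the only difference between them is the bonus.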

- In this experimental scenario, the target path generated by the RRT algorithm is noticeably non-smooth. In contrast, the tracking path that the SAC reinforcement learning algorithm generates from that target path is much smoother, while still meeting the tracking-accuracy requirement.

- Analyzing the influence of n on the generated path shows a trade-off. When n is large (n = 10), the end-point approaches the target point more closely, but the target path is tracked poorly. When n = 1, the opposite holds. Therefore, the choice of n must balance path-tracking accuracy against the final position of the end-point.

- Adding noise to the system observations during simulation training improves the robustness of the control policy and its resistance to noise interference. As a result, the trained policy performs better.

- During simulation training, the policy can be given a larger reward when the end-point of the manipulator reaches the allowable error range of the target point. This makes the arm approach the target point more closely, but at the cost of some path-tracking precision.
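The smoothness comparison between the RRT target path and the SAC tracking path can be made concrete with a simple measure: the mean magnitude of the discrete second difference along the path. A lower value means a smoother path. This is an assumed metric for illustration only. It is not necessarily how the Smoothness values in the tables were computed, and `second_difference_roughness` is a hypothetical name.

```python
import numpy as np


def second_difference_roughness(path):
    """Mean norm of p[i+1] - 2*p[i] + p[i-1] over an (N, d) path; lower is smoother."""
    p = np.asarray(path, dtype=float)
    if len(p) < 3:
        return 0.0
    second_diff = p[2:] - 2.0 * p[1:-1] + p[:-2]
    return float(np.mean(np.linalg.norm(second_diff, axis=1)))


straight = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]]
zigzag = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
print(second_difference_roughness(straight))  # 0.0
print(second_difference_roughness(zigzag))    # 2.0
```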

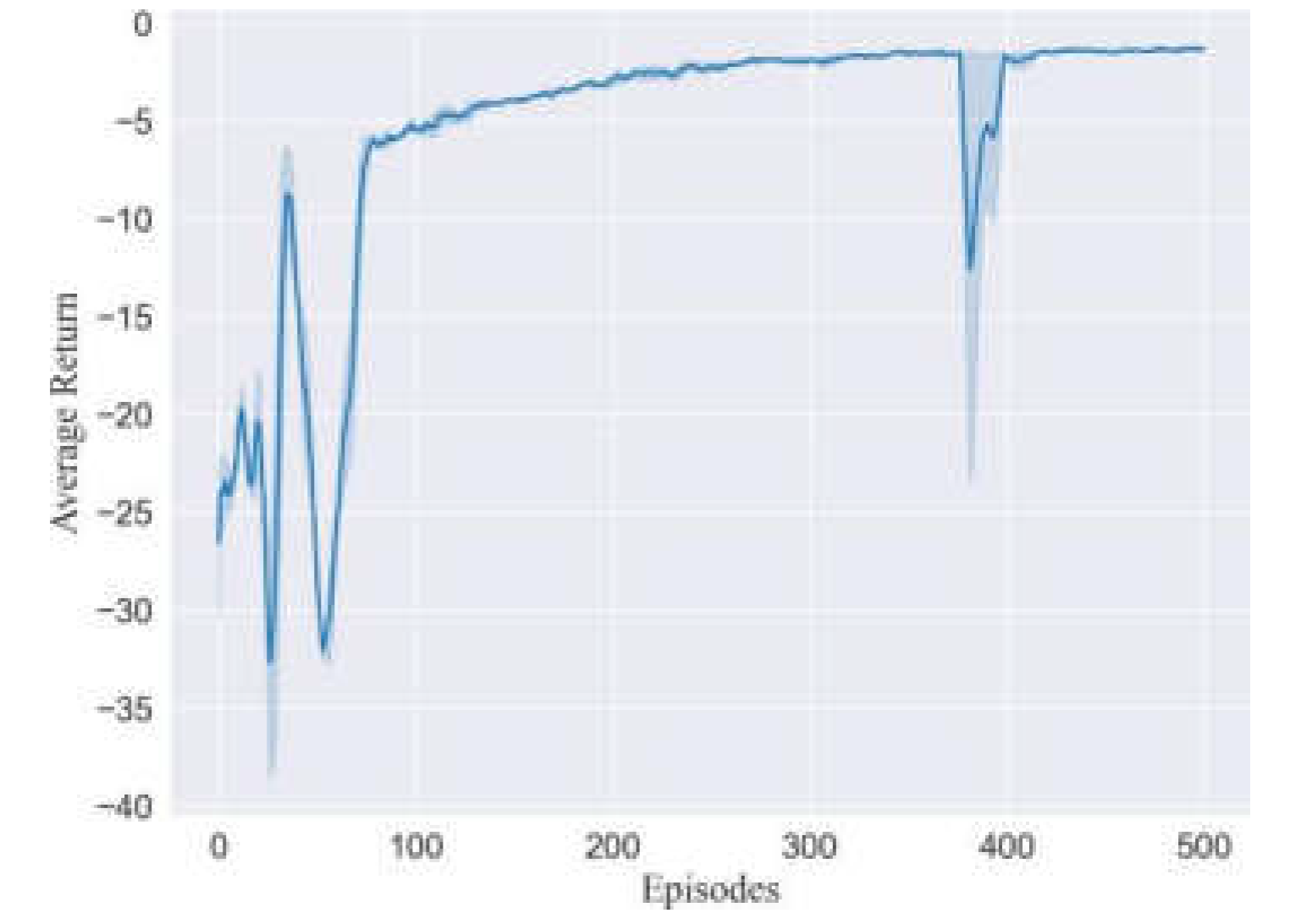
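The trade-off in choosing n is reported through two quantities: the average error between tracks and the distance between end-points. The section does not define either quantity, so the sketch below assumes two definitions:

- The average error is the mean distance from each executed end-point position to the nearest target path point.
- The end-point distance is measured between the final executed position and the final target point.

`tracking_metrics` is a hypothetical helper.

```python
import numpy as np


def tracking_metrics(executed, target):
    """Return (average error between tracks, distance between end-points), both in metres.

    Both definitions are assumptions made for illustration.
    """
    ex = np.asarray(executed, dtype=float)  # shape (M, d)
    tg = np.asarray(target, dtype=float)    # shape (K, d)
    # Pairwise distances between every executed point and every target point, shape (M, K).
    pairwise = np.linalg.norm(ex[:, None, :] - tg[None, :, :], axis=2)
    avg_error = float(pairwise.min(axis=1).mean())
    end_distance = float(np.linalg.norm(ex[-1] - tg[-1]))
    return avg_error, end_distance


executed = [[0.0, 0.1], [1.0, 0.1], [2.0, 0.1]]
target = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]]
print(tracking_metrics(executed, target))  # approximately (0.1, 0.1)
```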

- The experiments show that the algorithm performs well under both position control and velocity control. The training curves also show that it converges early in training.

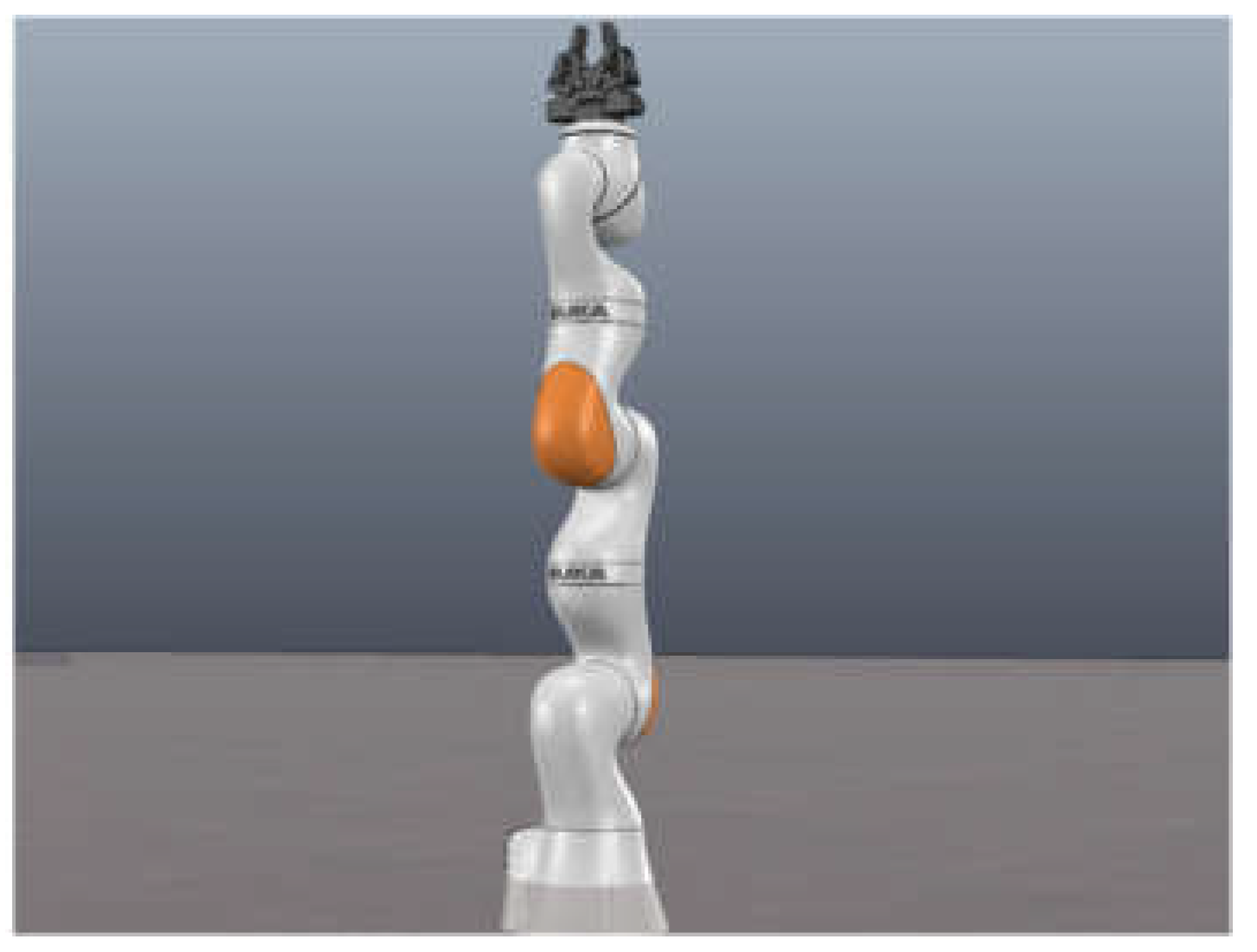

4.3. Redundant manipulator

5. Conclusion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Parlaktuna, O.; Ozkan, M. Adaptive control of free-floating space robots in Cartesian coordinates. Adv Robotics 2004, 18, 943–959.

- Guo, Y.; Chen, L. Adaptive neural network control for coordinated motion of a dual-arm space robot system with uncertain parameters. Appl Math Mech 2008, 29, 1131–1140.

- Canudas, W.C.; Fixot, N. Robot control via robust estimated state feedback. IEEE T Automat Contr 1991, 36, 1497–1501.

- Kim, E. Output feedback tracking control of robot manipulators with model uncertainty via adaptive fuzzy logic. IEEE T Fuzzy Syst 2004, 12, 368–378.

- Abdollahi, F.; Talebi, H.A.; Patel, R.V. A stable neural network-based observer with application to flexible-joint manipulators. IEEE Trans Neural Netw 2006, 17, 118–129.

- Kim, Y.H.; Lewis, F.L. Neural network output feedback control of robot manipulators. IEEE Trans Rob Autom 1999, 15, 301–309.

- Selmic, R.R.; Lewis, F.L. Dead zone compensation in motion control systems using neural networks. IEEE Trans Automat Contr 2000, 45, 602–613.

- Zhu, W.H.; Lamarche, T.; Dupuis, E.; et al. Networked embedded control of modular robot manipulators using VDC. IFAC Proc 2014, 47, 8481–8486.

- Cao, S.; Jin, Y.; Trautmann, T.; Liu, K. Design and Experiments of Autonomous Path Tracking Based on Dead Reckoning. Appl. Sci. 2023, 13, 317.

- Cai, Z.X. Robotics; Tsinghua University Press: Beijing (BJ), China, 2000.

- Patolia, H.; Pathak, P.M.; Jain, S.C. Force control in single DOF dual arm cooperative space robot. P 2010 Spr Simul Multicon 2010, 1–8.

- Ma, B.L.; Huo, W. Adaptive Control of Space Robot System. Iet Control Theory A 1996, 13, 191–197.

- Spong, M.W. On the robust control of robot manipulators. IEEE T Automat Contr 1992, 37, 1782–1786.

- Purwar, S.; Kar, I.N.; Jha, A.N. Adaptive output feedback tracking control of robot manipulators using position measurements only. Expert Syst Appl 2008, 34, 2789–2798.

- Annusewicz-Mistal, A.; Pietrala, D.S.; Laski, P.A.; Zwierzchowski, J.; Borkowski, K.; Bracha, G.; Borycki, K.; Kostecki, S.; Wlodarczyk, D. Autonomous Manipulator of a Mobile Robot Based on a Vision System. Appl. Sci. 2023, 13, 439.

- Zhang, T.; Song, Y.; Kong, Z.; Guo, T.; Lopez-Benitez, M.; Lim, E.; Ma, F.; Yu, L. Mobile Robot Tracking with Deep Learning Models under the Specific Environments. Appl. Sci. 2023, 13, 273.

- Tappe, S.; Pohlmann, J.; Kotlarski, J.; et al. Towards a follow-the-leader control for a binary actuated hyper-redundant manipulator. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 3195–3201.

- Palmer, D.; Cobos-Guzman, S.; Axinte, D. Real-time method for tip following navigation of continuum snake arm robots. Robot Auton Syst 2014, 62, 1478–1485.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge (MA), USA, 2018.

- Guo, X. Research on the control strategy of manipulator based on DQN. Master's Thesis, Beijing Jiaotong University, Beijing (BJ), China, 2018.

- Liu, Y.C.; Huang, C.Y. DDPG-Based Adaptive Robust Tracking Control for Aerial Manipulators With Decoupling Approach. IEEE Trans Cybern 2022, 52, 8258–8271.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; et al. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

- Fujimoto, S.; Hoof, H.; Meger, D. Addressing function approximation error in actor-critic methods. In Proceedings of the International Conference on Machine Learning (ICML), PMLR, 2018; pp. 1587–1596.

- Haarnoja, T.; Zhou, A.; Abbeel, P.; et al. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the International Conference on Machine Learning (ICML), 2018; pp. 1861–1870.

- LaValle, S.M. Rapidly-exploring random trees: A new tool for path planning. Research Report, 1999.

| Position control | Reward setting | w/o observation noise, interval 1 | w/o observation noise, interval 5 | w/o observation noise, interval 10 | observation noise, interval 1 | observation noise, interval 5 | observation noise, interval 10 |
|---|---|---|---|---|---|---|---|
| Average error between tracks (m) | w/o terminal reward | 0.0374 | 0.0330 | 0.0592 | 0.0394 | 0.0427 | 0.0784 |
| | terminal reward | 0.0335 | 0.0796 | 0.0502 | 0.0335 | 0.0475 | 0.0596 |
| Distance between end-points (m) | w/o terminal reward | 0.0401 | 0.0633 | 0.0420 | 0.0443 | 0.0485 | 0.0292 |
| | terminal reward | 0.0316 | 0.0223 | 0.0231 | 0.0111 | 0.0148 | 0.0139 |

| Velocity control | Reward setting | w/o observation noise, interval 1 | w/o observation noise, interval 5 | w/o observation noise, interval 10 | observation noise, interval 1 | observation noise, interval 5 | observation noise, interval 10 |
|---|---|---|---|---|---|---|---|
| Average error between tracks (m) | w/o terminal reward | 0.0343 | 0.0359 | 0.0646 | 0.0348 | 0.0318 | 0.0811 |
| | terminal reward | 0.0283 | 0.0569 | 0.0616 | 0.0350 | 0.0645 | 0.0605 |
| Distance between end-points (m) | w/o terminal reward | 0.0233 | 0.0224 | 0.0521 | 0.0456 | 0.0365 | 0.0671 |
| | terminal reward | 0.0083 | 0.0030 | 0.0337 | 0.0275 | 0.0192 | 0.0197 |

| Position control | Observation noise | Reward setting | 0.5 kg | 1 kg | 2 kg | 3 kg | 5 kg |
|---|---|---|---|---|---|---|---|
| Average error between tracks (m) | w/o observation noise | w/o terminal reward | 0.03742 | 0.03743 | 0.03744 | 0.03745 | 0.03746 |
| | | terminal reward | 0.03354 | 0.03354 | 0.03359 | 0.03355 | 0.03355 |
| | observation noise | w/o terminal reward | 0.03943 | 0.03943 | 0.03943 | 0.03942 | 0.03941 |
| | | terminal reward | 0.03346 | 0.03346 | 0.03346 | 0.03346 | 0.03345 |
| Distance between end-points (m) | w/o observation noise | w/o terminal reward | 0.04047 | 0.04047 | 0.04048 | 0.04049 | 0.04050 |
| | | terminal reward | 0.03165 | 0.03166 | 0.03157 | 0.03159 | 0.03161 |
| | observation noise | w/o terminal reward | 0.04430 | 0.04441 | 0.04438 | 0.04430 | 0.04436 |
| | | terminal reward | 0.01110 | 0.01109 | 0.01109 | 0.01108 | 0.01105 |

| Velocity control | Observation noise | Reward setting | 0.5 kg | 1 kg | 2 kg | 3 kg | 5 kg |
|---|---|---|---|---|---|---|---|
| Average error between tracks (m) | w/o observation noise | w/o terminal reward | 0.03426 | 0.03427 | 0.03425 | 0.03426 | 0.03425 |
| | | terminal reward | 0.02826 | 0.02825 | 0.02866 | 0.02873 | 0.02882 |
| | observation noise | w/o terminal reward | 0.03478 | 0.03479 | 0.03483 | 0.03486 | 0.03497 |
| | | terminal reward | 0.03503 | 0.03503 | 0.03503 | 0.03502 | 0.03501 |
| Distance between end-points (m) | w/o observation noise | w/o terminal reward | 0.02326 | 0.02444 | 0.02436 | 0.02430 | 0.02422 |
| | | terminal reward | 0.00831 | 0.01201 | 0.01395 | 0.01463 | 0.01513 |
| | observation noise | w/o terminal reward | 0.04560 | 0.04562 | 0.04565 | 0.04569 | 0.04578 |
| | | terminal reward | 0.02748 | 0.02746 | 0.02743 | 0.02741 | 0.02733 |

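| Velocity control | w/o terminal reward, interval 1 | w/o terminal reward, interval 5 | w/o terminal reward, interval 10 | terminal reward, interval 1 | terminal reward, interval 5 | terminal reward, interval 10 | Jacobian matrix |
|---|---|---|---|---|---|---|---|
| Smoothness | 0.5751 | 0.3351 | 0.5925 | 0.0816 | 0.5561 | 0.4442 | 0.7159 |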

| Energy consumption | Setting | 0.5 kg | 1 kg | 2 kg | 3 kg | 5 kg |
|---|---|---|---|---|---|---|
| Position control | w/o terminal reward | 4.44438 | 4.71427 | 5.27507 | 5.79426 | 6.92146 |
| | terminal reward | 5.01889 | 5.34258 | 5.95310 | 6.55227 | 7.76305 |
| Velocity control | w/o terminal reward | 4.97465 | 5.38062 | 6.23886 | 6.95099 | 8.33596 |
| | terminal reward | 6.03735 | 6.37981 | 7.05696 | 7.75185 | 9.15828 |
| Traditional | Jacobian matrix | 8.95234 | 9.81593 | 10.8907 | 10.9133 | 13.3241 |

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).