Submitted: 26 May 2023

Posted: 29 May 2023


Abstract

Keywords:

1. Introduction

2. Literature Review

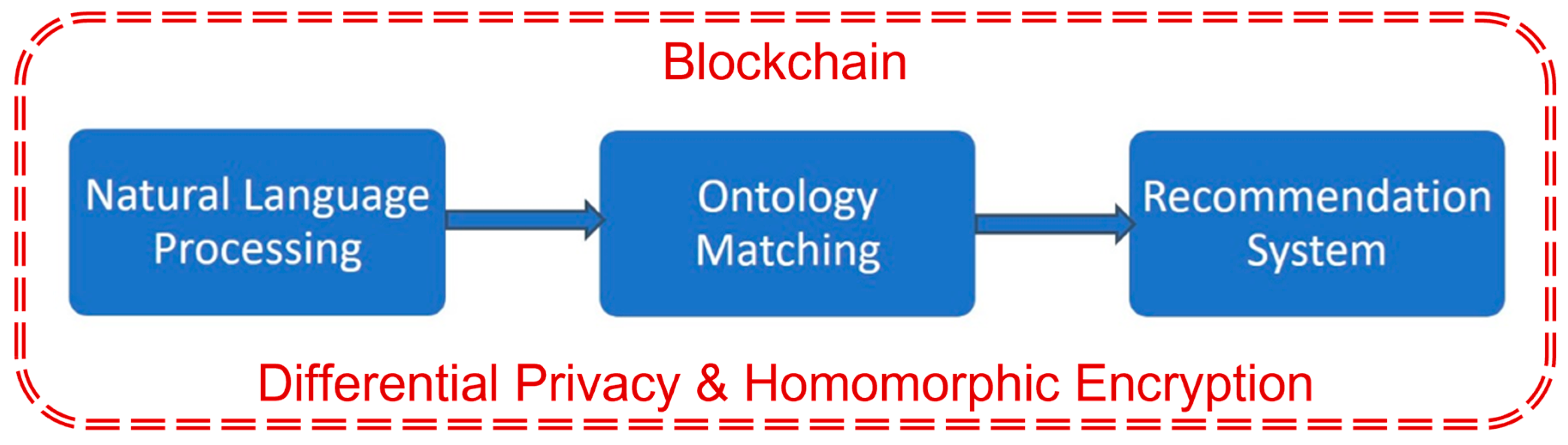

3. Proposed Approach

4. Materials and Methods

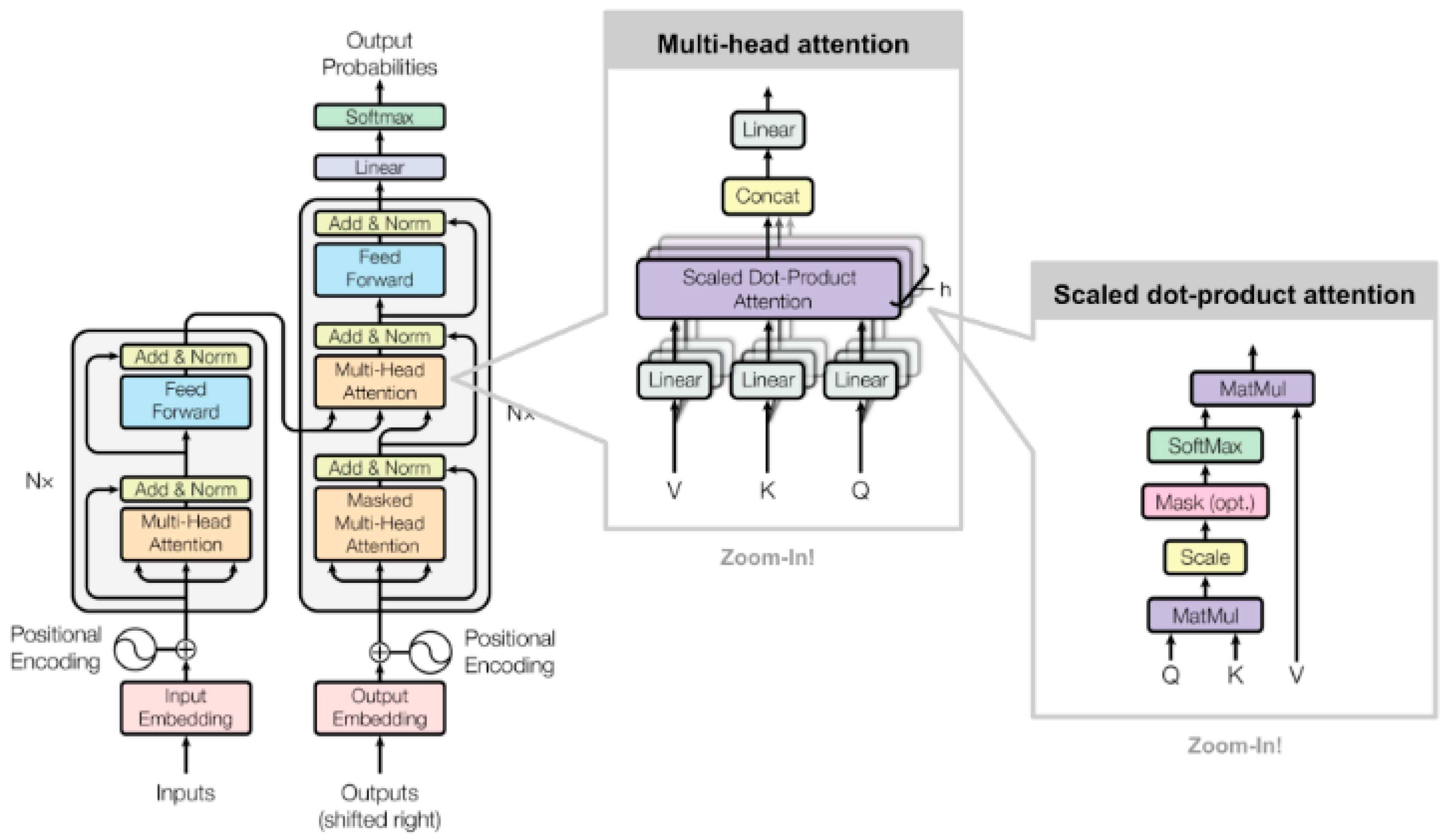

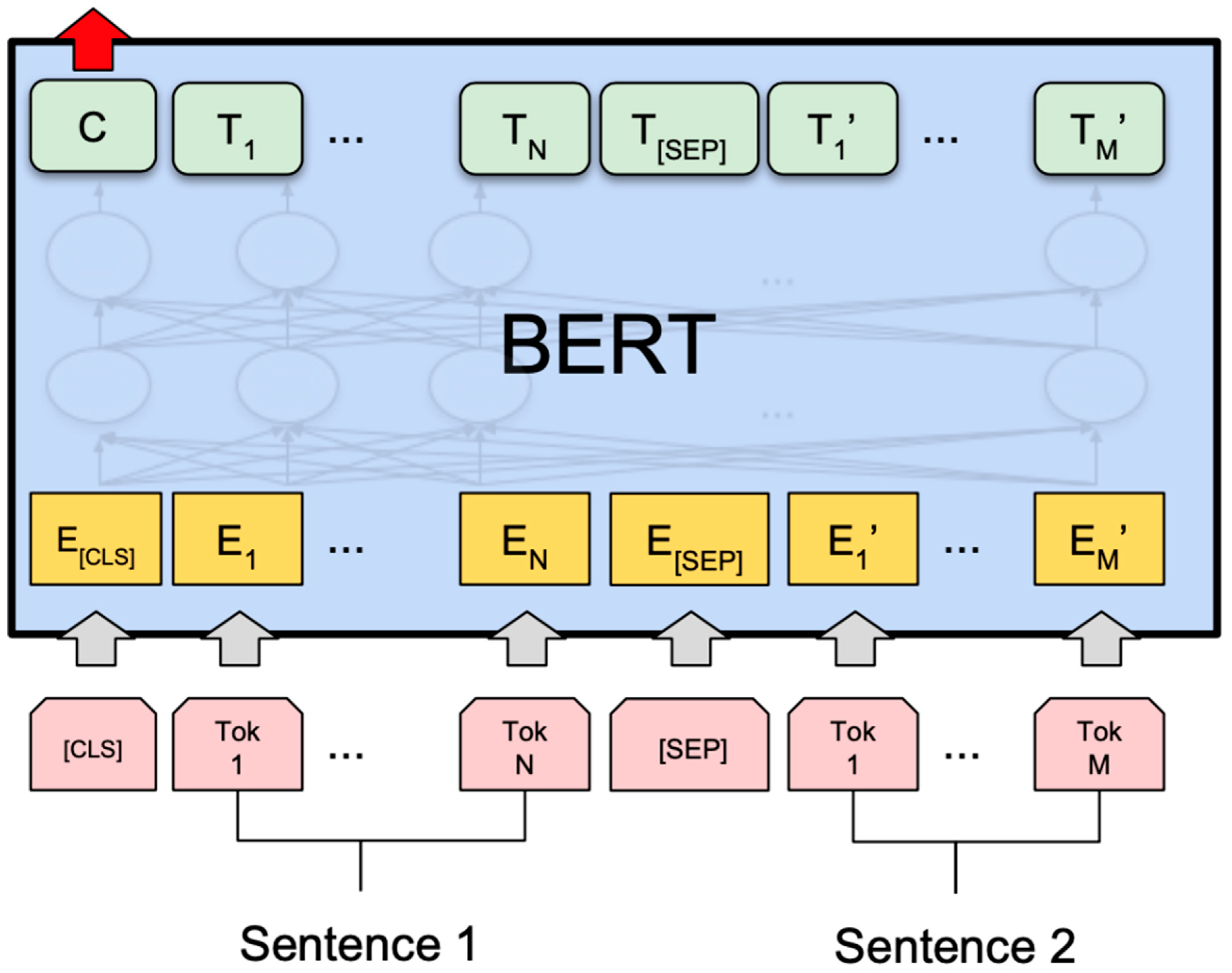

4.1. Natural Language Processing (NLP)

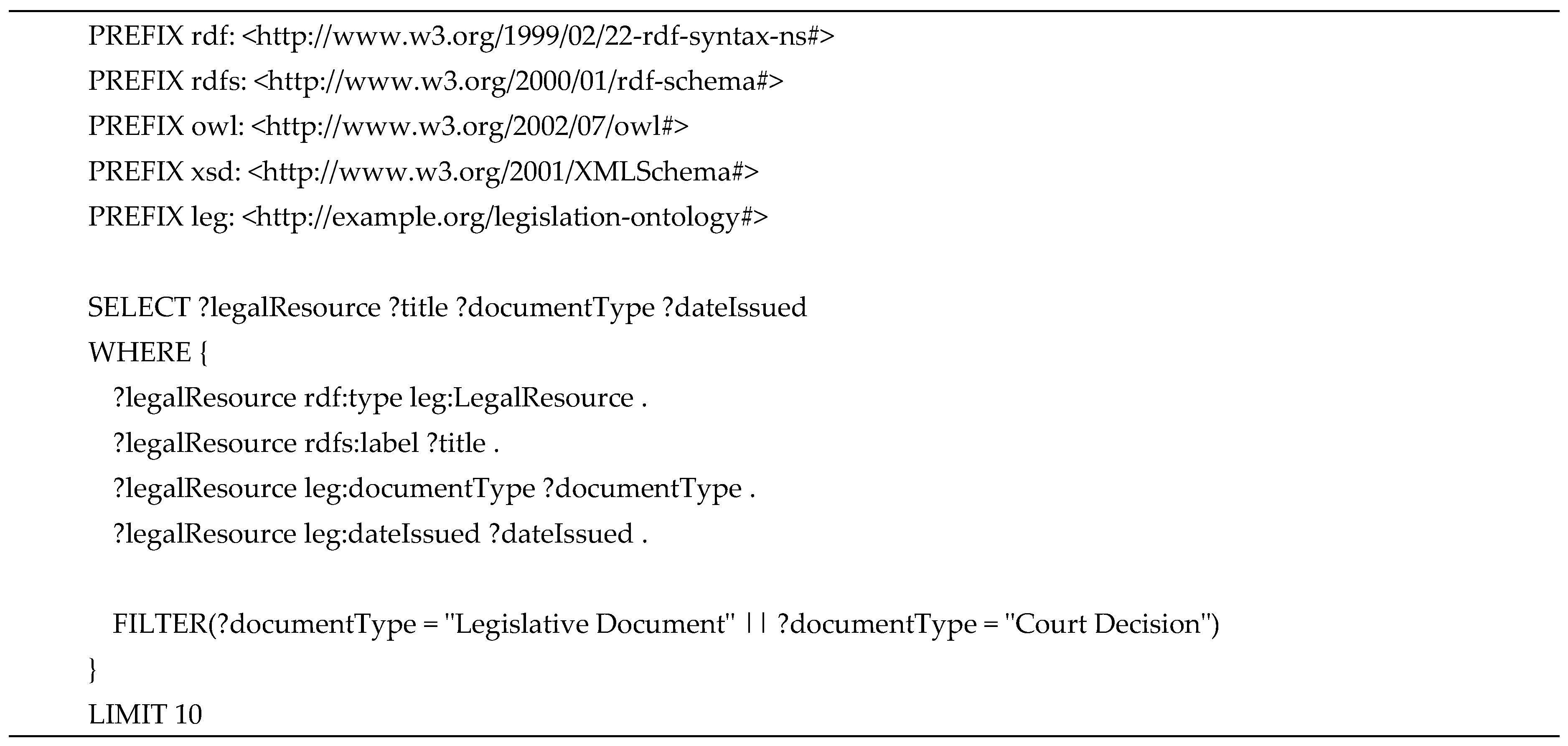

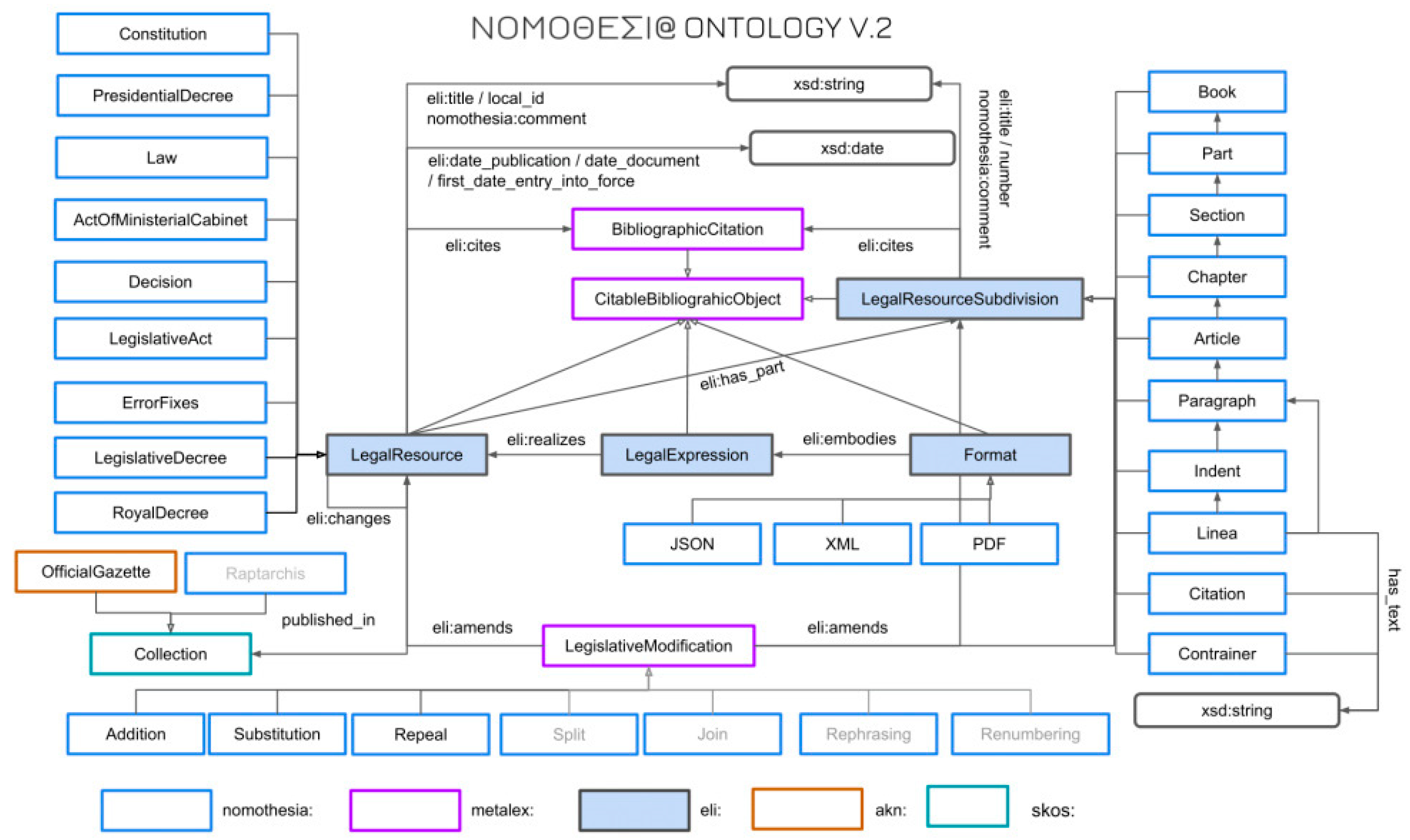

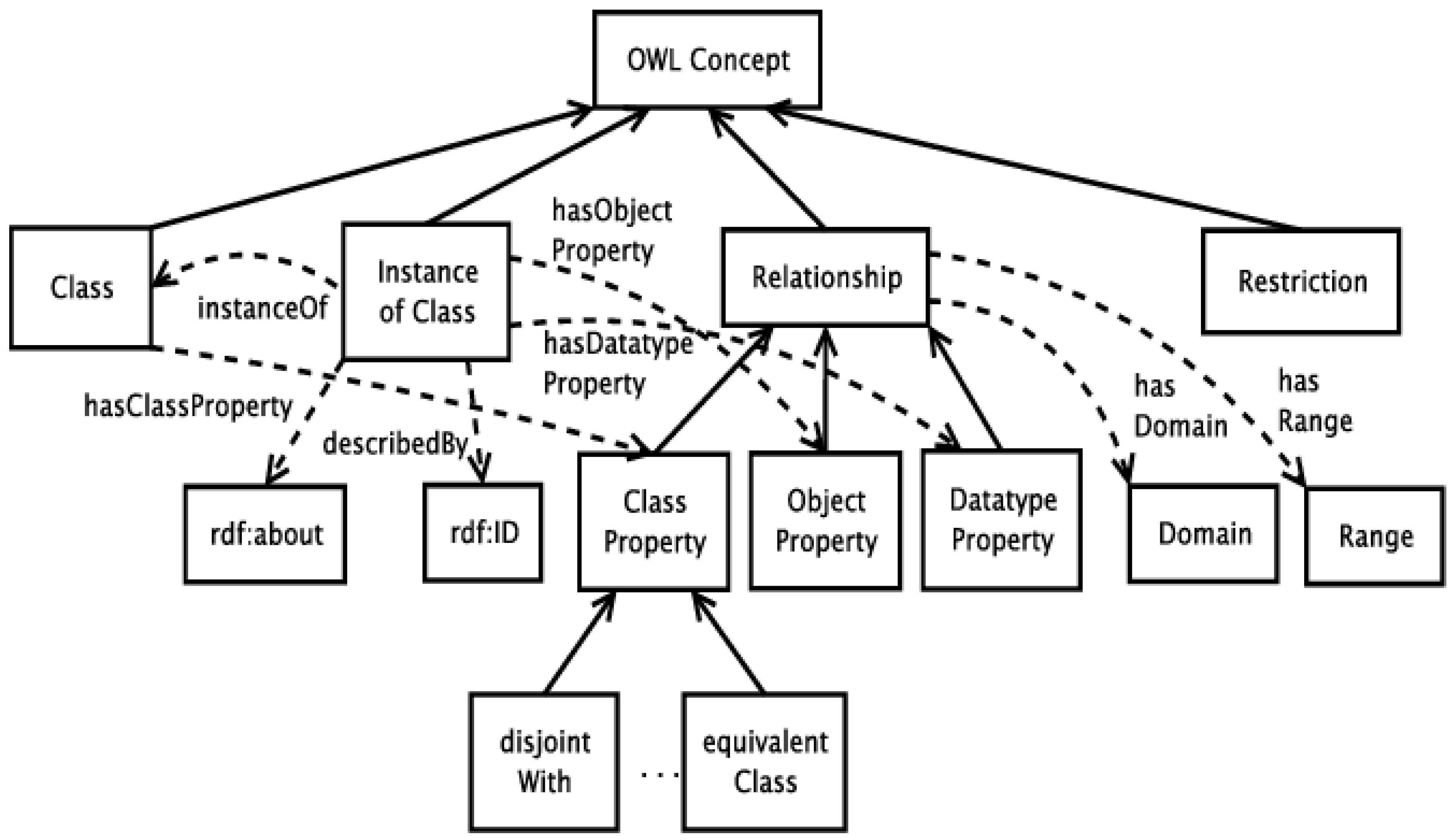

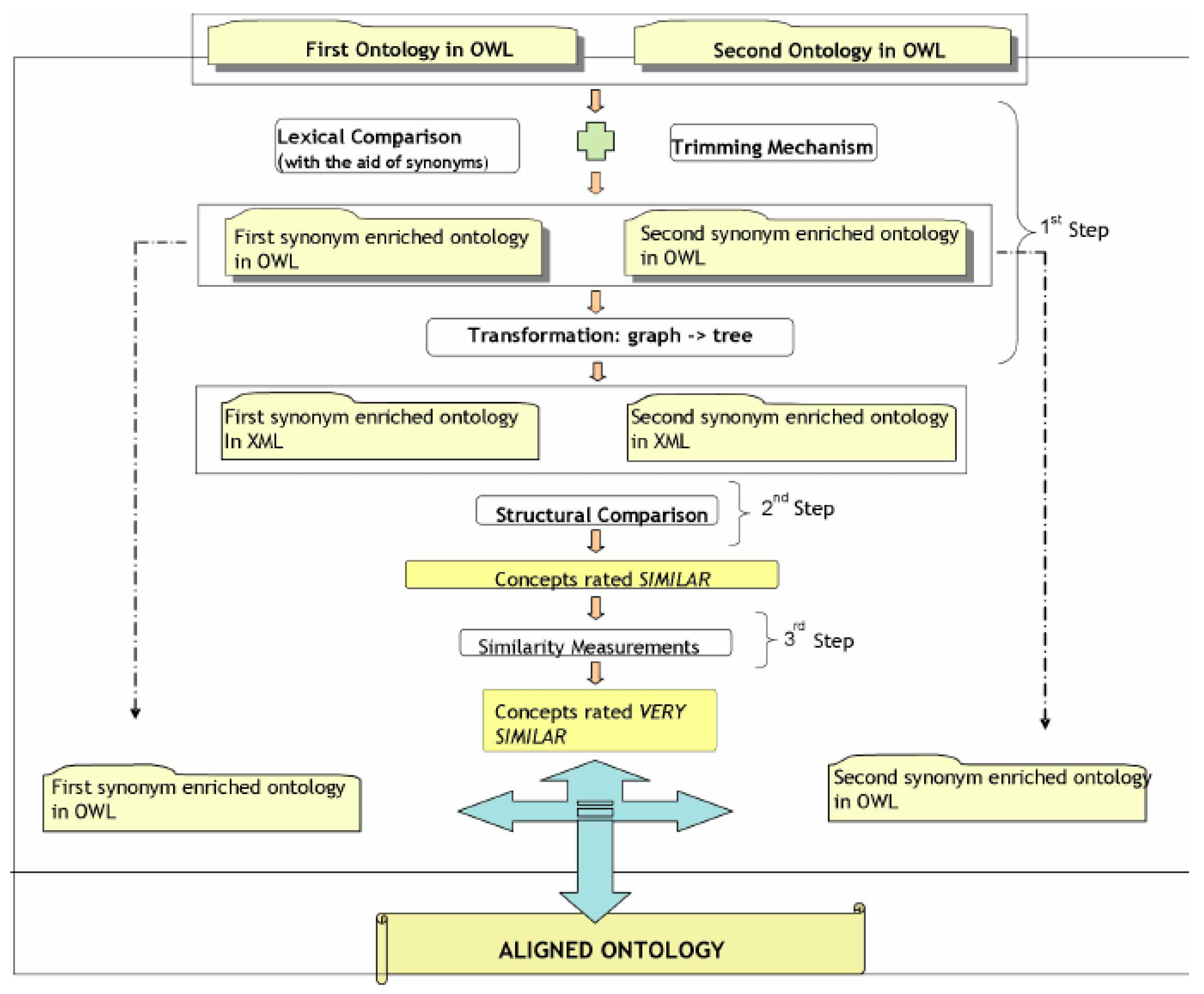

4.2. Ontology Matching (OM)

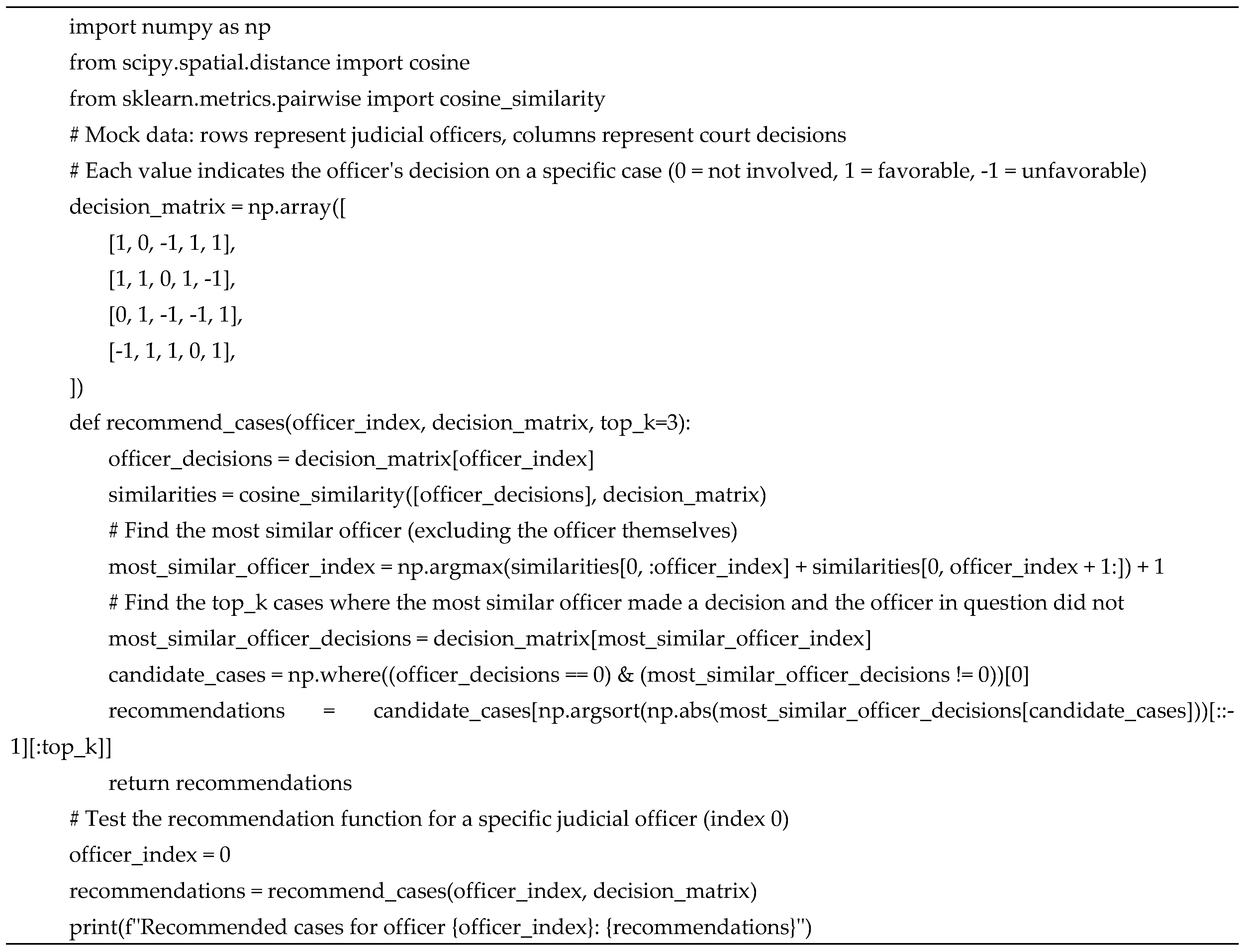

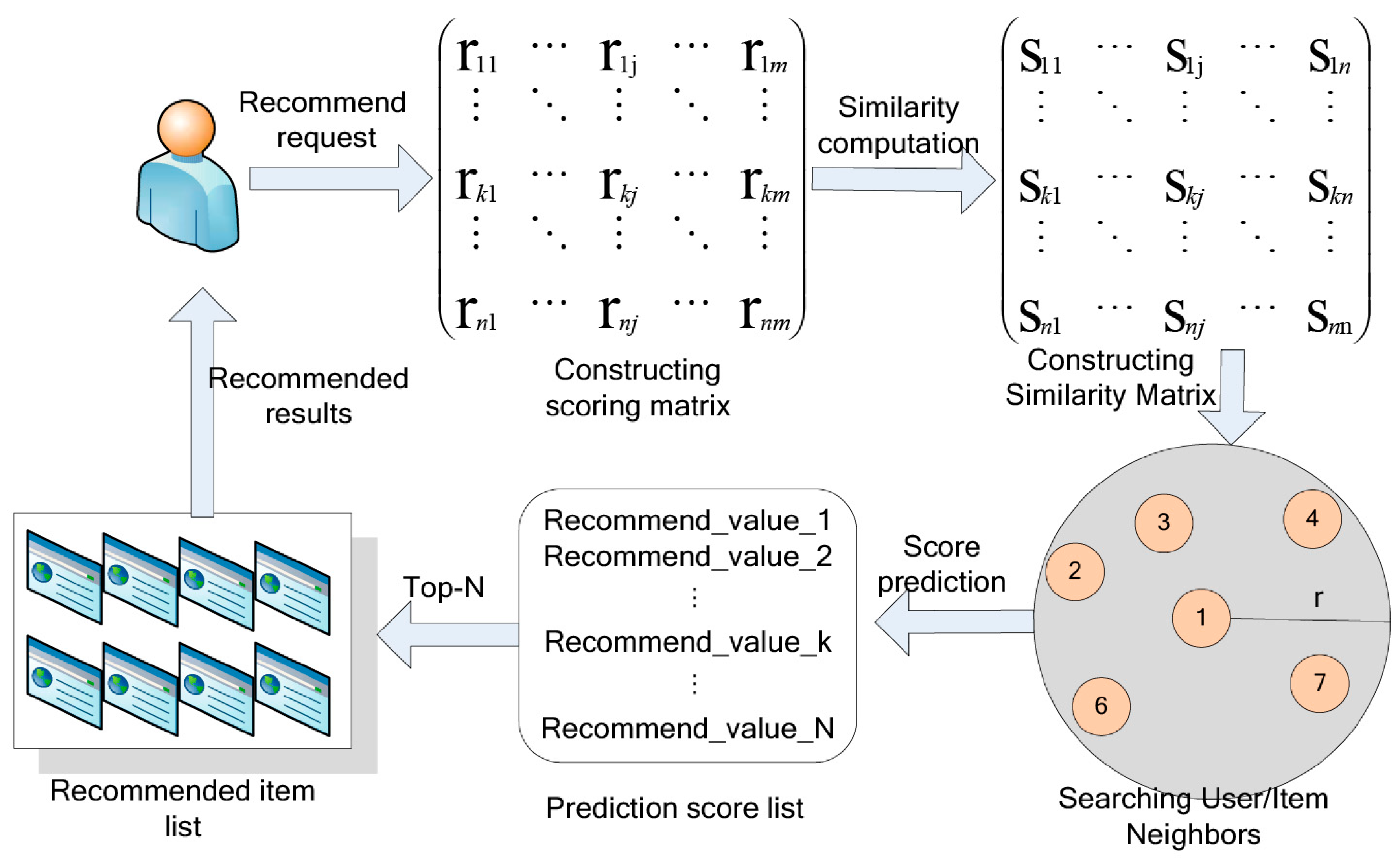

4.3. Recommendation System (RS)

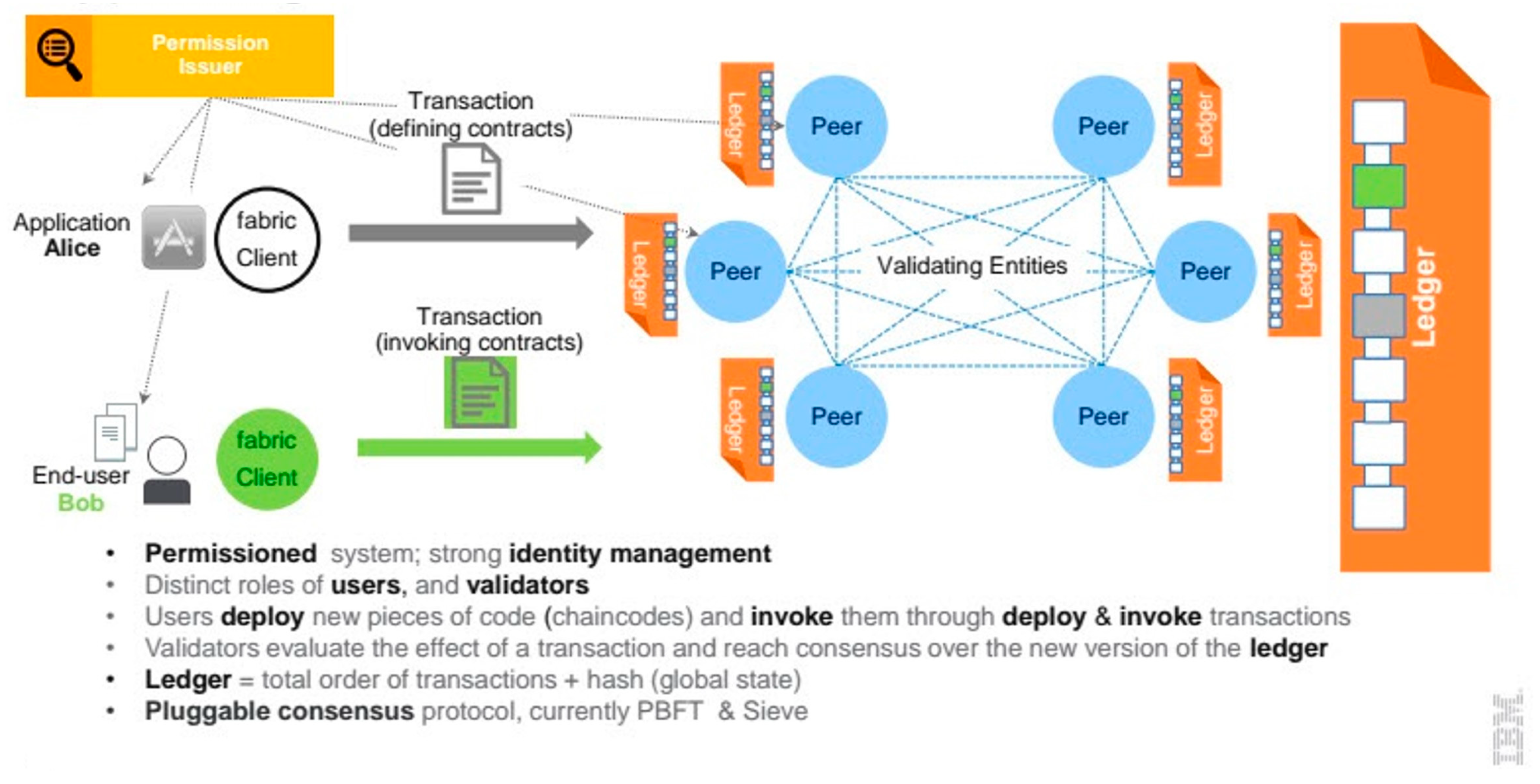

4.4. Blockchain

- Permissioned Network: Hyperledger Fabric is a permissioned blockchain technology, meaning that only authorized participants can access the network. This makes it suitable for applications where privacy and security are critical, such as in the financial and healthcare industries.

- Modular Architecture: Hyperledger Fabric has a modular architecture that allows for flexibility and customization. Developers can choose the components they need and customize them according to their specific requirements.

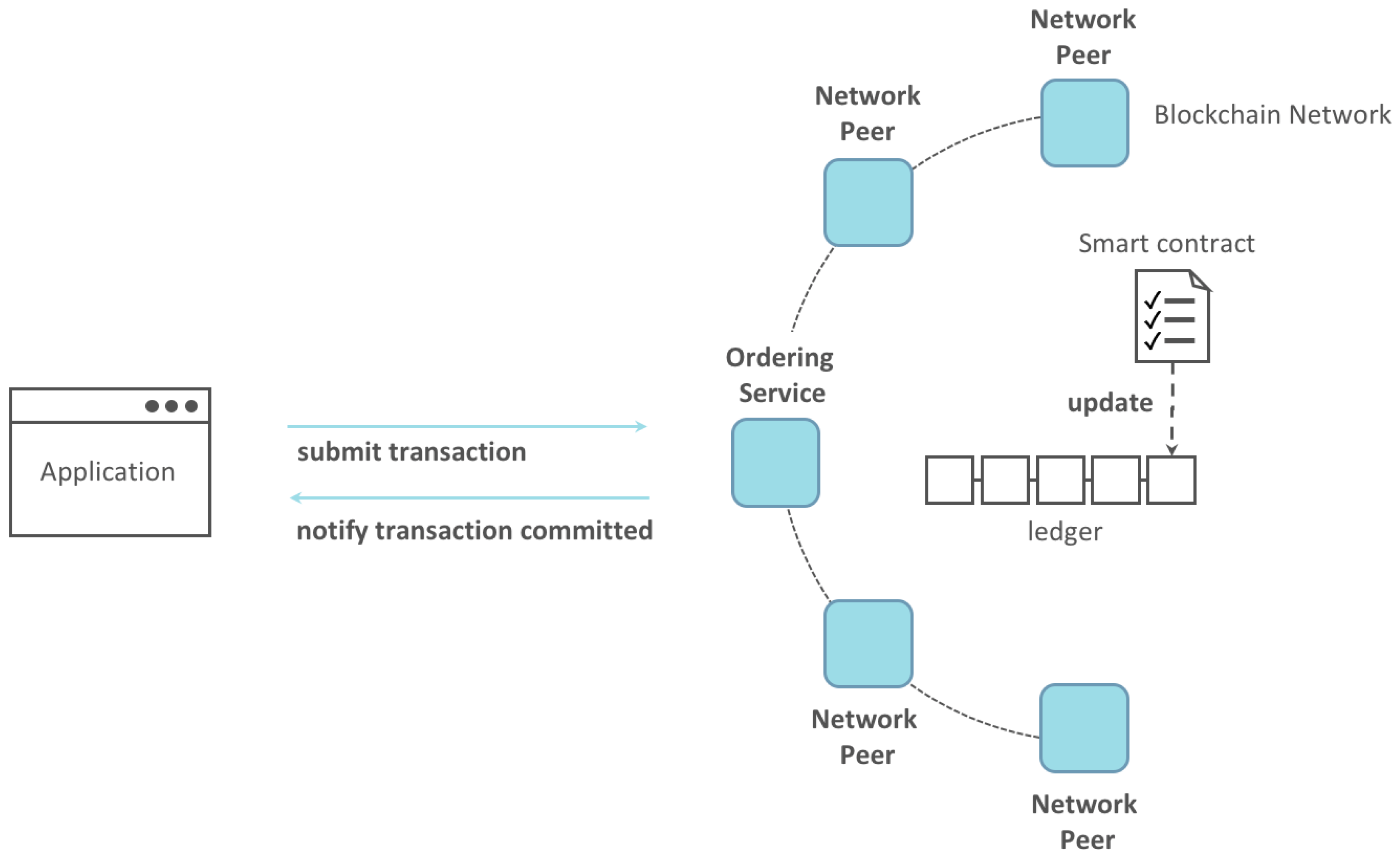

- Smart Contracts: Hyperledger Fabric supports the execution of smart contracts (called chaincode in Fabric), which are self-executing programs that can automate the enforcement of agreed terms and conditions. Smart contracts can help to reduce the need for intermediaries and streamline business processes.

- Consensus Mechanism: Hyperledger Fabric delegates transaction ordering to a pluggable ordering service, so different consensus algorithms can be used depending on the specific requirements of the application. This flexibility allows developers to choose the most suitable consensus algorithm for their application.

- Privacy and Confidentiality: Hyperledger Fabric provides features that help to protect sensitive information. The main one is channels, which give a selected subset of network participants a separate ledger so that they can communicate and transact without exposing the details to other participants.

1. Secure and Transparent Document Management: Hyperledger Fabric can be used to securely store and manage legal documents, contracts, and transactions. Because the ledger is append-only, recorded documents and transactions cannot be silently altered or deleted: any change must be submitted as a new transaction that satisfies the network's endorsement policy, which leaves an auditable history. The architecture employs cryptographic techniques to ensure the integrity and authenticity of legal documents and transactions. Each document or transaction is cryptographically signed by the parties involved and verified by the blockchain network, which makes any tampering detectable. The following cryptographic and privacy-preserving techniques can be used in this architecture:

- Digital Signatures: Digital signatures can be used to verify the authenticity and integrity of legal documents and transactions. Each document or transaction can be cryptographically signed by the parties involved, so that any alteration made after signing is detected when the signature is verified.

- Hash Functions: Cryptographic hash functions can be used to create a fixed-length digital fingerprint of legal documents and transactions that is, for practical purposes, unique. Recomputing the fingerprint and comparing it with the stored value reveals whether the document or transaction has been modified or tampered with.

- Public-Key Cryptography: Public-key cryptography can be used to ensure secure communication between the parties involved in legal transactions. Each party generates a public and private key pair; for confidentiality, the public key is used for encryption and the private key for decryption, while for digital signatures the private key signs and the public key verifies.

- Differential Privacy: Differential privacy adds carefully calibrated random noise to the results of statistical queries, protecting the privacy of individual records while still allowing useful aggregate analysis. This keeps sensitive information protected while still supporting the analysis needed for decision-making.

- Homomorphic Encryption: Homomorphic encryption can be used to enable computation on encrypted data, without requiring the decryption of the data. This can help to ensure the privacy and confidentiality of sensitive information while still allowing for necessary computations.

- Access Controls: Access controls can be used to restrict access to sensitive information only to authorized users. This helps to prevent unauthorized access to confidential data and ensures that only those with a need-to-know have access to sensitive information.
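To make the hash-function and digital-signature bullets concrete, the sketch below fingerprints a document with SHA-256 (Python's standard `hashlib`) and signs that fingerprint with a deliberately tiny, unpadded "textbook" RSA key built from two known Mersenne primes. The key size, the reduction of the digest modulo N, and the sample text are illustrative assumptions only; a real system would rely on a vetted cryptographic library with standard padding, and Hyperledger Fabric itself signs transactions with the participants' X.509/ECDSA credentials rather than with code like this.

```python
import hashlib

# Toy RSA key pair built from two known Mersenne primes (2**61 - 1 and 2**31 - 1).
# A ~92-bit modulus with unpadded "textbook" RSA is NOT secure; illustration only.
P, Q = 2**61 - 1, 2**31 - 1
N = P * Q                           # public modulus
E = 65537                           # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (modular inverse, Python 3.8+)


def fingerprint(document: bytes) -> str:
    """Return the SHA-256 digest of the document as a hex string."""
    return hashlib.sha256(document).hexdigest()


def _digest_as_int(document: bytes) -> int:
    # Map the 256-bit digest into the RSA message space [0, N).
    return int(fingerprint(document), 16) % N


def sign(document: bytes) -> int:
    """Sign the document's digest with the private key."""
    return pow(_digest_as_int(document), D, N)


def verify(document: bytes, signature: int) -> bool:
    """Check the signature with the public key against a freshly computed digest."""
    return pow(signature, E, N) == _digest_as_int(document)


if __name__ == "__main__":
    lease = b"Lease agreement: rent 1000 EUR per month, deposit 2000 EUR"
    signature = sign(lease)
    print(fingerprint(lease)[:16])                    # first 16 hex characters
    print(verify(lease, signature))                   # True
    print(verify(lease + b" (amended)", signature))   # False: the digest no longer matches
```

Signing the digest rather than the whole document keeps the signed value small regardless of document size; verification only needs the public key, so any network participant can check integrity.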

2. Consensus Mechanism: Hyperledger Fabric provides a consensus mechanism through which all nodes agree on the order and validity of the transactions that record a legal transaction or decision. A single agreed record can help to prevent disputes and ensure that all parties are held accountable for their actions. The proposed system can use one of the following consensus mechanisms, depending on the requirements and use case:

- Practical Byzantine Fault Tolerance (PBFT): PBFT lets the nodes in the network agree on the validity and order of a transaction or decision even if some of them are faulty or malicious: a network of n ≥ 3f + 1 nodes tolerates up to f Byzantine nodes. It is commonly used in permissioned blockchain networks and offers fast confirmation times, making it suitable for the proposed system.

- Raft Consensus Algorithm: Raft is a leader-based consensus algorithm that is commonly used in permissioned blockchain networks and is the recommended ordering service in recent Hyperledger Fabric releases. It is crash fault tolerant rather than Byzantine fault tolerant: n ≥ 2f + 1 nodes tolerate up to f crashed nodes, but not malicious ones. It provides fast confirmation times.

- Kafka-based Consensus: Kafka-based consensus orders transactions using Apache Kafka, a distributed streaming platform. It provides fast confirmation times but, like Raft, is only crash fault tolerant, and Hyperledger Fabric has deprecated Kafka-based ordering in favor of Raft.
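The fault-tolerance arithmetic behind the PBFT and Raft bullets can be checked with a few lines of code. This is a sketch of the standard textbook bounds, not of any Fabric configuration: a BFT network of n nodes tolerates f = ⌊(n - 1)/3⌋ Byzantine nodes and needs quorums of ⌈(n + f + 1)/2⌉ matching votes (which reduces to 2f + 1 when n = 3f + 1), while Raft tolerates f = ⌊(n - 1)/2⌋ crashed nodes and needs a strict majority.

```python
def max_byzantine_faults(n: int) -> int:
    """BFT (e.g. PBFT): n >= 3f + 1 nodes tolerate f arbitrary (Byzantine) faults."""
    return (n - 1) // 3


def bft_quorum(n: int) -> int:
    """Smallest quorum whose pairwise intersections always contain an honest node:
    ceil((n + f + 1) / 2), which equals 2f + 1 when n = 3f + 1."""
    f = max_byzantine_faults(n)
    return (n + f + 2) // 2


def max_crash_faults(n: int) -> int:
    """Raft (crash fault tolerant): n >= 2f + 1 nodes tolerate f crashed nodes."""
    return (n - 1) // 2


def raft_quorum(n: int) -> int:
    """A Raft leader needs acknowledgements from a strict majority of nodes."""
    return n // 2 + 1


for n in (4, 5, 7):
    print(n, max_byzantine_faults(n), bft_quorum(n), max_crash_faults(n), raft_quorum(n))
# 4 1 3 1 3
# 5 1 4 2 3
# 7 2 5 3 4
```

The output shows the trade-off: for the same number of nodes, Raft survives more failures than PBFT, but only if those failures are crashes rather than malicious behavior.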

- 3.

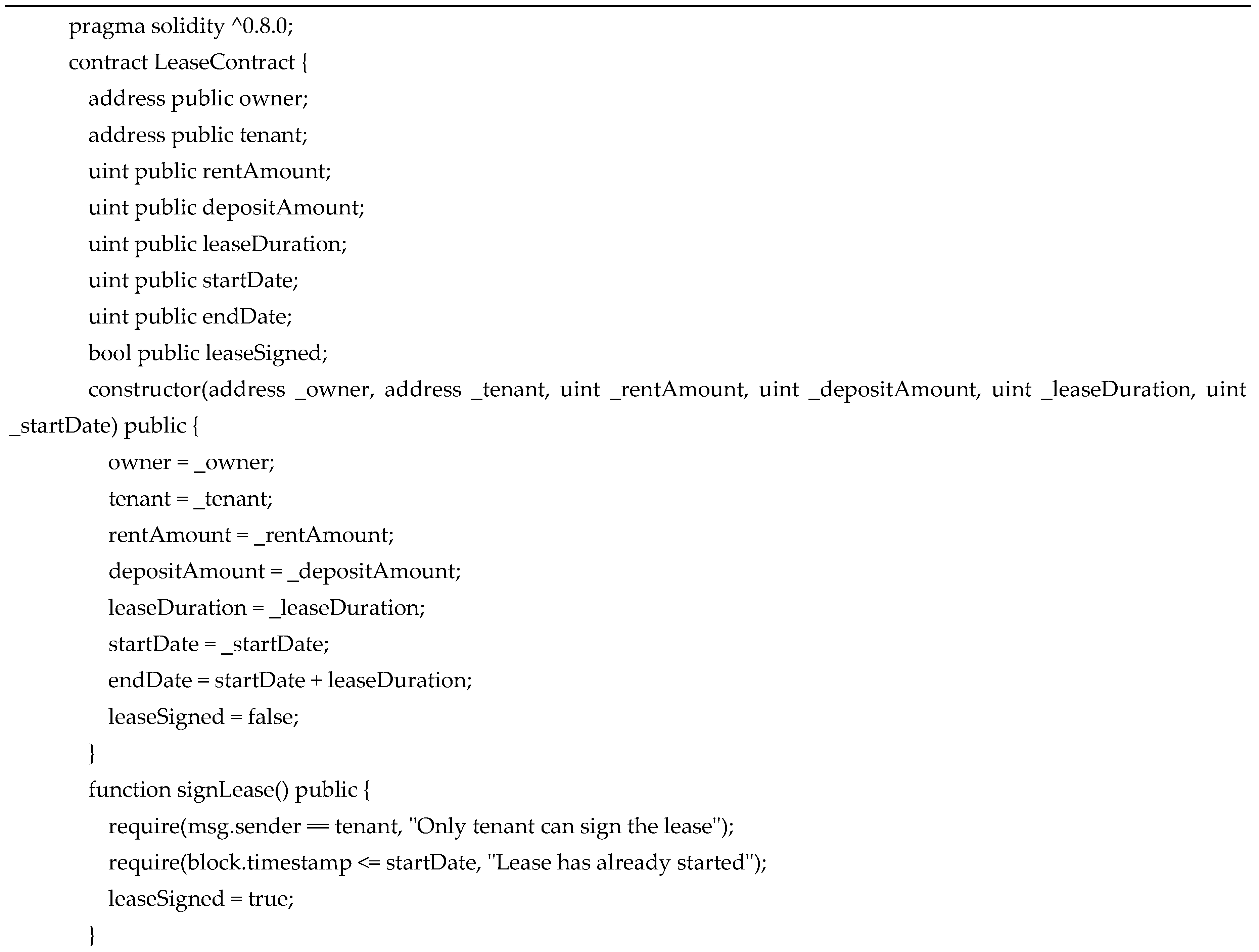

- Smart Contracts: Hyperledger Fabric can enable the development and execution of smart contracts, which can help to automate legal processes and enforce the terms of agreements. This can help reduce the time and cost of traditional legal processes. An example of a Hyperledger Fabric application based on a smart contract is presented in Figure 9.

- Constructor: Initializes the lease agreement with the details provided by the owner and tenant, such as the rent amount, deposit amount, lease duration, and start date.

- SignLease: Allows the tenant to sign the lease agreement, indicating they agree to the terms.

- PayRent: Allows the tenant to pay the rent amount to the owner. It verifies that the rent amount is correct and that the lease has not ended.

- RefundDeposit: Allows the owner to refund the deposit amount to the tenant once the lease has ended.

- GetLeaseDetails: Returns the details of the lease agreement, including the owner's and tenant's addresses, the rent and deposit amounts, the lease duration, the start and end dates, and whether the lease has been signed.
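The five functions above describe a small state machine. The Python sketch below is a hypothetical in-memory model of that logic, not Hyperledger Fabric chaincode: it leaves out the Fabric contract API, ledger storage, and actual fund transfers, identifies the owner and tenant by plain strings instead of addresses, and assumes the lease duration is given in days. The dataclass constructor plays the role of the Constructor function.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class LeaseContract:
    """Hypothetical model of the lease contract's state and rules."""
    owner: str
    tenant: str
    rent: int            # rent per payment, in the smallest currency unit (assumption)
    deposit: int
    duration_days: int   # lease duration expressed in days (assumption)
    start_date: date
    signed: bool = False
    deposit_refunded: bool = False
    payments: list = field(default_factory=list)

    @property
    def end_date(self) -> date:
        return self.start_date + timedelta(days=self.duration_days)

    def sign_lease(self, caller: str) -> None:
        # SignLease: only the tenant may sign, indicating agreement to the terms.
        if caller != self.tenant:
            raise PermissionError("only the tenant can sign the lease")
        self.signed = True

    def pay_rent(self, caller: str, amount: int, today: date) -> None:
        # PayRent: check the payer, that the amount is correct and that the lease is active.
        if caller != self.tenant:
            raise PermissionError("only the tenant can pay rent")
        if amount != self.rent:
            raise ValueError("incorrect rent amount")
        if today >= self.end_date:
            raise ValueError("the lease has ended")
        self.payments.append((today, amount))

    def refund_deposit(self, caller: str, today: date) -> int:
        # RefundDeposit: the owner returns the deposit once the lease has ended.
        if caller != self.owner:
            raise PermissionError("only the owner can refund the deposit")
        if today < self.end_date:
            raise ValueError("the lease has not ended yet")
        if self.deposit_refunded:
            raise ValueError("the deposit has already been refunded")
        self.deposit_refunded = True
        return self.deposit

    def get_lease_details(self) -> dict:
        # GetLeaseDetails: a read-only view of the agreement.
        return {
            "owner": self.owner,
            "tenant": self.tenant,
            "rent": self.rent,
            "deposit": self.deposit,
            "duration_days": self.duration_days,
            "start_date": self.start_date,
            "end_date": self.end_date,
            "signed": self.signed,
        }


if __name__ == "__main__":
    lease = LeaseContract("owner-1", "tenant-1", rent=1000, deposit=2000,
                          duration_days=365, start_date=date(2023, 6, 1))
    lease.sign_lease("tenant-1")
    lease.pay_rent("tenant-1", 1000, date(2023, 7, 1))
    print(lease.get_lease_details()["end_date"])  # 2024-05-31 (2024 is a leap year)
```

Every state change checks who is calling, mirroring how a contract function would check the submitter's identity before updating the ledger.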

4. Privacy and Confidentiality: Hyperledger Fabric provides privacy and confidentiality features that can help to protect sensitive information. For example, zero-knowledge proofs can be used to enable selective disclosure, so that only authorized parties can access specific data and information. Hyperledger Fabric also provides channels, which allow a subset of network participants to conduct transactions without revealing the details to other participants.

- Natural Language Querying: ChatGPT can provide a natural language interface to the blockchain module. Instead of using complex commands and APIs, users can ask questions in natural language, and ChatGPT can translate them into queries and generate the appropriate response. For example, a user can ask, "What is the most relevant legal case in the last five years?", and the system can query the blockchain module to retrieve that case.

- Legal Document Analysis: ChatGPT can be trained to analyze legal documents such as contracts, agreements, and court decisions. By analyzing legal documents using NLP techniques, ChatGPT can identify relevant clauses, extract relevant information, and make recommendations for judicial decision-making. For example, ChatGPT can analyze a contract to identify the key terms and conditions and verify whether they have been met, without leaking private information.

- Legal Compliance Monitoring: ChatGPT can monitor legal compliance by analyzing legal documents and transactions on the blockchain in real time. By monitoring transactions on the blockchain, ChatGPT can identify potential compliance issues and alert the relevant parties. For example, ChatGPT can analyze a transaction to ensure it complies with relevant regulations and policies.

- Smart Contract Development: ChatGPT can assist in developing and testing smart contracts by generating test cases and providing feedback on the performance of the contracts. By generating test cases using natural language, ChatGPT can help to ensure that the contracts are robust and reliable. For example, ChatGPT can generate test cases to ensure that a smart contract executes the terms of an agreement correctly.

- Data Analysis: ChatGPT can be used to analyze data on the blockchain and provide insights into legal trends and patterns. By analyzing data using NLP techniques, ChatGPT can identify patterns and trends useful for judicial decision-making. For example, ChatGPT can analyze court decisions to identify common legal arguments and reasoning used by judges.

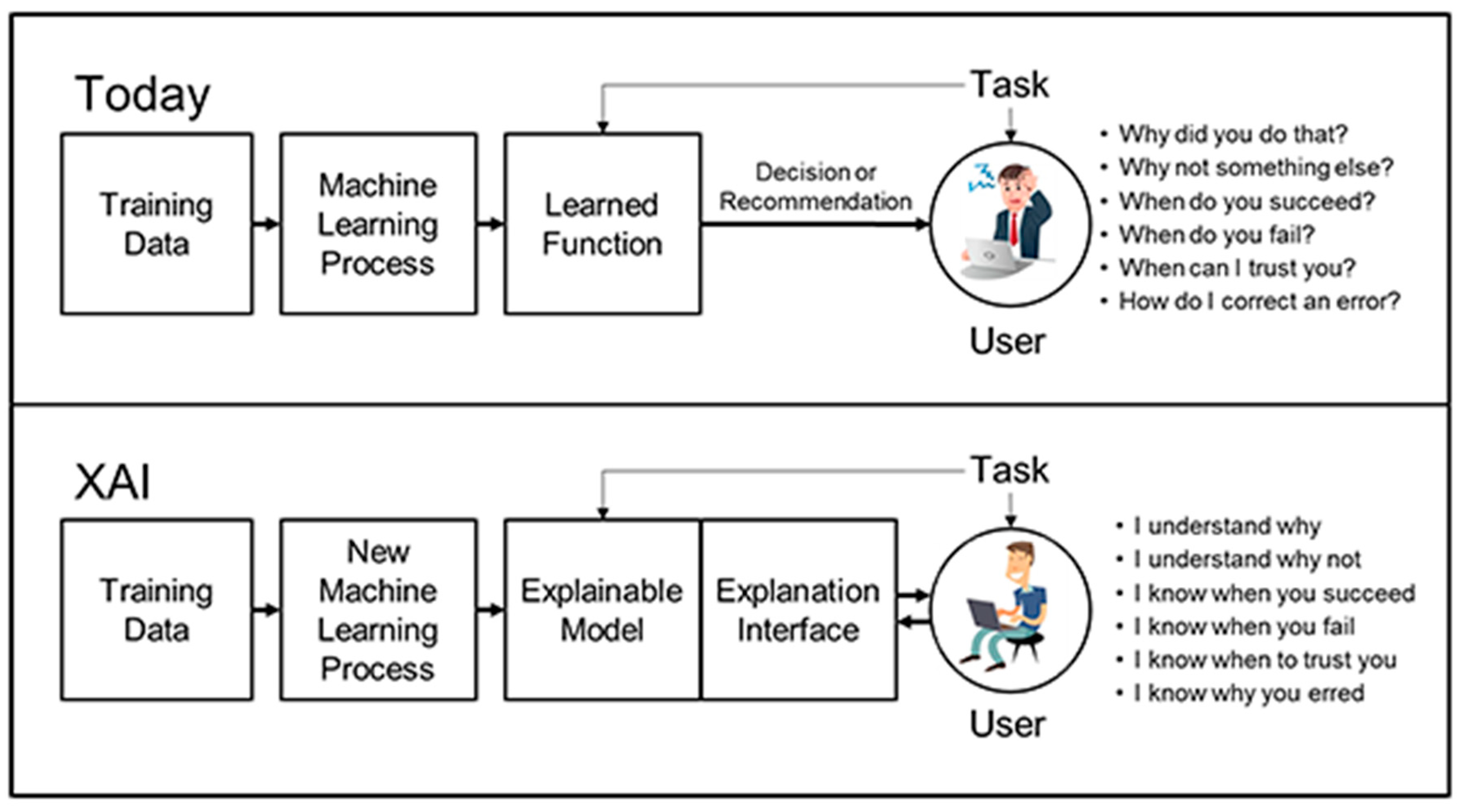

4.5. Explainable AI (XAI)

1. Interpretable Models: Interpretable models are a type of AI model that is designed to be easily understood and explainable. These models are built in a way that enables humans to interpret the decision-making process and understand the factors that influence the model's output. Interpretable models are particularly important in domains where the model's decisions can significantly impact people's lives, such as healthcare, finance, and justice. There are several types of interpretable models, each with strengths and weaknesses. Here are a few examples:

- Decision Trees: Decision trees are a type of interpretable model commonly used in decision-making tasks. Decision trees represent the decision-making process as a tree structure, with each node representing a decision based on a particular input feature. Decision trees are easy to interpret and can provide insights into which features are most important in making a decision.

- Linear Models: Linear models are a type of interpretable model used to make predictions based on linear relationships between input features and output. Linear models are easy to interpret and can provide insights into how individual input features influence the model's output.

- Rule-Based Models: Rule-based models are a type of interpretable model that use a set of rules to make decisions. Rule-based models are easy to interpret and can provide insights into the specific rules that the model uses to make decisions.

- Bayesian Networks: Bayesian networks are a type of interpretable model that represent the relationships between input features and output using a probabilistic graphical model. Bayesian networks are easy to interpret and can provide insights into the probabilistic relationships between input features and output.
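The interpretability of a decision tree comes from the fact that every prediction follows an explicit path of feature tests. The sketch below walks a hand-written tree and records that path as the explanation. The tree, its feature names (`claim_amount`, `has_written_contract`), thresholds, and outcomes are invented for illustration and do not reflect any real legal rule or the authors' models.

```python
from typing import Dict, List, Tuple

# Hypothetical tree: internal nodes test one feature against a threshold,
# leaves hold the decision. All names and thresholds are invented.
TREE = {
    "feature": "claim_amount",
    "threshold": 5000,
    "left": {"leaf": "small-claims track"},
    "right": {
        "feature": "has_written_contract",
        "threshold": 0.5,
        "left": {"leaf": "mediation first"},
        "right": {"leaf": "standard civil track"},
    },
}


def predict_with_path(tree: Dict, case: Dict[str, float]) -> Tuple[str, List[str]]:
    """Return the decision together with the sequence of tests that led to it."""
    path: List[str] = []
    node = tree
    while "leaf" not in node:
        name, threshold = node["feature"], node["threshold"]
        value = case[name]
        if value <= threshold:
            path.append(f"{name} = {value} <= {threshold}")
            node = node["left"]
        else:
            path.append(f"{name} = {value} > {threshold}")
            node = node["right"]
    return node["leaf"], path


decision, path = predict_with_path(TREE, {"claim_amount": 12000, "has_written_contract": 1})
print(decision)  # standard civil track
for step in path:
    print(" -", step)
```

The returned path is the explanation itself: a reviewer can check each test against the case facts without knowing anything else about the model.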

- 2.

-

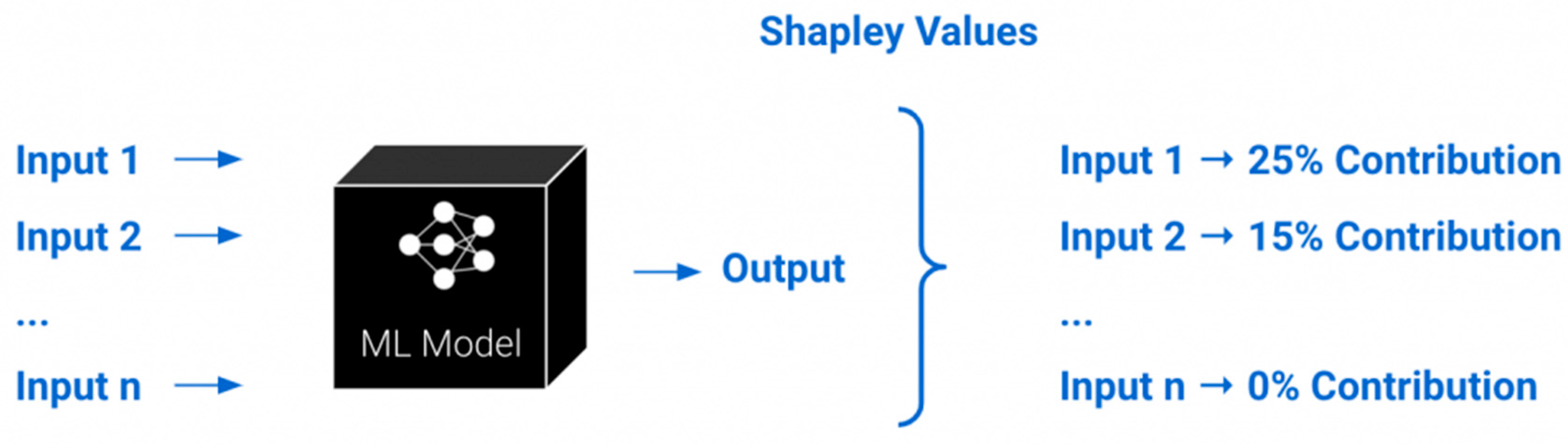

Feature Importance Analysis: Feature importance analysis is a technique used to identify the input features that are most important in making a decision in an AI model. The goal of feature importance analysis is to identify the specific input features that have the most significant impact on the model's output. By identifying the most important features, it is possible to gain insights into the decision-making process and to understand which factors are most influential in the model's output. There are several techniques that can be used to perform feature importance analysis, including:

- Correlation-based Feature Selection: This technique involves selecting input features that are most strongly correlated with the output. The features that have the highest correlation with the output are considered to be the most important.

- Recursive Feature Elimination: This technique repeatedly trains the model, discards the least important feature(s) according to the model's own weights or importance scores, and retrains on the remaining features. The features that survive the most elimination rounds are considered to be the most important.

- Permutation Importance: This technique randomly shuffles the values of one input feature, breaking its relationship with the output, and measures how much the model's performance (for example, its accuracy) drops. The features whose shuffling causes the largest drop are considered to be the most important.

- Information Gain: This technique calculates the reduction in the entropy of the output that is achieved by splitting the data on an input feature. The features that have the highest information gain are considered to be the most important.
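Permutation importance is model-agnostic, so it can be sketched without any machine learning library. The standard-library version below measures the mean drop in accuracy when one feature column is shuffled; the toy data, in which only feature 0 determines the label, and the "model" are assumptions made for illustration. For real models, scikit-learn offers a ready-made `sklearn.inspection.permutation_importance`.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]


def accuracy(model: Callable[[Row], bool], X: List[Row], y: List[bool]) -> float:
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy data: the label depends only on feature 0; feature 1 is pure noise.
data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [row[0] > 0.5 for row in X]


def model(row: Row) -> bool:
    # Stand-in for a trained model that happens to match the labels exactly.
    return row[0] > 0.5


print(permutation_importance(model, X, y))
```

Feature 1 receives an importance of exactly 0.0 because the model never reads it, while shuffling feature 0 drops accuracy to roughly chance level.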

3. Visualization: Visualization is a technique used to represent data in a way that is more intuitive and understandable to humans. In the context of AI, visualization can be used to represent the decision-making process of a model or to provide insights into the factors that influence the model's output. Visualization techniques can help to make AI models more interpretable and understandable to humans, which is important for ensuring that they are used ethically and responsibly. There are several visualization techniques that can be used in AI, including:

- Heat Maps: Heat maps are a type of visualization that can be used to highlight the areas of an image that are most important in making a classification decision. Heat maps use color to represent the importance of each pixel in the image, with brighter colors indicating more important pixels.

- Tree Visualizations: Decision trees can be visualized as a tree structure, with each node representing a decision based on a particular input feature. Different shapes and colors can be used to distinguish the types of nodes and branches.

- Scatter Plots: Scatter plots can be used to visualize the relationship between two input features and the output. Scatter plots can be used to identify patterns and relationships that may not be immediately apparent from the data.

- Bar Charts: Bar charts can be used to visualize the importance of different input features in making a decision. Bar charts can be used to compare the importance of different input features and to identify the most important features.
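A feature-importance bar chart can be produced with any plotting library; to stay dependency-free, the sketch below renders one as plain text, with bar length proportional to importance. The feature names and importance values in the example are hypothetical.

```python
from typing import Dict


def text_bar_chart(importances: Dict[str, float], width: int = 20) -> str:
    """Render feature importances as a plain-text horizontal bar chart,
    sorted from most to least important."""
    largest = max(importances.values())
    label_width = max(len(name) for name in importances)
    lines = []
    for name, value in sorted(importances.items(), key=lambda kv: kv[1], reverse=True):
        # Bar length is proportional to the importance; non-positive values get no bar.
        length = round(width * value / largest) if largest > 0 and value > 0 else 0
        lines.append(f"{name.ljust(label_width)} | {'#' * length} {value:.2f}")
    return "\n".join(lines)


# Hypothetical importances, e.g. from a permutation-importance run.
print(text_bar_chart({"claim_amount": 0.48, "filing_delay": 0.12, "court_region": 0.0}))
```

Sorting the bars makes the ranking readable at a glance, which is the main purpose of this kind of chart.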

4. Counterfactual Explanations: Counterfactual explanations are a type of explanation that shows how the input data would have had to differ for an AI model to make a different decision. Counterfactual explanations can be used to provide insights into the decision-making process of the model and to identify the specific factors that led to a particular decision. There are several techniques that can be used to generate counterfactual explanations, including:

- Perturbation-based Methods: Perturbation-based methods involve modifying the input data in a way that changes the model's output. The modifications can be made to a single feature or multiple features. The counterfactual explanation shows how the model's output would have changed if the input data had been modified in a particular way.

- Optimization-based Methods: Optimization-based methods search for the input closest to the original that results in a different output from the model. The search can be performed using different algorithms, such as gradient descent or genetic algorithms. The counterfactual explanation shows the modified input data that would have resulted in a different output from the model.

- Contrastive Explanations: Contrastive explanations involve comparing the input data to a counterfactual input that would have resulted in a different output from the model. The contrastive explanation shows the specific differences between the input data and the counterfactual input, which can provide insights into the factors that led to the model's decision.
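A perturbation-based counterfactual can be sketched as a simple search: change one feature at a time in fixed steps, in both directions, and keep the smallest change that flips the decision. The greedy single-feature search, the step size, and the linear decision rule `approve` below are all assumptions for illustration; optimization-based methods instead search over all features jointly with an explicit distance objective.

```python
from typing import Callable, List, Optional, Sequence, Tuple


def find_counterfactual(
    model: Callable[[Sequence[float]], bool],
    x: Sequence[float],
    step: float = 0.1,
    max_steps: int = 50,
) -> Optional[Tuple[int, float, List[float]]]:
    """Perturb one feature at a time in fixed steps (both directions) and return
    (feature index, change, counterfactual input) for the smallest change that
    flips the model's decision, or None if no flip is found."""
    original = model(x)
    best = None
    for i in range(len(x)):
        for direction in (1, -1):
            for k in range(1, max_steps + 1):
                change = direction * k * step
                candidate = list(x)
                candidate[i] = x[i] + change
                if model(candidate) != original:
                    if best is None or abs(change) < abs(best[1]):
                        best = (i, change, candidate)
                    break  # larger steps in this direction can only be farther away
    return best


def approve(x: Sequence[float]) -> bool:
    # Hypothetical linear decision rule: approve when 2*x0 + x1 - 3 > 0.
    return 2 * x[0] + x[1] - 3 > 0


print(find_counterfactual(approve, [1.0, 0.5]))
```

For the input `[1.0, 0.5]` the rule rejects; the search reports that raising feature 0 by about 0.3 would flip the decision, a smaller change than the roughly 0.6 increase feature 1 would need.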

5. Natural Language Explanations: Natural language explanations are a type of explanation that is presented in natural language, making it easy for humans to understand. Natural language explanations can be used to explain the decision-making process of an AI model and to provide insights into the factors that influence the model's output. There are several techniques that can be used to generate natural language explanations, including:

- Rule-based Methods: Rule-based methods involve encoding the decision-making process of the model as a set of rules. The rules are then used to generate natural language explanations that describe the decision-making process in a way that is easy to understand.

- Text Generation: Text generation techniques involve using deep learning algorithms to generate natural language explanations based on the input data and the output of the model. The text generation algorithms can be trained on large datasets of human-generated text so that the explanations read naturally and are easy to understand.

- Dialog Systems: Dialog systems involve using a chatbot or virtual assistant to provide natural language explanations. The chatbot can be trained on a large corpus of human-generated text and can use natural language processing techniques to understand the user's queries and provide relevant explanations.
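The simplest rule-based approach fills a fixed sentence template from the model's internals. The sketch below does this for a linear model, naming the features with the largest contributions to the score. The weights, bias, feature names, and the "grant"/"deny" labels are hypothetical.

```python
from typing import Dict


def _effect(contribution: float) -> str:
    # Template fragment describing one feature's effect on the score.
    if contribution > 0:
        return f"raised the score by {contribution:.2f}"
    if contribution < 0:
        return f"lowered the score by {-contribution:.2f}"
    return "did not change the score"


def explain_linear_decision(weights: Dict[str, float], bias: float,
                            case: Dict[str, float], top_k: int = 2) -> str:
    """Template-based explanation of a linear model's decision: report the
    decision and the top_k features with the largest absolute contribution."""
    contributions = {name: weights[name] * case[name] for name in weights}
    score = bias + sum(contributions.values())
    decision = "grant" if score > 0 else "deny"
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_k]
    reasons = " and ".join(f"{name} {_effect(c)}" for name, c in ranked)
    return f"Recommendation: {decision} (score {score:.2f}), mainly because {reasons}."


weights = {"documents_complete": 1.5, "days_late": -0.2}  # hypothetical model
print(explain_linear_decision(weights, -0.5, {"documents_complete": 1, "days_late": 4}))
```

Because the sentence is assembled directly from the weights and inputs, it is faithful to the model by construction, unlike free-form generated text, though it is also far less flexible.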

5. Discussion

- AI and NLP: The integration of AI, particularly NLP, can assist in analyzing vast amounts of legal data, including case law, statutes, and legal documents. NLP techniques can extract relevant information, identify patterns, and help in understanding legal language. This can enhance the efficiency of legal research, aid in the interpretation of complex legal texts, and provide valuable insights to support judicial determinations. Because such support can influence decisions with a significant impact on individuals and society as a whole, it must remain transparent and understandable.

- ChatGPT and Explainable Generative AI: ChatGPT, as a conversational AI model, can be utilized to interact with users and provide legal guidance or explanations. It can assist in answering legal queries, clarifying legal concepts, and offering insights into the reasoning behind legal decisions. Explainable AI methodologies aim to make the decision-making process of intelligent algorithms transparent and interpretable, which is crucial for maintaining accountability and trust in the justice system.

- Ontological Alignment and the Semantic Web: Ontologies and the semantic web can facilitate the organization and linking of legal knowledge, enabling more efficient and comprehensive access to legal information. By aligning legal concepts and relationships, the framework can support automated reasoning and inference, leading to more accurate and consistent judicial determinations.

- Blockchain Technology: Blockchain can provide a secure and transparent infrastructure for managing legal documentation and transactions. It ensures the integrity and immutability of legal records, reducing the risk of tampering or unauthorized modifications. By utilizing blockchain, the framework can enhance trust, increase transparency in legal processes, and enable decentralized consensus mechanisms.

- Privacy Techniques: To address the sensitivity of legal data and uphold confidentiality, privacy techniques such as differential privacy and homomorphic encryption can be employed. Differential privacy adds calibrated random noise to query results to protect individual privacy while still allowing for meaningful analysis. Homomorphic encryption allows computations to be performed on encrypted data, maintaining privacy during processing. These techniques help safeguard sensitive information and ensure compliance with privacy regulations.

- Efficiency and Expediency: AI and NLP techniques can streamline legal research and analysis, saving time and effort. Automated processes can assist in managing legal documentation and transactions, reducing administrative burdens.

- Diminished Error Propensity: By leveraging AI technologies, the framework can help reduce human errors and biases in legal decision-making. Consistent application of legal principles and access to comprehensive legal knowledge can contribute to more accurate determinations.

- Uniform Approach to Judicial Determinations: The integration of AI and ontological alignment promotes consistency in interpreting and applying legal concepts. This can reduce discrepancies in legal outcomes and enhance the predictability of judicial decisions.

- Augmented Security and Privacy: Blockchain technology ensures the security and integrity of legal records, while privacy techniques protect sensitive data. This combination provides a robust framework for maintaining confidentiality, authenticity, and transparency in the justice system.

- Ethical and Legal Considerations: Explainable AI methodologies support a careful examination of the ethical and legal implications of deploying intelligent algorithms and blockchain technologies in the legal domain. This scrutiny helps address concerns related to bias, accountability, and fairness.

- Complexity and Technical Challenges: Implementing and maintaining the proposed framework requires significant technical expertise and resources. Integrating AI, NLP, ontological alignment, blockchain, and privacy techniques can be complex and may involve challenges such as data integration, system interoperability, and algorithmic development. It may also require training and updating AI models to ensure their accuracy and reliability.

- Legal Interpretation and Contextual Understanding: Although AI and NLP techniques can assist in analyzing legal texts, understanding the nuances of legal language, context, and legal precedent is a complex task. Legal interpretation often requires human judgment, as laws can be subject to different interpretations based on the specific circumstances. AI models may struggle with capturing the full range of legal reasoning and the subjective elements involved in legal decision-making.

- Limited Generalization: AI models, including ChatGPT, have limitations in their ability to generalize and adapt to novel situations or legal scenarios outside their training data. They rely heavily on patterns and data they were trained on, which may not encompass the full complexity of legal issues. This can lead to inaccuracies or biases in the system's recommendations or decisions.

- Ethical and Bias Concerns: While efforts are made to ensure explainability and address biases, AI models are susceptible to inheriting biases present in the training data. If legal data used for training the AI system contains biases, such as historical discriminatory practices, it can perpetuate or amplify those biases in the recommendations or decisions. It is crucial to regularly assess and mitigate biases to ensure fairness and equity in the justice system.

- Security and Privacy Risks: While blockchain technology offers advantages in terms of security and transparency, it is not immune to vulnerabilities. The implementation of blockchain systems requires careful consideration of potential security risks, such as 51% attacks (in a permissioned network, the compromise of enough consensus nodes) or smart contract vulnerabilities. Additionally, while privacy techniques like differential privacy and homomorphic encryption protect sensitive data, they may introduce computational overhead or reduce the utility of the data for analysis.

- Human-Technology Interaction and Trust: The framework's success relies on effective human-technology interaction and the trust placed in the system. Users, including judges, lawyers, and the public, need to understand the limitations and capabilities of the technology to make informed decisions. Building trust in AI-based systems within the legal domain may require time, education, and establishing clear mechanisms for human oversight and intervention.

- Legal and Regulatory Challenges: Integrating AI and blockchain technologies into the legal domain raises legal and regulatory challenges. There may be concerns about liability, accountability, and the legality of automated decision-making processes. Developing appropriate legal frameworks, addressing jurisdictional issues, and ensuring compliance with data protection and privacy regulations are essential considerations.
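The two privacy techniques discussed above can be illustrated in a few lines each. The sketch below uses the Laplace mechanism for a differentially private count and a toy Paillier cryptosystem, an additively homomorphic scheme chosen here as one concrete example of homomorphic encryption. The primes are deliberately tiny and the data are invented; real deployments need keys of 2048 bits or more and a vetted library, which also makes the computational overhead noted under Security and Privacy Risks visible.

```python
import math
import random

# --- Differential privacy: Laplace mechanism for a counting query ---
def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential variables with mean `scale`
    # is Laplace-distributed with location 0 and scale `scale`.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    # Adding or removing one record changes a count by at most 1 (sensitivity 1),
    # so Laplace noise with scale 1/epsilon gives epsilon-differential privacy.
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


# --- Homomorphic encryption: toy Paillier (additively homomorphic) ---
PRIME_P, PRIME_Q = 293, 433                # tiny primes, illustration only
N = PRIME_P * PRIME_Q
N2 = N * N
LAM = (PRIME_P - 1) * (PRIME_Q - 1) // math.gcd(PRIME_P - 1, PRIME_Q - 1)  # lcm
MU = pow(LAM, -1, N)                       # valid because the generator is g = N + 1


def encrypt(m: int, rng: random.Random) -> int:
    r = rng.randrange(1, N)
    while math.gcd(r, N) != 1:             # r must be invertible modulo N
        r = rng.randrange(1, N)
    return (pow(N + 1, m, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    return ((pow(c, LAM, N2) - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    # Multiplying ciphertexts adds the underlying plaintexts (mod N).
    return (c1 * c2) % N2


if __name__ == "__main__":
    rng = random.Random(7)
    print(private_count(range(100), lambda r: r < 30, epsilon=1.0, rng=rng))  # near 30
    total = add_encrypted(encrypt(12, rng), encrypt(30, rng))
    print(decrypt(total))  # 42, computed without decrypting the inputs
```

Smaller values of epsilon add more noise and give stronger privacy at the cost of accuracy, which is the utility trade-off mentioned above.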

6. Conclusions

- Bias and Fairness: Continued research is needed to mitigate bias and ensure fairness in AI systems used within the legal domain. This involves developing techniques to detect and mitigate biases in training data, improving transparency in AI decision-making, and exploring ways to incorporate diverse perspectives and considerations of equity into AI models.

- Interdisciplinary Collaboration: Promoting collaboration between legal experts, computer scientists, ethicists, and social scientists is essential. Interdisciplinary research can help bridge the gap between technical capabilities and legal requirements, ensuring that AI systems align with legal principles, ethical standards, and societal needs. Such collaborations can also foster a better understanding of the implications and consequences of deploying intelligent algorithms and blockchain technologies in the justice system.

- Contextual Understanding and Legal Interpretation: Advancements in natural language processing and machine learning techniques can contribute to improving the contextual understanding of legal texts. Research can focus on developing AI models that can capture the intricacies of legal language, interpret the context of legal issues, and provide nuanced and reasoned legal explanations. This can enhance the accuracy and reliability of AI systems in assisting with legal decision-making.

- Explainability and Transparency: Research should continue to explore methods for enhancing the explainability and interpretability of AI models. This includes developing techniques that enable AI systems to provide clear and understandable explanations for their recommendations or decisions. Transparent AI systems can help build trust, facilitate human oversight, and allow for meaningful engagement with stakeholders within the legal system.

- Data Privacy and Security: Further research is necessary to address privacy and security concerns associated with the integration of blockchain technology. This includes exploring techniques for preserving data confidentiality while still leveraging the benefits of blockchain's transparency and immutability. Developing robust security measures to protect blockchain networks and legal data from potential attacks or vulnerabilities is also crucial.

- User Experience and Human-Technology Interaction: Understanding the needs and expectations of legal professionals, judges, lawyers, and the public is vital for the successful adoption of intelligent justice systems. Research can focus on improving the user experience, designing user-friendly interfaces, and studying the impact of AI systems on human decision-making processes. Examining the social acceptance, trust, and ethical implications of using AI within the legal domain is also important.

- Legal and Regulatory Frameworks: To ensure the responsible deployment of AI and blockchain technologies in the justice system, research should address the legal and regulatory challenges. This involves exploring the development of appropriate legal frameworks, examining liability and accountability issues, and considering the ethical and legal implications of automated decision-making processes. Collaborative efforts between researchers, policymakers, and legal experts are necessary to create comprehensive and adaptive legal frameworks.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- B. F. A, C. J. N, and A. J, “On the Integration of Enabling Wireless Technologies and Sensor Fusion for Next-Generation Connected and Autonomous Vehicles[J,” IEEE Access, vol. 10, pp. 14643–14668, 2022.

- O. Lizzi, “Towards a social and epistemic justice approach for exploring the injustices of English as a Medium of Instruction in basic education[J,” Educ. Rev.

- J. Cano, C. E. Jimenez, R. Hernandez, and S. Ros, “New tools for e-justice: legal research available to any citizen,” in 2015 Second International Conference on eDemocracy & eGovernment (ICEDEG), Apr. 2015, pp. 108–111. [CrossRef]

- F. Emmert-Streib, Z. Yang, H. Feng, S. Tripathi, and M. Dehmer, “An Introductory Review of Deep Learning for Prediction Models With Big Data,” Front. Artif. Intell., vol. 3, p. 4, 2020. [CrossRef]

- L. Alzubaidi et al., “Review of deep learning: concepts, CNN architectures, challenges, applications, future directions,” J. Big Data, vol. 8, no. 1, p. 53, Mar 2021. [CrossRef]

- G. Datta, N. Joshi, and K. Gupta, “Study of NMT: An Explainable AI-based approach[C]//2021,” in International Conference on Information Systems and Computer Networks (ISCON). IEEE, pp. 1–4.

- al, “Recent Technical Advances in Accelerating the Clinical Translation of Small Animal Brain Imaging: Hybrid Imaging,” Deep Learn.

- R. Boorugu and G. Ramesh, “A Survey on NLP based Text Summarization for Summarizing Product Reviews,” in 2020 Second International Conference on Inventive Research in Computing Applications (ICIRCA), Jul. 2020, pp. 352–356. [CrossRef]

- “Machine Learning Contract Search, Review and Analysis Software.” https://kirasystems.com/ (accessed May 26, 2023).

- “Legal Analytics by Lex Machina.” https://lexmachina.com/ (accessed May 26, 2023).

- “LegalZoom | Start a Business, Protect Your Family: LLC Wills Trademark Incorporate & More Online | LegalZoom.” https://www.legalzoom.com/country/gr (accessed May 26, 2023).

- “Save Time and Money with DoNotPay!” https://donotpay.com/ (accessed May 26, 2023).

- P. D. Callister, “Law, Artificial Intelligence, and Natural Language Processing: A Funny Thing Happened on the Way to My Search Results.” Rochester, NY, Oct. 14, 2020. Accessed: May 26, 2023. [Online]. Available: https://papers.ssrn.com/abstract=3712306.

- K. P. Nayyer, M. Rodriguez, and S. A. Sutherland, “Artificial Intelligence & Implicit Bias: With Great Power Comes Great Responsibility,” CanLII Authors Program, 2020. Accessed: May 26, 2023. [Online]. Available: https://www.canlii.org/en/commentary/doc/2020CanLIIDocs1609#!fragment/zoupio- _Toc3Page1/BQCwhgziBcwMYgK4DsDWszIQewE4BUBTADwBdoAvbRABwEtsBaAfX2zgGYAFMAc0I CMASgA0ybKUIQAiokK4AntADkykREJhcCWfKWr1m7SADKeUgCElAJQCiAGVsA1AIIA5AMK2RpM ACNoUnYhISA.

- M. A. Wojcik, “Machine-Learnt Bias? Algorithmic Decision Making and Access to Criminal Justice: Overall Winner, Justis International Law & Technology Writing Competition 2020, by Malwina Anna Wojcik of the University of Bologna,” Leg. Inf. Manag., vol. 20, no. 2, pp. 99–100, Jun. 2020. [CrossRef]

- M. Ridley, “Explainable Artificial Intelligence (XAI):,” Inf. Technol. Libr., vol. 41, no. 2, Art. no. 2, Jun. 2022. [CrossRef]

- “Research Librarians as Guides and Navigators for AI Policies at Universities,” LaLIST, Sep. 23, 2019. https://lalist.inist.fr/?p=41259 (accessed May 26, 2023).

- J. Turner, Robot Rules: Regulating Artificial Intelligence. Cham: Springer International Publishing, 2019. [CrossRef]

- B. Verheij, “Artificial intelligence as law: Presidential address to the seventeenth international conference on artificial intelligence and law,” Artif. Intell. Law, vol. 28, no. 2, pp. 181–206, Jun. 2020. [CrossRef]

- “Between Constancy and Change: Legal Practice and Legal Education in the Age of Technology | Law in Context. A Socio-legal Journal.” https://journals.latrobe.edu.au/index.php/law-in-context/article/view/87 (accessed May 26, 2023).

- D. Garingan and A. J. Pickard, “Artificial Intelligence in Legal Practice: Exploring Theoretical Frameworks for Algorithmic Literacy in the Legal Information Profession,” Leg. Inf. Manag., vol. 21, no. 2, pp. 97–117, Jun. 2021. [CrossRef]

- H. Aman, “The Legal Information Landscape: Change is the New Normal,” Leg. Inf. Manag., vol. 19, no. 2, pp. 98–101, Jun. 2019. [CrossRef]

- “Artificial intelligence and the library of the future, revisited,” Stanford Libraries. https://library.stanford.edu/blogs/digital-library-blog/2017/11/artificial-intelligence-and-library-future-revisited (accessed May 26, 2023).

- “Legal Knowledge and Information Systems,” IOS Press, Jan. 01, 2010. https://www.iospress.com/catalog/books/legal-knowledge-and-information-systems-8 (accessed May 26, 2023).

- “Litigating Artificial Intelligence,” Emond Publishing. https://emond.ca/Store/Books/Litigating-Artificial-Intelligence (accessed May 26, 2023).

- I. Angelidis, I. Chalkidis, C. Nikolaou, P. Soursos, and M. Koubarakis, “Nomothesia: A Linked Data Platform for Greek Legislation,” 2018. Accessed: May 26, 2023. [Online]. Available: https://www.semanticscholar.org/paper/Nomothesia-%3A-A-Linked-Data-Platform-for-Greek-Angelidis-Chalkidis/365f1283d60a5ecbe787a90efe972f37caea61fa.

- V. Demertzi and K. Demertzis, “A Hybrid Adaptive Educational eLearning Project based on Ontologies Matching and Recommendation System,” arXiv:2007.14771, Oct. 2020. Accessed: Oct. 24, 2021. [Online]. Available: http://arxiv.org/abs/2007.14771.

- K. Makris, N. Gioldasis, N. Bikakis, and S. Christodoulakis, “Ontology Mapping and SPARQL Rewriting for Querying Federated RDF Data Sources,” in On the Move to Meaningful Internet Systems, OTM 2010, R. Meersman, T. Dillon, and P. Herrero, Eds., in Lecture Notes in Computer Science. Berlin, Heidelberg: Springer, 2010, pp. 1108–1117. [CrossRef]

- I. A. A.-Q. Al-Hadi, N. M. Sharef, N. Sulaiman, and N. Mustapha, “Review of the Temporal Recommendation System with Matrix Factorization,” p. 16.

- C. C. Aggarwal, “Neighborhood-Based Collaborative Filtering,” in Recommender Systems: The Textbook, C. C. Aggarwal, Ed., Cham: Springer International Publishing, 2016, pp. 29–70. [CrossRef]

- M. L. Bai, R. Pamula, and P. K. Jain, “Tourist Recommender System using Hybrid Filtering,” in 2019 4th International Conference on Information Systems and Computer Networks (ISCON), Aug. 2019, pp. 746–749. [CrossRef]

- G. Cui, J. Luo, and X. Wang, “Personalized travel route recommendation using collaborative filtering based on GPS trajectories,” Int. J. Digit. Earth, vol. 11, no. 3, pp. 284–307, 2018.

- V. Alieksieiev and B. Andrii, “Information Analysis and Knowledge Gain within Graph Data Model,” in 2019 IEEE 14th International Conference on Computer Sciences and Information Technologies (CSIT), Sep. 2019, pp. 268–271. [CrossRef]

- A. Alkhalil, M. A. E. Abdallah, A. Alogali, and A. Aljaloud, “Applying big data analytics in higher education: A systematic mapping study,” Int. J. Inf. Commun. Technol. Educ. IJICTE, vol. 17, no. 3, pp. 29–51, 2021.

- C. Fan, M. Chen, X. Wang, J. Wang, and B. Huang, “A Review on Data Preprocessing Techniques Toward Efficient and Reliable Knowledge Discovery From Building Operational Data,” Front. Energy Res., vol. 9, 2021, Accessed: May 31, 2022. [Online]. Available: https://www.frontiersin.org/article/10.3389/fenrg.2021.652801.

- S. B. Kotsiantis, D. Kanellopoulos, and P. E. Pintelas, “Data Preprocessing for Supervised Leaning,” Int. J. Comput. Inf. Eng., vol. 1, no. 12, pp. 4104–4109, Dec. 2007.

- R. Anil, V. Gupta, T. Koren, and Y. Singer, “Memory-Efficient Adaptive Optimization.” arXiv, Sep. 11, 2019. [CrossRef]

- M. Anantathanavit and M.-A. Munlin, “Radius Particle Swarm Optimization,” in 2013 International Computer Science and Engineering Conference (ICSEC), Sep. 2013, pp. 126–130. [CrossRef]

- A. Ferrari, L. Zhao, and W. Alhoshan, “NLP for Requirements Engineering: Tasks, Techniques, Tools, and Technologies,” in 2021 IEEE/ACM 43rd International Conference on Software Engineering: Companion Proceedings (ICSE-Companion), Feb. 2021, pp. 322–323. [CrossRef]

- J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.” arXiv, May 24, 2019. [CrossRef]

- Y. Liu et al., “Summary of ChatGPT/GPT-4 Research and Perspective Towards the Future of Large Language Models.” arXiv, May 10, 2023. [CrossRef]

- A. Aksenov, V. Borisov, D. Shadrin, A. Porubov, A. Kotegova, and A. Sozykin, “Competencies Ontology for the Analysis of Educational Programs,” in 2020 Ural Symposium on Biomedical Engineering, Radioelectronics and Information Technology (USBEREIT), Feb. 2020, pp. 368–371. [CrossRef]

- M. Ali and F. M. Falakh, “Semantic Web Ontology for Vocational Education Self-Evaluation System,” in 2020 Third International Conference on Vocational Education and Electrical Engineering (ICVEE), Jul. 2020, pp. 1–6. [CrossRef]

- D. Allemang and J. Hendler, “Chapter 2 - Semantic modeling,” in Semantic Web for the Working Ontologist (Second Edition), D. Allemang and J. Hendler, Eds., Boston: Morgan Kaufmann, 2011, pp. 13–25. [CrossRef]

- “Semantic Web,” W3C. https://www.w3.org/standards/semanticweb/ (accessed May 26, 2023).

- M. Deschênes, “Recommender systems to support learners’ Agency in a Learning Context: a systematic review,” Int. J. Educ. Technol. High. Educ., vol. 17, no. 1, p. 50, Oct. 2020. [CrossRef]

- S. Aich, S. Chakraborty, M. Sain, H. Lee, and H.-C. Kim, “A Review on Benefits of IoT Integrated Blockchain based Supply Chain Management Implementations across Different Sectors with Case Study,” in 2019 21st International Conference on Advanced Communication Technology (ICACT), Oct. 2019, pp. 138–141. [CrossRef]

- B. Ampel, M. Patton, and H. Chen, “Performance Modeling of Hyperledger Sawtooth Blockchain,” in 2019 IEEE International Conference on Intelligence and Security Informatics (ISI), Jul. 2019, pp. 59–61. [CrossRef]

- V. Aleksieva, H. Valchanov, and A. Huliyan, “Implementation of Smart-Contract, Based on Hyperledger Fabric Blockchain,” in 2020 21st International Symposium on Electrical Apparatus Technologies (SIELA), Jun. 2020, pp. 1–4. [CrossRef]

- “Solidity 0.8.20 documentation,” Solidity. https://docs.soliditylang.org/en/v0.8.20/ (accessed May 26, 2023).

- K. Demertzis, L. Iliadis, and P. Kikiras, “A Lipschitz-Shapley Explainable Defense Methodology Against Adversarial Attacks,” in Artificial Intelligence Applications and Innovations. AIAI 2021 IFIP WG 12.5 International Workshops, I. Maglogiannis, J. Macintyre, and L. Iliadis, Eds., in IFIP Advances in Information and Communication Technology. Cham: Springer International Publishing, 2021, pp. 211–227. [CrossRef]

- K. Demertzis, L. Iliadis, P. Kikiras, and E. Pimenidis, “An explainable semi-personalized federated learning model,” Integr. Comput.-Aided Eng., vol. 29, no. 4, pp. 335–350, Jan. 2022. [CrossRef]

- D. Hou, T. Driessen, and H. Sun, “The Shapley value and the nucleolus of service cost savings games as an application of 1-convexity,” IMA J. Appl. Math., vol. 80, no. 6, pp. 1799–1807, Sep. 2015. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).