Submitted: 30 May 2023

Posted: 31 May 2023

Abstract

Keywords:

1. Introduction

2. Related Works

3. Background

3.1. Intrusion Detection System

3.2. UGR’16 Dataset

4. Proposed Model

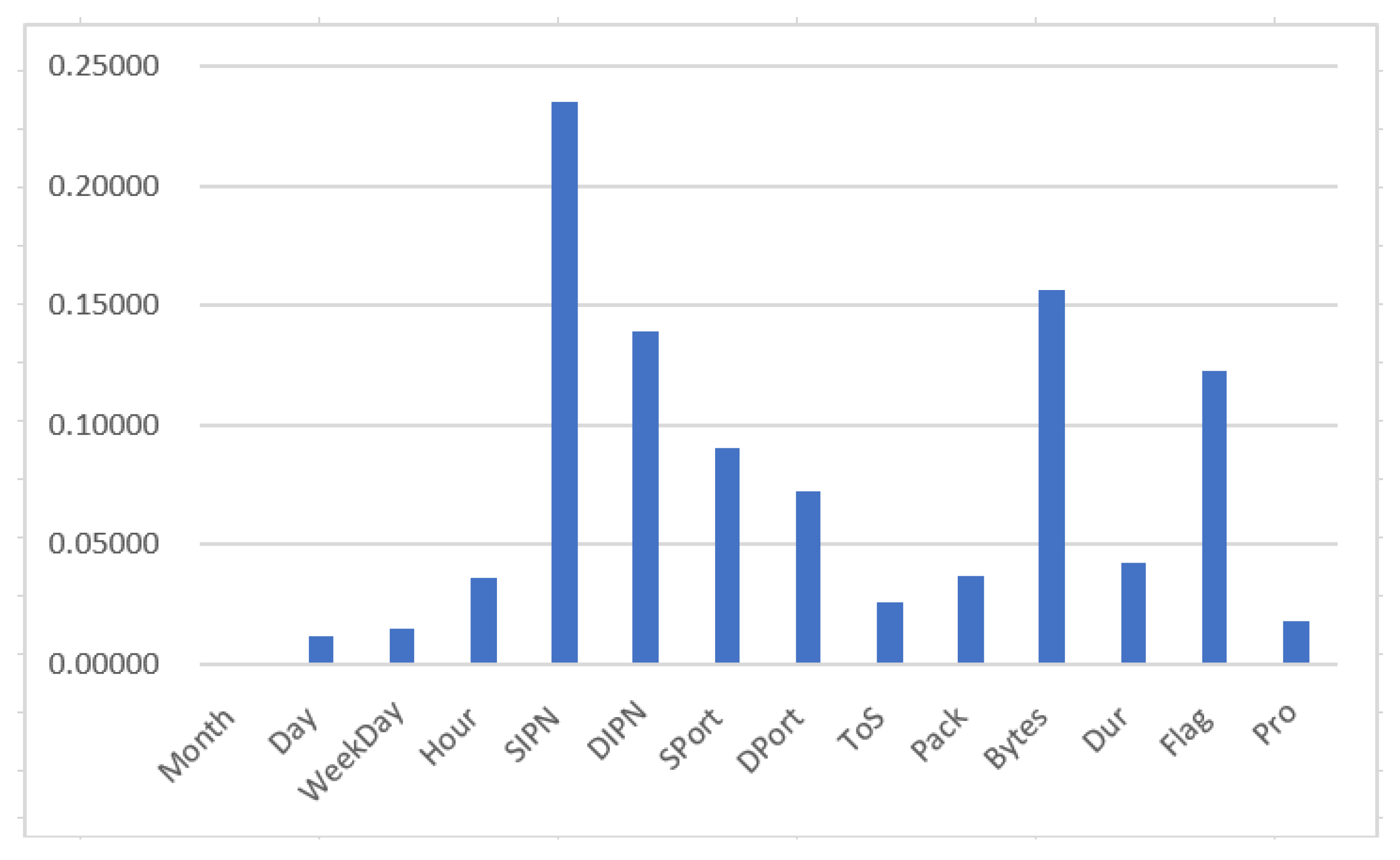

4.1. Data Preparation

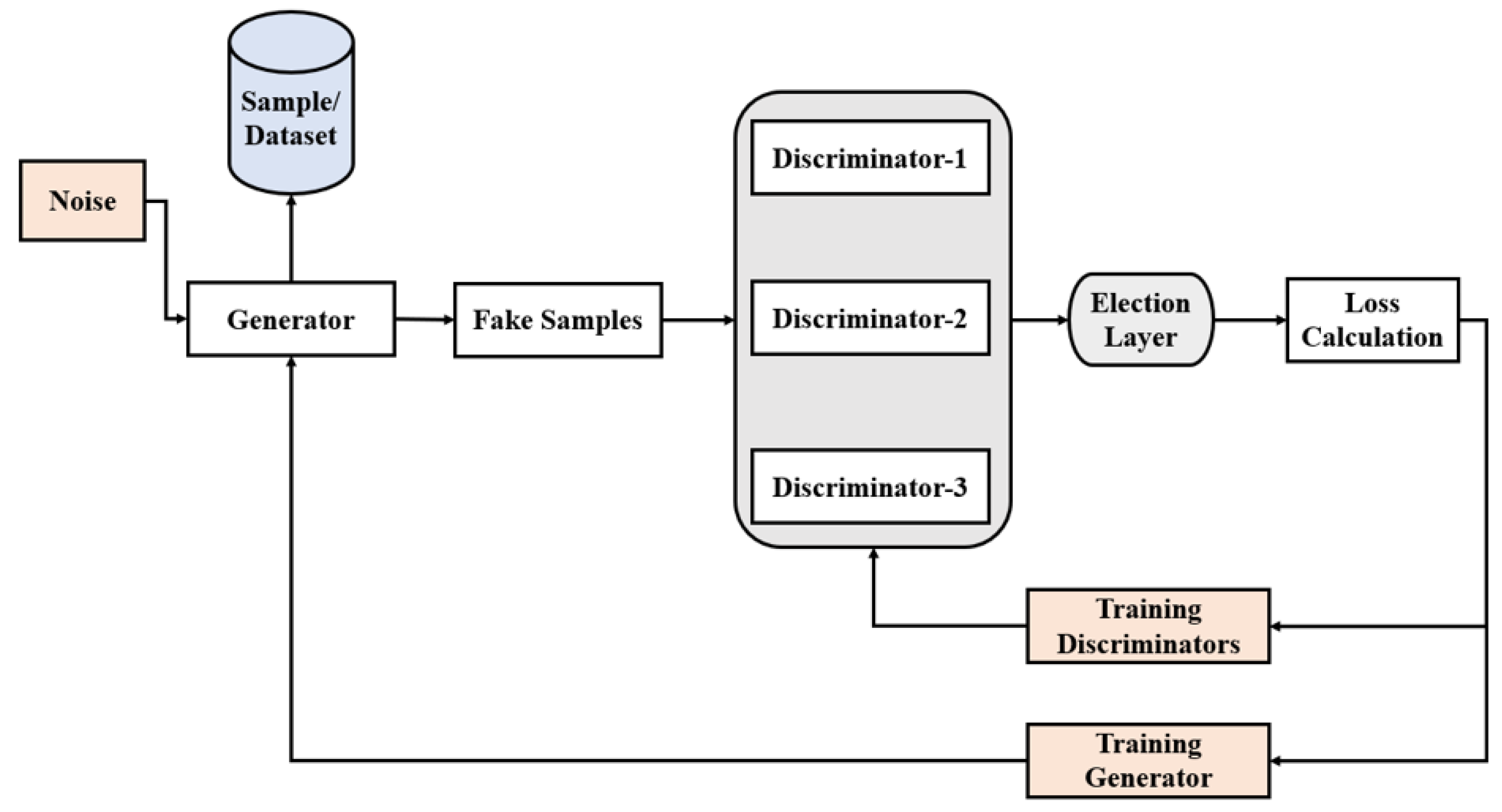

4.2. Setup of Proposed Model

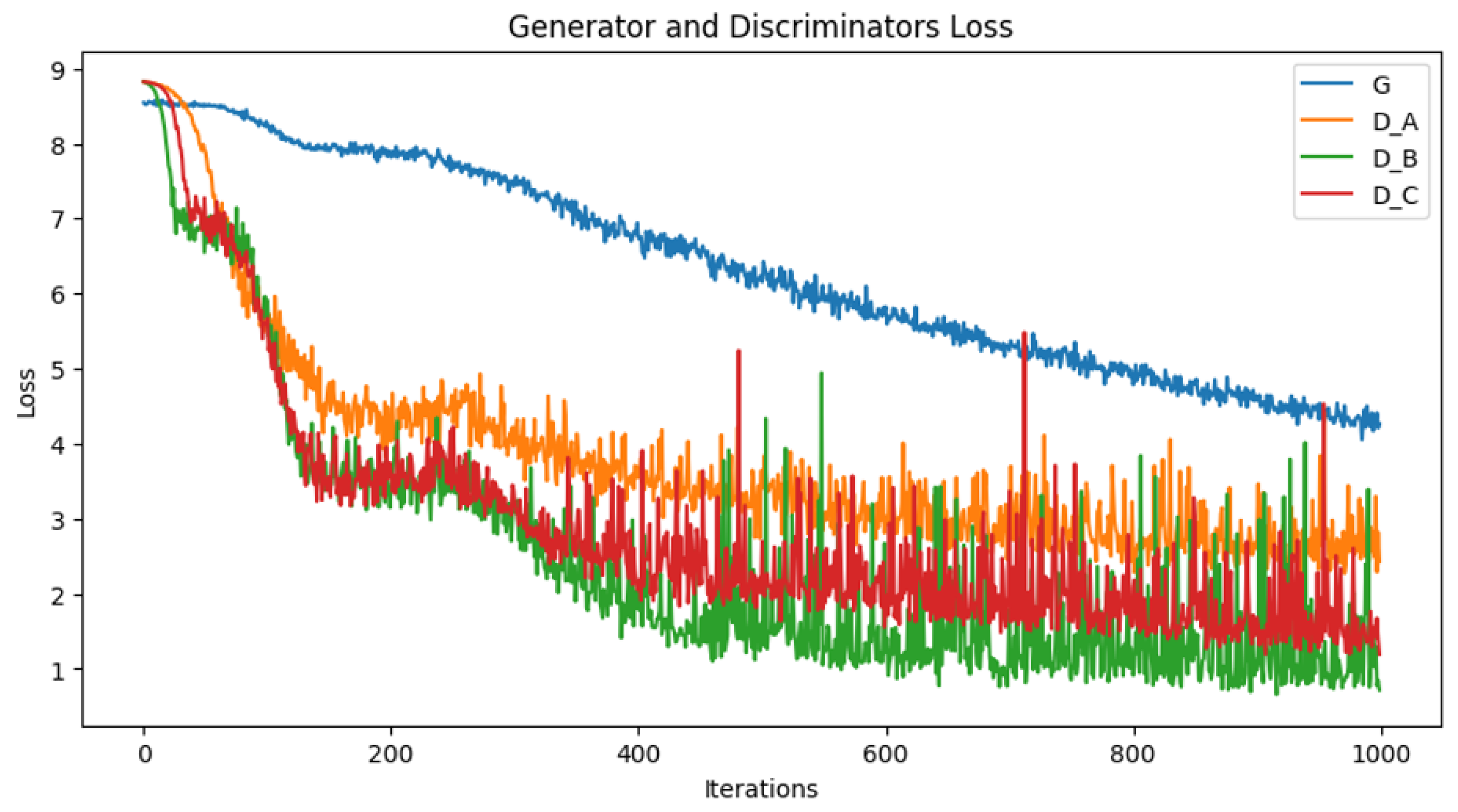

4.3. Training Phase

5. Experimental Results

5.1. Experimental Setup

5.2. Performance Metrics

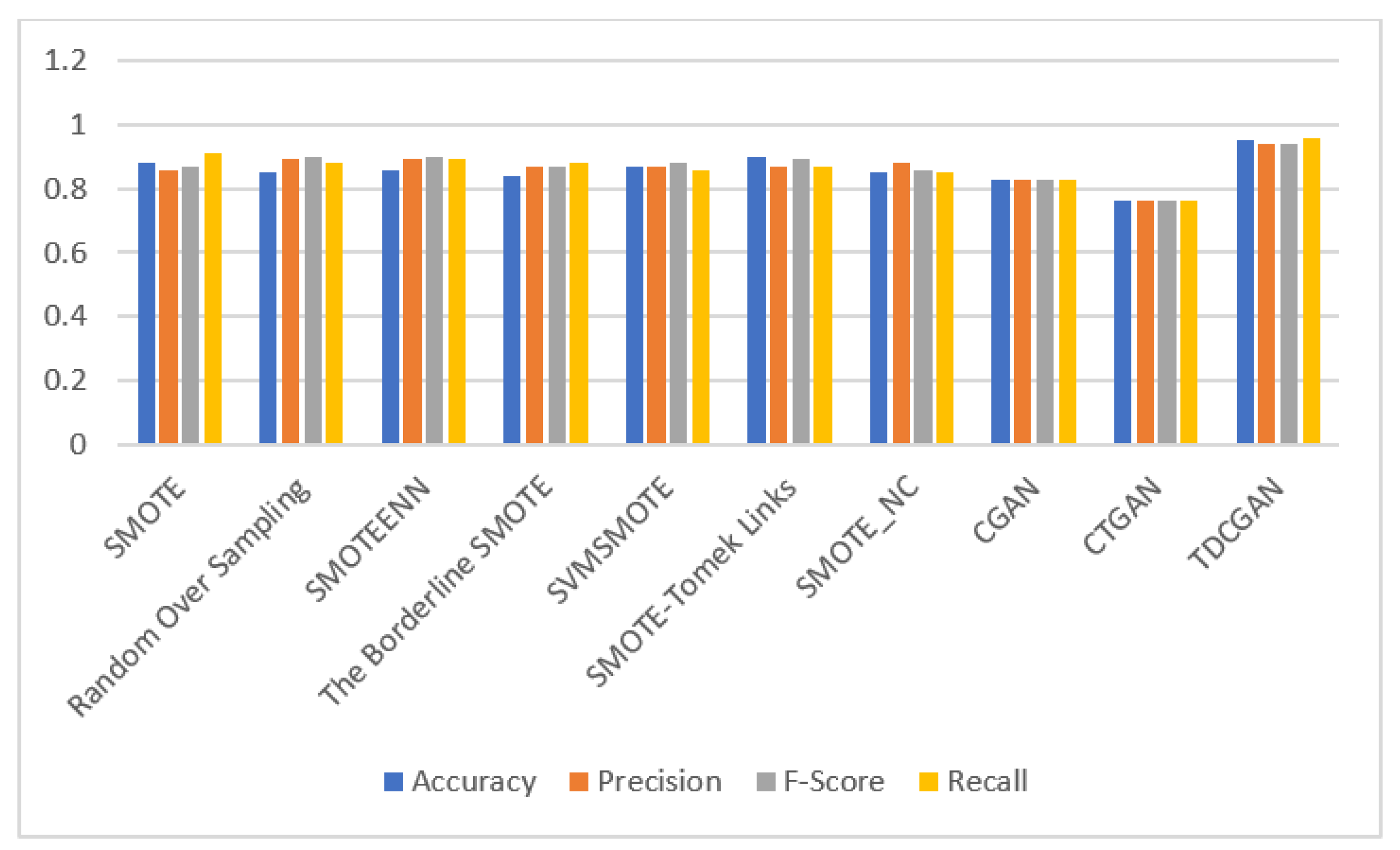

5.3. Experimental Results and Analysis

6. Conclusions and Future Works

Author Contributions

Funding

Conflicts of Interest

| Attack | Label |
|---|---|
| DoS11 | DoS |
| DoS53s | DoS |
| DoS53a | DoS |
| Scan11 | Scan11 |
| Scan44 | Scan44 |
| Botnet | Nerisbotnet |
| IP in blacklist | Blacklist |
| UDP Scan | Anomaly-udpscan |
| SSH Scan | Anomaly-sshscan |
| SPAM | Anomaly-spam |
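The mapping above is many-to-one: the three DoS variants all collapse into a single DoS class. A minimal Python sketch of that relabelling step, assuming the lowercase label strings used in the class-count table and assuming that any flow whose attack name is not listed counts as background traffic. `ATTACK_TO_LABEL` and `to_label` are hypothetical names, not taken from the paper.

```python
# Hypothetical lookup table: UGR'16 attack name -> class label.
# Lowercase labels follow the class-count table; this is an illustration only.
ATTACK_TO_LABEL = {
    "DoS11": "dos",
    "DoS53s": "dos",
    "DoS53a": "dos",
    "Scan11": "scan11",
    "Scan44": "scan44",
    "Botnet": "nerisbotnet",
    "IP in blacklist": "blacklist",
    "UDP Scan": "anomaly-udpscan",
    "SSH Scan": "anomaly-sshscan",
    "SPAM": "anomaly-spam",
}


def to_label(attack: str) -> str:
    """Return the class label for an attack name.

    Assumption: names not in the table are treated as background traffic.
    """
    return ATTACK_TO_LABEL.get(attack, "background")


print([to_label(a) for a in ["DoS11", "DoS53a", "Scan44", "Botnet"]])
# ['dos', 'dos', 'scan44', 'nerisbotnet']
```

Ten attack names therefore yield eight distinct attack classes, plus background.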

| From | To | Class Label | Count | Percentage |
|---|---|---|---|---|
| 07/27/2016 | 07/31/2016 | background | 197185 | 98.5% |
| 07/27/2016 | 07/31/2016 | dos | 1169 | 0.6% |
| 07/27/2016 | 07/31/2016 | scan44 | 578 | 0.3% |
| 07/27/2016 | 07/31/2016 | blacklist | 545 | 0.3% |
| 07/27/2016 | 07/31/2016 | nerisbotnet | 227 | 0.1% |
| 07/27/2016 | 07/31/2016 | anomaly-spam | 170 | 0.1% |
| 07/27/2016 | 07/31/2016 | scan11 | 126 | 0.1% |
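To make the degree of imbalance concrete, the sketch below recomputes the percentage column from the counts and reports the ratio between the largest and smallest class. The counts are copied from the table; the function names are hypothetical. The counts sum to exactly 200,000, and rounding to one decimal gives 98.6% for background (the table lists 98.5%, which matches truncation rather than rounding); every other row matches.

```python
# Class counts copied from the table above (07/27/2016 to 07/31/2016).
COUNTS = {
    "background": 197185,
    "dos": 1169,
    "scan44": 578,
    "blacklist": 545,
    "nerisbotnet": 227,
    "anomaly-spam": 170,
    "scan11": 126,
}


def class_shares(counts: dict[str, int]) -> dict[str, float]:
    """Percentage of samples in each class, rounded to one decimal place."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


def imbalance_ratio(counts: dict[str, int]) -> float:
    """Size of the largest class divided by the size of the smallest class."""
    return max(counts.values()) / min(counts.values())


if __name__ == "__main__":
    print(sum(COUNTS.values()))  # 200000
    print(class_shares(COUNTS))
    print(round(imbalance_ratio(COUNTS)))  # 1565
```

A background-to-scan11 ratio of roughly 1565:1 is what makes plain classifiers drift toward the majority class, and motivates the oversampling baselines compared later.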

| Unit | Description |
|---|---|
| Processor | Intel® Xeon® |
| CPU | 2.30 GHz, 2 CPUs |
| RAM | 12 GB |
| OS | |
| Packages | TensorFlow 2.6.0 |

| Accuracy | Precision | F1 Score | Recall |
|---|---|---|---|
| 0.95 | 0.94 | 0.94 | 0.96 |
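The four scores above can be computed from true and predicted class labels. The following is a minimal pure-Python sketch, assuming macro averaging (an unweighted mean over classes); the averaging scheme behind the reported numbers is not stated here, so treat that choice as an assumption. Because macro F1 is the mean of per-class F1 values, it generally differs from the harmonic mean of the macro precision and recall (for the row above, 2 × 0.94 × 0.96 / 1.90 ≈ 0.95, while the reported F1 is 0.94). `macro_metrics` is a hypothetical helper name.

```python
from collections import Counter


def macro_metrics(y_true: list[str], y_pred: list[str]) -> dict[str, float]:
    """Accuracy plus macro-averaged precision, recall and F1 (assumed averaging)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    classes = sorted(set(y_true) | set(y_pred))
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # true positives per class
    pred_count = Counter(y_pred)  # TP + FP per class
    true_count = Counter(y_true)  # TP + FN per class

    precisions, recalls, f1s = [], [], []
    for c in classes:
        p = tp[c] / pred_count[c] if pred_count[c] else 0.0
        r = tp[c] / true_count[c] if true_count[c] else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)

    n = len(classes)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


if __name__ == "__main__":
    y_true = ["dos", "dos", "scan11", "background"]
    y_pred = ["dos", "scan11", "scan11", "background"]
    m = macro_metrics(y_true, y_pred)
    print({k: round(v, 3) for k, v in m.items()})
    # {'accuracy': 0.75, 'precision': 0.833, 'recall': 0.833, 'f1': 0.778}
```

In the toy example, precision and recall are both 0.833 yet macro F1 is 0.778, which illustrates the point above.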

| Model | Accuracy | Precision | F1 Score | Recall |
|---|---|---|---|---|
| SMOTE | 0.88 | 0.86 | 0.87 | 0.91 |
| Random Oversampling | 0.85 | 0.89 | 0.90 | 0.88 |
| SMOTEENN | 0.86 | 0.89 | 0.90 | 0.89 |
| Borderline-SMOTE | 0.84 | 0.87 | 0.87 | 0.88 |
| SVMSMOTE | 0.89 | 0.90 | 0.91 | 0.89 |
| SMOTE-Tomek Links | 0.90 | 0.87 | 0.89 | 0.87 |
| SMOTE-NC | 0.85 | 0.88 | 0.86 | 0.85 |
| CGAN | 0.83 | 0.83 | 0.83 | 0.83 |
| CTGAN | 0.76 | 0.76 | 0.76 | 0.76 |
| TDCGAN | 0.95 | 0.94 | 0.94 | 0.96 |
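Most baselines in the comparison table are SMOTE variants. As a reference point, here is a minimal pure-Python sketch of the core SMOTE step (Chawla et al., 2002): each synthetic sample is placed at a random point on the segment between a minority sample and one of its k nearest minority-class neighbours. This illustrates the technique only; it is not the implementation behind the reported numbers, and `smote` with its parameters is a hypothetical helper. Random oversampling, by contrast, simply duplicates existing minority samples, while Borderline-SMOTE and SVMSMOTE restrict interpolation to minority samples near the class boundary.

```python
import random


def smote(minority: list[list[float]], n_new: int, k: int = 5,
          seed: int = 0) -> list[list[float]]:
    """Generate n_new synthetic minority samples by SMOTE-style interpolation."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)

    def dist2(a: list[float], b: list[float]) -> float:
        # Squared Euclidean distance (ordering is the same as for Euclidean).
        return sum((x - y) ** 2 for x, y in zip(a, b))

    # Precompute each sample's k nearest minority neighbours, excluding itself.
    neighbours = []
    for i, x in enumerate(minority):
        others = sorted((j for j in range(len(minority)) if j != i),
                        key=lambda j: dist2(x, minority[j]))
        neighbours.append(others[:k])

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))  # random base sample
        j = rng.choice(neighbours[i])     # one of its k nearest neighbours
        gap = rng.random()                # interpolation factor in [0, 1)
        synthetic.append([a + gap * (b - a)
                          for a, b in zip(minority[i], minority[j])])
    return synthetic


if __name__ == "__main__":
    minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    new_points = smote(minority, n_new=6, k=2, seed=42)
    print(len(new_points))  # 6
```

Because new points are convex combinations of existing ones, SMOTE never leaves the region spanned by the minority samples; a generative model such as a GAN is not bound by that restriction.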

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).