Submitted: 01 June 2023

Posted: 01 June 2023


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Design

2.3. Materials

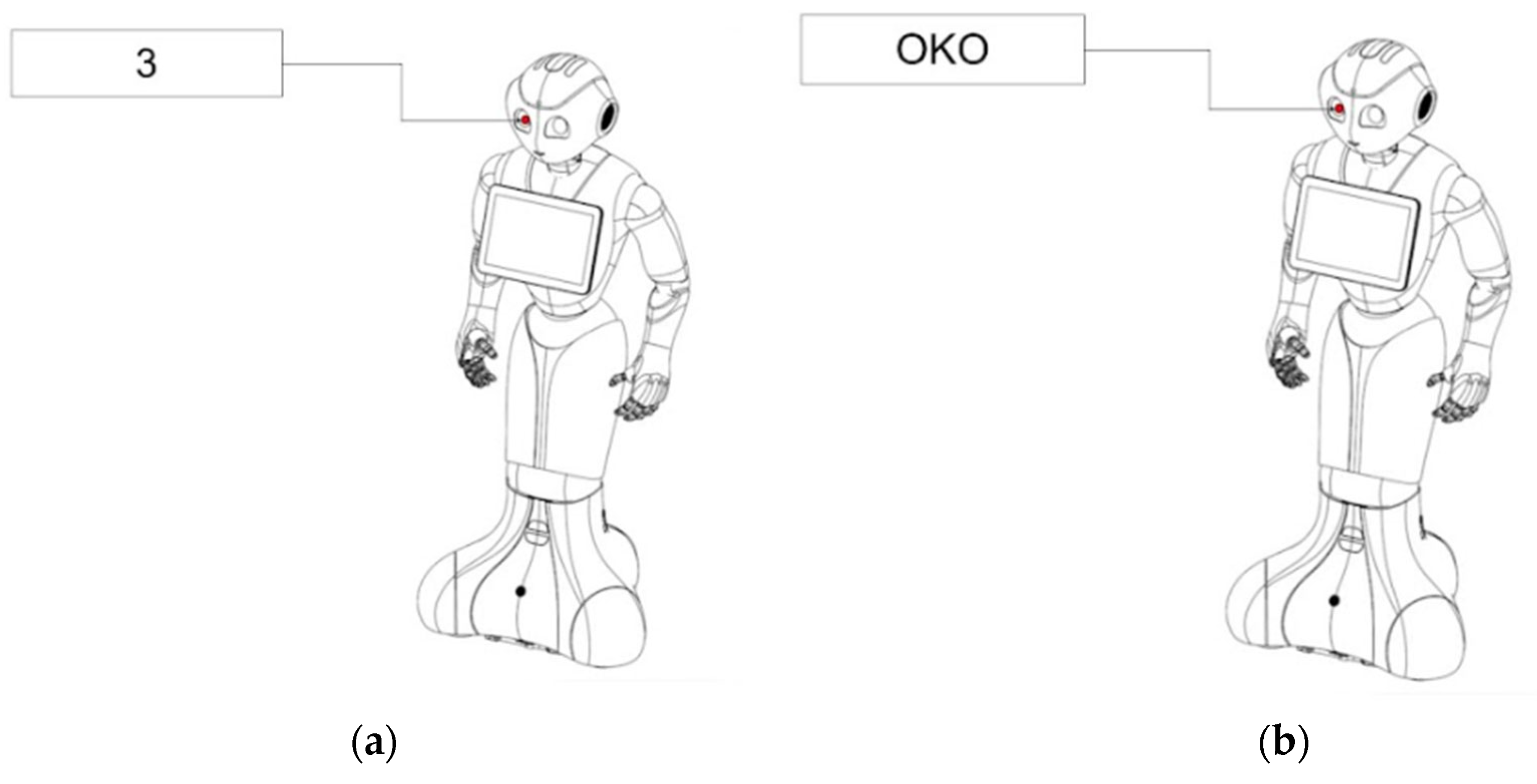

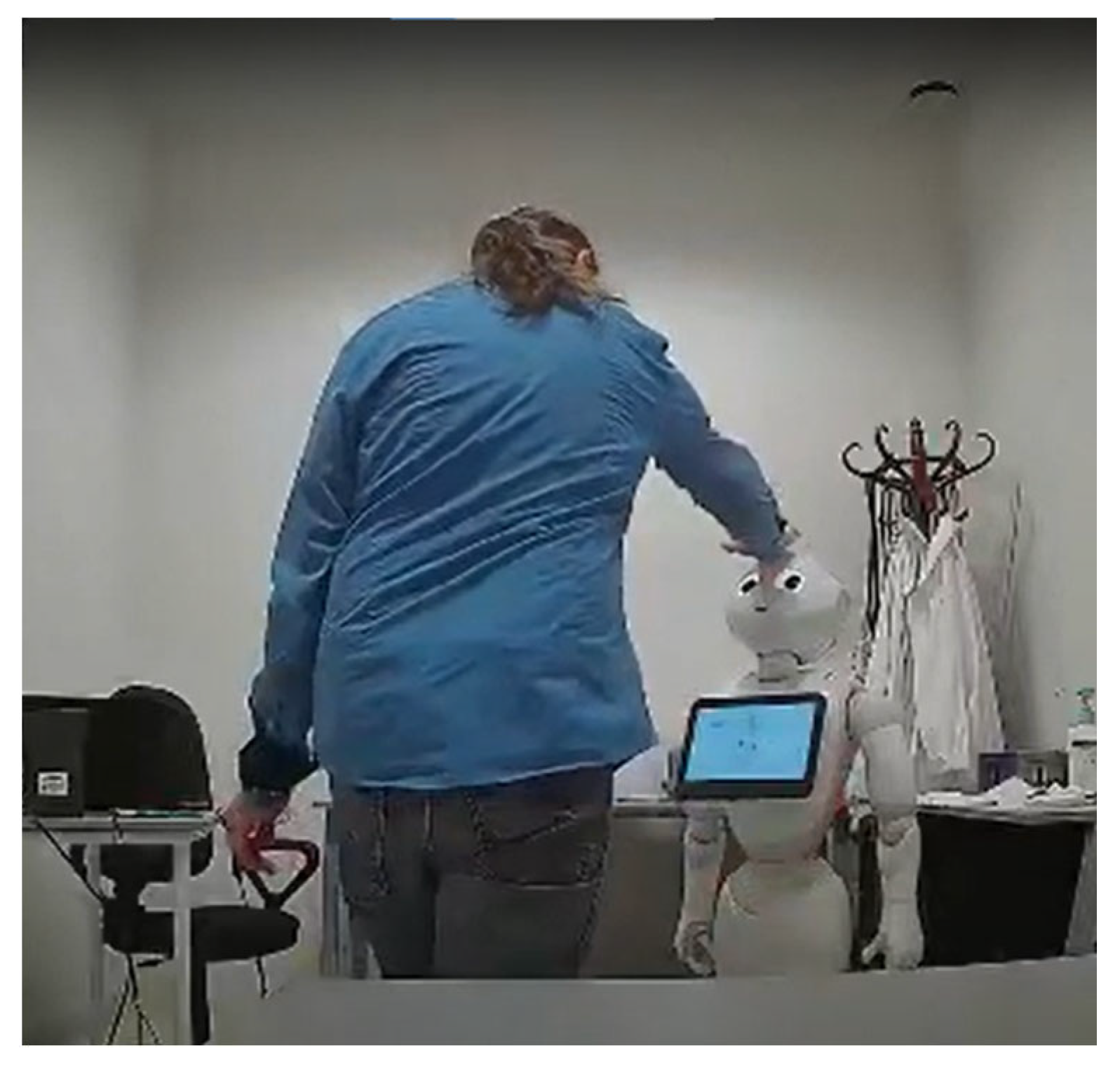

2.3.1. Pepper Humanoid Robot

2.3.2. Skin Conductance Response Measure and Signal Processing

2.3.3. Final Questionnaire

2.4. Procedure

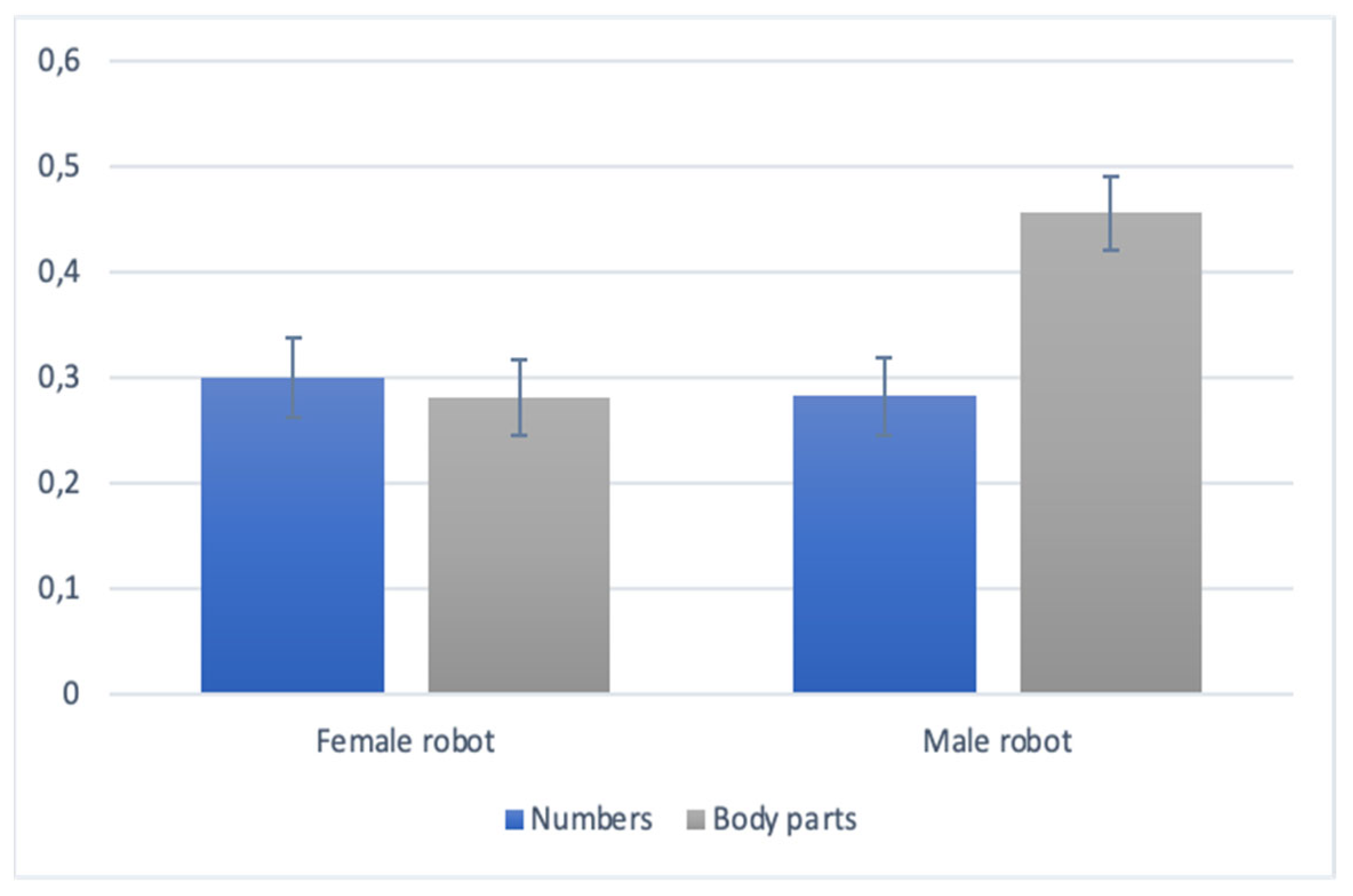

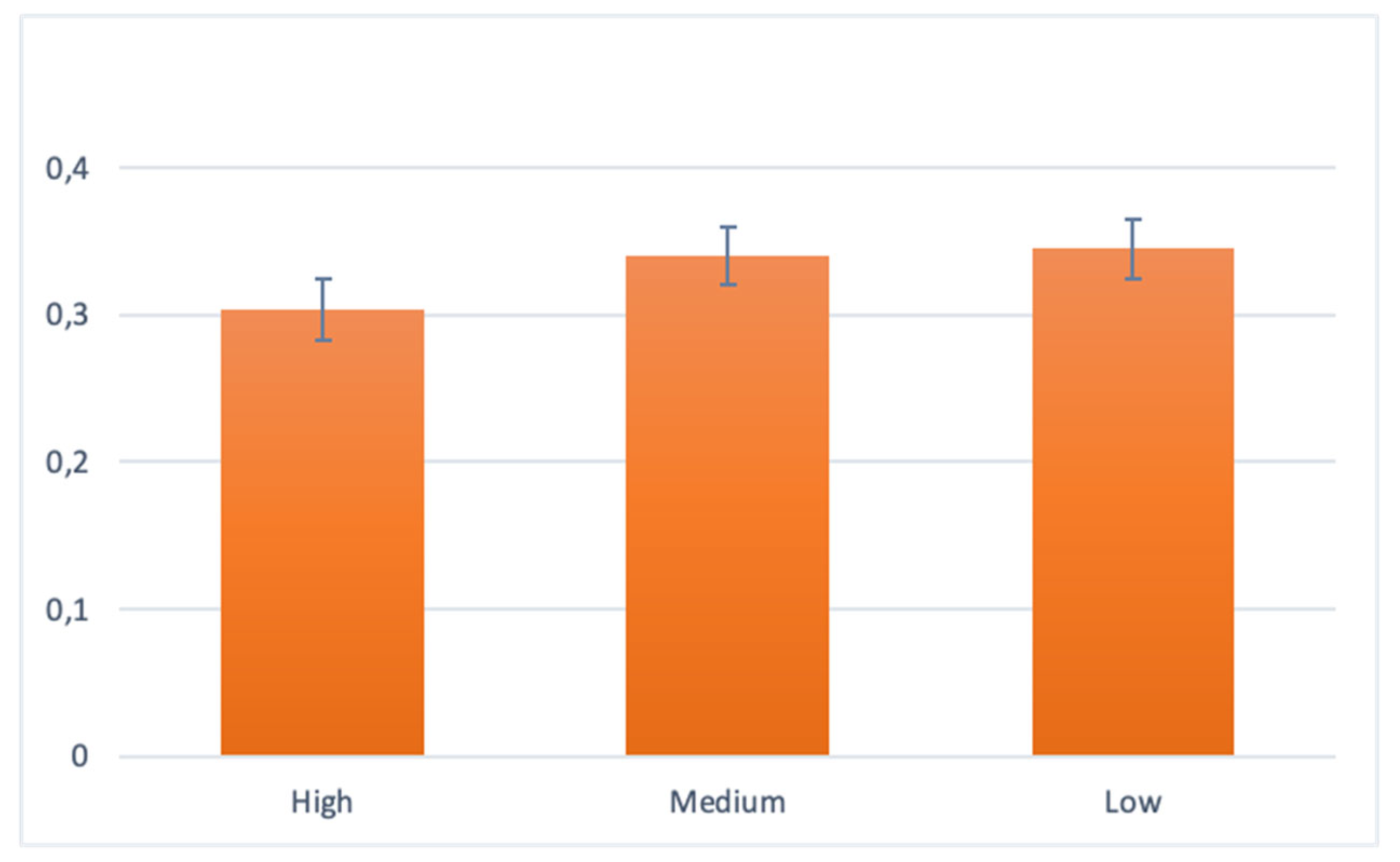

3. Results

4. Discussion

4.1. Body Part Availability

4.2. Anthropomorphic Framing of Body Parts

4.3. Anthropomorphic Framing of Gender

4.4. Attitudes Towards Robots

4.5. Significance

4.6. Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).