4.2. Local Optimization

In the following, we introduce four heuristics, each of which retains a subset (of size k) of the candidate services of each task. The retained services are the most promising items in terms of each . In this work, we assume that the higher the value of a QoS level, the better the service.

4.2.1. Fuzzy Pareto Dominance Heuristic (H1)

Many alternatives are available for implementing the fuzzy version of Pareto dominance [21, 31, 32, 33]. To compare two r-dimensional vectors and , we use the implementation specified in [33], since it is slightly more effective than the remaining alternatives and has no hyper-parameters (in contrast to the others). Its definition is given in Equation 12. The elementary fuzzy dominance (EFD) compares two scalar QoS values using Equation 11.

We assume that and represent the values of the QoS attribute of two existing services S and S’ (respectively). To compare S and S’ with respect to all QoS attributes, we use Equation 13 (aggregated fuzzy dominance, or AFD for short).

The fuzzy contest function shown in Equation 14 (FC for short) inspects the fuzzy dominance power of a service w with respect to another service q.

Equation 15 computes the sorting score of a service by comparing it with the remaining candidate services of the current task (the larger the score, the better the rank).

In the experimental study, we sort the services of each task in decreasing order of the score given by Equation 15 and keep the first k elements.

We illustrate the principle of H1 by comparing the services and of Table I:

If we apply Equation

13, we get

.

On the other hand: Consequently, , , ,

.

4.2.2. Zero Order Stochastic Dominance (H2)

This heuristic compares the services using the raw QoS values [34] (see Equation 16).

To compare two services S and S’ with respect to all QoS attributes, we use Equation 18 (aggregated zero order stochastic dominance, or AZSD for short).

To perform the majority vote (within a task), we need to compare each pair of services. To do so, we leverage the contest function shown in Equation 19, termed the aggregated zero order stochastic dominance contest (AZSDC). AZSDC returns 1 if dominates (in the sense of AZSD); otherwise, it returns 0.

Equation 20 calculates the sorting score of a service by comparing it with the remaining candidate services of a given task (the larger the score, the better the rank).

In the experiments, we sort the services of each task in decreasing order of the score given by Equation 20 and keep the first k elements.
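The ranking step described above (score each service against the other candidates of its task, sort by decreasing score, keep the first k) can be sketched as follows. This is a minimal illustration in Python, not the authors' implementation. The names `top_k_by_contest` and `toy_contest` are hypothetical, the score is assumed to be the sum of the pairwise contest outcomes, and `toy_contest` is only a stand-in for AZSDC: it compares one plain value per attribute, whereas Equations 16 to 19 operate on the QoS values of the paper.

```python
from typing import Callable, List, Sequence, TypeVar

S = TypeVar("S")


def top_k_by_contest(
    services: Sequence[S],
    contest: Callable[[S, S], float],
    k: int,
) -> List[S]:
    """Rank services by summed pairwise contest outcomes and keep the best k."""
    scores = []
    for i, s in enumerate(services):
        # Assumed score: sum of the contest results of s against every
        # other candidate service of the same task.
        scores.append(sum(contest(s, q) for j, q in enumerate(services) if j != i))
    # Sort indices by decreasing score; ties keep their original order.
    order = sorted(range(len(services)), key=lambda i: -scores[i])
    return [services[i] for i in order[:k]]


def toy_contest(s: Sequence[float], q: Sequence[float]) -> int:
    """Hypothetical stand-in for AZSDC: 1 if s beats q on most attributes."""
    wins = sum(a > b for a, b in zip(s, q))
    losses = sum(a < b for a, b in zip(s, q))
    return 1 if wins > losses else 0


if __name__ == "__main__":
    # Three made-up services with three QoS attributes (higher is better).
    task = [(0.9, 0.2, 0.7), (0.5, 0.6, 0.8), (0.1, 0.1, 0.3)]
    print(top_k_by_contest(task, toy_contest, k=2))
    # -> [(0.5, 0.6, 0.8), (0.9, 0.2, 0.7)]
```

Passing the contest as a function keeps the ranking code identical across heuristics; only the pairwise comparison changes.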

4.2.3. First Order Stochastic Dominance (H3)

First order stochastic dominance (H3) performs the same steps as H2, except that it processes the cumulative distribution (CumulDistr) of the sample instead of the raw QoS values. If we assume that is the QoS sample of the attribute of a given service S, then the cumulative distribution of is approximated as follows:

.

In addition, we increase the resolution (size) of and set it to ; the added entries (i’) have a score equal to .

The remaining equations have the same expressions as in H2.
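To make the idea of comparing cumulative distributions concrete, the sketch below computes a standard empirical cumulative distribution of a QoS sample and applies the textbook first-order dominance test for the "higher is better" convention. It is an illustration under stated assumptions: the function names are hypothetical, the empirical CDF is the usual step-function estimate and may differ from the approximation used in the paper, and the resolution increase described above is not reproduced.

```python
from bisect import bisect_right
from typing import List, Sequence


def empirical_cdf(sample: Sequence[float], grid: Sequence[float]) -> List[float]:
    """F(x) = fraction of observations <= x, evaluated at each x in grid."""
    ordered = sorted(sample)
    return [bisect_right(ordered, x) / len(ordered) for x in grid]


def first_order_dominates(x: Sequence[float], y: Sequence[float]) -> bool:
    """Textbook first-order dominance when larger QoS values are better.

    x dominates y if the CDF of x never lies above the CDF of y and lies
    strictly below it somewhere (x puts more mass on high values).
    """
    grid = sorted(set(x) | set(y))  # common evaluation points
    fx, fy = empirical_cdf(x, grid), empirical_cdf(y, grid)
    never_above = all(a <= b for a, b in zip(fx, fy))
    strictly_below = any(a < b for a, b in zip(fx, fy))
    return never_above and strictly_below


if __name__ == "__main__":
    print(empirical_cdf([3, 1, 2, 2], [0, 1, 2, 3, 4]))
    # -> [0.0, 0.25, 0.75, 1.0, 1.0]
    print(first_order_dominates([5, 6, 7], [1, 2, 3]))  # -> True
```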

In the experiments, we sort the services of each task in decreasing order of the score given by Equation 24 and keep the first k elements.

4.2.4. Majority Interval Dominance (H4)

In this heuristic, we first compute the median interval for each QoS attribute of each service (this means that the of each service is represented with an interval ). Then, we rank the services by comparing these representative intervals. To elucidate this idea, we consider the services S1 and S2 of Table I. The median interval of S1 is [5,30], and the corresponding one of S2 is [18,40]. To compare the median intervals, we use the function presented in [19]; this function is defined in Equation 24 and is termed majority interval dominance (MID). (We assume that the compared services and belong to the task j, and that the current QoS attribute is d; is represented with and is represented with .)

Where Relu (rectified linear unit) [20] is the activation function used in deep learning, i.e., Relu(x) = max(0, x).

For instance, if we assume that , then , and .

The aggregated majority interval dominance is shown in Equation 26.

represent the median intervals of the compared QoS attributes (having the rank). As in H1, H2, and H3, a contest function is defined (Equation 27).

In the experiments, we sort the services of each task in decreasing order of the score given by Equation 28 and keep the first k elements.

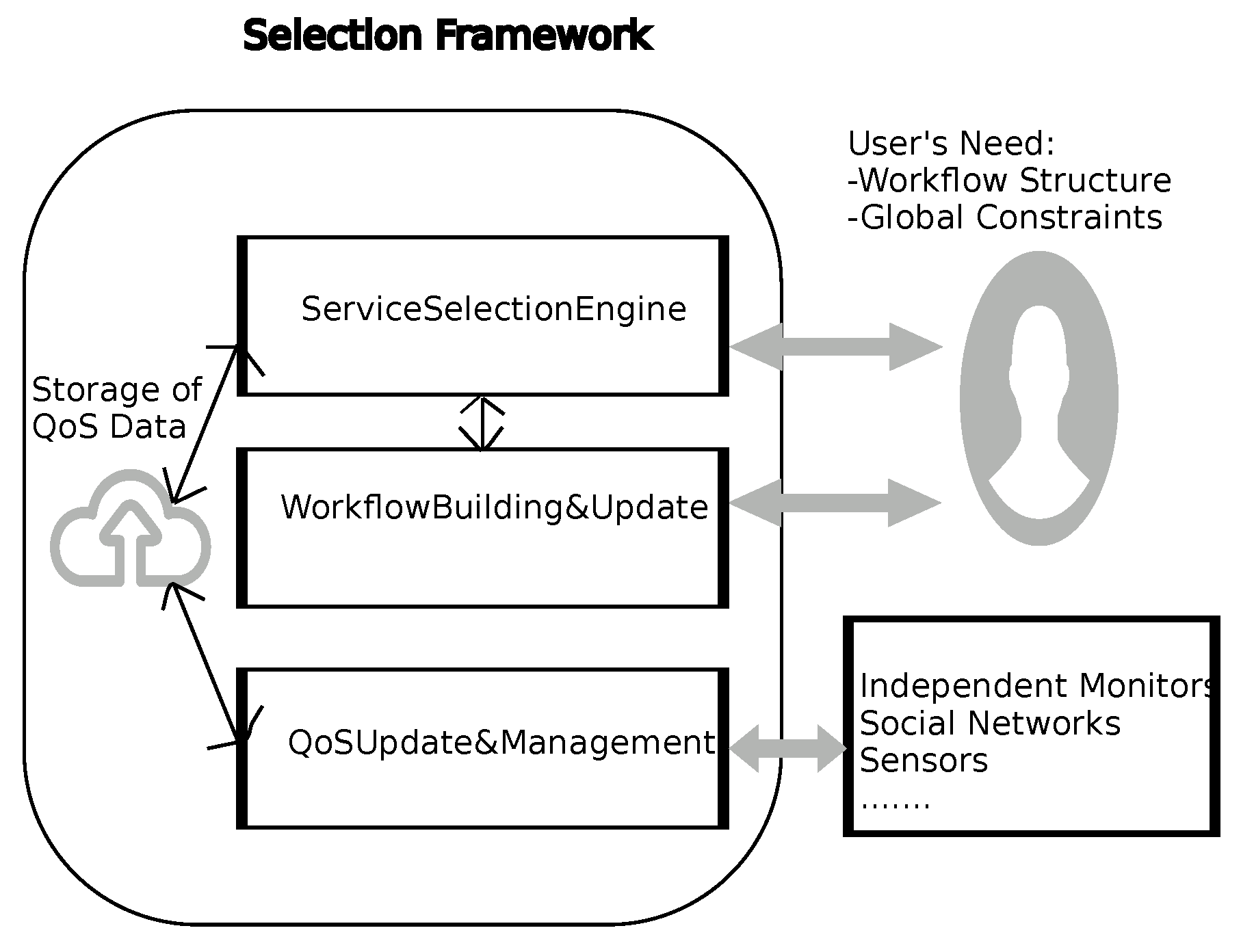

4.3. Global Optimization

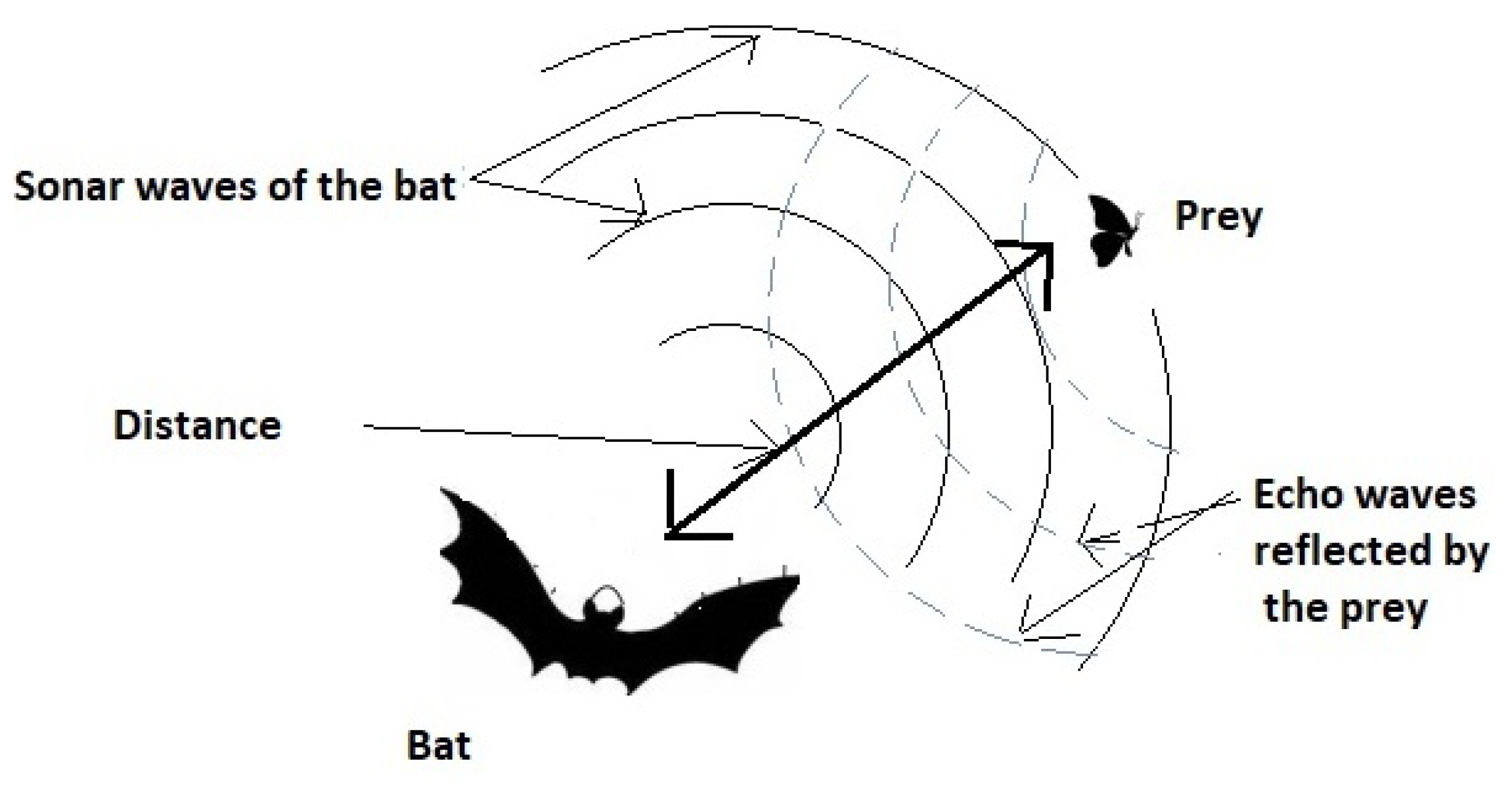

Once the n lists are produced by the first step of the method, we perform a global search by composing and assessing service compositions. To do so, we leverage a swarm intelligence metaheuristic that adapts the bat algorithm to our discrete context. This discrete optimization algorithm is chosen because of its ability to combine local search and global search in a harmonious way. The bat algorithm [35] is a promising metaheuristic for continuous optimization. Its metaphor is based on the echolocation behaviour of micro-bats, which can vary the frequency, loudness, and pulse rate of their emissions to capture prey (see Figure 3).

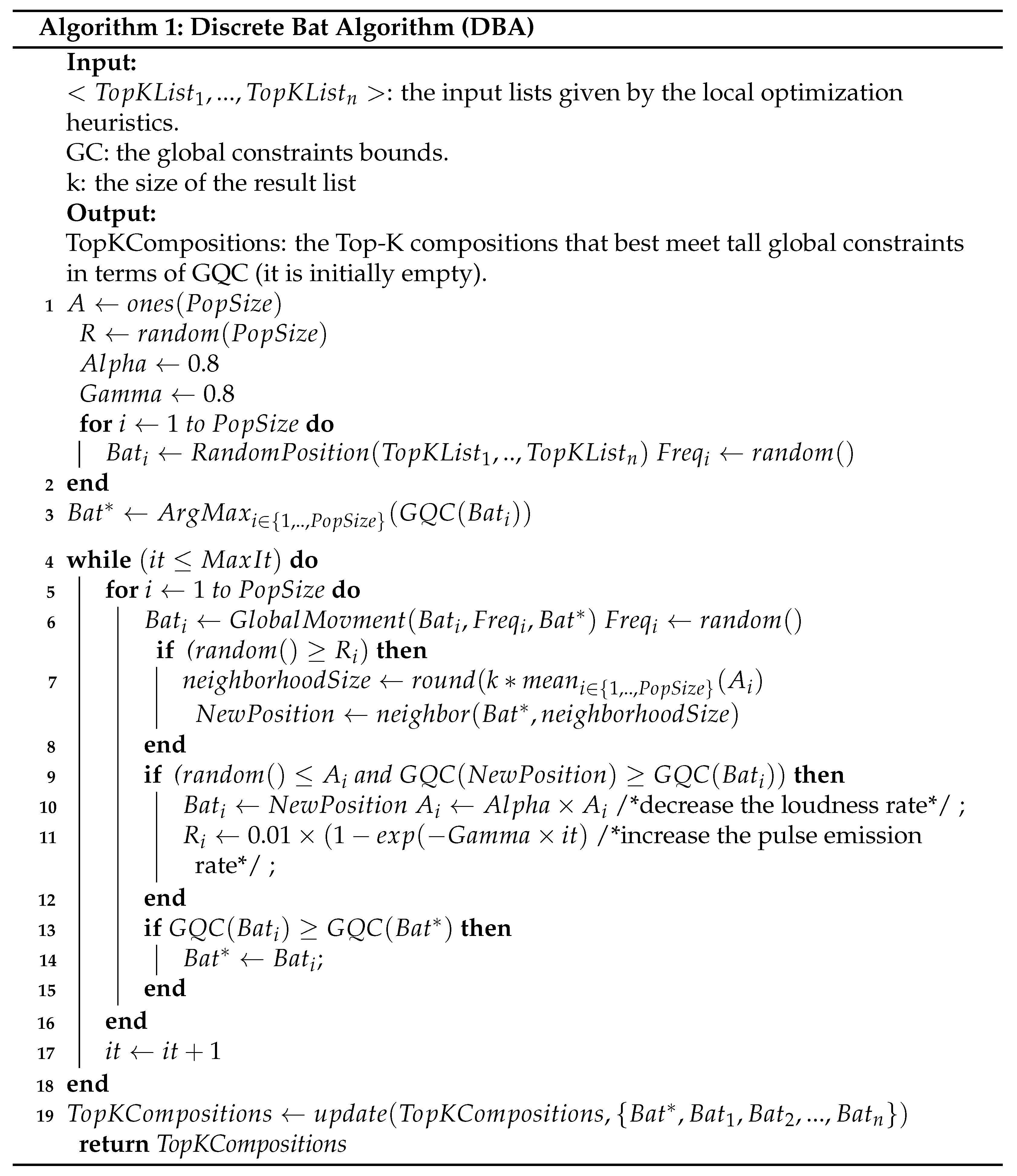

Before giving the pseudo-code of the discrete bat algorithm, we explain all its technical parameters.

Pop: a matrix of PopSize*n dimensions that represents all the virtual bats. .

: the position of the best bat.

A: the loudness of the chirp. It is a vector of PopSize random numbers in [0,1] that controls the neighborhood size of the local search. It is decreased during the execution of the metaheuristic.

Freq: the frequencies of the bats. It is a matrix of PopSize*n dimensions that controls the size of the moving step during the global search phase. It is initialized with random values between 0 and 1.

R: the pulse emission rate of each bat. Technically, it is an n-dimensional vector of random numbers (in [0,1]) that controls the execution of the local search.

Alpha: the factor by which A is decreased.

Gamma: a factor that controls how fast the pulse emission rate R increases.

MaxIt: the maximum number of iterations of DBA.

The pseudo-code of DBA can be explained as follows:

Line 1: for each bat, we initialize its loudness, its pulse emission rate, and their updating rates Alpha and Gamma.

Line 2: for each bat, we randomly initialize its position and its frequency, which is used as a displacement step in GlobalMovment (line 6). is a real value belonging to [0,1].

Line 3: we compute the best bat position of the swarm in terms of GQC and record it as the best position of the swarm.

Lines 4-18: this is the main loop of the metaheuristic; it consists of MaxIt iterations.

Lines 5-16: this loop iterates over all the bats.

Line 6: this function creates a new composition by moving toward the best solution with a random step. More specifically, for each component (task) j of a given bat i, we replace it with the corresponding value in with a probability equal to ( with a probability). The frequency of each bat is changed after that.

Line 7: with a probability , we create a neighborhood centered on the best bat .

The width of this neighborhood is equal to k times the average of all the possible loudnesses (this width is termed the spread); then, we create a new composition NewPosition as follows:

for each with a probability

. Knowing that and .

For instance, if a task j is constituted of the following ranked services , and we assume that (it is ranked third in the list), and , then the neighborhood of S4, according to line 7, is equal to . The probability of getting each of them as a value for is , respectively (since we approximate the Gaussian function for these three observations).

In lines 9-12, we accept the aforementioned solution NewPosition (i.e., we update ), with a probability . In addition, NewPosition must have a fitness better than that of .

We then decrease the loudness and increase the pulse emission rate, which reduces the chances of performing the local search (line 7) in subsequent iterations.

In lines 13-15, we update the best solution if the current bat has a better fitness.
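The walkthrough above can be condensed into the following Python sketch. It is a hedged reading of the description, not the authors' pseudo-code, and every detail that the text leaves unspecified is an assumption: a position holds one rank index per task, the component of the best bat is copied with probability Freq[i][j], the local search is triggered when a random draw exceeds the bat's pulse rate and accepted with probability equal to its loudness (as in the standard bat algorithm), one pulse rate is kept per bat, the loudness and pulse rate follow the standard bat algorithm updates, and the spread is rounded to at least 1. The names `discrete_bat_sketch` and `gaussian_local_move` are hypothetical, and `fitness` stands in for GQC.

```python
import math
import random
from typing import Callable, List

Position = List[int]  # one rank index per task, each in [0, k)
LocalMove = Callable[[Position, float, random.Random], Position]


def gaussian_local_move(k: int) -> LocalMove:
    """Build a local step that redraws each rank near the best bat's rank."""
    def move(best: Position, mean_loudness: float, rng: random.Random) -> Position:
        spread = max(1, round(k * mean_loudness))  # assumed rounding of the width
        new = []
        for b in best:
            ranks = list(range(max(0, b - spread), min(k - 1, b + spread) + 1))
            # Gaussian weights centered on the best bat's rank.
            weights = [math.exp(-0.5 * ((r - b) / spread) ** 2) for r in ranks]
            new.append(rng.choices(ranks, weights)[0])
        return new
    return move


def discrete_bat_sketch(fitness: Callable[[Position], float], local_move: LocalMove,
                        n: int, k: int, pop_size: int, max_it: int,
                        alpha: float = 0.9, gamma: float = 0.9,
                        seed: int = 0) -> Position:
    rng = random.Random(seed)
    # Lines 1-2: loudness, pulse rate, positions, and frequencies.
    loud = [rng.random() for _ in range(pop_size)]
    rate0 = [rng.random() for _ in range(pop_size)]
    rate = rate0[:]
    pop = [[rng.randrange(k) for _ in range(n)] for _ in range(pop_size)]
    freq = [[rng.random() for _ in range(n)] for _ in range(pop_size)]
    best = max(pop, key=fitness)[:]                      # line 3
    for t in range(1, max_it + 1):                       # lines 4-18
        for i in range(pop_size):                        # lines 5-16
            # Line 6: move toward the best bat, then redraw the frequencies.
            cand = [best[j] if rng.random() < freq[i][j] else pop[i][j]
                    for j in range(n)]
            freq[i] = [rng.random() for _ in range(n)]
            # Line 7: local search around the best bat (assumed trigger).
            if rng.random() > rate[i]:
                cand = local_move(best, sum(loud) / pop_size, rng)
            # Lines 9-12: accept improving moves with probability loud[i].
            if rng.random() < loud[i] and fitness(cand) > fitness(pop[i]):
                pop[i] = cand
                loud[i] *= alpha                          # loudness decreases
                rate[i] = rate0[i] * (1 - math.exp(-gamma * t))  # standard update
            # Lines 13-15: update the best solution of the swarm.
            if fitness(pop[i]) > fitness(best):
                best = pop[i][:]
    return best


if __name__ == "__main__":
    # Toy fitness: lower rank indices are better, so the optimum is all zeros.
    result = discrete_bat_sketch(lambda p: -sum(p), gaussian_local_move(5),
                                 n=4, k=5, pop_size=6, max_it=20, seed=1)
    print(result)
```

Seeding the generator makes runs reproducible, which helps when comparing parameter settings such as Alpha and Gamma.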

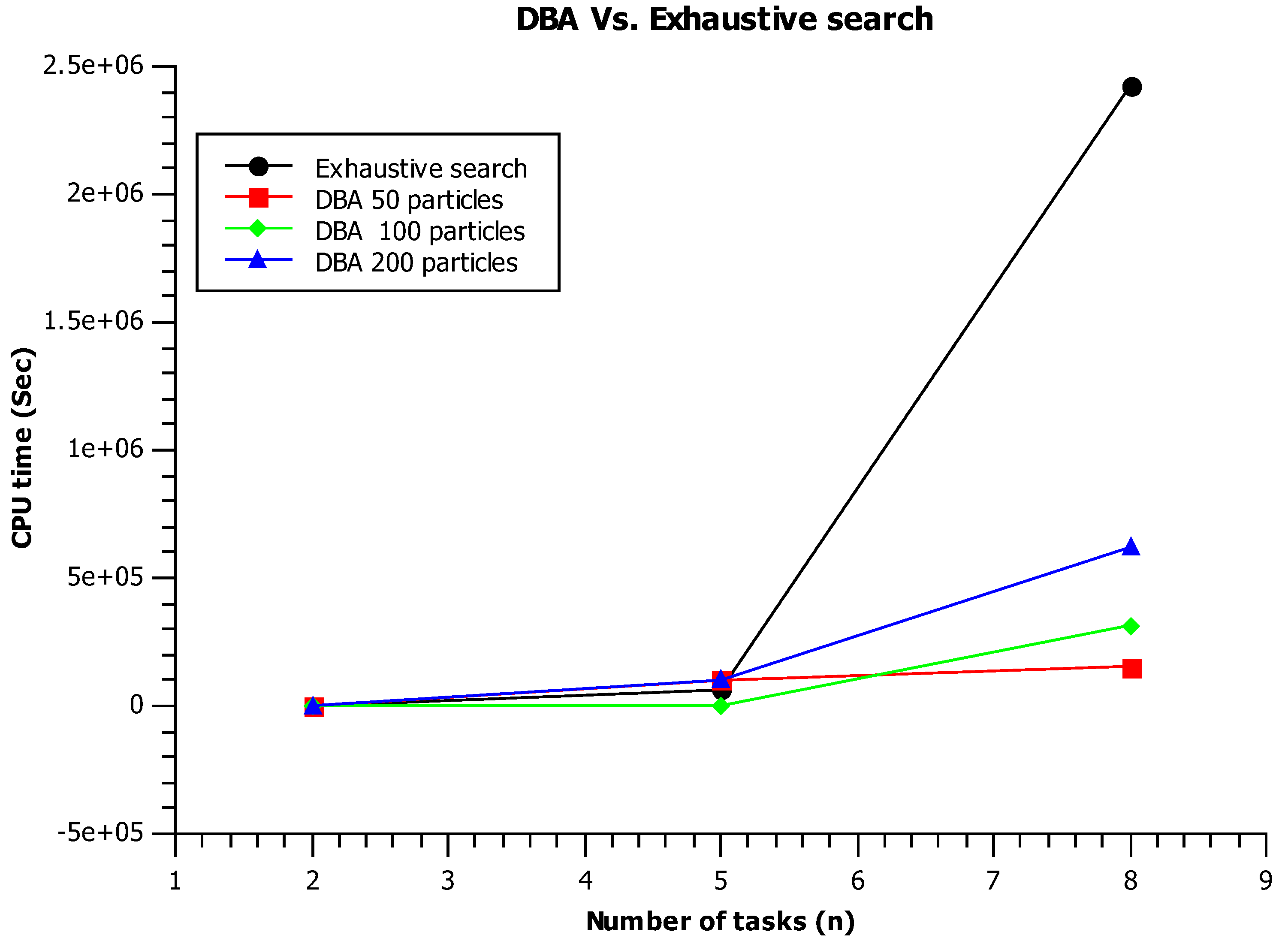

Finally, we note that DBA has a time complexity of , and that the complexity of the fitness function GQC is .