Submitted: 01 June 2023

Posted: 05 June 2023


Abstract

Keywords:

1. Introduction

2. Materials and Methods

3. Results

3.1. Biomedical Research

3.2. Medical Practice

3.4. Equations

4. Discussion

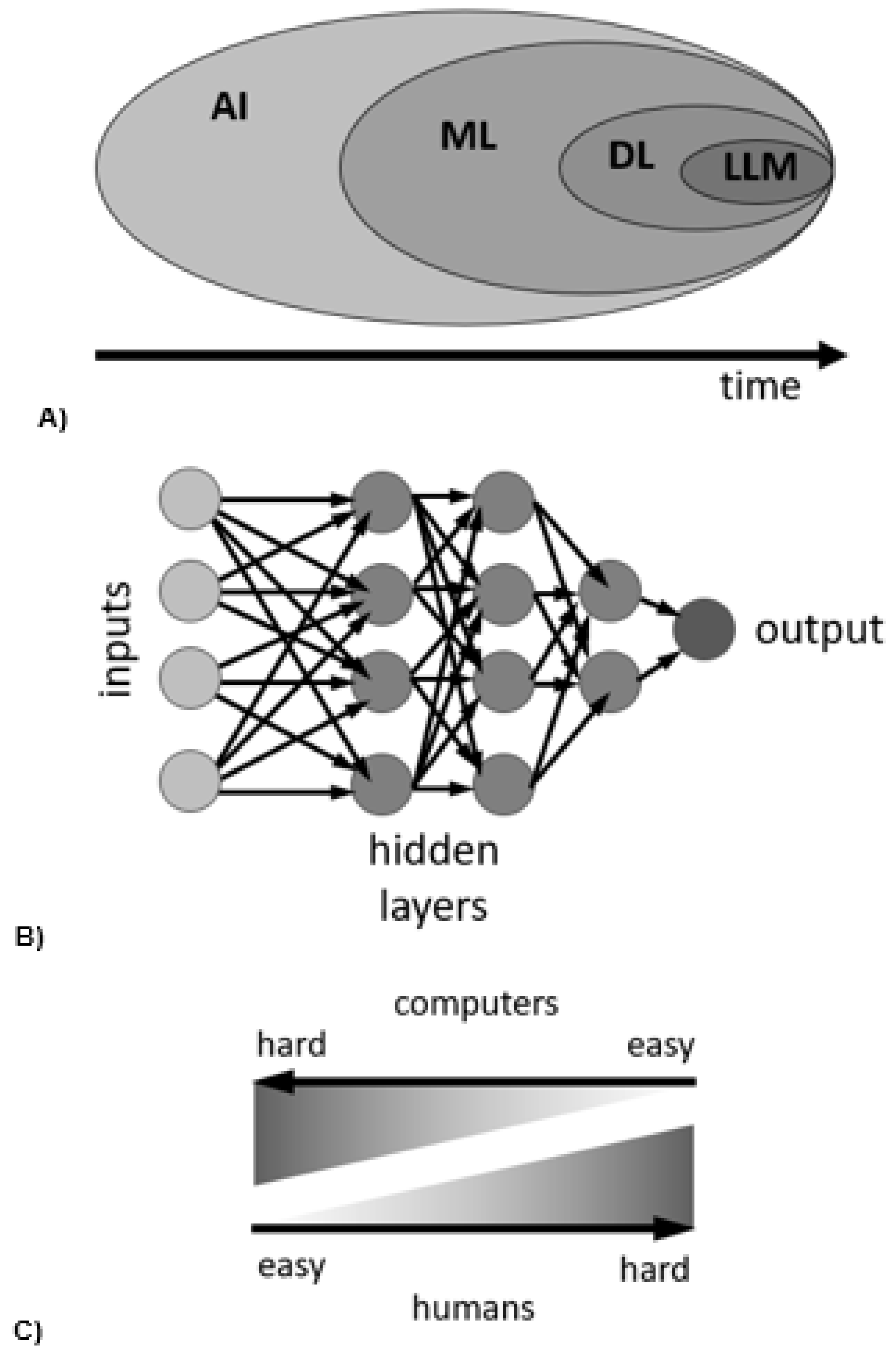

4.1. Risks & Limitations of Current AI Approaches

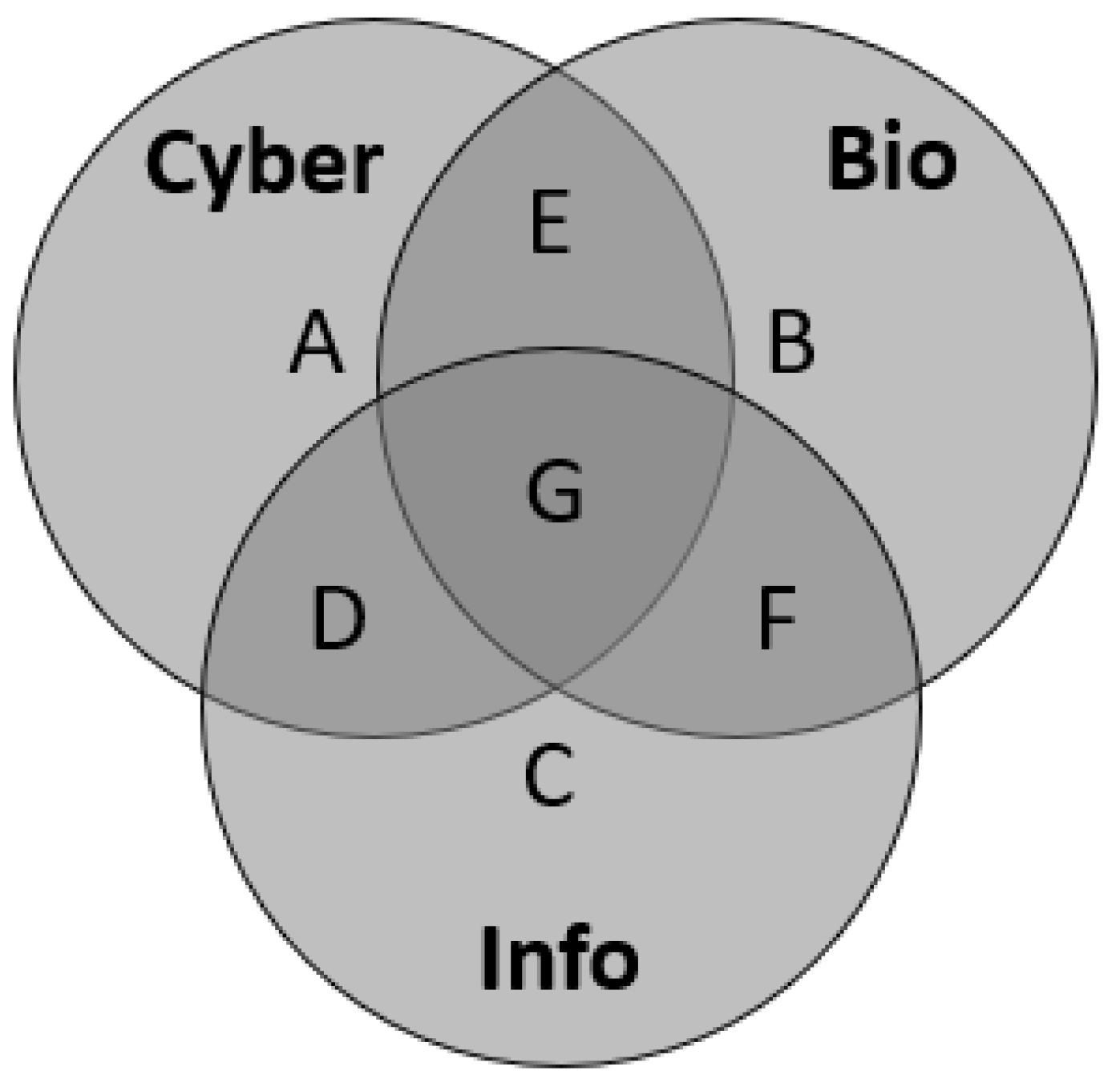

4.2. Weaponization of AI in Science & Healthcare

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| Key Findings |
|---|
| AI is positively disrupting both basic science research and the healthcare field. |
| In the lab, AI is aiding drug discovery and automated image analysis. |
| In the clinic, AI is successfully used in radiological diagnosis, optical imaging, and surgical guidance. |
| Current AI approaches have limited reliability because they lack explainability (the "black box" problem) and their solutions are difficult to verify. |
| AI carries the risk of amplifying biases and of being weaponized to spread anti-vaccine and other health disinformation. |
| Concerns about technological unemployment due to automation have likely been exaggerated (Moravec’s paradox). |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).