1. Introduction

Identifying carcinogenic chemicals is crucial for human health, as they present a severe toxicological risk that can lead to adverse health effects, including cancer development. Carcinogens are substances that have the potential to initiate or promote the development of cancerous cells in living organisms. The standard method for assessing the carcinogenic potential of chemicals has been the 2-year rodent carcinogenicity assay, which has been used for over 50 years [

1]. This assay involves exposing rodents to various doses of a chemical over an extended period and monitoring for the development of tumors. While this method has provided valuable insights into the carcinogenicity of numerous substances, however, these long-term animal studies are resource-intensive, time-consuming, and ethically challenging. Moreover, environmental exposure to carcinogenic chemicals rarely occurs in isolation but rather involves complex mixtures. These mixtures consist of various manmade contaminants that pervade the air we breathe, the water we drink, the soil in which we grow our food, and the food we consume, leading to adverse health effects, including cancer. Notable examples of these contaminants include Pesticides, Per- and Polyfluorinated Substances (PFAS), polycyclic aromatic hydrocarbons (PAH), Metals, and polychlorinated biphenyls (PCB). These substances find their way into the environment through industrial processes, waste disposal, and the use of certain consumer products. As a result, individuals are exposed to a multitude of chemicals simultaneously, increasing the complexity of evaluating their carcinogenic potential.

The assessment of chemical mixture carcinogenicity is hindered by the vast number of combinations, resource-intensive experiments, and the absence of computational methods, resulting in limited data on the carcinogenicity of chemical mixtures. Further, assessing the carcinogenic chemical mixtures is a formidable task due to the added intricacy introduced by varying concentrations of the individual chemical components. Each chemical within a mixture can interact with other chemicals, potentially amplifying or attenuating their carcinogenic effects. Determining the precise contribution of each component to the overall carcinogenic potential becomes challenging, requiring sophisticated experimental designs and analytical techniques. Typically, the carcinogenic potential of chemical combinations is assessed, focusing on whether one chemical promotes the carcinogenicity initiated by another chemical, even if the second chemical, in isolation, does not exhibit carcinogenic properties at carcinogenic doses or different concentrations [

2,

3,

4]. Animal models are commonly used for such assessments, wherein animals are exposed to specific combinations of chemicals to observe their collective effects on tumor development and progression. Moreover, in-vitro cell line models have been utilized to study the combined carcinogenic effects of chemicals. Studies have demonstrated synergistic effects on DNA adduct formation when Phthalates, a group of chemicals commonly found in plastics and personal care products, are co-exposed in these cell line models [

5,

6]. These findings highlight the importance of considering chemical interactions within mixtures and their potential to enhance carcinogenic outcomes. Additionally, research has investigated the synergistic and combined anticancer effects of anticancer drugs in animal models and cell lines to combat carcinogenicity [

7,

8,

9]. To support the current study, an extensive collection of literature references on the combined effects of carcinogens and noncarcinogens in chemical mixtures, which we have utilized in this study (see

Supplementary Materials).

The computational method offers a highly efficient and cost-effective alternative to traditional experimental approaches for assessing the carcinogenic potential of chemical mixtures. While the Concentration Addition (CA) model has been widely utilized to calculate the toxicity of binary mixtures [

10,

11], its application in evaluating the carcinogenicity of mixtures has been somewhat limited. In an effort to overcome this limitation, Walker et al. conducted a study employing the dioxin toxic equivalency factor (TEF) approach to determine the dose-additive carcinogenicity of three compound mixtures of dioxins [

12]. The TEF approach allows for the conversion of different dioxin congeners into a common unit of toxicity, enabling the calculation of an equitoxic ratio for each compound in the mixture. By employing equal ratios of toxic equivalency (TEQs) for each compound, they aimed to represent an equitoxic ratio in their assessment of the mixture's carcinogenic potential. However, a significant challenge encountered when utilizing computational methods to evaluate mixture carcinogenicity is the lack of available information regarding the specific carcinogenic concentrations of classified carcinogens. Identifying the precise concentrations at which individual chemicals exhibit carcinogenic effects is crucial for accurate assessments. Unfortunately, such data is often unavailable or incomplete, impeding the comprehensive evaluation of mixture carcinogenicity using computational approaches. To address these challenges and improve the accuracy of mixture carcinogenicity assessments, further research is needed to expand the application of computational models beyond simple single chemical simulations or the simple application of QSAR methods for chemical mixtures. This includes developing and refining approaches that account for varying levels of carcinogenic potency among mixture components and integrating a vast number of mixture data to enhance the predictive capabilities of computational models.

There is a need for more experimental data regarding carcinogenic chemical mixtures and an absence of reliable computational methods for accurately predicting the carcinogenic potential of chemical mixtures. Recognizing this gap, the primary objective of our study is to introduce a novel methodology that addresses these challenges by predicting the dose-dependent carcinogenic potential of chemical mixtures while simultaneously enhancing the dataset on carcinogenic mixtures. To accomplish this, in addition to using the literature-reported carcinogenic mixtures, we have expanded the mixtures dataset by generating assumption-based virtual chemical mixtures for all the classification models. By incorporating these virtual mixtures, we aim to supplement the limited dataset and provide a more comprehensive representation of chemical mixtures. This dataset augmentation allows for a broader exploration of various mixture compositions and concentrations, enabling more robust predictions of carcinogenicity.

In our study, we have developed an innovative hybrid neural network method called 'HNN-MixCancer' specifically designed to predict the carcinogenic potential of various chemical binary mixtures accurately. This method combines the strengths of neural network algorithms with a mathematical framework that models the unique characteristics of chemical mixtures. By leveraging the complex relationships between mixture components and their concentrations modeled by a mathematical framework, the HNNMixCancer provides reliable predictions on the carcinogenicity of these mixtures. To ensure accuracy, we have constructed classification models within the HNN-MixCancer framework, considering factors such as the chemical composition and concentrations of the mixtures. Additionally, we have enhanced the training data by introducing assumption-based virtual chemical mixtures. Preliminary results from the HNNMixCancer demonstrate promising outcomes, successfully predicting the dose-dependent carcinogenic potential of chemical mixtures. Rigorous testing and validation processes against known experimental data have been conducted to evaluate the model's performance, ensuring its reliability and accuracy in predicting the carcinogenic properties of diverse chemical mixtures.

The HNNMixCancer opens up new avenues for predicting the carcinogenic potential of chemical mixtures, offering a valuable tool for risk assessment and decision-making in various industries and regulatory bodies. The introduction of this novel methodology, alongside the enhanced dataset on carcinogenic mixtures, represents a significant advancement in computational toxicology. Furthermore, the method developed in this study lays the foundation for future research endeavors, inspiring further innovation and exploration of New Approach Methodologies (NAM) in profiling mixture carcinogenesis alternatives to animal models.

2. Methods

2.1. Data Collection

I. Mixtures from the literature and Drug Combination Database (DCDB)

1. Literature-Derived Mixtures

We obtained 54 carcinogenic binary chemical mixtures (

Supplementary Table S1) and 25 binary drug mixtures without carcinogenic effects but exhibiting anticancer properties (

Supplementary Table S2) from various literature sources.

2. Mixtures from the drug combination database (DCDB)

We obtained 942 drug combinations from the DCDB database at

www.cls.zju.edu.cn/dcdb/download.jsf. Out of these combinations, 757 binary combinations were mapped with the corresponding drug bank ID using the DCC_ID of each drug. Mordred descriptors were calculated for a subset of 646 drug combinations. The SMILES and drug bank ID information for the DCDB drug combinations were sourced from

www.drugbank.ca.

Ia. Literature derived and DCDB mixtures data for binary classification models

We gathered 54 carcinogenic and 25 non-carcinogenic chemical combinations from existing literature, along with 30 drug combinations from DCDB identified as non-carcinogens. Due to the absence of precise dose values in this dataset, we assumed equal concentrations of the chemical components in each mixture when calculating descriptors.

Ib. Validating Binary Classification Models with Assumption-Based Virtual Mixture Dataset.

To test the accuracy of the prediction models developed for assumption-based virtual binary mixtures, we utilized a validation set comprising 54 carcinogenic and 25 non-carcinogenic binary mixtures collected from the literature (section 1a) and 30 binary drug combinations from the DCDB. The procedure for constructing the assumption-based virtual binary mixtures is described in section II.

II. Compilation of Mixture Data for the Creation of an Assumption-Based Virtual Mixture Dataset

Due to the limited availability of experimental data on the carcinogenicity of chemical mixtures for the purpose of developing machine learning models, we sought out individual chemicals classified as carcinogenic or non-carcinogenic from reputable data sources. These sources included the Military Exposure Guideline (MEG), the National Toxicology Program (NTP), the International Agency for Research on Cancer (IARC), the Japan Society for Occupational Health (JSOH), and the Carcinogenic Potency Database (CPDB). Subsequently, using a series of assumptions outlined in section IIa, we then generated mixtures comprising both carcinogenic and non-carcinogenic substances.

1. Chemical Exposure Guidelines for Deployed Military Personnel Version 1.3 (MEG)13

The carcinogenicity data were sourced from the Technical Guide 230 Environmental Health Risk Assessment and Chemical Exposure Guidelines for Deployed Military Personnel (MEG). The MEG dataset encompasses acute and chronic exposure data for carcinogenic air, water, and soil chemicals. These carcinogenic chemicals are classified into five distinct groups: Group A (Human Carcinogen), Group B (Probable human carcinogen), Group C (Possible human carcinogen), Group D (Not classifiable), and Group E (No evidence of carcinogenicity).

2. National Toxicology Program (NTP)14

We collected carcinogenic chemicals classified by the National Toxicology Program (NTP) that fall into two distinct groups: 1) chemicals that are reasonably anticipated to be human carcinogens and 2) chemicals that are known to be human carcinogens.

3. International Agency for Research on Cancer (IARC)15

We collected IARC carcinogenic chemicals that are categorized into five groups: Group 1 (Carcinogenic to humans), Group 2A (Probably carcinogenic to humans), Group 2B (Possibly carcinogenic to humans), Group 3 (Not classifiable as to its carcinogenicity to humans), and Group 4 (Probably not carcinogenic to humans).

4. The Japan Society for Occupational Health (JSOH)16

We collected carcinogenic chemicals from the JSOH (Japan Society for Occupational Health) published Recommendations for Occupational Exposure Limits, which categorize carcinogenic chemicals into three groups: Group 1 (carcinogenic to humans), Group 2A (probably carcinogenic to humans), and Group 2B (possibly carcinogenic to humans).

5. Carcinogenic Potency Database (CPDB)17

We obtained the median toxicity dose (TD50) rat carcinogenicity data from the CPDB. TD50 refers to the dose-rate in mg/kg body wt/day that, when administered throughout life, induces cancer in half of the test animals. For rat carcinogenicity, we obtained 561 carcinogenic chemicals annotated with TD50 values and 605 annotated as noncarcinogenic chemicals.

IIa. Generating Virtual Mixtures for Binary Classification Models: Assumptions and Methods

We employed permutations and combinations methods to generate binary and multiple mixture combinations from individual chemicals. Considering the vast number of possible combinations, we used a representative sampling approach to capture the diverse range of combinations while reducing the total number. This was accomplished by selecting chemical samples representing highly similar, medium similar, and low similar (diverse) combinations using similarity metrics such as Tanimoto similarity coefficients. We adopted different assumptions interchangeably to determine the carcinogenic and noncarcinogenic assignments for the generated combinations, aiming to achieve optimal predictive performance. Additionally, we applied the read-across procedure, which assumes that similar molecules exhibit similar characteristics, to assign carcinogenic properties. In all of the aforementioned assumptions, we assigned a value of '1' to indicate carcinogenicity for a component chemical or resultant mixture and a value of '0' to indicate non-carcinogenicity. For the training and test datasets, we included 80% of the generated data in the training set and the remaining 20% in the test set, following all assumptions.

Assumptions creating the chemical mixture combinations – case examples

Case 1: If each chemical in a combination is carcinogenic, the resultant mixture is considered carcinogenic or toxic (1 + 1 = 1).

Case 2: If each chemical in a combination is noncarcinogenic, the resultant mixture is considered noncarcinogenic (0 + 0 = 0).

Case 3: If each chemical in a combination is noncarcinogenic, but the resultant mixture is carcinogenic (0 + 0 = 1).

Case 4: If at least one chemical in a combination is carcinogenic, the resultant mixture is considered carcinogenic (1 + 0 = 1).

Case 5: If at least one chemical in a combination is carcinogenic, the resultant mixture is considered carcinogenic (0 + 1 = 1).

Similar assumptions were made for other mixture combinations, including multiple mixtures.

Considerations of Chemical Dose and Concentration

In this study, we report only the results of the virtual binary mixtures obtained from Case 1 (the combinations were formed by mixing carcinogen with another carcinogen chemical that produces a carcinogenic mixture) and Case 2 (the mixtures were formed by mixing noncarcinogen with another noncarcinogen chemical that produces a carcinogenic combination). Importantly, mixture formation requires concentration information of each component chemical that makes the mixture to calculate the mixture descriptor. Therefore, we used the reported concentration information associated with a chemical while making mixture combinations. In instances where concentration information was unavailable for certain chemical data, we assigned equal concentrations to those cases during the mixture formation process.

Binary chemical classification into carcinogenic and non-carcinogenic categories involved creating virtual mixtures from the following two data sources.

- (i)

Data Source 1 (MEG): Obtained 106 carcinogenic chemicals (Group A, B, and C) and 3 noncarcinogenic chemicals (Group E) with concentration information. Chemicals in Group D were not considered in either class. We created 5,565 carcinogenic binary mixtures from all possible combinations of the 106 carcinogenic single chemicals. We also created 3 noncarcinogenic binary mixtures from possible combinations of the 3 noncarcinogenic single chemicals. Then we calculated the concentration fraction using the concentration of each single chemical and computed the descriptors of the mixtures.

- (ii)

Data Source 5 (CPDB): After removing duplicates and conflicting chemicals, this data set contributed 508 carcinogenic and 580 non-carcinogenic chemicals compared to Data Source 1 (MEG). For CPDB data, we calculated the concentration fraction using the TD50 value of each single chemical for carcinogenic mixtures and equal concentration for non-carcinogenic mixtures (as TD50 values do not apply to non-carcinogens). Then we computed the descriptors of the mixtures.

The training set for the binary classification model included 5,565 carcinogenic mixtures from MEG and 5,000 randomly selected mixtures (out of 128,778) from CPDB. It also included 3 noncarcinogenic combinations from MEG and 10,000 randomly selected mixtures (out of 167,910) from CPDB. Thus, the final training set consisted of 10,565 carcinogenic mixtures and 10,003 noncarcinogenic mixtures.

Preparation of distinct Test data set: Separating Compounds into training and test set

To make a distinct test data set, compounds were separated into training and test sets to develop binary classification models, ensuring that the compounds in the test set were not present in the training set.

- (i)

Data Source 1 (MEG): The 106 carcinogenic chemicals were divided into 84 for training and 22 for test sets. From this data, 3,486 training and 231 test set carcinogenic binary mixtures were created by considering all possible combinations.

- (ii)

Data Source 5 (CPDB): Among the 508 carcinogens, 408 were allocated for training and 100 for the test set. This data created 83,028 training and 4,950 test sets carcinogenic binary mixtures by considering all possible combinations. Additionally, the 580 non-carcinogens were divided into 464 for training and 116 for test sets. From this data, 107,416 training and 6,670 test sets non-carcinogenic binary mixtures were created by considering all possible binary combinations.

The combined training set for the binary classification model consisted of 10,086 carcinogenic mixtures (3,486 from MEG and 6,600 randomly selected from the 83,028 in CPDB) and 10,003 non-carcinogenic mixtures (3 from MEG and 10,000 randomly selected from the 107,416 in CPDB). The combined test set included 5,181 carcinogenic mixtures (231 from MEG and 4,950 from CPDB) and 6,670 non-carcinogenic mixtures (from CPDB).

IIb. Generating Virtual Mixtures for Multiclass Classification Models: Assumptions and Methods

2.2. Assumptions

We focused on intra-class mixtures by combining two chemicals of the same class, resulting in a mixture representing that class. Inter-class mixtures were not reported in this study.

For multiclass classification, equal concentrations of component chemicals were used to calculate concentration fractions. Descriptors of the binary mixtures were then computed.

The multiclass models included three classes:

Class 0 for noncarcinogens,

Class 1 for possibly carcinogenic and not classifiable chemicals, and

Class 2 for carcinogens and probably carcinogens.

The dataset comprised 459 chemicals in class 0, 604 chemicals in class 1, and 555 chemicals in class 2, obtained from five different data sources. Mixtures for each class were created by combining two chemicals from the same class in equal concentrations.

- (i.)

Data 1 (MEG): Chemicals classified into Groups A and B were considered class 2. Chemicals in Groups C and D were classified as class 1. Chemicals in Group E were classified as class 0.

- (ii.)

Data 2 (NTP): Chemicals classified as "reasonably anticipated to be a human carcinogen" or "known to be human carcinogens" were classified as class 2.

- (iii.)

Data 3 (IARC): Chemicals classified into Groups 1 and 2A were categorized as class 2 carcinogens, while those in Groups 2B and 3 were classified as class 1 carcinogens.

- (iv.)

Data 4 (JSOH): Chemicals classified into Groups 1 and 2A were considered class 2 carcinogens, and those in Group 2B were classified as class 1 carcinogens.

A total of 882 carcinogenic chemicals and 2 noncarcinogenic chemicals were collected from the four data sources mentioned above. The combined dataset consisted of 2 instances in class 0, 604 instances in class 1, and 278 instances in class 2.

- (v.)

Data 5 (CPDB): From CPDB chemicals in data source 5, after removing duplicates and repetitive conflicting chemicals, an additional set of 277 carcinogenic chemicals and 457 noncarcinogenic chemicals were obtained. The 277 carcinogenic chemicals were categorized as class 2, and the 457 noncarcinogenic chemicals were categorized as class 0.

Using the individual chemical datasets mentioned above, we generated the following binary mixtures:

Class 0: 459 individual class 0 chemicals resulted in 105,111 binary mixtures.

Class 1: 604 individual class 1 chemicals resulted in 182,106 binary mixtures.

Class 2: 555 individual class 2 chemicals resulted in 153,735 binary mixtures.

For the multiclass classification model, we selected the following carcinogenic mixtures:

5,000 randomly chosen mixtures (out of 105,111) from class 0.

8,000 randomly chosen mixtures (out of 182,106) from class 1.

7,000 randomly chosen mixtures (out of 153,735) from class 2.

Preparation of distinct Test data set: Separating Compounds into training and test set

Multiclassification models were also developed by splitting compounds into training and test sets, ensuring that the compounds in the test set were not present in the training set.

- (i.)

Class 0: The 459 compounds in class 0 were divided into 367 for training and 92 for testing. All possible combinations resulted in 67,161 carcinogenic binary mixtures in the training set and 4,186 mixtures in the test set.

- (ii.)

Class 1: The 604 compounds in class 1 were divided into 482 compounds for training and 122 compounds for testing. All possible combinations resulted in 115,921 carcinogenic binary mixtures in the training set and 7,381 mixtures in the test set.

- (iii.)

Class 2: The 555 compounds in class 2 were divided into 443 for training and 112 for testing. All possible combinations resulted in 97,903 carcinogenic binary mixtures in the training set and 6,216 in the test set.

The combined training set for the multiclass classification model consisted of 20,000 randomly selected binary mixtures, with 5,000 from class 0, 8,000 from class 1, and 7,000 from class 2. The test set consisted of 17,783 binary mixtures, with 4,186 from class 0, 7,381 from class 1, and 6,216 from class 2.

IIC. Carcinogenicity Assessment of Virtual Mixtures using Concentration Addition (CA) Regression Models: Assumptions and Methods

Assumption:

We assumed that a mixture’s TD50 (median toxic dose) is the average of the TD50 values of its two component chemicals. This assumption is based on the Concentration Addition (CA) model, which assumes simple addition and a sum of Toxic Units (TUs) equal to 1. (See the regression model section below).

CPDB Data: 157,080 carcinogenic binary mixtures were generated by considering all possible combinations of 561 single carcinogenic chemicals. The TD50 value of each individual chemical was utilized to calculate the concentration fraction, and the descriptors for the resulting mixtures were computed.

Final Dataset: For our regression model, we selected 20,000 carcinogenic mixtures randomly from the pool of 157,080 combinations.

Preparation of distinct Test data set: Separating Compounds into training and test set

Regression models were developed by splitting the compounds into training and test sets and ensuring that the compounds in the test set were distinct from those in the training set.

CPDB Data: The 561 carcinogenic chemicals were divided into 449 for training and 112 for testing. All possible binary combinations resulted in 100,576 carcinogenic binary mixtures in the training set and 6,216 mixtures in the test set.

The final training set for the regression model comprised 20,000 randomly selected carcinogenic mixtures from the pool of 100,576 combinations. The test set consisted of 6,216 binary mixtures.

Regression Model

The concentration addition (CA) model describes mixture toxicity as follows:

whereas, EC50mix represents the median effective concentration of the mixture, CA, CB and CM denote the concentrations of components A, B, and the mixture, respectively, that are required to produce a median effect (50% effect) by the mixture, EC50A and EC50B refer to the median effective concentration of components A and B when acting individually as single compounds. If the sum of toxic units (TU) at the median inhibition is equal to 1, it indicates a simple addition.

This study employed the Concentration Addition (CA) model with a simple addition approach to determine the mixture TD

50 in the CPDB data. The TD

50 of the mixture given by the CA model is (from equation 1):

The sum of toxic units (TUs) of each component gives the joint TU of the mixture.

Considering the equitoxic ratio of the components A and B, the toxic unit of the two chemicals should be TU

A:TU

B = 0.5:0.5. Thus,

Hence, the TD

50 of the mixture is the average of the TD

50 of the component chemicals.

With

available, the concentration of the component chemicals A and B can be calculated by using the concentration fraction

and

as the scaling factor,

Descriptor Calculation

Descriptor Calculation for Component Chemicals

SMILES is the chemical structure representation using ASCII strings. Descriptors were calculated using Mordred descriptor calculator18 which calculates 1,613 2D molecular descriptors from SMILES. We kept a final set of 653 descriptors with no missing values in any of the calculated descriptors.

Descriptor Calculation and Integration of Dose-Dependent Relationship for the Chemical Mixture: Mathematical Method and Process

The dose-dependent ratio of different chemical components in a mixture is computed as follows:

All the collected data are converted to mol/L before calculating the log (1/EC50 or LC50, or ED50). Mole fractions of the component chemicals in a mixture were calculated from their median effective concentration when acting alone and their corresponding carcinogenicity or toxicity ratio in the mixture. Dose-dependent chemical mixture descriptors is calculated using three different mathematical formulas (Sum, Difference, and Norm) as the basis as described previously19.

Sum: The mixture descriptor

is calculated as the sum of the molecular descriptors or fingerprints

,

…

of the two or more components weighted by their respective mole fractions (dose-dependent)

,

…

in the mixture:

Difference: The mixture descriptor

is calculated as the absolute difference between the molecular descriptors:

Norm: The mixture descriptor

is calculated as below for the molecular descriptors

:

In this study, we used and reported only the Sum method results. Mixture descriptors ‘

for the 653 descriptors was calculated using the Sum method

19. The mixture descriptor

is calculated as the sum of the molecular descriptors

&

of the two component chemicals of the mixture each scaled by their respective concentration fractions

&

in mg/L.

Structural representation descriptor using SMILES of the chemicals:

The SMILES

for the mixture was generated by simple concatenation of the two SMILES strings

and

with a period (

) as the separator.

SMILES Preprocessing:

In our deep learning model, we utilize the ASCII strings of the SMILES representation of chemicals as an input feature. Python's Tokenizer class is employed to encode the SMILES string into numerical form. We consider the 94 characters in the ASCII table, represented by decimal numbers 33 to 126, to ensure that no character in the SMILES string, regardless of its format, is missed. These 94 characters made the vocabulary of the possible characters in the SMILES. A dictionary

is created, that maps every character in the list of 94 ASCII characters to a unique index. A vector is created for the SMILES of each compound by converting each character in the SMILES string to its corresponding index in the dictionary. The resulting vector for the SMILES is padded with 0s or truncated so that they are of uniform length, L. We previously described in detail, the SMILES preprocessing of chemicals20−22.

Machine Learning Method

Hybrid Neural Network Method

We utilized the Hybrid Neural Network (HNN) framework20−22, which we had developed for single chemical toxicity and carcinogen prediction. In contrast to the original model's one-hot encoding of SMILES, here we vectorize the SMILES using the method described in the SMILES preprocessing section. The HNN method, implemented using the Keras API in Python, comprises a convolutional neural network (CNN) for learning structural attributes (SMILES) and a feed-forward neural network (FFNN) for learning from chemical descriptors. A CNN framework processes the complex matrix generated from the SMILES strings, while a multilayer perceptron (MLP) FFNN is employed to process and learn from the chemical descriptors and fingerprints. In this approach, the Keras embedding layer is utilized to convert each character's index in the SMILES string into a dense vector of size 100. Consequently, the input 2D array of size KxL, where 'K' represents the number of SMILES and 'L' denotes the maximum length of SMILES, is transformed into a 3D array of size KxLx100. The 3D array, consisting of one-hot encoded SMILES strings and image bytes, serves as the input for the CNN, while the chemical descriptors and fingerprints are provided as input to the FFNN. The output from the pooling layer of the CNN is merged with the final fully connected layer of the FFNN to perform the ultimate classification. For binary classification, the sigmoid activation function is applied. At the same time, for multi-classification, the fully connected layer utilizes the softmax activation function, generating ‘N’ probabilities, each corresponding to a specific class. For all methods, 30 simulations/iterations are executed, and the average is calculated for statistical metrics such as AUC, accuracy, selectivity, sensitivity, and precision.

Developing Binary and Multiclass Classification Models using Alternative Machine Learning Methods

To compare and evaluate the predictions of the HNN method, we developed binary and multiclass classification models utilizing various machine learning techniques, including Random Forest (RF), Bagged Decision Tree (Bootstrap aggregating or Bagging), and Adaptive Boosting (AdaBoost).

Developing Regression Models using Alternative Machine Learning Methods

To compare and evaluate the predictions of the HNN method, we developed regression models using various machine learning methods, including Random Forest (RF), Support Vector Regressor (SVR), Gradient Boosting (GB), Kernel Ridge (KR), Decision Tree with AdaBoost (DT), and KNeighbors (KN). These models were implemented using the sklearn package in Python to generate the final consensus prediction of the mixture carcinogenicity. All these different machine learning methods were used for the comparative performances with our hybrid HNN method and to obtain consensus method predictions. The consensus predicted value was determined by calculating the average of the predicted values generated by the seven methods.

Model Evaluation

Binary and multiclass classification model:

We employed a robust evaluation process to evaluate the mixture classification models' performance. First, approximately 20% of the available data was randomly (until otherwise specified) allocated as the test set for each iteration to ensure an unbiased assessment. The evaluation process was repeated for 30 iterations, and the average results were used to gauge the model's performance.

Several metrics were employed to assess the classification models. Accuracy, which measures the proportion of correctly classified instances, was utilized as a primary evaluation metric. Additionally, the Area Under the Receiver Operating Characteristic Curve (AUC) was calculated to assess the model's ability to discriminate between different classes. Sensitivity, representing the true positive rate, and Specificity, representing the true negative rate, were also considered to evaluate the model's performance. These metrics provide insights into the model's ability to identify positive and negative instances within the dataset correctly. Supplementary Equation S1 provides further details on how these metrics were computed.

We utilized micro averaging for multiclass classification to calculate the average value across all classes. This technique involved converting the data into binary classes and assigning equal weight to each observation, enabling a fair evaluation of the model's performance across multiple classes. By considering the average performance across all classes, we obtained a comprehensive understanding of the model's overall classification accuracy and performance.

Regression model

In the regression analysis, a similar evaluation process was conducted. In each of the 30 iterations, approximately 20% of the data were randomly set aside (until otherwise specified) as the test set to evaluate the model's predictive performance. This random allocation ensured that the test set was representative of the entire dataset and reduced the potential for bias.

To assess the regression models, several metrics were employed. Mean Square Error (MSE), which quantifies the average squared difference between the predicted and actual values, provided a measure of the model's overall prediction accuracy. Mean Absolute Error (MAE) was also utilized to evaluate the average magnitude of the errors made by the model. Additionally, the Coefficient of Determination (R2), a measure of the proportion of variance in the dependent variable explained by the model, was calculated to assess the goodness of fit. Supplementary Equation S2 provides further details on how these metrics were computed.

By considering these metrics, we could comprehensively evaluate the regression models' performance. The MSE and MAE metrics provided insights into the accuracy and precision of the predictions. In contrast, the R2 metric allowed us to assess the overall goodness of fit of the model to the data. Through this rigorous evaluation process, we obtained a thorough understanding of the classification and regression models' performance in predicting the carcinogenic potential of chemical mixtures. These evaluation metrics enable us to assess the models' accuracy, discrimination ability, and predictive capabilities, thereby ensuring the reliability and robustness of our findings.

3. Results and Discussion

Here, we developed the Hybrid Neural Network framework called HNN-MixCancer (referred to as HNN) to predict the carcinogenic potential of chemical mixtures. To simulate the chemical mixtures, a mathematical framework was combined with the HNN framework. To assess the prediction performance of the HNN models, we constructed binary classification, multiclass classification, and regression models. To build these models, we utilized a combination of experimental data from the literature that provided information on chemical mixtures. However, given the limited availability of experimental mixture data, we also generated assumption-based virtual chemical mixtures to enrich the training dataset. This approach allowed us to compensate for the lack of empirical data and improve the robustness of our models. To compare and improve the prediction performance of the HNN method, we also developed classification models based on several other methods, including Random Forest (RF), Bootstrap Aggregating (Bagging), Adaptive Boosting (AdaBoost), Support Vector Regressor (SVR), Gradient Boosting (GB), Kernel Ridge (KR), Decision Tree with AdaBoost (DT), and KNeighbors (KN). These alternative methods were employed to develop binary, multiclass, and regression models, ensuring a comprehensive evaluation of the prediction performance. We also utilized these methods to generate a consensus prediction by considering their collective output.

The binary classification models were designed to determine whether a chemical mixture is carcinogenic or noncarcinogenic, providing a clear classification of its potential risk. On the other hand, the multiclass classification models aimed to categorize chemical mixtures into different classes based on their degree of carcinogenicity. These models facilitated a more nuanced understanding of the mixture's potential effects, allowing for a finer classification of their carcinogenic potential. When dose information was absent, both the binary and multiclass models relied on equal concentrations of the component chemicals to calculate the concentration fraction and derive a weighted mixture descriptor.

Regression models, on the other hand, were employed to predict the effective concentration at which a chemical mixture becomes carcinogenic. These models assumed a simple addition of the concentration addition (CA) model, incorporating an equitoxic ratio of the component chemicals to calculate the mixture's carcinogenicity. The regression models leveraged this information to estimate the specific concentration at which the mixture exhibits carcinogenic effects. To obtain the mixture descriptor, the weight was calculated using the TD50 (median toxic dose) of the single carcinogens, and the mixture TD50 was determined by averaging the TD50 values of the component chemicals. This approach allowed for the quantification and characterization of the carcinogenic potential of the chemical mixtures.

By employing these diverse models within the HNN framework, we aimed to provide accurate predictions of the dose-dependent carcinogenic potential of chemical mixtures. Through the binary and multiclass models, we obtained valuable insights into the classification of mixtures based on their carcinogenicity. In contrast, the regression models offered quantitative estimations of the effective concentrations at which carcinogenic effects occur. This comprehensive modeling approach allowed us to capture the complex relationships between mixture components, concentrations, and their carcinogenic potential, ultimately enhancing our understanding of the risks associated with chemical mixtures. The detailed results and their discussions are presented in the following sections.

I. Carcinogenicity Prediction through Binary Classification using Literature and DCDB Data

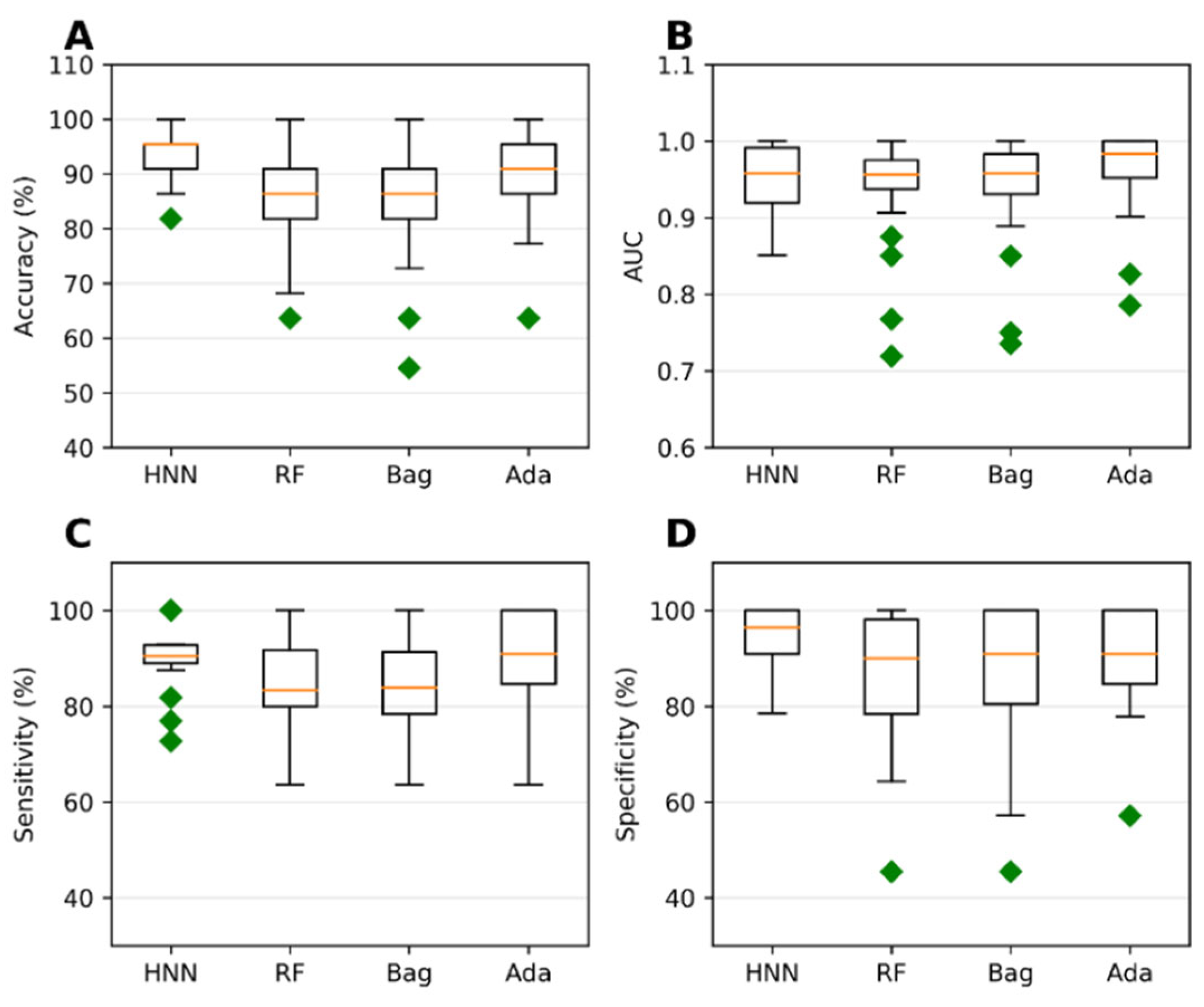

Binary classification models were developed using the HNN, RF, Bagging, and AdaBoost methods to predict carcinogenicity. The models were trained on a dataset consisting of 54 carcinogenic and 25 non-carcinogenic combinations from the literature, along with 30 drug combinations from the DCDB database classified as non-carcinogens. Among the models, the HNN demonstrated the highest accuracy of 92.72%, sensitivity of 90.85%, and specificity of 94.82% (

Figure 1a, c, d). Additionally, the HNN and AdaBoost achieved the highest AUC of approximately 0.96 compared to other methods (

Figure 1b). In summary, the HNN outperformed other machine learning methods in terms of accuracy and achieved the highest AUC for carcinogenicity prediction.

II. Predicting Carcinogenicity through Binary Classification using Assumption-based Virtual Binary Mixtures

During our comprehensive survey of various data sources, it became apparent that there was a lack of experimental data in the literature that could be used to train the binary mixture models required for our research on carcinogenicity prediction. In light of this limitation, we developed a strategy to address the issue by creating a virtual library of binary mixtures. This involved incorporating several assumptions, which are thoroughly explained in the methods section of our study. By employing this approach, we successfully expanded the training dataset by including both carcinogenic and noncarcinogenic mixtures. Subsequently, we utilized this augmented dataset to construct accurate and reliable machine learning models. A noteworthy advantage of utilizing assumption-based binary mixtures is the ability to encompass a diverse range of structural variations within our dataset. This inclusion significantly improves the representativeness and robustness of our models, enabling them to capture a broader spectrum of real-world scenarios.

A) Carcinogenicity Prediction of Chemical Mixtures using Randomly Selected Training and Test Sets

To assess the predictive capabilities of the HNN (Hybrid Neural Network) alongside other machine learning methods, we commenced the evaluation process by simulating randomly selected mixtures for both the training and test datasets. This approach allowed us to assess the performance and generalization ability of the models when challenged with new and unknown or unfamiliar carcinogenic data. By employing this rigorous testing methodology, we aimed to obtain reliable and unbiased predictions of carcinogenicity for chemical mixtures.

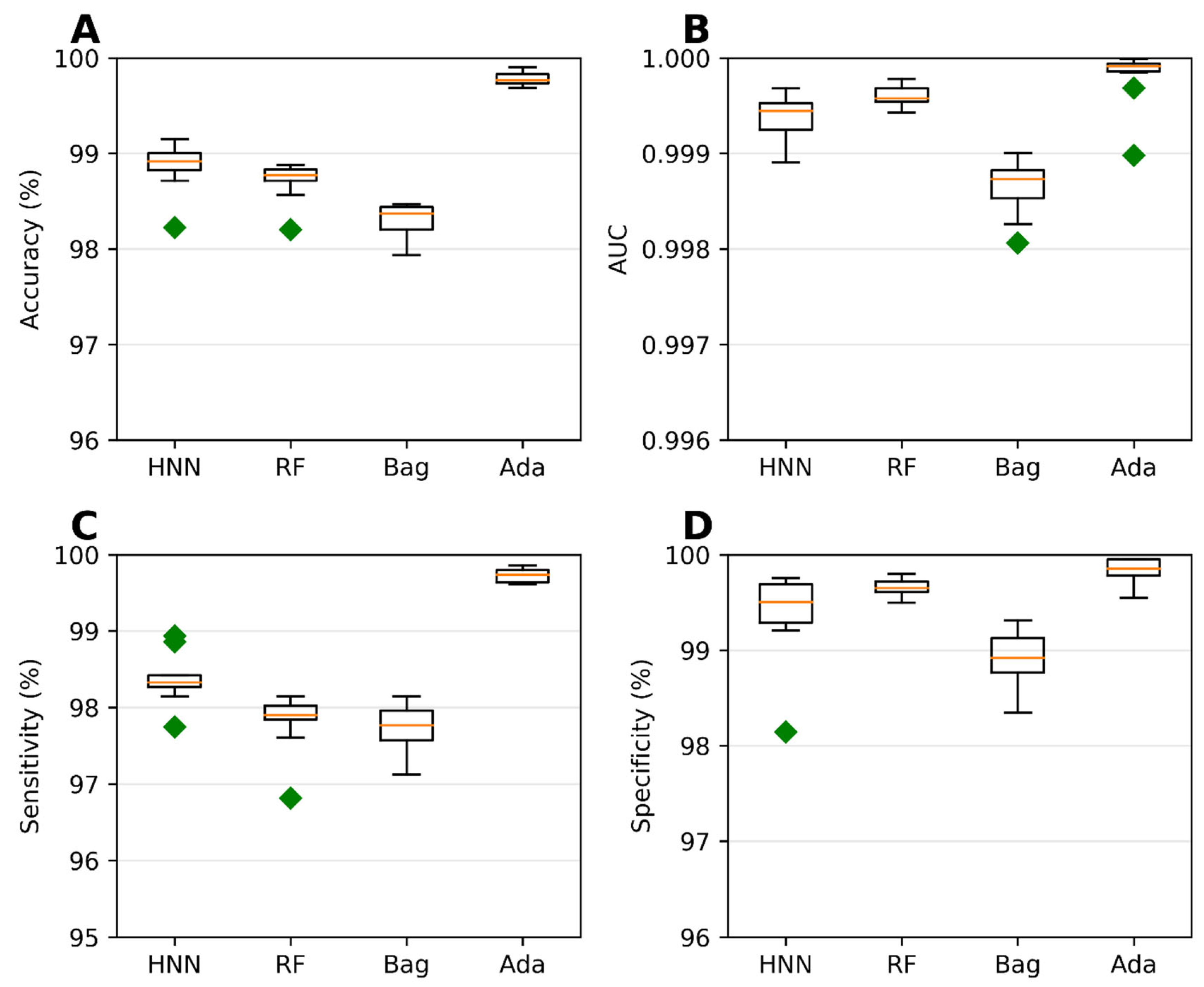

Carcinogenicity Prediction using Binary Classification

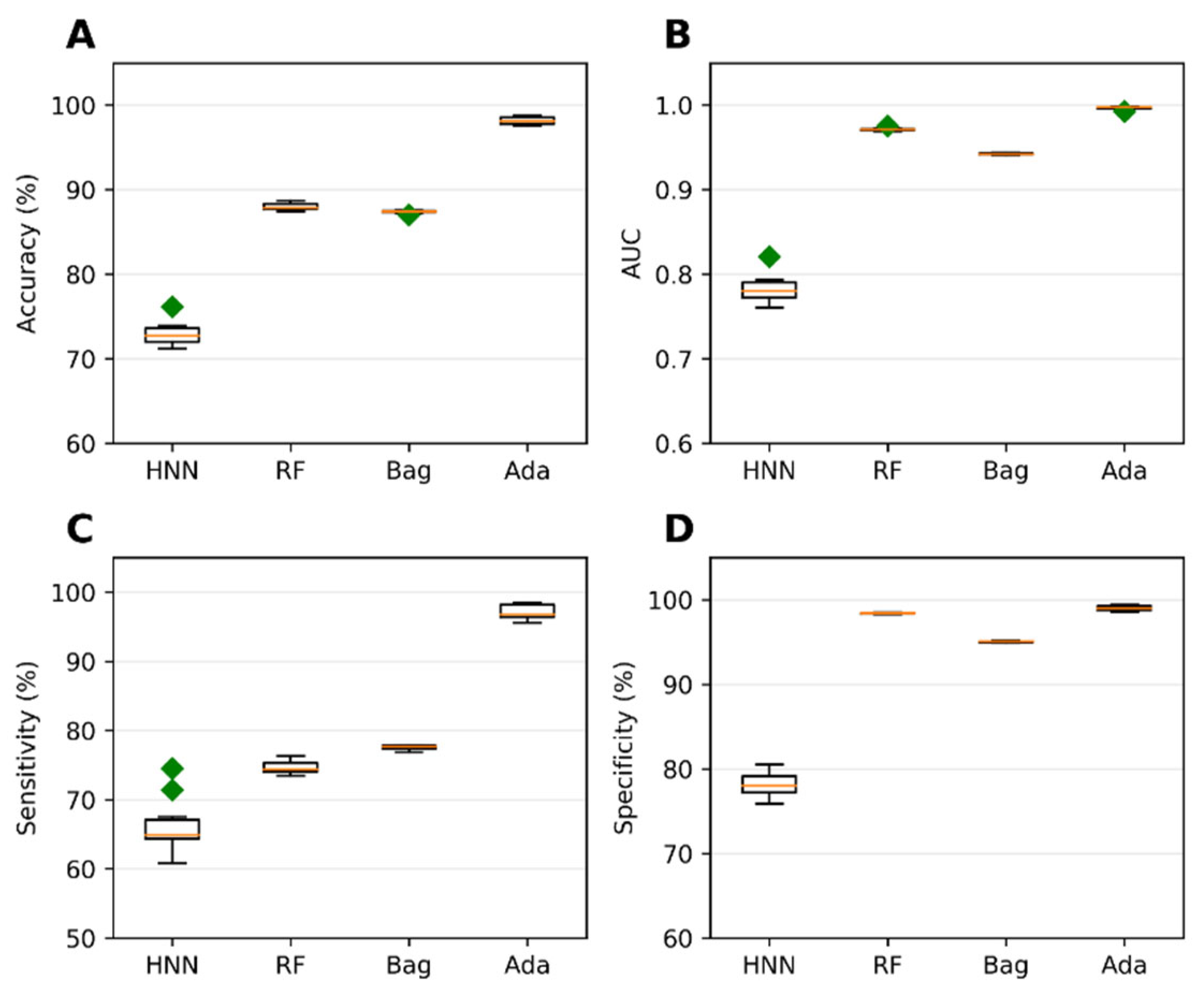

To assess the carcinogenic potential of virtual binary mixtures using a binary classification model, we formed carcinogenic mixtures by pairing carcinogens with other carcinogens. Conversely, noncarcinogenic mixtures were created by pairing noncarcinogens with other noncarcinogens. To compile our dataset of binary mixtures, we leveraged data from two reliable sources: the MEG and CPDB. Subsequently, binary classification models were developed using the HNN, RF, Bagging, and AdaBoost methods, considering 20,568 binary mixtures. In each simulation, a randomly selected 20% of the mixtures were reserved as a test set. The AdaBoost and HNN methods exhibited exceptional predictive performance among the binary classification models. Their statistical metrics, including accuracy, AUC, sensitivity, and specificity, surpassed 99% (

Figure 2). These findings highlight the superior capabilities of the AdaBoost and HNN in accurately predicting carcinogenicity within the context of binary mixtures.

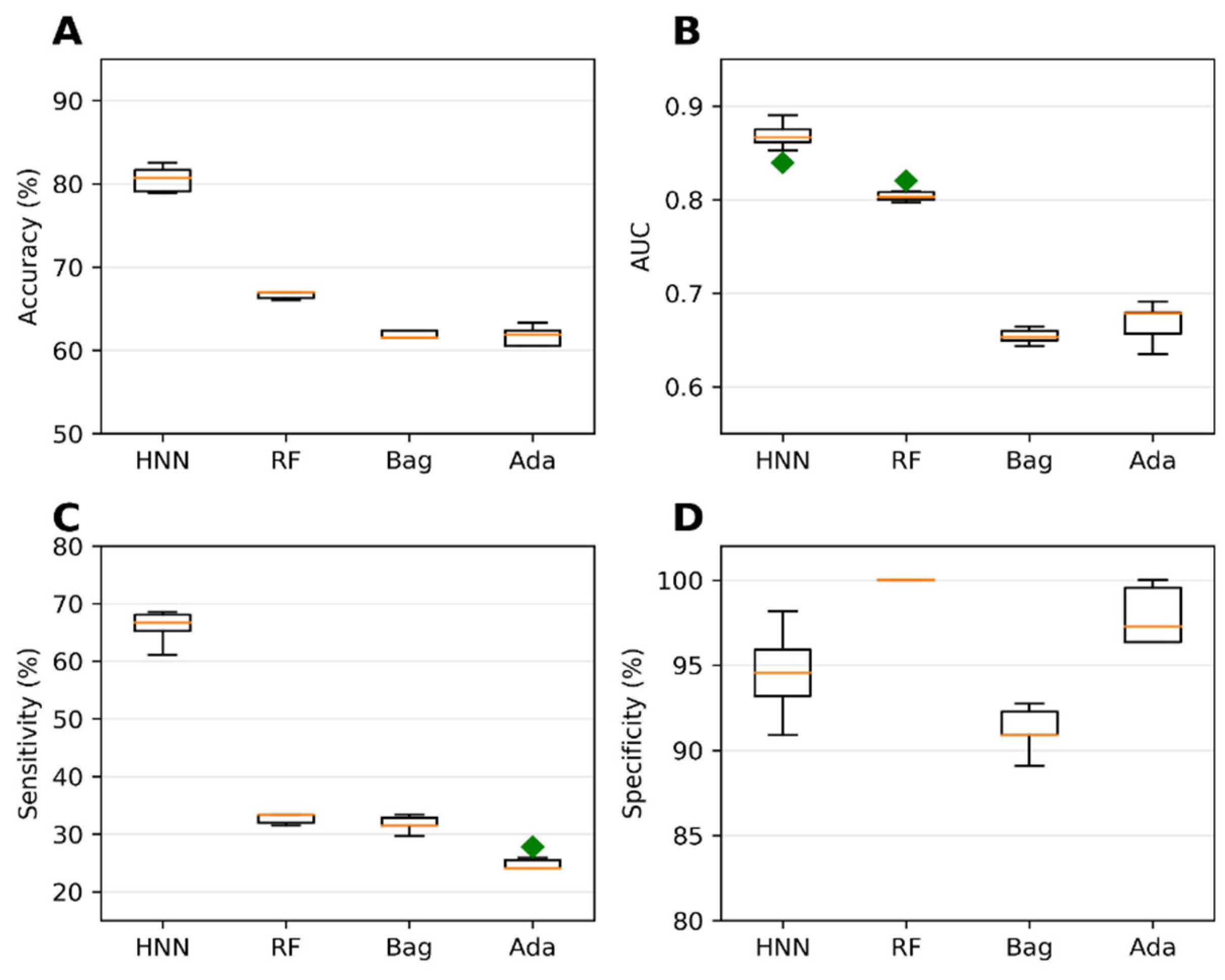

Validation of the binary classification models

Validation of the binary classification models was the next step in our study. The purpose was to conduct external validation to assess the prediction capability, reproducibility, and generalizability of the HNN to new and diverse datasets. The evaluation of the predictive ability of the binary classification models was performed using an external validation set as the test set. The external dataset used consisted of 79 mixture data obtained from the literature and 30 drug combinations sourced from the DCDB. We utilized a training set comprising 20,568 virtual binary mixtures to train the models. These mixtures were developed based on the binary mixture data from MEG and CPDB. The use of such a large training set enabled comprehensive coverage of various chemical combinations, contributing to the robustness of the models. Among the methods tested, the HNN demonstrated the highest accuracy of 80.55% during the external validation (

Figure 3a). This accuracy represents the HNN's ability to classify carcinogenic and non-carcinogenic mixtures correctly. The HNN-generated model also achieved an AUC of 0.86, indicating a robust discriminatory power in distinguishing between the two classes (

Figure 3b). Moreover, the sensitivity of the HNN model was determined to be 66.29%, indicating its capacity to identify carcinogenic mixtures (

Figure 3c) accurately. In contrast, the RF (Random Forest) model exhibited the best average specificity of 99.5% during the external validation (

Figure 3d). This signifies the RF model's ability to correctly classify non-carcinogenic mixtures with high precision, minimizing false positive predictions.

The exceptional predictive performance observed during the external validation demonstrates the HNN method's ability to predict the carcinogenicity of chemical mixtures. The high accuracy, AUC, and sensitivity obtained by the HNN provide strong evidence of its efficacy in identifying potential carcinogens accurately. Furthermore, the RF model's excellent specificity further highlights its capability to distinguish non-carcinogenic mixtures reliably. In summary, the external validation process confirmed the binary classification models' predictive capability, reproducibility, and generalizability. The HNN model exhibited excellent accuracy, AUC, and sensitivity in predicting the carcinogenicity of chemical mixtures, while the RF model excelled in specificity. These findings instill confidence in the HNN method's flexibility to predict the carcinogenic potential of various unknown chemical mixtures.

Carcinogenicity Prediction using Multiclass Classification

To enable the prediction of categorical carcinogenicity of chemical mixtures, we next proceeded to develop multiclass classification models. For this purpose, categorical data were collected from multiple sources, including MEG, NTP, IARC, and JSOH. Detailed information regarding the data sources, collection, training, and test set preparation can be found in the methods section. The chemicals included in the multiclass models were initially categorized into three classes: class 0, representing noncarcinogens, class 1, indicating possibly carcinogens and not classifiable chemicals; and class 2, representing carcinogens and probably carcinogens. This classification scheme allowed for a comprehensive assessment of the carcinogenic potential of chemical mixtures.

To construct the training sets, compounds within each class were paired together, creating mixtures belonging to the same class. The training set comprised 20,000 binary mixtures, which served as a foundation for training the multiclass classification models. Additionally, 20% of the mixtures were randomly selected for each simulation to form the test set, ensuring an unbiased evaluation of the models' performance. The multiclass classification models were developed using various methods, including HNN, RF, Bagging, and AdaBoost. These methods encompassed a range of algorithms and techniques, each with its own strengths and characteristics.

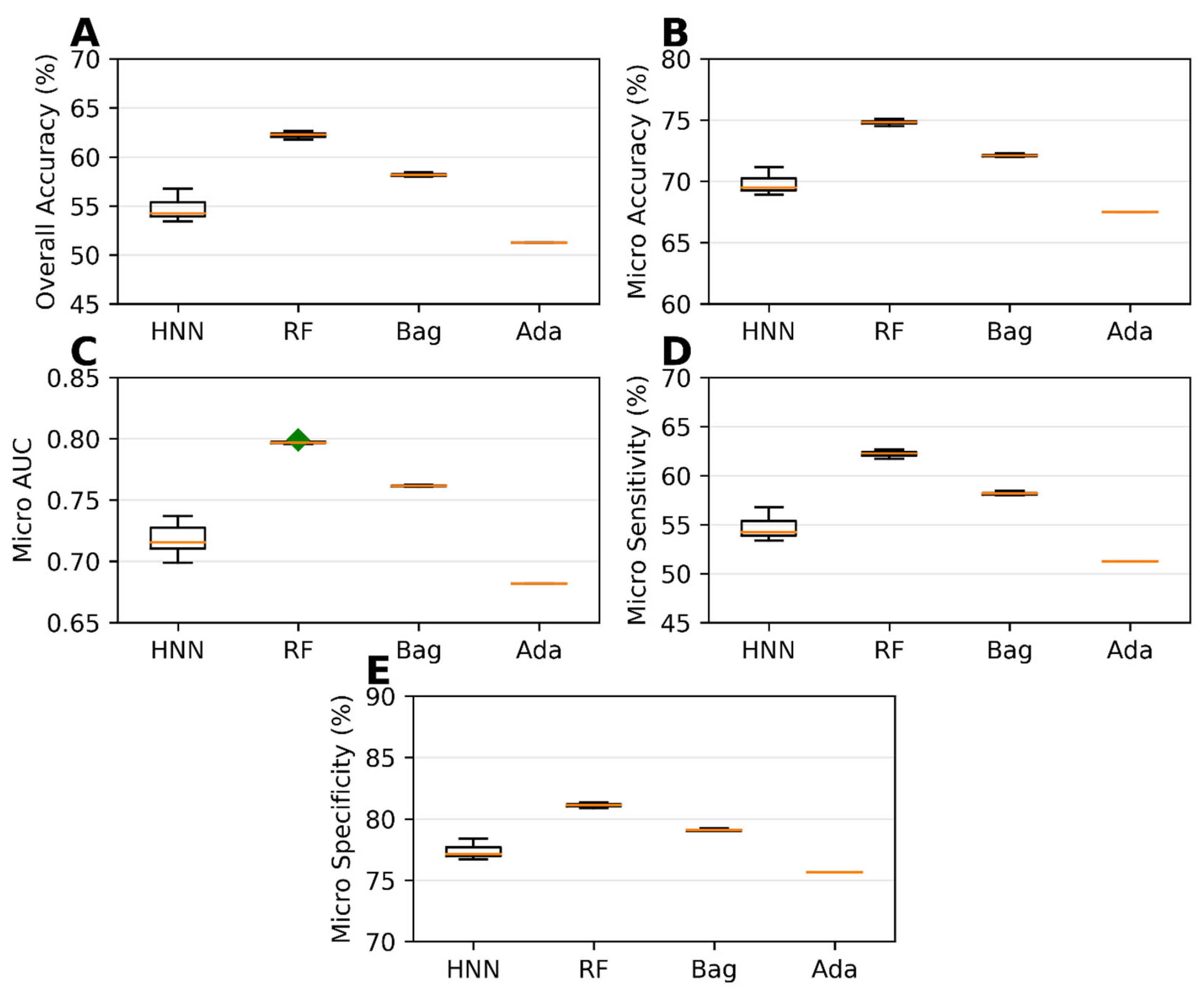

The HNN method exhibited the best predictive performance among the multiclass classification models. It achieved an overall accuracy of 96.03%, indicating its ability to correctly classify chemical mixtures into their respective classes (

Figure 4a). The micro accuracy was 97.35%, further highlighting the HNN method's capability to predict individual classes (

Figure 4b) accurately. Additionally, the HNN model achieved a micro AUC of 0.99, indicating a robust discriminatory power in distinguishing between the different classes (

Figure 4c). The micro sensitivity and micro specificity of the HNN model were found to be 96.03% and 98.01%, respectively (

Figure 4d and

4e), demonstrating its ability to identify both positive and negative cases correctly. In contrast, the AdaBoost method showed the lowest predictability among the multiclass classification models (

Figure 4). While the specific performance metrics for AdaBoost were not mentioned, it was observed that its predictive performance fell behind the other methods in terms of overall accuracy, AUC, sensitivity, and specificity. In summary, the development of multiclass classification models enabled the prediction of the categorical carcinogenicity of chemical mixtures. The HNN method exhibited superior predictive performance, achieving high accuracy, AUC, sensitivity, and specificity. This indicates its efficacy in accurately classifying chemical mixtures into their respective classes. On the other hand, AdaBoost showed the lowest predictability among the methods for multiclassification.

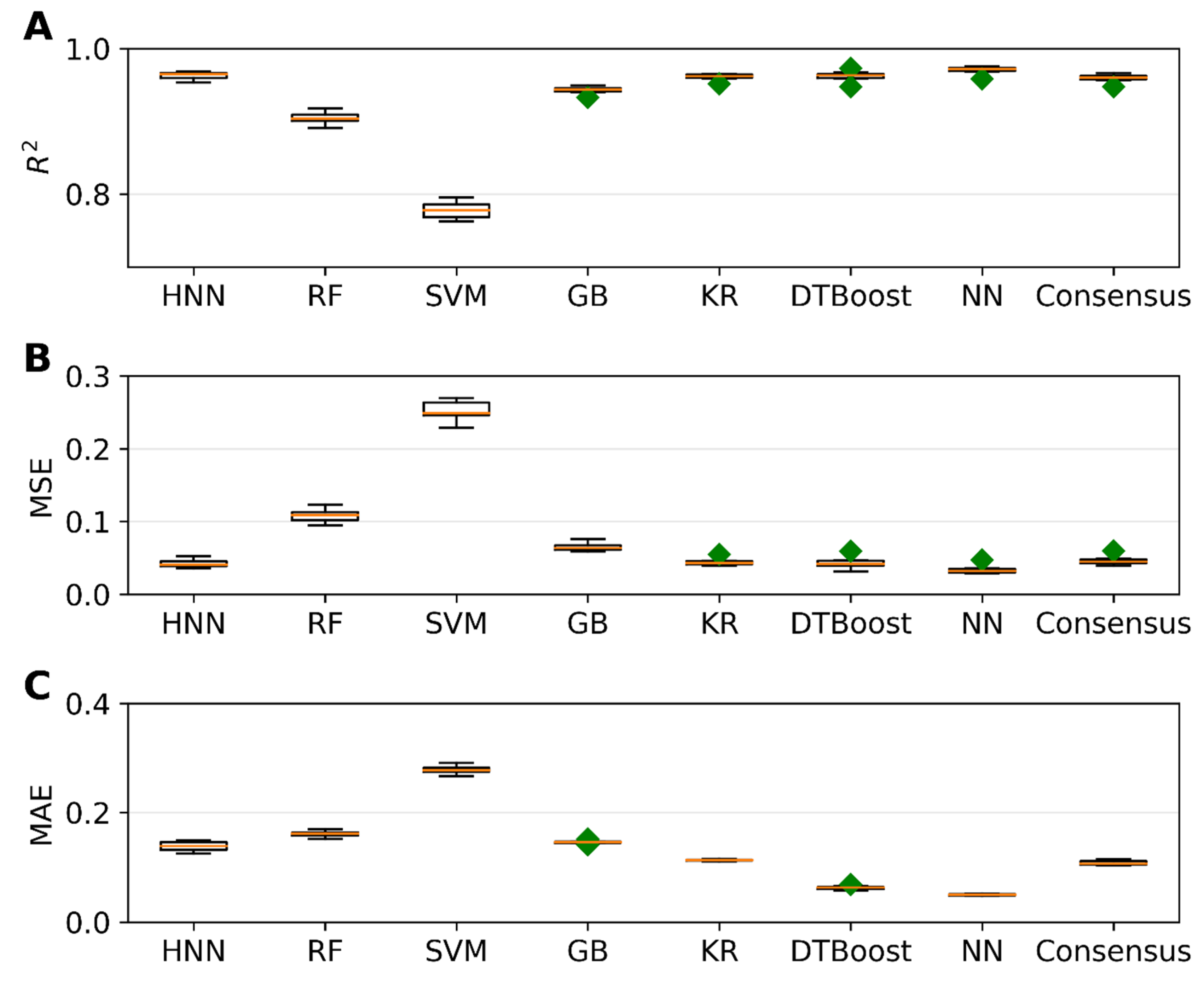

Carcinogenicity Prediction using Regression

Next, we aimed to predict carcinogenic potency by utilizing various regression models. To accomplish this, we employed the carcinogenic potency TD

50 data sourced from the CPDB, as outlined in the methods section. The regression models were developed using different methods, including HNN, RF, SVR, GB, KR, DT, and KN. To evaluate the performance of these regression models, we utilized three metrics: coefficient of determination (r

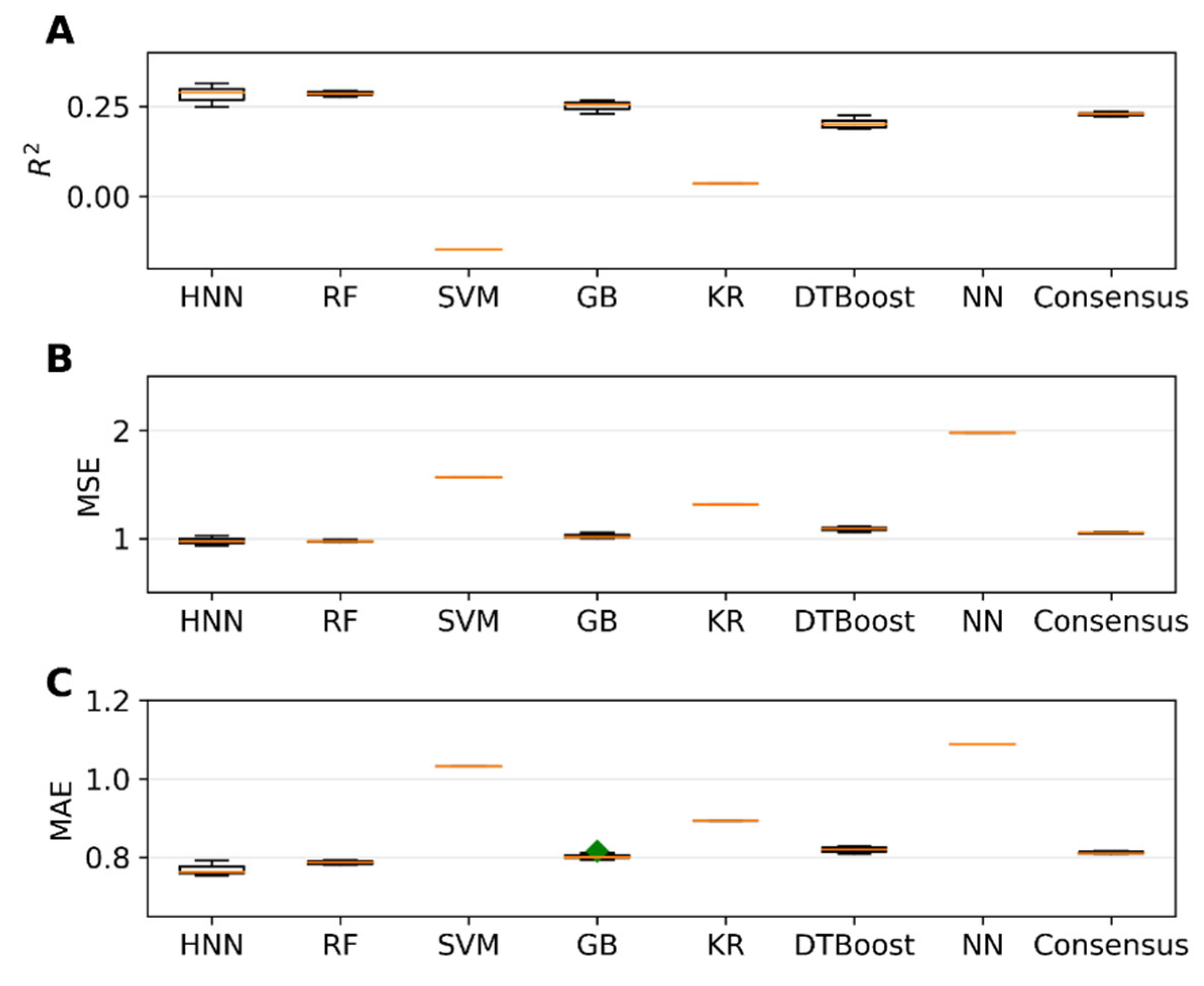

2), mean-square error (MSE), and mean absolute error (MAE). The results of our analysis are presented in

Figure 5. We randomly selected 20% of the 20,000 binary mixtures for each simulation as the test set, while the remaining 80% comprised the training set.

Figure 5a demonstrates that, except for the SVM method, all other methods achieved an r

2 value greater than 0.94. Notably, the HNN and NN methods exhibited the highest r

2, exceeding 0.96. Additionally, these methods yielded the lowest MSE of 0.03 (

Figure 5b) and MAE of 0.05 (

Figure 5c), respectively, showcasing their superior performance. In summary, by comparing various methods, we found that the HNN and NN approaches yielded the most accurate predictions, as indicated by their high r

2 values and low MSE and MAE scores. These findings highlight the potential of the HNN method in accurately estimating carcinogenic potency.

B) Predicting Carcinogenicity of Chemical Mixtures with Distinct Training and Test Set Data.

Next, after conducting initial tests on the predictive capability of the Hybrid Neural Network (HNN), we evaluated its performance alongside various machine learning methods for the distinct training and test data sets. To achieve this, we simulated training and test datasets comprising distinct chemical compounds. Our approach involved developing classification models by segregating compounds into the training and test sets, ensuring that each set consisted of unique compounds and combinations. By doing so, we ensured that none of the compounds present in the test set were included in the training set. This rigorous methodology was employed to avoid any potential biases or overlapping data between the training and test sets. It's important to note that we meticulously avoided selecting randomly chosen chemicals from the training set for each simulation to form the test set. This deliberate selection process ensured that the test set remained entirely independent, enabling a robust evaluation of the model's predictive capabilities. By adopting this comprehensive strategy, we aimed to provide a fair and unbiased assessment of the HNN's performance compared to other machine learning techniques in the context of chemical compound classification.

Carcinogenicity Prediction using Binary Classification

The single carcinogens obtained from the MEG and CPDB data sets were divided into training and test sets to predict the carcinogenicity of chemical mixtures. The binary mixtures were then formed by pairing one carcinogen with another carcinogen, while the binary noncarcinogen mixtures were created by pairing noncarcinogens. We employed the HNN, RF, Bagging, and AdaBoost methods to develop binary classification models using the training set, which consisted of 20,089 binary mixtures. Subsequently, we evaluated the performance of these models on the test set, which comprised 11,851 binary mixtures. Among the different methods, the AdaBoost models demonstrated the highest predictive performance. They achieved an accuracy of 98.16%, an Area Under the receiver operating characteristic Curve (AUC) of 0.996, a sensitivity of 97.07%, and a specificity of 99%. These results, as shown in

Figure 6, highlight the effectiveness of the AdaBoost approach in accurately classifying the carcinogenic potential of binary mixtures for the case of distinct data sets. Conversely, the performance of the HNN model was less accurate in this context which needs improvement in the distinct case which is discussed in the limitation section. It is worth noting that separating the training and test sets yielded improved predictive accuracy compared to the models developed without separating these sets (as shown in

Figure 2 and

Figure 6,

Supplementary Table S3). This suggests that the separation of data sets can contribute to better model performance, particularly in the case of binary classification for carcinogenicity prediction. In summary, we successfully predicted the carcinogenic potential of chemical mixtures by utilizing binary classification models. Moreover, separating the training and test sets further enhanced the accuracy of the models.

Carcinogenicity Prediction using Multiclass Classification

Carcinogenicity Prediction using Multiclass Classification involved the classification of single carcinogens obtained from MEG, NTP, IARC, and JSOH into different class categories: class 0, class 1, class 2, and class 3. This classification process is described in detail in the methods section. The single carcinogens were then divided into training and test sets before creating mixtures. The training set comprised 20,000 binary mixtures, while the test set consisted of 17,783 binary mixtures. Different multiclass classification models, namely HNN, RF, Bagging, and AdaBoost, were developed using the training set. These models were then evaluated for their predictive performance using the test set. Among the models, the RF models demonstrated the highest accuracy, micro accuracy, micro AUC, micro sensitivity, and micro specificity. Specifically, the RF models achieved an overall accuracy of 62.22%, a micro accuracy of 74.81%, a micro AUC of 0.79, a micro sensitivity of 62.22%, and a micro specificity of 81.11% (

Figure 7). HNN method performance was close to optimal. On the other hand, the AdaBoost method yielded the lowest performance metrics in this case of multi-classification, as shown in

Figure 7. However, it is noteworthy that when the training and test sets were separated, the highest overall accuracy obtained significantly decreased compared to the models built without separating these sets. This information is illustrated in

Figure 4 and

Figure 7, as well as in

Supplementary Table S4).

Carcinogenicity Prediction using Regression model

The single carcinogens from the CPDB data set were separated into training and test sets before forming their mixtures. The mixtures were formed with 20,000 binary mixtures in the training set, and the predictive performance of the models was tested for the 6,216 binary mixtures in the test set. The HNN, RF, SVR, GB, KR, DT, and KN-based regression models were developed for the 20,000 binary mixtures in the training set, and the predictive performance were tested on the 6,216 binary mixtures in the test set. The HNN method gave the highest r

2 of 0.38, and lowest MSE of 0.97 and the MAE of 0.76 (

Figure 8). In the regression model, the predictive performance of the models reduced drastically when the single chemicals were separated into training and test sets as compared to the regression models built without separating the training and sets (

Figure 5 and 8,

Supplementary Table S5).

In summary, several trends emerge when comparing the performance of machine learning models between distinct training and test datasets (where the training and test data are completely separated) and non-separated datasets (where the test data is randomly selected from within the training set). The predictive performance of the models shows the following patterns: a slight decrease for binary classification, a significant decrease for multiclass classification, and a drastic decrease for regression models.

To investigate deeper into these trends and explore the reasons behind them. In binary classification, the machine learning task involves predicting the carcinogenicity of a chemical, which means there are only two possible outcomes: carcinogen or non-carcinogen. When the training and test data are separated, the models tend to exhibit a slight decrease in performance. This can be attributed to the fact that the models are trained on a specific distribution of data and may struggle to generalize well to new instances in the test set.

Moving on to multiclass classification, the scenario becomes more complex as there are three possible outcomes: class 0, class 1, and class 2. In this case, when the training and test data are separated, the models experience a significant decrease in performance. The increasing number of classes to be represented poses a challenge for the models, as they need to learn more intricate decision boundaries and capture the distinctions among different classes. With the separation of training and test data, the models may have encountered only some classes during training, leading to reduced accuracy in predicting them during testing.

Regression models, which deal with continuous values and can yield an indefinite number of outcomes, exhibit a drastic decrease in performance when the training and test data are separated. Regression tasks involve predicting numerical values, such as predicting the toxicity level of a chemical. When a chemical present in the test set also appears in the training set, the models can make predictions with a higher degree of certainty, as they have learned patterns and correlations specific to that chemical. However, the uncertainty increases when a chemical in the test set is distinct from the training set (meaning it does not appear in the training set). The growing number of potential outcomes, combined with the lack of familiarity with the distinct chemical, makes it challenging for the models to predict its toxicity level accurately.

Taken together, when training and test data are separated, machine learning models experience varying degrees of decreased predictive performance. Binary classification models show a slight decrease, multiclass classification models experience a significant decrease, and regression models exhibit a drastic decrease. These trends can be attributed to factors such as the increasing number of classes or outcomes to be represented and the uncertainty that arises when encountering distinct instances in the test set that were not encountered during training. Understanding these trends can help guide designing and evaluating machine learning models in different scenarios, ensuring more accurate and reliable predictions.

4. Limitations

The performance of the Hybrid Neural Network (HNN) model in predicting the carcinogenicity of binary mixtures was found to be reduced when using different virtual mixtures in the training and testing data sets. This highlights the need for enhancements in several aspects of the model, including its architecture, hyperparameters, training process, and data representation. By addressing these limitations, the HNN model can be better tailored to the specific task of classifying the carcinogenic potential of binary mixtures. Despite outperforming other regression models and demonstrating optimal performance in multiclassification tasks, the HNN method still needs to achieve statistically superior results. The moderate accuracy observed in the binary classification of virtual mixtures, along with the limited explanatory power exhibited by the regression model on the distinct virtual mixture test data set, can be attributed to the inherent complexity and variability involved in accurately predicting carcinogenicity. Carcinogenicity is influenced by various factors, such as molecular properties, chemical interactions, and biological mechanisms, all of which contribute to the challenges faced by regression-based approaches in capturing the underlying relationships accurately.

Further, when individual chemicals are separated into distinct training and test sets, it can potentially undermine the HNN model's effectiveness in learning and generalizing from the data. This separation can lead to the loss of crucial information and disrupt the underlying patterns within the neural network. Moreover, it may overlook important contextual information related to the combined behavior of chemicals within mixtures, impeding the model's recognition of these chemicals' interconnectedness and collective influence on carcinogenic potential. Consequently, the model's capacity to capture the complex interplay of molecular properties, chemical interactions, and biological mechanisms contributing to carcinogenicity might be compromised. To overcome these challenges, strategies must be developed to acknowledge the holistic nature of binary mixtures. Instead of isolating individual chemicals, an approach that integrates the chemical components within the training and test sets can provide a more comprehensive representation of the underlying patterns. By preserving the combined behavior of mixtures, the model can more accurately capture the intricate relationships and interactions among the chemicals. This comprehensive approach enhances the model's predictive performance by effectively learning the complex interdependencies and synergistic effects within binary mixtures. Significant improvements can be achieved in the model's predictive performance by addressing the limitations posed by the separation of individual chemicals and implementing methodologies that preserve the holistic nature of binary mixtures. This comprehensive approach strengthens the model's ability to accurately predict the carcinogenicity of binary mixtures by capturing the intricate relationships and interactions among the chemicals. Additionally, including additional cases beyond the studied Case 1 and Case 2 in the binary classification models is expected to enhance the predictive capabilities of the HNN model. These additional cases, coupled with a comprehensive approach of separating the training and test data sets, will be incorporated into the next version of the model as part of ongoing improvements.

Additionally, to further enhance the overall predictive performance of the HNN, an extensive array of optimization techniques will be applied. This will involve fine-tuning the model's architecture and hyperparameters tailored explicitly to this dataset to achieve optimal performance. Potential improvements can be achieved by exploring adjustments to the number of hidden layers, neurons per layer, activation functions, and other architectural choices. Furthermore, refining the training process by optimizing the learning rate or incorporating regularization techniques may also lead to enhanced performance. To ensure the quality and representativeness of the training data, it is crucial to construct a diverse and well-balanced training set that adequately captures the essential characteristics of binary mixtures. To further improve the accuracy of the HNN, data augmentation techniques can be employed to introduce additional variations and increase the diversity of the training samples. Additionally, including additional relevant features holds the potential to enhance the accuracy of the HNN. For instance, incorporating molecular descriptors or biological data as additional features can provide comprehensive insights into the task of carcinogenicity prediction and significantly improve the model's performance.

In this study, we have considered equal doses or different concentrations of the component chemicals based on available literature. It has been well-documented that chronic exposure to mixtures of chemicals, which are individually non-carcinogenic at very low doses, can lead to carcinogenesis through synergistic interactions involving cancer-related mechanisms23. Thus, it would be advantageous to consider the individual doses of chemicals when predicting the carcinogenicity of binary mixtures and estimating the median effective dose of the component chemicals in regression models. This approach allows for a more accurate and realistic evaluation of mixture carcinogenicity. To achieve a more comprehensive understanding of mixture carcinogenesis, it is essential to model synergistic interactions that are specific to human cells24. Animal models may not accurately reflect human responses, and therefore, incorporating information specific to human cells in future predictions will contribute to a more reliable assessment of mixture carcinogenicity. Furthermore, this study serves as a proof-of-concept in developing classification-focused mixture models that combine elements of both whole mixture and component-based approaches. The ongoing development of the HNN, the updated version of the model, will incorporate all the aforementioned enhancements. Future iterations of the model will include complete dose-response data, mode of action, combined action (independent, synergistic, additive), and biological response data. These additions will enable a more robust evaluation of carcinogenicity in mixtures, providing a more comprehensive understanding of the complex interactions involved.

Figure 1.

The HNN, RF, Bagging, and AdaBoost models were utilized for binary classification and evaluated using the following statistical metrics: A) accuracy, B) AUC, C) sensitivity, and D) specificity. The models were applied to mixtures sourced from both the literature and the DCDB database.

Figure 1.

The HNN, RF, Bagging, and AdaBoost models were utilized for binary classification and evaluated using the following statistical metrics: A) accuracy, B) AUC, C) sensitivity, and D) specificity. The models were applied to mixtures sourced from both the literature and the DCDB database.

Figure 2.

Statistical Metrics of Binary Classification Models: The HNN, RF, Bagging, and AdaBoost models were assessed based on the following statistical metrics for assumption-based virtual binary mixtures generated from the MEG and CPDB databases of carcinogens and noncarcinogens: A) accuracy, B) AUC, C) sensitivity, and D) specificity.

Figure 2.

Statistical Metrics of Binary Classification Models: The HNN, RF, Bagging, and AdaBoost models were assessed based on the following statistical metrics for assumption-based virtual binary mixtures generated from the MEG and CPDB databases of carcinogens and noncarcinogens: A) accuracy, B) AUC, C) sensitivity, and D) specificity.

Figure 3.

Statistical metrics of the binary classification models (HNN, RF, Bagging, and AdaBoost) evaluated on the assumption-based virtual binary mixtures training dataset generated from the MEG and CPDB databases of carcinogens and noncarcinogens. A) Accuracy, B) AUC, C) Sensitivity, and D) Specificity. The external validation test set was developed using data collected from the literature and the DCDB database.

Figure 3.

Statistical metrics of the binary classification models (HNN, RF, Bagging, and AdaBoost) evaluated on the assumption-based virtual binary mixtures training dataset generated from the MEG and CPDB databases of carcinogens and noncarcinogens. A) Accuracy, B) AUC, C) Sensitivity, and D) Specificity. The external validation test set was developed using data collected from the literature and the DCDB database.

Figure 4.

Statistical metrics of multiclass classification models. A) Overall accuracy, B) Micro accuracy, C) Micro AUC, D) Micro sensitivity, and E) Micro specificity for assumption-based virtual binary mixtures training data set generated from MEG, NTP, IARC, and JSOH.

Figure 4.

Statistical metrics of multiclass classification models. A) Overall accuracy, B) Micro accuracy, C) Micro AUC, D) Micro sensitivity, and E) Micro specificity for assumption-based virtual binary mixtures training data set generated from MEG, NTP, IARC, and JSOH.

Figure 5.

displays the statistical metrics of various regression models, namely HNN, RF, SVR, GB, KR, DTBoost, NN, and Consensus, in relation to assumption-based mixtures. The metrics assessed include A) coefficient of determination (R2), B) mean squared error (MSE), and C) mean absolute error (MAE).

Figure 5.

displays the statistical metrics of various regression models, namely HNN, RF, SVR, GB, KR, DTBoost, NN, and Consensus, in relation to assumption-based mixtures. The metrics assessed include A) coefficient of determination (R2), B) mean squared error (MSE), and C) mean absolute error (MAE).

Figure 6.

Statistical metrics of binary classification models for assumption-based virtual binary mixtures. Models include HNN, RF, Bagging, and AdaBoost. Metrics: A) Accuracy, B) AUC, C) Sensitivity, and D) Specificity. Data sets generated from MEG and CPDB databases of carcinogens and noncarcinogens.

Figure 6.

Statistical metrics of binary classification models for assumption-based virtual binary mixtures. Models include HNN, RF, Bagging, and AdaBoost. Metrics: A) Accuracy, B) AUC, C) Sensitivity, and D) Specificity. Data sets generated from MEG and CPDB databases of carcinogens and noncarcinogens.

Figure 7.

Statistical metrics of multiclass classification models (HNN, RF, Bagging, and AdaBoost) for assumption-based virtual mixtures with separated training and test datasets: A) overall accuracy, B) micro accuracy, C) micro AUC, D) micro sensitivity, and E) micro specificity.

Figure 7.

Statistical metrics of multiclass classification models (HNN, RF, Bagging, and AdaBoost) for assumption-based virtual mixtures with separated training and test datasets: A) overall accuracy, B) micro accuracy, C) micro AUC, D) micro sensitivity, and E) micro specificity.

Figure 8.

The statistical metrics of the HNN, RF, SVR, GB, KR, DTBoost, NN, and Consensus based regression models A) coefficient of determination (R2), B) mean squared error (MSE), and C) mean absolute error (MAE) for the assumption-based mixtures with a separated data set.

Figure 8.

The statistical metrics of the HNN, RF, SVR, GB, KR, DTBoost, NN, and Consensus based regression models A) coefficient of determination (R2), B) mean squared error (MSE), and C) mean absolute error (MAE) for the assumption-based mixtures with a separated data set.