1. Introduction

The transportation industry in Canada is undergoing a technological revolution following the development and adoption of unmanned aircraft systems (UAS) [1]. A UAS is made up of an unmanned aerial vehicle (UAV) communicating through suitable devices with operators located at a ground control station (GCS) [2]. The application of UAS is expected to result in significant economic benefits due to their involvement in various economic sectors, ranging from personal operation to more advanced operations including search and rescue, infrastructure inspections, land mapping, environmental management, crisis management and monitoring [3]. The use of UAS is governed by strict regulatory frameworks that prioritize safety [4]. To guarantee safety, it is necessary to acquire and maintain situational awareness (SA) throughout the operation [5,6]. Existing Canadian government regulations mainly concern UAS operating in visual line-of-sight (VLOS), i.e. within maximum horizontal and vertical limits of 500 m and 120 m respectively [5,7]. During these operations, the pilot must keep an imaginary and uninterrupted straight line between themselves and the UAV in order to control its position in relation to its surroundings. In certain scenarios where weather and environmental conditions lead to a loss of visibility within the spatial limits defined for VLOS, the pilot can be assisted by other operators generally referred to as visual observers [5]. Their task is to maintain SA in operating contexts where the SA of the primary pilot is not optimal. They communicate in real time with the main pilot to transmit information relevant to the operation [5]. The presence of visual observers can also allow operations to be conducted in extended visual line-of-sight (EVLOS), which is defined by an extension of the authorized spatial limits for VLOS [7,8,9].

Following the consideration of VLOS and EVLOS, a past decomposition of SA for UAS operations placed the human at the center of the operation, making them the primary entity responsible for decision-making [6]. However, aerial transport is entering a new era in which UAVs will be able to serve large urban, suburban and rural areas through the application of advanced air mobility (AAM) [1]. AAM involves performing operations beyond visual line-of-sight (BVLOS), i.e. beyond the spatial limits which define VLOS and EVLOS [1,10].

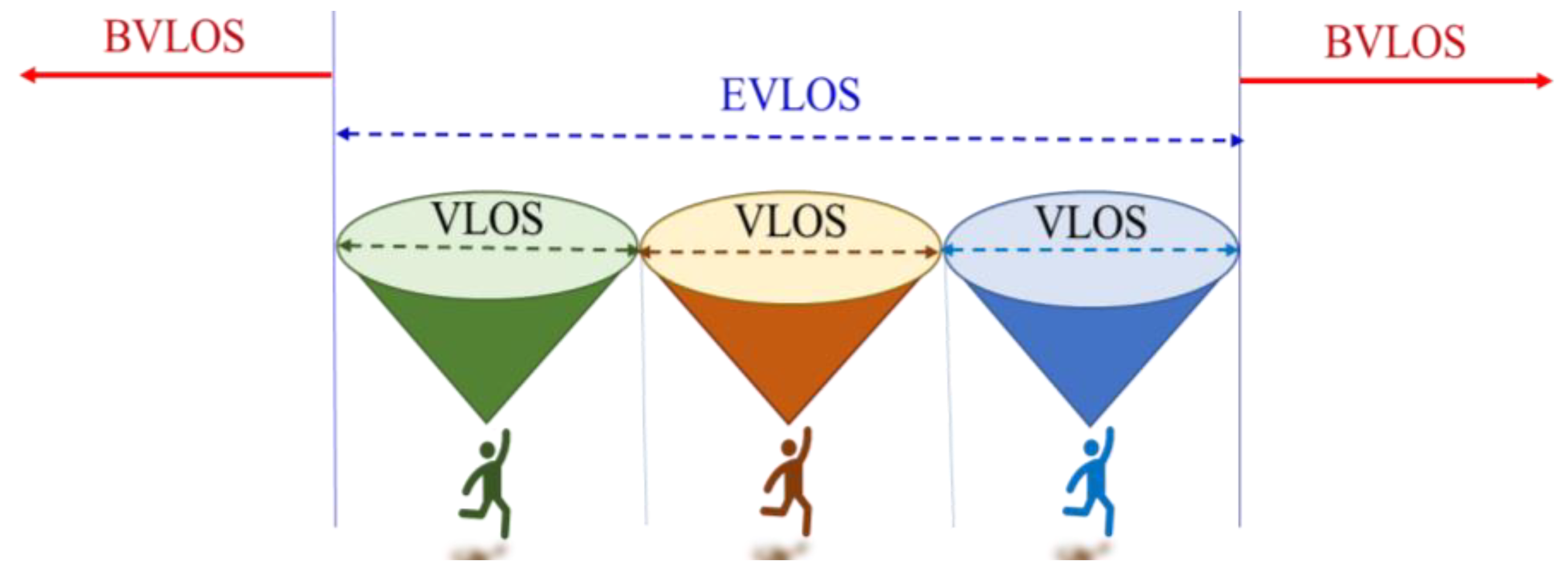

Figure 1 illustrates the spatial limits of VLOS, EVLOS and BVLOS.

AAM is characterized by the adoption of a highly dynamic and complex air transport system in which a network of UAVs is required to operate autonomously to accomplish their missions [10]. The development of a regulatory structure that covers all aspects of AAM requires a deep analysis of the problem. At the request of the National Aeronautics and Space Administration (NASA), the National Academies of Sciences, Engineering, and Medicine (NASEM) conducted a study to assess the issues and challenges associated with the AAM vision [10]. The NASEM study found that, in addition to the regulatory and societal issues, close attention should be paid to the technical and technological aspects of the safe management of AAM [10]. In anticipation of these needs, NASA has set up the UAS traffic management system, a platform that takes advantage of digitalization to facilitate automatic air traffic management [11,12]. The UAS traffic management system assumes that UAVs operating in AAM are intended to be autonomous, i.e. capable of making decisions and taking actions while communicating directly with each other, with the GCS and with other airspace occupants in order to achieve operational safety requirements. Safety issues can only be addressed if the task of acquiring and maintaining SA is not limited to the operators located at the GCS, but is also carried out individually by each UAV [10]. This includes responsibility for making decisions and performing actions. There is therefore a need to analyze and understand the key aspects of SA as they relate to AAM. Such an analysis is based on the principle that AAM is a dynamic context in which each autonomous UAV must adjust itself according to its environment in order to ensure its own safety, that of other occupants of the airspace, and that of other elements of the environment, in the air or on the ground [10]. The purpose of this article is therefore to propose a theoretical framework that represents an SA model associated with the UAV in AAM. With this work, we intend to contribute to research which will allow the realization of AAM, and we also expect to impact the safety of UAV operations on two main levels. Firstly, the SA decomposition contained in this paper may be used as a safety checklist for UAVs before BVLOS operations; and secondly, this contribution may inform the establishment or updating of regulations relating to BVLOS operations.

This paper is structured as follows. We begin by discussing SA in Section 2. Then, in Section 3, we review the technologies and procedures that are currently used or that should be developed to facilitate the autonomy of UAVs. From there, we extract SA information relevant to the safety of UAV operations and present it in a goal-directed task analysis (GDTA) diagram (see Section 4). In Section 5, we use the Systems Modeling Language (SysML) to produce a high-level representation of the AAM. To this end, we present an overall structural vision of the AAM system in a block definition diagram (BDD) which highlights all the entities involved in the system and their relationship to the UAV. We continue with an activity diagram in which we model the overall behavior that UAVs in AAM should exhibit in order to achieve the SA goals described in Section 4. In Section 6, we present a case study in which we analyze a UAV to determine whether the technologies it uses are sufficient to achieve all the SA requirements necessary for safe operations. We then discuss this analysis and make some suggestions for the further use of this work. Finally, we end this paper with a conclusion in which we present some directions to be explored in future research.

2. Situational awareness

The concept of situational awareness (SA) applies in operational conditions which require, from one or more entities, the perception, filtering and organization of information in order to guide decision-making [13]. This occurs in complex adaptive systems characterized by “a dynamic network of entities acting simultaneously while continuously reacting to each other’s actions” [14]. The proper functioning of such systems requires communication and well-developed coordination between the various entities that constitute them. Interactions are therefore carried out according to the knowledge that each entity has of the system and the environment at each moment. The work of Endsley et al. [15] on SA in dynamic systems has long been considered a point of reference in this field, and specifies that the process of acquiring and maintaining SA takes place at three levels [15,16]:

Perception of the status, attributes, and dynamics of the elements of the environment that are relevant to understanding a specific situation.

Understanding of the meaning and importance of the elements perceived in the first stage, depending on the situation and the intended goals.

Projection which facilitates proactive decision-making and the anticipation of possible consequences through prediction of the future state of the situation according to the dynamics of the elements perceived and understood at the two previous levels [15,16].

These three levels of SA guide the decisions to be made and the actions to be taken to achieve the goals of the entity concerned.

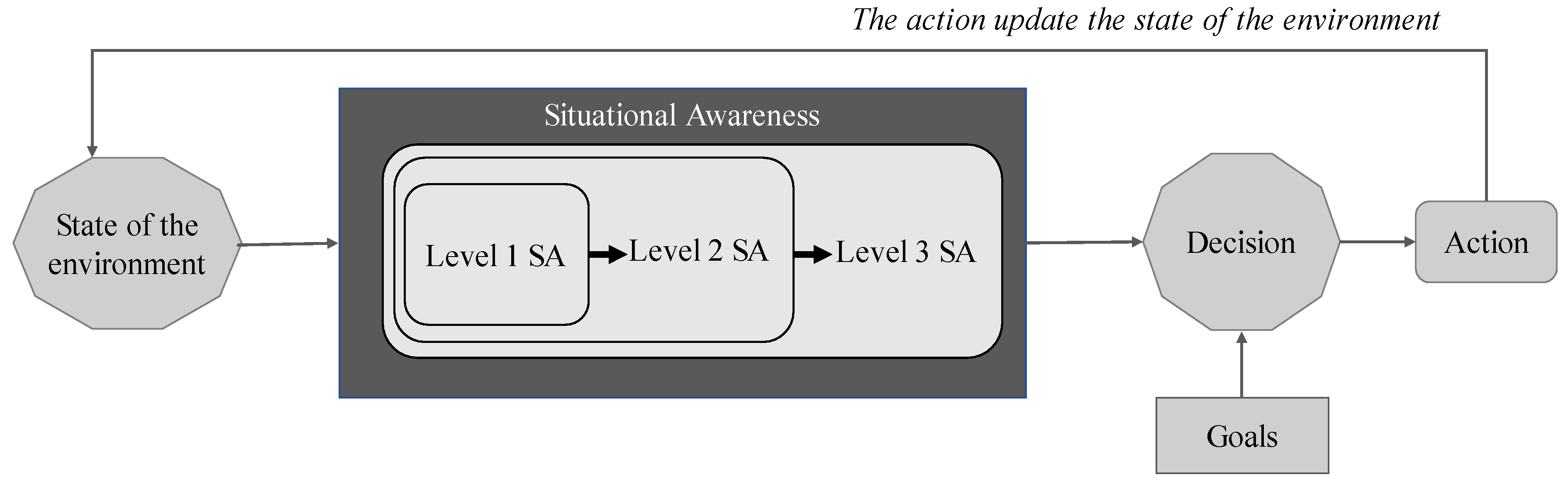

Figure 2 presents a simplified representation of the SA model devised by Endsley et al. [15].

To better address the issue of SA in AAM, we assume that each autonomous UAV must consider itself to be the central entity in the system. As such, its main duty is to keep the AAM safe. To do so, it must employ different technologies and procedures which allow it to perceive and understand the AAM system in order to make effective decisions during the operation. In the next section, we analyze the technologies and procedures used for BVLOS operations, which are therefore necessary in AAM.

3. Analysis of technologies and procedures for beyond visual line-of-sight operations

Recent studies have reviewed the technologies and procedures used to maintain control over operations beyond visual line-of-sight (BVLOS), and to capture, analyze and convey information relevant to the safety of BVLOS operations [7,8,9,17,18]. We have analyzed these technologies and procedures and categorized them into four main groups: command and control of the UAV, detect-and-avoid (DAA), weather detection, and knowledge of the state of the UAV. We will now describe each of these groups.

3.1. Command and control of the UAV

Although autonomy is essential for UAVs in AAM, the presence of one or more pilots-in-command (PIC) at the ground control station (GCS) remains necessary to maintain command and control of the UAV. This requires command-and-control (C2) links to be maintained between the GCS and the autonomous UAV throughout the duration of the operation [19]. The main C2 links are the autopilot-GCS link, the manual radio control (RC)-GCS link, and the first-person view (FPV) link [17].

The autopilot-GCS communication link allows the PIC located at the GCS to maintain control over the general mission parameters of the UAV, such as its spatial location, its trajectory, and mission progress [20,21]. The RC-GCS link allows the PIC to take manual control of the aircraft at any time [22]. If one of these links is lost, an emergency procedure such as return-to-land (RTL) or a flight termination system (FTS) may be triggered [17,23,24]. The RTL procedure commands the UAV to return when the autopilot-GCS link has been lost for a certain period of time (about ten seconds) [17]. The goal of the RTL procedure is to try to re-establish this link to prevent the FTS procedure from being triggered [17,23,24]. If the autopilot-GCS link is lost for too long (about two minutes, for example) and the RC-GCS link is also lost, the FTS procedure stops the UAV’s motor and leads to the safe destruction of the aircraft [17,24]. These procedures help to prevent the UAV from flying outside authorized limits without supervision. Authorized flight limits are defined by a geo-fence function, which fixes the minimum and maximum altitudes of the flight and delimits the spatial area of operation using a set of GPS points around the intended flight area [17]. FTS and RTL procedures can also be manually triggered by the PIC at the GCS.
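The link-loss logic described above can be sketched as a small decision function. This is a minimal illustration, not the procedure of any particular autopilot: the names `LinkStatus`, `Action` and `select_emergency_action` are hypothetical, and the two timeouts simply reuse the approximate figures quoted in the text (about ten seconds and about two minutes).

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    RTL = "return-to-land"
    FTS = "flight termination"


# Illustrative thresholds based on the approximate values quoted in the text;
# real values depend on the platform and the operator (assumption).
RTL_TIMEOUT_S = 10.0
FTS_TIMEOUT_S = 120.0


@dataclass
class LinkStatus:
    autopilot_lost_s: float  # seconds since the autopilot-GCS link was lost (0 if healthy)
    rc_link_up: bool         # True while the RC-GCS link is available


def select_emergency_action(status: LinkStatus) -> Action:
    """Pick the emergency procedure implied by the current C2 link status."""
    # FTS only when the autopilot link has been lost for too long AND
    # manual control through the RC-GCS link is no longer possible.
    if status.autopilot_lost_s >= FTS_TIMEOUT_S and not status.rc_link_up:
        return Action.FTS
    # A shorter loss of the autopilot link triggers RTL to try to re-establish it.
    if status.autopilot_lost_s >= RTL_TIMEOUT_S:
        return Action.RTL
    return Action.CONTINUE
```

For example, `select_emergency_action(LinkStatus(15.0, True))` selects RTL, while a loss of 130 seconds combined with a lost RC-GCS link selects FTS. Manual triggering by the PIC is left out of the sketch.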

The FPV link is used to keep the PIC visually aware of the situation around the UAV [25,26]. The UAV is equipped with a camera that transmits a video stream to the GCS. This video stream allows the PIC to perceive the scene as if they were on board the aircraft [25]. The PIC is thus provided with information including (but not limited to) the aircraft's position, altitude, speed, direction and power consumption [17]. FPV allows the PIC at the GCS to visually monitor the environment around the aircraft in order to, for example, avoid possible dangers by taking manual control of the aircraft when necessary [27]. To avoid the risk of collisions, recently produced UAVs integrate technologies and procedures which allow them to detect and avoid obstacles.

3.2. Detect and Avoid

During an operation, each UAV must ensure its own safety and that of the other occupants of the airspace [28]. To do so, it must be equipped with technological devices and functions which allow it to detect objects in a limited spatial area in order to anticipate any risk of collision [17,28]. Commonly used technologies include radar for detecting non-cooperative traffic obstacles (buildings, hot air balloons, etc.) and automatic dependent surveillance-broadcast (ADS-B) for cooperative traffic detection [17,29,30]. For cooperative traffic, sensors allow the UAV to self-determine and broadcast its own spatial information such as its position and altitude while cooperating with other objects in the operating context to receive their information [17,31,32]. The detect-and-avoid (DAA) process can be summarized in three main steps: sense, detect and avoid [31]. The sense step is carried out by sensors which collect the spatial locations of obstacles, their speed and their rate of acceleration or deceleration [33]. Based on this information, the obstacles likely to collide with the UAV are identified in the detect step. In the avoid step, the UAV can reroute, or trigger the FTS procedure described in Section 3.1.
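One common way to implement the detect step is a closest-point-of-approach test on the tracks gathered in the sense step. The sketch below is only an illustration under simplifying assumptions that are not in the source: motion is two-dimensional, velocities are constant (acceleration is ignored), and the separation threshold and look-ahead horizon are arbitrary placeholder values. The names `Track`, `closest_approach` and `detect` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Track:
    x: float   # position east, metres
    y: float   # position north, metres
    vx: float  # velocity east, m/s
    vy: float  # velocity north, m/s


def closest_approach(own: Track, other: Track) -> Tuple[float, float]:
    """Return (time_s, distance_m) of closest approach, assuming constant velocity."""
    rx, ry = other.x - own.x, other.y - own.y      # relative position
    vx, vy = other.vx - own.vx, other.vy - own.vy  # relative velocity
    v2 = vx * vx + vy * vy
    # If there is no relative motion, the current distance is the closest one.
    t = 0.0 if v2 == 0.0 else max(0.0, -(rx * vx + ry * vy) / v2)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def detect(own: Track, sensed: List[Track],
           min_separation_m: float = 50.0, horizon_s: float = 30.0) -> List[Track]:
    """Detect step: keep the sensed tracks that are predicted to come too close."""
    threats = []
    for track in sensed:
        t, d = closest_approach(own, track)
        if t <= horizon_s and d < min_separation_m:
            threats.append(track)
    return threats
```

With the UAV at the origin flying east at 10 m/s, an obstacle 200 m ahead flying west at 10 m/s is flagged (closest approach after 10 s at zero distance), whereas an obstacle 100 m to the north that is moving away is not. The avoid step would then choose between rerouting and an emergency procedure.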

3.3. Detection of weather conditions

The impact of meteorological conditions on operations is a crucial element of AAM. Weather conditions such as clouds, mist, fog or rain can degrade the quality of information transmitted in FPV, resulting in poor SA [6]. In some cases, infrared cameras can improve SA around the UAV [34]. Other weather conditions such as low temperatures and strong winds can lead to batteries draining rapidly or the deterioration of electronic components [35]. Strong winds can compromise the safety of an operation by negatively influencing the stability of the UAV and the DAA [6,17,18,35]. This is because BVLOS operations include small vehicles with limited power, as well as low maximum take-off mass [6,17,18,35]. For SA of weather conditions to be effective, Jacob et al. [18] specifically proposed that aerial vehicles should be equipped with real-time predictive capabilities, in order to detect wind speed and direction. Then, weather conditions unfavorable to the continuation of operations could be detected, and the UAV could take a safe action such as waiting in a secure area or executing an RTL or FTS procedure [35]. In order to execute these corrective actions, it is imperative for the UAV to be in good working condition. We highlight this issue in the next section.

3.4. Awareness of the state of the UAV

The malfunction of one of the components of the UAV can represent a major risk for the safety of the AAM. Thus, in addition to the technologies and procedures for the external control of the environment described above, the UAV must also know about its state at each moment before and during the flight. Both the PIC [6] and the UAV itself must ensure the proper functioning of all its essential components (batteries, motor, cameras, propellers, wheels, etc.) [36]. If one of its components is malfunctioning, remedial actions such as RTL or FTS procedures must be taken quickly to maintain the safety of the AAM.

Beyond the four categories of SA described in Sections 3.1, 3.2, 3.3 and 3.4, the UAV must also have information on the characteristics of the operating area. More specifically, the spatial operating limits must be defined so that it can respect the limits of authorized airspace, the topography, the relief, the maximum flight altitude achievable in the area, the boundaries of the area, as well as the presence of external objects (water bodies, airfields, manned aircraft, reserve landing sites in case of precautionary or emergency landing) [4,5].
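To make the notion of spatial operating limits concrete, the sketch below combines an altitude band with a polygon of boundary points, in the spirit of the geo-fence function described in Section 3.1. It is an assumption-laden illustration: the class `GeoFence` is hypothetical, coordinates are treated as planar (reasonable only for small areas), and the point-in-polygon test is the standard ray-casting method rather than anything prescribed by the source.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class GeoFence:
    vertices: List[Tuple[float, float]]  # boundary points as (lat, lon), in order
    min_alt_m: float
    max_alt_m: float

    def contains(self, lat: float, lon: float, alt_m: float) -> bool:
        """Return True if the position lies inside the fence and the altitude band."""
        if not (self.min_alt_m <= alt_m <= self.max_alt_m):
            return False
        inside = False
        n = len(self.vertices)
        for i in range(n):
            lat1, lon1 = self.vertices[i]
            lat2, lon2 = self.vertices[(i + 1) % n]
            # Does this edge cross the line of constant longitude through the point?
            if (lon1 > lon) != (lon2 > lon):
                lat_cross = lat1 + (lon - lon1) * (lat2 - lat1) / (lon2 - lon1)
                if lat < lat_cross:
                    inside = not inside  # each crossing toggles inside/outside
        return inside
```

A UAV could evaluate `fence.contains(lat, lon, alt)` on every position update and start a corrective action as soon as the result becomes `False`.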

In this section, we have analyzed the technologies and procedures necessary for BVLOS operation. This analysis allowed us to extract four general SA goals that each UAV should accomplish in the AAM. These goals are associated with the four groups of technologies and procedures that we have identified (UAV command and control, DAA, weather detection, and UAV status). In the next section, we represent these requirements in a decomposition of UAV SA from the AAM safety perspective.

4. Decomposition of UAV-related situational awareness

In this section, before presenting our UAV-related SA decomposition according to the AAM vision, we discuss a previous decomposition of SA as it relates to UAV flight operations in general [6].

4.1. Related work

A previously published SA decomposition related to UAV operations approached SA from a human-UAV interaction perspective [6]. The SA requirements identified by that decomposition concerned the spatial location of the UAV vis-à-vis other airspace objects, the weather conditions around the UAV, the state of the UAV in terms of its logic (RTL and FTS), the UAV components (the camera, for example), the mission information, the commands necessary to direct the UAV, and the UAV's ability to execute those commands. Emphasis was placed on the SA of the human vis-à-vis the UAV. Indeed, the only requirements associated with the UAV were that it understood the commands received remotely from the pilot, and that it executed the pre-programmed safety procedures (RTL and FTS) if necessary. The authors performed an experiment in which the objective was to examine the incidents encountered during operations in order to identify the SA faults that caused them [6]. According to the results, the incident most associated with the operational logic of the UAV was a crash; other incidents were all associated with poor acquisition of SA by the human operators, particularly in terms of spatial location, mission information, weather conditions, and the level of confidence attributed to the UAV. These incidents included the UAV becoming stuck in orbit, confusion when trying to avoid collisions, imprecise tracking of spatial locations, inconsistency between the camera and control displays, and operational information being misremembered by humans.

This examination of SA in a flight operation context clearly separates the SA of the human from that of the UAV, considering the human as the central entity in the operation and the entity mainly responsible for the acquisition and maintenance of SA to ensure flight safety. The underlying logic gives the human primary responsibility for making decisions and taking actions. We speculate that such an approach to SA in the context of UAV operations was due to UAV technologies available at that time. Today’s technology has made possible more autonomous UAVs that can perceive their environment themselves, analyze it, make decisions, and act. This will potentially solve the problem of human error when acquiring SA during operations. Another SA analysis should therefore be performed which considers the UAV as the main entity and emphasizes its self-localization in the airspace, its communication with the GCS, and its means of making decisions and undertaking actions to ensure the safety of BVLOS operations. This analysis is the focus of this work and is presented in the following section.

4.2. UAV-related situational awareness in advanced air mobility

Recent technological advances and the AAM vision mean that it is no longer a question of dividing the responsibility for SA between the UAV and the human, but of attributing it entirely to the UAV, which reports to the pilots-in-command (PIC) located at the GCS. In addition, like the PICs, the UAV operating in AAM must be responsible for making decisions and taking consequent actions, under the supervision of the PICs. Thus, in this section, we present a new decomposition of the SA goals that UAVs operating in AAM must achieve to ensure safe operation. To perform this decomposition, we first considered the SA goals outlined in section 4.1. In addition, we extracted other SA requirements for consideration from the analysis of the technologies and procedures necessary for BVLOS detailed in section 3, particularly DAA and communication with the GCS.

Following the approach proposed by Endsley et al. [13], designing for SA requires the goals attached to the different tasks of the entity in which we are interested to be initially defined. These goals can be presented in a goal-directed task analysis (GDTA) diagram. The GDTA diagram identifies and prioritizes goals and decisions along with the elementary SA requirements necessary to resolve them [13]. The root of the GDTA tree is the main goal of the studied entity, the internal nodes are a hierarchy of sub-goals (represented by rectangular shapes) or decisions (represented by hexagonal shapes), and the leaves are the elementary SA requirements necessary for the achievement of these sub-goals and consequently the main goal. The goal, sub-goals and SA requirements are defined in relation to the other entities in the system [13].

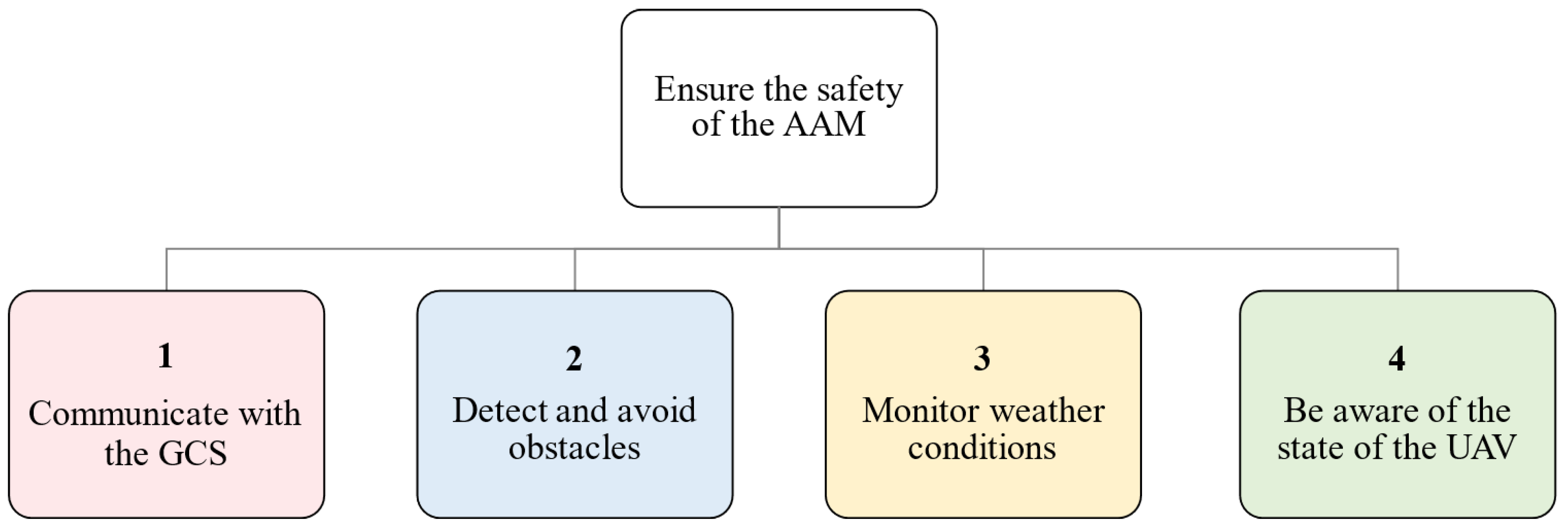

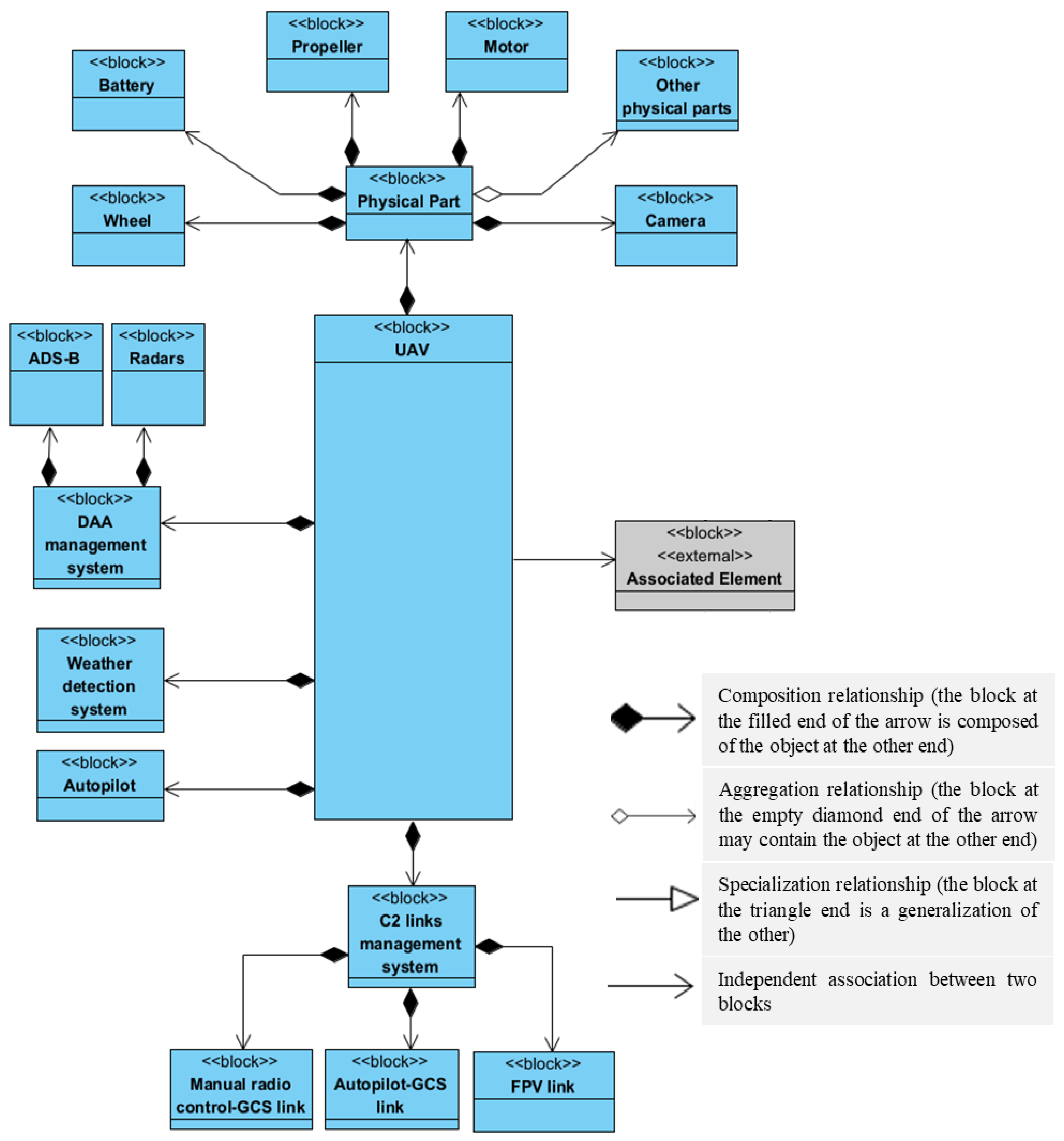

As we have emphasized, the UAV is the central entity of the AAM system. Its main goal is to ensure the safety of the AAM. To achieve this, it must be able to: (1) communicate with the GCS, (2) detect and avoid obstacles, (3) monitor weather conditions, and (4) be aware of its state. We illustrate these four sub-goals in Figure 3, which represents the first level of the GDTA.
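The tree structure of a GDTA can be captured with a small recursive data type. The sketch below is one possible encoding, not the authors' tooling: the class `GdtaNode` and its `kind` labels are hypothetical. It reproduces the main goal and the four sub-goals listed above, and attaches to sub-goal (1) the status of the three C2 links introduced in Section 3.1 as example SA requirements.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GdtaNode:
    label: str
    kind: str  # one of "goal", "sub-goal", "decision", "requirement"
    children: List["GdtaNode"] = field(default_factory=list)

    def requirements(self) -> List[str]:
        """Collect the labels of all SA requirements found under this node."""
        found = [self.label] if self.kind == "requirement" else []
        for child in self.children:
            found.extend(child.requirements())
        return found


gdta = GdtaNode("Ensure the safety of the AAM", "goal", [
    GdtaNode("Communicate with the GCS", "sub-goal", [
        GdtaNode("Status of the autopilot-GCS link", "requirement"),
        GdtaNode("Status of the RC-GCS link", "requirement"),
        GdtaNode("Status of the FPV link", "requirement"),
    ]),
    GdtaNode("Detect and avoid obstacles", "sub-goal"),
    GdtaNode("Monitor weather conditions", "sub-goal"),
    GdtaNode("Be aware of its own state", "sub-goal"),
])
```

Calling `gdta.requirements()` walks the tree and returns the leaf requirements, which is the kind of flat list that could serve as a pre-flight checklist.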

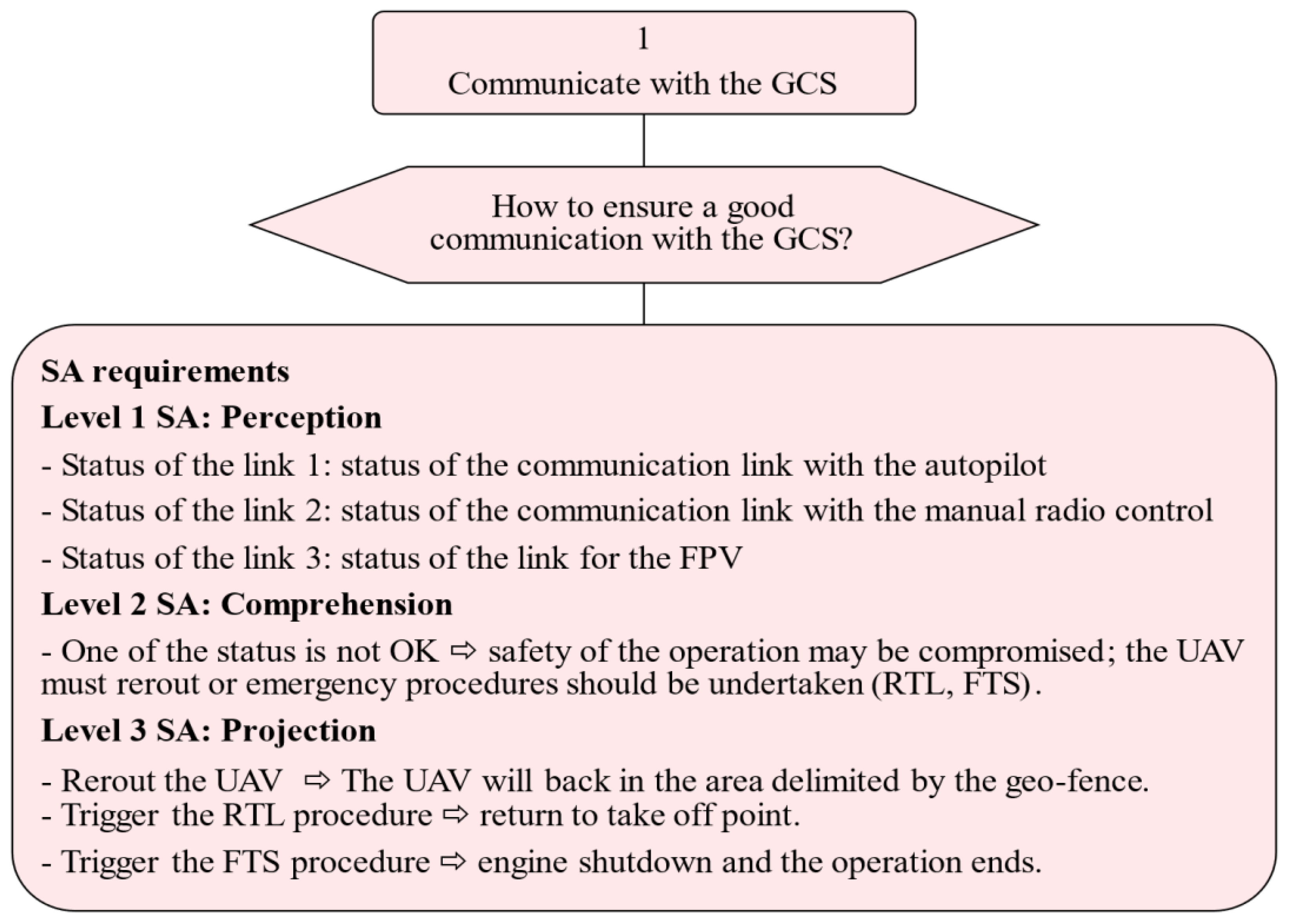

Figure 4 illustrates the decomposition of SA sub-goal (1): communicate with the GCS. To achieve this sub-goal, the UAV must be able to perceive the status of its C2 links (autopilot-GCS, RC-GCS, and FPV). If one link is malfunctioning, it must take corrective action to maintain the safety of the AAM (by adjusting its behavior, rerouting, or performing RTL or FTS procedures).

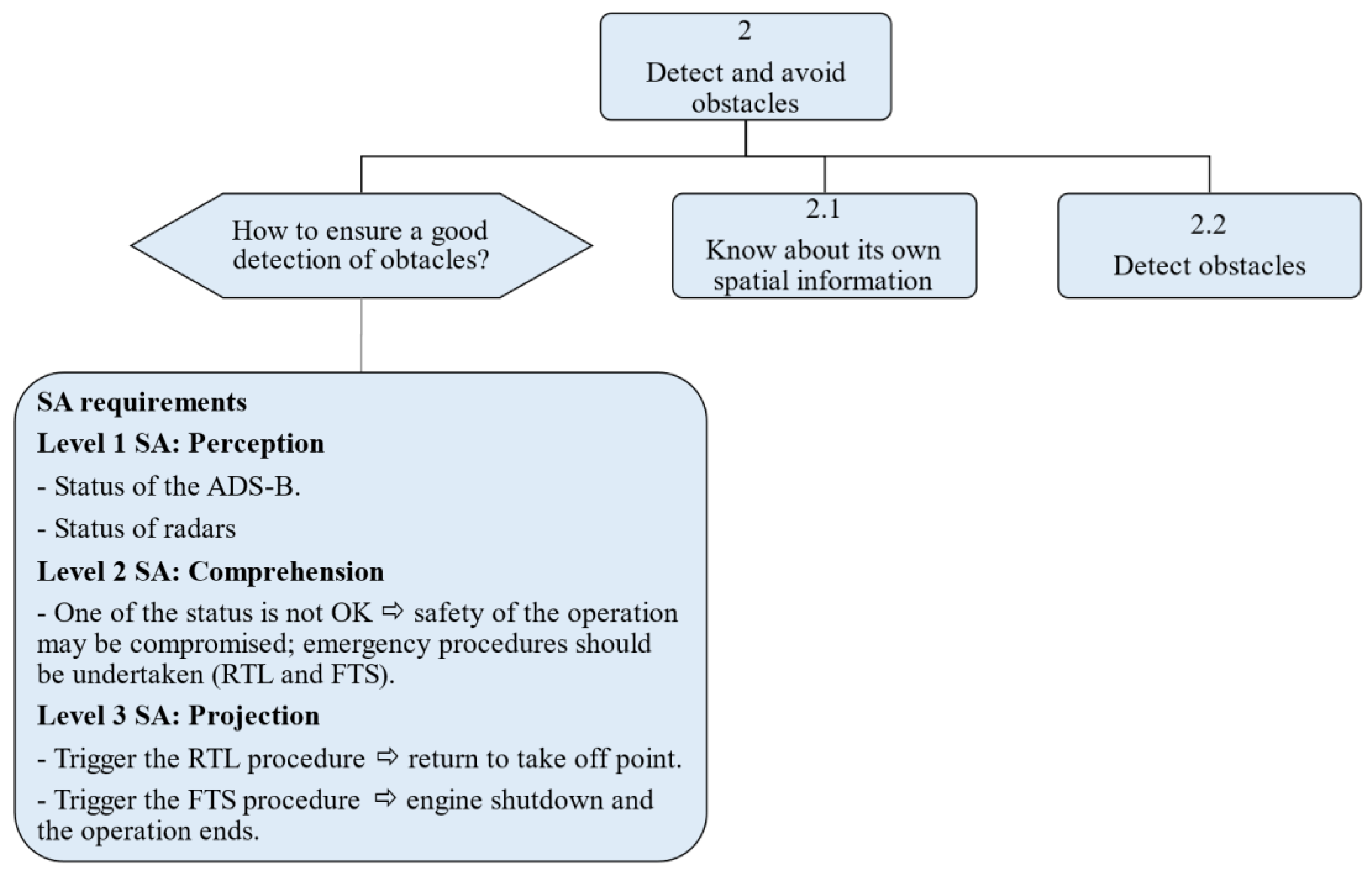

Figure 5 shows the decomposition of SA goal (2): detect and avoid obstacles. This sub-goal is broken down into a decision (represented by a hexagonal shape) and two other sub-goals denoted as sub-goals 2.1 and 2.2. The UAV must decide how to ensure that the technologies necessary to detect and avoid cooperative and non-cooperative traffic (ADS-B and radar respectively) are working properly. If there is a malfunction, it will take corrective action (RTL or FTS) to maintain the safety of the AAM.

In

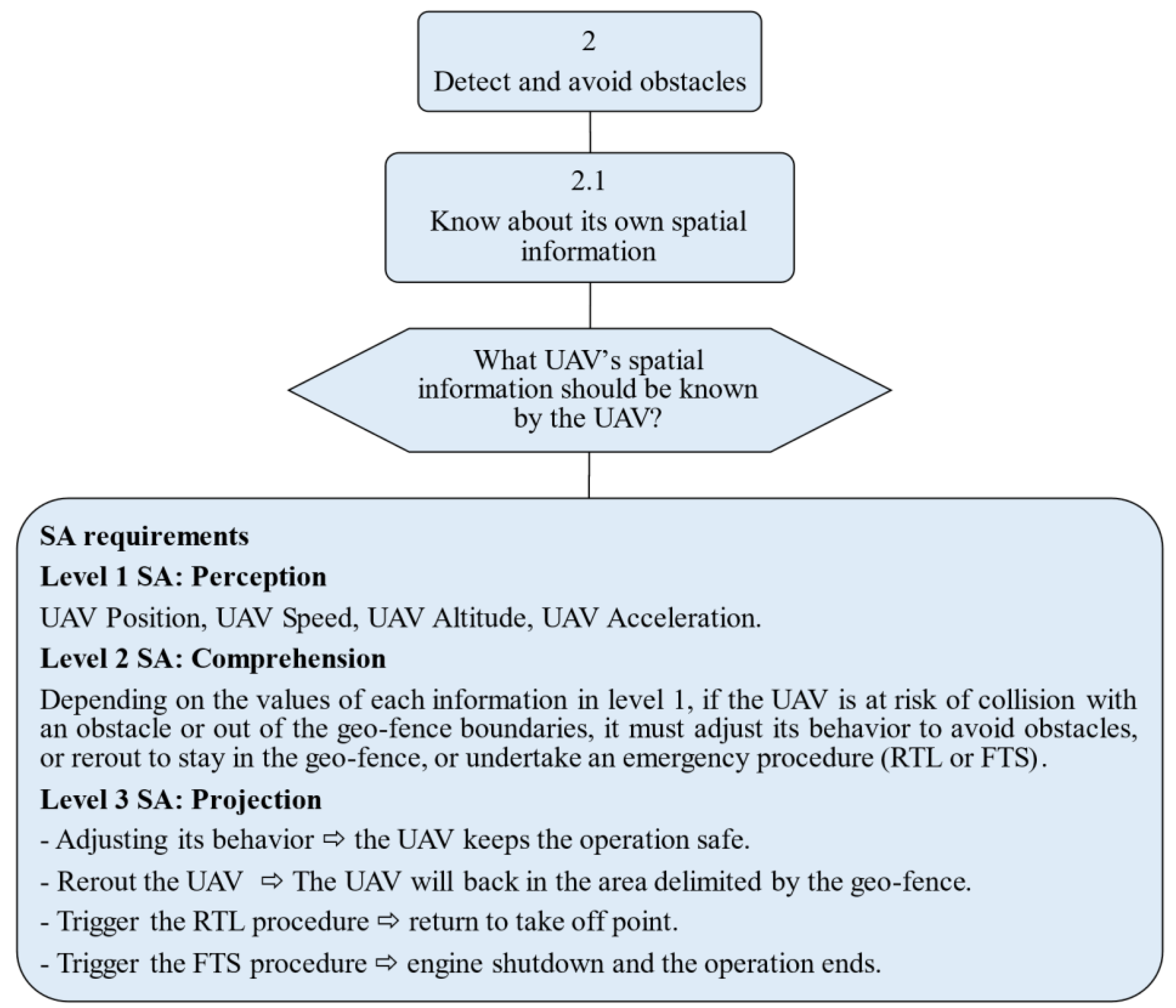

Figure 6 and

Figure 7 it is assumed that radar and ADS-B are working as expected. In

Figure 6, the UAV thus acquires its own spatial information (position, speed, altitude, and acceleration). In

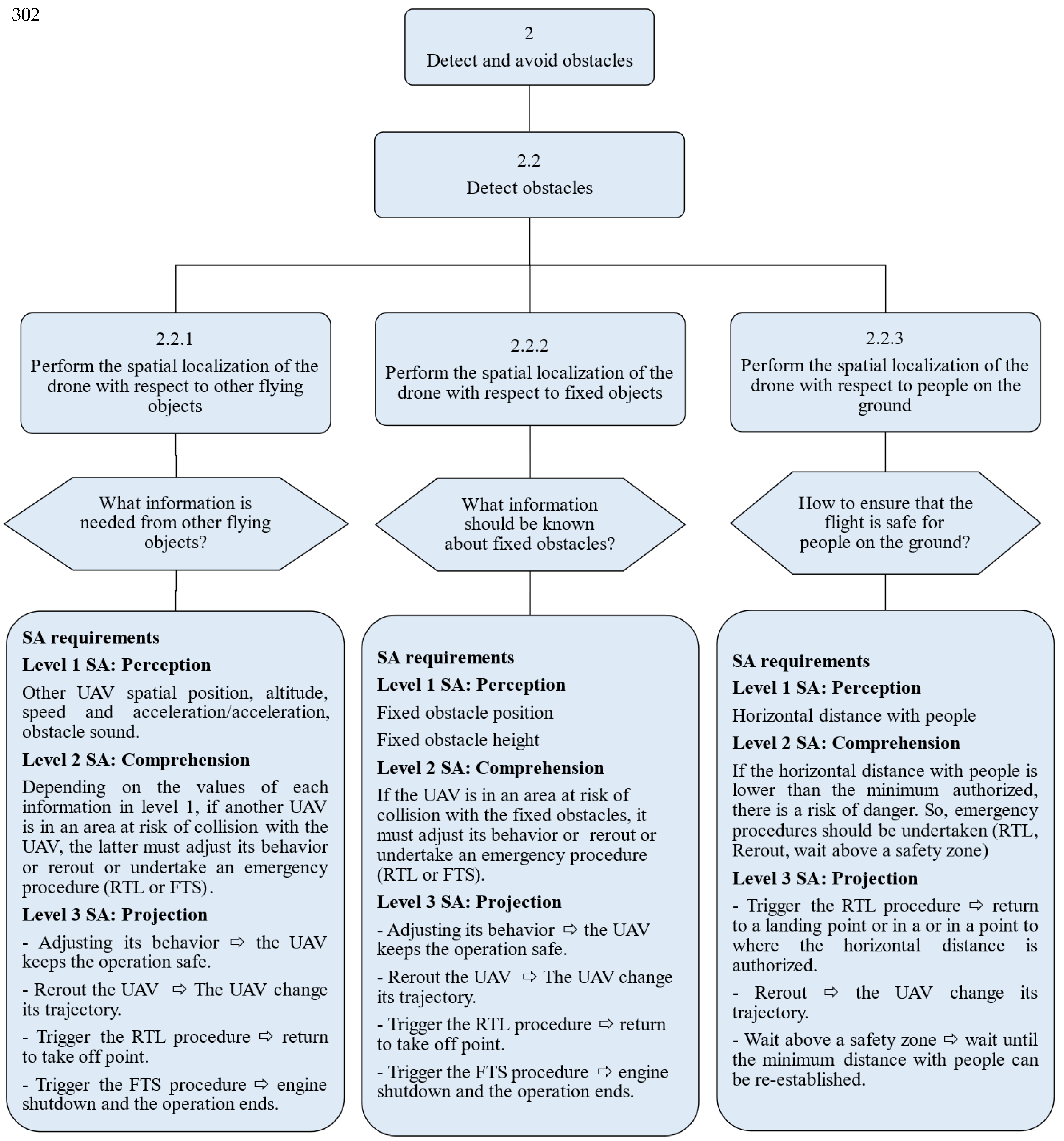

Figure 7 the UAV receives spatial information about obstacles. It then evaluates whether there is a risk of collision based on that information. If there is, it will execute a corrective action (adjusting its behavior, rerouting, or performing RTL or FTS procedures) in order to maintain the safety of the AAM.

In

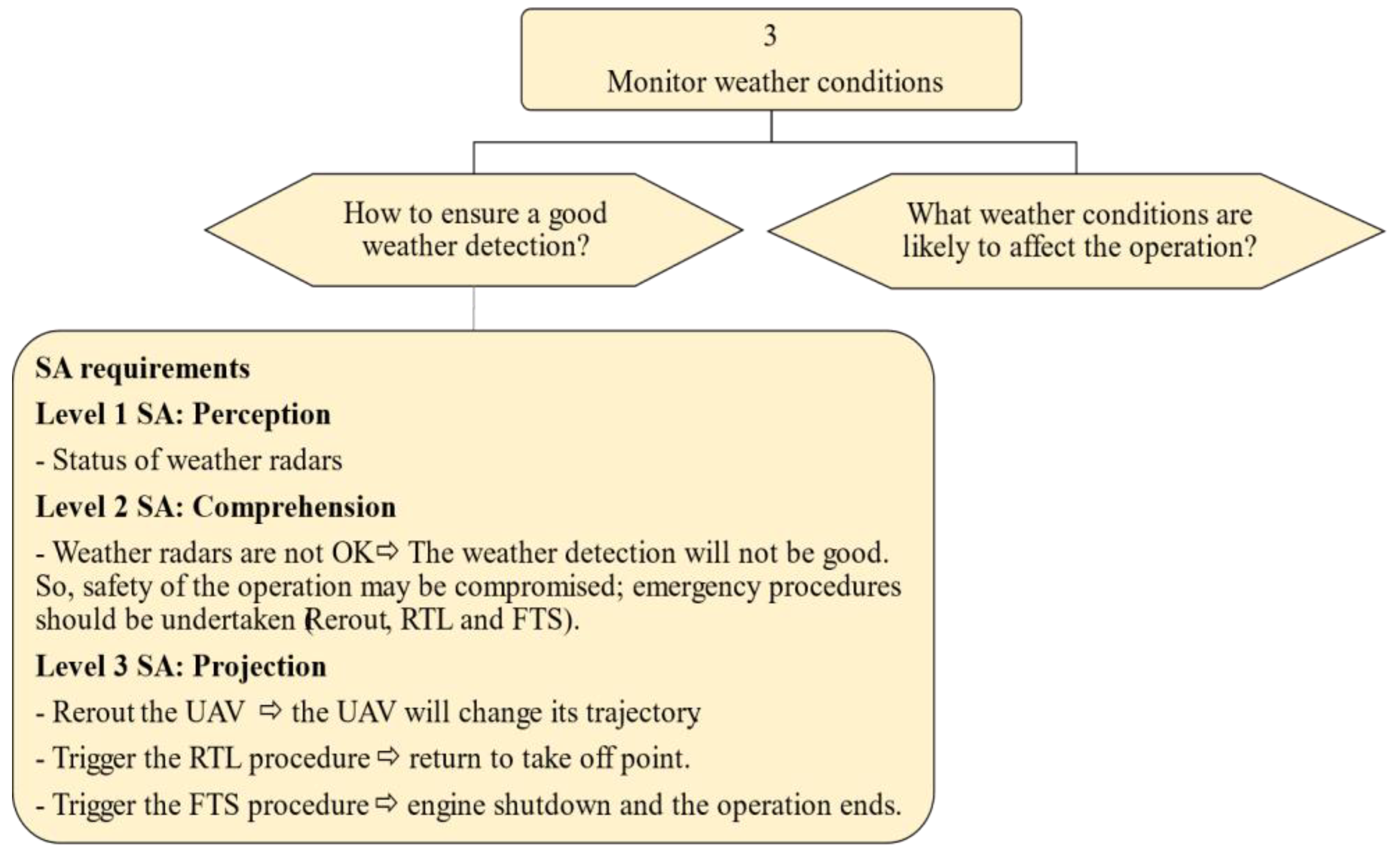

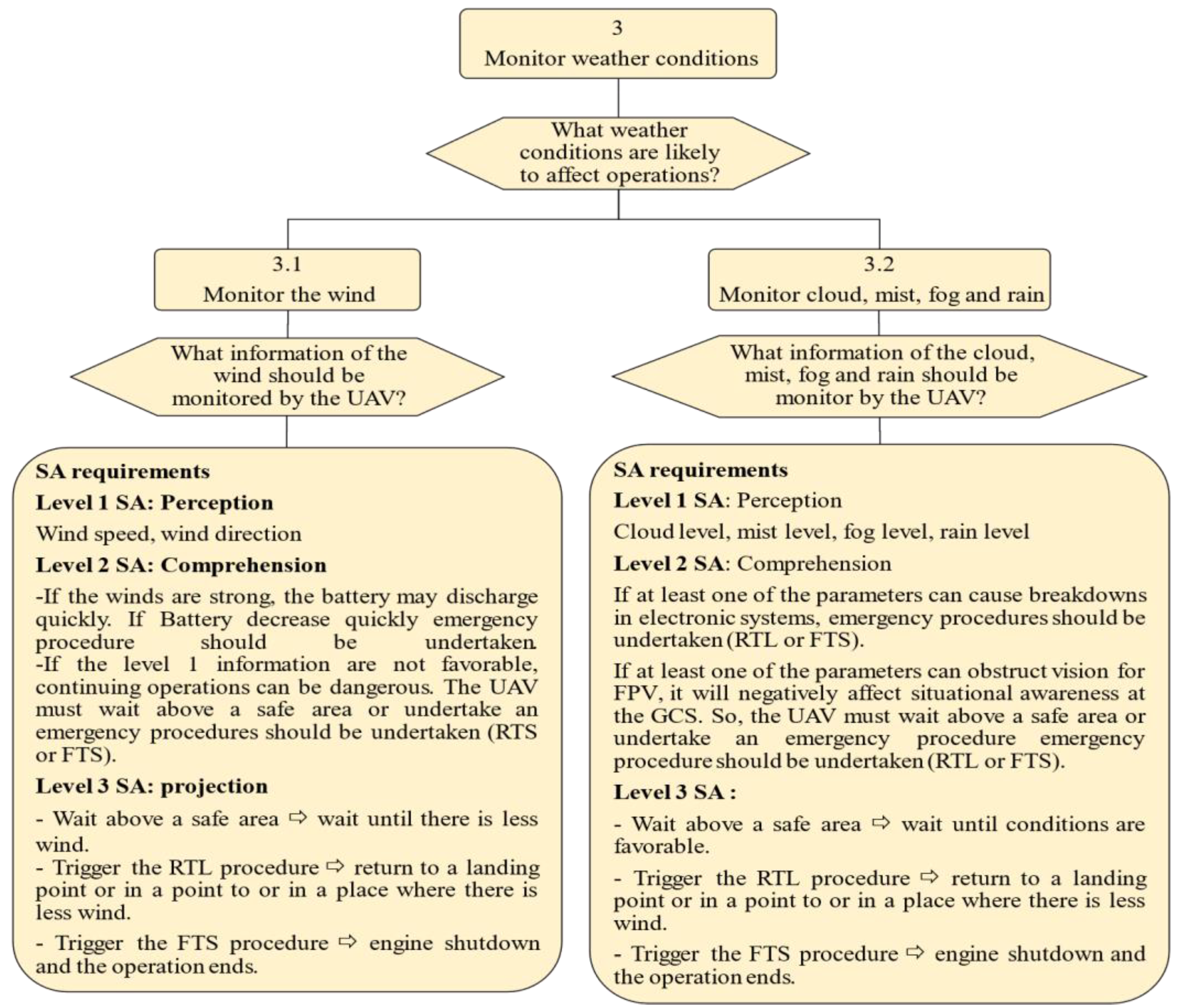

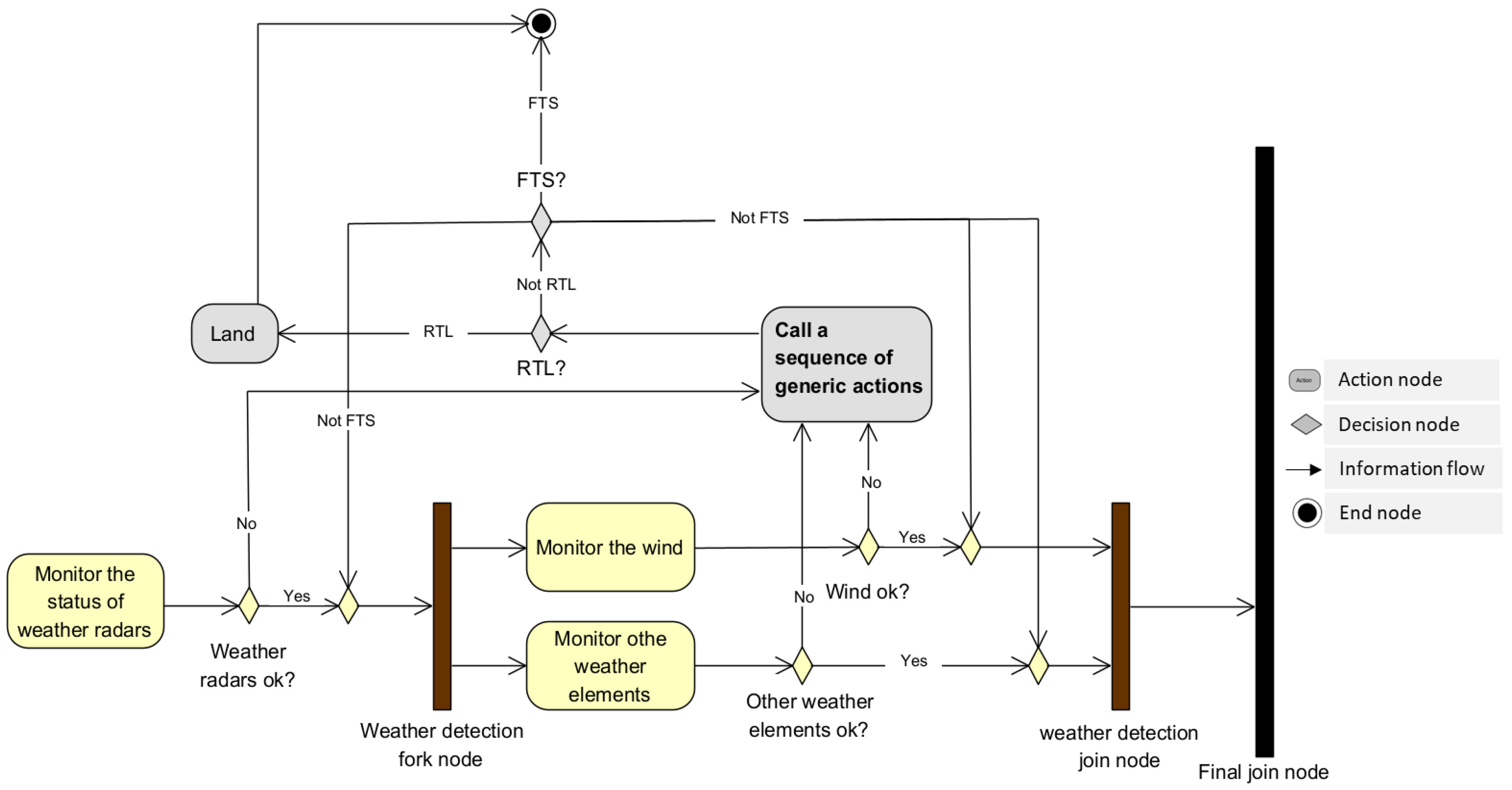

Figure 8 we decompose SA sub-goal (3): monitor weather conditions. To achieve this sub-goal, the UAV must check weather the weather radar is functioning correctly. If it is not the case, it must undertake a remediation action (rerouting or initiating an FTS or RTL procedure). In

Figure 9, it is assumed that the weather radar is functioning as expected. The UAV must then monitor the wind and other significant weather conditions such as cloud, mist, fog and rain. If at least one weather condition is likely to compromise the safety of the AAM, the UAV must execute a corrective action (waiting above a safe area or initiating an RTL or FTS procedure) to maintain the safety of the AAM. In

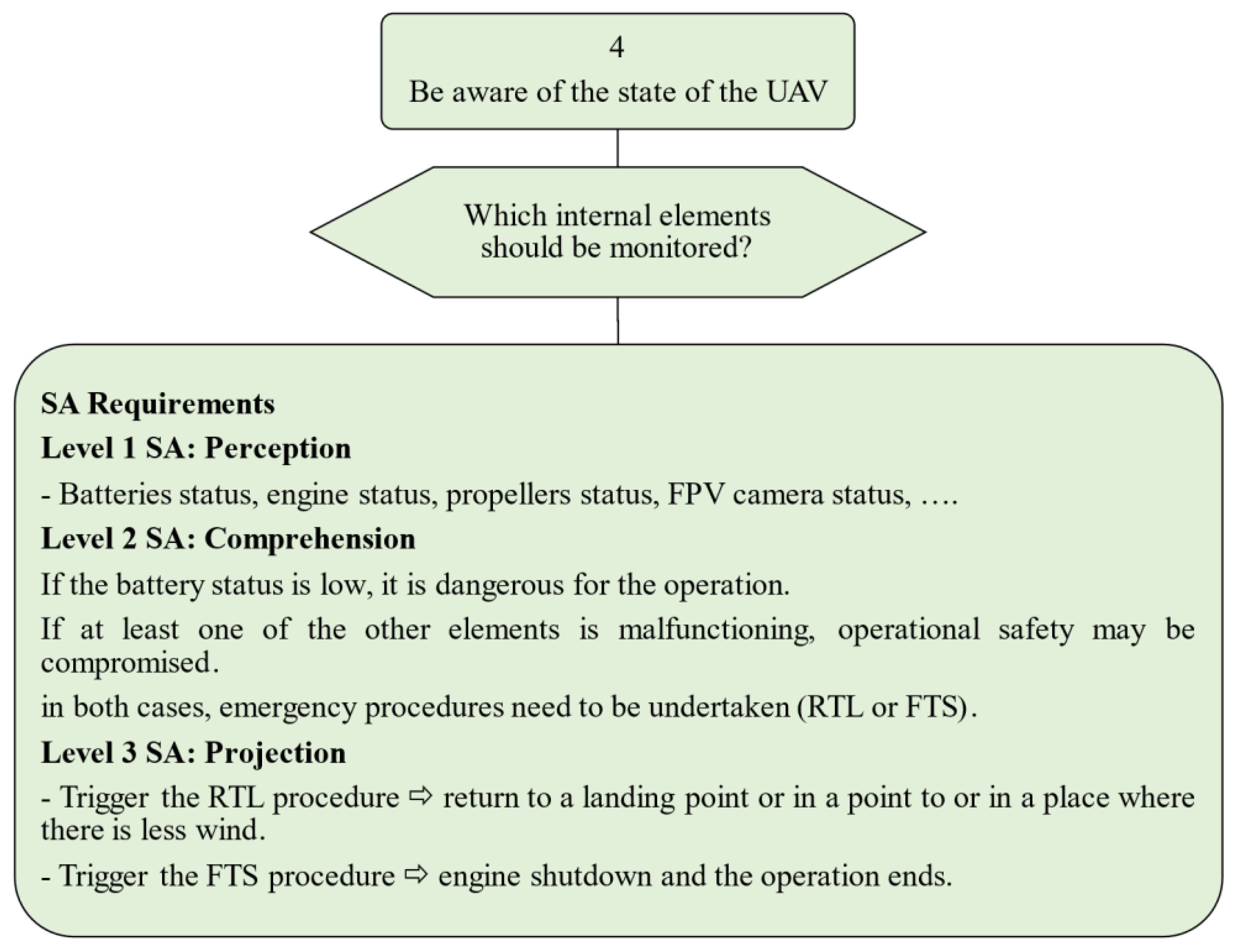

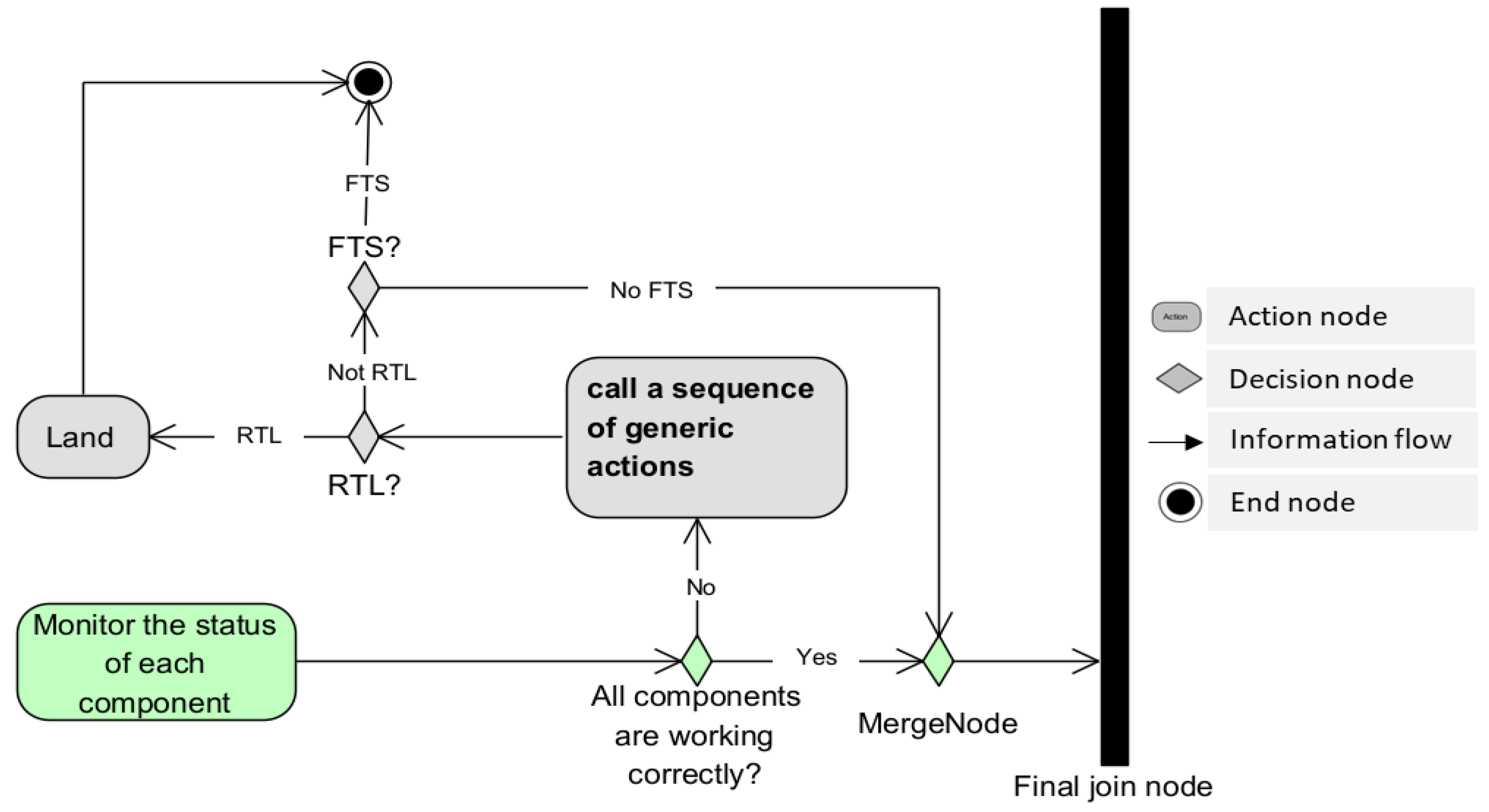

Figure 10, we decompose UAV SA sub-goal (4): to be aware of its state. To achieve this sub-goal, the UAV must monitor the status of its components (e.g., its batteries, motor, propellers, or FPV camera). If one or more components are malfunctioning, it must perform a corrective action to maintain the safety of the AAM (by initiating an RTL or FTS procedure).

Following this decomposition of the UAV’s SA into a goal and several sub-goals, we next propose an abstract model of the structure and behavior of the AAM system which places the UAV at its center.

5. High-level SysML modeling of the advanced air mobility

In this section we present an abstract representation of the AAM system from an SA perspective by performing high-level and simplified modeling of the operating context, in which the UAV is considered to be at the center of the system. We used the Systems Modeling Language (SysML), which supports the specification, analysis, design and verification of complex systems at both the hardware and software levels [37,38].

SysML consists of different diagrams for modeling system requirements, structures and behaviors [37]. The structure of the AAM system is represented through a block definition diagram and the behavior of the system through an activity diagram, which illustrates the activities that the UAV should perform in order to achieve its SA goals.

5.1. Block definition diagram for the advanced air mobility

Block definition diagrams (BDDs) are used to represent the structural organization of a system [37]. In a BDD, relationships between entities are represented by arrows. Arrows with a filled diamond at one end represent composition relationships (i.e., the block at the filled diamond end of the arrow is composed of the object at the other end). Arrows with an empty diamond at one end indicate aggregation relationships (i.e., the block at the empty diamond end of the arrow may contain the object at the other end). Simple arrows with a triangle at one end represent specialization relationships (i.e., the block at the triangle end is a generalization of the object at the other end). Simple arrows represent independent associations between two blocks.

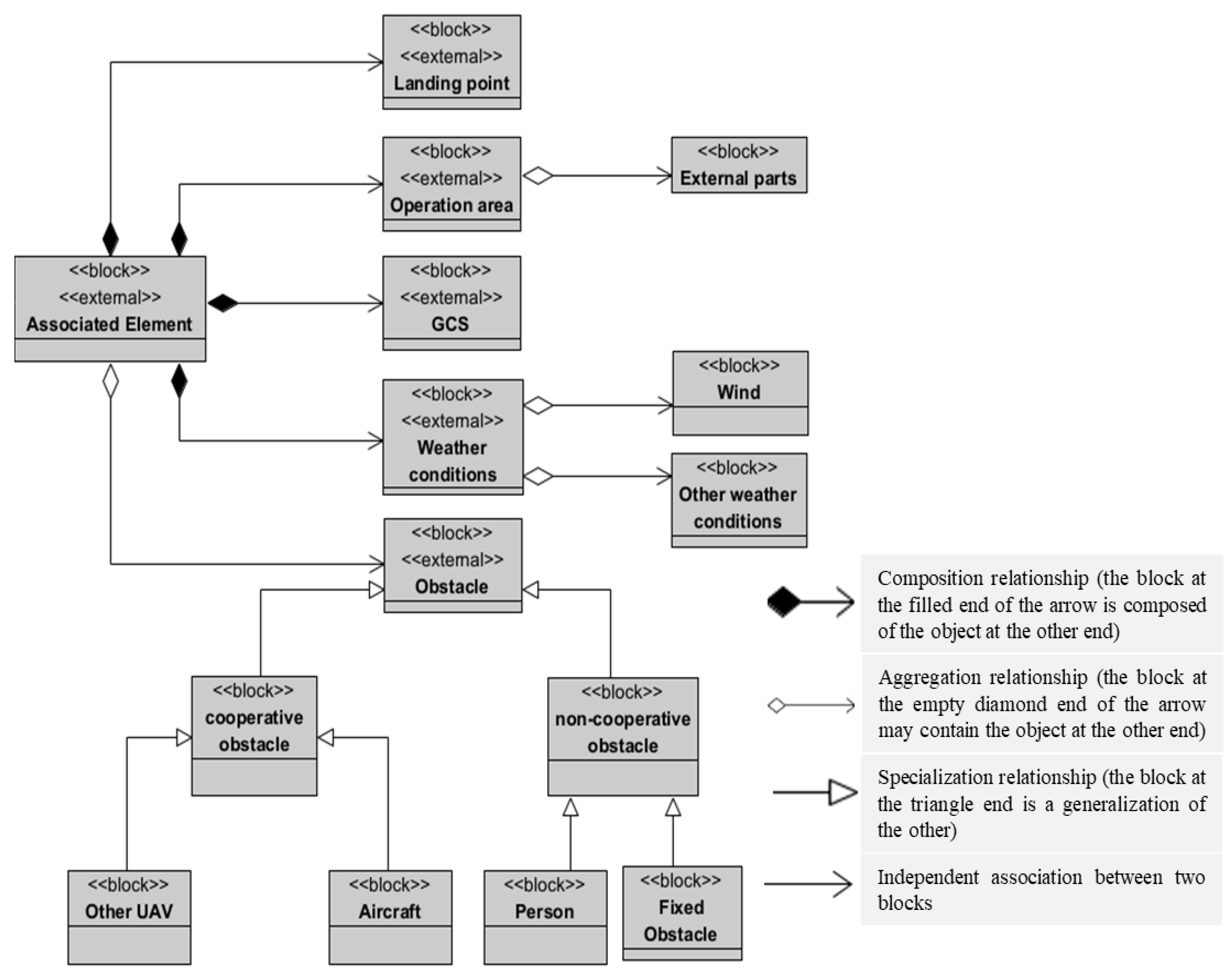

Figure 11 is a BDD of the AAM system. Since the UAV is the main entity in the AAM system, our modeling of the structure of the system consists in representing its main physical components and the subsystems which enable it to achieve SA goals (the DAA management system, weather detection system, autopilot, and the C2 links management system). The UAV’s SA depends on the reciprocity of the relationship between the UAV and the other components of the system. These other components are described in Figure 12 and include obstacles, weather, GCS, area of operation and landing points.

5.2. Activity diagram of the UAV in the advanced air mobility

Activity diagrams are used to describe the behavior of a system in terms of control logic and actions [37]. An activity diagram consists of an initial node (represented by a solid circle), actions (represented by rectangles with rounded corners), control flows between actions (represented by arrows), decisions/merges (represented by diamonds), and one or more final nodes (represented by an empty circle containing a solid one). An activity diagram can also contain fork nodes which allow an action to be duplicated into a set of parallel sub-actions, each of which produces a token at the end of its execution. For the main action of the fork node to be considered complete, all the tokens produced by the parallel actions must converge at a join node, which produces the result of the action that entered the associated fork node. Both fork and join nodes are represented by a thick line.

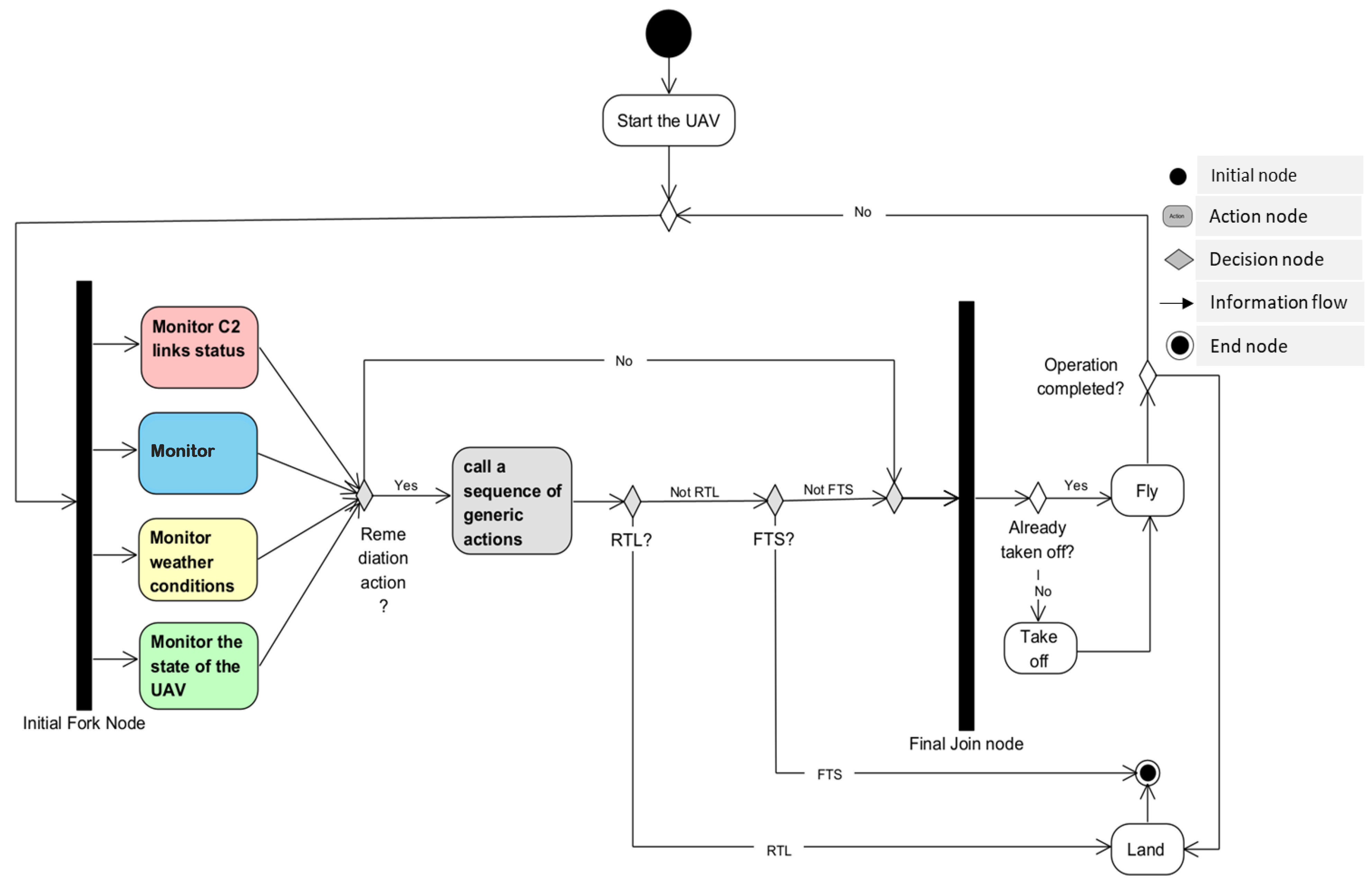

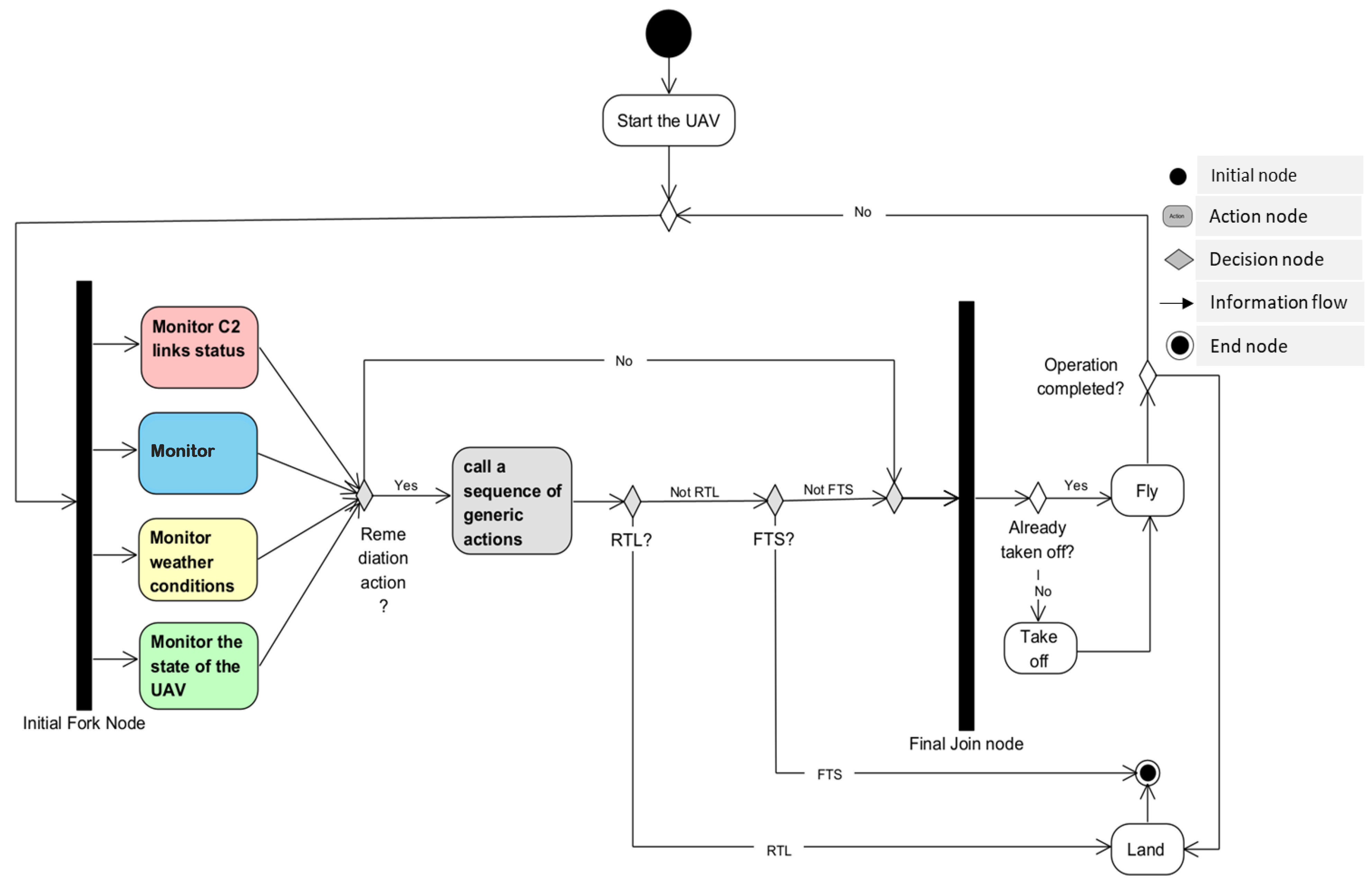

Figure 13 represents, at a high level, the SA activities that the UAV must perform to ensure the safety of the AAM. The decisions which lead to these actions are based on the information shared between the blocks of the UAV. The actions are performed repeatedly and in real time until the operation is completed.

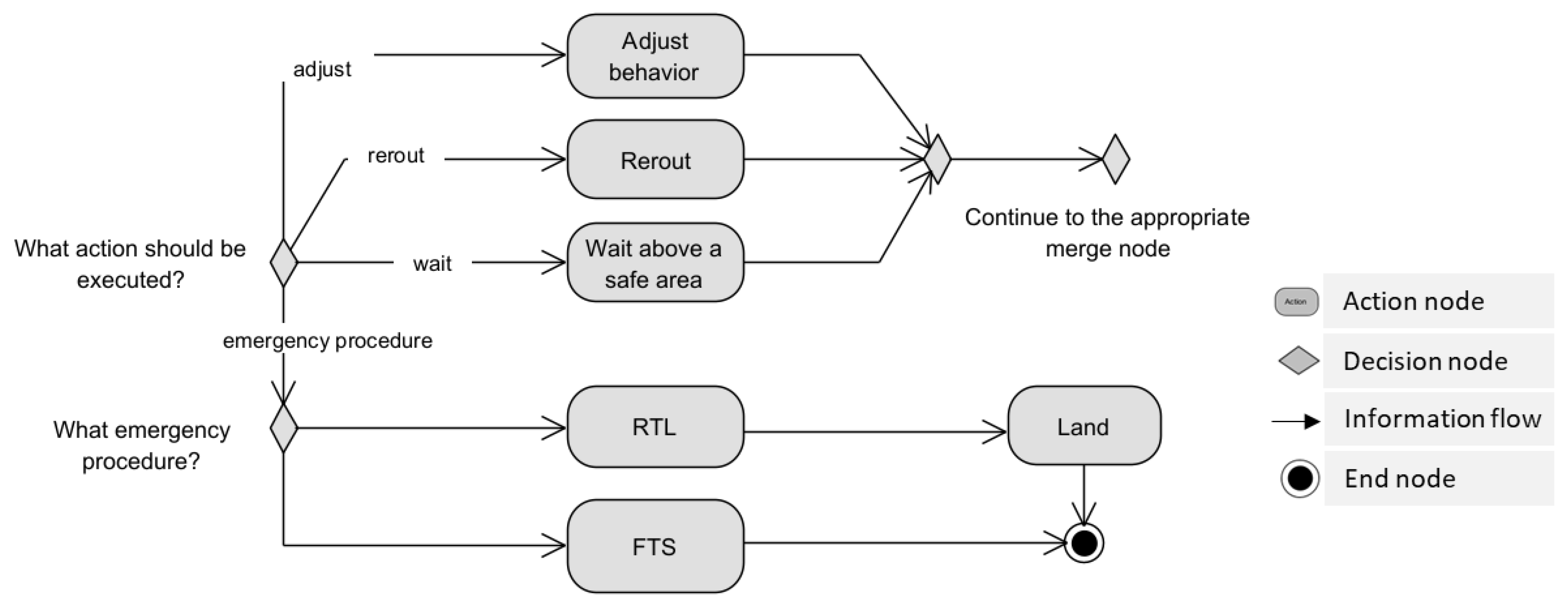

5.2.1. The sub-activity Call a sequence of generic actions

In Figure 14 we describe the generic actions outlined in the sub-activity Call a sequence of generic actions. These consist of generic resolution actions that can be taken to resolve detected problems. We have represented the sub-activity in this way because, according to the decomposition of SA performed in Section 4, the problems that may arise during an operation have the same set of possible solutions. These solutions are divided into two main categories: solutions that could lead to the interruption of the operation, and solutions which lead to the continuation of the operation. The actions included in the second category consist, depending on the situation, of adjusting the behavior of the UAV, rerouting the UAV, or the UAV waiting above a safe area. The actions in the first category are the RTL and FTS emergency procedures.

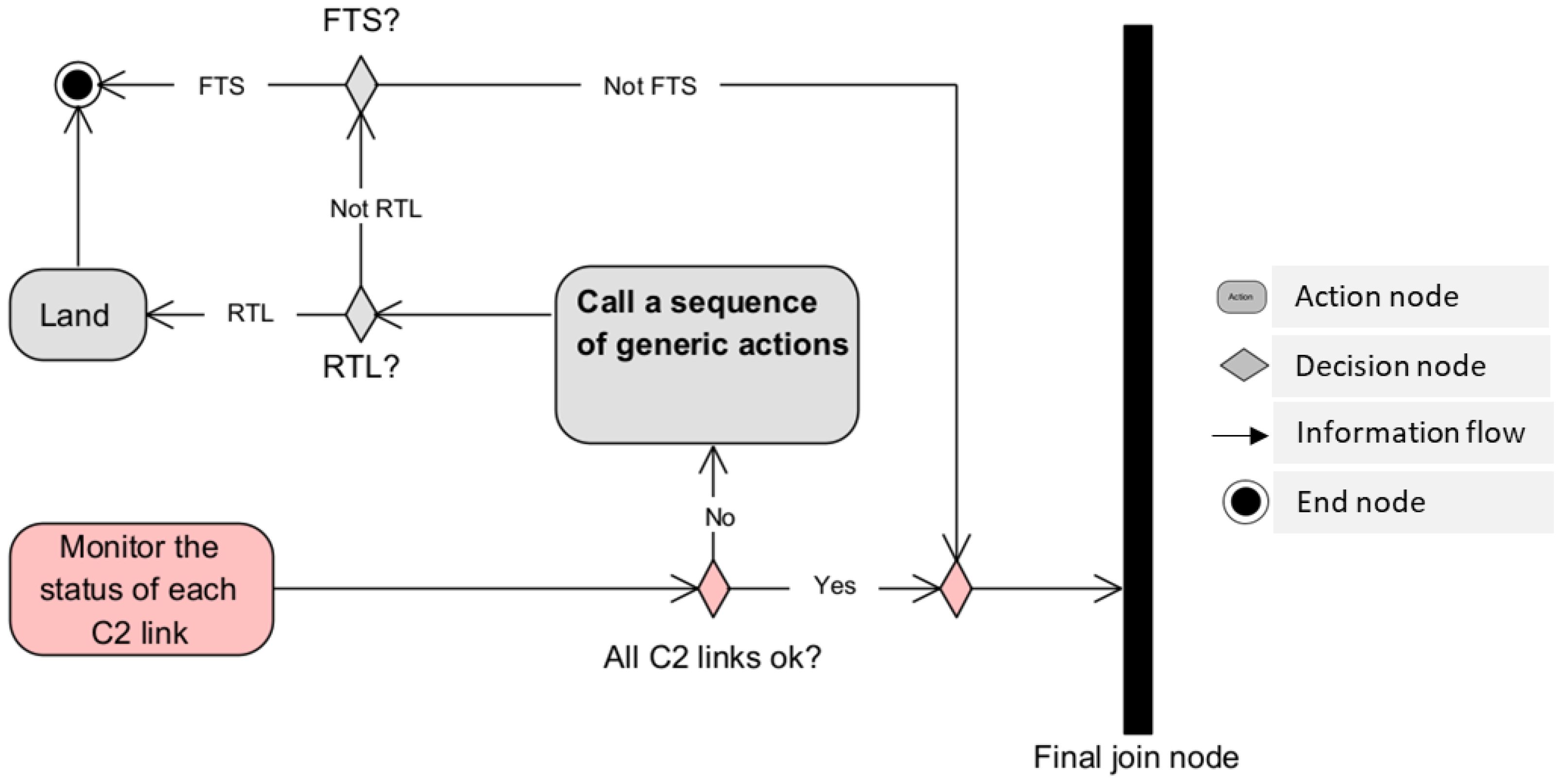

5.2.2. The sub-activity Monitor C2 links status

Figure 15 is a representation of SA actions and decisions relating to the UAV’s C2 links management subsystem. The main test to guide decision-making concerns the status of each of the three links supported by this subsystem (i.e., RC-GCS, autopilot-GCS and FPV). If one or more of these links is lost or is not functioning correctly, an appropriate sequence of generic actions must be performed to resolve the issue in order to maintain the safety of the AAM. If all the links are working correctly, the activity continues to the Final join node to wait for synchronization with the parallel sub-activities.

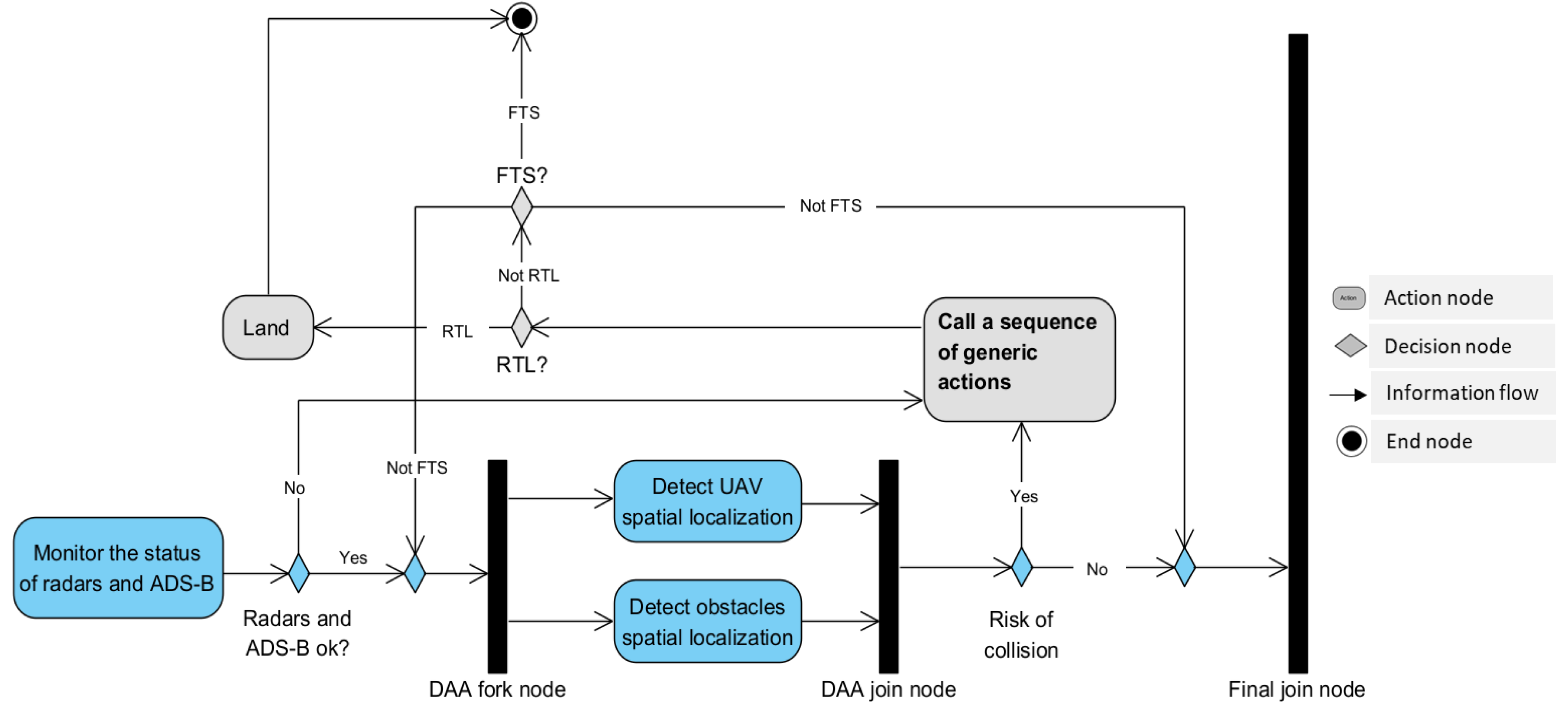

5.2.3. The sub-activity Monitor threats

Figure 16 presents SA actions and decisions relating to the DAA system. The first action of this sub-activity consists of evaluating the status of the radar and ADS-B systems. A decision is then made according to the status of these systems. If one or more of them is malfunctioning, an appropriate sequence of generic actions must be performed to resolve the issue in order to maintain the safety of the AAM. However, if everything works correctly, the token produced by the DAA fork node splits into two parallel actions consisting of the self-detection of the UAV's spatial information and the detection of the spatial location of obstacles. Once this is done, the resulting tokens synchronize at the DAA join node, and the joined token is used to decide whether there is a risk of collision. If there is a risk of collision, an appropriate sequence of generic actions must be performed to resolve the issue in order to maintain the safety of the AAM. If there is no risk of collision, the activity continues to the Final join node to wait for synchronization with the parallel sub-activities.

5.2.4. The sub-activity Monitor weather conditions

Figure 17 represents the actions and SA decisions related to the weather management system. The principle is the same as in the previous sub-activity. The first action consists of evaluating the status of the weather radar. A decision is then made according to the status of the weather radar. If the radar is malfunctioning, an appropriate sequence of generic actions must be performed to resolve the problem. However, if everything works correctly, the token produced by the Weather detection fork node splits in parallel to check parameters relating to the wind and to other weather conditions. If at least one of the weather conditions detected is unfavorable for the operation, an appropriate sequence of generic actions must be performed to resolve the issue. On the other hand, if weather conditions are generally favorable, the activity continues at the Weather detection join node and the token is sent to the Final join node to wait for synchronization with the parallel sub-activities.

5.2.5. The sub-activity Monitor the state of the UAV

Figure 18 represents the SA actions and decisions related to the state of the UAV. Here, the decision concerns the operation of one or more components of the UAV. If one or more components are at risk of compromising the safety of the operation, an appropriate sequence of generic actions must be performed to resolve the problem. If all the components work correctly, the activity continues to the Final join node to wait for synchronization with the parallel sub-activities.

When the Final join node receives all the tokens from the four parallel sub-activities, it performs the synchronization and passes the resulting token to the next step. This transmission means that all necessary checks and adjustments have been made and the operation can continue. The next action is either the Fly action if the UAV has already taken off, or the Take off action if not. At any time, the UAV checks whether the operation has been completed and lands if it has; if it has not, the UAV continuously verifies that the safety of the AAM is not being compromised by checking that it has achieved the four main SA goals. The loop continues in this way until the operation is completed.
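The overall loop can be sketched as follows. This is a hedged illustration rather than the authors' implementation: `run_operation`, its parameters, and the `max_cycles` safeguard are hypothetical, and re-running all four checks after a remediation is an assumption of this sketch.

```python
from concurrent.futures import ThreadPoolExecutor

def run_operation(checks, remediate, operation_complete, max_cycles=1000):
    """Repeat the parallel SA checks until the operation is complete.

    checks: dict mapping a sub-activity name to a callable that returns
        True when the corresponding SA goal is achieved.
    remediate: callable invoked with the name of each failed sub-activity.
    operation_complete: callable returning True once the UAV should land.
    Returns the list of actions taken, in order.
    """
    actions = []
    airborne = False
    for _ in range(max_cycles):
        # Initial fork: launch the sub-activities in parallel.
        with ThreadPoolExecutor(max_workers=len(checks)) as pool:
            futures = {name: pool.submit(check) for name, check in checks.items()}
            # Final join: wait for one token per sub-activity.
            results = {name: future.result() for name, future in futures.items()}
        failed = [name for name, ok in results.items() if not ok]
        if failed:
            for name in failed:
                remediate(name)  # stands in for the generic remediation actions
            continue  # assumption: verify all goals again before proceeding
        actions.append("fly" if airborne else "take_off")
        airborne = True
        if operation_complete():
            actions.append("land")
            break
    return actions
```

The function takes the checks as parameters so that each SA goal can be backed by whatever technology a given UAV provides, or by a manual input from the PIC where no technology is available.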

This activity diagram presents, at a high level, the internal process that the UAV must perform to consistently achieve the SA goals necessary for the safety of an operation. In the next section we present a case study in which we analyze a specific UAV to verify whether the technologies it uses allow it to achieve the four SA goals described throughout this paper.

6. Case study

During this research project we worked with an industrial partner which uses several different types of UAVs, including the DJI Matrice 300 RTK released in May 2020. In this section, we analyze the DJI Matrice 300 RTK to determine whether the technologies it uses are sufficient to achieve the SA goals necessary for UAV operation in the AAM.

6.1. Analysis of the DJI Matrice 300 RTK

The dimensions of the DJI Matrice 300 RTK, when unfolded and excluding propellers, are 810×670×430 mm (L×W×H). It has a maximum takeoff weight of 9 kg and can operate for a maximum flight time of 55 minutes at a maximum speed of 23 m/s in manual mode and 17 m/s in automatic mode. It can operate at temperatures between -20 °C and 50 °C (-4 °F to 122 °F) and has a maximum wind resistance of 15 m/s (12 m/s when taking off or landing).
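As a small worked example, the wind and temperature limits quoted above can be encoded as a pre-flight envelope check. The function name and the choice to treat the limits as inclusive are assumptions of this sketch; the wind and temperature values must be supplied by the caller.

```python
# Limits quoted above for the DJI Matrice 300 RTK.
MAX_WIND_MS = 15.0                  # maximum wind resistance in flight
MAX_WIND_TAKEOFF_LANDING_MS = 12.0  # maximum wind when taking off or landing
MIN_TEMP_C, MAX_TEMP_C = -20.0, 50.0

def within_envelope(wind_ms, temp_c, taking_off_or_landing=False):
    """Return True if wind and temperature are inside the quoted limits."""
    if taking_off_or_landing:
        wind_limit = MAX_WIND_TAKEOFF_LANDING_MS
    else:
        wind_limit = MAX_WIND_MS
    return wind_ms <= wind_limit and MIN_TEMP_C <= temp_c <= MAX_TEMP_C
```

For instance, a 13 m/s wind at 20 °C is acceptable in flight but not for takeoff or landing.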

Table 1 shows the technologies used by this UAV to acquire and maintain SA according to the four main SA goals described above.

Table 1.

Analysis of the DJI Matrice 300 RTK according to the four main SA goals described above. The SA goals are identified in the left column. In the right column, we have analyzed the technologies present in the specifications and have associated them with each of these goals.

| UAV | Matrice 300 RTK |
|---|---|
| C2 links management system (autopilot, manual radio control, FPV) | − Operating frequency: 2.4000–2.4835 GHz (commonly used for the RC-GCS link [17]) and 5.725–5.850 GHz (commonly used for the FPV [17]) − Max transmitting distance (unobstructed, free of interference) and associated EIRP: 2.4000–2.4835 GHz: 29.5 dBm (FCC); 5.725–5.850 GHz: 28.5 dBm (FCC) |
| DAA management system | − Vision system with an obstacle sensing range of 0.7–40 m (forward/backward/left/right) and 0.6–30 m (upward/downward), and fields of view (FOVs) of 65° (H), 50° (V) (forward/backward/downward) and 75° (H), 60° (V) (left/right/upward) − Infrared ToF sensing system (footnote 3) with an obstacle sensing range of 0.1–8 m and an FOV of 30° (±15°) − Top and bottom auxiliary lights with an effective lighting distance of 5 m |
| Weather management system | Not described. |
| Underlying physical parts | − An external LiPo battery, the WB37 Intelligent Battery, with a charging time (using the BS60 Intelligent Battery Station) of 70 minutes at 15 °C to 45 °C or 130 minutes at 0 °C to 15 °C and an operating temperature of -4 °F to 104 °F (-20 °C to 40 °C) − An 18650 Li-ion built-in battery (5000 mAh @ 7.2 V) with a charging time of 135 minutes (using a USB charger with a specification of 12 V / 2 A) and an operating temperature of -4 °F to 122 °F (-20 °C to 50 °C) − Propellers − Diagonal wheelbase of 895 mm |

6.2. Discussion

The specifications of the DJI Matrice 300 RTK indicate that it has the technologies necessary to autonomously achieve SA goals related to the command and control of the UAV and the DAA. The specifications for the remote controller describe the radio frequencies used and other associated characteristics such as the maximum transmitting distance and the maximum effective isotropic radiated power. The 2.4000–2.4835 GHz radio frequency is commonly used for manual radio control from GCS [17], and the 5.725–5.850 GHz radio frequency is used for the real-time airborne video transmission associated with FPV [17]. The DAA specifications described relate to the vision system and the sensors available to scan objects around the UAV in order to gain their spatial information [39]. Some physical parts are also described in the specifications list, including the batteries, propellers, and wheelbase.
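The dBm figures listed in Table 1 are power levels on a logarithmic scale. As a short worked example, they can be converted to milliwatts with the standard relation P(mW) = 10^(P(dBm)/10); the helper name `dbm_to_mw` is introduced here for illustration only.

```python
def dbm_to_mw(power_dbm):
    """Convert a power level in dBm to milliwatts: P_mW = 10 ** (P_dBm / 10)."""
    return 10 ** (power_dbm / 10)

# FCC values from Table 1 for the two operating bands.
eirp_dbm_by_band = {
    "2.4000-2.4835 GHz": 29.5,
    "5.725-5.850 GHz": 28.5,
}

for band, eirp_dbm in eirp_dbm_by_band.items():
    print(f"{band}: {eirp_dbm} dBm is about {dbm_to_mw(eirp_dbm):.0f} mW")
```

The two values correspond to roughly 891 mW and 708 mW, respectively, so the 1 dB difference between the bands amounts to about a 20% reduction in radiated power.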

However, we found some technological shortcomings. Firstly, the autopilot-GCS C2 link is not explicitly described. Secondly, the available specifications do not mention any technology for the detection of weather conditions. We note for example that there is a specification describing maximum wind resistance of the UAV, but there is no description of technologies for monitoring the characteristics of the wind. Thirdly, although the specifications detail some of the physical parts of the UAV, no description is provided of the procedures employed by the UAV to self-check the status of these physical parts (e.g., procedures to check battery level).

We have devised some hypotheses that may explain each of these shortcomings. With regard to the lack of a description of the GCS-autopilot link, we assume that, since this is a UAV suitable for BVLOS operations, it must have an autopilot and that the autopilot’s link with the GCS can be implicitly attested to by the statuses of the other C2 links (RC-GCS and FPV). Nevertheless, the authors of [17] specify that a 900 MHz C2 link is generally used for the GCS-autopilot link. The lack of a description of the procedures for the evaluation of the state of physical components could be because they are integrated, and this information will thus be provided immediately to the GCS during the operation (footnote 4). Finally, the absence of weather detection technologies may be due to the current unavailability of technologies such as miniaturized weather radar systems which fit the size, weight, and power requirements of small UAVs [18]. A pilot using this UAV must therefore monitor and assess the characteristics of wind, cloud, mist, fog and rain themselves. This could be done through the FPV or by analysing other SA elements (for example, UAV instability or rapid battery drain could be caused by high winds or low temperatures).

We believe that the decomposition of SA performed in this paper advances research in the UAV field. We also consider that this study can be used by UAV designers to identify the SA safety requirements that a UAV must cover in order to include appropriate technologies to satisfy these requirements. In addition, we propose that a section in the general specifications of UAVs should be clearly focused on the description of SA specifications. Such a section should individually identify each SA goal and clearly specify the technologies which the UAV uses (or does not use) to achieve it. We also recommend that PICs assess the SA goals achieved by each UAV before beginning an operation. This will allow them to pay particular attention to the goals which are not achieved during the operation. Such an assessment will also involve taking into consideration the air risk classes that will be involved in the operation in order to measure the associated risk ratio [40]. Finally, we believe that this work may add value to the information required to develop regulations for BVLOS operations, which are currently only authorized on a case-by-case basis.
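One possible machine-readable form of such an SA specification section is sketched below. The `SAGoalSpec` structure and the `uncovered_goals` helper are hypothetical, and the entries for the Matrice 300 RTK are a condensed reading of Table 1 rather than an official specification.

```python
from dataclasses import dataclass, field

@dataclass
class SAGoalSpec:
    goal: str
    technologies: list = field(default_factory=list)

    @property
    def covered(self):
        """A goal counts as covered if at least one technology is listed."""
        return bool(self.technologies)

def uncovered_goals(specs):
    """Return the SA goals that the PIC must achieve themselves."""
    return [spec.goal for spec in specs if not spec.covered]

# Condensed from Table 1 (illustrative, not an official specification).
matrice_300_rtk = [
    SAGoalSpec("C2 links", ["2.4 GHz link", "5.8 GHz link"]),
    SAGoalSpec("DAA", ["vision system", "infrared ToF sensing system"]),
    SAGoalSpec("Weather", []),
    SAGoalSpec("State of the UAV", ["batteries", "propellers"]),
]

print(uncovered_goals(matrice_300_rtk))
```

Listing each goal explicitly, even when no technology is available, makes gaps visible to the PIC before the operation begins.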

7. Conclusion

As the AAM vision is developed, the future of aerial transport promises to be dense, complex, and highly dynamic. As a result, operations could quickly prove to be dangerous for UAVs, other occupants of the airspace, people participating in the operation, civilians, and material goods on the ground. Major safety issues must be foregrounded and fully considered. During operations, the task of acquiring and maintaining SA must be carried out both by the PIC at the GCS and by the UAV itself as an autonomous entity in the AAM system. This requires the use of technologies and procedures to allow the UAV to self-locate in the airspace, communicate with the GCS and other airspace occupants, and autonomously make decisions and take actions to effectively manage the operation. We have analyzed these technologies and procedures in this article and presented, in a GDTA, a decomposition of the SA of a UAV in the AAM. We then carried out SysML modeling to represent, at a high level, the structure and behavior of the AAM system while considering the UAV as the central entity of the AAM system. We have also presented a case study in which we analyzed the DJI Matrice 300 RTK, one of the flagship UAVs of our industrial partner. This analysis had two main objectives: to demonstrate an example of the exploitation of this work, and to determine whether the analyzed UAV uses technologies which allow it to acquire and maintain good SA during operations. The analysis highlighted that the UAV does not use any technology to achieve the SA goal related to weather conditions. Thus, our conclusion is that the DJI Matrice 300 RTK cannot achieve all SA goals necessary to ensure the safety of the AAM. When performing an operation with this UAV, the PIC must therefore achieve the uncovered SA goals themselves. Finally, this article focused on the decomposition of the SA of a UAV as the basic element of the AAM system. The next step will be to implement the SA module to be included in the UAV’s embedded system. Future research should focus on analyzing distributed SA, in which UAVs work together towards a common goal as a swarm. In addition, for the evaluation of SA in an operational context, it would be interesting to propose an approach for generating various scenarios simulating situations that may be encountered when undertaking an operation using a single UAV or a swarm of UAVs.

Author Contributions

Under the supervision of G. Nicolescu and M. Ben Attia, S.A. Kamkuimo, R. Zrelli and H.A. Misson conducted the study. S.A. Kamkuimo was in charge of conceptualization, methodology and writing the original drafts. Review and editing were carried out by F. Magalhaes and S.A. Kamkuimo.

Funding Statement: This research was supported by Mitacs (grant No. MITACS IT12973).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Transport Canada, Transport Canada's Drone Strategy to 2025, ed. T. Canada. 2021.

2. Puliti, S.; Ørka, H.O.; Gobakken, T.; Næsset, E. Inventory of Small Forest Areas Using an Unmanned Aerial System. Remote. Sens. 2015, 7, 9632–9654.

3. Sweeney, N. Civilian Drone Use in Canada. 2017 [cited 25/04/2023]; Available from: https://lop.parl.ca/sites/PublicWebsite/default/en_CA/ResearchPublications/201723E?

4. Government of Canada, Canadian Aviation Regulations (SOR/96-433), ed. D.o. Justice. 2022.

5. Transport Canada, Aeronautical Information Manual, ed. T. Canada. 2021.

6. Drury, J.L., L. Riek, and N. Rackliffe. A decomposition of UAV-related situation awareness. in Proceedings of the 1st ACM SIGCHI/SIGART conference on Human-robot interaction. 2006.

7. Davies, L.; Bolam, R.C.; Vagapov, Y.; Anuchin, A. Review of Unmanned Aircraft System Technologies to Enable Beyond Visual Line of Sight (BVLOS) Operations. 2018; 6.

8. Bloise, N.; Primatesta, S.; Antonini, R.; Fici, G.P.; Gaspardone, M.; Guglieri, G.; Rizzo, A. A Survey of Unmanned Aircraft System Technologies to enable Safe Operations in Urban Areas. 2019.

9. Politi, E., et al. A Survey of UAS Technologies to Enable Beyond Visual Line Of Sight (BVLOS) Operations. in VEHITS. 2021.

10. National Academies of Sciences, Engineering and Medicine, Advancing aerial mobility: A national blueprint. 2020: National Academies Press.

11. Prevot, T.; Rios, J.; Kopardekar, P.; Iii, J.E.R.; Johnson, M.; Jung, J. UAS Traffic Management (UTM) Concept of Operations to Safely Enable Low Altitude Flight Operations. 2016.

12. Blake, T., What is Unmanned Aircraft Systems Traffic Management. 2021, NASA.

13. Endsley, M.R., B. Bolté, and D.G. Jones, Designing for situation awareness: An approach to user-centered design. 2003: CRC press.

14. Vankipuram, M.; Kahol, K.; Cohen, T.; Patel, V.L. Toward automated workflow analysis and visualization in clinical environments. J. Biomed. Informatics 2011, 44, 432–440.

15. Endsley, M.R. Toward a Theory of Situation Awareness in Dynamic Systems. Hum. Factors: J. Hum. Factors Ergon. Soc. 1995, 37, 32–64.

16. Jane, G.V. Human Performance and Situation Awareness Measures. 2019.

17. Fang, S.X.; O’young, S.; Rolland, L. Development of Small UAS Beyond-Visual-Line-of-Sight (BVLOS) Flight Operations: System Requirements and Procedures. Drones 2018, 2, 13.

18. Jacob, J. Real-time Weather Awareness for Enhanced Advanced Aerial Mobility Safety Assurance. in AGU Fall Meeting Abstracts. 2020.

19. Vasile, P.; Cioacă, C.; Luculescu, D.; Luchian, A.; Pop, S. Consideration about UAV command and control. Ground Control Station. J. Physics: Conf. Ser. 2019, 1297, 012007.

20. Unmanned Systems Technology (UST), UAV Autopilot Systems. 13-01-2022 [cited 23-05-2023]; Available from: https://www.unmannedsystemstechnology.com/expo/uav-autopilot-systems/.

21. Nickols, F. and Y.J. Lin, Creating Precision Robots: A Project-based Approach to the Study of Mechatronics and Robotics. 2018: Butterworth-Heinemann.

22. Stevenson, J.D.; O'Young, S.; Rolland, L. Assessment of alternative manual control methods for small unmanned aerial vehicles. J. Unmanned Veh. Syst. 2015, 3, 73–94.

23. Ro, K.; Oh, J.-S.; Dong, L. Lessons Learned: Application of Small UAV for Urban Highway Traffic Monitoring. In Proceedings of the 45th AIAA Aerospace Sciences Meeting and Exhibit, Reno, NV, USA, 8–11 January 2007.

24. Stansbury, R.; Wilson, T.; Tanis, W. A Technology Survey of Emergency Recovery and Flight Termination Systems for UAS. 2009.

25. Kim, D.-H.; Go, Y.-G.; Choi, S.-M. An Aerial Mixed-Reality Environment for First-Person-View Drone Flying. Appl. Sci. 2020, 10, 5436.

26. Smolyanskiy, N.; Gonzalez-Franco, M. Stereoscopic First Person View System for Drone Navigation. Front. Robot. AI 2017, 4.

27. Transport Canada, Flying your drone safely and legally. 2020.

28. Lyu, H. Detect and avoid system based on multi sensor fusion for UAV. in 2018 International Conference on Information and Communication Technology Convergence (ICTC). 2018. IEEE.

29. Minucci, F.; Vinogradov, E.; Pollin, S. Avoiding Collisions at Any (Low) Cost: ADS-B Like Position Broadcast for UAVs. IEEE Access 2020, 8, 121843–121857.

30. Shadab, N.; Xu, H. A systematic approach to mission and scenario planning for UAVs. 2016; 7.

31. Fasano, G., et al., Sense and avoid for unmanned aircraft systems. IEEE Aerospace and Electronic Systems Magazine, 2016. 31(11): p. 82-110.

32. Zimmerman, J., ADS-B 101: what it is and why you should care. Air Facts Journal, 2013.

33. Lim, C.; Li, B.; Ng, E.M.; Liu, X.; Low, K.H. Three-dimensional (3D) Dynamic Obstacle Perception in a Detect-and-Avoid Framework for Unmanned Aerial Vehicles. 2019.

34. Müller, T. Robust drone detection for day/night counter-UAV with static VIS and SWIR cameras. in Ground/Air Multisensor Interoperability, Integration, and Networking for Persistent ISR VIII. 2017. SPIE.

35. National Civil Aviation Agency - Brazil, Drones and Meteorology. 2020.

36. Dai, X.; Ke, C.; Quan, Q.; Cai, K.-Y. RFlySim: Automatic test platform for UAV autopilot systems with FPGA-based hardware-in-the-loop simulations. Aerosp. Sci. Technol. 2021, 114, 106727.

37. Hause, M. The SysML modelling language. in Fifteenth European Systems Engineering Conference. 2006.

38. Holt, J. and S. Perry, SysML for systems engineering. Vol. 7. 2008: IET.

39. Transport Canada, Regulations for Remotely Piloted Aircraft Systems (Civilian Drones). Vol. 2022. 2022.

40. Transport Canada, Advisory Circular (AC) No. 903-001. 2021 [cited 26-02-2023]; Available from: https://tc.canada.ca/en/aviation/reference-centre/advisory-circulars/advisory-circular-ac-no-903-001.

Figure 1.

Illustration of spatial limits of visual line-of-sight (VLOS), extended visual line-of-sight (EVLOS) and beyond visual line-of-sight (BVLOS). The maximum horizontal and vertical limits around the pilot are 500 m and 200 m respectively [5, 7]. EVLOS operations involve additional visual observers to cover more space [1, 5, 7]. If an operation is beyond the VLOS of every observer in EVLOS, it is referred to as a BVLOS operation [9, 10].

Figure 2.

A simplified representation of the SA model devised by Endsley et al. [13]. This model is an iterative process during which SA acquisition depends on the state of the environment [13]. Decision-making is based on SA and according to the goals of the entity concerned. Decisions can lead to actions that modify the state of the environment in the interest of achieving the intended goals.

Figure 3.

First level of the GDTA of the UAV in the AAM system. The main goal of the UAV is to ensure the safety of the AAM system. To achieve this goal, it should (1) ensure good communication with the ground control station, (2) detect and avoid obstacles, (3) monitor weather conditions, and (4) be aware of its own state.

Figure 4.

Decomposition of the first sub-goal of the UAV’s GDTA: (1) communicate with the GCS. The hexagonal shape represents the decision about how to ensure good communication with the GCS. The answer to this question leads to the description of the three SA levels associated with communication with the GCS.

Figure 5.

Decomposition of the second sub-goal of the UAV’s goal-directed task analysis: (2) detect and avoid obstacles. The hexagonal shape represents the decision about how to ensure that obstacles are detected. The answer to this question leads to the description of the three SA levels associated with obstacle detection technologies. The shapes denoted as 2.1 and 2.2 represent two other sub-goals which must be considered in order to detect obstacles.

Figure 6.

Decomposition of sub-goal 2.1 of the UAV’s goal-directed task analysis. The decomposition of this sub-goal allows the identification of SA requirements relating to the spatial information of the UAV which is necessary to ensure the safety of the AAM.

Figure 7.

Decomposition of sub-goal 2.2 of the UAV’s GDTA. The achievement of this sub-goal requires three other sub-goals to be achieved. The decomposition of sub-goal 2.2.1 allows spatial information relating to other flying obstacles to be identified, which is necessary to assess the risk of collision. The decomposition of sub-goal 2.2.2 allows identification of information relating to nearby fixed obstacles in order to assess the risk of collision with them. The decomposition of sub-goal 2.2.3 allows identification of the information necessary to assess the proximity between the UAV and people on the ground.

Figure 8.

Decomposition of the third sub-goal of the UAV’s GDTA: (3) monitor weather conditions. The hexagonal shape on the left is a decision which leads to the identification of SA requirements related to weather detection technologies. The hexagonal shape on the right is a decision which identifies SA requirements related to weather conditions likely to affect the operation.

Figure 9.

Decomposition of the decision about weather conditions to be monitored. These are wind, cloud, mist, fog and rain.

Figure 10.

Decomposition of the fourth sub-goal of the UAV’s goal-directed task analysis: (4) be aware of its own state. This sub-goal allows the identification of the main internal elements of the UAV that should be monitored during the operation.

Figure 11.

A high-level block definition diagram representing the structure of the advanced air mobility with the unmanned aerial vehicle as the central entity.

Figure 12.

Description of the “Associated Element” block included in the block definition diagram of the advanced air mobility.

Figure 13.

High-level modelling of the UAV behavior in the AAM system, shown in an activity diagram. Before taking off, the UAV must verify that it has achieved all its SA goals. To do so, it must follow sequences of parallel sub-activities which allow it to maintain SA and ensure the safety of the AAM system. The first step is followed by a fork node (labeled Initial Fork Node) which represents the main goal: ensuring the safety of the AAM system. From this node, a parallel branch is made to the sub-activities Monitor C2 links status, Monitor threats, Monitor weather conditions, Monitor the state of the UAV, which are related, respectively, to the C2 management system, the DAA management system, the weather detection system, and the system responsible for monitoring the state of the UAV. If the execution of one of these four actions leads to a need for remediation, the process continues through the sub-activity Call a sequence of generic actions and an appropriate remediation action is executed. These generic actions and each of the four other sub-activities are described below.

Figure 14.

Modeling of the sub-activity Call a sequence of generic actions, containing the sequence of actions to resolve a problem detected during the verification of the situational awareness requirements.

Figure 15.

Modeling of the sub-activity Monitor C2 links status containing the sequence of actions to achieve the first SA goal.

Figure 16.

Modeling of the sub-activity Detect and avoid obstacles containing the sequence of actions to achieve the second SA goal.

Figure 17.

Modeling of the sub-activity Monitor weather conditions containing the sequence of actions to achieve the third SA goal.

Figure 18.

Modeling of the sub-activity Monitor the state of the UAV containing the sequence of actions to achieve the fourth SA goal.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

In the block definition diagram, arrows with a filled diamond at one end represent composition relationships (i.e., the block at the filled diamond end of the arrow is composed of the object at the other end). Arrows with an empty diamond at one end indicate aggregation relationships (i.e., the block at the empty diamond end of the arrow may contain the object at the other end). Simple arrows with a triangle at one end represent specialization relationships (i.e., the block at the triangle end is a generalization of the object at the other end). Simple arrows represent independent associations between two blocks.

The elements of an activity diagram include actions (represented by rectangles with rounded corners), control flows between actions (represented by arrows), decisions/merges (represented by diamonds), and one or more final nodes (represented by an empty circle containing a solid one). An activity diagram can also contain fork nodes, which allow an action to be duplicated into a set of parallel sub-actions, each of which produces a token at the end of its execution. For the main action of the fork node to be considered complete, all the tokens produced by the parallel actions must be combined at a join node, which produces the result of the action (the input of the associated fork node). Both fork and join nodes are represented by a thick line.