1. Introduction

Many scientific papers have been devoted to object measurement in only one dimension, such as length [1,2] or height [3,4,5,6,7], or to the measurement and classification of surfaces [8,9,10]. Such measurements are made to determine the size of objects [3,4], area [11,12], volume [13,14] or other needed measurements [15].

For three-dimensional models, images are obtained using LiDAR [16,17] laser-scanning technology. The quality of the LiDAR solution depends on the angular resolution settings of the LiDAR device, the measurement speed and the distance of the device from the measured object. With these parameters, the accuracy of the measurement can be adjusted as required. LiDAR-based measurements are so useful that attempts have been made to use a LiDAR-equipped Unmanned Aerial System (UAS) to separate and measure individual trees [4] and to estimate the evolution of trees by measuring their size over time [3]. Separating trees with LiDAR technology is used for mapping trees [18]. However, the cost of access to LiDAR equipment is relatively high, and the equipment itself is available from a small number of specialist companies. Mobile LiDAR technology, called Mobile Laser Scanning (MLS), and its point cloud are used to extract road boundaries, curbs and flat surfaces [19]. Another classification method using drones is Unmanned Aerial Vehicle Structure from Motion (UAV-SfM). UAV-SfM is used mostly for object classification. Comparing LiDAR and SfM [20] shows differences in image recognition between these two technologies. Image resolution with SfM technology is 116 points at 100 metres of altitude. This provides about 9 centimetres of image resolution. The other technology used in UAV photogrammetry employs Multi-View Stereopsis (MVS), known as UAV-MVS. This is a technique that combines photogrammetry and computer vision and is becoming increasingly popular for 3D reconstruction surveys. This technique needs computer 3D reconstruction, but it can produce very good resolution. In the paper “Multi-view stereopsis (MVS) from an unmanned aerial vehicle (UAV) for natural landform mapping” [21], the image resolution at a height of 40 metres is 6 points per square centimetre. It provides two centimetres of resolution at 100 metres of altitude. The cost of one day of work with UAV-SfM technology starts from USD 1600 in Europe (England). This does not include the cost of travel. UAV-SfM and UAV-MVS require a Real Time Kinematic (RTK) system with a ground station for high-precision measurements. This paper is partly dedicated to exploring the use of alternative optical observations for this purpose.

For the measurement of one- and two-dimensional objects, the most popular measurement methods are laser measurements, high-resolution or standard satellite photos, and an RTK system with a ground station.

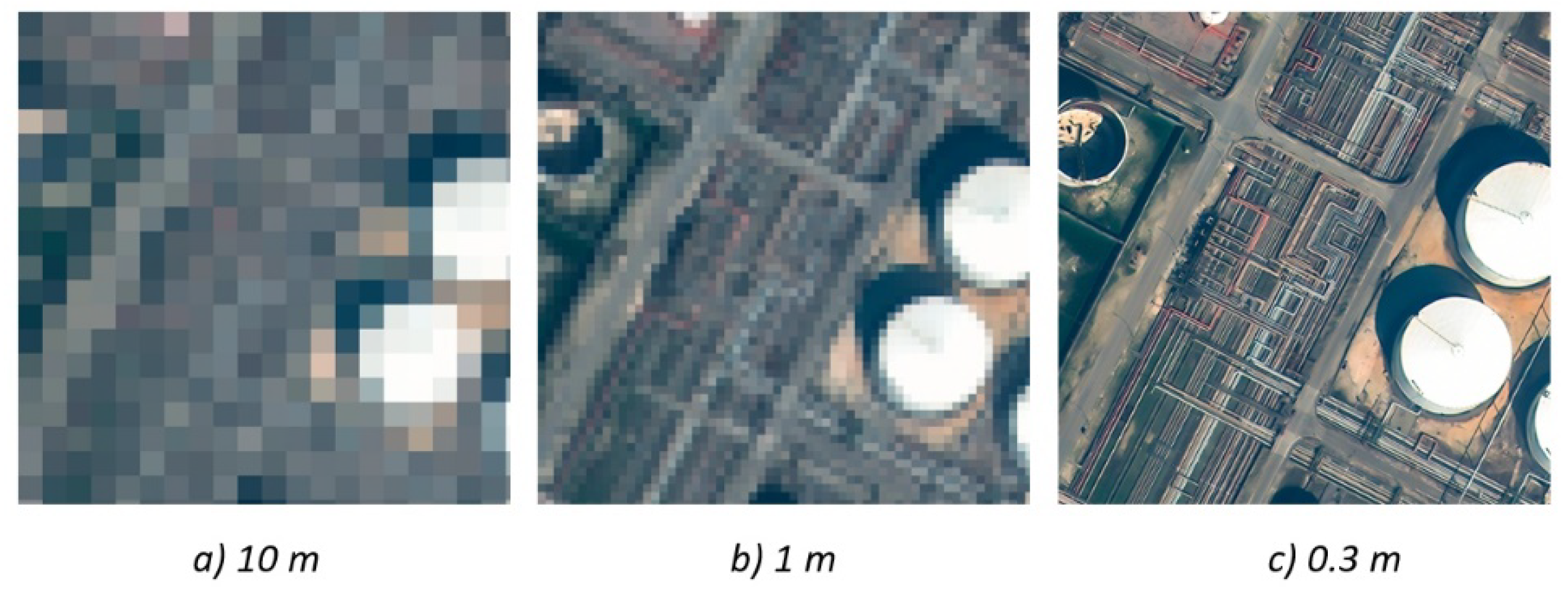

When only an estimate of distance, size, or area is needed (such as distances between cities), an exact measurement is not required. Such an estimate can be made from standard-resolution (1 m per pixel) satellite imagery, and the quality and timeliness of the imagery do not affect the value of the estimate. When estimating distances measured in hundreds of metres, high-resolution satellite imagery (30 cm per pixel) may be used. Measurements and object detection with satellite pictures are described and compared in the paper “Greenhouses Detection in Guanzhong Plain, Shaanxi, China: Evaluation of Four Classification Methods in Google Earth Engine” [22]. Such an estimate cannot be considered a measurement, but it reflects the measured distance with a relatively low error factor (1–2% estimation error possible). Estimation from satellite imagery is relatively cheap and easy. Satellite imagery is now widely available. If high-resolution images are required, they should be purchased from specialised services. A comparison of the quality of satellite images is shown in Figure 1 [23].

When measuring objects a few tens to a hundred metres in size, accurate manual laser measurements or systems with a ground-based base station are used. Laser measurement is relatively cheap but requires professional measurement devices. The cost of a non-prism measurement device is over USD 2500. Measurement using Real Time Kinematic (RTK) involves using handheld systems or drones whose position is determined based on calculations of time and offset relative to the operator’s broadcast signal using a Real Time Network (RTN) or according to a previously placed ground base station. The accuracy of GPS systems is 2 to 5 metres, while for the RTK system the accuracy is 1 to 2 centimetres when using a ground base station. This is the accuracy used for surveying measurements in Geographic Information System (GIS) applications [24]. Each of these methods has its own characteristics and can be applied in specific situations with the appropriate sensors [25,26].

For many applications accurate measurements are not required, such as estimating the amount of seed and fertiliser needed to plant fields, or the amount of fencing materials for multi-hectare fields. For such purposes approximating methods are used, which can be based on inaccurate data from satellite images or geodetic information. An accuracy of 1 metre in field size does not affect the quantity of seed required. Up-to-date images are not required either. However, estimating the area of crop losses caused by weather disasters or agricultural losses caused by animals requires up-to-date pictures with a high resolution.

In this paper the authors have tried to determine how accurate measurements can be when obtained with a UAS equipped with default optical devices. Is it necessary to use additional devices for such measurements? What is the accuracy of measurements with a standard optical camera? The authors have endeavoured to find an inexpensive method for measuring objects using very high-resolution images with higher accuracy and a lower error rate than satellite pictures. The objects in question are about a hundred metres long, with tens of centimetres of acceptable error (less than 1%). The authors hope that this paper will help researchers reduce the cost of their research and increase the availability of measurement methods in situations where a 1% margin of error is acceptable.

2. Scope of inquiry

Glossary

UAV – Unmanned Aerial Vehicle (the flying device)

GCS – Ground Control Station (the device controlling the UAV)

UAS – Unmanned Aircraft System – GCS and UAV as a complete communication and control system

GNSS – Global Navigation Satellite Systems

RTK – Real Time Kinematic

RTN – Real Time Network

RMS – Root Mean Square

GSD – Ground Sample Distance, meters per pixel

HFOV – Horizontal Field of View

LiDAR – Light Detection and Ranging

AGL – Above Ground Level – altitude of flight

MTOM – Maximum Take Off Mass

This paper aims to address the question of the accuracy of measurements derived from images taken from a low-cost drone when there is no ground reference point. The work is based solely on information obtained from the screen of the application used to control the drone and the value of the GPS positions used by the drone during the flight. Both footage and photos taken during the flight are used for the measurement.

The most accurate photos can be taken from drones or from the ground with stand pods. But not all images can be taken from the ground, so for further analysis, only photos taken from the drone will be used. All the photos were taken over the past few days, so they are suitable for estimating the damage to farm fields caused by wind storms. Drones can have many different sensors, depending on the requirements [25]. Two of the most popular methods used in papers for measurements are LiDAR [1,4,8,9,10,16,25,27] and GNSS RTK measurements [28]. Other methods of measurement with drones involve optical sensors. This is an area of interest in this paper.

The cost of renting a drone for surveying services ranges from USD 150 per flight hour for traditional pilot-guided flights to USD 200 for flights scheduled for GIS purposes. For full-day operations, the cost to rent a drone with a pilot ranges from USD 300 to USD 800 for photogrammetry flights (EU costs). These costs are due to the large size of the LiDAR head, as this necessitates the use of a large drone generating a lot of lifting power. A fixed-wing drone with LiDAR can fly at 70 km/h and can scan over 40 km2 in one 1-hour flight at 1000 metres Above Ground Level (AGL).

A multirotor with LiDAR can fly at 36 km/h, but for only 25 minutes, so it will cover 8 km2 at 1000 metres AGL. For regular mapping flights, fixed-wing drones are usually used. For scanning at less than 300 metres AGL, multirotor drones are mostly used. These drones do not need to embark on an extra flight when it comes to changing the flight direction. In addition, the large size of the drone (Maximum Take Off Mass, MTOM, over 25 kg) makes it necessary to obtain flight permits and, depending on the country, to reserve airspace, and this increases the cost of measurements. For small multirotor drones with a maximum altitude of no more than 120 metres AGL (in Europe) no flight permits are needed. The maximum resolution of middle-range LiDAR sensors, such as the OS1, is 0.08 metres for a distance of 50 metres (calculated with a LiDAR calculator) [29]. Professional flights undertaking 3D mapping can reach a height of 19000 ft to 20000 ft AGL (5800 to 6100 metres AGL). A Vexcel Eagle Mark 3 camera with 450-megapixel resolution can produce photos with a resolution of 25x25 centimetres per pixel [30].

A second method of measurement involves the use of satellite positioning systems. There are four core satellite navigation systems: GPS (United States), GLONASS (Russian Federation), Beidou (China) and Galileo (European Union). Two other positioning systems, the Indian NavIC and the Japanese QZSS, are local or alternative systems. For object measurements, any of the four main positioning systems can be used. For a more accurate measurement, an RTK base station is used. A base station is a fixed point located on the Earth’s surface, which measures positions with a high degree of accuracy, up to the level of single centimetres. The position of the drone is then determined based on the readout position of the navigation system and the offset from the ground base point.

Drones with a GNSS RTK system are being used in surveying. An example of the use of a drone-mounted RTK system is the work of Stoner R., Photogrammetry using UAV-mounted GNSS RTK [31]. The drone used is "The DJI Phantom 4 RTK UAV mounted with a camera equipped with an FC6310R lens (f = 8.8 mm), a resolution of 4864 x 3648 pixels, and with a pixel size of 2.61 x 2.61 um (total price approx. EUR 6000)" [31]. The cited work states that "The DJI Phantom 4 RTK multicopter is an example of such a low-end UAV" [31]. The accuracy of the positioning system for a Single Point without a GNSS RTK measurement is: Horizontal: 1.5 metres (RMS), Vertical: 3.0 metres (RMS). The measurement error with a standard drone and a standard drone camera depends on many factors, among others the quality of the camera optics, the angle of the camera and the associated change in perspective. The accuracy of the GPS sensors installed in the drone and the interference affecting this system also influence the accuracy of the measurement. Weather conditions and wind gusts also interfere with the response time of the GPS system. The agility of the GPS system may differ from the instantaneous response of the optical system. This makes it difficult to estimate the measurement error. Assuming that the error of a single measurement caused by the above conditions can be up to 2 metres, the diagram below shows how this error affects the measurement depending on the size of the object being measured.

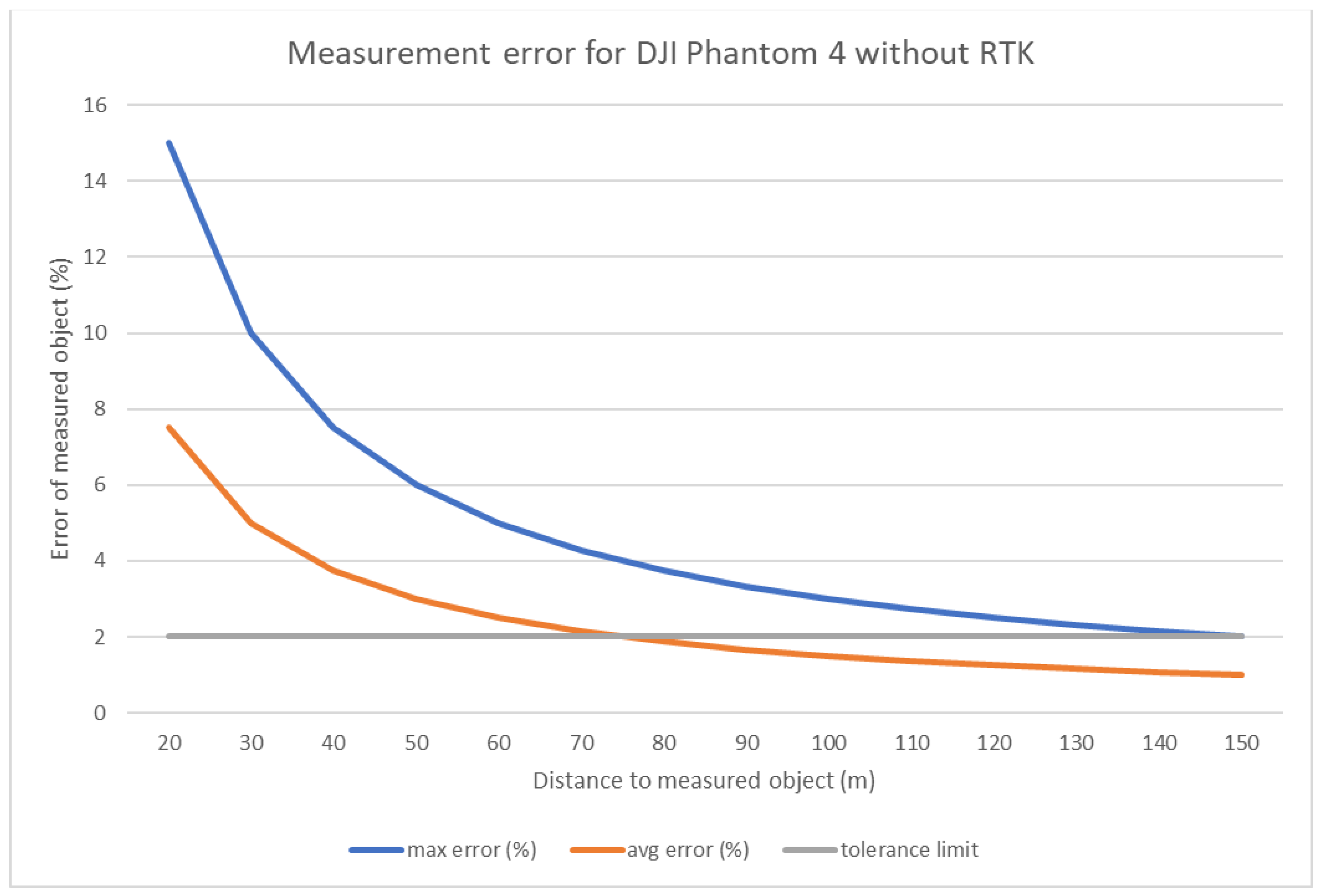

Figure 2.

Measurement error for DJI Phantom 4 (without RTK).


The chart shows a 2% error measurement with the DJI Phantom 4 drone. Assuming the most unfavourable case, the minimum size of the measured object should be 150 metres. For an average error measurement, the minimum object size should be 75 metres.
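The relationship between a fixed absolute positioning error and the smallest object that can be measured within a given relative error can be sketched as follows. This is a minimal illustration, not the computation behind the chart: the function name is hypothetical, and reading the worst case as two 1.5 m horizontal errors adding up at both ends of the object is an assumption that happens to agree with the 150 m and 75 m figures above.

```python
def min_object_size(abs_error_m: float, max_rel_error: float) -> float:
    """Smallest object length (m) for which abs_error_m stays within max_rel_error."""
    if max_rel_error <= 0:
        raise ValueError("max_rel_error must be positive")
    return abs_error_m / max_rel_error


HORIZONTAL_RMS_M = 1.5  # single-point horizontal accuracy quoted in the text
MAX_REL_ERROR = 0.02    # 2% error threshold taken from the chart

# Assumption: worst case = both end points are off by 1.5 m in opposite directions.
worst_case_m = min_object_size(2 * HORIZONTAL_RMS_M, MAX_REL_ERROR)
# Assumption: average case = a single 1.5 m error over the whole object.
average_case_m = min_object_size(HORIZONTAL_RMS_M, MAX_REL_ERROR)

print(f"worst case:   {worst_case_m:.0f} m")
print(f"average case: {average_case_m:.0f} m")
```

The same function can be reused with a different threshold, for example 0.01 for the 1% margin discussed in the introduction.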

In RTK systems such as the one described in “Low-Cost RTK GNSS System for UAS Applications” [30], the accuracy of measurements can be increased. It is necessary to use a ground base station for this purpose. In the example given [31], the "GNSS RTK Trimble Geo XR receiver with a Zephyr 2 antenna" [31] was used. For horizontal measurements, the maximum possible precision is 3 centimetres. This is related to the speed of signal propagation and its uncertainty. Better accuracy cannot be obtained, although some studies report an accuracy of 1 centimetre when measuring horizontally. The accuracy of the measurement is supposed to be improved by a ground base station [31]. The cost of purchasing a GNSS station is EUR 1800. The total cost of the measurement apparatus used in the cited experiment is about EUR 7800 [31] (around USD 8400). However, is such an expense necessary for indicative measurements of objects? Is the cost justified? How accurate can measurements be when obtained with a standard UAS equipped with default optical devices? Is it necessary to use additional devices for such measurements? What is the accuracy of measurements with a standard optical camera? Are measurements done with cheap drones (less than USD 800) useful?

3. Material and methods

Measurement 1: pictures from video without quality analysis

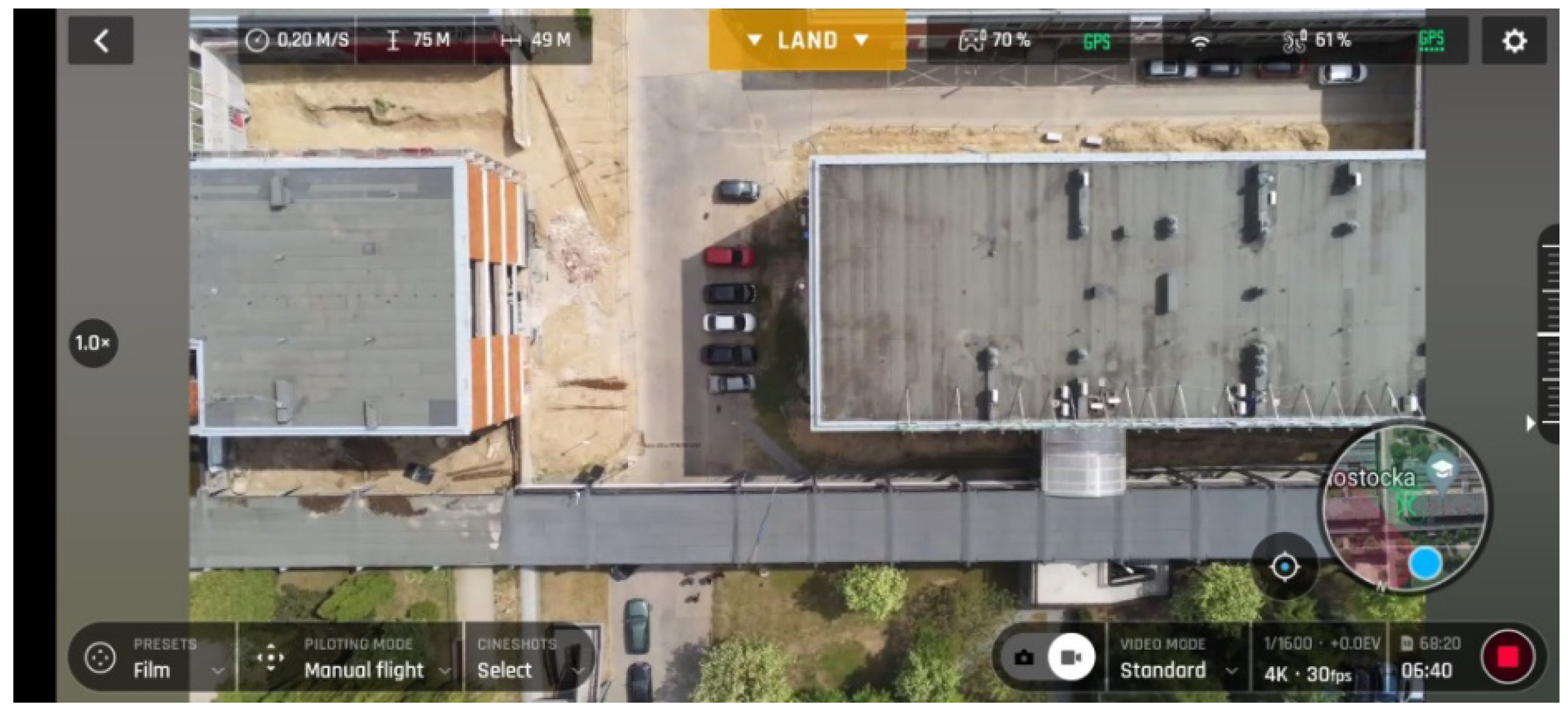

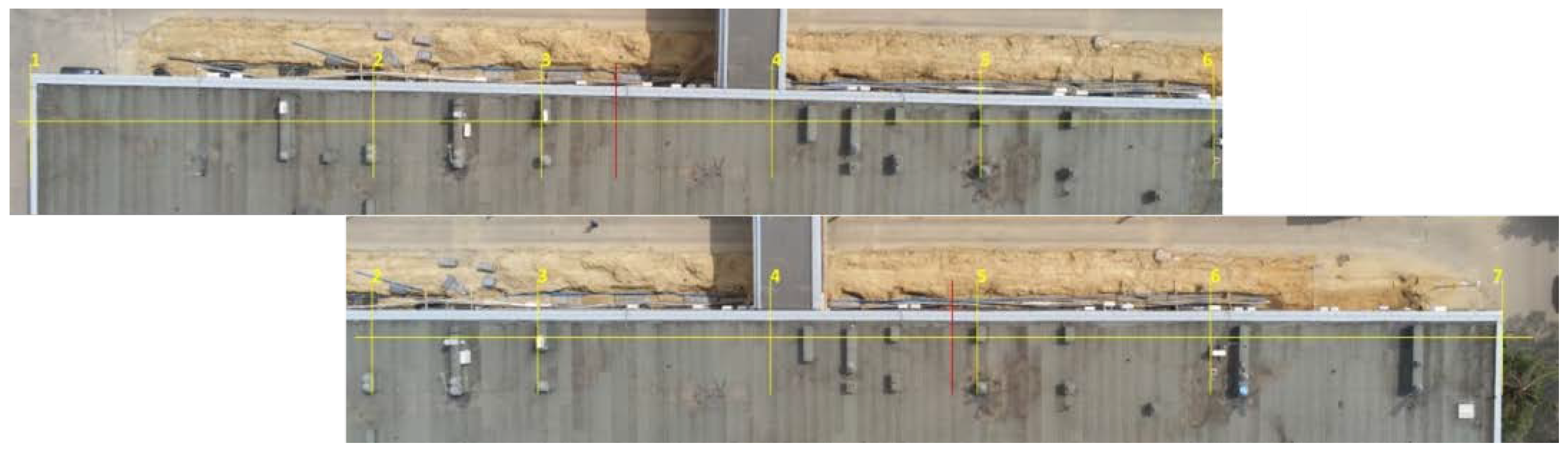

The measurement of the Technical University building was made using these two pictures taken from the video:

Figure 8.

Technical University in Bialystok- Measurement points.


Both photos can be combined to create a complete picture of the building, but measurements for these pictures are calculated separately based on the centre of the picture and certain points of interest in the pictures:

East wall

Air chimney 1

Air condition 1

Middle of building

Air chimney 2

Air condition 2

West wall

A yellow line in each picture indicates the middle of the picture and the middle point for the calculation.

The pixel position of each point in each picture is shown in the table below:

Table 2. Experiment 1 measurements (in pixels).

| p | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 1st pic | 65 | 1151 | 1683 | 2413 | 3074 | 3812 |  |
| Name | East wall | Air chim 1 | Air cond 1 | middle | Air chim 2 | Air cond 2 | West wall |
| 2nd pic |  | 82 | 607 | 1344 | 1999 | 2738 | 3663 |

Measurements in meters were calculated from the middle of the screen (red line on pictures), using a proportion relative to the full width of the screen:

Middle point of the building is on the opposite edge of the building in each picture.

The calculated distance (in meters) of every point from the middle of each picture, for 0,021437 m per pixel:

Table 3. Experiment 1 measurements (in meters).

| p | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 1st pic | 39,765 | 16,485 | 5,080 | 10,568 | 24,738 | 40,558 |  |
| 2nd pic |  | 39,400 | 28,146 | 12,347 | 1,693 | 17,535 | 37,364 |
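The conversion from pixel positions to distances from the picture centre can be sketched as below. This is an illustration only: the frame width of 3840 pixels is an assumption inferred from the tabulated values (it reproduces them to within about a millimetre), and the function name is hypothetical.

```python
GSD_M_PER_PX = 0.021437  # metres per pixel, as stated in the text
FRAME_WIDTH_PX = 3840    # assumption: frame width inferred from the tabulated values


def offset_from_centre_m(pixel_x, width_px=FRAME_WIDTH_PX, gsd=GSD_M_PER_PX):
    """Horizontal distance (m) of a pixel column from the centre line of the picture."""
    return abs(pixel_x - width_px / 2) * gsd


# Pixel positions of the points of interest in the first picture.
first_pic_px = {
    "East wall": 65,
    "Air chim 1": 1151,
    "Air cond 1": 1683,
    "middle": 2413,
    "Air chim 2": 3074,
    "Air cond 2": 3812,
}

for name, px in first_pic_px.items():
    print(f"{name:<11} {offset_from_centre_m(px):7.3f} m")
```

Points on opposite sides of the centre line have their offsets added to obtain the distance between them; points on the same side have them subtracted.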

The total building length was calculated in two different ways:

The first way of counting (bold only) is:

The second way (underline) is:

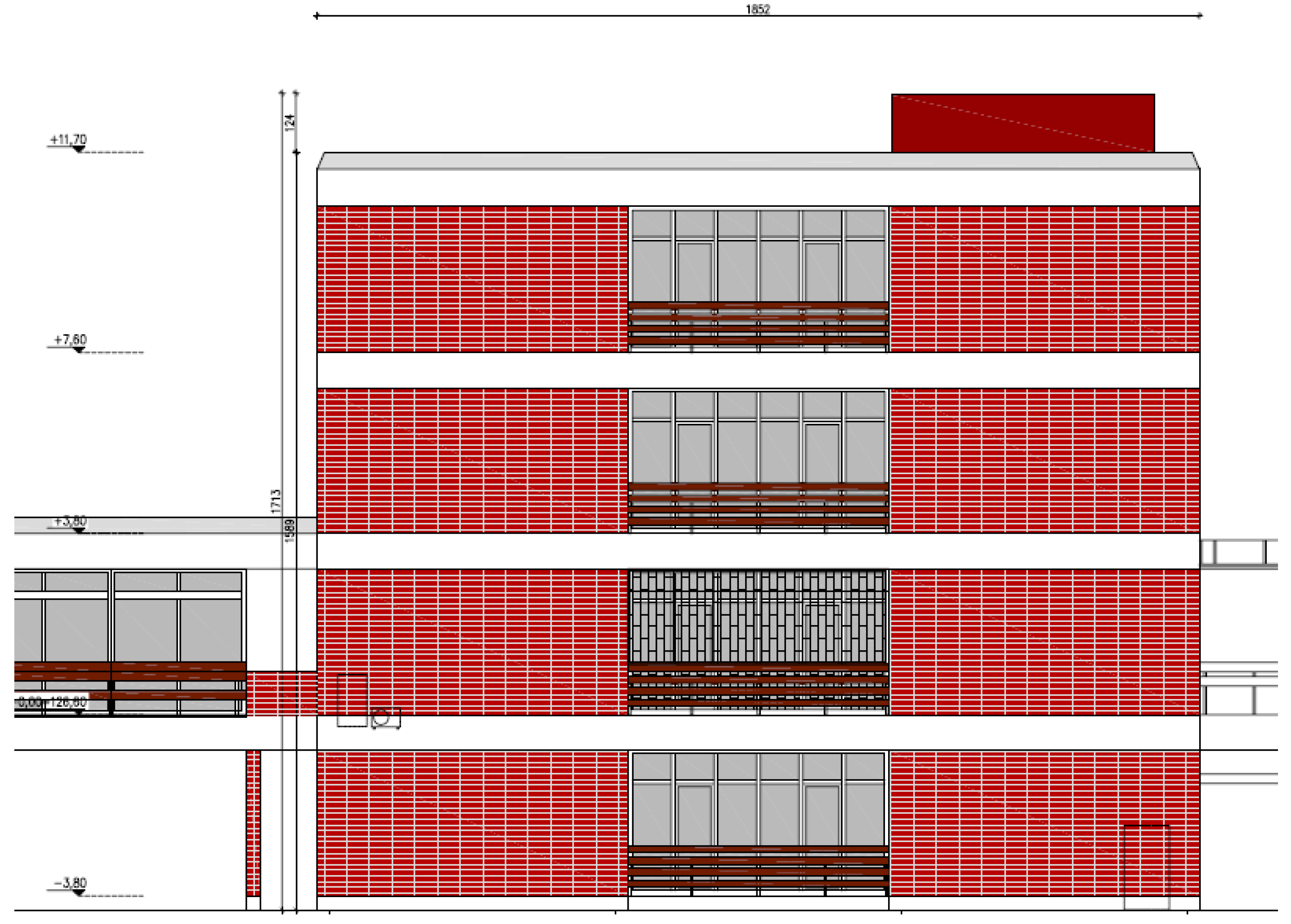

The real building length is 103,24 meters (technical documentation).

Accuracy of measurement (measurement error):

The second way of measurement produces a lower measurement error. For this size of building, measurements taken with the UAV RTK can result in a maximum error of 2.91% and an average error of 1.46%.

When comparing the real size of the building to the measurements obtained using the technical data and the possibility of obtaining results through UAV RTK without a ground station, the above method may be deemed insufficient.

The possibility of size correction can be obtained in two ways: performing flight altitude correction or performing camera angle width correction. In order to obtain the correct size, it would be necessary to make an altitude correction by raising the flight altitude by 1.9 metres or correcting the viewing angle to 70.2 degrees.

Counting flight height

Since in this experiment the measurements after lens correction are accurate to 4 decimal places and still do not give the true dimension, other parameters affecting the measurements should be checked. The lens and matrix errors have been corrected. The second parameter affecting the calculated size is the altitude of the flight.

In order to calculate the altitude of the actual flight, it is necessary to check which parameters the change in flight altitude will affect. The calculated building lengths are affected by the observation distance and the tangent of the angle. The angle was calculated in a very accurate way and is not subject to change. Therefore, it is necessary to calculate the actual height at which the measurement was made.
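The dependence on observation distance and on the tangent of the angle can be made concrete with the standard nadir-camera geometry. The sketch below is a simplified model (flat ground, no lens distortion), not the authors' corrected calculation; the 60 m height, 69 degree HFOV and 3840-pixel width are assumed inputs, and the measured length of 100.0 m is purely hypothetical.

```python
import math


def ground_sample_distance(height_m, hfov_deg, width_px):
    """Metres per pixel for a camera looking straight down over flat ground."""
    ground_width_m = 2.0 * height_m * math.tan(math.radians(hfov_deg) / 2.0)
    return ground_width_m / width_px


def corrected_height(assumed_height_m, measured_length_m, true_length_m):
    """Height at which the measured length would equal the true length.

    With a fixed view angle, every length in the picture scales linearly
    with the observation height.
    """
    return assumed_height_m * true_length_m / measured_length_m


# Assumed inputs: 60 m flight height, HFOV of 69 degrees, 3840-pixel frame width.
gsd = ground_sample_distance(height_m=60.0, hfov_deg=69.0, width_px=3840)
print(f"GSD at 60 m: {gsd:.6f} m/px")

# Hypothetical measured length of 100.0 m, used only to show the scaling.
print(f"corrected height: {corrected_height(60.0, 100.0, 103.24):.3f} m")
```

Because the scaling is linear, a given percentage error in the assumed height translates into the same percentage error in every measured length.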

It was experimentally calculated that the observation height should be 61.17 m. At this height, the measurement is correct to the nearest tenth of a centimetre. At the same time, at this distance, the size of the pixel is more than 2 centimetres. This means that the calculated accuracy is not achievable. Taking the pixel size as 2 centimetres means that the measurement distance can be reduced to 61.15 m, and it is this height that was taken for the height correction.

Correcting the measurement height by 125 centimetres means that the height given by the app was underestimated by 2.1%. To verify this, measuring the height is necessary.

Measurement problems: height and number of control points.

As altitude is a problem, it was decided to read altitude from the GPS position. The GPS position is not stored during filming, so the measurement method was changed. It was necessary to take photos instead of continuous filming. When taking photos, the drone camera has different parameters:

The horizontal field of view (HFOV) for taking photos is the same as filming and is approximately 69 degrees. Measured distortion is the same as for filming, so the same image correction is used for photo measurement.

The second problem with the first experiment is the number of control points. Eight control points are not enough for the measurement. Four more control points are needed to get a more accurate picture. This means that the photos should be taken from a lower height. It was decided to change the measurement from 60 to 40 metres. The height can be lower if the accuracy of the measurement is not sufficient.

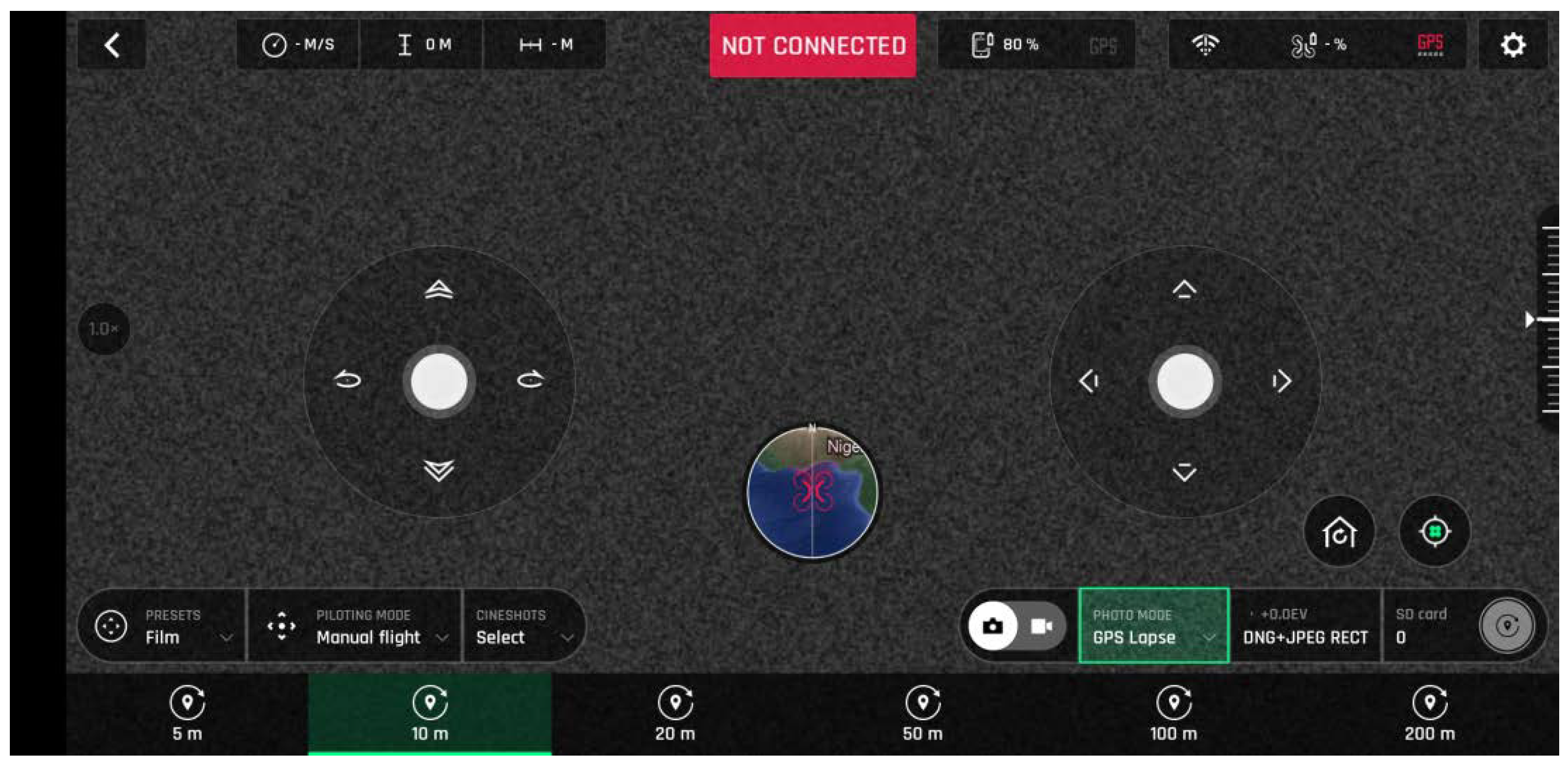

Measurement 2: measurement from photos with GPS height

The same drone, a Parrot Anafi, with the same camera, was used for the measurements. The measurements were taken using the GPS Lapse function [37] with a parameter of 10 metres. This means that the drone itself takes a photo after every 10 metres of flight regardless of the direction of flight. Photos with GPS position are saved automatically. The GPS position is saved in the EXIF data of the photo in JPG format. In addition, photos in DNG format were saved, but the information in these files was not used.

Figure 11.

Operation application with height of flight (bottom right corner and bottom line).


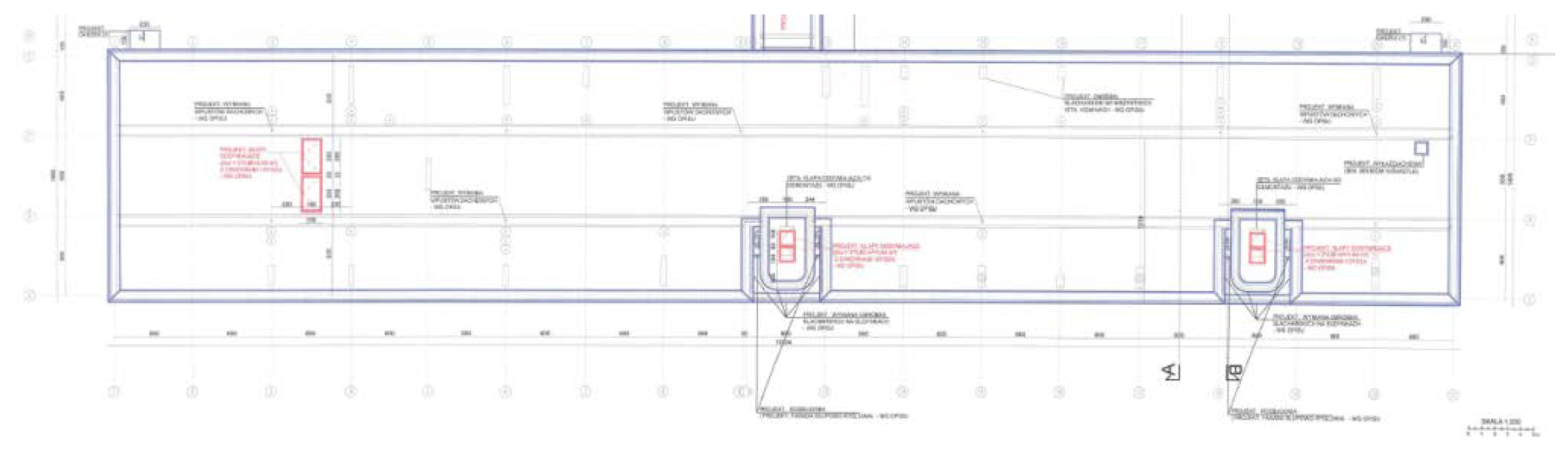

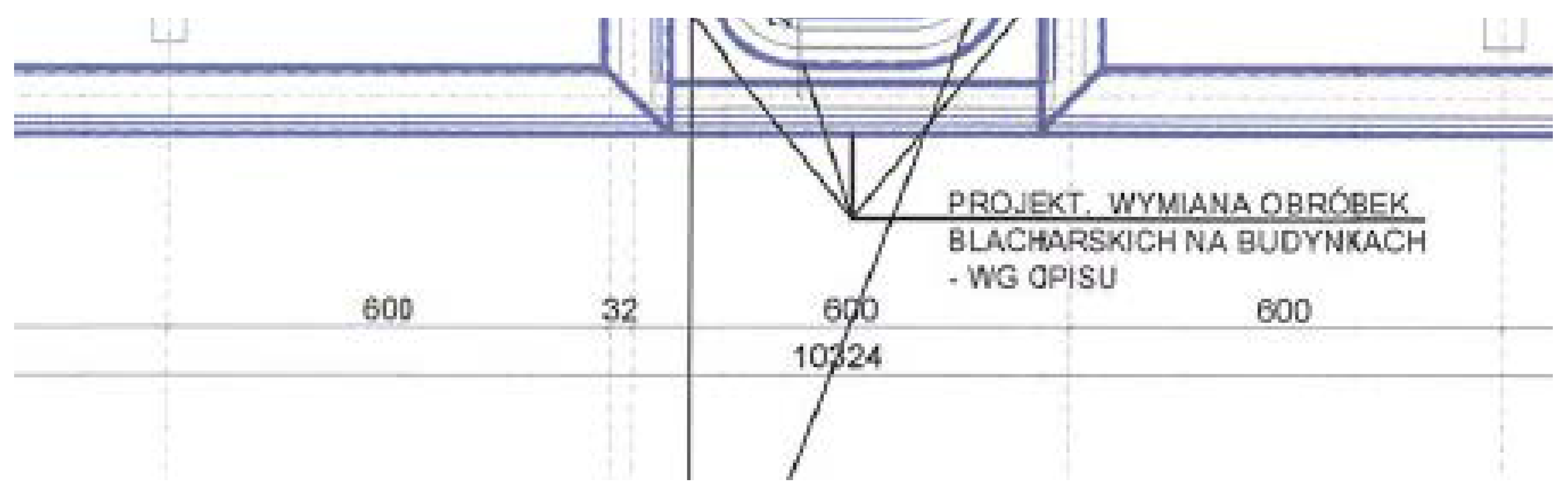

The filmed building has a protective tin apron laid on its edge. On this apron there are connections between successive sheets of metal. They are located every 2 meters or so, and it is these points that were taken as a characteristic element and adopted as Control Points. The Control Point number is a consecutive connection number. The beginning of the apron was taken as Control Point 1 (CP1). The last viewed is Control Point 54 (CP54).

In addition, it was observed that the beginning of the apron does not coincide with the beginning of the wall, so the offset of the beginning of the apron with respect to the wall had to be measured. The offset was measured using the traditional method. For the east corner along the long edge, it is -24.1 cm, for the west corner -25.8 cm. The measurement values should be added to the calculated length of the building before making a final comparison with the length of the building resulting from the technical design.

Flights were performed in weather that was as windless as possible. For the entire length of the building, 10 photos were taken automatically and one additional photo was taken manually when the edge of the building opposite the start was reached. The measurements of the beginning and end of the building were corrected each time by the offset in pixels between the control point and the center of the image.

Feature points were determined for each image and the pixel number for each was recorded. Only some of the images were taken for measurements so that each point was covered by at least 2 measurements and points located at the edges of the images by three measurements.

All heights for the control points come from the GPS built into the Parrot Anafi drone.

The GPS height of the building was measured from a photo taken in front of the east corner of the building, looking at the north edge of the building:

Figure 12.

Top part of a Technical University building.


Top of the building roof read from GPS Altitude data from picture:

Table 7. GPS Altitude of top of an object.

| Picture name | GPS height |
| --- | --- |
| P1480531.JPG | 159,85986330 |

Four flights were conducted for the purpose of measuring the building.

During each flight 11 pictures were taken, but just 5 of them were taken for measurement. It was enough to cover every single control point with a minimum of two measurements. It makes calculating more stable and gives two possible methods for checking results. Photos were taken to check the visibility of control points in other photos. The last control point on every picture is taken three times. Five pictures taken for measurements are shown below (unnecessary parts of the pictures are omitted).

Figure 13.

Shifted pictures taken during flight 1.


We end up with a digital image or model of the building that consists of about 8000 pixels, which can be used for detailed measurements or analysis. Control points are not in the same position in every picture because of the different angles of view for each picture. Each of the four flights was made in similar weather conditions, although there were differences, as described below.

Measurement algorithm

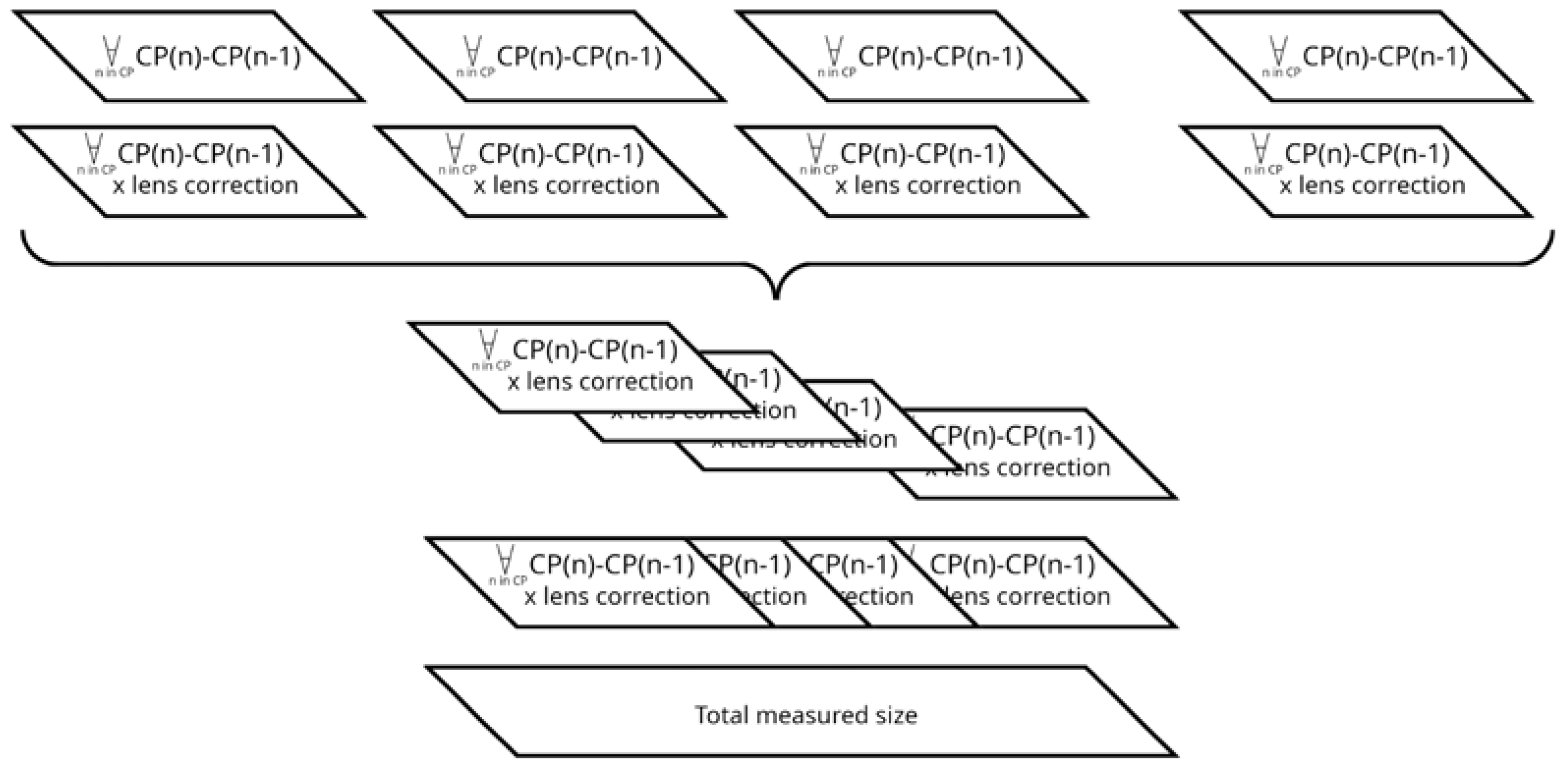

The distance between the points is calculated. The calculated distance is then recalculated with the lens correction that was shown in the previous experiment. Based on the difference in height and the previous recalculation of the distance from the centre of the images, the distance in centimetres is calculated. These operations are performed for each photo separately.

Then, distance measurements derived from each photo are integrated together, in the same way the photos themselves are overlaid, to produce a comprehensive representation. The calculated distances may differ between photos due to the different distance of each photo to the measured object. To compensate for the differences, an average is calculated from the individual measurements. This reduces the measurement error. The averaged distances between the control points (CPs) are then added together. The result obtained is compared with the length of the building from the construction documents (Figure 7).

The steps of the algorithm are shown in Figure 14.
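The averaging and summation steps described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the function and variable names are hypothetical, the lens and height corrections are taken as already applied to the per-photo lengths, and the sample lengths are invented for the example rather than taken from the measurements.

```python
from collections import defaultdict
from statistics import mean


def building_length(per_photo_segments):
    """Average each control-point segment over all photos, then sum the averages.

    per_photo_segments: one dict per photo, mapping (cp_from, cp_to) to the
    segment length in metres measured in that photo.
    """
    samples = defaultdict(list)
    for segments in per_photo_segments:
        for key, length_m in segments.items():
            samples[key].append(length_m)
    averaged = {key: mean(values) for key, values in samples.items()}
    return sum(averaged.values()), averaged


# Hypothetical lengths (m) for two overlapping photos; not values from this paper.
photo_a = {(1, 5): 9.0, (5, 7): 4.0}
photo_b = {(5, 7): 4.2, (7, 12): 10.0}

total_m, averaged = building_length([photo_a, photo_b])
print(averaged)
print(f"total: {total_m:.2f} m")
```

Keying the segments by their control-point pair means a segment seen in two or three photos is averaged over exactly those photos, while a segment seen once is passed through unchanged.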

Flight 1

The flight was made from the east side to the west side of the building, about 43 metres above the top of the roof. The weather conditions should not have interfered with the drone (cloudy but no rain, no strong wind). Each picture stores the height in its EXIF data. The height of every picture is shown in the table:

Table 8. GPS Altitude and drone position taken from used photos.

| Picture name | GPS height | Measurement height from the top of building (m) |
| --- | --- | --- |
| P1480559.JPG | 203,49528500 | 43,63542170 |
| P1480562.JPG | 203,04170230 | 43,18183900 |
| P1480564.JPG | 203,25070190 | 43,39083860 |
| P1480567.JPG | 204,00056460 | 44,14070130 |
| P1480569.JPG | 203,92114260 | 44,06127930 |
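The measurement heights in the last column follow from subtracting the GPS altitude of the roof top (159,85986330, read from picture P1480531.JPG) from the GPS altitude stored with each photo. A minimal sketch of that subtraction, with hypothetical variable names:

```python
ROOF_GPS_ALT_M = 159.85986330  # GPS altitude of the roof top (picture P1480531.JPG)

# GPS altitudes stored in the EXIF data of the flight 1 photos.
photo_gps_alt_m = {
    "P1480559.JPG": 203.49528500,
    "P1480562.JPG": 203.04170230,
    "P1480564.JPG": 203.25070190,
    "P1480567.JPG": 204.00056460,
    "P1480569.JPG": 203.92114260,
}

# Height of the camera above the roof for each photo.
height_above_roof_m = {
    name: alt - ROOF_GPS_ALT_M for name, alt in photo_gps_alt_m.items()
}

for name, height in height_above_roof_m.items():
    print(f"{name}: {height:.8f} m")
```

Since both altitudes come from the same GPS receiver, a constant bias in the absolute altitude cancels out in the difference; only the drift between the two readings remains.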

The pixel positions of the Control Points in each picture are shown in the table below:

Table 9. Pixel positions for Control Points (CPXX).

| Picture | CP1 | CP5 | CP7 | CP12 | CP15 | CP17 | CP22 | CP25 | CP29 | CP32 | CP35 | CP40 | CP42 | CP47 | CP51 | CP54 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1480559.JPG | 2400 | 3071 | 3367 | 4110 | 4559 |  |  |  |  |  |  |  |  |  |  |  |
| P1480562.JPG | 127 | 787 | 1079 | 1812 | 2256 | 2552 | 3299 | 3752 | 4361 |  |  |  |  |  |  |  |
| P1480564.JPG |  |  |  | 299 | 735 | 1028 | 1766 | 2209 | 2809 | 3262 | 3718 | 4483 |  |  |  |  |
| P1480567.JPG |  |  |  |  |  |  |  | 33 | 614 | 1053 | 1494 | 2234 | 2535 | 3280 | 3886 | 4210 |
| P1480569.JPG |  |  |  |  |  |  |  |  |  |  | 13 | 723 | 1014 | 1741 | 2331 | 2650 |

The pictures were selected so that every difference between control points is counted twice: once in the left part of one picture, and once in the right part of another. Consecutive pictures are shifted by about half a picture. This provides self-correction and averages the measurement error between the left and right sides of the pictures.

The difference (in pixels) between Control Points measured for every picture is shown in the table below:

Table 10. Difference between Control Points (in pixels).

| Picture | CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1480559.JPG | 671 | 295 | 739 | 445 |  |  |  |  |  |  |  |  |  |  |  |
| P1480562.JPG | 660 | 292 | 732 | 442 | 296 | 743 | 450 | 605 |  |  |  |  |  |  |  |
| P1480564.JPG |  |  |  | 436 | 293 | 737 | 440 | 600 | 452 | 453 | 760 |  |  |  |  |
| P1480567.JPG |  |  |  |  |  |  |  | 581 | 439 | 440 | 738 | 301 | 745 | 603 | 321 |
| P1480569.JPG |  |  |  |  |  |  |  |  |  |  | 710 | 291 | 726 | 587 | 319 |

Because of the half picture shift for two pictures, the difference between the measurement for the same control points can be over 40 pixels. This is caused by the different view angles – around 0 degrees in the middle of the picture to 34 degrees at the far edge of picture.

The difference (in metres) between control points measured for every picture is shown in the table:

Table 11. Difference between Control Points (in meters).

| Picture | CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1480559.JPG | 9,348 | 4,124 | 10,35 | 6,255 |  |  |  |  |  |  |  |  |  |  |  |
| P1480562.JPG | 9,1 | 4,026 | 10,11 | 6,122 | 4,081 | 10,3 | 6,246 | 8,397 |  |  |  |  |  |  |  |
| P1480564.JPG |  |  |  | 6,041 | 4,059 | 10,22 | 6,138 | 8,313 | 6,276 | 6,318 | 10,6 |  |  |  |  |
| P1480567.JPG |  |  |  |  |  |  |  | 8,187 | 6,186 | 6,214 | 10,43 | 4,242 | 10,5 | 8,539 | 4,566 |
| P1480569.JPG |  |  |  |  |  |  |  |  |  |  | 9,987 | 4,093 | 10,23 | 8,299 | 4,487 |

The differences in metres between the control points were calculated with the lens corrections from the first experiment and are shown in the table.

Calculation of building length from flight 1 data

The building length is calculated as the sum of the average measurements for every difference between control points.

for:

CPX, CPY – Control Point X, Control Point Y

n – number of measurements
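Written out, the description above corresponds to the expression below. The symbols $L$, $S$, $n_{XY}$ and $d_i$ are notation introduced here for illustration and are not taken from the original formula: $L$ is the building length, $S$ the set of consecutive control-point pairs, $n_{XY}$ the number of photos in which the pair $(CP_X, CP_Y)$ was measured, and $d_i(CP_X, CP_Y)$ the distance between the two points measured in photo $i$.

```latex
L = \sum_{(X,Y) \in S} \frac{1}{n_{XY}} \sum_{i=1}^{n_{XY}} d_i(CP_X, CP_Y)
```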

For flight 1, the average differences between Control Points (in meters) are:

Table 12. Difference between Control Points (in meters).

| CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 | Summary |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 8,61 | 3,79 | 9,51 | 5,71 | 3,8 | 9,55 | 5,75 | 7,73 | 5,81 | 5,83 | 9,62 | 3,89 | 9,67 | 7,82 | 4,21 | 101,31 |
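The summary value can be cross-checked by adding up the averaged segments. The short sketch below does this with the rounded values from the table; the variable names are hypothetical. The rounded values sum to 101.30 m, which agrees with the stated 101,31 to within the rounding of the individual segments.

```python
import math

# Averaged segment lengths (m) for flight 1, as listed in the table (rounded).
segment_averages_m = [
    8.61, 3.79, 9.51, 5.71, 3.80, 9.55, 5.75, 7.73,
    5.81, 5.83, 9.62, 3.89, 9.67, 7.82, 4.21,
]
TABLE_TOTAL_M = 101.31  # value in the Summary column

# fsum avoids accumulating floating-point error over the 15 terms.
total_m = math.fsum(segment_averages_m)

# Each rounded segment can be off by at most 0.005 m.
max_rounding_gap_m = 0.005 * len(segment_averages_m)

print(f"sum of rounded averages: {total_m:.2f} m")
print(f"difference from table:   {abs(total_m - TABLE_TOTAL_M):.2f} m")
print(f"within rounding:         {abs(total_m - TABLE_TOTAL_M) <= max_rounding_gap_m}")
```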

Flight 2

The flight was made from the east side to the west side of the building, about 43 metres above the top of the roof. The weather conditions should not have interfered with the drone (cloudy but no rain, no strong wind). Each picture stores the height in its EXIF data. The height of every picture is shown in the table:

Table 13. GPS Altitude and drone position taken from used photos.

| Picture name | GPS height | Measurement height from the top of building (m) |
| --- | --- | --- |
| P1490570.JPG | 204,28657530 | 44,42671200 |
| P1490572.JPG | 204,90472410 | 45,04486080 |
| P1490575.JPG | 203,36010740 | 43,50024410 |
| P1490577.JPG | 203,89526370 | 44,03540040 |
| P1490580.JPG | 203,49316410 | 43,63330080 |

The pixel positions of the Control Points in each picture are shown in the table below:

Table 14. Pixel positions for Control Points (CPXX).

| Picture | CP1 | CP5 | CP7 | CP12 | CP15 | CP17 | CP22 | CP25 | CP29 | CP32 | CP35 | CP40 | CP42 | CP47 | CP51 | CP54 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1490570.JPG |  |  |  |  |  |  |  |  |  |  |  | 396 | 698 | 1447 | 2044 | 2363 |
| P1490572.JPG |  |  |  |  |  |  |  |  | 417 | 853 | 1287 | 2018 | 2310 | 3049 | 3643 | 3963 |
| P1490575.JPG |  |  |  | 174 | 604 | 893 | 1616 | 2051 | 2640 | 3085 | 3533 | 4283 | 4588 |  |  |  |
| P1490577.JPG | 10 | 655 | 942 | 1660 | 2098 | 2394 | 3132 | 3581 | 4186 |  |  |  |  |  |  |  |
| P1490580.JPG | 2245 | 2928 | 3226 | 3980 | 4436 |  |  |  |  |  |  |  |  |  |  |  |

The pictures were selected so that every difference between control points is counted twice: once in the left part of one picture, and once in the right part of another. Consecutive pictures are shifted by about half a picture. This provides self-correction and averages the measurement error between the left and right sides of the pictures.

The difference (in pixels) between Control Points measured for every picture is shown in the table below:

Table 15. Difference between Control Points (in pixels).

| Picture | CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1490570.JPG |  |  |  |  |  |  |  |  |  |  |  | 4,283 | 10,62 | 8,466 | 4,524 |
| P1490572.JPG |  |  |  |  |  |  |  |  | 6,268 | 6,24 | 10,51 | 4,198 | 10,62 | 8,54 | 4,601 |
| P1490575.JPG |  |  |  | 5,972 | 4,014 | 10,04 | 6,042 | 8,181 | 6,181 | 6,222 | 10,42 | 4,236 |  |  |  |
| P1490577.JPG | 9,067 | 4,035 | 10,09 | 6,157 | 4,161 | 10,37 | 6,312 | 8,505 |  |  |  |  |  |  |  |
| P1490580.JPG | 9,515 | 4,151 | 10,5 | 6,353 |  |  |  |  |  |  |  |  |  |  |  |

Because consecutive pictures are shifted by half a picture, the measurements of the same pair of control points in two pictures can differ by more than 40 pixels. This is caused by the different view angles, which range from around 0 degrees in the middle of the picture to 34 degrees at its far edge.

The difference (in metres) between control points measured for every picture is shown in the table:

Table 16. Difference between Control Points (in metres).

| Picture | CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1490570.JPG | | | | | | | | | | | | 4.283 | 10.62 | 8.466 | 4.524 |
| P1490572.JPG | | | | | | | | | 6.268 | 6.24 | 10.51 | 4.198 | 10.62 | 8.54 | 4.601 |
| P1490575.JPG | | | | 5.972 | 4.014 | 10.04 | 6.042 | 8.181 | 6.181 | 6.222 | 10.42 | 4.236 | | | |
| P1490577.JPG | 9.067 | 4.035 | 10.09 | 6.157 | 4.161 | 10.37 | 6.312 | 8.505 | | | | | | | |
| P1490580.JPG | 9.515 | 4.151 | 10.5 | 6.353 | | | | | | | | | | | |

The differences in metres between the control points were calculated using the lens corrections from the first experiment and are shown in the table above.

Calculating the building length from flight 2 data

The building length is calculated as the sum of the average measurements for every difference between control points.

The average differences between Control Points for this flight (in metres) are:

Table 17. Average difference between Control Points (in metres).

| CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 | Sum |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 8.68 | 3.81 | 9.58 | 5.73 | 3.82 | 9.53 | 5.74 | 7.77 | 5.8 | 5.8 | 9.73 | 3.94 | 9.92 | 7.91 | 4.24 | 101.99 |
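The summation described above can be sketched in a few lines of Python. This is only an illustration using the rounded flight 2 averages from Table 17; the variable and function names are hypothetical, and because the table values are rounded to two decimals the computed total may differ from the reported 101.99 m by about a centimetre.

```python
# Average control-point segment lengths for flight 2, copied from Table 17 (metres).
segment_averages_m = [8.68, 3.81, 9.58, 5.73, 3.82, 9.53, 5.74, 7.77,
                      5.8, 5.8, 9.73, 3.94, 9.92, 7.91, 4.24]


def building_length(segments):
    """Sum the per-segment averages to obtain the total building length."""
    return sum(segments)


total_m = building_length(segment_averages_m)
print(f"Estimated building length: {total_m:.2f} m")
```

Summing averaged segments, rather than averaging whole-building totals, lets each segment use every picture in which it is visible.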

Flight 3

The flight was made from the east side to the west side of the building, at about 43 metres above the top of the roof. The weather conditions should not have interfered with the drone (cloudy, but with no rain and no strong wind). Each picture stores the flight height in its EXIF data. The height for every picture is shown in the table below:

Table 18. GPS Altitude and drone position taken from used photos.

| Picture name | GPS height (m) | Measurement height from the top of building (m) |
| --- | --- | --- |
| P1500581.JPG | 202.92485050 | 43.06498720 |
| P1500584.JPG | 203.20901490 | 43.34915160 |
| P1500586.JPG | 203.18800350 | 43.32814020 |
| P1500589.JPG | 203.68888850 | 43.82902520 |
| P1500591.JPG | 203.38568120 | 43.52581790 |
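The relation between the two columns of Table 18 can be illustrated with a short Python sketch. It assumes that the height above the roof is the GPS altitude minus a fixed roof altitude; the constant `ROOF_ALTITUDE_M` is not stated in the text and is inferred here from the constant difference between the two columns, so it should be read as an assumption.

```python
# GPS altitudes from the EXIF data of the flight 3 photos (Table 18), in metres.
gps_altitude_m = {
    "P1500581.JPG": 202.92485050,
    "P1500584.JPG": 203.20901490,
    "P1500586.JPG": 203.18800350,
    "P1500589.JPG": 203.68888850,
    "P1500591.JPG": 203.38568120,
}

# Assumption: altitude of the roof top, inferred as the constant difference
# between the two columns of Table 18 (not given explicitly in the text).
ROOF_ALTITUDE_M = 159.8598633


def height_above_roof(gps_altitude, roof_altitude=ROOF_ALTITUDE_M):
    """Return the camera height above the roof for one photo, in metres."""
    return gps_altitude - roof_altitude


heights_m = {name: height_above_roof(alt) for name, alt in gps_altitude_m.items()}
for name, height in heights_m.items():
    print(f"{name}: {height:.3f} m above the roof")
```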

The pixel positions of the Control Points in each picture are shown in the table below:

Table 19. Pixel positions for Control Points (CPXX).

| Picture | CP1 | CP5 | CP7 | CP12 | CP15 | CP17 | CP22 | CP25 | CP29 | CP32 | CP35 | CP40 | CP42 | CP47 | CP51 | CP54 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1500581.JPG | 2425 | 3115 | 3420 | 4190 | | | | | | | | | | | | |
| P1500584.JPG | 28 | 708 | 1005 | 1756 | 2209 | 2512 | 3275 | 3736 | 4356 | | | | | | | |
| P1500586.JPG | | | | 130 | 580 | 879 | 1628 | 2080 | 2684 | 3138 | 3596 | 4362 | | | | |
| P1500589.JPG | | | | | | | | | 336 | 777 | 1220 | 1958 | 2256 | 3004 | 3605 | 3927 |
| P1500591.JPG | | | | | | | | | | | | 431 | 724 | 1471 | 2070 | 2391 |

The pictures were selected so that every difference between control points is measured twice: once in the left part of one picture and once in the right part of another. Consecutive pictures are shifted by half a picture. This provides a degree of self-correction, because the measurement error is averaged over the left and right sides of the pictures.

The difference (in pixels) between Control Points measured for every picture is shown in the table below:

Table 20. Difference between Control Points (in pixels).

| Picture | CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1500581.JPG | 690 | 304 | 765 | | | | | | | | | | | | |
| P1500584.JPG | 680 | 297 | 750 | 450 | 303 | 763 | 458 | 616 | | | | | | | |
| P1500586.JPG | | | | 450 | 299 | 748 | 450 | 604 | 453 | 456 | 762 | | | | |
| P1500589.JPG | | | | | | | | | 441 | 443 | 736 | 297 | 748 | 599 | 320 |
| P1500591.JPG | | | | | | | | | | | | 293 | 746 | 597 | 321 |

Because consecutive pictures are shifted by half a picture, the measurements of the same pair of control points in two pictures can differ by more than 40 pixels. This is caused by the different view angles, which range from around 0 degrees in the middle of the picture to 34 degrees at its far edge.

The difference (in metres) between control points measured for every picture is shown in the table:

Table 21. Difference between Control Points (in metres).

| Picture | CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1500581.JPG | 9.489 | 4.194 | 10.59 | | | | | | | | | | | | |
| P1500584.JPG | 9.412 | 4.111 | 10.39 | 6.27 | 4.194 | 10.56 | 6.381 | 8.582 | | | | | | | |
| P1500586.JPG | | | | 6.226 | 4.137 | 10.36 | 6.253 | 8.356 | 6.281 | 6.336 | 10.6 | | | | |
| P1500589.JPG | | | | | | | | | 6.171 | 6.199 | 10.33 | 4.17 | 10.47 | 8.41 | 4.506 |
| P1500591.JPG | | | | | | | | | | | | 4.072 | 10.38 | 8.324 | 4.461 |

The differences in metres between the control points were calculated using the lens corrections from the first experiment and are shown in the table above.
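As a rough consistency check, and not a reproduction of the authors' conversion (which applies the lens corrections and the flight height), the scale implied by the tabulated values can be computed by dividing each metre difference by the corresponding pixel difference. The sketch below does this for picture P1500581.JPG using the values from Tables 20 and 21; the function name is hypothetical.

```python
# Pixel and metre differences for picture P1500581.JPG (Tables 20 and 21).
pixel_diffs = [690, 304, 765]
metre_diffs = [9.489, 4.194, 10.59]


def implied_scale(pixels, metres):
    """Return the metres-per-pixel ratio implied by one pair of table values."""
    return metres / pixels


scales = [implied_scale(p, m) for p, m in zip(pixel_diffs, metre_diffs)]
for p, m, s in zip(pixel_diffs, metre_diffs, scales):
    print(f"{p} px -> {m} m ({s * 100:.2f} cm per pixel)")
```

For these three segments the implied scale stays below 1.4 cm per pixel, which is in line with the sub-2 cm per pixel figure quoted in the discussion.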

Calculating the building length from flight 3 data

The building length is calculated as the sum of the average measurements for every difference between control points.

The average differences between Control Points for this flight (in metres) are:

Table 22. Average difference between Control Points (in metres).

| CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 | Sum |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 8.82 | 3.87 | 9.76 | 5.82 | 3.89 | 9.76 | 5.87 | 7.88 | 5.8 | 5.84 | 9.73 | 3.84 | 9.73 | 7.79 | 4.17 | 102.56 |

Flight 4

The flight was made from the east side to the west side of the building, at about 43 metres above the top of the roof. The weather conditions should not have interfered with the drone (cloudy, but with no rain; the wind was strong, but below the maximum wind speed for the drone). Each picture stores the flight height in its EXIF data. The height for every picture is shown in the table below:

Table 23. GPS Altitude and drone position taken from used photos.

| Picture name | GPS height (m) | Measurement height from the top of building (m) |
| --- | --- | --- |
| P1510592.JPG | 203.56230160 | 43.70243830 |
| P1510594.JPG | 204.63520810 | 44.77534480 |
| P1510597.JPG | 204.08076480 | 44.22090150 |
| P1510599.JPG | 204.39529420 | 44.53543090 |
| P1520603.JPG | 203.32363890 | 43.46377560 |

The pixel positions of the Control Points in each picture are shown in the table below:

Table 24. Pixel positions for Control Points (CPXX).

| Picture | CP1 | CP5 | CP7 | CP12 | CP15 | CP17 | CP22 | CP25 | CP29 | CP32 | CP35 | CP40 | CP42 | CP47 | CP51 | CP54 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1510592.JPG | | | | | | | | | | | | 428 | 720 | 1458 | 2051 | 2371 |
| P1510594.JPG | | | | | | | | | 397 | 829 | 1259 | 1985 | 2279 | 3013 | 3608 | 3926 |
| P1510597.JPG | | | | 142 | 577 | 866 | 1594 | 2032 | 2622 | 3069 | 3517 | 4271 | | | | |
| P1510599.JPG | 48 | 708 | 997 | 1727 | 2167 | 2464 | 3203 | 3655 | 4256 | | | | | | | |
| P1520603.JPG | 2367 | 3051 | 3352 | 4110 | 4571 | | | | | | | | | | | |

The pictures were selected so that every difference between control points is measured twice: once in the left part of one picture and once in the right part of another. Consecutive pictures are shifted by half a picture. This provides a degree of self-correction, because the measurement error is averaged over the left and right sides of the pictures.

The difference (in pixels) between Control Points measured for every picture is shown in the table below:

Table 25. Difference between Control Points (in pixels).

| Picture | CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1510592.JPG | | | | | | | | | | | | 4.074 | 10.3 | 8.274 | 4.465 |
| P1510594.JPG | | | | | | | | | 6.174 | 6.146 | 10.38 | 4.202 | 10.49 | 8.504 | 4.545 |
| P1510597.JPG | | | | 6.141 | 4.08 | 10.28 | 6.183 | 8.329 | 6.31 | 6.324 | 10.64 | | | | |
| P1510599.JPG | 9.383 | 4.108 | 10.38 | 6.255 | 4.222 | 10.51 | 6.426 | 8.544 | | | | | | | |
| P1520603.JPG | 9.492 | 4.177 | 10.52 | 6.398 | | | | | | | | | | | |

Because consecutive pictures are shifted by half a picture, the measurements of the same pair of control points in two pictures can differ by more than 40 pixels. This is caused by the different view angles, which range from around 0 degrees in the middle of the picture to 34 degrees at its far edge.

The difference (in metres) between control points measured for every picture is shown in the table:

Table 26. Difference between Control Points (in metres).

| Picture | CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P1510592.JPG | | | | | | | | | | | | 4.074 | 10.3 | 8.274 | 4.465 |
| P1510594.JPG | | | | | | | | | 6.174 | 6.146 | 10.38 | 4.202 | 10.49 | 8.504 | 4.545 |
| P1510597.JPG | | | | 6.141 | 4.08 | 10.28 | 6.183 | 8.329 | 6.31 | 6.324 | 10.64 | | | | |
| P1510599.JPG | 9.383 | 4.108 | 10.38 | 6.255 | 4.222 | 10.51 | 6.426 | 8.544 | | | | | | | |
| P1520603.JPG | 9.492 | 4.177 | 10.52 | 6.398 | | | | | | | | | | | |

The differences in metres between the control points were calculated using the lens corrections from the first experiment and are shown in the table above.

Calculating the building length from flight 4 data

The building length is calculated as the sum of the average measurements for every difference between control points.

The average differences between Control Points for this flight (in metres) are:

Table 27. Average difference between Control Points (in metres).

| CP5-CP1 | CP7-CP5 | CP12-CP7 | CP15-CP12 | CP17-CP15 | CP22-CP17 | CP25-CP22 | CP29-CP25 | CP32-CP29 | CP35-CP32 | CP40-CP35 | CP42-CP40 | CP47-CP42 | CP51-CP47 | CP54-CP51 | Sum |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 8.81 | 3.86 | 9.72 | 5.82 | 3.88 | 9.7 | 5.86 | 7.84 | 5.81 | 5.8 | 9.77 | 3.86 | 9.7 | 7.81 | 4.2 | 102.45 |

4. Results

Comparing the results and the real size of the building

The results obtained from the measurements and the actual size of the building and the percentage difference are shown in the table below:

Table 28. Results comparison.

| | Real size (m) | Measured size (m) | Difference (m) | Difference (%) |
| --- | --- | --- | --- | --- |
| Measurement 1 | 102.741 | 101.31 | 1.431 | 1.39282 |
| Measurement 2 | 102.741 | 101.99 | 0.751 | 0.73096 |
| Measurement 3 | 102.741 | 102.56 | 0.181 | 0.17617 |
| Measurement 4 | 102.741 | 102.45 | 0.291 | 0.28324 |

The actual size was determined by adjusting the documented length of 103.24 metres by the observed differences of -0.241 and -0.258 metres in the physical condition of the flashings, as described in the experiment conditions, which gives 102.741 metres.

The difference between the measurements taken and the actual size ranges from 1.39% for the first measurement to 0.18% for the third measurement. The average error for the four flights is 0.65%, which corresponds to about 0.67 metres. On average, these measurements are more accurate than those obtained from public satellites, which have an error rate of 1%.
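The arithmetic behind Table 28 can be reproduced with the following Python sketch. It uses only the documented length, the two flashing offsets and the four measured lengths given in the text; the variable names are hypothetical.

```python
# Documented building length and the two observed flashing offsets (metres).
DOCUMENTED_LENGTH_M = 103.24
FLASHING_OFFSETS_M = (-0.241, -0.258)

# Actual length used as the reference value in Table 28.
actual_length_m = DOCUMENTED_LENGTH_M + sum(FLASHING_OFFSETS_M)

# Building length measured in each of the four flights (metres).
measured_m = {1: 101.31, 2: 101.99, 3: 102.56, 4: 102.45}

# Absolute and percentage differences for every flight.
diff_m = {k: abs(actual_length_m - v) for k, v in measured_m.items()}
errors_pct = {k: d / actual_length_m * 100 for k, d in diff_m.items()}
mean_error_pct = sum(errors_pct.values()) / len(errors_pct)

for flight in measured_m:
    print(f"Flight {flight}: {diff_m[flight]:.3f} m, {errors_pct[flight]:.3f} %")
print(f"Mean error: {mean_error_pct:.2f} %")
```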

The results for the first and fourth flights are surprising. In the case of the first flight, made in good conditions, the measured length differs from the actual building length by 1.39%, which is far from expectations. This may be due to the final stage of the flight, where the altitude increased quite sharply, by almost 1 metre. This disturbance may have caused the measurements to be lower than the actual size of the building, thus underrepresenting its overall dimensions.

Averaging over multiple photos and taking into account the height of each measurement should compensate for the measurement error, but at the beginning and end of the building the measurement is taken from only two photos. If the height deviation occurs in both photos, the error is not averaged out, which results in an erroneous measurement.

Flight four took place in windy weather. The altitude sensor is based on the GPS position, so the wind should not have affected the flight, but its effect was noticeable.

5. Discussion

Can measurements made with a drone and an ordinary camera be used as a reliable source of data? With the results obtained and the calculation methods used in this work, the results cannot be treated as authoritative; they should be considered estimates rather than precise measurements. However, because up-to-date images can be taken and they are more accurate than high-resolution satellite images, the method can be considered better in terms of the currency of the images. Estimates made on current drone images can be used, for example, when estimating crop losses caused by storms or animal activity.

What can be done to make the results more accurate? The accuracy can be increased in several ways: increasing the number of photos by lowering the flight altitude and taking photos more densely, and stabilising the flight by taking measurements in stable weather conditions and with automatic steering.

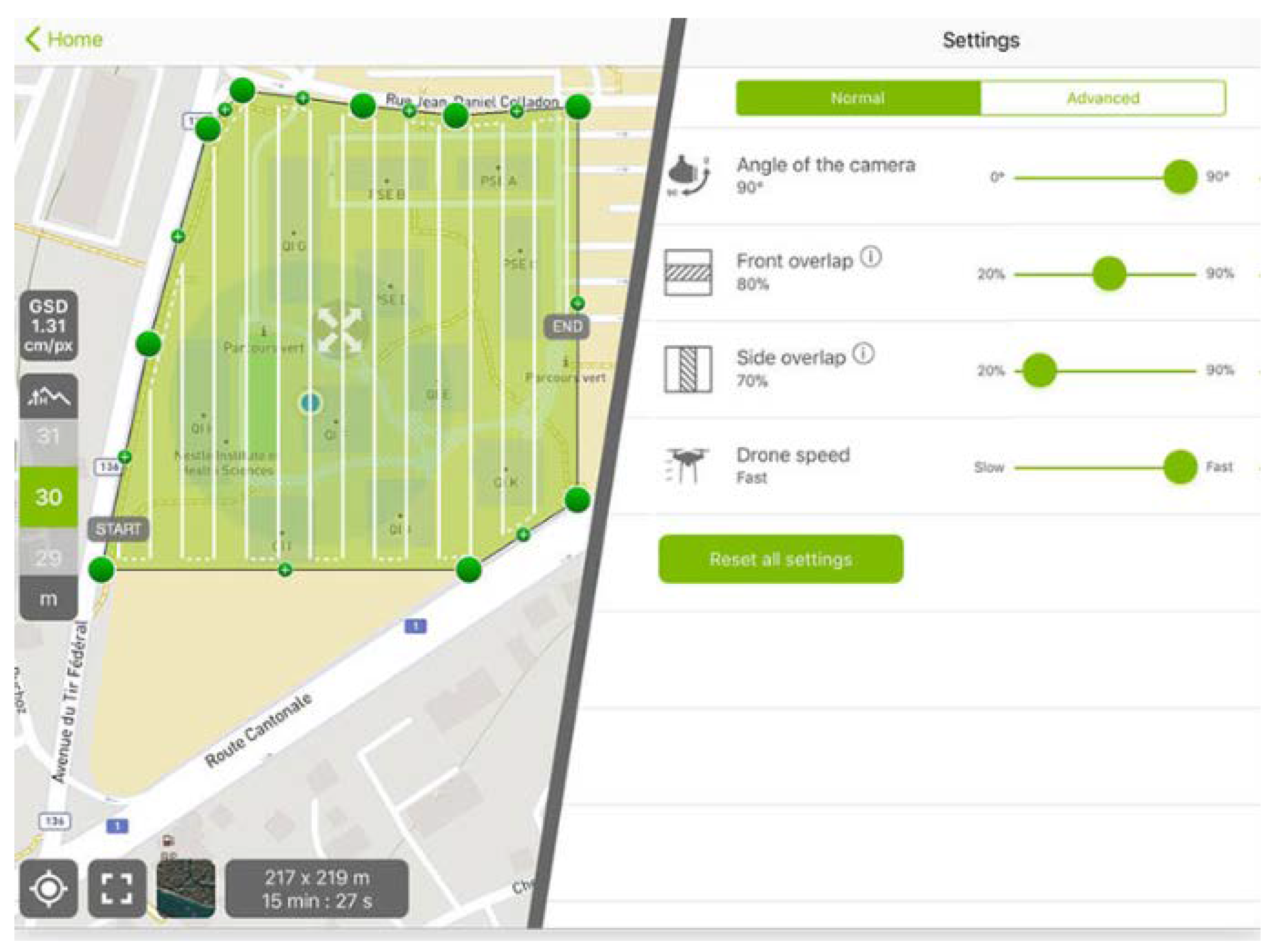

Increasing the number of photos means that more measurements are available for analysis, which results in a smaller statistical error. By averaging the results, a more accurate measurement can be obtained. The control of the drone can be automated by means of automatic routing using additional software. Such software allows automated flights to be planned, specific points of interest to be marked on the observed object, and appropriate spacing between sequential flights to be ensured. Flying along the predetermined points ensures appropriate overlap between photos. For the Parrot Anafi drone, it is possible to work with Pix4D software, which can guide the drone along a pre-set route using control points, automating the drone's path and creating a 3D map of the photographed landscape.

Figure 15. Pix4D application sample view [38].

At the same time, a generally available drone allows pictures to be taken at a moment's notice, without having to wait for a requested service. Thus, the reaction to the current state of the observed object can be immediate. In the case of measuring damage to agricultural crops caused by animals, the damage can be recorded before its traces are obliterated. With an observation height of 40 metres, assuming 20% overlap between images, a single photo could cover more than 100 square metres. When observing one hectare, it is enough to take and analyse 100 to 120 photos, and with double coverage of the area about 250 photos would suffice (with an accuracy of less than 2 cm per pixel at a photo resolution of 4608x3456).

For future work, other factors that may affect the quality of the images (lens distortion) or the positioning of the drone (GPS measurement error) should be examined. In addition, a survey flight should be performed using an application like Pix4D with a predetermined flight path that eliminates human control errors.

6. Conclusion

In the case of unpredictable weather conditions and variable survey conditions (weather or animal damage), the use of a low-budget drone may be the best and most accurate assessment tool. The quality of the images and their timeliness work in favour of the proposed solution. The quality of the estimate surpasses that of the same estimation based on satellite imagery and is slightly better than that based on high-resolution satellite imagery. The availability of recent data for estimation and the straightforward nature of the required calculations make this method a realistic option for use. However, it is not suitable for use in surveying, where the required accuracy reaches 2 centimetres. Such accuracy is provided by RTK measurements.

Further test measurements are needed, including measurements with other drone models, or with a different unit of the same model, to verify the lens correction.

In future attempts to use a drone camera for measurements, it is worth conducting a measurement experiment under controlled conditions. It is planned to perform measurements on the ground with the camera positioned horizontally and to verify the accuracy of the calculated object size against the actual measured length. Ground markers can also make the measurement more accurate, as was done in the UAV-Based Sensor System for Measuring Land Surface Albedo [39].

In addition, it is planned to correct images not only in one axis, but over the entire surface of the lens, which will make it possible to measure the surface without rotating the drone. The possibility of automating the superimposition of images and the automatic search for corresponding points in the image is also being considered. This will allow automation of the entire estimation process.

In this paper, we have shown that a low-cost drone with an ordinary camera can be used to measure or estimate dimensions when time is of the essence. Such situations occur when it is necessary to estimate losses due to heavy rains, storms or animal activity. In such situations, the time to complete the damage estimate is more important than the accuracy of the measurement. The accuracy obtained (in the region of 1% measurement error) is sufficient for this type of loss estimation and is suitable for making quick measurements of a changing environment.

Acknowledgments

The work was supported by grant no. WZ//I-IIT/5/2023 and grant no. WI/WI-IIT/2/2021 from Bialystok University of Technology and funded with resources for research by the Ministry of Education and Science in Poland.

References

1. Zhang, B. et al. Estimation of Urban Forest Characteristic Parameters Using UAV-Lidar Coupled with Canopy Volume. Remote Sens. 2022, 14, 6375.

2. Lewicka, O.; Specht, M.; Specht, C. Assessment of the Steering Precision of a UAV along the Flight Profiles Using a GNSS RTK Receiver. Remote Sens. 2022, 14, 6127.

3. Tang, X. et al. Monitoring of Monthly Height Growth of Individual Trees in a Subtropical Mixed Plantation Using UAV Data. Remote Sens. 2023, 15, 326.

4. Yin, D.; Wang, L. Individual mangrove tree measurement using UAV-based LiDAR data: Possibilities and challenges. Remote Sensing of Environment 2019, 223, 34–49.

5. Krause, S.; Sanders, T.G.M.; Mund, J.-P.; Greve, K. UAV-Based Photogrammetric Tree Height Measurement for Intensive Forest Monitoring. Remote Sens. 2019, 11, 758.

6. Han, X. et al. Measurement and Calibration of Plant-Height from Fixed-Wing UAV Images. Sensors 2018, 18, 4092.

7. Chen, S.; Nian, Y.; He, Z.; Che, M. Measuring the Tree Height of Picea crassifolia in Alpine Mountain Forests in Northwest China Based on UAV-LiDAR. Forests 2022, 13, 1163.

8. Liao, K. et al. Examining the Role of UAV Lidar Data in Improving Tree Volume Calculation Accuracy. Remote Sens. 2022, 14, 4410.

9. Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR System with Application to Forest Inventory. Remote Sens. 2012, 4, 1519–1543.

10. Marinou, E. et al. Retrieval of ice-nucleating particle concentrations from LiDAR observations and comparison with UAV in situ measurements. 2019.

11. Mantelli, M. et al. A novel measurement model based on abBRIEF for global localization of a UAV over satellite images. Robotics and Autonomous Systems 2019, 112, 304–319.

12. Shuman, N.M.; Khan, S.; Nobahar, M.; Amini, F. Downscaling Remotely Sensed Soil Moisture Variations Using Satellite And Unmanned Aerial Vehicle (UAV) Photogrammetry. AGU Fall Meeting 2021, New Orleans, LA, 13-17 December 2021, id. NS35C-0376. https://ui.adsabs.harvard.edu/abs/2021AGUFMNS35C0376S/abstract.

13. Klapa, P.; Gawronek, P. Synergy of Geospatial Data from TLS and UAV for Heritage Building Information Modeling (HBIM). Remote Sens. 2023, 15, 128.

14. Bartholomeus, H.; Calders, K.; Whiteside, T.; Terryn, L.; Krishna Moorthy, S.M.; Levick, S.R.; Bartolo, R.; Verbeeck, H. Evaluating Data Inter-Operability of Multiple UAV–LiDAR Systems for Measuring the 3D Structure of Savanna Woodland. Remote Sens. 2022, 14, 5992.

15. Nikolakopoulos, K.G.; Koukouvelas, I.K. Emergency response to landslide using GNSS measurements and UAV. Proc. SPIE 10428, Earth Resources and Environmental Remote Sensing/GIS Applications VIII, 1042816 (5 October 2017, Warsaw, Poland).

16. Feng, B. et al. Exploring the Potential of UAV LiDAR Data for Trunk Point Extraction and Direct DBH Measurement. Remote Sens. 2022, 14, 2753.

17. Solazzo, D. et al. Mapping and measuring aeolian sand dunes with photogrammetry and LiDAR from unmanned aerial vehicles (UAV) and multispectral satellite imagery on the Paria Plateau. Geomorphology 2018, 319, 174–185.

18. Queinnec, M. et al. Mapping Dominant Boreal Tree Species Groups by Combining Area-Based and Individual Tree Crown LiDAR Metrics with Sentinel-2 Data. Canadian Journal of Remote Sensing 2023, 49, 1.

19. Wang, N.; Shi, Z.; Zhang, Z. Road Boundary, Curb and Surface Extraction from 3D Mobile LiDAR Point Clouds in Urban Environment. Canadian Journal of Remote Sensing 2022, 48, 504–519.

20. Jianghua, L.; Jinxing, Z.; Wentao, Y. Comparing LiDAR and SfM digital surface models for three land cover types. Open Geosciences 2021, 13, 497–504.

21. Harwin, S.J. Multi-view stereopsis (MVS) from an unmanned aerial vehicle (UAV) for natural landform mapping. Thesis, University of Tasmania, 2015.

22. Gao, C. et al. Greenhouses Detection in Guanzhong Plain, Shaanxi, China: Evaluation of Four Classification Methods in Google Earth Engine. Canadian Journal of Remote Sensing 2022, 48, 747–763.

23. Website. (online: 12.09.2022). https://www.euspaceimaging.com/true-30-cm-imagery/.

24. Komara Djaja, K. et al. The Integration Of Geography Information System (Gis) And Global Navigation Satelite System-Real Time Kinematic (Gnss-Rtk) For Land Use Monitoring. International Journal of GEOMATE 2017, 13, 31–34, Geotechnic, Construction Material & Environment, ISSN:2186-2990, Japan.

25. Aasen, H.; Honkavaara, E.; Lucieer, A.; Zarco-Tejada, P.J. Quantitative Remote Sensing at Ultra-High Resolution with UAV Spectroscopy: A Review of Sensor Technology, Measurement Procedures, and Data Correction Workflows. Remote Sens. 2018, 10, 1091.

26. Addabbo, P. et al. A UAV infrared measurement approach for defect detection in photovoltaic plants. 2017 IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), 2017, pp. 345-350. https://ieeexplore.ieee.org/abstract/document/7999594.

27. Ye, W.; Qian, C.; Tang, J.; Liu, H.; Fan, X.; Liang, X.; Zhang, H. Improved 3D Stem Mapping Method and Elliptic Hypothesis-Based DBH Estimation from Terrestrial Laser Scanning Data. Remote Sens. 2020, 12, 352.

28. Ekaso, D.; Nex, F.; Kerle, N. Accuracy assessment of real-time kinematics (RTK) measurements on unmanned aerial vehicles (UAV) for direct geo-referencing. Geo-spatial Information Science, 23(2), 165–181.

29. Website. (online: 24.09.2022). https://precisionlaserscanning.com/wp-content/uploads/2018/05/LIDAR-RESOLUTION-AT-DISTANCE-CALCULATOR.xlsx.

30. Website. (online: 24.05.2023). https://opegieka.pl/najwydajniejsza-platforma-teledetekcyjna-w-polsce,73,pl#details.

31. Štroner, M.; Urban, R.; Seidl, J.; Reindl, T.; Brouček, J. Photogrammetry Using UAV-Mounted GNSS RTK: Georeferencing Strategies without GCPs. Remote Sens. 2021, 13, 1336.

32. Stempfhuber, W.; Buchholz, M. A Precise, Low-Cost Rtk Gnss System For Uav Applications. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XXXVIII-1/C22, 2011, ISPRS Zurich 2011 Workshop, 14-16 September 2011, Zurich, Switzerland.

33. Website. (online: 24.09.2022). https://www.parrot.com/us/drones/anafi/technical-specifications.

34. Website. (online: 24.09.2022). https://www.parrot.com/assets/s3fs-public/2020-07/white-paper_anafi-v1.4-en.pdf.

35. Website. (online: 24.09.2022). https://www.deviceranks.com/en/camera-sensor.

36. Website. (online: 24.09.2022). https://www.deviceranks.com/en/drone/-top_percent_1.

37. Website. https://www.parrot.com/en/support/anafi/how-do-i-use-the-gpslapse-photo-mode.

38. Website. (online: 12.09.2022). https://www.pix4d.com/product/pix4dcapture.

39. Canisius, F.; Wang, S.; Croft, H.; Leblanc, S.G.; Russell, H.A.J.; Chen, J.; Wang, R. A UAV-Based Sensor System for Measuring Land Surface Albedo: Tested over a Boreal Peatland Ecosystem. Drones 2019, 3, 27.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).