Submitted: 21 June 2023

Posted: 22 June 2023


Abstract

Keywords:

1. Introduction

2. Related Work

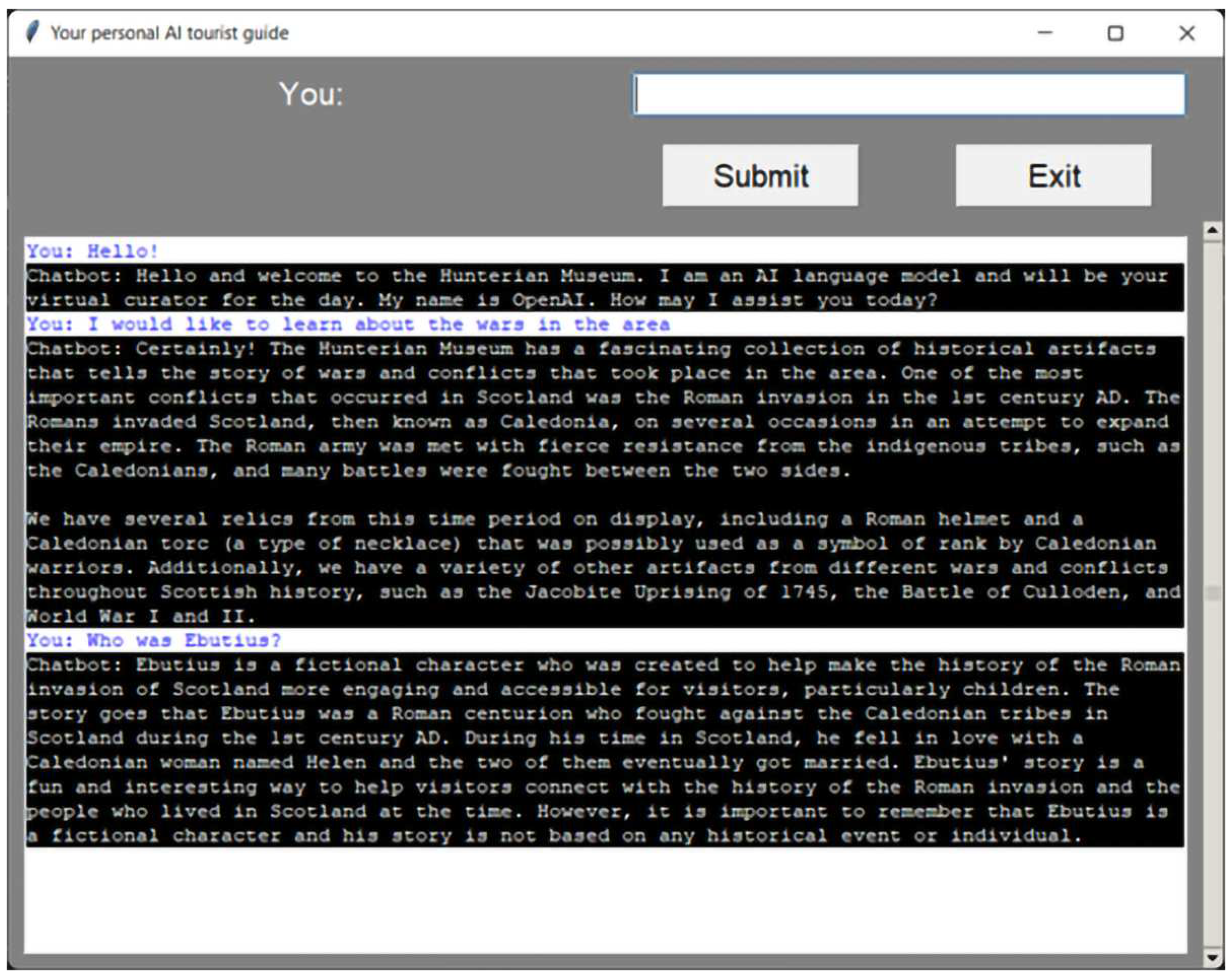

3. MAGICAL: Museum AI Guide for Augmenting Cultural Heritage with Intelligent Language Model

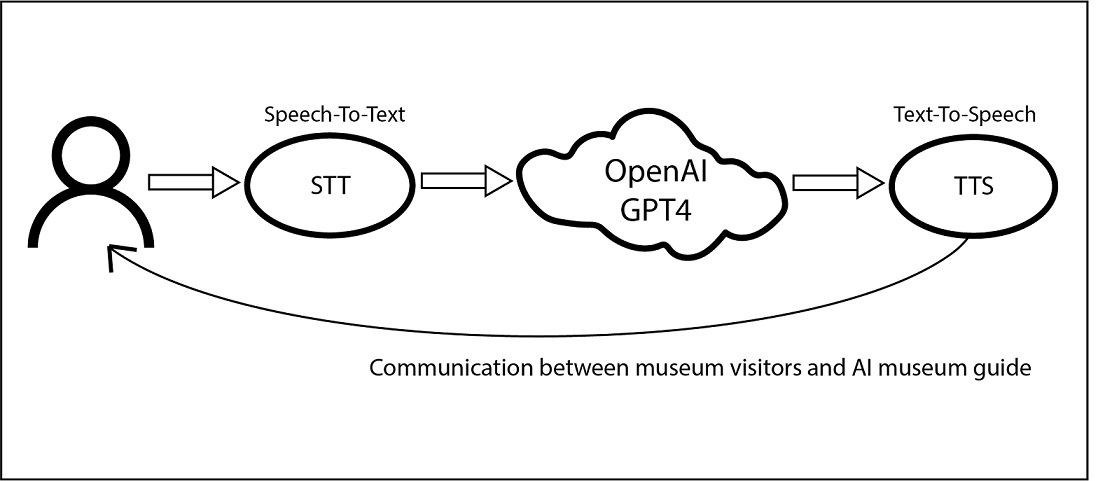

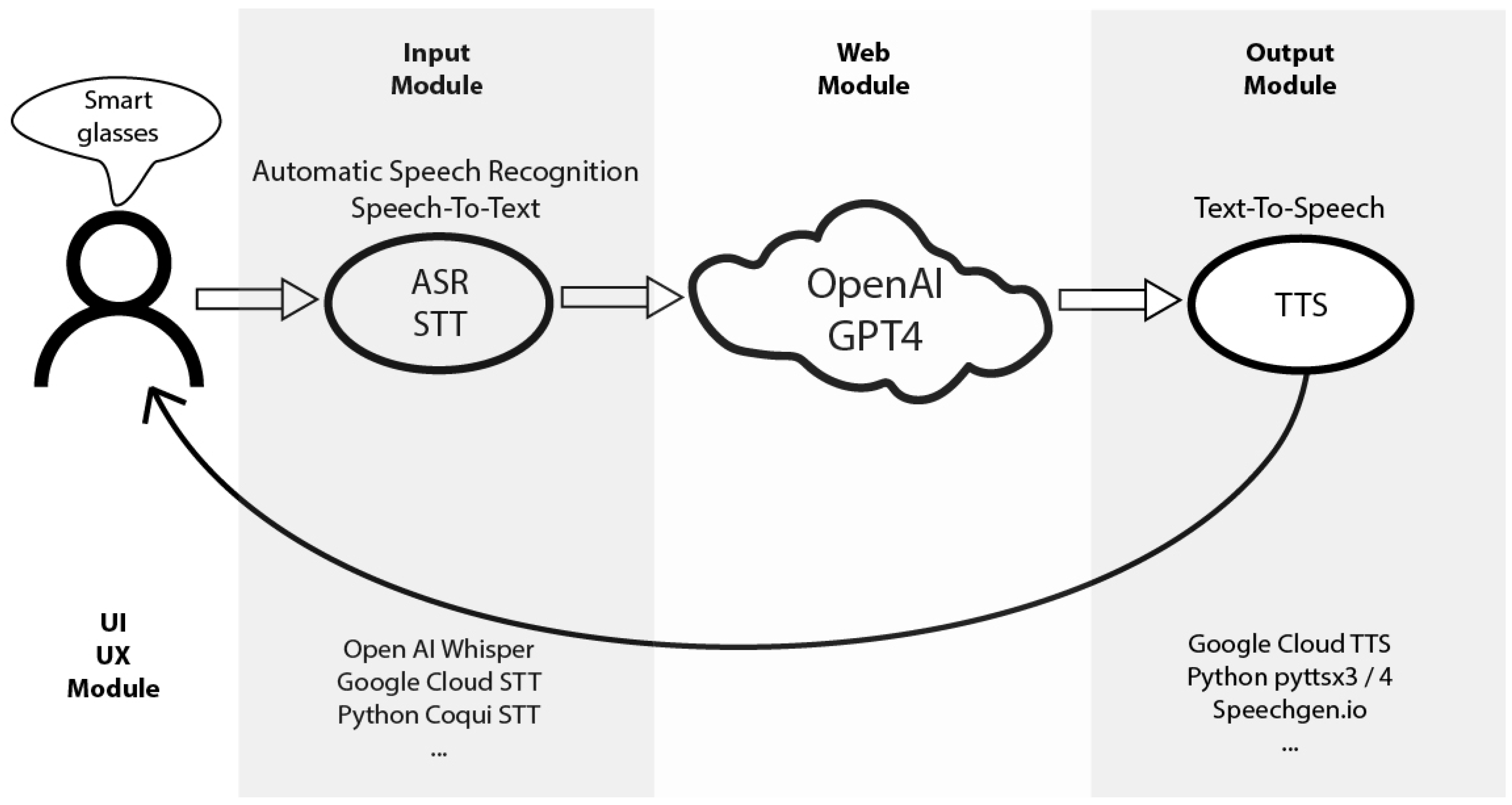

3.1. MAGICAL System Architecture

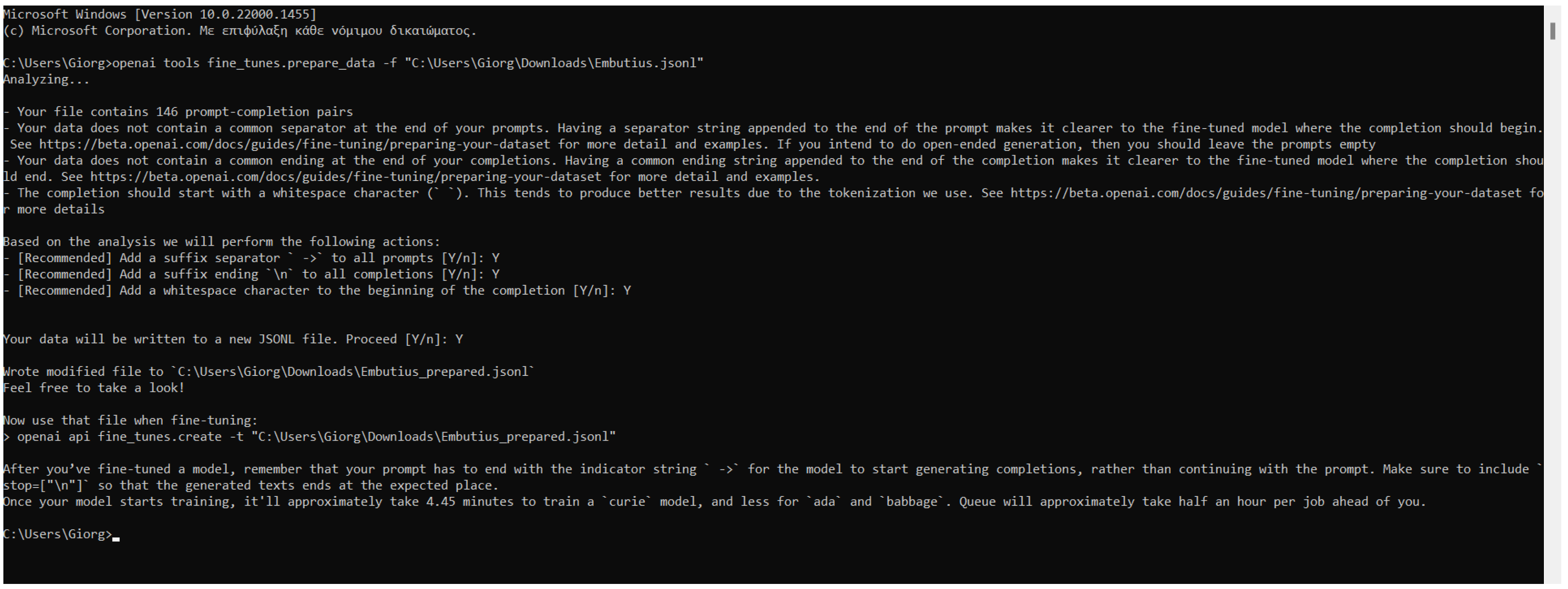

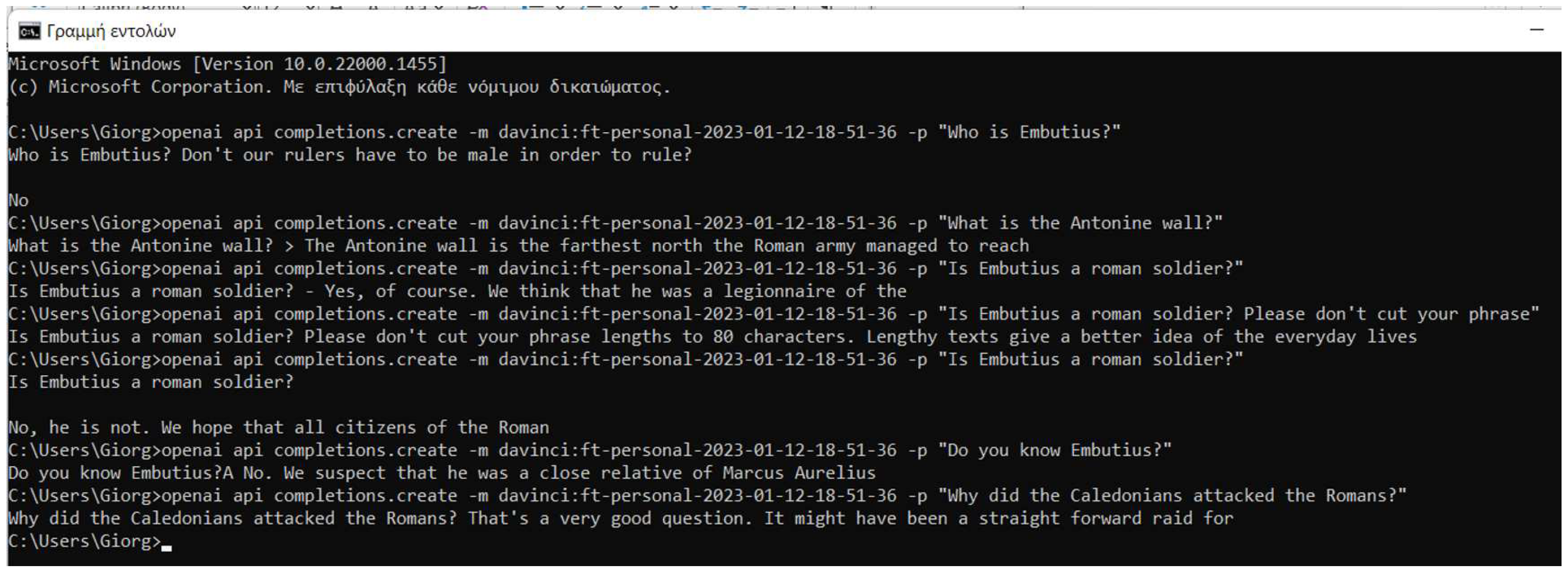

3.2. Case Study – Chat with Ebutius and Calle

```json
{
  "answer": "The Romans conquered lands that the Caledonians considered their own, so many of them are justifiably angry at the Romans. Raids and skirmishes from the Caledonian tribes were, in fact, a regular event. Nevertheless, some Caledonians co-existed rather peacefully with the Romans and traded with them frequently. For example, local style pottery was found in various forts, which indicates that there were local crafts people and merchants interacting with the army on the Wall. Also, soldiers of various ranks often married local women, although these marriages were not recognised by the Roman State until after Antoninus's rule (AD 138-161). After Antoninus's reforms, any children the soldiers might have had with these women were encouraged to join the Roman army and hence gain citizenship for themselves.",
  "questions": [
    "Could a Roman soldier marry a local Caledonian woman?",
    "Why did the Caledonians attack the Romans?",
    "What were the relationships between Romans and Caledonians?",
    "How could Calle fall in love with Ebutius, the conqueror of her people's lands?",
    "How was the relationship between Romans and the locals?",
    "Did the natives complain about or disturb the building of the rampart?",
    "Where and how did you meet your wife Calle?",
    "Did the Caledonians interact with the Roman Army in the Wall?",
    "Are the Caledonians angry at the Romans?"
  ]
}
```
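The record above pairs a single curated answer with several paraphrased visitor questions. One plausible way to expand such a record into training data, assuming the legacy OpenAI prompt/completion fine-tuning format (one JSON object per line), is sketched below; it is an illustration, not the authors' actual pipeline. The helper names `record_to_pairs` and `to_jsonl`, the ` ->` prompt separator, and the `\n` stop sequence are assumptions chosen to match the prompt/completion example in this section.

```python
import json

SEPARATOR = " ->"  # assumed fixed marker appended to every prompt
STOP = "\n"        # assumed stop sequence that ends every completion


def record_to_pairs(record):
    """Expand one {answer, questions} record into prompt/completion pairs.

    Hypothetical helper: each paraphrased question becomes its own prompt,
    and all of them share the same curated answer as the completion.
    """
    completion = " " + record["answer"].strip() + STOP  # leading space, trailing stop
    return [
        {"prompt": q.strip() + SEPARATOR, "completion": completion}
        for q in record["questions"]
    ]


def to_jsonl(pairs):
    """Serialise the pairs as JSON Lines (one JSON object per line)."""
    return "\n".join(json.dumps(p, ensure_ascii=False) for p in pairs) + "\n"


if __name__ == "__main__":
    # Shortened excerpt of the case-study record.
    record = {
        "answer": "Raids and skirmishes from the Caledonian tribes were, in fact, a regular event.",
        "questions": [
            "Why did the Caledonians attack the Romans?",
            "Are the Caledonians angry at the Romans?",
        ],
    }
    print(to_jsonl(record_to_pairs(record)), end="")
```

Emitting one pair per paraphrase is intended to expose the model to the same answer under several phrasings, at the cost of a larger training file.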

```json
{"prompt": "Were the Caledonians a Celtic tribe? ->", "completion": " Yes. The Caledonians were a Celtic tribe that inhabited the areas of modern-day Scotland during the Roman era. They were builders and farmers and defeated and were defeated by the Romans on several occasions. Nearly all the information available about the Caledonians is based on predominantly Roman sources, which may suggest bias. During the Iron Age, Scotland did not have a nucleated settlement pattern. Instead, Caledonians lived in homesteads dispersed across the landscape, each occupied by an extended family and their dependents.\n"}
```
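With this format, a fine-tuned model is normally queried with the same prompt separator it saw during training and stopped at the same delimiter that ended its completions. The sketch below builds a request body for OpenAI's legacy Completions endpoint under that assumption. `model`, `prompt`, `max_tokens`, `temperature` and `stop` are standard fields of that endpoint; the model ID, the numeric defaults and the helper names are placeholders, not values reported by the authors.

```python
import json

SEPARATOR = " ->"  # must match the separator used in the training prompts
STOP = "\n"        # must match the stop sequence that ends every completion


def build_completion_request(question, model, max_tokens=256, temperature=0.2):
    """Return a JSON body for the legacy /v1/completions endpoint.

    `model` is a placeholder for a fine-tuned model ID; the numeric defaults
    are illustrative assumptions.
    """
    return {
        "model": model,
        "prompt": question.strip() + SEPARATOR,  # same suffix as in training
        "max_tokens": max_tokens,
        "temperature": temperature,
        "stop": [STOP],  # generation halts where training completions ended
    }


def clean_completion(text):
    """Drop the learned leading space and anything after a stray stop sequence."""
    return text.split(STOP, 1)[0].strip()


if __name__ == "__main__":
    body = build_completion_request(
        "Were the Caledonians a Celtic tribe?", model="YOUR_FINE_TUNED_MODEL_ID"
    )
    print(json.dumps(body, indent=2))
```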

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GPT | Generative Pre-trained Transformer |
| CH | Cultural Heritage |
| TTS | Text-to-Speech |
| STT | Speech-to-Text |
| ASR | Automatic Speech Recognition |
| AI | Artificial Intelligence |
| LLM | Large Language Model |
| API | Application Programming Interface |
| JSON | JavaScript Object Notation |
| AD | Anno Domini |
| GUI | Graphical User Interface |
| UI | User Interface |
| UX | User Experience |


1. https://stt.readthedocs.io/en/latest/#, accessed on 15/06/2023
2. https://pypi.org/project/pyttsx4/, accessed on 15/06/2023
3. https://speechgen.io/, accessed on 15/06/2023
4. https://beta.openai.com/docs/guides/fine-tuning, accessed on 15/06/2023
5. https://openai.com/blog/openai-and-microsoft-extend-partnership, accessed on 15/06/2023

| No. | Characteristic | GPT-3 | GPT-3.5 | GPT-4 |
|---|---|---|---|---|
| 1 | Can be fine-tuned | Yes | Yes | Yes |
| 2 | Bias in text | No | No | No |
| 3 | Ease of guidance | Low | High | High |
| 4 | Can change the style of the text | No | Yes | Yes |
| 5 | Truncated answers | Yes | No | No |
| 6 | Extra-long answers (babbling effect) | No | Yes | No |
| 7 | Can use languages other than English | No | Partially | Yes |
| 8 | Repeated meanings | Yes | Yes | No |
| 9 | Controversial answers | Yes | Yes | No |
| 10 | Input token limit | 2048 (normal) to 4096 (max) | 4096 | None |
| 11 | Cost for training and use | High | Low | Low |
| 12 | Response speed | High | Medium | Low |
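Row 10 matters in practice because the prompt and the generated answer share the same token window. The sketch below estimates how many tokens remain for an answer, using the limits listed in the table for GPT-3 (its "normal" value) and GPT-3.5. It assumes the common rule of thumb of roughly four characters per English token, so counts are approximate; a real tokenizer would give exact figures. GPT-4 is left out because the table gives no numeric limit for it, and the helper names are hypothetical.

```python
import math

# Context limits as listed in the comparison table (row 10). The GPT-3 entry
# uses the table's "normal" value; the keys are labels, not API model IDs.
CONTEXT_LIMITS = {"GPT-3": 2048, "GPT-3.5": 4096}

# Rough heuristic for English text; a real tokenizer gives exact counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text):
    """Approximate the token count of `text` from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def completion_budget(prompt, model):
    """Estimate how many tokens remain for the answer after the prompt.

    Prompt and completion share one window, so a long prompt (for example,
    a long conversation history) leaves less room for the reply.
    """
    return max(CONTEXT_LIMITS[model] - estimate_tokens(prompt), 0)


if __name__ == "__main__":
    question = "Were the Caledonians a Celtic tribe? ->"
    for name in CONTEXT_LIMITS:
        print(name, completion_budget(question, name))
```

Requesting more completion tokens than this budget typically makes the API reject the call, while requesting too few cuts the answer off, so the budget is worth checking before each request.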

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).